1. Introduction

Vision is one of the senses that dominates our lives. It allows us to form perceptions of the surrounding world and give meaning to objects, concepts, ideas, and tastes [1]. Therefore, any type of visual loss can have a great impact on our daily routines, significantly affecting even the simplest day-to-day tasks. Vision loss can be sudden and severe, or the result of a gradual deterioration, where objects at great distances become increasingly difficult to see. The term “vision impairment” thus encompasses all conditions in which vision deficiency exists [2]. An individual who is born with the sense of sight and later loses it retains visual memories and can remember images, lights, and colors. This is of the highest importance for re-adaptation. On the other hand, those who are born without sight never form visual memories. In both cases, clothing represents a demanding challenge.

Recently, there has been an increasing focus on assistive technology for people with visual impairments and blindness, aiming at improving mobility, navigation, and object recognition [3,4,5]. Despite the advanced technology already available, some gaps remain, particularly in the area of aesthetics and image.

The ways in which we dress and the styles that we prefer for different occasions are part of our identity [6]. Blind people cannot rely on sight for these choices, and dressing can be a difficult and stressful task. Given the technological advancements of recent years, it becomes essential to minimize the key limitations of a blind person when it comes to managing garments. Not knowing the colors, the types of patterns, or even the state of garments makes dressing a daily challenge. Moreover, it is important to keep in mind that blind people are more likely to have stains on their clothes, as they face more challenges in handling objects and performing simple tasks, such as eating, cleaning and painting surfaces, and leaning against dirty surfaces, among others. Most of the time, when we involuntarily drop something on our clothes that causes a stain, we immediately attempt to clean it, as we know that the longer we take, the harder the stain is to remove later; such delays might happen more often with blind people.

Despite the cutting-edge technology and smart devices already available, some aspects of aesthetics and image remain barely explored. This was the fundamental motivation for this work, namely, how to enable blind people to feel equally satisfied with what they wear and to dress functionally without needing help. The scope of this research follows the previous work of the authors [7,8,9,10], in which image processing techniques were shown to help blind people choose their clothing and manage their wardrobes.

In short, this work contributes to the field with (i) a listing of relevant techniques used in image recognition for the identification of clothing items and for the detection of stains on garments; (ii) an annotated dataset of clothing stains, which could later be extended by new research; and (iii) a benchmark of different deep learning networks. The outcomes of this work are also expected to be implemented in a mobile application, which was identified as a preferred choice in a survey conducted with all departments of the Association of the Blind and Amblyopes of Portugal (ACAPO) [11].

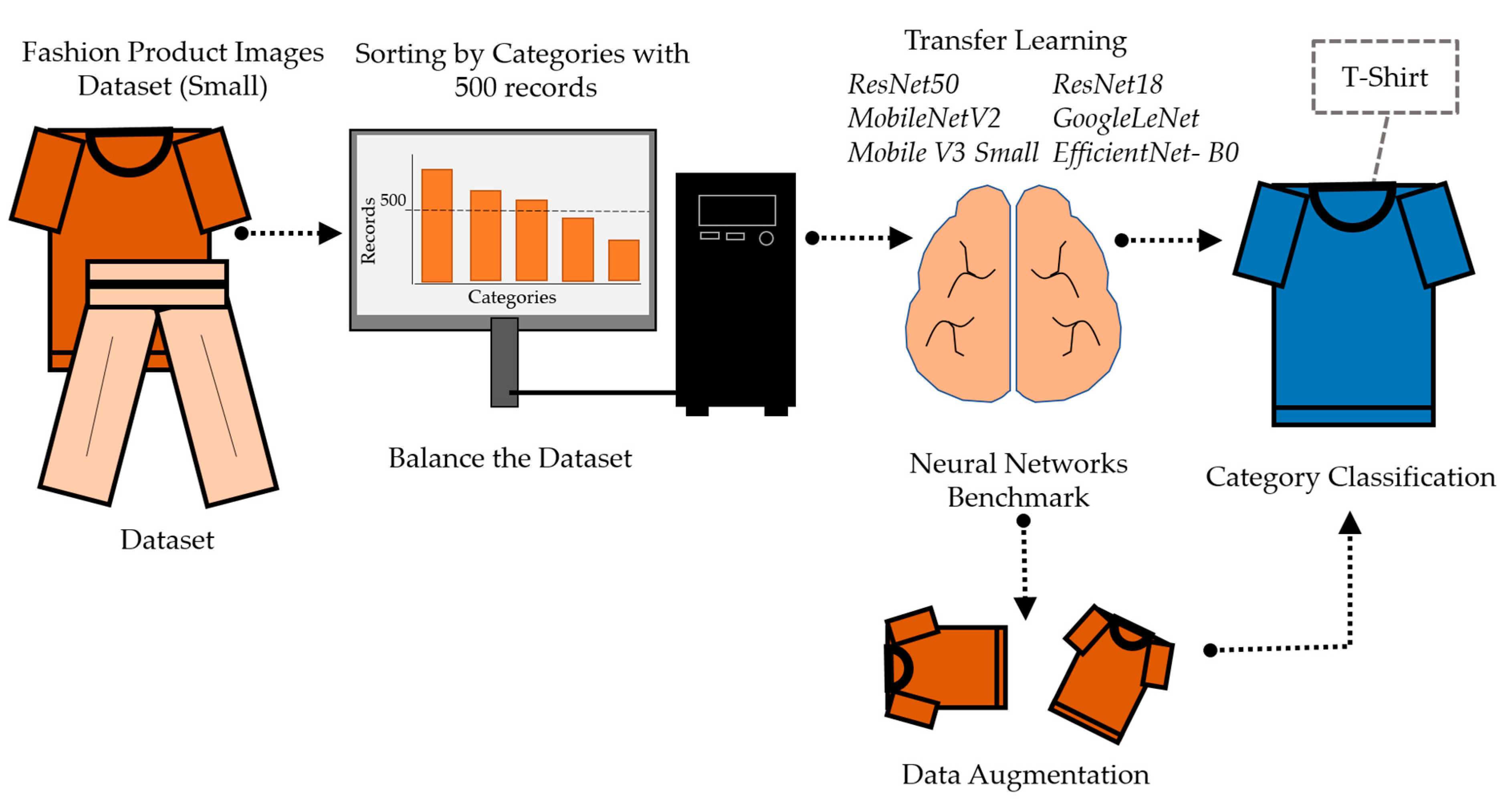

Following the model approach already identified by the authors [12], the scope of the research presented here is focused on the algorithm for clothing category classification and stain detection. Alongside this, a mobile application and a mechatronic system, i.e., an automatic wardrobe, will complement all presented algorithms and methodology [13]. Taking advantage of the partnership with ACAPO and with the Association of Support for the Visually Impaired of Braga (AADVDB), the developed work was validated and opportunities for future improvements were identified. In the upcoming sections, related work is described (Section 2), the methodology is explained (Section 3), experiments are described (Section 4), and the main conclusions and future work are presented (Section 5).

2. Related Work

In the last few years, deep learning techniques have emerged as an effective approach to problems in computer vision and related areas, such as image classification, object detection, face recognition and language processing, where convolutional neural networks (CNNs) play a central role [14].

CNNs have exhibited excellent results and advances in image recognition since 2012 [15,16], when AlexNet [17] was introduced in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [18,19]. The ImageNet competition consists of evaluating several algorithms for large-scale object detection and image classification, allowing researchers to compare detection across a variety of object classes. In recent years, several CNNs have been presented, such as VGG [20], GoogLeNet [21], SqueezeNet [22], Inception [23], ResNet [24], ShuffleNet [25], MobileNet [26,27], EfficientNet [28] and RegNet [29], among others, and these networks have been used for different image classification problems.

In line with this premise, some researchers turned to the fashion world, making use of the more recent advances in computer vision to explore diverse areas such as fashion detection, fashion analysis and fashion recommendations, achieving promising results [30]. Based on the scope of the conducted research, only fashion classification is covered in this work. A quick literature survey allowed the identification of several works that have attempted to handle the classification of fashion images. Most of the authors evaluate their models based on top-k accuracy regarding clothing attribute recognition, normally with top 3 and top 5 scores, where a prediction counts as correct when the true label is among the top-k predicted labels.
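The top-k criterion described above can be made concrete with a minimal sketch. The function name `top_k_accuracy` and the toy scores below are illustrative assumptions, not taken from any of the cited works; the sketch only assumes that a classifier outputs one score per class for each image.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]], labels: Sequence[int], k: int
) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes.

    `scores[i][c]` is the (hypothetical) classifier score of class `c` for sample `i`;
    `labels[i]` is the index of the true class of sample `i`.
    """
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and of equal length")
    if k < 1:
        raise ValueError("k must be at least 1")
    hits = 0
    for row, true_label in zip(scores, labels):
        # Rank class indices by descending score and keep the first k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += true_label in top_k
    return hits / len(labels)


# Toy example with four images and four clothing categories (made-up scores).
toy_scores = [
    [0.10, 0.60, 0.20, 0.10],  # true class 1 is ranked first
    [0.50, 0.10, 0.30, 0.10],  # true class 2 is ranked second
    [0.40, 0.30, 0.20, 0.10],  # true class 3 is ranked last
    [0.05, 0.15, 0.70, 0.10],  # true class 2 is ranked first
]
toy_labels = [1, 2, 3, 2]
```

On this toy data, `top_k_accuracy(toy_scores, toy_labels, 1)` gives 0.5 and `top_k_accuracy(toy_scores, toy_labels, 3)` gives 0.75, which illustrates why top 3 and top 5 scores are always at least as high as top 1 accuracy.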

The research of Chen et al. [31] presented a network for describing people based on fine-grained clothing attributes, with an accuracy of 48.32%. Similarly, Liu et al. [32] introduced FashionNet, which learns clothing features by jointly predicting clothing attributes and landmarks. Predicted landmarks are used to pool or gate the learned feature maps. The authors reported a top 3 classification accuracy score of 93.01% and a top 5 score of 97.01%. Hara et al. [33] presented another method to detect fashion items in a given image using deep convolutional neural networks (CNNs), with a mean Average Precision (mAP) of 31.1%. Likewise, Corbière et al. [34] proposed another method based on weakly supervised learning for classifying e-commerce products, presenting a top 3 category accuracy score of 86.30% and a top 5 accuracy score of 92.80%. Later, Wang et al. [35] proposed a fashion network to address fashion landmark detection and category classification, introducing intermediate attention layers to enhance clothing features, category classification and attribute estimation. In their work, accuracy scores of 90.99% and 95.75% were reported for top 3 and top 5, respectively. In another study, Li et al. [36] presented a two-stream convolutional neural network with one branch dedicated to landmark detection and the other to category and attribute classification, allowing the model to learn the correlations among multiple tasks and consequently achieve improvements in the results. Accuracy scores of 93.01% and 97.01% for top 3 and top 5 were reported, respectively. A novel fashion classification model proposed by Cho et al. [37] improves the performance by taking into account the hierarchical dependencies between class labels, reaching accuracy of 91.24% and 95.68% in top 3 and top 5, respectively. A multitask deep learning architecture was then proposed by Lu et al. [38] that groups similar tasks and promotes the creation of separate branches for unrelated tasks, with accuracy results of 83.24% and 90.39% for top 3 and top 5, respectively. Seo and Shin [39] proposed a Hierarchical Convolutional Neural Network (H-CNN) for fashion apparel classification. The authors demonstrated that hierarchical image classification could minimize the model losses and improve the accuracy, with a result of 93.3%. The research of Fengzi et al. [40] applied transfer learning using pretrained models for automatically labeling uploaded photos in the e-commerce industry. The authors reported an accuracy value of 88.65%. Additionally, a condition convolutional neural network (CNN) was proposed by Kolisnik, Hogan and Zulkernine [41], based on branching convolutional neural networks. The proposed branching can predict the hierarchical labels of an image, and the last label predicted in the hierarchy is reported with accuracy of 91.0%.

Table 1 summarizes the aforementioned works, including the used datasets.

Regarding the specific topic of stain detection, to the best of our knowledge, there are no relevant works available in the literature. Nonetheless, stain detection is an important sub-topic of defect detection in clothing and in the textile field; hence, considering a broader approach, i.e., defect detection in clothing and in the textile industry, some research works could be considered for comparative purposes.

C. Li et al. [42] recently presented a survey of fabric defect detection in textile manufacturing, where learning-based algorithms (machine learning and deep learning) have been the most popular methods in recent years. Deep learning-based object detectors are divided into one-stage detectors and two-stage detectors. One-stage detectors such as You Only Look Once (YOLO) [43] and the Single Shot Detector (SSD) [44] are faster but less accurate than two-stage detectors such as Faster R-CNN [45] and Mask R-CNN (region-based convolutional neural network) [46], which are more accurate but slower. Thus, an adequate algorithm must be chosen for the envisioned application. In this first stage, accuracy was prioritized over real-time performance, since the detection of stains is affected by their different sizes, aspects, and locations on clothes.

In sum, there has clearly been great effort to build efficient methods for fashion category classification. However, the aforementioned works (Table 1) did not focus on developing systems to aid visually impaired people, and there is as yet no solution capable of covering all the difficulties experienced by a blind person, namely an automatic system for clothing type identification and stain detection.

5. Conclusions

Blind people experience challenging difficulties regarding clothing and style on a daily basis, something that is often essential to an individual’s identity. Especially regarding stain detection in clothing, blind people need supporting tools to help them to identify when a clothing item has a stain, as cleanliness is often important for a person to feel comfortable and secure in their appearance. Such difficulties are often overcome with the help of family or friends, or others with great organizational capacity. However, for a blind person to feel self-confident in their clothing, the use of technology becomes imperative, namely the use of neural networks.

In this study, an analysis of clothing type identification and stain detection for blind people is presented. Through the transfer learning benchmark results, a deep learning model was demonstrated to be able to identify the clothing type category with up to a 91% F1 score, representing an improvement in comparison to the literature. Data augmentation showed the potential to improve the obtained results even further. Nevertheless, more tests should be performed in order to identify which type of augmented data best fits the model considered in this work.
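For readers less familiar with the F1 score mentioned above, the following minimal sketch shows how a per-class and an averaged F1 score can be computed from predicted and true labels. The function names, the toy labels and the choice of macro averaging are assumptions made for illustration; the averaging scheme behind the reported 91% is not stated in this section.

```python
from typing import Dict, Hashable, Sequence


def f1_per_class(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Per-class F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    result = {}
    for c in set(y_true) | set(y_pred):
        tp = sum(t == c and p == c for t, p in pairs)  # correctly predicted as c
        fp = sum(t != c and p == c for t, p in pairs)  # wrongly predicted as c
        fn = sum(t == c and p != c for t, p in pairs)  # missed instances of c
        denom = 2 * tp + fp + fn
        result[c] = 2 * tp / denom if denom else 0.0
    return result


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of the per-class F1 scores (macro averaging, an assumption here)."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


# Toy example with three hypothetical clothing categories.
toy_true = ["shirt", "shirt", "dress", "dress", "jeans", "jeans"]
toy_pred = ["shirt", "dress", "dress", "dress", "jeans", "shirt"]
```

On the toy labels, the per-class scores are 0.5 for "shirt", 0.8 for "dress" and 2/3 for "jeans", so the macro average is roughly 0.656. Macro averaging weights every category equally, which can matter when some clothing categories have far fewer images than others.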

A pioneering method to detect stains from a given clothing image was also presented based on a dataset comprising clothing with wine and coffee stains. This dataset was built to demonstrate that the proposed deep learning algorithm could accurately detect and locate stains on clothing autonomously. The results were promising and are expected to improve when a larger dataset is used.

This work was somewhat limited by the fact that clothing type recognition was based on clothing worn by models, which can lead to an unwanted loss in the model and to categories that are unnecessary due to their redundancy. As future work, the stains dataset can be enlarged with more data, and the number of categories considered for clothing type detection can be optimized. Additionally, the development of a mobile application and its subsequent validation with the blind community can be performed, allowing these results to be integrated into an automatic wardrobe.

Nevertheless, the overall concept behind this work was fully demonstrated, as a system that can significantly improve the daily lives of blind people was developed and tested, allowing them to automatically recognize clothing and identify stains.