Abstract

Underwater images often suffer from low contrast, low visibility, and color deviation. In this work, we propose a hybrid underwater enhancement method that combines solving the inverse problem of the imaging model, using a novel Retinex transmission map estimation, with adaptive color correction. Retinex transmission map estimation does not rely on channel priors and is decoupled from the unknown background light, thus avoiding the error accumulation problem. To this end, global white balance is performed before the transmission map is estimated with multi-scale Retinex. To further improve enhancement performance, we design an adaptive color correction that chooses between two color correction procedures and prevents channel stretching imbalance. Quantitative and qualitative comparisons between our method and state-of-the-art underwater image enhancement methods demonstrate the superiority of the proposed method. It achieves the best performance in full-reference image quality assessment and also achieves superior performance in the non-reference evaluation.

1. Introduction

Underwater images are an important medium of ocean information. High-quality underwater images are essential for exploring and exploiting coastal waters and the deep sea, in applications such as underwater surveying, target detection, and submarine mineral and marine energy exploration. They are also crucial for the vision systems of Remotely Operated Vehicles (ROVs) and Autonomous Underwater Vehicles (AUVs). In the past few years, underwater image enhancement has drawn much attention in the underwater vision community [1,2]. Owing to the complicated optical propagation and lighting conditions in the underwater environment, enhancing an underwater image is a challenging and ill-posed problem [3]. An underwater image is usually degraded by wavelength-dependent absorption and by scattering, including forward scattering and backscattering. These adverse effects reduce visibility, decrease contrast, and introduce color deviations, hindering the practical application of underwater images in marine exploration.

Because the underwater image formation model is similar to the atmospheric haze image formation model, one line of research adapts the successful Dark Channel Prior (DCP) [4] to underwater image enhancement, for example, the Low-complexity Dark Channel Prior (LDCP) [5] and the Underwater Dark Channel Prior (UDCP) [6]. These works achieve good results in typical situations but may still fail and produce unsatisfactory visual results. One reason is that the underwater dark channel prior and its variants are not always valid when there are white objects or artificial light in the underwater scene. In addition, transmission map estimation in this line of work usually requires knowing the background light. Hence, the error in estimating the background light accumulates into the estimated transmission map, leading to poor restoration.

Another line of research is independent of the physical model and directly modifies image pixel values to improve visual quality. One example is the Fusion [7] method, which blends a contrast-enhanced image and a color-corrected image in a multi-scale fusion strategy. Fusion achieves remarkable results in terms of color and detail recovery. However, it is prone to introducing red artifacts. Recently, deep-learning-based underwater image enhancement methods [3,8] have shown promising results. This category of methods takes a data-driven approach, training deep neural networks directly and usually disregarding underwater imaging models. Despite their success, they often demand heavy computation and large memory, which limits their use in resource-limited application scenarios such as underwater vision for ROVs and AUVs.

In this work, we propose a hybrid underwater enhancement method that combines solving the inverse problem of the underwater imaging model with color correction. Unlike DCP-variant methods, our method does not rely on channel priors. Moreover, it decouples the estimation of the transmission map from the estimation of the background light, avoiding the error accumulation problem. To this end, we perform global white balance before estimating the transmission map and use multi-scale Retinex [9] to obtain a three-channel transmission map. Gaussian filtering is used to obtain the local backscattered light as the background light value. After the inverse problem is solved, an adaptive color correction strategy further improves the enhancement in case the white balance result is unsatisfactory or the local backscattered light estimate is unfaithful.

To demonstrate the superiority of the proposed method, we conduct quantitative and qualitative comparisons between our method and state-of-the-art (SOTA) underwater image enhancement methods on the Underwater Image Enhancement Benchmark (UIEB) [10] dataset. In the quantitative comparison, we perform both full-reference and non-reference evaluation.

The contributions of this paper are summarized as follows:

- A transmission map estimation method based on white balance and multi-scale Retinex is proposed. It does not require the unknown backscattered light and thus avoids the error accumulation problem and the block effect. The calculation is relatively simple, and no refinement by guided filtering [11] is required;

- To deal with unsatisfactory preliminary recovery results in some cases, an adaptive color correction method is proposed, which chooses between two color correction procedures so as to prevent channel stretching imbalance;

- We conduct comprehensive quantitative and qualitative experiments comparing our method with SOTA underwater image enhancement methods. Experimental results demonstrate the superiority of the proposed method, which achieves the best performance in full-reference image quality assessment and also achieves superior performance in the non-reference evaluation.

2. Related Works

McGlamery’s study [12] showed that, in the underwater medium, the total radiation reaching the observer consists of three components: the direct component $E_d$, the forward-scattering component $E_f$, and the backscattering component $E_b$. The direct component is the portion of the light reflected from the target scene that is attenuated before reaching the observer. At each pixel $x$ of the image, the direct component can be expressed as:

$$E_d^c(x) = J^c(x)\, e^{-\beta^c d(x)}, \tag{1}$$

where $J^c(x)$ is the radiation of the object at $x$, $c \in \{r, g, b\}$ refers to the color channel in the RGB color space, $d(x)$ is the distance from the object to the observer, and $\beta^c$ is the attenuation coefficient. $\beta^c$ represents the absorption and scattering of light as it propagates in the underwater medium, and light of different wavebands has different attenuation coefficients. $e^{-\beta^c d(x)}$ is called the transmission map of the underwater medium.

The backscattering component, also known as veiling light, is the prime cause of contrast loss and color cast in underwater images. It can be expressed as:

$$E_b^c(x) = B^c \left(1 - e^{-\beta^c d(x)}\right), \tag{2}$$

where $B^c$ is called the backscattered light or background light.

Because the forward-scattering component has little effect on image distortion, it can be ignored. Therefore, most underwater image restoration methods [13,14,15] use the following simplified underwater imaging model:

$$I^c(x) = J^c(x)\, t^c(x) + B^c \left(1 - t^c(x)\right), \tag{3}$$

where $t^c(x) = e^{-\beta^c d(x)}$, and $I^c$ is the corresponding color channel of the observed image $I$. Image restoration aims to recover the scene radiation $J$ from the observed image $I$. If $t^c(x)$ and $B^c$ have been estimated and substituted into Equation (3), the recovery formula of the underwater image is obtained as follows:

$$J^c(x) = \frac{I^c(x) - B^c}{t^c(x)} + B^c. \tag{4}$$
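The forward model in Equation (3) and its inversion in Equation (4) can be checked numerically. The following NumPy sketch is an illustration only: the attenuation coefficients `beta`, the distances `d`, and the background light `B` are hypothetical values, and the lower bound `t_min` on the transmission is a common safeguard assumed here, not part of Equation (4). Because `B` is broadcast, the same `recover` function also accepts a per-pixel backscattered light map.

```python
import numpy as np


def synthesize(J, t, B):
    """Simplified imaging model, Eq. (3): I = J * t + B * (1 - t).

    J: scene radiance, shape (H, W, 3), values in [0, 1].
    t: per-channel transmission map, shape (H, W, 3).
    B: background light, a length-3 vector (global) or an (H, W, 3) map (local).
    """
    return J * t + B * (1.0 - t)


def recover(I, t, B, t_min=0.1):
    """Recovery formula, Eq. (4): J = (I - B) / t + B.

    t is clamped from below by t_min (an assumed safeguard) so that a
    near-zero transmission does not blow up noise.
    """
    t = np.maximum(t, t_min)
    return (I - B) / t + B


rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 4, 3))
beta = np.array([0.6, 0.2, 0.1])           # hypothetical attenuation coefficients (r, g, b)
d = rng.uniform(1.0, 3.0, size=(4, 4, 1))  # hypothetical object-to-camera distances
t = np.exp(-beta * d)                      # t^c(x) = exp(-beta^c d(x)); red decays fastest
B = np.array([0.1, 0.5, 0.6])              # hypothetical bluish-green background light

I = synthesize(J, t, B)
J_hat = recover(I, t, B, t_min=0.0)
print(np.allclose(J_hat, J))  # True
```

With exact `t` and `B` the round trip is lossless; in practice both are estimates, which is why errors in `B` propagate directly into the recovered radiance.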

Recovering an underwater image with Equation (4) is an ill-posed problem. Thus, priors are commonly used to estimate the unknown transmission map and backscattered light, and many methods based on the dark channel prior or its variants have emerged in recent years. Yang et al. [5] proposed an efficient, low-complexity underwater image enhancement method based on the dark channel prior, which employed a median filter instead of the soft matting procedure to estimate the transmission map. However, since the original DCP was designed for atmospheric image dehazing, the transmission map obtained by DCP was inaccurate when there were white objects or artificial light in the underwater scene. UDCP [6] used only the green and blue channels to estimate the transmission map and obtained a more accurate underwater transmission map than the original DCP. However, its biased transmission estimates darken the final restored image. Peng et al. [16] proposed a Generalized Dark Channel Prior (GDCP) for image restoration, which first estimated the ambient light using depth-dependent color change and then estimated the scene transmission. Song et al. [17] proposed a transmission map optimization method based on statistical model-based background light estimation and the underwater light attenuation prior, which better recovered the details of underwater images. However, this method could not obtain an accurate background light value for some close-range images, resulting in unsatisfactory visual effects. Wang et al. [18] proposed an underwater image restoration method based on an adaptive attenuation-curve prior, which relied on the statistical distribution of pixel values.

Since the scene depth map is very important for estimating the transmission map and the background light intensity, Song et al. [19] proposed a rapid and effective scene depth estimation model based on the underwater light attenuation prior (ULAP). In this method, the coarse transmission map was calculated over local patches of the underwater image, resulting in blocking artifacts in the transmission map. To solve this problem, a guided filter [11] was used to refine the coarse transmission map, which is computationally expensive. Peng et al. [20] proposed a depth estimation method based on image blurriness and light absorption, which can be used in the image formation model to restore and enhance underwater images.

Physical-model-independent methods, such as the well-known Fusion [7], directly modify image pixel values to improve visual quality. Ghani et al. [21] presented a method dubbed dual-image Rayleigh-stretched contrast-limited adaptive histogram specification, which integrated global and local contrast correction. Huang et al. [22] proposed a Relative Global Histogram Stretching (RGHS) method, which obtained dynamic parameters from the original image. It stretched and adjusted each channel, used bilateral filtering to suppress noise and enhance detail information, and finally corrected colors. RGHS achieves an excellent visual effect on many blurred underwater images, but it does not solve the color deviation problem.

Underwater image datasets are crucial to the development and evaluation of underwater image enhancement methods. Li et al. [10] presented the UIEB dataset, which contains 890 real-world underwater images with corresponding reference images, of which 800 pairs are used for training and 90 pairs for testing. Based on UIEB, they proposed a deep-learning-based underwater image enhancement network, named Water-Net, to demonstrate the potential of the dataset. Despite its effectiveness, Water-Net is a convolutional neural network and is not always suitable for power- or memory-limited devices. Recently, neural networks have also been used to synthesize diverse underwater scenes and to build a neural rendering underwater dataset [2].

3. Proposed Underwater Image Enhancement Method

The proposed method follows the recovery formula in Equation (4), which requires the transmission map and the backscattered light to be estimated before the recovery calculation. In addition, adaptive color correction is subsequently applied to deal with any low contrast and color shifts remaining in the output of the preliminary recovery with Equation (4). Thus, the proposed underwater image enhancement method consists of four steps: Retinex transmission map estimation, local backscattered light estimation, preliminary recovery, and adaptive color correction.

3.1. Retinex Transmission Map Estimation

In most underwater image restoration methods [4,15], the backscattered light $B^c$ is needed to estimate the medium transmission map $t^c(x)$. Consequently, the error in estimating $B^c$ accumulates into the estimate of $t^c(x)$. If a minimum filter is used to estimate $t^c(x)$, the estimated transmission map has to be refined with the guided filter to reduce the block effect, which adds computational burden. Our transmission map estimation method is designed to avoid these problems.

We begin by deriving $t^c(x)$ from Equation (3):

$$t^c(x) = \frac{B^c - I^c(x)}{B^c - J^c(x)}. \tag{5}$$

To use the above relationship to estimate the medium transmission map without knowing $B^c$ in advance, we first perform global white balance on the input image $I$, obtaining the white-balanced version $I_{wb}$. After this step, no chromatic component dominates the underwater image, so the operation can be regarded as fixing the background light to an achromatic value $B_a$, identical in all three channels. In addition, we assume that the imaging model in Equation (3) remains valid for $I_{wb}$ under this achromatic background light, but with a color-shifted scene radiance, denoted by $J'$, in place of that of the original underwater target scene. Note that $t^c(x)$ remains the same, since it is independent of the scene radiance.

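Thus, the transmission map can be derived as in Equation (5) using the white-balanced image $I_{wb}$, the achromatic background light $B_a$, and the new scene radiance $J'$:

$$t^c(x) = \frac{B_a - I_{wb}^c(x)}{B_a - J'^c(x)}. \tag{6}$$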

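In Equation (6), $J'$ is unknown; it embodies the fictitious change of the target scene caused by the white balance operation. Rewriting Equation (6) as $B_a - I_{wb}^c(x) = \left(B_a - J'^c(x)\right) t^c(x)$ shows that the inverted white-balanced image is the product of a reflectance-like term and $t^c(x)$. Fortunately, since $t^c(x)$ is piecewise smooth, multi-scale Retinex can be used to estimate $J'$ [23]:

$$B_a - J'^c(x) = \mathcal{R}^c\left(B_a - I_{wb}\right)(x), \tag{7}$$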

where $\mathcal{R}$ represents the multi-scale Retinex operator presented in [9], which computes a triplet of lightness values for each pixel, and $\mathcal{R}^c$ denotes its $c$ channel.

Therefore, the transmission map can be obtained as follows:

$$t^c(x) = \frac{B_a - I_{wb}^c(x)}{\mathcal{R}^c\left(B_a - I_{wb}\right)(x)}. \tag{8}$$
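The Retinex transmission map can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: intensities are normalized to [0, 1], the achromatic background light is taken as $B_a = 1$, $\mathcal{R}$ is a plain multi-scale Retinex without the gain/offset normalization many MSR implementations add, and the Gaussian scales, `t_min`, `eps`, and helper names are hypothetical. The naive separable blur is only practical for small images and small scales.

```python
import numpy as np


def gaussian_blur(channel, sigma):
    """Separable Gaussian low-pass filter of a 2-D array, with edge padding."""
    radius = max(1, int(3 * sigma))
    xs = np.arange(-radius, radius + 1)
    kernel = np.exp(-(xs**2) / (2.0 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(channel, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def msr_reflectance(X, sigmas, eps=1e-6):
    """Plain multi-scale Retinex: log R = mean_s [log X - log(G_s * X)], per channel.

    Returned in the linear domain, without gain/offset normalization (assumption).
    """
    log_r = np.zeros_like(X, dtype=np.float64)
    for sigma in sigmas:
        for c in range(X.shape[2]):
            blurred = gaussian_blur(X[..., c], sigma)
            log_r[..., c] += np.log(X[..., c] + eps) - np.log(blurred + eps)
    return np.exp(log_r / len(sigmas))


def retinex_transmission(I_wb, sigmas, B_a=1.0, t_min=0.05, eps=1e-6):
    """Eq. (8): t^c = (B_a - I_wb^c) / R^c(B_a - I_wb), with B_a = 1 assumed."""
    X = np.clip(B_a - I_wb, 0.0, None)   # inverted image, equal to (B_a - J') * t by Eq. (6)
    R = msr_reflectance(X, sigmas, eps)  # Eq. (7): Retinex estimate of B_a - J'
    t = X / np.maximum(R, eps)
    return np.clip(t, t_min, 1.0)        # keep t in a usable range for Eq. (4)


rng = np.random.default_rng(1)
I_wb = rng.uniform(0.2, 0.8, size=(16, 16, 3))
t = retinex_transmission(I_wb, sigmas=(1, 3, 5))  # toy scales for a 16 x 16 image
print(t.shape)  # (16, 16, 3)
```

With this un-normalized MSR, the ratio in Equation (8) reduces (away from `eps`) to the geometric mean of the Gaussian-smoothed inverted image over the scales, which makes the role of $t^c(x)$ as the smooth "illumination" term explicit. No estimate of $B^c$ enters the computation.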

Compared with other transmission map estimation methods, the proposed method avoids using the estimated $B^c$ to estimate the medium transmission map and suffers no block effect, so its calculation is relatively simple. The obtained transmission map is shown in Figure 1. As mentioned earlier, we use white balance to change the scene chromaticity of the input image before estimating the medium transmission map. We adopt the gray world white balance algorithm [24]. The algorithm first computes the average values of $I^r$, $I^g$, and $I^b$, denoted by $\bar{I}^r$, $\bar{I}^g$, and $\bar{I}^b$, from which the gray average value of the entire image is obtained as $\bar{I} = (\bar{I}^r + \bar{I}^g + \bar{I}^b)/3$. Finally, white balance is performed with the following formula:

$$I_{wb}^c(x) = k^c\, I^c(x), \quad c \in \{r, g, b\}, \tag{9}$$

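where $k^r$, $k^g$, and $k^b$ are the gains of the three channels: $k^r = \bar{I}/\bar{I}^r$, $k^g = \bar{I}/\bar{I}^g$, and $k^b = \bar{I}/\bar{I}^b$.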
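The gray world white balance of Equation (9) takes only a few lines. This sketch assumes a floating-point image in [0, 1]; the small `eps` guard against empty channels and the final clipping to [0, 1] are assumptions of the sketch, not part of the algorithm in [24].

```python
import numpy as np


def gray_world_white_balance(I, eps=1e-6):
    """Gray world white balance, Eq. (9): I_wb^c = k^c * I^c.

    I: float image of shape (H, W, 3) with values in [0, 1].
    Returns the balanced image (clipped to [0, 1], an assumption) and the gains k^c.
    """
    channel_means = I.reshape(-1, 3).mean(axis=0)       # mean of I^r, I^g, I^b
    gray_mean = channel_means.mean()                    # (mean_r + mean_g + mean_b) / 3
    gains = gray_mean / np.maximum(channel_means, eps)  # k^c = gray mean / channel mean
    return np.clip(I * gains, 0.0, 1.0), gains


# A uniformly greenish toy image: r = 0.1, g = 0.5, b = 0.3
I = np.ones((2, 2, 3)) * np.array([0.1, 0.5, 0.3])
I_wb, gains = gray_world_white_balance(I)
print(gains)  # approximately [3.0, 0.6, 1.0]
```

After balancing, every channel of the toy image equals the gray mean (0.3), i.e., no chromatic component dominates, which is the condition the Retinex transmission estimate relies on.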

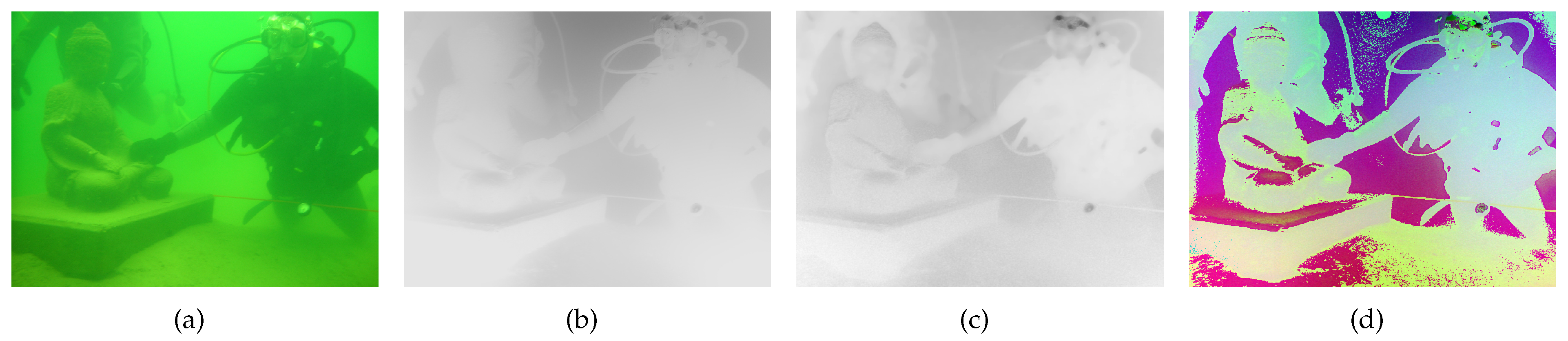

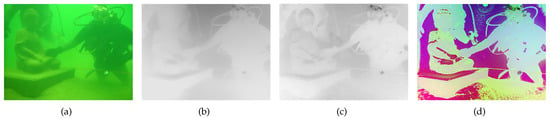

Figure 1.

(a) An underwater image; (b) transmission map estimated by UDCP; (c) transmission map estimated by LDCP; (d) transmission map estimated by the proposed algorithm.

3.2. Local Backscattered Light Estimation

The underwater medium is rich in suspended particles, which scatter incident light and can therefore be regarded as weak light sources. The light scattered by these particles toward the observer constitutes the backscattered component. Most underwater restoration methods assume that the medium and illumination are uniform and estimate a global backscattered light. However, common underwater scenes often have non-uniform illumination and medium, so estimating a global backscattered light is unsuitable [14]. Moreover, when the scene is so deep that artificial lighting is needed, the estimation error of a global backscattered light becomes even more prominent. Therefore, estimating a local backscattered light adapts better to the underwater environment.

We use the method of [25] to estimate the local backscattered light. Given an underwater image $I$, the distance between the camera and the local patch around pixel $x$ is assumed to be uniform. The backscattered light is then obtained by Gaussian low-pass filtering of the observed underwater image:

$$B^c(x) = \sum_{y \in \Omega(x)} G(x - y)\, I^c(y) = \left(G \otimes I^c\right)(x), \tag{10}$$

where $G$ is the Gaussian function, $\otimes$ is the convolution operation, and $\Omega(x)$ is a local window around pixel $x$. Using a local backscattered light ultimately yields better detail recovery.
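Equation (10) amounts to a per-channel Gaussian low-pass filter. In this sketch the window $\Omega(x)$ is taken as the square of radius $3\sigma$ around $x$, image borders are handled by edge replication, and the value of `sigma` is hypothetical; the window size actually used by [25] is not specified here.

```python
import numpy as np


def gaussian_blur(channel, sigma):
    """Separable Gaussian low-pass filter; Omega(x) is the (2r+1) x (2r+1) window, r = 3 * sigma."""
    radius = max(1, int(3 * sigma))
    xs = np.arange(-radius, radius + 1)
    kernel = np.exp(-(xs**2) / (2.0 * sigma**2))
    kernel /= kernel.sum()  # normalized weights G(x - y)
    padded = np.pad(channel, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def local_backscattered_light(I, sigma):
    """Eq. (10): B^c(x) = (G * I^c)(x), evaluated independently for each channel."""
    return np.stack([gaussian_blur(I[..., c], sigma) for c in range(I.shape[2])], axis=-1)


rng = np.random.default_rng(2)
I = rng.uniform(0.0, 1.0, size=(32, 32, 3))
B = local_backscattered_light(I, sigma=4.0)  # hypothetical sigma
print(B.shape)  # (32, 32, 3)
```

Unlike a single global value, `B` varies smoothly across the image, so it can follow non-uniform illumination such as the falloff of an artificial light source.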

3.3. Preliminary Recovery

After estimating the medium transmission map $t^c(x)$ and the local backscattered light $B^c(x)$ with the above techniques, we substitute them into Equation (4) to perform a preliminary recovery of the scene radiance $J^c(x)$.

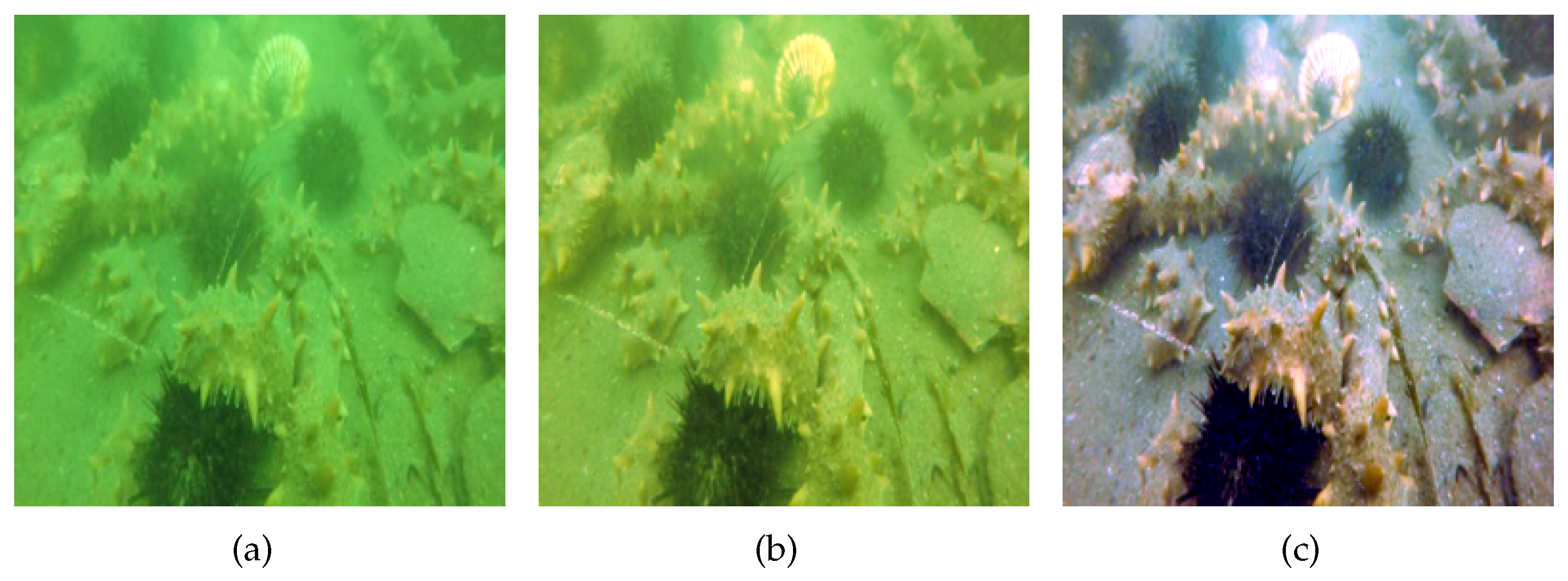

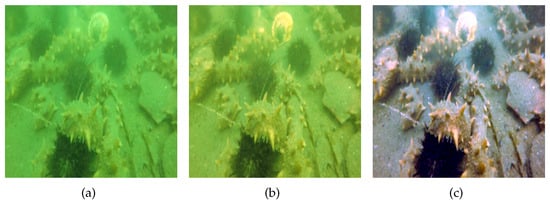

However, the recovered $J^c(x)$ at this stage may still suffer from low contrast and color shifts in some cases, owing to unsatisfactory white balance results or unfaithful local backscattered light estimation. One such case is shown in Figure 2.

Figure 2.

Example of unsuccessful preliminary recovery result and the final adaptive color correction result. (a) the original image; (b) preliminary recovery result; (c) adaptive color correction result produced by the proposed method.

3.4. Adaptive Color Correction

In order to deal with possible low contrast and color shifts in some cases, we propose an adaptive color correction step to alleviate these problems and improve the enhancement result.

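The basic color correction formula is given by

$$\hat{J}^c(x) = \alpha^c \left(J^c(x) - J^c_{\min}\right), \qquad \alpha^c = \frac{1}{J^c_{\max} - J^c_{\min}}, \tag{11}$$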

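where $J^c_{\max}$ and $J^c_{\min}$ are the upper and lower stretching parameters of channel $c$, and $\alpha^c$ is the stretch coefficient. There are two popular choices for the stretching parameters $J^c_{\max}$ and $J^c_{\min}$. The first directly uses the maximum and minimum channel intensities, $\max_x J^c(x)$ and $\min_x J^c(x)$, while the second considers second-order statistics of the channel intensity [26]:

$$J^c_{\max} = \mu^c + \lambda \sigma^c, \qquad J^c_{\min} = \mu^c - \lambda \sigma^c, \tag{12}$$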

where $\mu^c$ is the mean value of channel $c$, $\sigma^c$ is its standard deviation, and $\lambda$ is an adjustment hyper-parameter, usually set between 2 and 3.

We investigated both choices. Empirically, we found that neither produces good results in all situations; both introduce unwanted artifacts into the corrected results in some cases. However, they can compensate for each other. Specifically, artifacts tend to occur when one channel is over-enhanced relative to the other two, regardless of which choice of stretching parameters is used. In terms of Equation (11), artifacts tend to occur when the stretch coefficient of one channel is much larger than those of the other two channels.

Therefore, we propose an adaptive color correction strategy that uses the variance of the three channel stretch coefficients to indicate the degree of channel stretching imbalance. By comparing the variances of $\alpha^c$ under the two aforementioned choices, the strategy selects the choice with the smaller variance, as detailed in Algorithm 1.

Algorithm 1. Adaptive color correction.

Input: preliminary recovery result. Output: adaptive color correction result.
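Algorithm 1 can be sketched from the description above: compute the stretch coefficients $\alpha^c$ for both choices of stretching parameters, keep the choice whose coefficients have the smaller variance across the three channels, and apply Equation (11). This is an illustration, not the authors' code; $\lambda = 2.5$, tie-breaking in favor of the min/max choice, the `eps` guard, and clipping the output to [0, 1] are all assumptions.

```python
import numpy as np


def stretch_params_minmax(J):
    """Choice 1: per-channel minimum and maximum intensities."""
    flat = J.reshape(-1, 3)
    return flat.min(axis=0), flat.max(axis=0)


def stretch_params_meanstd(J, lam=2.5):
    """Choice 2, Eq. (12): mu^c -/+ lambda * sigma^c."""
    flat = J.reshape(-1, 3)
    mu, sigma = flat.mean(axis=0), flat.std(axis=0)
    return mu - lam * sigma, mu + lam * sigma


def adaptive_color_correction(J, lam=2.5, eps=1e-6):
    """Select the stretching parameters whose alpha^c have the smaller variance,
    then apply Eq. (11). Returns the corrected image and the chosen index (0 or 1).
    """
    candidates = [stretch_params_minmax(J), stretch_params_meanstd(J, lam)]
    alphas = [1.0 / np.maximum(hi - lo, eps) for lo, hi in candidates]  # alpha^c per choice
    variances = [np.var(a) for a in alphas]  # channel stretching imbalance
    choice = int(np.argmin(variances))       # argmin keeps the first index on ties
    j_min, _ = candidates[choice]
    out = np.clip(alphas[choice] * (J - j_min), 0.0, 1.0)
    return out, choice


# Toy input: red is bimodal, green and blue are evenly spread over [0, 1].
r = np.repeat([0.0, 1.0], 500)
g = np.linspace(0.0, 1.0, 1000)
J = np.stack([r, g, g], axis=-1).reshape(40, 25, 3)
J_hat, choice = adaptive_color_correction(J)
print(choice)  # 0: all min/max ranges equal 1, so their alpha^c have zero variance
```

Here the mean/std statistics would stretch red much less than green and blue, so the strategy keeps the min/max parameters; a channel with a few extreme outliers produces the opposite situation, and choice 1 is selected instead.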

4. Results

To demonstrate the advantages of the proposed underwater image enhancement method with Retinex transmission map and adaptive color correction, we compare it against several state-of-the-art underwater image enhancement methods. The experiments are conducted on the UIEB dataset [10], the first widely used real-world underwater image dataset with reference images. UIEB includes 890 raw underwater images with corresponding high-quality reference images, of which 800 pairs are used for training in data-driven learning and 90 pairs for testing. Since the proposed method is not learning-based, we conducted experiments only on the test set.

4.1. Quantitative Results

To quantitatively evaluate the performance of different methods, we performed both non-reference and full-reference evaluation; the latter is possible because the UIEB test set provides reference images.

Here, we briefly introduce the image quality assessment metrics used in quantitative evaluations. For full-reference evaluation, we used two popular metrics, Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) [27]. A higher PSNR score means that the enhancement result is closer to the reference image in terms of image pixel values, while a higher SSIM score indicates that the result is more similar to the reference image in terms of image structure and texture. By using the reference images, PSNR and SSIM metrics provide direct evaluation of the enhancement performance to some extent, although the real ground truth images might be different from the reference images.
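For reference, PSNR is $10\log_{10}(\mathrm{peak}^2/\mathrm{MSE})$. A minimal NumPy version is shown below, assuming both images are scaled to [0, 1] (so `peak = 1.0`); SSIM is usually taken from an existing implementation, such as `skimage.metrics.structural_similarity` in scikit-image, rather than written by hand.

```python
import numpy as np


def psnr(result, reference, peak=1.0):
    """Peak Signal-to-Noise Ratio in dB for images scaled to [0, peak]."""
    diff = np.asarray(result, dtype=np.float64) - np.asarray(reference, dtype=np.float64)
    mse = np.mean(diff**2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak**2 / mse)


ref = np.zeros((8, 8, 3))
out = np.full((8, 8, 3), 0.1)  # uniform error of 0.1 -> MSE = 0.01
print(round(psnr(out, ref), 2))  # 20.0
```

Because PSNR depends only on the mean squared pixel error, it rewards closeness to the reference values, which is why it is paired with SSIM to capture structural similarity.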

Underwater image quality was also evaluated with two commonly used non-reference metrics: Underwater Color Image Quality Evaluation (UCIQE) [28] and Underwater Image Quality Measurement (UIQM) [29]. UCIQE quantifies non-uniform color casts, blurring, and low contrast, and combines these three components linearly. A higher UCIQE score means that the result has a better balance among chroma, saturation, and contrast. UIQM comprises three underwater image attribute measures: colorfulness, sharpness, and contrast. A higher UIQM score indicates that the result is more consistent with human visual perception.

We compared the proposed method with six typical and SOTA underwater image enhancement methods: Fusion [7], RGHS [22], UDCP [6], GDCP [16], ULAP [19], and Peng et al. [20]. Among them, Fusion and RGHS are independent of physical models; UDCP and GDCP rely on DCP-variant priors; and ULAP and Peng et al. are physical-model-based methods that use other priors. Together, they form a representative group of traditional underwater enhancement methods. The quantitative results of both full-reference and non-reference evaluation are listed in Table 1.

Table 1.

Quantitative full-reference evaluation and non-reference evaluation of the proposed method and six other SOTA underwater image enhancement methods. The best results in terms of each metric are shown in bold.

In Table 1, the proposed underwater image enhancement method with Retinex transmission map and adaptive color correction obtains the highest PSNR, SSIM, and UIQM scores. In terms of UCIQE, it shares the highest score with the Fusion, RGHS, and ULAP methods. Thus, our method achieves the best performance in full-reference image quality assessment and also achieves superior performance in the non-reference evaluation.

4.2. Qualitative Results

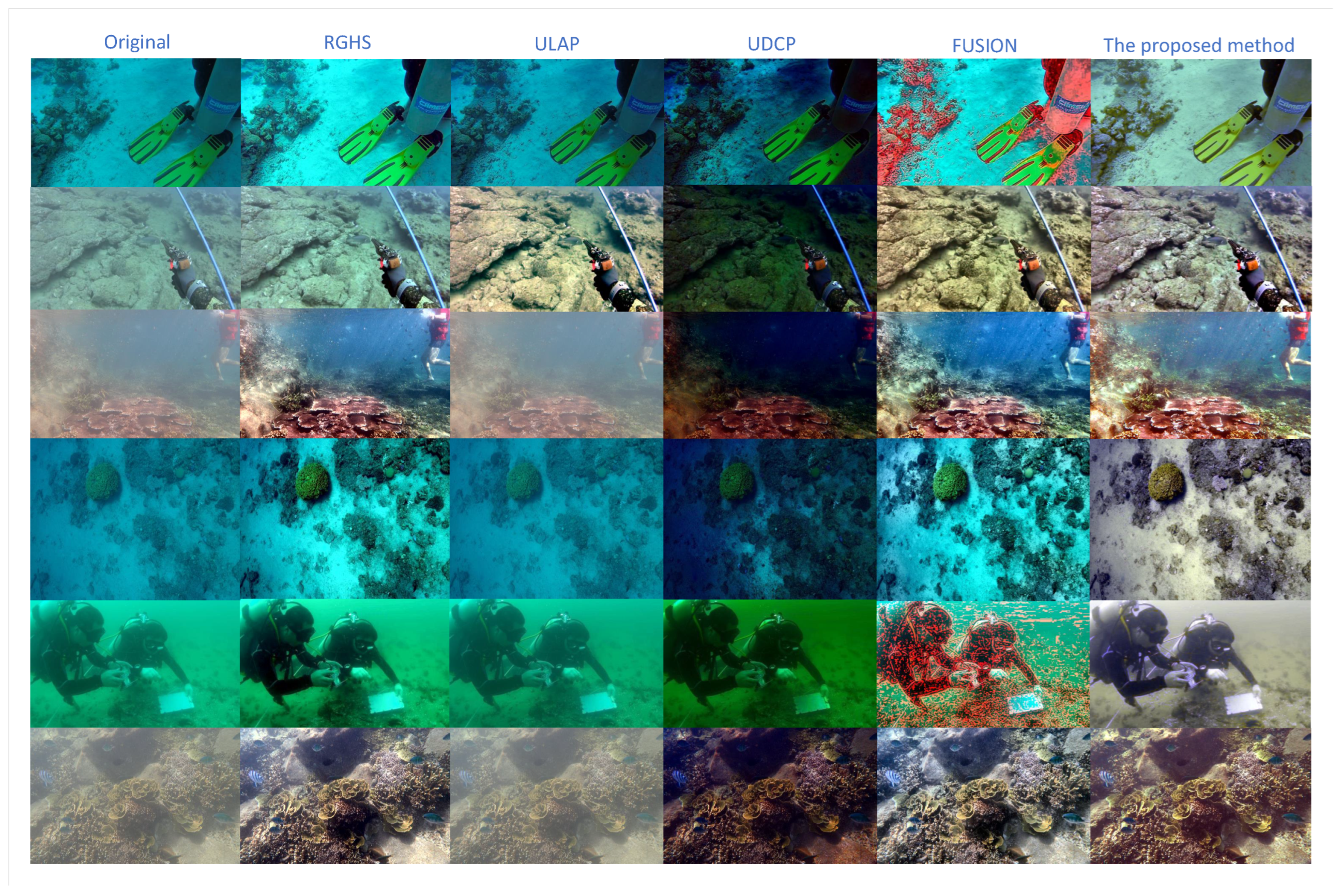

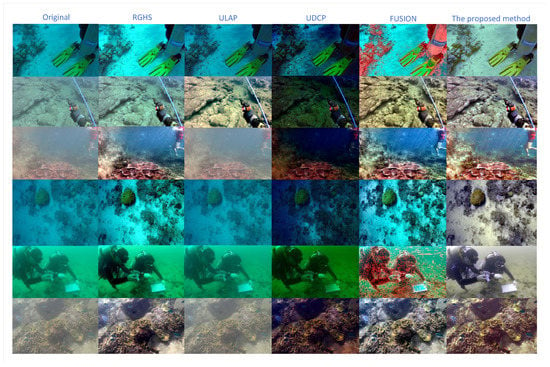

We also conducted a qualitative evaluation of the proposed method and other SOTA methods on the UIEB test dataset. Some sample images and their corresponding enhanced results are shown in Figure 3.

Figure 3.

Visual comparison of the enhanced outputs produced by other SOTA underwater image enhancement methods and the proposed method. Original images are from the UIEB test dataset.

These samples show that the proposed method produces visually pleasing images. For greenish and bluish underwater images, the proposed method removes these unpleasant color deviations. This capability is important because greenish or bluish color deviations are common in underwater images, especially those taken in open water, since red light attenuates first, followed by green light and then blue light. For low-contrast underwater images, the proposed method substantially removes the backscattered light, leading to clear results.

Compared with other underwater image enhancement methods, the proposed method produces results with higher visibility and fewer artifacts, demonstrating the effectiveness of Retinex transmission map estimation and adaptive color correction.

4.3. Improvements over Multi-Scale Retinex

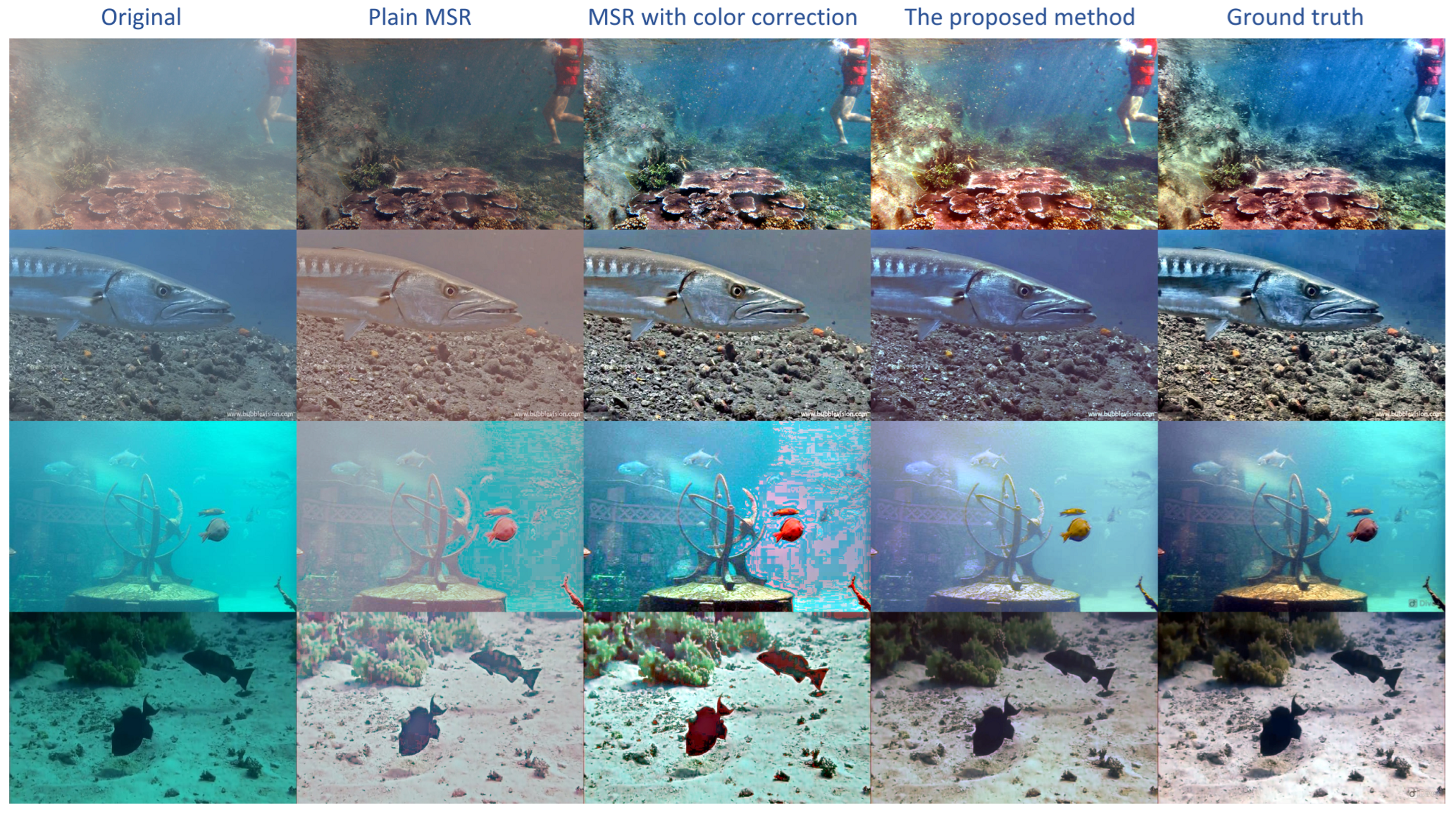

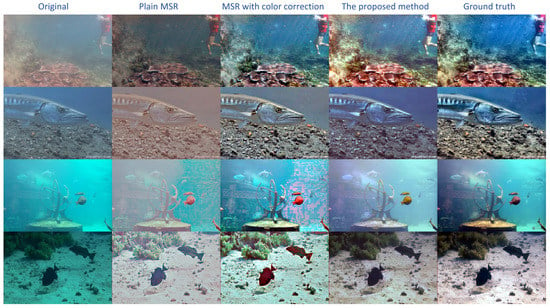

The proposed method uses multi-scale Retinex (MSR) in the estimation of transmission map. Since multi-scale Retinex is itself an image enhancement method, we conducted comparison experiments to demonstrate that the proposed method offers improvements over MSR.

We compared three methods: (1) the plain MSR [9] used in Retinex transmission map estimation; (2) MSR with color correction [30]; and (3) the proposed method. Note that the plain MSR used in Retinex transmission map estimation does not include the color restoration step presented in [9], whereas MSR with color correction carries out the color restoration step as well as a final color balance step. The UIEB [10] test dataset was again used for evaluation. Quantitative results for the PSNR, SSIM, UCIQE, and UIQM metrics are listed in Table 2. The proposed method surpasses the other two methods in terms of PSNR and UCIQE.

Table 2.

Quantitative comparison of two MSR-based methods and the proposed method. The best results in terms of each metric are shown in bold. The UIEB test dataset was used for evaluation.

To compare these three methods qualitatively, the enhanced outputs for some test samples are given in Figure 4, along with the original images and the corresponding ground truth images. Plain MSR tends to produce low-contrast results. MSR with color correction performs better than plain MSR; however, we empirically observed that for several test samples it introduces reddish artifacts into the enhanced results. Two such cases are shown in the last two rows of Figure 4. Unlike the other two methods, the proposed method performs MSR on the white-balanced image rather than directly on the original image, and uses it only to estimate the transmission map. In addition, because it is based on the underwater imaging model and applies adaptive color correction, it is more robust when dealing with complex degradations in underwater scenes.

Figure 4.

Visual comparison of the enhanced outputs produced by two MSR-based methods and the proposed method. Original images and their corresponding ground truth images are from UIEB test dataset.

5. Conclusions

In this work, we proposed a robust underwater image enhancement method based on a novel Retinex transmission map estimation and adaptive color correction. By exploiting white balance and Retinex operations, Retinex transmission map estimation does not depend on the unknown backscattered light. Adaptive color correction chooses suitable stretching parameters to avoid channel stretching imbalance. Quantitative evaluation demonstrates that our method outperforms other SOTA methods in terms of full-reference metrics and also achieves superior performance in the non-reference evaluation. In visual comparison, the proposed method produces results with higher visibility and fewer artifacts.

Despite these advantages, our method may fail in some rare cases. Since we use only a simple gray world white balance algorithm, the proposed method may produce poor enhancement if white balance fails. In future work, we will investigate more robust white balance algorithms to improve enhancement stability.

Author Contributions

Conceptualization, E.C. and T.Y.; methodology, E.C. and T.Y.; software, T.Y. and Q.C.; validation, T.Y. and Q.C.; formal analysis, B.H.; investigation, B.H.; resources, T.Y.; data curation, Q.C.; writing—original draft preparation, T.Y.; writing—review and editing, E.C. and Y.H.; project administration, Y.H.; funding acquisition, E.C. and Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Fujian Province of China under Grant (2021J01867), Education Department of Fujian Province under Grant (JAT190301), and the Foundation of Jimei University under Grant (ZP2020034).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: https://li-chongyi.github.io/proj_benchmark.html, accessed on 1 March 2022.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AUV | Autonomous Underwater Vehicle |
| DCP | Dark Channel Prior |
| GDCP | Generalized Dark Channel Prior |
| LDCP | Low-complexity Dark Channel Prior |
| MSR | Multi-Scale Retinex |
| PSNR | Peak Signal-to-Noise Ratio |
| RGHS | Relative Global Histogram Stretching |
| ROV | Remotely Operated Vehicle |
| SOTA | State-of-the-art |
| SSIM | Structural Similarity Index Measure |
| UCIQE | Underwater Color Image Quality Evaluation |
| UDCP | Underwater Dark Channel Prior |
| UIEB | Underwater Image Enhancement Benchmark |
| UIQM | Underwater Image Quality Measurement |
| ULAP | Underwater Light Attenuation Prior |

References

- Chen, S.; Chen, E.; Ye, T.; Xue, C. Robust backscattered light estimation for underwater image enhancement with polarization. Displays 2022, 75, 102296. [Google Scholar] [CrossRef]

- Ye, T.; Chen, S.; Liu, Y.; Ye, Y.; Chen, E.; Li, Y. Underwater Light Field Retention: Neural Rendering for Underwater Imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 488–497. [Google Scholar]

- Zhang, D.; Shen, J.; Zhou, J.; Chen, E.; Zhang, W. Dual-path joint correction network for underwater image enhancement. Opt. Express 2022, 30, 33412–33432. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353. [Google Scholar]

- Yang, H.Y.; Chen, P.Y.; Huang, C.C.; Zhuang, Y.Z.; Shiau, Y.H. Low complexity underwater image enhancement based on dark channel prior. In Proceedings of the 2011 Second International Conference on Innovations in Bio-Inspired Computing and Applications, Shenzhen, China, 16–18 December 2011; pp. 17–20. [Google Scholar]

- Drews, P.; Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission estimation in underwater single images. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 825–830. [Google Scholar]

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88. [Google Scholar]

- Liu, R.; Jiang, Z.; Yang, S.; Fan, X. Twin Adversarial Contrastive Learning for Underwater Image Enhancement and Beyond. IEEE Trans. Image Process. 2022, 31, 4922–4936. [Google Scholar] [CrossRef] [PubMed]

- Jobson, D.J.; Rahman, Z.u.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976. [Google Scholar] [CrossRef] [PubMed]

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389. [Google Scholar] [CrossRef]

- He, K.; Sun, J.; Tang, X. Guided image filtering. In Proceedings of the European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 1–14. [Google Scholar]

- McGlamery, B. A computer model for underwater camera systems. In Ocean Optics VI; SPIE: Bellingham, WA, USA, 1980; Volume 208, pp. 221–231. [Google Scholar]

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145. [Google Scholar] [CrossRef]

- Ancuti, C.; Ancuti, C.O.; De Vleeschouwer, C.; Garcia, R.; Bovik, A.C. Multi-scale underwater descattering. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 4202–4207. [Google Scholar]

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Song, W.; Wang, Y.; Huang, D.; Liotta, A.; Perra, C. Enhancement of underwater images with statistical model of background light and optimization of transmission map. IEEE Trans. Broadcast. 2020, 66, 153–169.

- Wang, Y.; Liu, H.; Chau, L.P. Single underwater image restoration using adaptive attenuation-curve prior. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 65, 992–1002.

- Song, W.; Wang, Y.; Huang, D.; Tjondronegoro, D. A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In Proceedings of the Pacific Rim Conference on Multimedia, Hefei, China, 21–22 September 2018; pp. 678–688.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

- Ghani, A.S.A.; Isa, N.A.M. Enhancement of low quality underwater image through integrated global and local contrast correction. Appl. Soft Comput. 2015, 37, 332–344.

- Huang, D.; Wang, Y.; Song, W.; Sequeira, J.; Mavromatis, S. Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In Proceedings of the International Conference on Multimedia Modeling, Bangkok, Thailand, 5–7 February 2018; pp. 453–465.

- Galdran, A.; Alvarez-Gila, A.; Bria, A.; Vazquez-Corral, J.; Bertalmío, M. On the duality between retinex and image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8212–8221.

- Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 1980, 310, 1–26.

- Yang, M.; Sowmya, A.; Wei, Z.; Zheng, B. Offshore underwater image restoration using reflection-decomposition-based transmission map estimation. IEEE J. Ocean. Eng. 2019, 45, 521–533.

- Fu, X.; Zhuang, P.; Huang, Y.; Liao, Y.; Zhang, X.P.; Ding, X. A retinex-based enhancing approach for single underwater image. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4572–4576.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yang, M.; Sowmya, A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2015, 41, 541–551.

- Petro, A.B.; Sbert, C.; Morel, J.M. Multiscale retinex. Image Process. Line 2014, 4, 71–88.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).