1. Introduction

Cancer, defined as the uncontrolled and disruptive growth of abnormal body cells, is one of the major causes of death globally. Tumors are broadly classified as benign or malignant. Benign tumors are usually non-cancerous and grow extremely slowly. Malignant tumors, on the other hand, grow quickly, are dangerous, and spread to other parts of the body via the bloodstream [1]. Among the cancers commonly found in women are lung, bone, blood, brain, liver, and breast cancer (BC). World Health Organization reports show that around 2.1 million women are affected by life-threatening BC [2].

Patients diagnosed with BC while the tumor is smaller than 10 mm have a 98% probability of surviving the disease. Tumor size at diagnosis is strongly associated with BC survival, yet 70% of BC cases are diagnosed when the tumor is 30 mm or larger [3]. Hence, breast tumor size strongly affects both detection and patient survival. Imaging techniques used to investigate breast tumors include X-ray [4], CT, and ultrasound [5,6]. However, these methods are still not fully reliable, and misclassification sometimes leads to avoidable surgery on a benign, non-cancerous tumor [7]. The current approach to BC relies on early identification and treatment; in the United States, this strategy yields a 10-year survival rate of 85%. Survival is strongly correlated with disease stage at diagnosis: the 10-year survival rate is 98% for patients with stage 0 or I cancer and 65% for those with stage III cancer. Detecting BC early and accurately is therefore crucial for avoiding needless surgery and improving patient survival [8]. Separating normal and abnormal cells is challenging because tumor cells vary in size, shape, and location; medical image segmentation techniques are therefore used to identify abnormalities [9].

In recent years, deep learning (DL) has played a major role in medical image processing tasks such as detection [10], classification [11], and segmentation [12]. A convolutional neural network (CNN) requires an extensive dataset for training. However, BC data are limited; we therefore use a transfer learning technique to address the problem of limited data and to increase detection accuracy.

In a CNN, convolutional layers extract features, pooling layers reduce the size of the feature maps, and a fully connected layer finally classifies these features. The initial layers extract low-level features, and deeper layers extract progressively higher-level features; therefore, the deep convolutional layers of a CNN architecture extract more detailed features. The motivation for applying pretrained DL models is that they have already been trained on a huge dataset, e.g., ImageNet [13]. Thus, when a pretrained model is fine-tuned for a new task such as classification or detection, training accuracy increases and computational time is reduced. Transfer learning (TL) is commonly used in a range of detection and classification research problems.
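To make the convolution, pooling, and fully connected pipeline concrete, here is a minimal pure-Python sketch of a single forward pass. It is an illustration under stated assumptions, not the architecture used in this paper: the 6×6 input, the vertical-edge kernel, the fully connected weights, and the three class names are all hypothetical, and the convolution is computed as cross-correlation with stride 1, as most DL libraries do.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2-D convolution with stride 1, computed as cross-correlation
    (no kernel flip), which is what most DL libraries implement."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling: each size x size window becomes one value."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def fully_connected(features, weights, biases):
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 6x6 input with a vertical edge between columns 2 and 3.
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
edge_kernel = [[-1.0, 0.0, 1.0] for _ in range(3)]  # responds to left-to-right increases

feature_map = relu(conv2d(image, edge_kernel))  # 4x4: feature extraction
pooled = max_pool(feature_map)                  # 2x2: reduced feature map
flat = [v for row in pooled for v in row]       # 4 values fed to the classifier

# Hypothetical fully connected weights for three classes.
class_names = ["benign", "malignant", "normal"]
weights = [[0.1] * 4, [0.2] * 4, [-0.1] * 4]
probs = softmax(fully_connected(flat, weights, [0.0, 0.0, 0.0]))

print(pooled)  # [[3.0, 3.0], [3.0, 3.0]]
print(class_names[probs.index(max(probs))])
```

The convolution turns the 6×6 input into a 4×4 feature map, pooling halves each spatial dimension, and only the four pooled values reach the classifier, which is why pooling reduces the number of weights the fully connected layer needs.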

In recent years, a number of DL algorithms have been applied to identify BC, producing state-of-the-art results in BC detection applications. Despite the extensive research on the classification and identification of BC, there is still interest in creating high-accuracy automated methods for BC detection and classification. To our knowledge, the literature on AI-driven, image-based BC detection is still limited. The published BC detection studies have several shortcomings. Their accuracy is often low, and most of them used small datasets; with too little training data, the models do not generalize fully and may overfit the training samples. Most studies employ traditional machine learning (ML) and transfer learning methods to identify and grade BC. However, the biggest problem with classical ML methods such as the support vector machine (SVM) is the lengthy training time on large datasets [14]. The most problematic shortcomings of transfer learning systems, in turn, are negative transfer and limited generalization: pretrained classification networks are usually trained on the ImageNet database, which contains images unrelated to medical imaging. As a result, developing efficient computer-aided diagnosis systems (CADS) that rapidly and accurately distinguish BC in ultrasound images remains challenging. To address these limitations, we propose the DeepBreastCancerNet DL model. It uses filter-based feature extraction, which helps achieve excellent classification performance. The proposed model is built from convolutional layers with clipped ReLU and leaky ReLU activation functions, which extract the most essential and detailed features from the breast ultrasound images. The proposed framework has 24 layers, including six convolutional layers, nine inception modules, and one fully connected layer. Max-pooling operations allow the design to reduce the number of weight parameters. The proposed model, a novel method for BC detection and classification, includes batch and cross-channel normalization as well as both clipped ReLU and leaky ReLU activation functions. Furthermore, we adopted a TL strategy for BC detection using eight pretrained DL models, namely ResNet101 [15], ResNet-50 [16], ResNet-18 [17,18], GoogLeNet [19], ShuffleNet [19], AlexNet [20], SqueezeNet [21], and XceptionNet [22]. The frozen weights of the pretrained models are transferred to the target cancer dataset, and the final softmax layer is replaced by a new one; because the dataset has three classes, the new softmax layer has three outputs.
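The head-replacement step described above can be sketched in pure Python as follows. This is a hypothetical toy, not the paper's training code: a hand-written linear map with ReLU (`FROZEN_BACKBONE_W`) stands in for a pretrained network, the six inputs are made up, and only the newly added three-way fully connected and softmax layer (`NewHead`) is updated by stochastic gradient descent on the cross-entropy loss, while the backbone weights stay frozen.

```python
import math

# Hypothetical stand-in for a pretrained backbone. In a real pipeline these
# weights would come from a network pretrained on ImageNet; here they are
# fixed by hand and are never updated ("frozen").
FROZEN_BACKBONE_W = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [-1.0, 0.0, 0.0],
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
]

def backbone(x):
    """Frozen feature extractor: fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_BACKBONE_W]

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

class NewHead:
    """New fully connected + softmax layer sized for the target classes; it
    takes the place of the original 1000-way ImageNet classifier."""

    def __init__(self, in_features, num_classes):
        self.W = [[0.0] * in_features for _ in range(num_classes)]
        self.b = [0.0] * num_classes

    def predict_proba(self, f):
        logits = [sum(w * v for w, v in zip(row, f)) + b for row, b in zip(self.W, self.b)]
        return softmax(logits)

    def sgd_step(self, f, label, lr):
        p = self.predict_proba(f)
        for k, row in enumerate(self.W):
            grad = p[k] - (1.0 if k == label else 0.0)  # d(cross-entropy)/d(logit k)
            for j, v in enumerate(f):
                row[j] -= lr * grad * v
            self.b[k] -= lr * grad

CLASSES = ["benign", "malignant", "normal"]
# Hypothetical toy inputs, one cluster per class.
data = [
    ([1.0, 0.1, 0.0], 0), ([0.9, 0.0, 0.1], 0),
    ([0.1, 1.0, 0.0], 1), ([0.0, 0.9, 0.1], 1),
    ([0.0, 0.1, 1.0], 2), ([0.1, 0.0, 0.9], 2),
]

head = NewHead(in_features=len(FROZEN_BACKBONE_W), num_classes=len(CLASSES))
for epoch in range(100):
    for x, y in data:
        head.sgd_step(backbone(x), y, lr=0.5)  # only the new head is updated

def predict(x):
    p = head.predict_proba(backbone(x))
    return p.index(max(p))

predictions = [predict(x) for x, _ in data]
print([CLASSES[k] for k in predictions])
```

In a real framework the same idea amounts to marking the pretrained layers as non-trainable and swapping the final classification layer for one with three outputs; whether all or only some of the pretrained layers stay frozen is a design choice.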

The main contributions of this research paper are as follows.

The rest of the paper is organized as follows. Section 2 provides a literature review, Section 3 describes the materials and methods, Section 4 presents the experiments and results, and Section 5 gives the conclusions and future work.

2. Literature Review

S. A. Mohammed et al. [23] balanced two imbalanced BC datasets by resampling and removing missing attributes. They used three machine learning classifiers (Naïve Bayes, MO, and J48) to classify the balanced data, and the results demonstrated that balancing the data improved classification. M. Tahmooresi et al. [24] used several machine learning classifiers, namely SVM, KNN, an artificial neural network (ANN), and a decision tree, for BC detection, attaining an accuracy of 98.8% with SVM. Hussain et al. [25] extracted image features using descriptors such as texture (SIFT), EFDs, and morphological and entropy features, and used SVM, decision tree, and Bayesian classifiers to discriminate normal mammograms from BC. Goyal et al. [26] used machine learning for BC classification: they applied principal component analysis for dimensionality reduction during feature extraction and used a CNN classifier to classify the BC dataset features. Jain et al. [27] applied five machine learning classifiers, namely SVM, DT, KNN, logistic regression, and random forest, for BC detection; KNN and logistic regression attained an accuracy of 96.52% in 10-fold cross-validation. Zerouaoui and Idri [28] proposed twenty-eight hybrid architectures for BC classification, combining seven DL models for feature extraction with four classifiers (DT, SVM, KNN, and MLP). The authors compared the overall performance of these architectures on the FNCA and BreakHis datasets; on the FNCA dataset, DenseNet-201 combined with the MLP classifier achieved a classification accuracy of 99%.
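The summary of Mohammed et al. [23] above does not state which resampling scheme was used, so the following is only a sketch of one common option, random oversampling: records with a missing attribute are dropped, and records of the smaller classes are duplicated at random until every class matches the largest one. The records and the function names `drop_missing` and `random_oversample` are hypothetical.

```python
import random
from collections import Counter

def drop_missing(records):
    """Remove records in which any attribute is missing (represented here as None)."""
    return [(x, y) for x, y in records if all(v is not None for v in x)]

def random_oversample(records, seed=0):
    """Duplicate randomly chosen records of the smaller classes (sampling with
    replacement) until every class has as many records as the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in records:
        by_class.setdefault(y, []).append((x, y))
    target = max(len(items) for items in by_class.values())
    balanced = []
    for items in by_class.values():
        balanced.extend(items)
        balanced.extend(rng.choice(items) for _ in range(target - len(items)))
    return balanced

# Hypothetical records: (attribute vector, label).
records = [
    ([5.0, 1.0], "benign"),
    ([4.8, 1.1], "benign"),
    ([5.2, 0.9], "benign"),
    ([4.9, None], "benign"),  # missing attribute: dropped
    ([5.1, 1.2], "benign"),
    ([7.9, 3.4], "malignant"),
    ([8.3, 3.1], "malignant"),
]

clean = drop_missing(records)
balanced = random_oversample(clean)
print(Counter(y for _, y in clean))     # Counter({'benign': 4, 'malignant': 2})
print(Counter(y for _, y in balanced))  # Counter({'benign': 4, 'malignant': 4})
```

Oversampling of this kind should be applied to the training split only; otherwise duplicated records can end up in both the training and the test data and inflate the reported accuracy.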

Alanazi et al. proposed a CNN-based system for automatic BC detection and compared the results with machine learning algorithms. The experiments showed that the proposed system performed 9% better than the machine learning classifiers, reaching 87% accuracy. Togaçar et al. [29] proposed a custom CNN model for BC ultrasound image detection. The proposed model consists of only one convolutional layer with 20 filters; it achieved 100% accuracy while outperforming eight pretrained CNN models. Ting et al. [30] presented a CNN for BC detection (CNNI-BCC) that uses a supervised DL neural network for classification. The experimental findings demonstrated that CNNI-BCC performed better than previous research, achieving an accuracy of 89.47%. Nahid et al. [31] presented a novel deep neural model for BC classification from histopathological images, comprising a clustering algorithm and a CNN. The model is based on CNN and LSTM, and both softmax and SVM are used at its classification layer. The proposed model achieved 91% accuracy. Ragb et al. [32] presented their own CNN model for BC diagnosis. In addition, they employed a TL technique with nine pretrained DL models to classify two BC datasets. Their proposed model obtained the best accuracy, 99.5%.

Bevilacqua et al. [33] used selected MR images for training and testing. They employed an ANN to detect and classify BC after extracting and analyzing the data. When a machine learning model was applied to enhance the ANN, the maximum accuracy increased to 100%. Khan et al. [34] proposed a framework for detecting and classifying BC in which the authors adopted TL approaches, using GoogLeNet, VGG, and ResNet for feature extraction. The combined extracted features are then passed to a fully connected layer for classification. The proposed framework achieved a classification accuracy of 97.52%. Tang et al. [35] provided a detailed overview of recent advances in computer-aided detection and diagnosis of BC. Joseph et al. [36] extracted handcrafted features such as texture, color, and shape from BC histopathological images and classified them with a deep neural network using softmax. Furthermore, the authors applied data augmentation to address overfitting. Experimental results demonstrated that the proposed approach achieved an accuracy of 97.87% at 40x magnification. Sharma and Kumar [37] compared handcrafted features with features extracted by an XceptionNet model for BC classification. XceptionNet as the feature extractor with SVM as the classifier outperformed the handcrafted features, achieving a classification accuracy of 96.25% at 40x magnification. Inan et al. [38] proposed a unified automatic framework for breast tumor segmentation and detection in which segmentation leads to classification. They used SLIC and K-Means++ for tumor segmentation and VGG16, VGG19, DenseNet121, and ResNet50 for breast tumor classification; the combination of SLIC, UNET, and VGG16 outperformed all other combinations. Jabarani et al. [39] proposed a new hybrid approach for BC detection. The researchers used an adaptive median filter to reduce noise in the dataset images and preprocessing steps to remove the background. They also tuned the segmentation-related parameters of K-Means and the Gaussian mixture model. The proposed hybrid model achieved an accuracy of 95.5%.

Saber et al. [40] presented a TL approach in a DL model for automatically detecting BC, which they evaluated using six performance evaluation metrics. They deployed five pretrained DL models for feature extraction and classification. These models were the Inception-V3 ResNet, the VGG19 ResNet, the VGG16 ResNet, and the Inception-V3. The VGG16 model was shown to be suitable for BC detection, with an accuracy of 98.96%. Eroglu et al. [41] proposed a CNN-based hybrid model for BC classification. The MobileNetV2, ResNet50, AlexNet, and ResNet50 networks extracted features from BC ultrasound images, and the extracted features were combined with the help of the mRMR feature selection technique to increase accuracy. A support vector machine (SVM) then classifies these features. The proposed hybrid model attained a classification accuracy of 95.6%. Chowdhury et al. [42] used a CNN and transfer learning to improve diagnosis by increasing the efficacy and accuracy of early breast cancer detection. Instead of building the entire model from scratch, they leveraged a pretrained model with existing weights. The research primarily focuses on a transfer learning model built on ResNet101 and pretrained on the ImageNet dataset. The proposed framework achieved an accuracy of 99.58%.
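mRMR (minimum redundancy, maximum relevance) selects features greedily: it first takes the feature with the highest mutual information with the class label, then repeatedly adds the feature whose relevance minus its mean mutual information with the already-selected features is largest. The pure-Python sketch below implements this difference-based criterion on small, discrete, hypothetical features; it is not the configuration used by Eroglu et al. [41], whose CNN features are continuous and would first need to be discretized. The names `mutual_information` and `mrmr` are illustrative.

```python
import math
from collections import Counter

def mutual_information(a, b):
    """Empirical mutual information (in nats) between two discrete sequences."""
    n = len(a)
    count_a, count_b, count_ab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum(
        (c / n) * math.log((c / n) / ((count_a[x] / n) * (count_b[y] / n)))
        for (x, y), c in count_ab.items()
    )

def mrmr(features, labels, k):
    """Greedy mRMR selection (relevance minus mean redundancy).

    features: dict mapping a feature name to its list of discrete values.
    Returns the names of the k selected features in selection order.
    """
    relevance = {name: mutual_information(vals, labels) for name, vals in features.items()}
    selected = [max(relevance, key=relevance.get)]  # most relevant feature first
    while len(selected) < k:
        def score(name):
            redundancy = sum(
                mutual_information(features[name], features[s]) for s in selected
            ) / len(selected)
            return relevance[name] - redundancy
        candidates = [name for name in features if name not in selected]
        selected.append(max(candidates, key=score))
    return selected

# Hypothetical toy data: 4 classes, 8 samples.
labels = [0, 1, 2, 3, 0, 1, 2, 3]
features = {
    "f1": [0, 0, 1, 1, 0, 0, 1, 1],       # high bit of the label: very relevant
    "f1_copy": [0, 0, 1, 1, 0, 0, 1, 1],  # exact duplicate of f1: redundant
    "f2": [0, 1, 0, 1, 1, 0, 0, 1],       # noisy low bit: weaker but independent of f1
    "f3": [0, 0, 1, 1, 1, 1, 0, 0],       # carries no information about the label
}

print(mrmr(features, labels, k=2))  # ['f1', 'f2']
```

Here `f1_copy` is as relevant as `f1` but adds no new information, so mRMR passes over it in favor of the weaker but complementary `f2`; this is what makes it useful when features extracted by several CNNs are concatenated.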

Moon et al. [43] aimed to detect BC from ultrasound images using two different datasets, combining multiple CNN algorithms with an image fusion approach. In their initial attempt, they reported an accuracy of 91.10%. They obtained 94.62% on their first dataset and 94.62% on their second. Xiao et al. [44] examined InceptionV3, ResNet50, and XceptionNet and presented a basic model, consisting of three convolutional layers, for identifying breast malignancies from a breast ultrasound image dataset. The dataset contained 2058 images: 1370 benign and 688 malignant. According to their research, InceptionV3 had the highest accuracy (85.13%). Jean et al. [45] proposed an approach composed of several sequential steps. The breast ultrasound data are first enhanced, and a DarkNet-53 deep learning model is then retrained on the data. Features are extracted from the pooling layer, and two alternative optimization techniques, a reformed BGWO and a reformed DE, are used to select the best features. Finally, the selected features are fused using a proposed technique and classified using machine learning techniques.

5. Conclusions

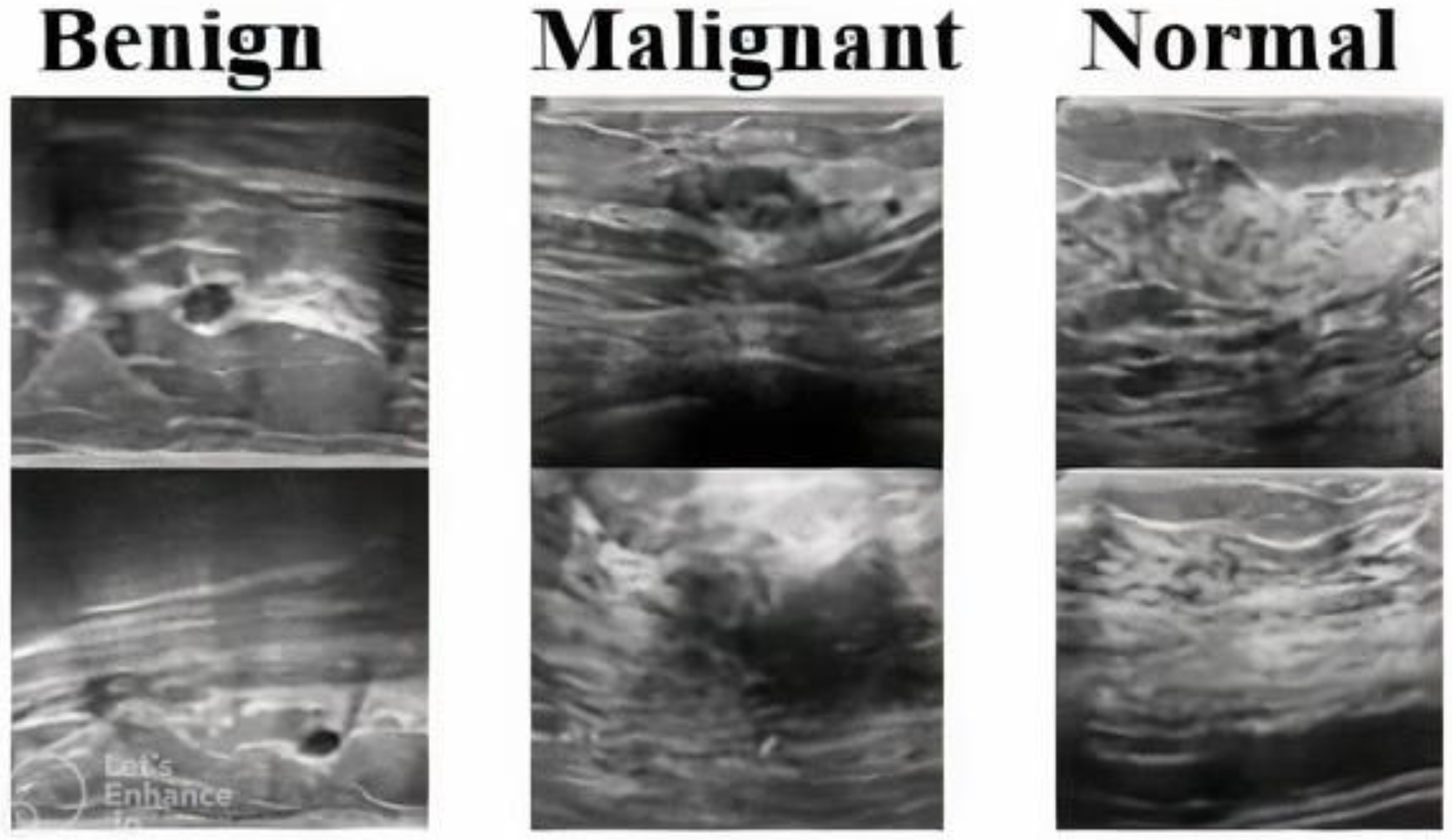

This paper presents DeepBreastCancerNet, a novel deep learning model for BC detection and classification. The proposed framework has 24 layers, including six convolutional layers, nine inception modules, and one fully connected layer. The proposed model reached the highest classification accuracy, 99.35%. We also compared the performance of several DL models, and the experimental results showed that our model outperformed the others. Moreover, this paper uses eight pretrained DL models for BC detection with the TL technique. We evaluated the performance of nine DL-based models on a standard dataset of 537 benign, 360 malignant, and 133 normal ultrasound images. A limitation of this research is the small number of images in the publicly available BC ultrasound imaging dataset, which affects the performance of DL models; including more images in the dataset could further improve this research. Furthermore, we validated the proposed model on another standard, publicly available binary dataset, where it reached the highest accuracy of 99.63%.

Additionally, while the proposed model extracts increasingly in-depth, accurate, and discriminative features, testing with the dataset reveals that there are very few images of malignant BC and a considerable number of images of normal breast tissue. Therefore, an effective segmentation approach should be applied to the breast cancer data before classifying the breast ultrasound images into two classes, namely malignant and normal. The proposed algorithm could then use the segmented images to accurately detect and distinguish normal and cancerous images. Future research might also be designed to answer clinically relevant questions. In the future, we will also explore models such as the Vision Transformer (ViT) for BC detection. Improved DL algorithms could help radiologists and oncologists accurately detect BC from MRI and CT scans. Moreover, the findings reported in this paper can help practitioners choose the right model, obviating the need for an exhaustive search. Deep neural networks solve various problems connected with model training, allowing effective models to be built for BC diagnosis, which supports early detection and care.