A Pineapple Target Detection Method in a Field Environment Based on Improved YOLOv7

Abstract

1. Introduction

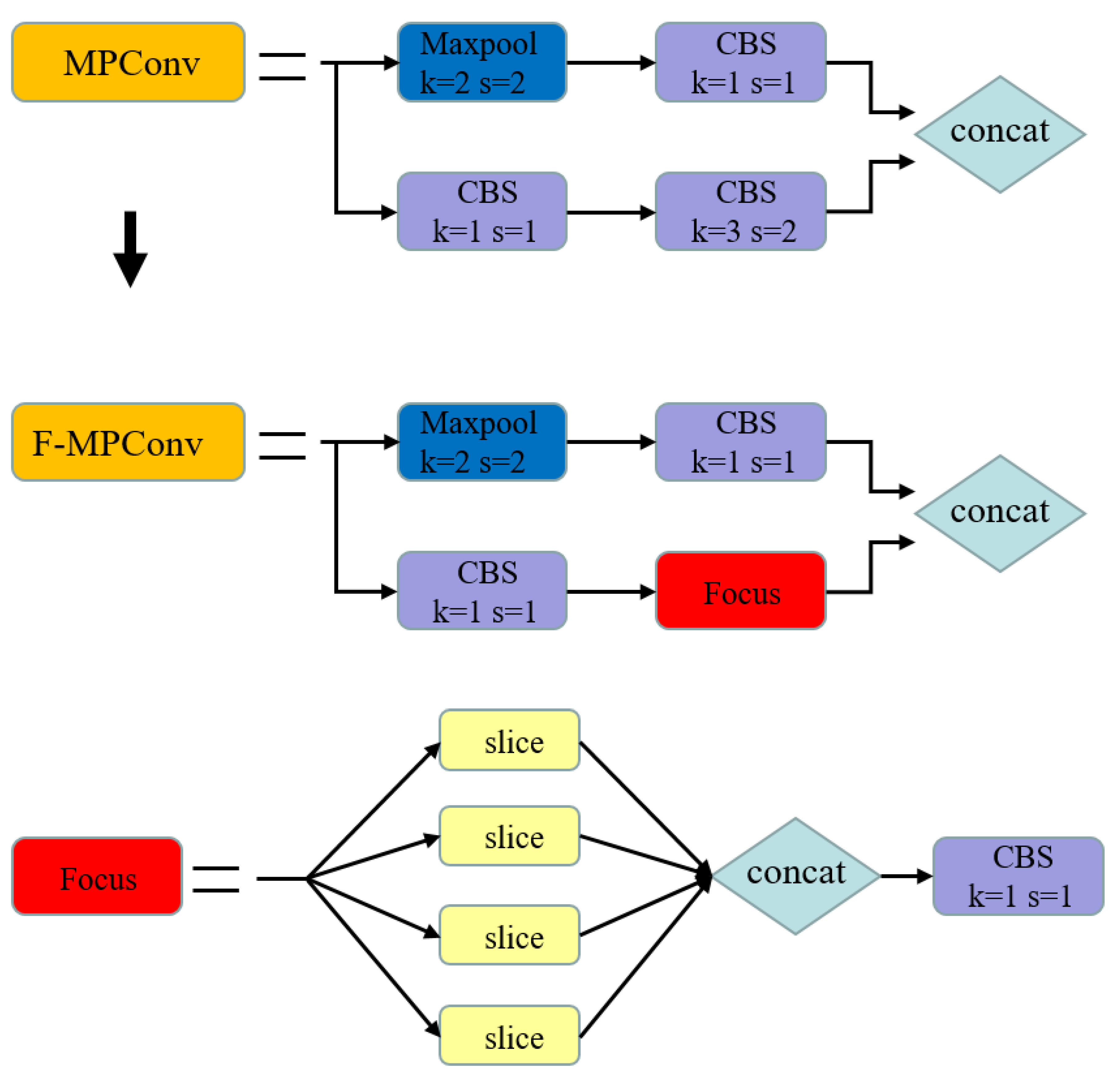

- A lightweight and efficient pineapple target-detection model was designed by introducing the SimAM attention mechanism into the original YOLOv7, modifying the max-pooling convolution (MPConv) structure, and replacing the non-maximum suppression (NMS) algorithm with the soft-NMS algorithm. The model balances detection accuracy and speed while still distinguishing three maturity levels of pineapple.

- Comparative tests quantified the differences in detection performance between the proposed model and other advanced models, and showed that the proposed model is better suited to detecting pineapples in a field environment. The tests also examined the classification performance of the different models for pineapples of different maturities, as well as detection performance in different field environments.
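
The replacement of NMS with soft-NMS mentioned in the first contribution can be illustrated with a minimal sketch. It assumes the Gaussian variant of Soft-NMS described by Bodla et al.; the source does not say which variant or which hyperparameters the authors used, so `sigma`, `score_threshold`, and the function names `iou` and `soft_nms` are illustrative assumptions rather than the paper's implementation.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS (assumed variant, hypothetical defaults).

    Instead of deleting every box that overlaps the current best box,
    each remaining score is multiplied by exp(-IoU^2 / sigma).
    Returns (index, final_score) pairs in the order they were selected.
    """
    remaining = [(i, s) for i, s in enumerate(scores) if s >= score_threshold]
    kept = []
    while remaining:
        # Select the box with the highest current score.
        best_pos = max(range(len(remaining)), key=lambda k: remaining[k][1])
        best_idx, best_score = remaining.pop(best_pos)
        kept.append((best_idx, best_score))
        # Decay the scores of the other boxes according to their overlap.
        decayed = []
        for idx, score in remaining:
            overlap = iou(boxes[best_idx], boxes[idx])
            new_score = score * math.exp(-(overlap ** 2) / sigma)
            if new_score >= score_threshold:
                decayed.append((idx, new_score))
        remaining = decayed
    return kept


# Two heavily overlapping boxes and one isolated box (made-up coordinates).
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = soft_nms(boxes, scores)
```

In this toy input the second box overlaps the first, so its score is reduced rather than discarded outright. That softer treatment is what makes the method attractive when fruits occlude one another.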

2. Materials and Methods

2.1. Pineapple Image Collection

2.2. Image Processing and Augmentation

2.3. YOLOv7 Object Detection Network

2.4. Improvement to the YOLOv7

2.4.1. Improvement on the Network Structure

2.4.2. Improvement of the Postprocessing

2.5. Evaluation Indicators

2.6. Experimental Details

3. Results and Discussion

3.1. The Results of Network Training

3.2. The Results of the Ablation Test

3.3. The Results of Comparing the Detection Performance of the Proposed Network with Other Networks

3.4. The Results of the Comparison of the Detection Performances of the Proposed Network for Different Maturities of Pineapple

3.5. The Results of the Detection Performance of the Proposed Network in Different Field Scenarios

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. He, D.J.; Zhang, L.Z.; Li, X.; Li, P.; Wang, T.Y. Design of automatic pineapple harvesting machine based on binocular machine vision. Anhui Agric. Sci. 2019, 13, 207–210. (In Chinese)

2. Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.

3. Fu, L.S.; Gao, F.F.; Wu, J.Z.; Li, R.; Manoj, K.; Zhang, Q. Application of consumer RGB-D cameras for fruit detection and localization in field: A critical review. Comput. Electron. Agric. 2020, 177, 105687.

4. Supawadee, C.; Matthew, N.D. Texture-based fruit detection. Precis. Agric. 2014, 15, 662–683.

5. Wang, C.L.; Lee, W.S.; Zou, X.J.; Choi, D.; Gan, H.; Diamond, J. Detection and counting of immature green citrus fruit based on the Local Binary Patterns (LBP) feature using illumination-normalized images. Precis. Agric. 2018, 19, 1062–1083.

6. He, Z.L.; Xiong, J.T.; Lin, R.; Zou, X.J.; Tang, L.Y.; Yang, Z.G.; Liu, Z.; Song, G. A method of green litchi recognition in natural environment based on improved LDA classifier. Comput. Electron. Agric. 2017, 140.

7. Zhao, C.Y.; Won, S.L.; He, D.J. Immature green citrus detection based on colour feature and sum of absolute transformed difference (SATD) using colour images in the citrus grove. Comput. Electron. Agric. 2016, 124, 243–253.

8. Liu, X.Y.; Zhao, D.; Jia, W.K.; Ji, W.; Sun, Y.P. A Detection Method for Apple Fruits Based on Color and Shape Features. IEEE Access 2019, 7, 67923–67933.

9. Sun, S.S.; Jiang, M.; He, D.J.; Long, Y.; Song, H.B. Recognition of green apples in an orchard environment by combining the GrabCut model and Ncut algorithm. Biosyst. Eng. 2019, 187, 201–213.

10. Efi, V.; Yael, E. Adaptive thresholding with fusion using a RGBD sensor for red sweet-pepper detection. Biosyst. Eng. 2016, 146, 45–56.

11. Girshick, R. Fast R-CNN. IEEE International Conference on Computer Vision (ICCV). arXiv 2015, arXiv:1504.08083.

12. Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

13. Lucena, F.; Breunig, F.M.; Kux, H. The Combined Use of UAV-Based RGB and DEM Images for the Detection and Delineation of Orange Tree Crowns with Mask R-CNN: An Approach of Labeling and Unified Framework. Future Internet 2022, 14, 275.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

15. Kuznetsova, A.; Maleva, T.; Soloviev, V. Using YOLOv3 Algorithm with Pre- and Post-Processing for Apple Detection in Fruit-Harvesting Robot. Agronomy 2020, 10, 1016.

16. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

17. Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. European Conference on Computer Vision (ECCV). arXiv 2016, arXiv:1512.02325.

18. Zheng, Z.H.; Xiong, J.T.; Lin, H.; Han, Y.L.; Sun, B.X.; Xie, Z.M.; Yang, Z.G.; Wang, C.L. A Method of Green Citrus Detection in Natural Environments Using a Deep Convolutional Neural Network. Front. Plant Sci. 2021, 12, 705737.

19. Zhou, Z.X.; Song, Z.Z.; Fu, L.S.; Gao, F.F.; Li, R.; Cui, Y.J. Real-time kiwifruit detection in orchard using deep learning on Android™ smartphones for yield estimation. Comput. Electron. Agric. 2020, 179, 105856.

20. Gai, R.L.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2021. prepublish.

21. Tu, S.Q.; Pang, J.; Liu, H.F.; Zhuang, N.; Chen, Y.; Zheng, C.; Wan, H.; Xue, Y.J. Passion fruit detection and counting based on multiple scale faster R-CNN using RGB-D images. Precis. Agric. 2020. prepublish.

22. Tian, Y.N.; Yang, G.D.; Wang, Z.; Wang, H.; Li, E.; Liang, Z.Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

23. Xu, Z.F.; Jia, R.S.; Sun, H.M.; Liu, Q.M.; Cui, Z. Light-YOLOv3: Fast method for detecting green mangoes in complex scenes using picking robots. Appl. Intell. 2020, 50, 4670–4687.

24. Fan, Y.C.; Zhang, S.Y.; Feng, K.; Qian, K.C.; Wang, Y.T.; Qin, S.Z. Strawberry Maturity Recognition Algorithm Combining Dark Channel Enhancement and YOLOv5. Sensors 2022, 22, 419.

25. Lawal, O.M. YOLOMuskmelon: Quest for Fruit Detection Speed and Accuracy Using Deep Learning. IEEE Access 2021, 9, 15221–15227.

26. Ji, W.; Pan, Y.; Xu, B.; Wang, J.C. A Real-Time Apple Targets Detection Method for Picking Robot Based on ShufflenetV2-YOLOX. Agriculture 2022, 12, 856.

27. Cui, Z.; Sun, H.M.; Yu, J.T.; Yin, R.N.; Jia, R.S. Fast detection method of green peach for application of picking robot. Appl. Intell. 2021. prepublish.

28. Yan, B.; Fan, P.; Lei, X.Y.; Liu, Z.J.; Yang, F.Z. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619.

29. Kang, H.W.; Chen, C. Fruit detection, segmentation and 3D visualisation of environments in apple orchards. Comput. Electron. Agric. 2020, 171, 105302.

30. Liu, X.; Li, G.; Chen, W.; Liu, B.; Chen, M.; Lu, S. Detection of Dense Citrus Fruits by Combining Coordinated Attention and Cross-Scale Connection with Weighted Feature Fusion. Appl. Sci. 2022, 12, 6600.

31. Zhang, X.; Gao, Q.; Pan, D.; Cao, P.C.; Huang, D.H. Research on spatial positioning system of fruits to be picked in field based on binocular vision and SSD Model. J. Phys. 2021, 1748, 042011.

32. Liu, T.H.; Nie, X.N.; Wu, J.M.; Zhang, D.; Liu, W.; Cheng, Y.F.; Zheng, Y.; Qiu, J.; Qi, L. Pineapple (Ananas comosus) fruit detection and localization in natural environment based on binocular stereo vision and improved YOLOv3 model. Precis. Agric. 2022, 24, 139–160.

33. Roy, A.M.; Bhaduri, J. Real-time growth stage detection model for high degree of occultation using DenseNet-fused YOLOv4. Comput. Electron. Agric. 2022, 193, 106694.

34. Wu, D.L.; Jiang, S.; Zhao, E.L.; Liu, Y.L.; Zhu, H.C.; Wang, W.W.; Wang, R.Y. Detection of Camellia oleifera Fruit in Complex Scenes by Using YOLOv7 and Data Augmentation. Appl. Sci. 2022, 12, 11318.

35. Wang, C.Y.; Bochkovskiy, A.; Liao, H. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

36. Wang, C.Y.; Bochkovskiy, A.; Liao, H. Scaled-YOLOv4: Scaling cross stage partial network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13024–13033.

37. Ding, X.H.; Zhang, X.Y.; Man, N.N.; Han, J.G.; Ding, G.G.; Sun, J. RepVGG: Making VGG-style ConvNets great again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13728–13737.

38. Jiang, T.T.; Cheng, J.Y. Target Recognition Based on CNN with LeakyReLU and PReLU Activation Functions. In Proceedings of the 2019 IEEE Conference on Sensing, Diagnostics, Prognostics, and Control (SDPC), Beijing, China, 15–17 August 2019; pp. 718–722.

39. Ge, Z.; Liu, S.T.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

40. Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning (ICML), Virtual Event, 18–24 July 2021; pp. 11863–11974.

41. Santana, A.; Colombini, E. Neural Attention Models in Deep Learning: Survey and Taxonomy. arXiv 2021, arXiv:2112.05909.

42. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS: Improving object detection with one line of code. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5562–5570.

| Model | Detection Target | Precision (%) | Recall (%) | F1 Score (%) | mAP (%) | Average Detection Time (ms) |
|---|---|---|---|---|---|---|
| Improved YOLOv3 [32] | pineapple | 94.45 | 88.48 | 91.38 | - | - |
| Dense-YOLOv4 [33] | mango | 91.45 | 95.87 | 93.61 | 96.20 | 22.62 |
| YOLO BP [18] | citrus | - | 91.00 | - | 91.55 | 55.56 |
| Improved YOLOv5 [28] | apple | 83.83 | 91.48 | 87.49 | 86.75 | 15.00 |
| ShufflenetV2-YOLOX [26] | apple | 95.62 | 93.75 | - | 96.76 | 15.38 |
| DA-YOLOv7 [34] | Camellia oleifera fruit | 94.76 | 95.54 | 95.15 | 96.03 | 25.00 |

| Data Set | Image Resolution (Pixels) | Number of Images |
|---|---|---|
| Training set | 1280 × 720 | 3472 |
| Verification set | 1280 × 720 | 434 |
| Test set | 1280 × 720 | 434 |

| SimAM | F-MPConv | Soft-NMS | mAP (%) | R (%) | Average Detection Time (ms) |
|---|---|---|---|---|---|
|  |  |  | 93.11 | 86.42 | 20.83 |
| √ |  |  | 95.48 | 85.75 | 25.00 |
| √ | √ |  | 95.97 | 87.24 | 23.25 |
| √ | √ | √ | 95.82 | 89.83 | 23.81 |

| Model | P (%) | R (%) | F1 (%) | mAP (%) | Average Detection Time (ms) |
|---|---|---|---|---|---|
| YOLOv4-tiny | 83.52 | 82.77 | 83.14 | 86.55 | 24.39 |
| YOLOv5s | 88.95 | 85.14 | 87.01 | 91.64 | 19.60 |
| YOLOv7 | 91.27 | 86.42 | 88.78 | 93.11 | 20.83 |
| Ours | 94.17 | 89.83 | 91.95 | 95.82 | 23.81 |
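
The F1 column in this comparison can be cross-checked against the P and R columns using the standard definition F1 = 2PR / (P + R). The sketch below is only a consistency check on the tabulated values. The names `f1_score` and `reported` are illustrative, and it assumes the paper uses this harmonic-mean definition, which the reported numbers are consistent with.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


# (P %, R %, reported F1 %) copied from the comparison table.
reported = {
    "YOLOv4-tiny": (83.52, 82.77, 83.14),
    "YOLOv5s": (88.95, 85.14, 87.01),
    "YOLOv7": (91.27, 86.42, 88.78),
    "Ours": (94.17, 89.83, 91.95),
}

for model, (p, r, f1_reported) in reported.items():
    f1_computed = f1_score(p, r)
    # Print the recomputed value next to the value given in the table.
    print(f"{model}: computed {f1_computed:.2f}, reported {f1_reported:.2f}")
```

Each recomputed value agrees with the table to within about 0.01 percentage points.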

| Maturity | Model | Ground Truth Count | Correctly Identified: Amount | Correctly Identified: Rate (%) | Falsely Identified: Amount | Falsely Identified: Rate (%) |
|---|---|---|---|---|---|---|
| Unripe | YOLOv7 | 64 | 53 | 82.81 | 5 | 7.81 |
| Unripe | Ours | 64 | 57 | 89.06 | 2 | 3.12 |
| Semi-ripe | YOLOv7 | 73 | 60 | 82.19 | 3 | 4.10 |
| Semi-ripe | Ours | 73 | 66 | 90.41 | 1 | 1.37 |
| Ripe | YOLOv7 | 75 | 68 | 90.66 | 2 | 2.66 |
| Ripe | Ours | 75 | 69 | 92.00 | 1 | 1.33 |
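
The rate columns in the maturity table appear to be each amount divided by the ground-truth count for that maturity class. The short sketch below recomputes them under that assumption; the helper name `rate_percent` and the `rows` layout are illustrative.

```python
def rate_percent(count, total):
    """Share of `count` in `total`, expressed as a percentage."""
    return 100.0 * count / total


# (maturity, model, ground truth, correct, correct %, false, false %)
rows = [
    ("Unripe", "YOLOv7", 64, 53, 82.81, 5, 7.81),
    ("Unripe", "Ours", 64, 57, 89.06, 2, 3.12),
    ("Semi-ripe", "YOLOv7", 73, 60, 82.19, 3, 4.10),
    ("Semi-ripe", "Ours", 73, 66, 90.41, 1, 1.37),
    ("Ripe", "YOLOv7", 75, 68, 90.66, 2, 2.66),
    ("Ripe", "Ours", 75, 69, 92.00, 1, 1.33),
]

for maturity, model, total, correct, correct_pct, false, false_pct in rows:
    # Recompute both rates from the raw counts and show them beside the table values.
    print(
        f"{maturity:9s} {model:6s} "
        f"correct {rate_percent(correct, total):.2f} (table {correct_pct:.2f}), "
        f"false {rate_percent(false, total):.2f} (table {false_pct:.2f})"
    )
```

The recomputed rates match the table to within 0.01 percentage points. A few tabulated values (for example 90.66 for 68/75) look truncated rather than rounded.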

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lai, Y.; Ma, R.; Chen, Y.; Wan, T.; Jiao, R.; He, H. A Pineapple Target Detection Method in a Field Environment Based on Improved YOLOv7. Appl. Sci. 2023, 13, 2691. https://doi.org/10.3390/app13042691
