Experimental Evaluation of Collision Avoidance Techniques for Collaborative Robots

Abstract

1. Introduction

- a method for obstacle identification and localization;

- a control law that modifies the robot's motion in real time based on the obstacle coordinates.

2. Obstacle Avoidance Algorithm

- (a) ; the point is located at the distal extremity of the link:

- (b) and ; the distance is orthogonal to the link, and the position of  along the link is defined by the scalar parameter x:

- (c) ; the point is located at the proximal extremity of the link:
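The three cases above amount to finding the point of a link closest to an obstacle. The following is a minimal sketch, not the authors' implementation: it assumes the link is modelled as a straight segment between a proximal and a distal joint, and that x is normalized so that x = 0 at the proximal extremity and x = 1 at the distal one (the source does not state the normalization). The function name `closest_point_on_link` is hypothetical.

```python
import numpy as np


def closest_point_on_link(p_prox, p_dist, p_obs):
    """Return (x, closest_point, distance) for an obstacle and a link segment.

    Hypothetical helper: the link runs from p_prox (proximal joint) to
    p_dist (distal joint); x is assumed normalized to [0, 1] along the link.
    """
    p_prox, p_dist, p_obs = (np.asarray(p, dtype=float) for p in (p_prox, p_dist, p_obs))
    link = p_dist - p_prox
    denom = float(np.dot(link, link))
    if denom == 0.0:
        raise ValueError("link has zero length")
    # Projection of the obstacle onto the infinite line through the link
    x = float(np.dot(p_obs - p_prox, link) / denom)
    if x > 1.0:
        # Case (a): projection beyond the distal end -> distal extremity
        point = p_dist
    elif x < 0.0:
        # Case (c): projection before the proximal end -> proximal extremity
        point = p_prox
    else:
        # Case (b): foot of the perpendicular, distance orthogonal to the link
        point = p_prox + x * link
    return x, point, float(np.linalg.norm(p_obs - point))
```

For a link from the origin to (1, 0, 0) and an obstacle at (0.5, 0.2, 0), the sketch falls in case (b), with x = 0.5 and a distance of 0.2.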

2.1. Mode I: 6-DOF Perturbation

2.2. Mode II: 4-DOF Schoenflies Perturbation

2.3. Mode III: Perturbation with Fixed Orientation

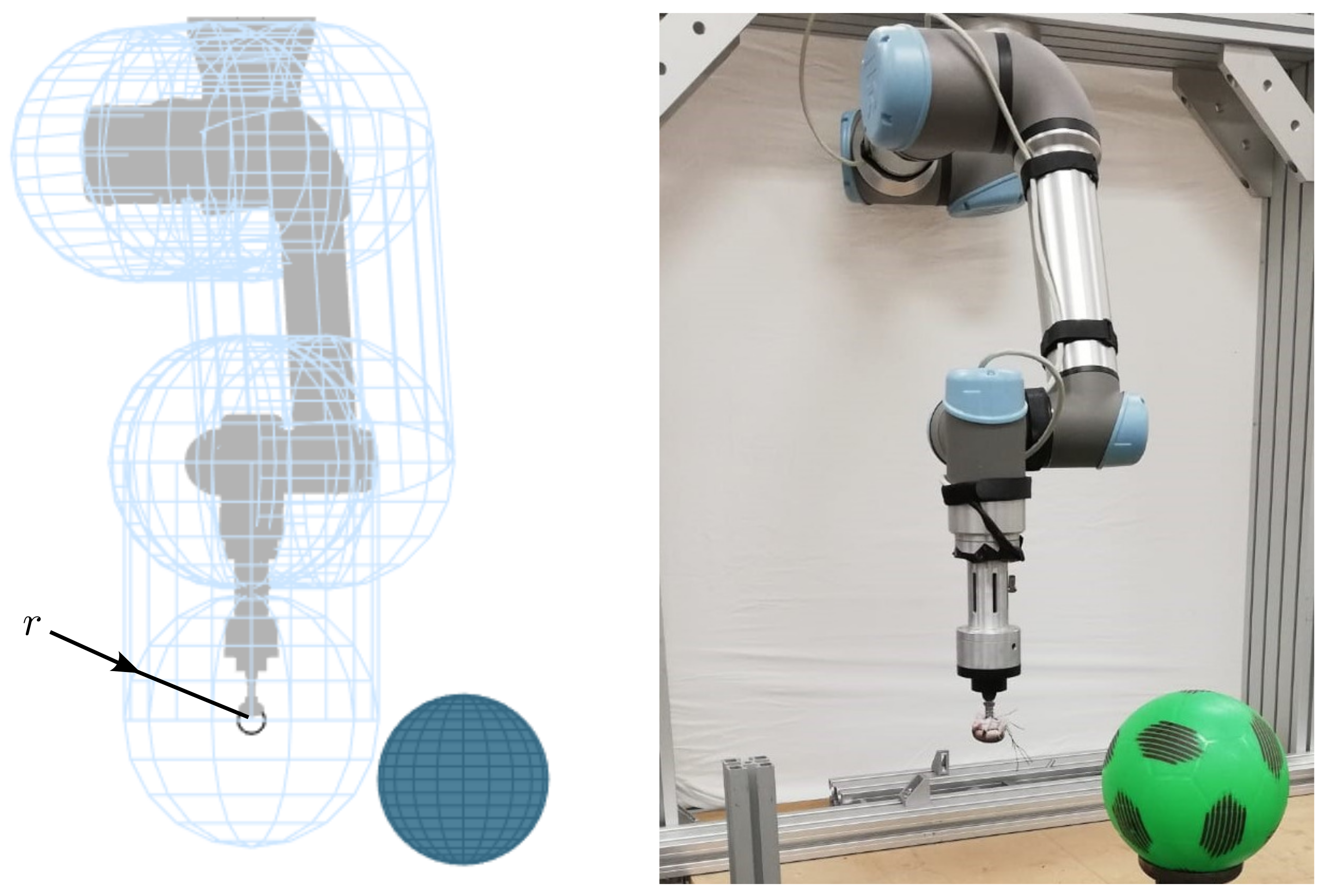
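The three modes differ in which end-effector motion components the perturbation may use. A minimal sketch of one way to express this, assuming the perturbation is a 6-component twist [vx, vy, vz, wx, wy, wz] in the robot base frame with z vertical, and that each mode simply zeroes the forbidden components: Mode I keeps the full twist, Mode II keeps the Schoenflies subset (three translations plus rotation about the vertical axis), and Mode III keeps translations only. The masks, the frame convention, and the names `MODE_MASKS`, `restrict_perturbation` and `joint_velocities` are hypothetical; the authors' formulation may differ.

```python
import numpy as np

# Hypothetical selection masks; twist order is [vx, vy, vz, wx, wy, wz],
# expressed in the base frame with z assumed vertical.
MODE_MASKS = {
    "I": np.array([1, 1, 1, 1, 1, 1], dtype=float),    # 6-DOF: full twist
    "II": np.array([1, 1, 1, 0, 0, 1], dtype=float),   # Schoenflies: 3 translations + rotation about z
    "III": np.array([1, 1, 1, 0, 0, 0], dtype=float),  # fixed orientation: translations only
}


def restrict_perturbation(twist, mode):
    """Zero the twist components that the selected mode does not allow."""
    twist = np.asarray(twist, dtype=float)
    if twist.shape != (6,):
        raise ValueError("twist must have 6 components")
    return twist * MODE_MASKS[mode]


def joint_velocities(jacobian, twist, mode):
    """Map the restricted twist to joint velocities via the Jacobian pseudo-inverse."""
    return np.linalg.pinv(np.asarray(jacobian, dtype=float)) @ restrict_perturbation(twist, mode)
```

Masking the twist before inversion keeps the forbidden rotational components at zero, so the orientation constraint of Modes II and III is enforced at the velocity level rather than corrected afterwards.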

3. Implementation

3.1. System Architecture

3.2. Test Cases

4. Results

4.1. Test Case 1

4.2. Test Case 2

4.3. Test Case 3

5. Discussion

- the communication protocol between the external controller and the robot and, hence, the frequency of data exchange;

- the accuracy with which the robot controller drives the motors through its internal control loops to follow the external speed reference; this characteristic may vary among manufacturers and robot models;

- the acquisition frequency of the external sensors for obstacle detection, which should be as high as possible or at least comparable to the control frequency;

- the computational complexity of the algorithm, which, if too high, increases the computation time and thus lowers the frequency of the control loop.
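The interplay between communication period, computation time and sensor rate can be illustrated with a rough timing budget. This is a simplified model under stated assumptions, not a measurement procedure from the paper: it assumes each cycle waits for the next communication slot and that computation runs sequentially inside the cycle, so the effective period is the smallest multiple of the communication period that covers the computation time. The function name `loop_timing` and its parameters are hypothetical.

```python
import math


def loop_timing(comm_period_s, compute_time_s, sensor_rate_hz):
    """Rough timing budget for an external collision-avoidance loop.

    Hypothetical model: the cycle period is the smallest multiple of the
    communication period that fits the computation time.
    Returns (effective control rate in Hz, control cycles per obstacle sample).
    """
    if comm_period_s <= 0 or compute_time_s < 0 or sensor_rate_hz <= 0:
        raise ValueError("invalid timing parameters")
    cycles = max(1, math.ceil(compute_time_s / comm_period_s))
    period = cycles * comm_period_s
    control_rate_hz = 1.0 / period
    # How many control cycles reuse the same obstacle measurement
    reuse = control_rate_hz / sensor_rate_hz
    return control_rate_hz, reuse
```

With a 2 ms communication period, 3 ms of computation and a 30 Hz sensor, the loop drops to 250 Hz and each obstacle sample is reused for about 8 control cycles, which shows why both a slow sensor and a heavy algorithm degrade the reaction of the robot.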

6. Conclusions

Author Contributions

Funding

Conflicts of Interest



| | Max Position Error | Max Speed Error | Average Position Error | Average Speed Error |
|---|---|---|---|---|
| Test case 1 | 0.008 | 0.008 | 0.003 | 0.003 |
| Test case 2 | 0.13 | 1.50 | 0.02 | 0.10 |
| Test case 3 | 0.04 | 0.08 | 0.005 | 0.01 |
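The table does not state how the maximum and average errors are defined or in which units. One common definition, used here only as an assumption, takes the absolute difference between the reference (commanded) signal and the measured signal at each sample, then reports its maximum and mean. The function name `tracking_errors` is hypothetical.

```python
import numpy as np


def tracking_errors(reference, measured):
    """Max and average absolute tracking error between two sampled signals.

    Assumes both arrays are sampled at the same instants; for multi-joint
    data (shape [samples, joints]) the statistics cover all entries.
    """
    reference = np.asarray(reference, dtype=float)
    measured = np.asarray(measured, dtype=float)
    if reference.shape != measured.shape:
        raise ValueError("signals must have the same shape")
    err = np.abs(measured - reference)
    return float(err.max()), float(err.mean())
```

Applied separately to position and speed signals, this yields the four columns of the table for each test case.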

| | | | |
|---|---|---|---|
| Simulated | 2 | 10 | 30 |
| Real | 1 | 1 | 0.16 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Neri, F.; Forlini, M.; Scoccia, C.; Palmieri, G.; Callegari, M. Experimental Evaluation of Collision Avoidance Techniques for Collaborative Robots. Appl. Sci. 2023, 13, 2944. https://doi.org/10.3390/app13052944
