Exploring the Capabilities of a Lightweight CNN Model in Accurately Identifying Renal Abnormalities: Cysts, Stones, and Tumors, Using LIME and SHAP

Abstract

1. Introduction

- A fully automated, lightweight deep learning (DL) architecture was proposed for diagnosing three kidney abnormalities. A well-designed, customized convolutional neural network (CNN) was used to improve the model’s ability to identify stones, cysts, and tumors.

- The proposed model had fewer parameters than current approaches and was able to locate the target area precisely, so it could operate effectively on Internet of Medical Things (IoMT)-enabled devices.

- The model’s classifications were explained using two explainable AI (XAI) algorithms: local interpretable model-agnostic explanations (LIME) and Shapley additive explanations (SHAP).

- An ablation study of the proposed model was performed on a chest X-ray dataset covering COVID-19, pneumonia, tuberculosis, and healthy cases.
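SHAP, named in the contributions above, attributes a prediction to input features using Shapley values: each feature's average marginal contribution over all coalitions of the other features. As a minimal sketch, assuming an image has already been split into a few superpixel segments and that a hypothetical `predict_with_segments` function returns the class score when only the listed segments are visible, the exact Shapley value of each segment can be computed by enumerating every coalition. This is the quantity SHAP estimates; the `shap` library itself uses faster approximations, and the toy scoring function is purely illustrative.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List


def shapley_values(n_features: int,
                   value_fn: Callable[[FrozenSet[int]], float]) -> List[float]:
    """Exact Shapley values by enumerating all coalitions (feasible only for small n)."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for a coalition S without feature i.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Hypothetical model: class score when only the given superpixel segments are visible.
# Segment 2 carries most of the evidence; segments 0 and 1 only help together.
def predict_with_segments(visible: FrozenSet[int]) -> float:
    score = 0.1                      # baseline score with every segment masked
    if 2 in visible:
        score += 0.6
    if {0, 1} <= visible:
        score += 0.2
    return score


if __name__ == "__main__":
    phi = shapley_values(4, predict_with_segments)
    print([round(p, 3) for p in phi])
```

The interaction bonus of segments 0 and 1 is split equally between them, and the values sum to the score with all segments visible minus the fully masked baseline, which is the additivity property SHAP relies on.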

2. Related Works

2.1. Conventional Practices

2.2. Machine-Learning Practices

2.3. Deep-Learning Approaches

3. Materials and Methods

3.1. Data Collection and Pre-Processing

3.2. Proposed Framework

3.2.1. CNN Model

3.2.2. Explainable AI

3.2.3. Implementation Details

4. Evaluation Metrics

5. Results and Discussion

5.1. CNN Results

5.2. Descriptive Analysis from XAI

5.2.1. SHAP

5.2.2. LIME

5.3. Class-Wise Study of Proposed CNN Model

5.4. Calculating the Floating-Point Operation

5.5. Comparison with the State-of-the-Art Methods

6. Result Analysis: Medical Opinion

6.1. Reception by Medical Professionals

6.2. Expert’s View towards the Dataset

7. Ablation Study of the Proposed Model

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pyeritz, R.E. Renal Tubular Disorders. In Emery and Rimoin’s Principles and Practice of Medical Genetics and Genomics; Elsevier: Amsterdam, The Netherlands, 2023; pp. 115–124.

2. Kovesdy, C.P. Epidemiology of chronic kidney disease: An update 2022. Kidney Int. Suppl. 2022, 12, 7–11.

3. Maynar, J.; Barrasa, H.; Martin, A.; Usón, E.; Fonseca, F. Kidney Support in Sepsis. In The Sepsis Codex-E-Book; Elsevier Health Sciences: Amsterdam, The Netherlands, 2023; p. 169.

4. Li, M.; Chi, X.; Wang, Y.; Setrerrahmane, S.; Xie, W.; Xu, H. Trends in insulin resistance: Insights into mechanisms and therapeutic strategy. Signal Transduct. Target. Ther. 2022, 7, 216.

5. Yener, S.; Pehlivanoğlu, C.; Yıldız, Z.A.; Ilce, H.T.; Ilce, Z. Duplex Kidney Anomalies and Associated Pathologies in Children: A Single-Center Retrospective Review. Cureus 2022, 14, e25777.

6. Sassanarakkit, S.; Hadpech, S.; Thongboonkerd, V. Theranostic roles of machine learning in clinical management of kidney stone disease. Comput. Struct. Biotechnol. J. 2022, 21, 260–266.

7. Kanti, S.Y.; Csóka, I.; Jójárt-Laczkovich, O.; Adalbert, L. Recent Advances in Antimicrobial Coatings and Material Modification Strategies for Preventing Urinary Catheter-Associated Complications. Biomedicines 2022, 10, 2580.

8. Ramalingam, H.; Patel, V. Decorating Histones in Polycystic Kidney Disease. J. Am. Soc. Nephrol. 2022, 33, 1629–1630.

9. Karimi, K.; Nikzad, M.; Kulivand, S.; Borzouei, S. Adrenal Mass in a 70-Year-Old Woman. Case Rep. Endocrinol. 2022, 2022, 2736199.

10. Saw, K.C.; McAteer, J.A.; Monga, A.G.; Chua, G.T.; Lingeman, J.E.; Williams, J.C., Jr. Helical CT of urinary calculi: Effect of stone composition, stone size, and scan collimation. Am. J. Roentgenol. 2000, 175, 329–332.

11. Park, H.J.; Kim, S.Y.; Singal, A.G.; Lee, S.J.; Won, H.J.; Byun, J.H.; Choi, S.H.; Yokoo, T.; Kim, M.J.; Lim, Y.S. Abbreviated magnetic resonance imaging vs. ultrasound for surveillance of hepatocellular carcinoma in high-risk patients. Liver Int. 2022, 42, 2080–2092.

12. Ahmad, S.; Nan, F.; Wu, Y.; Wu, Z.; Lin, W.; Wang, L.; Li, G.; Wu, D.; Yap, P.T. Harmonization of Multi-site Cortical Data across the Human Lifespan. In Machine Learning in Medical Imaging, Proceedings of the 13th International Workshop, MLMI 2022, Singapore, 18 September 2022; Springer: Cham, Switzerland, 2022; pp. 220–229.

13. Mezrich, J.L. Is Artificial Intelligence (AI) a Pipe Dream? Why Legal Issues Present Significant Hurdles to AI Autonomy. Am. J. Roentgenol. 2022, 219, 152–156.

14. European Society of Radiology (ESR). Current practical experience with artificial intelligence in clinical radiology: A survey of the European Society of Radiology. Insights Into Imaging 2022, 13, 107.

15. Bazoukis, G.; Hall, J.; Loscalzo, J.; Antman, E.M.; Fuster, V.; Armoundas, A.A. The inclusion of augmented intelligence in medicine: A framework for successful implementation. Cell Rep. Med. 2022, 3, 100485.

16. van Leeuwen, K.G.; de Rooij, M.; Schalekamp, S.; van Ginneken, B.; Rutten, M.J. How does artificial intelligence in radiology improve efficiency and health outcomes? Pediatr. Radiol. 2022, 52, 2087–2093.

17. Jungmann, F.; Müller, L.; Hahn, F.; Weustenfeld, M.; Dapper, A.K.; Mähringer-Kunz, A.; Graafen, D.; Düber, C.; Schafigh, D.; Pinto dos Santos, D.; et al. Commercial AI solutions in detecting COVID-19 pneumonia in chest CT: Not yet ready for clinical implementation? Eur. Radiol. 2022, 32, 3152–3160.

18. Islam, K.T.; Wijewickrema, S.; O’Leary, S. A Deep Learning Framework for Segmenting Brain Tumors Using MRI and Synthetically Generated CT Images. Sensors 2022, 22, 523.

19. Charyyev, S.; Wang, T.; Lei, Y.; Ghavidel, B.; Beitler, J.J.; McDonald, M.; Curran, W.J.; Liu, T.; Zhou, J.; Yang, X. Learning-based synthetic dual energy CT imaging from single energy CT for stopping power ratio calculation in proton radiation therapy. Br. J. Radiol. 2022, 95, 20210644.

20. Mirakhorli, F.; Vahidi, B.; Pazouki, M.; Barmi, P.T. A Fluid-Structure Interaction Analysis of Blood Clot Motion in a Branch of Pulmonary Arteries. Cardiovasc. Eng. Technol. 2022; ahead of print.

21. Lozano, P.F.R.; Kaso, E.R.; Bourque, J.M.; Morsy, M.; Taylor, A.M.; Villines, T.C.; Kramer, C.M.; Salerno, M. Cardiovascular Imaging for Ischemic Heart Disease in Women: Time for a Paradigm Shift. JACC Cardiovasc. Imaging 2022, 15, 1488–1501.

22. Araújo, J.D.L.; da Cruz, L.B.; Diniz, J.O.B.; Ferreira, J.L.; Silva, A.C.; de Paiva, A.C.; Gattass, M. Liver segmentation from computed tomography images using cascade deep learning. Comput. Biol. Med. 2022, 140, 105095.

23. Khanal, M.; Khadka, S.R.; Subedi, H.; Chaulagain, I.P.; Regmi, L.N.; Bhandari, M. Explaining the Factors Affecting Customer Satisfaction at the Fintech Firm F1 Soft by Using PCA and XAI. FinTech 2023, 2, 70–84.

24. Chapagain, P.; Timalsina, A.; Bhandari, M.; Chitrakar, R. Intrusion Detection Based on PCA with Improved K-Means. In Innovations in Electrical and Electronic Engineering; Mekhilef, S., Shaw, R.N., Siano, P., Eds.; Springer: Singapore, 2022; pp. 13–27.

25. Chen, H.Y.; Lee, C.H. Deep Learning Approach for Vibration Signals Applications. Sensors 2021, 21, 3929.

26. Molinara, M.; Cancelliere, R.; Di Tinno, A.; Ferrigno, L.; Shuba, M.; Kuzhir, P.; Maffucci, A.; Micheli, L. A Deep Learning Approach to Organic Pollutants Classification Using Voltammetry. Sensors 2022, 22, 8032.

27. Bhandari, M.; Shahi, T.B.; Neupane, A.; Walsh, K.B. BotanicX-AI: Identification of Tomato Leaf Diseases using Explanation-driven Deep Learning Model. J. Imaging 2023, 9, 53.

28. Bhandari, M.; Panday, S.; Bhatta, C.P.; Panday, S.P. Image Steganography Approach Based Ant Colony Optimization with Triangular Chaotic Map. In Proceedings of the 2022 2nd International Conference on Innovative Practices in Technology and Management (ICIPTM), Gautam Buddha Nagar, India, 23–25 February 2022; Volume 2, pp. 429–434.

29. Islam, M.N.; Hasan, M.; Hossain, M.; Alam, M.; Rabiul, G.; Uddin, M.Z.; Soylu, A. Vision transformer and explainable transfer learning models for auto detection of kidney cyst, stone and tumor from CT-radiography. Sci. Rep. 2022, 12, 11440.

30. Qadir, A.M.; Abd, D.F. Kidney Diseases Classification using Hybrid Transfer-Learning DenseNet201-Based and Random Forest Classifier. Kurd. J. Appl. Res. 2023, 7, 131–144.

31. Rajinikanth, V.; Durai Raj Vincent, P.M.; Srinivasan, K.; Ananth Prabhu, G.; Chang, C.Y. Framework to Distinguish Healthy/Cancer Renal CT Images using Fused Deep Features. Front. Public Health 2023, 11, 39.

32. Yildirim, K.; Bozdag, P.G.; Talo, M.; Yildirim, O.; Karabatak, M.; Acharya, U.R. Deep learning model for automated kidney stone detection using coronal CT images. Comput. Biol. Med. 2021, 135, 104569.

33. Bayram, A.F.; Gurkan, C.; Budak, A.; Karataş, H. A Detection and Prediction Model Based on Deep Learning Assisted by Explainable Artificial Intelligence for Kidney Diseases. Avrupa Bilim Teknol. Derg. 2022, 40, 67–74.

34. Loveleen, G.; Mohan, B.; Shikhar, B.S.; Nz, J.; Shorfuzzaman, M.; Masud, M. Explanation-Driven HCI Model to Examine the Mini-Mental State for Alzheimer’s Disease. ACM Trans. Multimed. Comput. Commun. Appl. 2022.

35. Gaur, L.; Bhandari, M.; Razdan, T.; Mallik, S.; Zhao, Z. Explanation-Driven Deep Learning Model for Prediction of Brain Tumour Status Using MRI Image Data. Front. Genet. 2022, 13, 822666.

36. Bhandari, M.; Shahi, T.B.; Siku, B.; Neupane, A. Explanatory classification of CXR images into COVID-19, Pneumonia and Tuberculosis using deep learning and XAI. Comput. Biol. Med. 2022, 150, 106156.

37. Longo, L.; Goebel, R.; Lecue, F.; Kieseberg, P.; Holzinger, A. Explainable artificial intelligence: Concepts, applications, research challenges and visions. In Machine Learning and Knowledge Extraction, Proceedings of the 4th IFIP TC 5, TC 12, WG 8.4, WG 8.9, WG 12.9 International Cross-Domain Conference, CD-MAKE 2020, Dublin, Ireland, 25–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 1–16.

38. Huang, L.; Shi, Y.; Hu, J.; Ding, J.; Guo, Z.; Yu, B. Integrated analysis of mRNA-seq and miRNA-seq reveals the potential roles of Egr1, Rxra and Max in kidney stone disease. Urolithiasis 2023, 51, 13.

39. Yin, W.; Wang, W.; Zou, C.; Li, M.; Chen, H.; Meng, F.; Dong, G.; Wang, J.; Yu, Q.; Sun, M.; et al. Predicting Tumor Mutation Burden and EGFR Mutation Using Clinical and Radiomic Features in Patients with Malignant Pulmonary Nodules. J. Pers. Med. 2023, 13, 16.

40. Park, H.J.; Shin, K.; You, M.W.; Kyung, S.G.; Kim, S.Y.; Park, S.H.; Byun, J.H.; Kim, N.; Kim, H.J. Deep Learning–based Detection of Solid and Cystic Pancreatic Neoplasms at Contrast-enhanced CT. Radiology 2023, 306, 140–149.

41. Wu, Y.; Yi, Z. Automated detection of kidney abnormalities using multi-feature fusion convolutional neural networks. Knowl.-Based Syst. 2020, 200, 105873.

42. Cerrolaza, J.J.; Peters, C.A.; Martin, A.D.; Myers, E.; Safdar, N.; Linguraru, M.G. Ultrasound based computer-aided-diagnosis of kidneys for pediatric hydronephrosis. In Medical Imaging 2014: Computer-Aided Diagnosis, SPIE Proceedings of the Medical Imaging, San Diego, CA, USA, 15–20 February 2014; SPIE: Bellingham, WA, USA, 2014; Volume 9035, pp. 733–738.

43. Raja, R.A.; Ranjani, J.J. Segment based detection and quantification of kidney stones and its symmetric analysis using texture properties based on logical operators with ultrasound scanning. Int. J. Comput. Appl. 2013, 975, 8887.

44. Mangayarkarasi, T.; Jamal, D.N. PNN-based analysis system to classify renal pathologies in kidney ultrasound images. In Proceedings of the 2017 2nd International Conference on Computing and Communications Technologies (ICCCT), Chennai, India, 23–24 February 2017; pp. 123–126.

45. Bommanna Raja, K.; Madheswaran, M.; Thyagarajah, K. A hybrid fuzzy-neural system for computer-aided diagnosis of ultrasound kidney images using prominent features. J. Med. Syst. 2008, 32, 65–83.

46. Viswanath, K.; Gunasundari, R. Analysis and Implementation of Kidney Stone Detection by Reaction Diffusion Level Set Segmentation Using Xilinx System Generator on FPGA. VLSI Design 2015, 2015, 581961.

47. Sudharson, S.; Kokil, P. An ensemble of deep neural networks for kidney ultrasound image classification. Comput. Methods Programs Biomed. 2020, 197, 105709.

48. Tsai, M.C.; Lu, H.H.S.; Chang, Y.C.; Huang, Y.C.; Fu, L.S. Automatic Screening of Pediatric Renal Ultrasound Abnormalities: Deep Learning and Transfer Learning Approach. JMIR Med. Inform. 2022, 10, e40878.

49. Bhandari, M.; Neupane, A.; Mallik, S.; Gaur, L.; Qin, H. Auguring Fake Face Images Using Dual Input Convolution Neural Network. J. Imaging 2023, 9, 3.

50. Chowdary, G.J.; Suganya, G.; Premalatha, M.; Yogarajah, P. Nucleus Segmentation and Classification using Residual SE-UNet and Feature Concatenation Approach in Cervical Cytopathology Cell images. Technol. Cancer Res. Treat. 2022, 22, 15330338221134833.

51. Shahi, T.; Sitaula, C.; Neupane, A.; Guo, W. Fruit classification using attention-based MobileNetV2 for industrial applications. PLoS ONE 2022, 17, e0264586.

52. Zhao, H.; Wu, J.; Li, Z.; Chen, W.; Zheng, Z. Double Sparse Deep Reinforcement Learning via Multilayer Sparse Coding and Nonconvex Regularized Pruning. IEEE Trans. Cybern. 2022, 53, 765–778.

53. Sitaula, C.; Hossain, M.B. Attention-based VGG-16 model for COVID-19 chest X-ray image classification. Appl. Intell. 2021, 51, 2850–2863.

54. Sitaula, C.; Shahi, T.; Aryal, S.; Marzbanrad, F. Fusion of multi-scale bag of deep visual words features of chest X-ray images to detect COVID-19 infection. Sci. Rep. 2021, 11, 23914.

55. Samek, W.; Müller, K.R. Towards explainable artificial intelligence. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer: Cham, Switzerland, 2019; pp. 5–22.

56. van der Velden, B.H.; Kuijf, H.J.; Gilhuijs, K.G.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

57. Sharma, M.; Goel, A.K.; Singhal, P. Explainable AI Driven Applications for Patient Care and Treatment. In Explainable AI: Foundations, Methodologies and Applications; Springer: Cham, Switzerland, 2023; pp. 135–156.

58. Ashraf, M.T.; Dey, K.; Mishra, S. Identification of high-risk roadway segments for wrong-way driving crash using rare event modeling and data augmentation techniques. Accid. Anal. Prev. 2023, 181, 106933.

59. Wong, T.T.; Yeh, P.Y. Reliable accuracy estimates from k-fold cross validation. IEEE Trans. Knowl. Data Eng. 2019, 32, 1586–1594.

60. Banerjee, P.; Barnwal, R.P. Methods and Metrics for Explaining Artificial Intelligence Models: A Review. In Explainable AI: Foundations, Methodologies and Applications; Springer: Cham, Switzerland, 2023; pp. 61–88.

61. Sharma, V.; Mir, R.N.; Rout, R.K. Towards secured image steganography based on content-adaptive adversarial perturbation. Comput. Electr. Eng. 2023, 105, 108484.

62. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

63. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

64. Turuk, M.; Sreemathy, R.; Kadiyala, S.; Kotecha, S.; Kulkarni, V. CNN Based Deep Learning Approach for Automatic Malaria Parasite Detection. IAENG Int. J. Comput. Sci. 2022, 49, 1–9.

65. Liang, W.; Tadesse, G.A.; Ho, D.; Fei-Fei, L.; Zaharia, M.; Zhang, C.; Zou, J. Advances, challenges and opportunities in creating data for trustworthy AI. Nat. Mach. Intell. 2022, 4, 669–677.

66. Li, Y.; Zeng, M.; Zhang, F.; Wu, F.X.; Li, M. DeepCellEss: Cell line-specific essential protein prediction with attention-based interpretable deep learning. Bioinformatics 2023, 39, btac779.

| S.N. | Layer Type | (F, K, S) | Output Shape | Parameters | FLOPs |
|---|---|---|---|---|---|
| 1 | Input | - | (150, 150, 3) | 0 | - |
| 2 | Conv2D + ReLU | (3, 3, 1) | (148, 148, 64) | 1792 | 25,233,408 |
| 3 | MaxPool2D (2) | - | (74, 74, 64) | 0 | 296 |
| 4 | Conv2D + ReLU | (3, 3, 1) | (72, 72, 64) | 36,928 | 5,971,968 |
| 5 | MaxPool2D (2) | - | (36, 36, 64) | 0 | 144 |
| 6 | Conv2D + ReLU | (3, 3, 1) | (34, 34, 64) | 36,928 | 1,331,712 |
| 7 | MaxPool2D (2) | - | (17, 17, 64) | 0 | 68 |
| 8 | Conv2D + ReLU | (3, 3, 1) | (15, 15, 64) | 36,928 | 259,200 |
| 9 | MaxPool2D (2) | - | (7, 7, 64) | 0 | 28 |
| 10 | Conv2D + ReLU | (3, 3, 1) | (5, 5, 64) | 36,928 | 5760 |
| 11 | MaxPool2D (2) | - | (2, 2, 64) | 0 | 8 |
| 12 | Flatten | - | 256 | 0 | 131,072 |
| 13 | Dropout (0.2) | - | 256 | 0 | - |
| 14 | Dense + ReLU + L2 (0.0001) | - | 128 | 32,896 | 65,536 |
| 15 | Dense + Softmax + L2 (0.0001) | - | 4 | 516 | 1024 |
| Total parameters | | | | 182,916 | |
| Total FLOPs | | | | | 33,000,224 |
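The shapes and parameter counts in the architecture table follow from the standard formulas for "valid" 3×3 convolutions with stride 1 (K·K·C_in·C_out weights plus C_out biases) and non-overlapping 2×2 max pooling (floor division of the spatial size). The plain-Python check below is a sketch that assumes 64 filters in every convolutional layer, as the parameter column implies. It recomputes each shape and parameter count, shows that the final dense layer needs 128·4 + 4 = 516 parameters for the total to reach 182,916 (about 0.18 M), and sums the reported FLOPs column. It does not try to re-derive the per-layer FLOP figures, whose counting convention is not stated here; helper names are illustrative.

```python
# Recompute output shapes and trainable parameters for the layer stack in the table.
# Assumption: every Conv2D has 64 filters, 3x3 kernels, stride 1 and "valid" padding.
def conv(shape, filters=64, k=3):
    h, w, c = shape
    out = (h - k + 1, w - k + 1, filters)
    params = k * k * c * filters + filters          # weights + biases
    return out, params


def maxpool(shape, p=2):
    h, w, c = shape
    return (h // p, w // p, c), 0                   # floor division, no parameters


def dense(units_in, units_out):
    return units_out, units_in * units_out + units_out


def summarize():
    shape = (150, 150, 3)
    rows = []
    for _ in range(5):                              # five Conv2D + MaxPool2D blocks
        shape, p = conv(shape)
        rows.append(("Conv2D", shape, p))
        shape, p = maxpool(shape)
        rows.append(("MaxPool2D", shape, p))
    flat = shape[0] * shape[1] * shape[2]
    rows.append(("Flatten", flat, 0))
    units, p = dense(flat, 128)
    rows.append(("Dense", units, p))
    units, p = dense(units, 4)
    rows.append(("Dense (softmax)", units, p))
    return rows


# FLOPs per layer exactly as reported in the table (S.N. 2-15, dropout excluded).
REPORTED_FLOPS = [25_233_408, 296, 5_971_968, 144, 1_331_712, 68, 259_200, 28,
                  5_760, 8, 131_072, 65_536, 1_024]

if __name__ == "__main__":
    rows = summarize()
    for name, shape, params in rows:
        print(f"{name:<16} {str(shape):<16} {params:>7,}")
    total = sum(p for _, _, p in rows)
    print(f"Total parameters: {total:,} ({total / 1e6:.2f} M)")
    print(f"Sum of reported FLOPs: {sum(REPORTED_FLOPS):,}")
```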

| Metric | K1 | K2 | K3 | K4 | K5 | K6 | K7 | K8 | K9 | K10 | Avg ± SD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| TrA (training accuracy, %) | 99.33 | 99.64 | 99.04 | 99.31 | 99.31 | 99.22 | 99.64 | 99.5 | 99.43 | 99.24 | 99.30 ± 0.18 |
| TrL (training loss) | 0.0321 | 0.0192 | 0.0445 | 0.0348 | 0.0352 | 0.0350 | 0.0223 | 0.2680 | 0.0335 | 0.0323 | 0.0557 ± 0.07 |
| VaA (validation accuracy, %) | 99.76 | 99.68 | 96.47 | 100 | 99.76 | 99.76 | 99.84 | 99.68 | 99.76 | 99.2 | 99.39 ± 0.99 |
| VaL (validation loss) | 0.0208 | 0.0192 | 0.1518 | 0.0118 | 0.169 | 0.0195 | 0.0142 | 0.0201 | 0.0195 | 0.0452 | 0.0491 ± 0.06 |
| TsA (test accuracy, %) | 99.84 | 99.76 | 97.19 | 100 | 100 | 100 | 100 | 99.68 | 99.84 | 98.88 | 99.52 ± 0.84 |
| TsL (test loss) | 0.0221 | 0.0164 | 0.1106 | 0.0018 | 0.0145 | 0.0131 | 0.0117 | 0.0208 | 0.0178 | 0.0621 | 0.0291 ± 0.03 |
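The per-fold results are summarized as mean ± standard deviation over the ten folds. A minimal sketch of that bookkeeping follows. It assumes the ± values are population standard deviations (this reproduces the TsA entry, 99.52 ± 0.84) and assumes one plausible way to realize the 80:10:10 split under 10-fold cross-validation: fold i is the test set, fold i+1 the validation set, and the remaining eight folds the training set. The rotation scheme and the helper names `rotating_folds` and `mean_sd` are hypothetical, not necessarily the authors' exact protocol.

```python
import random
from statistics import mean, pstdev


def rotating_folds(n_samples, k=10, seed=0):
    """Yield (train, val, test) index lists: fold i is test, fold i+1 is validation."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]           # k disjoint, near-equal folds
    for i in range(k):
        test, val = folds[i], folds[(i + 1) % k]
        held_out = (i, (i + 1) % k)
        train = [j for f in range(k) if f not in held_out for j in folds[f]]
        yield train, val, test


def mean_sd(values):
    """Mean and population standard deviation, rounded to two decimals."""
    return round(mean(values), 2), round(pstdev(values), 2)


# Test accuracy (TsA) per fold, copied from the 10-fold results table.
TSA = [99.84, 99.76, 97.19, 100, 100, 100, 100, 99.68, 99.84, 98.88]

if __name__ == "__main__":
    n = 3709 + 5077 + 1377 + 2283                   # cyst + normal + stone + tumor images
    train, val, test = next(rotating_folds(n))
    print(len(train), len(val), len(test))          # roughly an 80:10:10 split
    print("TsA: %.2f ± %.2f" % mean_sd(TSA))
```

Every sample lands in the test set exactly once across the ten folds, so the averaged test accuracy uses the whole dataset.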

[Figure: LIME explanations for the Cyst, Normal, Stone, and Tumor categories; for each category the CT image, its mask, and the LIME (segmented) output are shown.]
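LIME explains one prediction by switching interpretable components of the input (for images, superpixel segments) on and off, querying the model on each perturbed copy, and fitting a proximity-weighted linear surrogate whose coefficients rank the segments; the highest-ranked segments form the highlighted mask. The NumPy sketch below implements that loop under stated assumptions: a precomputed segmentation, switched-off segments filled with the image mean, an exponential kernel on cosine distance, and a hypothetical `toy_classifier` in place of the CNN. In practice the `lime` package (`lime_image.LimeImageExplainer`) handles segmentation, sampling, and masking; this is not its internal code, only the same idea in compact form.

```python
import numpy as np


def lime_segment_weights(image, segments, classifier_fn, label,
                         num_samples=500, kernel_width=0.25, seed=0):
    """Return one surrogate-model weight per superpixel segment for class `label`."""
    rng = np.random.default_rng(seed)
    n_seg = int(segments.max()) + 1
    # Each row of z is a binary on/off pattern over segments; row 0 keeps everything.
    z = rng.integers(0, 2, size=(num_samples, n_seg))
    z[0, :] = 1
    # Switched-off segments are replaced by the per-channel image mean.
    fill = image.mean(axis=(0, 1))
    batch = np.repeat(image[None], num_samples, axis=0)
    for s in range(num_samples):
        off = np.isin(segments, np.flatnonzero(z[s] == 0))
        batch[s][off] = fill
    y = classifier_fn(batch)[:, label]
    # Cosine similarity of a binary pattern with the all-ones pattern is sqrt(on / n).
    dist = 1.0 - np.sqrt(z.sum(axis=1) / n_seg)
    weights = np.sqrt(np.exp(-(dist ** 2) / kernel_width ** 2))
    # Weighted least squares: intercept plus one coefficient per segment.
    X = np.hstack([np.ones((num_samples, 1)), z])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return coef[1:]


# Toy 8x8 RGB image split into four quadrant "superpixels" (ids 0-3).
segments = np.zeros((8, 8), dtype=int)
segments[:4, 4:] = 1
segments[4:, :4] = 2
segments[4:, 4:] = 3
image = np.zeros((8, 8, 3))
image[4:, 4:, :] = 1.0


# Hypothetical classifier: P(class 1) is the mean brightness of the bottom-right quadrant.
def toy_classifier(batch):
    p1 = batch[:, 4:, 4:, 0].mean(axis=(1, 2))
    return np.stack([1.0 - p1, p1], axis=1)


if __name__ == "__main__":
    w = lime_segment_weights(image, segments, toy_classifier, label=1)
    print(np.round(w, 3))
```

Because the toy score depends only on segment 3, the surrogate assigns it the only non-zero weight, which is the behavior the mask column of a LIME panel visualizes.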

| Metric | Class | K1 | K2 | K3 | K4 | K5 | K6 | K7 | K8 | K9 | K10 | Avg ± SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision (Pre) | Cyst | 99 | 99 | 99 | 100 | 100 | 100 | 100 | 100 | 99 | 98 | 99.4 ± 0.66 |
| | Normal | 100 | 100 | 97 | 100 | 100 | 100 | 100 | 99 | 100 | 100 | 99.5 ± 0.95 |
| | Stone | 100 | 100 | 98 | 100 | 100 | 100 | 100 | 99 | 100 | 100 | 99.7 ± 0.64 |
| | Tumor | 100 | 100 | 95 | 100 | 100 | 100 | 100 | 100 | 100 | 98 | 99.3 ± 1.55 |
| Recall (Rec) | Cyst | 100 | 100 | 99 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 99.9 ± 0.30 |
| | Normal | 100 | 100 | 98 | 100 | 100 | 100 | 100 | 100 | 100 | 99 | 99.7 ± 0.64 |
| | Stone | 99 | 99 | 90 | 100 | 100 | 100 | 100 | 99 | 99 | 93 | 97.9 ± 3.30 |
| | Tumor | 100 | 100 | 96 | 100 | 100 | 100 | 100 | 99 | 100 | 100 | 99.5 ± 1.20 |
| F-score (Fsc) | Cyst | 100 | 100 | 99 | 100 | 100 | 100 | 100 | 100 | 100 | 99 | 99.8 ± 0.40 |
| | Normal | 100 | 100 | 97 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 99.7 ± 0.89 |
| | Stone | 100 | 99 | 94 | 100 | 100 | 100 | 100 | 99 | 99 | 97 | 98.8 ± 1.83 |
| | Tumor | 100 | 100 | 96 | 100 | 100 | 100 | 100 | 100 | 100 | 99 | 99.5 ± 1.20 |
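Class-wise precision, recall, and F-score are one-vs-rest quantities: for class c, precision is TP/(TP + FP), recall is TP/(TP + FN), and, assuming the F-score is the usual F1, it is the harmonic mean of the two. The sketch below computes them in percent from a confusion matrix whose rows are true classes and columns are predicted classes. The matrix `CM` is a small hypothetical example for illustration only, not data from this study.

```python
CLASSES = ["Cyst", "Normal", "Stone", "Tumor"]


def per_class_metrics(cm, classes=CLASSES):
    """One-vs-rest precision, recall and F1 (in %) from a square confusion matrix.

    cm[i][j] counts images whose true class is i and predicted class is j.
    """
    n = len(cm)
    results = {}
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp   # predicted c, truly another class
        fn = sum(cm[c]) - tp                        # truly c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results[classes[c]] = tuple(round(100 * v, 2) for v in (precision, recall, f1))
    return results


# Hypothetical confusion matrix for one test fold (not data from this study).
CM = [
    [370, 1, 0, 0],
    [0, 508, 0, 0],
    [2, 1, 135, 0],
    [0, 0, 0, 228],
]

if __name__ == "__main__":
    for name, (p, r, f) in per_class_metrics(CM).items():
        print(f"{name:<7} Pre={p:6.2f} Rec={r:6.2f} Fsc={f:6.2f}")
```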

| Ref. | Plane | Ev.P | Class | Model | Metric | Result | P.M | XAI |
|---|---|---|---|---|---|---|---|---|
| [29] | Coronal, Axial | 64:16:20, 10-fold | Normal: 1300; Stone: 1300; Cyst: 1300; Tumor: 1300 | Inception v3 | TsA | 61.60% | 22.32 | GradCAM |
| | | | | VGG16 | | 98.20% | 14.74 | |
| | | | | ResNet | | 73.80% | 23.71 | |
| | | | | EANet | | 77.02% | 6 | |
| | | | | Swin Transformers | | 99.30% | 4.12 | |
| | | | | CCT | | 96.54% | 4.07 | |
| [30] | Coronal, Axial and Sagittal | 80:20:00 | Normal: 1350; Stone: 1350; Cyst: 1350; Tumor: 1350 | DenseNet201-Random Forest | TsA | 99.44% | 20 | N/A |
| [31] | Axial | 80:10:10, 5-fold | Normal: 1340; Tumor: 1340 | VGG16-NB | TsA | 96.26% | 14.74 | N/A |
| | | | | DenseNet121-KNN | | 96.64% | 20 | |
| | | | | VGG-DN-KNN | | 100.00% | 14.74 | |
| [32] | Coronal | 80:20 | Normal: 1009; Stone: 790 | XResNet-50 | TsA | 96.82% | 23.7 | N/A |
| [33] | Axial | 75:10:15 | Normal: 288; Stone: 494; Cyst: 498 | YOLOv7 | Pre. | 88.20% | 6 | GradCAM |
| | | | | | Fs | 85.40% | | |
| | | | | YOLOv7 Tiny | Pre. | 88.20% | | |
| | | | | | Fs | 85.40% | | |
| Ours | Coronal, Axial | 80:10:10, 10-fold | Cyst: 3709; Normal: 5077; Stone: 1377; Tumor: 2283 | Custom CNN | TsA | 99.39% | 0.18 | LIME, SHAP |
| | | | | | AP | 99.47% | | |
| | | | | | AR | 99.25% | | |
| | | | | | AF | 99.45% | | |

P.M: number of model parameters (millions); TsA: test accuracy; Pre.: precision; Fs: F-score; AP, AR, AF: average precision, recall, and F-score.
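The AP, AR, and AF entries for the proposed model agree, to within 0.01, with the unweighted (macro) mean of the four class-wise averages in the class-wise precision/recall/F-score table (precision 99.4, 99.5, 99.7, 99.3; recall 99.9, 99.7, 97.9, 99.5; F-score 99.8, 99.7, 98.8, 99.5). A quick check, assuming AP/AR/AF denote macro averages in that sense; the function name `macro_average` is illustrative:

```python
from statistics import mean

# Class-wise 10-fold averages (Cyst, Normal, Stone, Tumor) from the class-wise table.
CLASSWISE = {
    "precision": [99.4, 99.5, 99.7, 99.3],
    "recall": [99.9, 99.7, 97.9, 99.5],
    "f_score": [99.8, 99.7, 98.8, 99.5],
}
# AP, AR and AF as reported for the proposed model in the comparison table.
REPORTED = {"precision": 99.47, "recall": 99.25, "f_score": 99.45}


def macro_average(values):
    """Unweighted mean over classes: each class counts equally regardless of its size."""
    return mean(values)


if __name__ == "__main__":
    for metric, values in CLASSWISE.items():
        macro = macro_average(values)
        print(f"{metric:<9} macro={macro:.3f} reported={REPORTED[metric]:.2f}")
```

Because the Stone class is the smallest (1377 images), a macro average gives its lower recall the same weight as the larger classes; a sample-weighted average would be pulled toward the majority classes instead.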

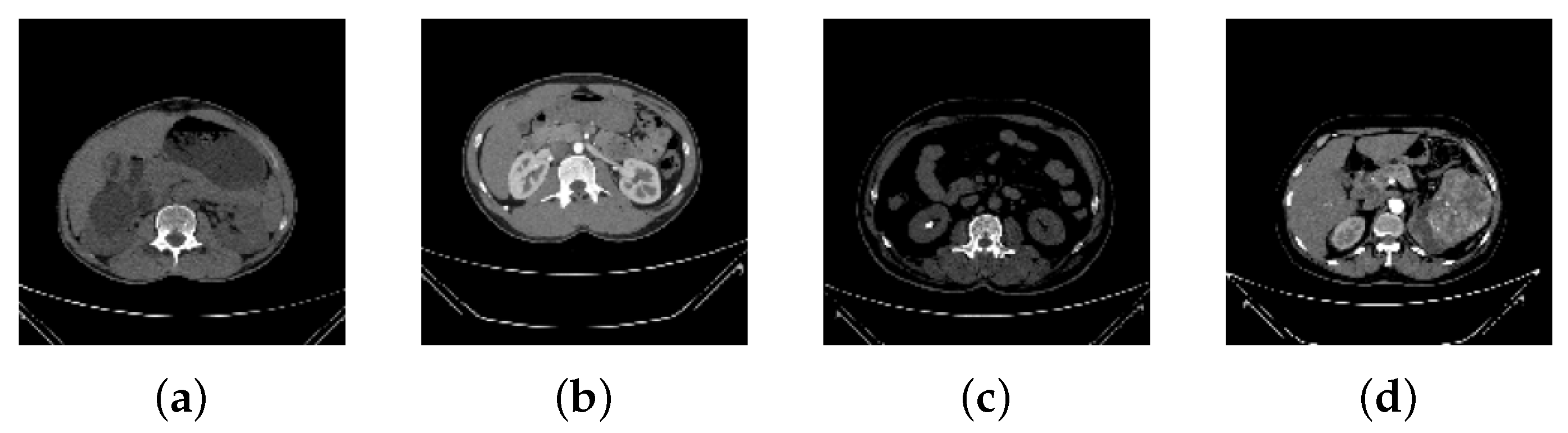

| CT Image | Radiological Findings | Impressions |
|---|---|---|
| Figure 2a | Simple cystic lesion arising from right renal pelvis. | Right parapelvic cyst. |
| Figure 2b | Both kidneys appear normal in size; parenchyma shows normal width and structure. | Normal-appearing bilateral kidneys in the given section. |
| Figure 2c | Radiopaque lesion visualized in right kidney. | Right renal calculus. |
| Figure 2d | Heterogeneously enhancing lesion likely originating from left kidney. | Malignant mass arising from left kidney. |

| Metric | K1 | K2 | K3 | K4 | K5 | K6 | K7 | K8 | K9 | K10 | Avg ± SD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| TrA (training accuracy, %) | 96.22 | 95.60 | 96.36 | 95.59 | 96.50 | 96.30 | 95.60 | 96.42 | 95.93 | 96.20 | 96.07 ± 0.34 |
| TrL (training loss) | 0.1094 | 0.1360 | 0.1058 | 0.1278 | 0.1048 | 0.1120 | 0.1340 | 0.1589 | 0.1894 | 0.1012 | 0.1279 ± 0.03 |
| VaA (validation accuracy, %) | 95.93 | 92.85 | 94.53 | 92.99 | 94.24 | 95.22 | 92.91 | 94.41 | 94.13 | 94.78 | 94.20 ± 0.97 |
| VaL (validation loss) | 0.1281 | 0.1919 | 0.1804 | 0.2398 | 0.1964 | 0.2109 | 0.2034 | 0.2078 | 0.265 | 0.1831 | 0.2007 ± 0.03 |
| TsA (test accuracy, %) | 94.26 | 89.92 | 94.40 | 92.30 | 94.54 | 94.30 | 93.12 | 94.55 | 93.30 | 94.36 | 93.50 ± 1.39 |
| TsL (test loss) | 0.2009 | 0.2684 | 0.1618 | 0.2219 | 0.1589 | 0.1789 | 0.245 | 0.2719 | 0.2930 | 0.1680 | 0.2160 ± 0.047 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bhandari, M.; Yogarajah, P.; Kavitha, M.S.; Condell, J. Exploring the Capabilities of a Lightweight CNN Model in Accurately Identifying Renal Abnormalities: Cysts, Stones, and Tumors, Using LIME and SHAP. Appl. Sci. 2023, 13, 3125. https://doi.org/10.3390/app13053125
