1. Introduction

Advanced artificial intelligence (AI), especially machine learning (ML) and deep learning (DL) [1], is a dominant factor in emerging technologies as well as in the traditional social and economic sciences. Human society expects ML and DL to solve intricate problems, reduce human fatigue, and offer reliable projections [2]. As AI becomes part of our lives and its decisions affect our everyday activities, the demand for transparent and explainable methods has grown [3].

The response of the AI community to these needs has catalyzed research on explainable artificial intelligence (XAI) [4,5] methods. However, XAI is a young discipline, established only as recently as 2018 [6], although earlier significant research papers had already pointed out the need for explainable models [7]. XAI refers not only to the technical aspects of DL models that ensure some level of interpretability but also integrates the concepts of data privacy and accountability [8].

Combining learning from data with human knowledge drawn from the expertise and scientific training of those who develop AI systems is a promising approach. The fuzzy cognitive map (FCM) approach [9,10,11] may serve as such a bridge. FCMs unite elements and methods of fuzzy logic and neural networks (NNs). They are a soft computing method for modelling fuzzy and unstable conditions. An FCM describes a system with a graph that displays concepts and their relationships. FCMs are intended to model human knowledge, not to discover it from raw data. They belong to the traditional AI methods, but their evolution makes them suitable candidates for solving complex problems, a field currently dominated by ML and DL.

The nature of FCMs makes them interpretable and explainable [12]. In the present paper, we argue for FCMs and their suitability for presenting explainable decisions and for working alongside advanced ML and DL frameworks.

To the best of our knowledge, this research is the first to discuss the aspects and applications of FCMs from the XAI perspective. Recent literature provides insights into the functionalities of FCMs and their applicability in various domains. At the same time, much has been written about the concepts of XAI and how they are addressed in AI-based solutions. However, the relationship between FCMs and XAI has not yet been well documented or demonstrated.

The paper also aims to introduce the essential aspects of FCMs to practitioners and newcomers in the field of AI and to discuss the issue of explainability through examples. The contributions of this research can be summarized as follows:

The nature and functions of FCMs are thoroughly analyzed from the point of view of explainable artificial intelligence and its properties;

Key literature is presented to demonstrate the significance of FCMs, both in terms of their performance and their interpretability;

The results demonstrate that FCMs are both in accordance with the XAI directives and have many successful applications in domains such as medical decision-support systems, energy savings, precision agriculture, environmental monitoring, and policy-making for the public sector;

The authors argue that the fields of ML, DL, and FCMs can be combined to develop robust AI solutions that are explainable and able to handle multiple sources of data, including human knowledge.

Critical studies deploying FCMs are briefly discussed to support the topic.

Section 2 briefly discusses the background theory of FCMs. New practitioners will be informed about the main aspects of AI methods focused on the learning process and on knowledge extraction or encapsulation. Critical XAI perspectives of FCMs are presented in Section 3. The reader will understand the inherently explainable nature of FCMs and their limitations in contrast with learning methods. The study also discusses the potential cooperation of diverse practices in ensemble systems, which merge the learning capabilities of networks with the causality-oriented operations of FCMs. Conclusions are drawn in Section 4.

2. Related Work

Recent review papers have focused on the applications of FCMs in various domains.

In [13], the authors introduce fundamental concepts related to FCMs and examine their characteristics in static and dynamic contexts. They also explore existing algorithms for building FCM structures and then present an overview of theoretical advancements in the field from a goal-oriented perspective. The authors discuss the use of FCMs for time series forecasting and classification tasks, and the paper reviews software tools for developing baseline FCMs.

In [14], the authors explore the potential of FCMs for solving pattern classification problems. More precisely, they present and discuss recent developments in FCM-based classifiers and the outstanding challenges to be addressed. The authors conclude that, despite the identified limitations and obstacles, the transparency provided by cognitive mapping continues to inspire researchers to create interpretable FCM-based classifiers. FCM-based models offer several other appealing features, including the detection of hidden patterns, flexibility, dynamic behaviour, combinability, and adjustability from multiple perspectives.

Amirkhani et al. [15] reviewed the significant decision-making methods and medical applications of FCMs in recent years. To evaluate the effectiveness and potential of various FCM models in creating medical decision-support systems (MDSSs), they classified medical applications into four essential categories: decision-making, diagnosis, prediction, and classification. The paper also examines different diagnostic and decision-support issues that FCMs have addressed, with the aim of presenting various FCMs and assessing their contribution to advances in medical diagnosis and treatment.

Olazabal et al. [16] presented a set of best practices to increase transparency and reproducibility in map-building processes and thereby enhance the credibility of FCM results. The proposed practices were illustrated with a case study examining the impacts of heatwaves and adaptation options in an urban environment. Agents from various urban sectors were interviewed to obtain individual cognitive maps. The research suggests best practices for collecting, digitizing, interpreting, pre-processing, and aggregating individual maps in a traceable and coherent manner. The authors defined the following practices for building transparent FCMs: (i) design practices should include clear guidelines for building FCMs from individual maps to ensure comparability and reduce data treatment needs, (ii) a structured and understandable workbench should be used for data treatment to guarantee the traceability of any potential changes, (iii) homogenization methods should be aligned with both scientific requirements and stakeholder needs to ensure the coherency and usability of the final output, (iv) replicable aggregation methods built on clear aggregation rules should also be used to ensure reproducibility, and (v) metrics such as the mean and standard deviation should be provided for concepts and connections to guarantee the interpretability of the final map.

The research mentioned above collects and analyzes in depth the main characteristics of FCMs. These studies also list the latest developments of these networks and the applications that have been proposed in several areas. In most papers, however, the explainability and transparency of FCMs are merely mentioned, without being documented or being the main objective of the paper. Therefore, the relationship between FCMs and XAI needs to be documented more thoroughly, both at a theoretical level, by analyzing how FCMs operate, and at a practical level, by citing examples that demonstrate their importance.

The latter is critical because FCMs are among the few methodologies that explicitly model the causality between phenomena and features. The cause-and-effect relationships are derived from collective human knowledge, which FCMs aim to model.

3. Materials and Methods

3.1. Fuzzy Cognitive Maps

Fuzzy cognitive maps (FCMs) are a type of mathematical modelling tool used to represent and analyze complex systems. FCMs are a form of artificial NN, inspired by how the human brain processes and stores information.

In an FCM, a system is represented by a set of interconnected nodes, or “concepts”, representing various aspects of the system. These nodes are connected by weighted edges, representing the concepts’ causal relationships.

Unlike the classical cognitive maps from which they derive, whose edges take crisp values (e.g., −1, 0, or +1), FCMs use fuzzy logic, which means that the edge weights can take continuous values, typically within the interval [−1, 1]. This allows FCMs to represent more complex and uncertain relationships between concepts.

FCMs are commonly used in engineering, economics, and management fields to model and analyze complex systems, such as supply chains, financial markets, and organizational structures. FCMs can be used to simulate the behaviour of a system under different conditions, to identify key drivers and feedback loops, and to explore the potential outcomes of different decision-making scenarios.

3.1.1. A Brief History of FCMs

FCM theories originated from the graph theory back in the 18th century. A graph is a union of nodes connected with arrows that imply causality. Graph theory combines graphs and mathematical formulas to model the relations between objects of the same collection [

17]. Later, Barnes [

18] linked the graph theory and network analysis. Robert Axelrod [

11] first introduced the concept of using digraphs to express causal relations among variables determined by groups of experts. This conception was titled sediagraphs, namely, cognitive maps (CM). Later, Kosko [

9] extended CMs, which were naturally binary. Fuzzy causal functions were introduced among the connections. As a result, FCMs emerged. In their classical formation, FCMs differ from ML and DL because they are not intended to learn from but to model human expertise (

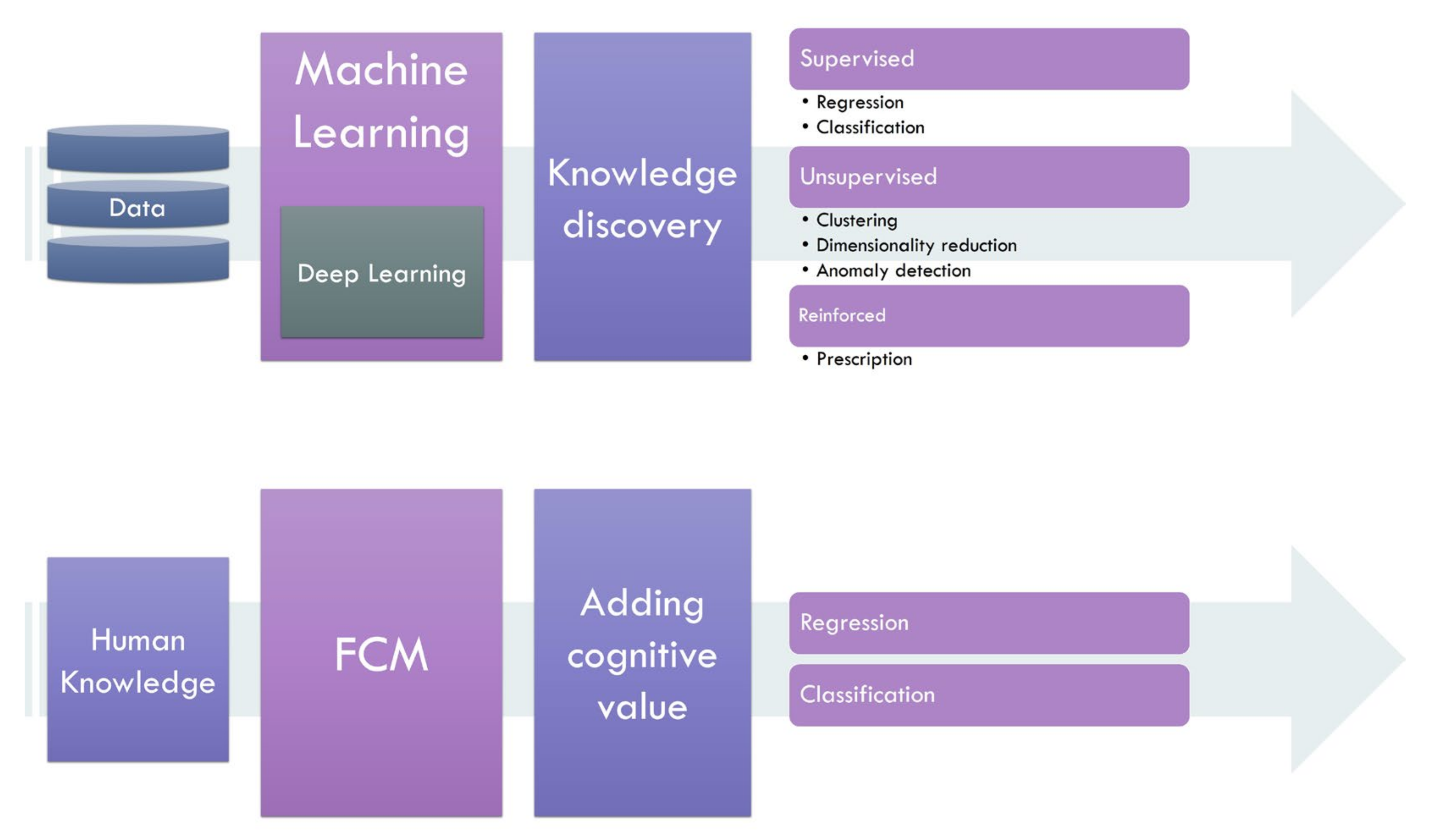

Figure 1).

3.1.2. FCMs in a Nutshell

In their visual form, FCMs consist of a signed directed graph, which includes all the input concepts ($C_i$) and the weighted arcs ($W_{ij}$) that express their relationships [10]. There may be one or more output concepts. In their classical representation, the experts determine the initial weight values ($W_{ij}$), which are usually expressed verbally. For example, the influence of concept $C_1$ on concept $C_2$ may be specified as “Very Strong”. This entails that even a slight change in the value of $C_1$ would dramatically affect the value of $C_2$. Because of the many interconnections, computing the concept values does not involve a single forward pass but requires many iterations until the system stabilizes.

Figure 2 illustrates a graph display of an FCM.

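The mathematical operation formulates the influence of each concept on the others and computes the updated values. The function that determines the value of each concept at the end of each iteration is usually described by the following expression [12,19,20]:

$$C_i^{(k+1)} = f\left(C_i^{(k)} + \sum_{j=1,\, j \neq i}^{N} W_{ji}\, C_j^{(k)}\right) \qquad (1)$$

In Equation (1), $C_i^{(k)}$ is the value of concept $C_i$ at iteration $k$, $N$ is the number of concepts, $W_{ji}$ is the weight of the causal influence of $C_j$ on $C_i$, and $f$ is the activation function, which normalizes the result of the operation and constrains it within specific limits.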
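The iterative computation described above can be sketched in Python as follows. This is a minimal illustration, not the implementation used in the cited works. It assumes the common form of the update rule with a self-memory term, $C_i^{(k+1)} = f(C_i^{(k)} + \sum_{j \neq i} W_{ji} C_j^{(k)})$, a sigmoid activation $f$ with slope `lam`, and a convergence tolerance `tol`. The weight values in the example are hypothetical.

```python
import math


def sigmoid(x: float, lam: float = 1.0) -> float:
    """Sigmoid activation: squashes any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def fcm_step(state, weights, lam=1.0):
    """Apply the update rule once to every concept (synchronous update).

    weights[j][i] holds W_ji, the causal influence of concept C_j on concept C_i.
    """
    n = len(state)
    new_state = []
    for i in range(n):
        total = state[i]  # self-memory term C_i^(k)
        for j in range(n):
            if j != i:
                total += weights[j][i] * state[j]  # weighted influence of C_j
        new_state.append(sigmoid(total, lam))
    return new_state


def run_fcm(state, weights, max_iter=100, tol=1e-5, lam=1.0):
    """Iterate until successive states differ by less than tol (or max_iter is hit).

    Returns the final state and the number of iterations performed.
    """
    for k in range(1, max_iter + 1):
        new_state = fcm_step(state, weights, lam)
        if max(abs(a - b) for a, b in zip(new_state, state)) < tol:
            return new_state, k
        state = new_state
    return state, max_iter


if __name__ == "__main__":
    # Hypothetical 3-concept map: C1 -> C2 (+0.7), C2 -> C3 (+0.6), C3 -> C1 (-0.4).
    W = [
        [0.0, 0.7, 0.0],
        [0.0, 0.0, 0.6],
        [-0.4, 0.0, 0.0],
    ]
    final_state, iterations = run_fcm([0.5, 0.2, 0.1], W)
    print(f"stabilized after {iterations} iterations:", [round(v, 3) for v in final_state])
```

The stopping rule mirrors the statement that the map is iterated "until the system is stabilized"; a fixed iteration count, as in the construction steps below, is an equally valid choice.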

The construction of an FCM is achieved as follows:

The stakeholders are gathered, and they decide the input concepts, their potential initial state, and the output concepts;

The stakeholders determine the causal relationships among the concepts;

The engineers determine the concept value update function, the activation function, and the number of iterations. They also specify any other parameters involved.

The following pseudo-code describes the steps of FCM modelling and functionality:

Every input concept’s verbal expression or potential values are transformed into numerical forms;

Every verbally assigned weight is transformed into a numerical form that usually lies within the interval [−1, 1];

The FCM is allowed to update the concept values using Equation (1) for a specific number of iterations;

The engineers and the stakeholders examine the final states of the input and the output concepts and explain the results.
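The four steps above can be sketched end to end as follows. The linguistic scale in `TERM_VALUES`, the averaging of several experts' opinions, the sigmoid activation, and the agricultural concept names are all illustrative assumptions. Actual studies define their own scales (often through fuzzy membership functions and defuzzification) and their own aggregation rules.

```python
import math

# Hypothetical linguistic scale; each study defines its own (e.g., by
# defuzzifying triangular membership functions). A leading "-" marks a
# negative (inhibiting) influence.
TERM_VALUES = {
    "very weak": 0.1,
    "weak": 0.3,
    "medium": 0.5,
    "strong": 0.7,
    "very strong": 0.9,
}


def term_to_number(term):
    """Steps 1-2: turn a verbal term such as '-strong' into a number in [-1, 1]."""
    sign = -1.0 if term.startswith("-") else 1.0
    return sign * TERM_VALUES[term.lstrip("-").strip()]


def build_weights(concepts, edges):
    """Average each edge's verbal ratings across experts into matrix W[source][target]."""
    index = {name: k for k, name in enumerate(concepts)}
    w = [[0.0] * len(concepts) for _ in concepts]
    for (source, target), terms in edges.items():
        values = [term_to_number(t) for t in terms]
        w[index[source]][index[target]] = sum(values) / len(values)
    return w


def simulate(initial, w, iterations=20):
    """Step 3: apply the update rule (sigmoid activation) for a fixed number of iterations."""
    state = list(initial)
    n = len(state)
    for _ in range(iterations):
        # The comprehension reads the old state throughout, so updates are synchronous.
        state = [
            1.0 / (1.0 + math.exp(-(state[i] + sum(w[j][i] * state[j] for j in range(n) if j != i))))
            for i in range(n)
        ]
    return state


if __name__ == "__main__":
    concepts = ["irrigation", "soil_moisture", "crop_yield"]
    # Two hypothetical experts rate each causal link verbally.
    edges = {
        ("irrigation", "soil_moisture"): ["strong", "very strong"],
        ("soil_moisture", "crop_yield"): ["medium", "strong"],
    }
    initial = [term_to_number(t) for t in ["strong", "weak", "weak"]]
    final = simulate(initial, build_weights(concepts, edges))
    # Step 4: report final concept values for joint interpretation with stakeholders.
    for name, value in zip(concepts, final):
        print(f"{name}: {value:.3f}")
```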

Recent advances in FCMs circumvent the static nature of classical FCMs by introducing state concepts and their accompanying equations [21,22] or learning algorithms [23,24,25]. In this way, FCMs can learn some of the interconnections, whereas experts specify the others. Therefore, FCMs may embody human knowledge and discover underlying patterns in data (Figure 3). Conventional FCMs require a rigorous description of the system's inputs and outputs and of the significance of their interactions [21]. Advanced FCM topologies allow some degrees of freedom to learn from the input data, either by assigning a preset baseline weight value that represents the baseline causality between two concepts or by freezing some weights that are known beforehand and allowing other interconnections to be learned [26].
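To make the idea of freezing expert-defined weights while learning others concrete, the following sketch fits only the unfrozen weights of a small FCM by gradient descent on one-step prediction errors. This is one simple possibility assumed for illustration, not one of the specific learning algorithms cited above. The `trainable` mask, the learning rate, the clipping to [−1, 1], and the synthetic data generated from a hypothetical "true" map are all assumptions.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(x, w):
    """One FCM update step: C_i <- f(C_i + sum_{j != i} W_ji * C_j)."""
    n = len(x)
    return [sigmoid(x[i] + sum(w[j][i] * x[j] for j in range(n) if j != i)) for i in range(n)]


def fit_free_weights(samples, w, trainable, lr=0.5, epochs=2000):
    """Gradient descent on the mean squared one-step error.

    Only entries with trainable[j][i] == True are updated; all other
    (expert-defined) weights stay frozen. Weights are clipped to [-1, 1].
    """
    n = len(w)
    w = [row[:] for row in w]  # do not modify the caller's matrix
    for _ in range(epochs):
        grad = [[0.0] * n for _ in range(n)]
        for x, y in samples:
            p = predict(x, w)
            for i in range(n):
                # derivative of 0.5 * (p_i - y_i)^2 w.r.t. z_i, where p_i = sigmoid(z_i)
                delta = (p[i] - y[i]) * p[i] * (1.0 - p[i])
                for j in range(n):
                    if j != i and trainable[j][i]:
                        grad[j][i] += delta * x[j]  # dz_i / dW_ji = x_j
        for j in range(n):
            for i in range(n):
                if trainable[j][i]:
                    updated = w[j][i] - lr * grad[j][i] / len(samples)
                    w[j][i] = max(-1.0, min(1.0, updated))
    return w


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical ground truth, used only to generate synthetic observations.
    true_w = [[0.0, 0.8, 0.0], [0.0, 0.0, -0.5], [0.3, 0.0, 0.0]]
    samples = []
    for _ in range(30):
        x = [random.random() for _ in range(3)]
        samples.append((x, predict(x, true_w)))

    # Experts know C1 -> C2 = 0.8 (frozen); C2 -> C3 and C3 -> C1 are left to learn.
    expert_w = [[0.0, 0.8, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
    trainable = [[False, False, False], [False, False, True], [True, False, False]]
    learned = fit_free_weights(samples, expert_w, trainable)
    print("learned C2->C3:", round(learned[1][2], 3), "learned C3->C1:", round(learned[2][0], 3))
```

The mask keeps the expert's causal structure intact: the frozen entry is never touched, so the learned map remains directly readable against the original expert specification.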

3.2. Explainable Artificial Intelligence

Most ML and DL methods are opaque because of their depth, their mathematical complexity, and the backpropagation-based training through which their many parameters are fitted. The presence of many layers and repeated mathematical operations impedes the interpretation of what the models have learned. ML and DL methods are supposed to learn from the training data, discover underlying non-linear relationships between the data points, and, hopefully, generate new knowledge.

With the aid of explainability and interpretability methods, the engineers and developers of such models can discover hidden relations. However, correlation or relation does not imply causality. The reverse generally holds: causally linked variables are usually related, although not necessarily through a linear correlation. The cause-and-effect relationship between phenomena and data points can be validated only through human reasoning. ML and DL models can provide evidence of causality and can suggest potential interconnections, but human knowledge and experience are needed to give definite answers as to whether causality exists.

XAI is an emerging field referring to AI methods that explain their decisions. There is no strict definition of XAI, and several papers have aimed to set ground rules, define XAI principles, and discuss the topic. For example, Phillips et al. [27] described four principles of XAI: explanation, meaningfulness, explanation accuracy, and knowledge limits. Arrieta et al. [4] classified XAI aspects as follows: (a) trustworthiness, (b) causality, (c) transferability, (d) informativeness, (e) confidence, (f) fairness, (g) accessibility, (h) interactivity, and (i) privacy awareness.

Although the importance of XAI is recognized in theory, researchers are still striving to determine universal criteria for providing and validating model explanations [28]. It is generally agreed, however, that XAI criteria are subjective and problem-oriented. For example, the requirements for an explainable model operating in the medical field are determined by the experts who will use the model, in coordination with the relevant authorities. In addition, the type of problem the AI solves is crucial for determining the XAI criteria.

4. Results

4.1. FCMs and Trust

Trust is directly related to the model's effectiveness [4]. In classification tasks, specific metrics (e.g., accuracy, precision, recall, F1 score, and AUC score) are measured to determine the robustness of the developed model. The F1 score is the harmonic mean of the model's precision and recall and is particularly informative when there is a class imbalance. The AUC score is the area under the receiver operating characteristic (ROC) curve, which plots the true-positive rate against the false-positive rate across all decision thresholds. It measures how well the model ranks positive instances above negative ones and takes values between 0 and 1, where 1 indicates perfect discrimination and 0.5 corresponds to random guessing. AUC is therefore a useful tool for comparing the discriminative performance of different models.
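As a concrete illustration of these metrics, the following sketch computes them from scratch for a binary classifier. AUC is computed through its pairwise (Mann–Whitney) interpretation, which equals the area under the ROC curve. The labels, scores, and 0.5 decision threshold are hypothetical. In practice, libraries such as scikit-learn provide equivalent functions.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives/negatives for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]  # imbalanced: 3 positives, 5 negatives
    scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2, 0.1, 0.05]
    y_pred = [1 if s >= 0.5 else 0 for s in scores]
    print(classification_metrics(y_true, y_pred))  # accuracy 0.75; precision = recall = F1 = 2/3
    print("AUC:", roc_auc(y_true, scores))          # 14/15: only the 0.4 vs 0.6 pair is misordered
```

The example shows why a single metric is not enough: accuracy looks acceptable at 0.75, while F1 reveals that a third of the minority class is missed at this threshold.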

In studies on object detection, image segmentation, and regression, appropriate metrics are used to measure the model’s robustness.

However, trust as a notion does not have a specific meaning in AI. Equating an algorithm's accuracy with our confidence in it is a simplification. Developing a reliable ML model typically involves the steps in Table 1.

Fuzzy cognitive maps are typically knowledge-driven rather than data-driven models. Their aim is to incorporate human expertise in problem-solving tasks. However, their effectiveness cannot be measured without real-case examples, even if these are few. As long as we trust human knowledge, we can trust that a suitable FCM can model it, even in very demanding challenges and changing environments. If we are sure of the authenticity of the scientific knowledge of those involved in creating the model, we can be confident in the model itself, provided that it does, indeed, work. Trust is also related to our confidence in the model's technical creators; however, this applies to everything that humans create, and it makes no sense to discuss it further specifically for FCMs. Fuzzy cognitive maps are potentially trustworthy because they incorporate human knowledge. In [28], the authors highlight the human-in-the-loop philosophy as a vital component of every AI method, and FCMs follow precisely this philosophy.

In [22], the authors developed a state-space FCM to predict coronary artery disease from several clinical attributes, including symptoms, recurrent diseases, and diagnostic tests. The FCM achieved an accuracy of 85.47% in discriminating between healthy and diseased subjects in a dataset of 303 instances. Although this model had no learning freedom, it succeeded in modelling human knowledge, since the experts had defined the weights of each concept. The authors compared the model with ML algorithms, which performed worse and showed considerable underfitting. In a similar study [19], the authors benchmarked additional ML algorithms on the same task. Again, the ML algorithms failed to learn from the input data, obtaining an accuracy 10% lower than that of the FCM in [22].

Trust is also related to the type of model evaluation and the associated data. Incomplete data lead to incomplete and biased ML and DL models. A carefully designed validation process is needed to assess a model's ability to adapt to changing attributes and to variations in data distributions. ML and DL models occasionally suffer from overfitting, which hinders their generalization capabilities; external validation is therefore essential for determining a model's effectiveness.

Validation with real-life data is also important when modelling with FCMs. However, FCM modelling inherently allows slight modifications that ensure suitable adaptation to new domains, for example, adjusting the initial weights of the concepts or adding new concepts to capture the contemporary notions of the target domain. In ML and DL, domain adaptation is achieved through re-training [29,30], which is time-consuming. These topics are discussed in depth in Section 4.2 (FCMs and Transferability).
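The kind of expert-driven adaptation mentioned above, adjusting weights or adding a concept without re-training, amounts to editing the weight matrix. The sketch below assumes the convention `weights[source][target]` for the causal weights. The function names `add_concept` and `set_weight` and the transportation concepts are hypothetical.

```python
def add_concept(concepts, weights, name, outgoing=None, incoming=None):
    """Return copies of the concept list and weight matrix with one new concept.

    outgoing: {existing_target: weight}, influence of the new concept on others.
    incoming: {existing_source: weight}, influence of others on the new concept.
    Existing weights are left unchanged.
    """
    outgoing = outgoing or {}
    incoming = incoming or {}
    index = {c: k for k, c in enumerate(concepts)}
    n = len(concepts)
    new_w = [row[:] + [0.0] for row in weights]  # copy rows and add an empty column
    new_w.append([0.0] * (n + 1))                 # add an empty row for the new concept
    for target, value in outgoing.items():
        new_w[n][index[target]] = value
    for source, value in incoming.items():
        new_w[index[source]][n] = value
    for row in new_w:
        if any(abs(v) > 1.0 for v in row):
            raise ValueError("weights must lie in [-1, 1]")
    return concepts + [name], new_w


def set_weight(concepts, weights, source, target, value):
    """Return a copy of the weight matrix with one expert-adjusted causal weight."""
    if abs(value) > 1.0:
        raise ValueError("weights must lie in [-1, 1]")
    new_w = [row[:] for row in weights]
    new_w[concepts.index(source)][concepts.index(target)] = value
    return new_w


if __name__ == "__main__":
    concepts = ["traffic_volume", "public_transport", "congestion"]
    weights = [
        [0.0, 0.0, 0.8],   # traffic volume increases congestion
        [-0.5, 0.0, 0.0],  # public transport reduces traffic volume
        [0.0, 0.3, 0.0],   # congestion increases public transport use
    ]
    # Experts in the new city add a concept and re-weight one link.
    concepts2, weights2 = add_concept(
        concepts, weights, "bike_lanes",
        outgoing={"traffic_volume": -0.3},
        incoming={"congestion": 0.4},
    )
    weights2 = set_weight(concepts2, weights2, "traffic_volume", "congestion", 0.6)
    for name, row in zip(concepts2, weights2):
        print(name, row)
```

Because every change is an explicit, named edit to a causal link, the adapted map can be reviewed by the new domain's stakeholders line by line, which is not possible for the weight updates produced by re-training a deep network.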

4.2. FCMs and Transferability

Transferability usually applies to machine learning methods, such as NNs or convolutional neural networks (CNNs). Training and test data are typically chosen by randomly partitioning examples drawn from the same distribution, and a model's generalization error is then judged by the discrepancy between its performance on the training and test data. It is often necessary to verify the transferability of the model using external validation sets, whose distributions may differ from that of the training set. This is how experts measure the generalization ability of the developed models. Humans, however, exhibit a far richer capacity to generalize, transferring learned skills to unfamiliar situations.

The most representative examples of transferable ML networks come from the DL domain. DL models trained on the ImageNet database [31,32] have been observed to be transferable and to require less data for re-training. They can even be adapted for feature extraction in entirely different domains [33,34]. However, they still need some data to adjust to the new domain and to classify the extracted features.

FCMs may or may not use data [35]. Their ability to generalize depends strongly on their design, their degrees of learning freedom, and the problem they are solving [35].

Table 2 presents a simplified pipeline for transferring AI models from one domain to another. It shows that FCMs are more flexible in this respect, although transferring them requires assembling new human expertise and stakeholders. FCMs are designed to capture cause-and-effect relationships between concepts or variables in a system, which makes them potentially transferable to new systems or problems. However, the extent to which FCMs can be transferred depends on several factors, such as the similarity between the original system and the new system, the availability and quality of data, and the complexity of the systems being modelled.

In general, FCMs are more transferable when the underlying systems or problems share similar structures and dynamics. For instance, an FCM developed to model a transportation system may be more transferable to a similar transportation system in a different city or region than to a completely different system, such as a financial market. Additionally, the transferability of FCMs can be improved by incorporating domain-specific knowledge and expertise to refine the model and ensure its applicability to the new system.

A very intuitive example of the transferability of FCMs is presented in [36], where the authors propose an FCM model to predict the spread of COVID-19 across different countries and find it applicable in three countries. The model was designed for global use, which is reflected in its input concepts; this is why it applies to varying situations.

In [37], the authors propose integrating FCMs and multi-agent systems to build an online platform that improves decision-making in farm field management, with the aim of reducing resource use and increasing income. Precision agriculture is supported by sensors that monitor the crops. Although the proposed pipeline was not validated in real-life scenarios, it is highly transferable because it would only require the participation of human experts from the new domain to adjust its parameters.

Irani et al. [38] proposed an approach to support policymakers in evaluating their policy options to enhance food security by reducing waste within the food chain. The study demonstrated that recurrent organizational factors contribute substantially, in a causal sense, to food waste reduction. These factors include food market competition, the impact of food imports on customers, standardized food regulations, ease of market access, level of bureaucracy, and more. Although it is subject to stakeholders' beliefs, the developed model is easily transferable to any food chain because its concepts fit any such situation. Transferring the model would require gathering the policymakers involved in the new application domain.

On the other hand, many FCMs are not directly transferable to new domains because they depend on local-domain concepts that do not exist elsewhere. Such models require considerable effort to become suitable for new tasks.

4.3. FCM and Causality

Causality, or the relationship between cause and effect, is a fundamental principle that applies universally to all phenomena. It is essential to many fields, including medicine, physics, technology, geology, economics, business, sociology, and law. Despite its importance, the main theories of causation and the debates surrounding them are not well understood. Causal factors are an integral part of any process, and they always lie in the past. It is undeniable that all phenomena in the world give rise to specific consequences and have themselves been caused by other phenomena. In medicine, as in all fields, understanding causation is crucial.

All causes are part of a process, and the effects they produce can, in turn, become causes of other effects in the future. The connection between cause and effect unfolds over time, and it is essential to understand this relationship in various fields of study, including medicine. It is universally recognized that a cause always precedes its effect and that there is an interval between the time when the cause begins to act and the time when the effect appears. Cause and effect coexist for some time; then the cause dies out, while the effect ultimately becomes the cause of something else. This holds true in medicine, for example, where many medical problems are studied and treated over a period of time.

Correlation refers to a relationship or connection between two or more things. In statistics, the most common correlation coefficient (Pearson's) measures the strength of the linear relationship between two quantitative variables, such as temperature and humidity. More generally, correlation measures the extent to which two variables observed on the same group of elements tend to vary together. For instance, both excessive tobacco consumption and excessive alcohol consumption are independently associated with an increased risk of several types of cancer.

Moreover, non-trivial problems are not always linear. Therefore, not only does correlation not imply causality, but causality does not always manifest as correlation [39]. We can measure how strongly two variables correlate, but this alone does not reveal any cause-and-effect relationship. Five types of causality-related problems can be distinguished, as seen in Table 3.
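A small numerical example shows why causality need not appear as correlation. Assume, purely for illustration, that a variable y is generated from x through the non-linear mechanism y = x², with x sampled symmetrically around zero. Although y depends entirely on x, the Pearson coefficient is essentially zero, whereas a linear mechanism yields a coefficient of one.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient: strength of the *linear* relationship."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


if __name__ == "__main__":
    x = [k / 10 for k in range(-10, 11)]  # values symmetric around 0
    y_quadratic = [v * v for v in x]      # y is fully determined by x
    y_linear = [2 * v + 1 for v in x]
    print("quadratic:", round(pearson(x, y_quadratic), 6))  # close to 0
    print("linear:", round(pearson(x, y_linear), 6))        # close to 1
```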

Although ML and DL methods cannot by themselves establish causality, they can suggest potential cause-and-effect relationships [40]. For example, the Random Forest algorithm can rank the importance of each feature in a dataset. Such rankings can hint at potential causal relationships among the attributes, although they reflect predictive association rather than proven causation.

In deep networks, such as NNs and CNNs, post-hoc explainability algorithms and methods are essential for gaining insights into the importance of the extracted features.

In problems wherein the causality between the nodes is defined by human expertise, there is no need for implementing learning methodologies. FCMs are suitable for modelling this knowledge and operation.

Various problems involve both known interconnections between concepts and ambiguous relations that we are keen to discover. In such cases, FCMs realize their full potential, as they can combine human knowledge modelling with the freedom to learn within specific constraints. ML and DL methods can be adapted to this situation, but narrowing their learning freedom and freezing some weights would require considerable effort.

In the work of Alipour [41], the developed FCM revealed that the development of solar photovoltaics in Iran is affected by severe economic uncertainty, exogenous factors, and proactive political and economic parameters. The authors not only modelled the known causal relationships but were also able to suggest further relationships.

In the work of Morone et al. [42], the authors showed that some policy drivers, such as “public food waste rules”, “investments and infrastructure”, and “small-scale farming”, are particularly effective in supporting a new and sustainable food consumption model. Their FCM successfully captured the causality among the involved attributes.

In [43], the authors developed an analytical framework for devising policy strategies to optimize municipal waste management systems (MWMSs) in the case of the Land of Fires (LoF) and simulated different waste management strategies. The authors identified the optimal strategy for the LoF MWMS, which includes fostering social innovation through information-based instruments and promoting innovation in material and process design.

Ziv et al. [44] studied the potential impact of Brexit on the UK's energy, water, and food (EWF) nexus. They designed an FCM that revealed an unexpected decrease in EWF demand under a “hard-Brexit” scenario.

When cause-and-effect relationships remain undiscovered, training data are required. Learning from training data is a feature of ML and DL models; however, these models cannot adequately explain their decisions. FCMs, by contrast, can be combined with learning algorithms to reveal such interconnections, thereby departing from their purely knowledge-driven nature and becoming learning machines. A plethora of recent FCM-oriented studies have suggested cause-and-effect relationships in this way.

4.4. FCM and Transparency

New regulations in the European Union have proposed that people affected by algorithmic decisions have a right to an explanation [45]. Exactly what form such an explanation should take, and how compliance with this right can be demonstrated, remains an open question. Moreover, the same regulations suggest that algorithmic decisions should be contestable.

In decision-making concerning economic issues or strategies that involve the public sector, users demand transparent models. To this end, FCMs are broadly preferred for modelling. For example, in [46], a grey-based green supplier-selection model was developed to support supplier selection by integrating environmental and economic criteria. Although the proposed model is subjective because it relies on the judgments of financial experts, it is transparent enough to guard against introduced biases. The model is also transferable because it can fit any supplier-selection challenge, regardless of the economic sector.

Ferreira et al. [47] proposed an FCM model for determining residents' satisfaction with their neighbourhoods in urban communities. The model was implemented in the central–west region of Portugal. The study revealed that, besides physical and social factors, some underestimated factors, such as abandoned buildings, can severely affect people's happiness in their place of residence. The transparency of the model enables stakeholders to inspect why and how such factors contribute to the outcome.
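The following sketch illustrates the kind of inspection that this transparency enables. In the update rule, the input to a concept's activation is a sum of individual terms, each attributable to one causing concept, so it can be listed and ranked. The function name, concept names, weights, and values are hypothetical and are not taken from [47].

```python
def explain_concept(concepts, state, weights, target):
    """Decompose the pre-activation input of `target` into per-concept terms.

    The input is C_i + sum_{j != i} W_ji * C_j, with weights[j][i] = W_ji.
    Terms are returned sorted by absolute size, largest first.
    """
    i = concepts.index(target)
    terms = [(f"{target} (previous value)", state[i])]
    for j, name in enumerate(concepts):
        if j != i and weights[j][i] != 0.0:
            terms.append((name, weights[j][i] * state[j]))
    return sorted(terms, key=lambda term: abs(term[1]), reverse=True)


if __name__ == "__main__":
    concepts = ["abandoned_buildings", "green_spaces", "safety", "satisfaction"]
    state = [0.8, 0.4, 0.6, 0.5]
    weights = [
        [0.0, 0.0, -0.4, -0.6],  # abandoned buildings reduce safety and satisfaction
        [0.0, 0.0, 0.0, 0.5],    # green spaces raise satisfaction
        [0.0, 0.0, 0.0, 0.7],    # safety raises satisfaction
        [0.0, 0.0, 0.0, 0.0],
    ]
    for name, contribution in explain_concept(concepts, state, weights, "satisfaction"):
        print(f"{name:>30}: {contribution:+.2f}")
```

The output is an exact, additive account of what drives the concept before the activation function is applied, which is the kind of "why and how" answer a stakeholder can check against their own understanding.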

4.5. FCM’s Informativeness and Fairness

The comprehensibility of FCMs, their ability to visualize the procedure, and their transparency at every step allow users to maintain complete control over the decisions. The nature of FCMs enables them to present the user with the entire reasoning in both quantitative and qualitative ways.

FCMs are inherently transparent, which increases the information the user can leverage. Table 4 presents the critical operations of FCMs and the level of human involvement in each.

FCMs are often depicted as directed graphs, where the nodes represent concepts or variables and the edges represent causal relationships between them. The strength and direction of the edges can be represented using fuzzy logic, which allows for uncertainty and imprecision in the relationships to be accounted for.

The transparency of FCMs also stems from their ability to allow for easy interpretation and modification by domain experts. FCMs can be easily modified by adding or removing concepts or variables, changing the strength of the relationships, or adding new relationships as needed. This allows domain experts to incorporate their knowledge and expertise into the model, which can help improve its accuracy and applicability to real-world problems.

However, the transparency of FCMs may be limited by the complexity of the system being modelled as well as the number of variables and relationships involved. In complex systems with many variables and relationships, it may be difficult to visualize and interpret the FCM, which can make it less transparent. Additionally, the use of fuzzy logic to represent relationships may add an additional layer of complexity to the model, which may make it more difficult to understand for those unfamiliar with the concept.

A very intuitive example of the informative behaviour of FCMs is presented in [48]. The authors developed an FCM to model the social acceptance of establishing waste biorefinery facilities. The study combined experts’ opinions and stakeholders’ needs, collected through questionnaires, to determine the input concepts and their central weight values. Their model suggested that the most influential factors in social acceptance are uncertainty about the settlement of the new industry, risk perception, stakeholder participation, citizen awareness, and the equity of decision-making processes. The study presented the final model graph, the centrality of each concept, the final effect of each concept in quantifiable degrees, and the final concept values. Hence, their model reveals cause-and-effect relationships while being fully transparent and informative.
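Concept centrality is commonly computed directly from the weight matrix: a concept's outdegree is the sum of the absolute weights of its outgoing edges, its indegree is the same sum over its incoming edges, and its centrality is the sum of the two. The sketch below assumes this common definition; the concept names and weights are invented for illustration and are not the values reported in [48].

```python
def centrality(w: list[list[float]], concepts: list[str]) -> dict:
    """Outdegree, indegree, and centrality from absolute edge weights.

    w[j][i] is the weight of the edge concepts[j] -> concepts[i].
    """
    n = len(concepts)
    result = {}
    for k, name in enumerate(concepts):
        outdegree = sum(abs(w[k][i]) for i in range(n))
        indegree = sum(abs(w[j][k]) for j in range(n))
        result[name] = {"outdegree": outdegree, "indegree": indegree,
                        "centrality": outdegree + indegree}
    return result


# Invented example: three factors influencing an "Acceptance" concept.
CONCEPTS = ["Factor A", "Factor B", "Factor C", "Acceptance"]
W = [
    [0.0, 0.0, 0.0, -0.6],  # Factor A inhibits Acceptance
    [0.0, 0.0, 0.0, 0.5],   # Factor B promotes Acceptance
    [0.0, 0.4, 0.0, 0.3],   # Factor C promotes Factor B and Acceptance
    [0.0, 0.0, 0.0, 0.0],   # Acceptance is a receiver concept
]
scores = centrality(W, CONCEPTS)
ranking = sorted(CONCEPTS, key=lambda c: scores[c]["centrality"], reverse=True)
print(ranking)
```

Using absolute values lets inhibiting edges count as much as promoting ones, since both mark a concept as influential.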

Humans often justify decisions verbally. Telling the user why the FCM predicts a specific class or suggests certain actions enhances trust between the model and the user. Using standard algorithms, FCMs can translate their predictions into other formats, such as textual explanations. A significant advantage of FCMs, which aids the post-hoc interpretability of a whole system, is their ability to play a unifying role. In other words, machine learning predictions, algorithms, and rules can be parts of a bigger system. These parts can be united under an FCM, which makes the final decision based on the accuracies of the classifiers and, perhaps, some other non-trainable parameters. In this way, a non-interpretable classification method is embedded into an interpretable, user-friendly system that reduces ambiguity. For example, a study by Papageorgiou et al. [20] presented a very informative graph that reveals all the relationships among the concepts and informs the user about the assigned weights.
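As a minimal sketch of both ideas, the hypothetical decision concept below aggregates the outputs of two classifiers and one expert rule, and the result is translated into a short verbal explanation ranked by each input's contribution. Using each classifier's accuracy directly as its edge weight, the rule's negative weight, and all names and numbers are assumptions for illustration, not a method taken from the reviewed studies.

```python
import math


def explain_decision(inputs: dict, weights: dict, output_name: str,
                     threshold: float = 0.5) -> tuple[float, str]:
    """Aggregate input concepts into one output concept and explain it.

    inputs:  concept name -> activation in [0, 1]
    weights: concept name -> edge weight towards the output concept
    """
    contributions = {name: inputs[name] * weights[name] for name in inputs}
    activation = 1.0 / (1.0 + math.exp(-sum(contributions.values())))
    verdict = "supports" if activation >= threshold else "does not support"
    lines = [f"The model {verdict} '{output_name}' (activation {activation:.2f})."]
    # List inputs from most to least influential (by absolute contribution).
    for name, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]),
                          reverse=True):
        if c > 0:
            lines.append(f"- {name} raised the score by {c:.2f}.")
        elif c < 0:
            lines.append(f"- {name} lowered the score by {-c:.2f}.")
        else:
            lines.append(f"- {name} had no effect.")
    return activation, "\n".join(lines)


# Hypothetical inputs: two classifier outputs and one expert rule.
inputs = {"Image classifier": 0.8, "Lab-test classifier": 0.6,
          "Normal blood pressure (rule)": 1.0}
# Hypothetical weights: classifier accuracies, plus a negative expert weight.
weights = {"Image classifier": 0.9, "Lab-test classifier": 0.75,
           "Normal blood pressure (rule)": -0.8}
activation, explanation = explain_decision(inputs, weights, "Refer to specialist")
print(explanation)
```

The opaque classifiers remain opaque, but the way their outputs are combined, and the weight each receives, is stated in plain language.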

4.6. Cooperation

We are often in the favourable position of holding both knowledge and data about a non-linear problem. Knowledge can ensure the proper development of FCMs, the strict definition of the concepts, and the weight assignments. Data can validate our FCMs and, through ML methods, suggest new relationships. Hence, ensemble ML-FCM or FCM-DL procedures can be built in such situations (Figure 4).

A particular category of challenges involves data of diverse natures. For example, both clinical factors and diagnostic images are essential for medical diagnosis and treatment policies. Image classification and object detection are a speciality of DL, although DL does not guarantee transparency. FCMs can cooperate with DL and post-hoc explainability methods to make the overall approach more interpretable to the medical expert.

Figure 4 showcases an ensemble ML-DL-FCM pipeline that deals with problems involving multiple data types. In this pipeline, image and video data are processed by DL approaches because we aim to extract new features from them. Tabular data are analyzed by ML and FCM methods. The outputs of each method serve as inputs to a decision-making FCM, which enhances the explainability of the system. Although this approach does not by itself resolve the explainability of the image, video, and tabular data processing, it can be combined with post-hoc explainability tools to make progress on that front.
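The decision stage of such a pipeline can be sketched as follows. The three upstream model functions are hypothetical stand-ins that return fixed scores (in practice they would wrap a trained DL image model, a tabular ML model, and a tabular FCM); their outputs are clamped as input concepts of a small decision FCM, iterated with the update A_i(t+1) = f(A_i(t) + Σ_j A_j(t) w_ji). The concept names, edges, and weights are assumptions for illustration. The per-source contributions to the intermediate concept are returned alongside the result, so the final decision can be traced back to each data type.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical stand-ins for the upstream models; each returns a score in [0, 1].
def dl_image_model(image) -> float:
    return 0.82


def ml_tabular_model(row) -> float:
    return 0.64


def fcm_tabular_model(row) -> float:
    return 0.55


# Hypothetical decision FCM: (source, target) -> weight.
CONCEPTS = ["image", "tabular_ml", "tabular_fcm", "risk", "decision"]
EDGES = {
    ("image", "risk"): 0.7,
    ("tabular_ml", "risk"): 0.5,
    ("tabular_fcm", "risk"): 0.4,
    ("risk", "decision"): 0.9,
}
INPUTS = {"image", "tabular_ml", "tabular_fcm"}  # clamped to the model outputs


def decide(image, row, iterations: int = 50) -> tuple[dict, dict]:
    """Run the decision FCM; return final states and contributions to 'risk'."""
    state = {c: 0.0 for c in CONCEPTS}
    state.update(image=dl_image_model(image),
                 tabular_ml=ml_tabular_model(row),
                 tabular_fcm=fcm_tabular_model(row))
    for _ in range(iterations):
        nxt = dict(state)
        for c in CONCEPTS:
            if c in INPUTS:
                continue  # input concepts keep the upstream scores
            incoming = sum(state[s] * w for (s, t), w in EDGES.items() if t == c)
            nxt[c] = sigmoid(state[c] + incoming)
        state = nxt
    contributions = {s: state[s] * w for (s, t), w in EDGES.items() if t == "risk"}
    return state, contributions


state, contributions = decide(image=None, row=None)
print(f"decision = {state['decision']:.3f}")
for source, value in sorted(contributions.items(), key=lambda kv: -kv[1]):
    print(f"  {source} contributed {value:.3f} to risk")
```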

From a theoretical viewpoint, the combination of ML, DL, and FCMs can be seen as a way to overcome some of the limitations of each technique. ML and DL are good at identifying patterns and correlations in large datasets, but they may not always provide a clear understanding of the causal relationships between the variables. FCMs, on the other hand, are specifically designed to represent causal relationships but may not be as effective at handling large amounts of data or capturing complex patterns. By combining these techniques, we can leverage the strengths of each to create more robust and accurate models.

In practice, the integration of these techniques can be challenging and may require expertise in multiple fields. However, with the increasing availability of tools and platforms that support the integration of different techniques, it is becoming increasingly feasible to build complex models that leverage the strengths of each technique.

One example of how these techniques can cooperate is in the field of medical diagnosis. ML and DL can be used to analyze large amounts of patient data and identify patterns and correlations that may be indicative of certain medical conditions. FCMs can then be used to represent the causal relationships between these conditions and other factors such as lifestyle choices, genetics, and environmental factors. By combining the results of ML and DL with the insights provided by FCMs, doctors and medical professionals can make more informed decisions about diagnosis and treatment.

5. Discussion

New regulations in the European Union have proposed that people affected by algorithmic decisions have a right to an explanation [45]. This drives research on explainable AI forward. ML engineers are developing explainability methods to counter the black-box nature of most AI methods. The demand for transparent and explainable methods has grown as AI becomes part of our lives and its decisions affect our everyday activities. In medicine, for example, ML and DL decisions directly affect human lives unless they are supervised by human experts. In this context, the opaqueness of the new models, also called black boxes [49], i.e., their inherent inability to explain their decisions, makes medical experts reluctant to adopt them.

At the same time, FCMs offer a robust solution for complex decision-making problems. The present study has discussed the benefits of FCMs regarding their role in XAI. FCMs are a powerful tool for understanding the relationships between variables or concepts in a system, allowing for the analysis of system behaviour in a simple and symbolic way. They are unique in their ability to address the problem of causation in any physical or human-made system. Unlike statistical models, FCMs do not calculate statistical values but, rather, use membership functions to describe the relationships between variables. In most domains, it is important to distinguish between correlation and causation, and FCMs can be a valuable tool for exploring causal relationships between variables.
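To illustrate how membership functions take the place of statistical estimates, the following sketch turns several experts' linguistic assessments of one relationship into a numerical weight. It assumes five triangular terms on [0, 1], aggregates the experts' terms by pointwise maximum (one common choice), and defuzzifies with the centroid method; the term definitions and the separately supplied sign are illustrative assumptions, not a scheme prescribed by the reviewed studies.

```python
# Hypothetical linguistic terms: triangular membership functions (left, peak, right).
TERMS = {
    "very weak": (0.0, 0.0, 0.25),
    "weak": (0.0, 0.25, 0.5),
    "medium": (0.25, 0.5, 0.75),
    "strong": (0.5, 0.75, 1.0),
    "very strong": (0.75, 1.0, 1.0),
}


def triangular(x: float, left: float, peak: float, right: float) -> float:
    """Membership degree of x in a triangular fuzzy set."""
    if x < left or x > right:
        return 0.0
    if x == peak:
        return 1.0
    if x < peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzy_weight(opinions: list[str], sign: int = 1, resolution: int = 1001) -> float:
    """Aggregate experts' linguistic terms and defuzzify them into one weight."""
    xs = [k / (resolution - 1) for k in range(resolution)]
    # Fuzzy union of all experts' terms: pointwise maximum of their memberships.
    mu = [max(triangular(x, *TERMS[t]) for t in opinions) for x in xs]
    area = sum(mu)
    if area == 0:
        raise ValueError("no membership mass to defuzzify")
    # Centroid (centre of area) defuzzification, then apply the causal sign.
    return sign * sum(x * m for x, m in zip(xs, mu)) / area


# Three hypothetical experts rate how strongly C_j inhibits C_i.
weight = fuzzy_weight(["medium", "strong", "strong"], sign=-1)
print(f"w_ji = {weight:.3f}")
```

The resulting weight is traceable to the experts' own words rather than to an estimated statistic.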

FCMs are generally considered highly interpretable because they represent the system using simple and intuitive concepts, such as nodes and edges. However, the interpretability of FCMs can be affected by the complexity of the modelled system and the number of nodes and edges in the model. FCMs are also transparent because their internal structure can be easily visualized and analyzed.

FCMs can be informative because they can identify key factors that influence the system and simulate different scenarios and their potential outcomes. However, the informativeness of FCMs can be affected by the quality of the data used to build the model and the accuracy of the model’s parameters.
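Scenario analysis of this kind is typically run by clamping one or more concepts to a chosen value and comparing the resulting steady state with a baseline. The sketch below does this for a hypothetical three-concept chain; the names, the weights, the sigmoid update rule with a self-memory term, and the fixed iteration count are assumptions for illustration.

```python
import math

# Hypothetical causal chain: Investment -> Infrastructure -> Satisfaction.
CONCEPTS = ["Investment", "Infrastructure", "Satisfaction"]
W = [  # W[j][i] = weight of CONCEPTS[j] -> CONCEPTS[i]
    [0.0, 0.7, 0.0],
    [0.0, 0.0, 0.6],
    [0.0, 0.0, 0.0],
]


def simulate(a0, w, clamp=None, iterations=100):
    """Iterate the FCM; concepts in clamp (index -> value) are held fixed."""
    clamp = clamp or {}
    n = len(a0)
    a = list(a0)
    for k, v in clamp.items():
        a[k] = v
    for _ in range(iterations):
        nxt = [1.0 / (1.0 + math.exp(-(a[i] + sum(a[j] * w[j][i]
                                                  for j in range(n) if j != i))))
               for i in range(n)]
        for k, v in clamp.items():
            nxt[k] = v  # scenario: keep the clamped concept at its chosen value
        a = nxt
    return a


baseline = simulate([0.5, 0.5, 0.5], W)
scenario = simulate([0.5, 0.5, 0.5], W, clamp={0: 1.0})  # maximum investment
for name, b, s in zip(CONCEPTS, baseline, scenario):
    print(f"{name:15s} baseline {b:.3f}  scenario {s:.3f}  change {s - b:+.3f}")
```

Reporting the change per concept shows the user not only the outcome of a scenario but also which intermediate concepts carried the effect.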

FCMs can be transferable because they can be adapted and applied to different systems by changing the nodes and edges in the model. However, the transferability of FCMs can be affected by the degree of similarity between the systems being modelled and the availability of data and knowledge.

FCMs can be robust because they can tolerate some uncertainty and imprecision in the input data. However, the robustness of FCMs can be affected by the degree of uncertainty and the accuracy of the model’s parameters.
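Robustness to imprecise weights can be probed empirically by perturbing every existing edge with random noise and measuring how far the steady state moves. The sketch below assumes uniform noise of ±0.1, the sigmoid update rule with a self-memory term, and a hypothetical three-concept map; a small worst-case deviation suggests that the conclusions do not hinge on the exact weight values.

```python
import math
import random

# Hypothetical map: W[j][i] = weight of C_j -> C_i.
W = [
    [0.0, 0.6, 0.0],
    [0.0, 0.0, -0.4],
    [0.3, 0.0, 0.0],
]


def steady_state(w, a0, iterations=200):
    """Iterate A_i <- f(A_i + sum_{j != i} A_j * w_ji) a fixed number of times."""
    n = len(a0)
    a = list(a0)
    for _ in range(iterations):
        a = [1.0 / (1.0 + math.exp(-(a[i] + sum(a[j] * w[j][i]
                                                for j in range(n) if j != i))))
             for i in range(n)]
    return a


def perturbation_spread(w, a0, noise=0.1, trials=200, seed=0):
    """Worst absolute change per concept when existing edges get +/- noise."""
    rng = random.Random(seed)
    base = steady_state(w, a0)
    worst = [0.0] * len(a0)
    for _ in range(trials):
        # Perturb only existing edges and keep weights inside [-1, 1].
        perturbed = [[min(1.0, max(-1.0, x + rng.uniform(-noise, noise)))
                      if x != 0 else 0.0 for x in row] for row in w]
        state = steady_state(perturbed, a0)
        worst = [max(m, abs(s - b)) for m, s, b in zip(worst, state, base)]
    return base, worst


base, worst = perturbation_spread(W, [0.5, 0.5, 0.5])
print("steady state:", [round(v, 3) for v in base])
print("worst deviation:", [round(v, 3) for v in worst])
```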

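FCMs can be used to model causality by representing the strength and direction of the relationships between nodes in the model. However, an FCM does not by itself establish causation: its edges encode causal assumptions supplied by experts or inferred from data, so its causal conclusions are only as valid as those assumptions.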

Table 5 summarizes recent FCM models in various domains. The presented models meet the demands of XAI models. In particular, each study is discussed in terms of its distinctive contribution regarding model trust, transferability, causality, and transparency.

FCMs have limitations. First, there are limits to the number of attributes they can represent. Classical FCMs struggle to cope with the dynamic nature of real-world problems and, as a result, may not adequately represent the complex relationships among many variables.

Second, they can be computationally intensive. Large FCMs, and in particular the algorithms used to learn their weights, can require considerable processing power and be slow to converge, which makes them difficult to use in real-time applications.

Third, they may not be sufficiently precise: FCMs often do not reach the accuracy of other AI techniques, such as NNs.

Fourth, FCMs are vulnerable to errors in their knowledge base. If the knowledge base is wrong or outdated, it can lead to incorrect results.

Finally, they lack the flexibility of other AI techniques. An FCM can only solve problems within the specific structure it was designed for and cannot be transferred to a different problem domain without being redesigned, which limits its application in real-world scenarios.

Future studies on FCMs should focus on extending and improving their learning algorithms. However, learning algorithms have the potential to turn these models into black boxes, so they must be integrated with care. A sounder approach would be to build hybrid models, which rely on human knowledge but benefit from constrained learning to adapt to changing environments and use available data.
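The sketch below illustrates one form such constrained learning could take, assuming data in the form of observed state transitions (A(t), A(t+1)). Starting from an expert map, it runs projected gradient descent on the one-step prediction error, but it only updates edges the expert drew, keeps each edge's expert-assigned sign, and clips magnitudes to [-1, 1], so the learned map keeps the expert's structure and stays readable. This simple scheme is chosen for illustration and is not a specific published FCM learning algorithm; the maps and data are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def step(a, w):
    """A_i(t+1) = f(A_i(t) + sum_{j != i} A_j(t) * w_ji)."""
    n = len(a)
    return [sigmoid(a[i] + sum(a[j] * w[j][i] for j in range(n) if j != i))
            for i in range(n)]


def loss(w, data):
    """Mean squared one-step prediction error over (state, next_state) pairs."""
    return sum(sum((p - y) ** 2 for p, y in zip(step(a, w), target))
               for a, target in data) / len(data)


def constrained_fit(w_expert, data, lr=0.5, epochs=500):
    """Projected gradient descent that preserves the expert's edges and signs."""
    n = len(w_expert)
    w = [row[:] for row in w_expert]
    for _ in range(epochs):
        grad = [[0.0] * n for _ in range(n)]
        for a, target in data:
            for i, (p, y) in enumerate(zip(step(a, w), target)):
                g = 2.0 * (p - y) * p * (1.0 - p)  # chain rule through sigmoid
                for j in range(n):
                    if j != i and w_expert[j][i] != 0:
                        grad[j][i] += g * a[j]
        for j in range(n):
            for i in range(n):
                if w_expert[j][i] == 0:
                    continue  # never create an edge the expert did not draw
                updated = w[j][i] - lr * grad[j][i] / len(data)
                # Projection: keep the expert's sign and the [-1, 1] range.
                if w_expert[j][i] > 0:
                    w[j][i] = min(1.0, max(0.0, updated))
                else:
                    w[j][i] = max(-1.0, min(0.0, updated))
    return w


# Hypothetical "true" system, used only to generate example transitions.
W_TRUE = [[0.0, 0.8, 0.0], [0.0, 0.0, -0.5], [0.3, 0.0, 0.0]]
# Expert map: correct structure and signs, imprecise magnitudes.
W_EXPERT = [[0.0, 0.3, 0.0], [0.0, 0.0, -0.9], [0.6, 0.0, 0.0]]
levels = (0.1, 0.5, 0.9)
DATA = [((x, y, z), step((x, y, z), W_TRUE))
        for x in levels for y in levels for z in levels]

w_fit = constrained_fit(W_EXPERT, DATA)
print(f"loss: expert {loss(W_EXPERT, DATA):.5f} -> fitted {loss(w_fit, DATA):.5f}")
```

Because the expert's graph is fixed and only magnitudes move within sign-preserving bounds, every learned weight can still be read and challenged by a domain expert.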

The study does not oppose FCMs to ML and DL but discusses their potential cooperation in formulating robust and explainable ensemble AI pipelines. In addition, integrating ML, DL, and FCM models into a universal pipeline that operates with data from multiple sources and of different natures has the potential to produce robust and explainable frameworks. Combining the learning capabilities of ML models with the transparency and informativeness of FCMs can result in more efficient methods that simultaneously model causality and incorporate cognitive knowledge.

6. Conclusions

This paper discusses the XAI aspects of FCMs, reveals their transparent and interpretable nature, and presents key literature demonstrating the effectiveness of these models in various sectors. In addition, the study discusses recent advances in FCM learning algorithms that make such models more dynamic.

Overall, FCMs are generally considered to be explainable and interpretable models that can provide useful insights into complex systems. However, their performance and accuracy depend on the quality and availability of the data and knowledge used to build the model and the complexity and similarity of the systems being modelled.

The findings of the reviewed research confirm the critical XAI aspects of FCMs and their evolution over the last few years, particularly through the integration of learning algorithms. However, the inherent limitations of FCMs remain. Therefore, FCMs are not a cure-all, but they can cooperate with ML and DL to constitute a more robust and explainable AI framework.

Future studies on FCMs should focus on extending and improving their learning algorithms. In addition, a more systematic review is needed of the benefits and limitations of recent FCM approaches, which differ from the classical FCMs of the past decade.