Agent-Based Virtual Machine Migration for Load Balancing and Co-Resident Attack in Cloud Computing

Abstract

Featured Application

1. Introduction

2. Related Works

2.1. Load Balancing in Cloud Computing

2.2. Co-Resident Attack

3. Research Aim and Problem Statement

3.1. Research Aim and Metrics

3.2. Problem Statement

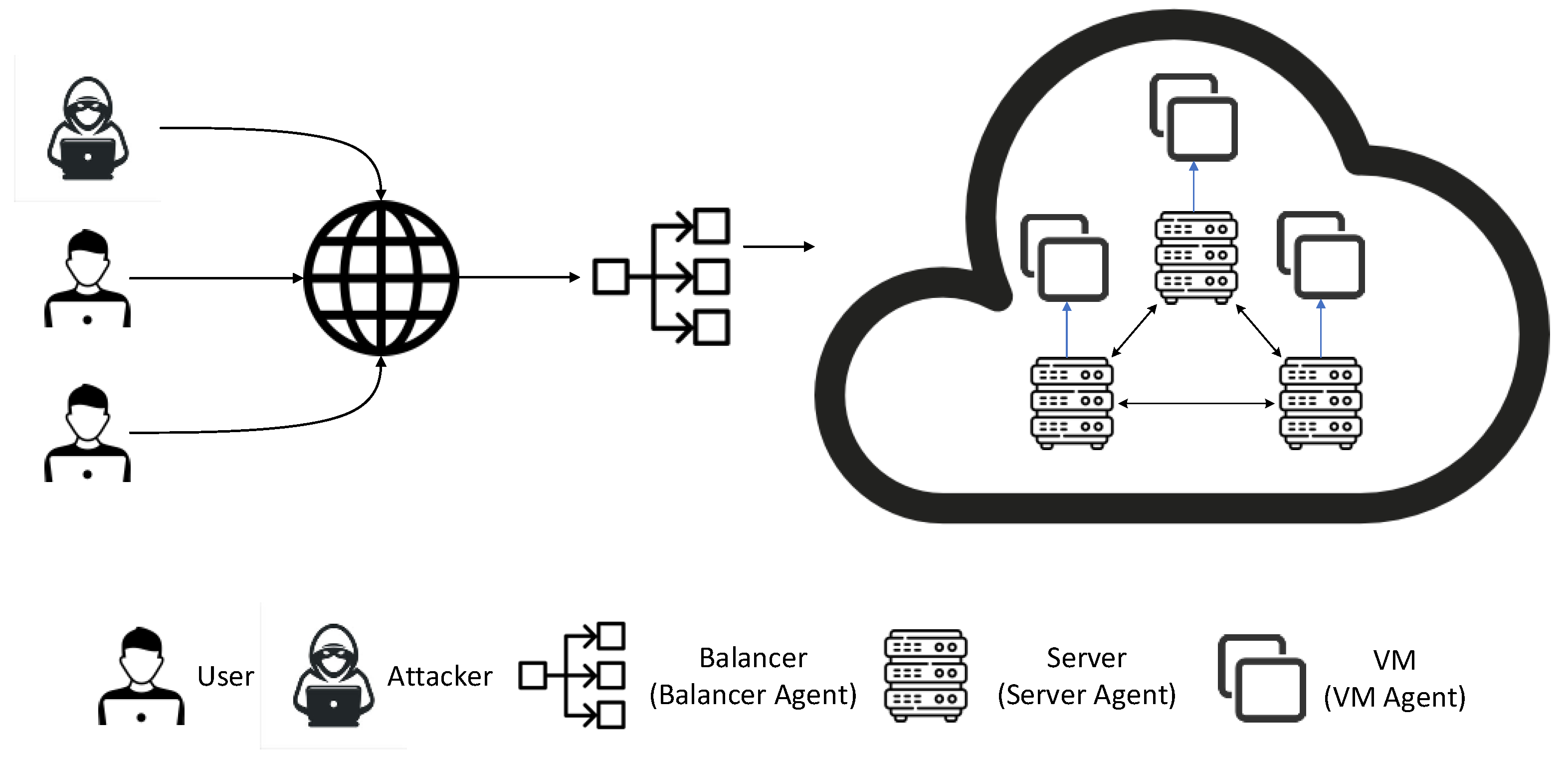

4. Agent-Based VM Migration Architecture

5. Agent-Based VM Migration

5.1. Agent-Based VM Migration for Load-Balancing and Co-Resident Attacks (ALBA)

5.2. VM Migration Policies

5.3. VM Acceptance Policies

5.4. VM Migration Heuristics

Algorithm 1: Resource-based migration heuristic

Input: N (vector of VMs), VM CPU usage, VM memory usage

Output: VM for migration (Nk)

1. Sort vector N in ascending order of VM CPU usage.
2. Assign each VM a CPU weight; VMs with higher CPU usage have a higher CPU weight.
3. Sort vector N in ascending order of VM memory usage.
4. Assign each VM a memory weight; VMs with higher memory usage have a higher memory weight.
5. Combine the CPU weight and the memory weight of each VM into a single value.
6. Select the VM with the highest combined value (ties are broken randomly).
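The steps of Algorithm 1 can be illustrated with a minimal Python sketch. The exact weight formulas are not recoverable from the text here, so the sketch assumes that a VM's weight is its 1-based position in the ascending sort and that the two weights are combined by summation. The `VM` class, `rank_weights`, and `select_vm_for_migration` are hypothetical names introduced only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    cpu_usage: float     # percentage of CPU in use
    memory_usage: float  # percentage of memory in use


def rank_weights(vms, key):
    """Map each VM name to its 1-based position after an ascending sort on `key`.

    Assumption: the weight equals the rank, so higher usage gives a higher weight.
    """
    ordered = sorted(vms, key=key)
    return {vm.name: position for position, vm in enumerate(ordered, start=1)}


def select_vm_for_migration(vms, rng=random):
    """Return the VM with the highest combined weight; ties are broken randomly."""
    if not vms:
        raise ValueError("no VMs to choose from")
    cpu_weight = rank_weights(vms, key=lambda vm: vm.cpu_usage)
    mem_weight = rank_weights(vms, key=lambda vm: vm.memory_usage)
    # Assumption: the combined value is the sum of the CPU and memory weights.
    combined = {vm.name: cpu_weight[vm.name] + mem_weight[vm.name] for vm in vms}
    best = max(combined.values())
    candidates = [vm for vm in vms if combined[vm.name] == best]
    return rng.choice(candidates)


vms = [
    VM("vm-a", cpu_usage=20.0, memory_usage=70.0),
    VM("vm-b", cpu_usage=80.0, memory_usage=60.0),
    VM("vm-c", cpu_usage=50.0, memory_usage=10.0),
]
chosen = select_vm_for_migration(vms)
print(chosen.name)  # vm-b (CPU weight 3 + memory weight 2 = 5, the highest)
```

Under these assumptions, a VM that is heavy on both resources is preferred over one that is heavy on only one of them. VMs with identical usage still receive distinct ranks in this sketch, which is a simplification.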

5.5. Allocating Heuristics of BA

6. Evaluation and Empirical Results

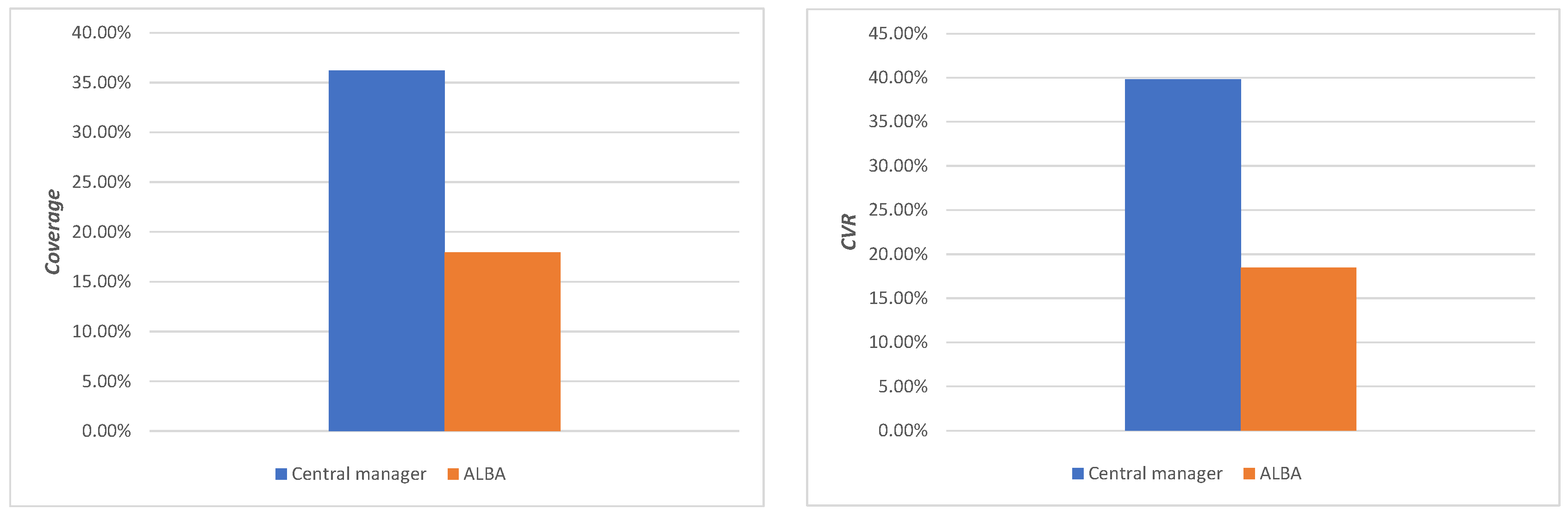

6.1. Agent-Based VM Migration (ALBA) versus Centralized VM Migration

6.1.1. Objective

6.1.2. Experimental Settings

1. Cloud Computing Parameter

2. Load-Related Parameters

3. Agent-Based Testbed Settings

6.1.3. Performance Indicator

6.1.4. Results and Analyses

- Analysis 1

- Analysis 2

- Analysis 3

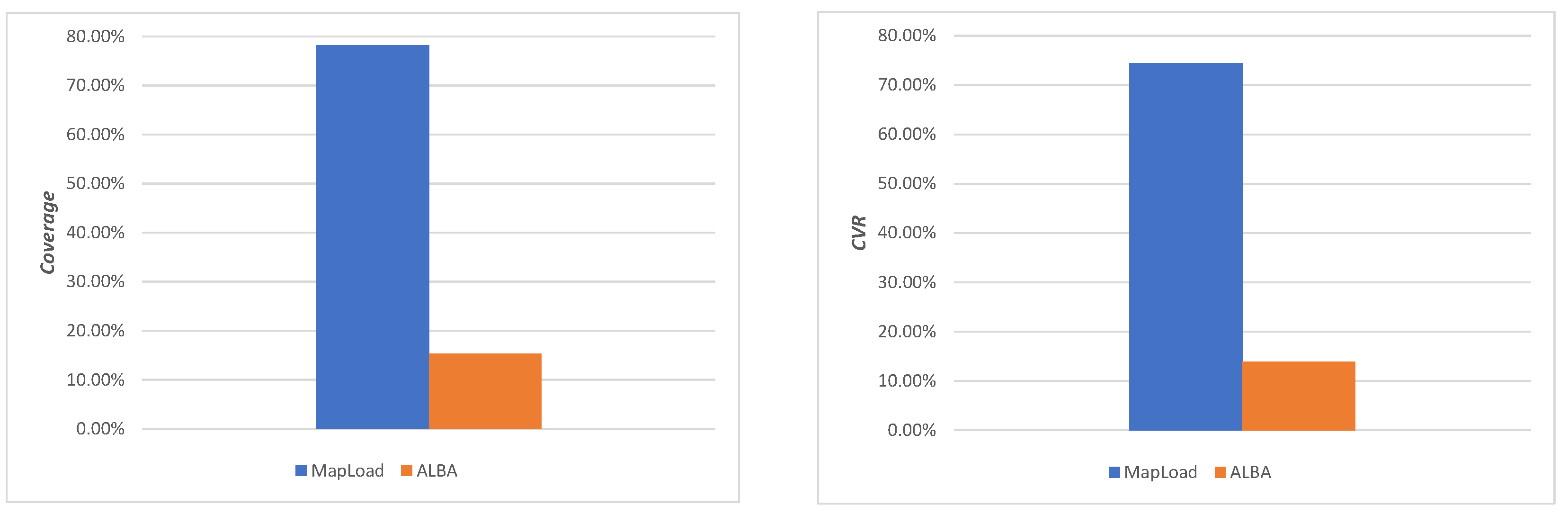

6.2. ALBA versus Agent-Based VM Migration without Considering CRA (MapLoad)

6.2.1. Objectives

6.2.2. Experimental Settings

6.2.3. Performance Indicators

6.2.4. Results and Analyses

- Analysis 1

- Analysis 2

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Name | Definition |
|---|---|
| | Index of VMs in the Cloud; its upper bound is the number of VMs in the Cloud |
| | Index of hosts in the Cloud; its upper bound is the number of hosts in the Cloud |
| | Index of load-balancing decision time points; its upper bound is the total operation time |
| | Number of cores required by a VM |
| | Percentage of CPU usage of a VM at a given time |
| | Number of gigabytes of memory required by a VM |
| | Percentage of memory usage of a VM at a given time |
| | Security level of a VM at a given time |
| | Number of idle cores of a host at a given time |
| | Idle memory (in gigabytes) of a host at a given time |
| | Percentage of CPU usage of a host at a given time |
| | Percentage of memory usage of a host at a given time |
| | Security level of a host at a given time |
| | Number of hosts covered by malicious VMs |
| | Number of VMs threatened by malicious VMs |
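The last two quantities in the table can be made concrete with a short Python sketch. It assumes that a host is "covered" when it runs at least one malicious VM, and that a VM is "threatened" when it is a non-malicious VM sharing a host with a malicious one. The function name `co_residence_metrics` and the dictionary-based placement are illustrative assumptions, not taken from the paper.

```python
from collections import defaultdict


def co_residence_metrics(placement, malicious_vms):
    """Count covered hosts and threatened VMs.

    placement: dict mapping VM id -> host id (hypothetical representation).
    malicious_vms: set of VM ids regarded as malicious.
    """
    vms_on_host = defaultdict(set)
    for vm, host in placement.items():
        vms_on_host[host].add(vm)

    # Assumption: a host is covered if it runs at least one malicious VM.
    covered_hosts = {placement[vm] for vm in malicious_vms if vm in placement}

    # Assumption: benign VMs on a covered host are threatened.
    threatened_vms = set()
    for host in covered_hosts:
        threatened_vms |= vms_on_host[host] - malicious_vms

    return len(covered_hosts), len(threatened_vms)


placement = {"vm1": "h1", "vm2": "h1", "vm3": "h2", "vm4": "h3", "vm5": "h3"}
hosts_covered, vms_threatened = co_residence_metrics(placement, {"vm1", "vm4"})
print(hosts_covered, vms_threatened)  # 2 2
```

With this reading, migrating malicious VMs onto fewer hosts lowers both counts, which is the effect a co-resident attack defence aims for.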

| Parameter | Alternative Values |
|---|---|
| Number of VMs | 300 |
| Specifications of VMs | |
| Number of cores | {1, 2, 4, 6, 8} |
| Memory sizes | {4, 8, 12, 16, 20} GB |
| User number | {1, 2, 3, …, 28, 29, 30} |
| Initial low-security users | {1, 2} |
| Low-security user detected in operation | {3} |
| User security level | {Low, High} |
| Mean inter-arrival time of VMs | 5 ticks |
| Mean inter-departure time of VMs | 100 ticks |
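As a rough illustration of how the load-related parameters above could drive a simulation, the following Python sketch generates a stream of VM requests. The table only gives mean values, so the exponential inter-arrival distribution and the uniform sampling of cores, memory, and users are assumptions; `VMRequest` and `generate_workload` are hypothetical names. Departures and the user detected as low-security during operation are left out for brevity.

```python
import random
from dataclasses import dataclass

NUM_VMS = 300
CORE_OPTIONS = [1, 2, 4, 6, 8]
MEMORY_OPTIONS_GB = [4, 8, 12, 16, 20]
USER_IDS = list(range(1, 31))
INITIAL_LOW_SECURITY_USERS = {1, 2}
MEAN_INTER_ARRIVAL_TICKS = 5


@dataclass
class VMRequest:
    arrival_tick: float
    cores: int
    memory_gb: int
    user_id: int
    security_level: str


def generate_workload(seed=0):
    """Return NUM_VMS requests ordered by arrival time."""
    rng = random.Random(seed)
    tick = 0.0
    requests = []
    for _ in range(NUM_VMS):
        # Assumption: exponentially distributed gaps with the stated mean.
        tick += rng.expovariate(1.0 / MEAN_INTER_ARRIVAL_TICKS)
        user_id = rng.choice(USER_IDS)
        level = "Low" if user_id in INITIAL_LOW_SECURITY_USERS else "High"
        requests.append(
            VMRequest(
                arrival_tick=tick,
                cores=rng.choice(CORE_OPTIONS),
                memory_gb=rng.choice(MEMORY_OPTIONS_GB),
                user_id=user_id,
                security_level=level,
            )
        )
    return requests


workload = generate_workload(seed=42)
print(len(workload))  # 300
```

Fixing the seed keeps runs repeatable, which helps when comparing migration heuristics on the same workload.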

| Parameter | Experiment 6.1 | Experiment 6.2 |
|---|---|---|
| BA’s VM migration heuristics | {Round-robin, greedy, first-fit, best-fit} heuristics | {Round-robin, greedy, first-fit, best-fit} heuristics |
| SAs’ proposal selection mechanism | Greedy selection | Greedy selection |
| SAs’ CPU-based acceptance policy | Above threshold | Above threshold |
| SAs’ memory-based acceptance policy | Above threshold | Above threshold |
| SAs’ security-based acceptance policy | Level Matching | |
| SAs’ CPU acceptance threshold | 0% | 0% |
| SAs’ memory acceptance threshold | 0% | 0% |
| SAs’ CPU-based migration threshold | 50% | 50% |
| SAs’ memory-based migration threshold | 50% | 50% |
| SMAs’ activation threshold | 10 ticks | 10 ticks |
| SMAs’ monitoring frequency | 1 tick | 1 tick |
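One plausible reading of the migration and activation thresholds above is sketched below in Python. The semantics are assumptions rather than the paper's definition: a host is treated as overloaded when its CPU or memory usage exceeds 50%, and migration is triggered once it has been overloaded for 10 consecutive one-tick samples. "Level Matching" is read as requiring equal security levels. `HostMonitor` and `security_levels_match` are hypothetical names.

```python
from dataclasses import dataclass

CPU_MIGRATION_THRESHOLD = 50.0     # percent
MEMORY_MIGRATION_THRESHOLD = 50.0  # percent
ACTIVATION_THRESHOLD_TICKS = 10    # consecutive overloaded samples (assumed meaning)


@dataclass
class HostMonitor:
    """Tracks how long a host has been continuously overloaded."""
    ticks_overloaded: int = 0

    def observe(self, cpu_usage, memory_usage):
        """Record one sample (one per tick); return True when migration should start."""
        overloaded = (
            cpu_usage > CPU_MIGRATION_THRESHOLD
            or memory_usage > MEMORY_MIGRATION_THRESHOLD
        )
        # Reset the counter as soon as the host drops back under both thresholds.
        self.ticks_overloaded = self.ticks_overloaded + 1 if overloaded else 0
        return self.ticks_overloaded >= ACTIVATION_THRESHOLD_TICKS


def security_levels_match(vm_level, host_level):
    """Assumed 'Level Matching' policy: accept only VMs of the host's security level."""
    return vm_level == host_level


monitor = HostMonitor()
signals = [monitor.observe(cpu_usage=75.0, memory_usage=30.0) for _ in range(10)]
print(signals[-1])  # True: overloaded for 10 consecutive ticks
```

Requiring sustained overload before acting avoids migrating VMs in response to short usage spikes.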

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, B.; Lu, M. Agent-Based Virtual Machine Migration for Load Balancing and Co-Resident Attack in Cloud Computing. Appl. Sci. 2023, 13, 3703. https://doi.org/10.3390/app13063703
