Improving Many-to-Many Neural Machine Translation via Selective and Aligned Online Data Augmentation

Abstract

1. Introduction

- Low-quality synthetic sentence pairs, such as those generated by the vanilla random online back-translation (ROBT) algorithm, contribute little to many-to-many multilingual neural machine translation (MNMT) models. How, then, can we generate augmented samples that deliver greater and sustained benefits?

- Another limitation of many-to-many MNMT is language-variant encoder representations: the encoder representations that the model produces for parallel sentences are dissimilar. How, then, can we align token representations and encourage transfer learning using synthetic sentences?

- We propose the pivot online back-translation (POBT) and selective online back-translation (SOBT) algorithms to generate and select suitable training samples to improve massively many-to-many MNMT, especially for non-English directions.

- We thoroughly study different selection criteria, such as cross-entropy (CE) loss and quality estimation (QE) score, and conclude that combining CE loss with QE scores performs best.

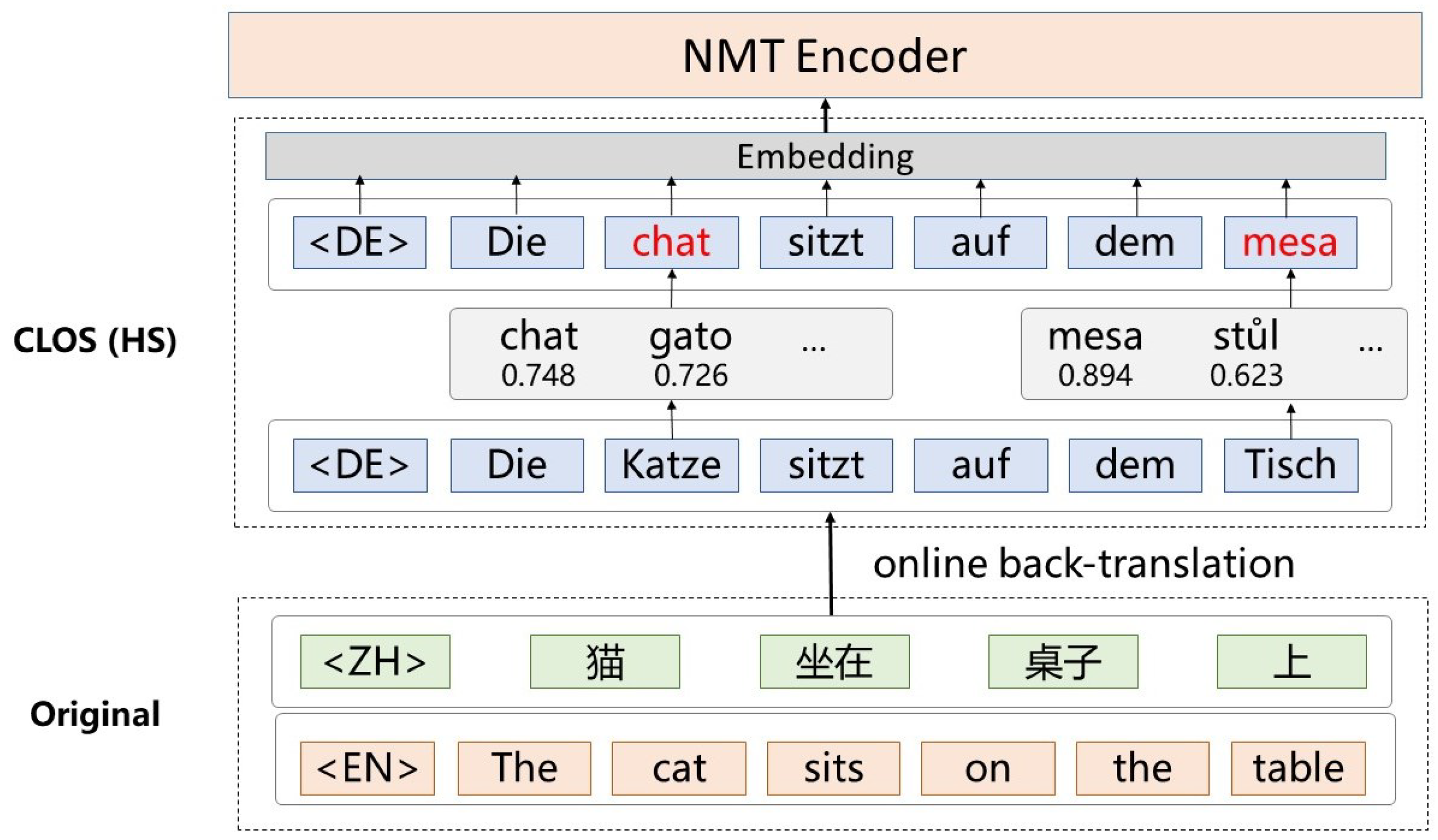

- We boost the SOBT algorithm with cross-lingual online substitution (CLOS), which uses implicit alignment information instead of external resources to strengthen transfer learning between non-English directions.

- We conduct experiments on two multilingual translation benchmarks with detailed analysis, showing that our algorithms can achieve significant improvements in both English-centric and non-English directions compared with previous works.
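The idea of selecting synthetic pairs by combining CE loss and QE score can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the `Candidate` fields, the min-max normalisation, the weighting `qe_weight`, the `keep_ratio` cut-off, and the direction of each signal (lower CE loss and higher QE score are treated as better). It is not the selection rule used by SOBT, which is not specified in this outline.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    """A synthetic sentence pair with two quality signals (hypothetical fields)."""
    source: str
    target: str
    ce_loss: float   # cross-entropy of the pair under the model; assumed lower = better
    qe_score: float  # quality-estimation score; assumed higher = better


def _min_max(values: List[float]) -> List[float]:
    """Rescale values to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def select_candidates(cands: List[Candidate],
                      keep_ratio: float = 0.5,
                      qe_weight: float = 0.5) -> List[Candidate]:
    """Keep the best `keep_ratio` fraction under a weighted CE/QE score."""
    if not cands:
        return []
    ce = _min_max([c.ce_loss for c in cands])
    qe = _min_max([c.qe_score for c in cands])
    # Higher combined score = better: reward QE, penalise CE loss.
    scores = [qe_weight * q + (1.0 - qe_weight) * (1.0 - l)
              for l, q in zip(ce, qe)]
    ranked = sorted(range(len(cands)), key=lambda i: scores[i], reverse=True)
    n_keep = max(1, int(len(cands) * keep_ratio))  # always keep at least one pair
    return [cands[i] for i in ranked[:n_keep]]
```

Setting `qe_weight` to 0.0 or 1.0 reduces the sketch to a single-criterion filter, which is one way to compare the individual criteria against their combination.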

2. Related Work

2.1. Pivot Translation

2.2. Zero-Shot Translation

- Spurious correlations between input and output language. During training, MNMT models are not exposed to unseen language pairs and can only learn associations between the observed language pairs. During testing, models tend to output languages observed together with the source languages during training [16,17].

- Language-variant encoder representations. The encoder representations that MNMT models generate for equivalent sentences in different languages are dissimilar, partly because transfer learning performs worse between unrelated zero-shot language pairs [22].
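One simple way to quantify how dissimilar two encoder representations are is to mean-pool the token states of each sentence and compare the pooled vectors with cosine similarity. The sketch below is only an illustration under that assumption; the pooling choice and the function names are hypothetical, and the encoder states are taken as plain lists of floats rather than the output of any particular toolkit.

```python
import math
from typing import List, Sequence


def mean_pool(token_states: Sequence[Sequence[float]]) -> List[float]:
    """Average per-token encoder states into one sentence vector."""
    n = len(token_states)
    dim = len(token_states[0])
    return [sum(tok[d] for tok in token_states) / n for d in range(dim)]


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between u and v; 0.0 if either vector is zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def sentence_similarity(states_a: Sequence[Sequence[float]],
                        states_b: Sequence[Sequence[float]]) -> float:
    """Similarity of two sentences from their (possibly unequal-length) token states."""
    return cosine_similarity(mean_pool(states_a), mean_pool(states_b))
```

Under this measure, a language-invariant encoder would give values close to 1 for a sentence and its translation, while language-variant representations would give lower values.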

2.3. Back-Translation

2.4. Aligned Augmentation

3. Methodology

3.1. Many-to-Many MNMT

3.2. Pivot Online Back-Translation

| Algorithm 1: Pivot Online Back-Translation |
|---|
| Input: Training data, D; initialized MNMT model, M; language set, L |
| Output: Zero-shot improved MNMT model, M |
| 17: end |
| 18: return M |

3.3. Selective Online Back-Translation

3.3.1. Batch-Level Selection Criterion

3.3.2. Global-Level Selection Criterion

3.3.3. Fine-Tuning with Contrastive Loss

3.4. Cross-Lingual Online Substitution

3.5. Training Schedule

4. Experiments

4.1. Datasets and Evaluation

4.2. Training Details

4.3. Comparison of Methods

- ROBT. Zhang et al. [21] first demonstrated the feasibility of back-translation in massively multilingual settings and greatly increased zero-shot translation performance.

- mRASP2. Pan et al. [27] proposed a multilingual contrastive learning framework for translation, generating synthetic aligned sentence pairs to improve multilingual translation quality in a unified training framework.

- Pivot translation. This method first translates a source sentence into English (X → English) and then translates the English output into the target language (English → Y). The pivoting can be performed by the baseline multilingual model or by separately trained bilingual models; both variants are compared in this work.
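The two-step pivot procedure described in the last item can be sketched as a composition of two translation calls. The `TranslateFn` signature and the function names are hypothetical placeholders for whatever system performs each step; passing the same callable twice corresponds to pivoting with a single multilingual model, and passing two different callables corresponds to separately trained bilingual models.

```python
from typing import Callable

# Hypothetical signature: (sentence, source_lang, target_lang) -> translation
TranslateFn = Callable[[str, str, str], str]


def pivot_translate(sentence: str,
                    src_lang: str,
                    tgt_lang: str,
                    to_pivot: TranslateFn,
                    from_pivot: TranslateFn,
                    pivot_lang: str = "en") -> str:
    """Translate src -> pivot -> tgt, skipping a step when it is not needed."""
    if src_lang == pivot_lang:
        # Source is already in the pivot language: a single step suffices.
        return from_pivot(sentence, pivot_lang, tgt_lang)
    intermediate = to_pivot(sentence, src_lang, pivot_lang)  # X -> English
    if tgt_lang == pivot_lang:
        return intermediate
    return from_pivot(intermediate, pivot_lang, tgt_lang)    # English -> Y
```

Because the second step only sees the intermediate English output, any error made in the first step is carried into the final translation, and every non-English request costs two decoding passes.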

4.4. Main Results

4.4.1. Results on the IWSLT-8

4.4.2. Results on OPUS-100

4.5. Analysis and Discussion

4.5.1. Ablation Study

4.5.2. Convergence of SOBT

4.5.3. Effect of Model Capacity

4.5.4. Case Study

4.5.5. Practical Applications

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bahdanau, D.; Cho, K.H.; Bengio, Y. Neural machine translation by jointly learning to align and translate. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7 May 2015.

2. Barrault, L.; Bojar, O.; Costa-Jussà, M.R.; Federmann, C.; Fishel, M.; Graham, Y.; Haddow, B.; Huck, M.; Koehn, P.; Malmasi, S.; et al. Findings of the 2019 Conference on Machine Translation (WMT19). In Proceedings of the Association for Computational Linguistics, Florence, Italy, 1 August 2019.

3. Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26 October 2014; pp. 1724–1734.

4. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

5. Dabre, R.; Chu, C.; Kunchukuttan, A. A survey of multilingual neural machine translation. ACM Comput. Surv. (CSUR) 2020, 53, 1–38.

6. Wang, R.; Tan, X.; Luo, R.; Qin, T.; Liu, T.Y. A Survey on Low-Resource Neural Machine Translation. arXiv 2021, arXiv:2107.04239.

7. Aharoni, R.; Johnson, M.; Firat, O. Massively Multilingual Neural Machine Translation. arXiv 2019, arXiv:1903.00089.

8. Firat, O.; Cho, K.; Bengio, Y. Multi-Way, Multilingual Neural Machine Translation with a Shared Attention Mechanism. arXiv 2016, arXiv:1601.01073.

9. Firat, O.; Sankaran, B.; Al-onaizan, Y.; Yarman Vural, F.T.; Cho, K. Zero-Resource Translation with Multi-Lingual Neural Machine Translation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1 November 2016; pp. 268–277.

10. Johnson, M.; Schuster, M.; Le, Q.V.; Krikun, M.; Wu, Y.; Chen, Z.; Thorat, N.; Viégas, F.; Wattenberg, M.; Corrado, G.; et al. Google’s multilingual neural machine translation system: Enabling zero-shot translation. Trans. Assoc. Comput. Linguist. 2017, 5, 339–351.

11. Lakew, S.M.; Cettolo, M.; Federico, M. A Comparison of Transformer and Recurrent Neural Networks on Multilingual Neural Machine Translation. In Proceedings of the International Conference on Computational Linguistics, Santa Fe, NM, USA, 20 August 2018.

12. Tan, X.; Chen, J.; He, D.; Xia, Y.; Qin, T.; Liu, T.Y. Multilingual Neural Machine Translation with Language Clustering. arXiv 2019, arXiv:1908.09324.

13. Ji, B.; Zhang, Z.; Duan, X.; Zhang, M.; Chen, B.; Luo, W. Cross-lingual pre-training based transfer for zero-shot neural machine translation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7 February 2020; pp. 115–122.

14. Sen, S.; Gupta, K.K.; Ekbal, A.; Bhattacharyya, P. Multilingual unsupervised NMT using shared encoder and language-specific decoders. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July 2019; pp. 3083–3089.

15. Al-Shedivat, M.; Parikh, A. Consistency by Agreement in Zero-Shot Neural Machine Translation. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2 June 2019; Volume 1, pp. 1184–1197.

16. Gu, J.; Wang, Y.; Cho, K.; Li, V.O. Improved Zero-shot Neural Machine Translation via Ignoring Spurious Correlations. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July 2019; pp. 1258–1268.

17. Arivazhagan, N.; Bapna, A.; Firat, O.; Aharoni, R.; Johnson, M.; Macherey, W. The missing ingredient in zero-shot neural machine translation. arXiv 2019, arXiv:1903.07091.

18. Pham, N.Q.; Niehues, J.; Ha, T.L.; Waibel, A. Improving Zero-shot Translation with Language-Independent Constraints. In Proceedings of the Fourth Conference on Machine Translation, Florence, Italy, 1 August 2019; Volume 1, pp. 13–23.

19. Lakew, S.M.; Lotito, Q.F.; Negri, M.; Turchi, M.; Federico, M. Improving Zero-Shot Translation of Low-Resource Languages. In Proceedings of the 14th International Conference on Spoken Language Translation, Tokyo, Japan, 26 May 2017; pp. 113–119.

20. Aharoni, R.; Johnson, M.; Firat, O. Massively Multilingual Neural Machine Translation. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2 June 2019; Volume 1, pp. 3874–3884.

21. Zhang, B.; Williams, P.; Titov, I.; Sennrich, R. Improving Massively Multilingual Neural Machine Translation and Zero-Shot Translation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5 July 2020; pp. 1628–1639.

22. Kudugunta, S.; Bapna, A.; Caswell, I.; Firat, O. Investigating Multilingual NMT Representations at Scale. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3 November 2019; pp. 1565–1575.

23. Sánchez-Cartagena, V.M.; Esplà-Gomis, M.; Pérez-Ortiz, J.A.; Sánchez-Martínez, F. Rethinking Data Augmentation for Low-Resource Neural Machine Translation: A Multi-Task Learning Approach. arXiv 2021, arXiv:2109.03645.

24. Sennrich, R.; Haddow, B.; Birch, A. Improving Neural Machine Translation Models with Monolingual Data. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7 August 2016; Volume 1, pp. 86–96.

25. Yang, Z.; Chen, W.; Wang, F.; Xu, B. Effectively training neural machine translation models with monolingual data. Neurocomputing 2019, 333, 240–247.

26. Lin, Z.; Pan, X.; Wang, M.; Qiu, X.; Feng, J.; Zhou, H.; Li, L. Pre-training Multilingual Neural Machine Translation by Leveraging Alignment Information. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16 November 2020; pp. 2649–2663.

27. Pan, X.; Wang, M.; Wu, L.; Li, L. Contrastive Learning for Many-to-many Multilingual Neural Machine Translation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 1 August 2021; Volume 1, pp. 244–258.

28. Utiyama, M.; Isahara, H. A comparison of pivot methods for phrase-based statistical machine translation. In Proceedings of the Human Language Technologies 2007: The Conference of the North American Chapter of the Association for Computational Linguistics, Rochester, NY, USA, 22 July 2007; pp. 484–491.

29. Wu, H.; Wang, H. Revisiting pivot language approach for machine translation. In Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP, Singapore, 2 August 2009; pp. 154–162.

30. Chen, Y.; Liu, Y.; Cheng, Y.; Li, V.O. A Teacher-Student Framework for Zero-Resource Neural Machine Translation. In Proceedings of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July 2017.

31. Cheng, Y.; Yang, Q.; Liu, Y.; Sun, M.; Xu, W. Joint training for pivot-based neural machine translation. In Proceedings of the International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19 August 2017.

32. Ha, T.L.; Niehues, J.; Waibel, A. Effective Strategies in Zero-Shot Neural Machine Translation. In Proceedings of the 14th International Conference on Spoken Language Translation, Tokyo, Japan, 14 December 2017; pp. 105–112.

33. Lakew, S.M.; Federico, M.; Negri, M.; Turchi, M. Multilingual neural machine translation for zero-resource languages. arXiv 2019, arXiv:1909.07342.

34. Edman, L.; Üstün, A.; Toral, A.; van Noord, G. Unsupervised Translation of German–Lower Sorbian: Exploring Training and Novel Transfer Methods on a Low-Resource Language. In Proceedings of the Sixth Conference on Machine Translation, Online, 10 November 2021; pp. 982–988.

35. Garcia, X.; Siddhant, A.; Firat, O.; Parikh, A. Harnessing Multilinguality in Unsupervised Machine Translation for Rare Languages. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6 June 2021; pp. 1126–1137.

36. Huang, H.; Liang, Y.; Duan, N.; Gong, M.; Shou, L.; Jiang, D.; Zhou, M. Unicoder: A Universal Language Encoder by Pre-training with Multiple Cross-lingual Tasks. arXiv 2019, arXiv:1909.00964.

37. Lample, G.; Conneau, A. Cross-lingual Language Model Pretraining. arXiv 2019, arXiv:1901.07291.

38. Mikolov, T.; Sutskever, I.; Le, Q.V. Exploiting Similarities among Languages for Machine Translation. arXiv 2013, arXiv:1309.4168.

39. Kepler, F.; Trénous, J.; Treviso, M.; Vera, M.; Góis, A.; Farajian, M.A.; Lopes, A.V.; Martins, A.F.T. Unbabel’s Participation in the WMT19 Translation Quality Estimation Shared Task. In Proceedings of the Fourth Conference on Machine Translation, Florence, Italy, 1 August 2019; Volume 3, pp. 78–84.

40. Rei, R.; Stewart, C.; Farinha, A.C.; Lavie, A. COMET: A Neural Framework for MT Evaluation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16 November 2020; pp. 2685–2702.

41. Conneau, A.; Lample, G.; Ranzato, M.; Denoyer, L.; Jégou, H. Word Translation without Parallel Data. arXiv 2017, arXiv:1710.04087.

42. Lin, Z.; Wu, L.; Wang, M.; Li, L. Learning Language Specific Sub-network for Multilingual Machine Translation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1 August 2021; pp. 293–305.

43. Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7 August 2016; pp. 1715–1725.

44. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6 July 2002; pp. 311–318.

45. Post, M. A call for clarity in reporting BLEU Scores. In Proceedings of the WMT 2018, Brussels, Belgium, 31 October 2018.

46. Lavie, A.; Agarwal, A. METEOR: An Automatic Metric for MT Evaluation with High Levels of Correlation with Human Judgments. In Proceedings of the Second Workshop on Statistical Machine Translation, Prague, Czech Republic, 23 June 2007; pp. 228–231.

47. Snover, M.; Dorr, B.; Schwartz, R.; Micciulla, L.; Makhoul, J. A Study of Translation Edit Rate with Targeted Human Annotation. In Proceedings of the 7th Conference of the Association for Machine Translation in the Americas: Technical Papers, Cambridge, MA, USA, 8 August 2006; pp. 223–231.

48. Koehn, P. Statistical Significance Tests for Machine Translation Evaluation. In Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, Barcelona, Spain, 25 July 2004; pp. 388–395.

49. Goyal, N.; Gao, C.; Chaudhary, V.; Chen, P.J.; Wenzek, G.; Ju, D.; Krishnan, S.; Ranzato, M.; Guzmán, F.; Fan, A. The Flores-101 Evaluation Benchmark for Low-Resource and Multilingual Machine Translation. Trans. Assoc. Comput. Linguist. 2022, 10, 522–538.

50. Ott, M.; Edunov, S.; Baevski, A.; Fan, A.; Gross, S.; Ng, N.; Grangier, D.; Auli, M. fairseq: A Fast, Extensible Toolkit for Sequence Modeling. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics (Demonstrations), Minneapolis, MN, USA, 2 June 2019; pp. 48–53.

|  |  |
|---|---|
| Src | El doctor Moll cree que algunos pacientes podrían haber contraído el virus dentro del hospital. |
| Ref | Einige Patienten haben sich möglicherweise im Krankenhaus mit dem Erreger angesteckt, meint Dr. Moll. |
| Pivot | Der Arzt Moll glaubt, dass einige Patienten das Virus innerhalb des Krankenhauses behandelt haben. |
| MNMT | Der Arzt Moll believes that some patients might have had the virus in the hospital. |
| Src | Los ingresos del fabricante superarán los 420.000 millones de yuanes, de acuerdo con el informe. |
| Ref | Der Umsatz des Herstellers wird dem Bericht zufolge 420 Milliarden Yuan übersteigen. |
| Pivot | Dem Bericht zufolge wird der Umsatz des Herstellers 420 Milliarden Yuan übersteigen. |
| MNMT | Los ingresos del fabricante superarán los 420.000 millones de yuanes, de acuerdo con el informe. |

| Language | Family | Script | Size |
|---|---|---|---|
| Farsi | Iranian | Arabic | 89 k |
| Arabic | Arabic | Arabic | 140 k |
| Hebrew | Semitic | Hebrew | 144 k |
| Dutch | Germanic | Latin | 153 k |
| German | Germanic | Latin | 160 k |
| Italian | Romance | Latin | 167 k |
| Spanish | Romance | Latin | 169 k |
| Polish | Slavic | Latin | 128 k |

| ID | Method | Supervised BLEU | Supervised METEOR | Supervised TER | Zero-Shot BLEU | Zero-Shot METEOR | Zero-Shot TER |
|---|---|---|---|---|---|---|---|
| (1) | MNMT [42] | 25.80 | - | - | - | - | - |
| (2) | Bilingual and Pivot | 27.36 | 50.20 | 55.22% | 9.12 | 29.52 | 83.40% |
| (3) | MNMT | 27.13 | 49.07 | 55.77% | 2.50 | 10.70 | 95.20% |
| (4) | 3 + Pivot | - | - | - | 8.97 | 25.85 | 85.00% |
| (5) | mRASP2 [27] | 26.93 | - | - | 8.44 | 24.33 | 87.05% |
| (6) | 3 + ROBT [21] | 26.35 | - | - | 7.83 | 20.97 | 89.27% |
| (7) | 3 + POBT | 26.47 | 47.55 | 57.33% | 8.55 | 25.02 | 86.99% |
| (8) | 3 + SOBT | 27.25 | 49.88 | 55.35% | 11.27 | 33.50 | 75.42% |
| (9) | 3 + SOBT + CLOS | 27.74 | 51.05 | 54.20% | 12.89 | 36.85 | 70.44% |

Language set: Ar-De-Fr-Nl-Ru-Zh

| ID | Method | English → X BLEU | English → X METEOR | English → X TER | X → English BLEU | X → English METEOR | X → English TER | Zero-Shot BLEU | Zero-Shot METEOR | Zero-Shot TER |
|---|---|---|---|---|---|---|---|---|---|---|
| (1) | Pivot [21] | - | - | - | - | - | - | 12.98 | 36.54 | 69.22% |
| (2) | MNMT [21] | - | - | - | - | - | - | 3.97 | 12.05 | 95.89% |
| (3) | 2 + ROBT [21] | - | - | - | - | - | - | 10.11 | 32.11 | 74.73% |
| (4) | Pivot | 23.45 | 46.65 | 52.95% | 35.16 | 67.43 | 38.26% | 13.82 | 38.55 | 65.54% |
| (5) | MNMT | 22.53 | 46.28 | 53.46% | 34.96 | 66.55 | 39.63% | 3.09 | 10.77 | 96.22% |
| (6) | 5 + Pivot | - | - | - | - | - | - | 13.45 | 37.43 | 67.44% |
| (7) | mRASP2 | 22.22 | 44.56 | 55.89% | 34.87 | 63.74 | 39.93% | 12.44 | 33.33 | 74.01% |
| (8) | 5 + ROBT | 22.10 | 44.67 | 55.52% | 34.53 | 63.34 | 39.93% | 10.60 | 33.33 | 74.01% |
| (9) | 5 + POBT | 22.47 | 45.63 | 54.62% | 34.72 | 64.46 | 39.82% | 12.74 | 35.02 | 71.99% |
| (10) | 5 + SOBT | 22.96 | 46.02 | 52.69% | 35.10 | 68.94 | 37.64% | 13.88 | 41.41 | 61.66% |
| (11) | Ours | 23.78 | 47.52 | 51.13% | 35.44 | 69.99 | 36.95% | 15.80 | 44.75 | 60.07% |

| ID | SOBT: BLS | SOBT: GLS | CLOS: HS | CLOS: SS | English → X | X → English | Zero-Shot |
|---|---|---|---|---|---|---|---|
| (1) | × | × | × | × | 22.10 | 34.53 | 10.60 |
| (2) | √ | × | × | × | 22.42 | 34.86 | 12.52 |
| (3) | √ | √ | × | × | 22.96 | 35.10 | 13.88 |
| (4) | √ | √ | √ | × | 23.04 | 35.00 | 14.55 |
| (5) | √ | √ | × | √ | 23.78 | 35.44 | 15.80 |
| (6) | × | × | × | √ | 23.04 | 35.15 | 3.38 |

| Method | Layers | Model dim | Heads | Head dim | Params | English → X | X → English | Zero-Shot |
|---|---|---|---|---|---|---|---|---|
| MNMT | 6 | 512 | 8 | 64 | 76M | 22.53 | 34.96 | 3.09 |
| MNMT | 6 | 768 | 8 | 96 | 148M | 23.02 | 35.25 | 3.20 |
| MNMT | 6 | 1024 | 16 | 64 | 241M | 23.44 | 35.77 | 3.67 |
| 1 + SOBT + CLOS | 6 | 512 | 8 | 64 | 76M | 23.78 | 35.44 | 15.80 |
| 1 + SOBT + CLOS | 6 | 768 | 8 | 96 | 148M | 23.92 | 35.68 | 16.37 |
| 1 + SOBT + CLOS | 6 | 1024 | 16 | 64 | 241M | 24.24 | 36.05 | 17.25 |

| Es → Zh |  |
|---|---|
| Src | Cuando la noticia de que yo había conseguido el Premio Nobel se extendió por China, mucha gente me felicitó, pero ella no lo podrá hacer nunca. |
| Ref | 当我获得诺贝尔奖的消息传遍中国时,很多人都向我表示了祝贺,但她却无法祝贺我了。 |
| Baseline MNMT | 当新闻 de que yo había conseguido el Premio Nobel se extendió por China, mucha gente me felicitó, pero ella no lo podrá hacer nunca. |
| ROBT | 我获诺贝尔奖后,很多人向我表示祝贺,但她永远也做不到。 |
| mRASP2 | 当中国传出我得到诺贝尔奖,许多人恭喜我,但她永远无法这样做。 |
| Ours | 当我获得诺贝尔奖的消息传遍中国时,很多人都祝贺我,但她却做不到了。 |

| De → Zh |  |
|---|---|
| Src | Sie sind fertig und sie dürfen nach Hause gehen, Der Befund kommt mit der Post. |
| Ref | 他们完成了,他们被允许回家,检查结果会随邮件来。 |
| Baseline MNMT | They are ready and they are allowed to go home, The findings come in the mail. |
| ROBT | 你完成了,你可以回家了,报告是寄来。 |
| mRASP2 | 他们完成了,他们被允许回家,调查结果附在帖子上。 |
| Ours | 他们完成了,他们可以回家了,调查结果随邮件而来。 |

| Zh → Ms |  |
|---|---|
| Src | 证监会表示,改革措施是透明的,将在社会监督下进行。 |
| Ref | kata CSRC, sambil menambah bahawa langkah pembaharuan adalah telus dan akan dijalankan di bawah penyeliaan masyarakat. |
| Baseline MNMT | Suruhanjaya Kawal Selia Sekuriti China menyatakan |
| ROBT | kata CSRC, menambahkan bahawa tindakan reformasi adalah transparan dan akan dilakukan di bawah pengawasan sosial. |
| mRASP2 | Suruhanjaya Pengawalseliaan Sekuriti China menyatakan dan menambah bahawa langkah-langkah pembaharuan itu telus dan akan dilakukan di bawah pengawasan sosial |
| Ours | Suruhanjaya Kawal Selia Sekuriti China menyatakan bahawa langkah pembaharuan adalah telus dan akan dijalankan di bawah pengawasan sosial. |

Language set: Ar-De-Fr-Nl-Ru-Zh

| ID | Method | English → X BLEU | English → X METEOR | English → X TER | X → English BLEU | X → English METEOR | X → English TER | Zero-Shot BLEU | Zero-Shot METEOR | Zero-Shot TER |
|---|---|---|---|---|---|---|---|---|---|---|
| (1) | Ours | 23.78 | 47.52 | 51.13% | 35.44* | 69.99* | 36.95%* | 15.80 | 44.75 | 60.07% |
| (2) |  | 23.69 | 47.91 | 50.74% | 35.01 | 69.05 | 37.44% | 13.21 | 40.96 | 63.25% |
| (3) | DeepL | 24.54 | 48.22 | 49.95% | 35.46* | 69.84* | 37.01%* | 13.99 | 41.20 | 63.01% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, W.; Dai, L.; Liu, J.; Wang, S. Improving Many-to-Many Neural Machine Translation via Selective and Aligned Online Data Augmentation. Appl. Sci. 2023, 13, 3946. https://doi.org/10.3390/app13063946
