Formation of a Lightweight, Deep Learning-Based Weed Detection System for a Commercial Autonomous Laser Weeding Robot

Abstract

1. Introduction

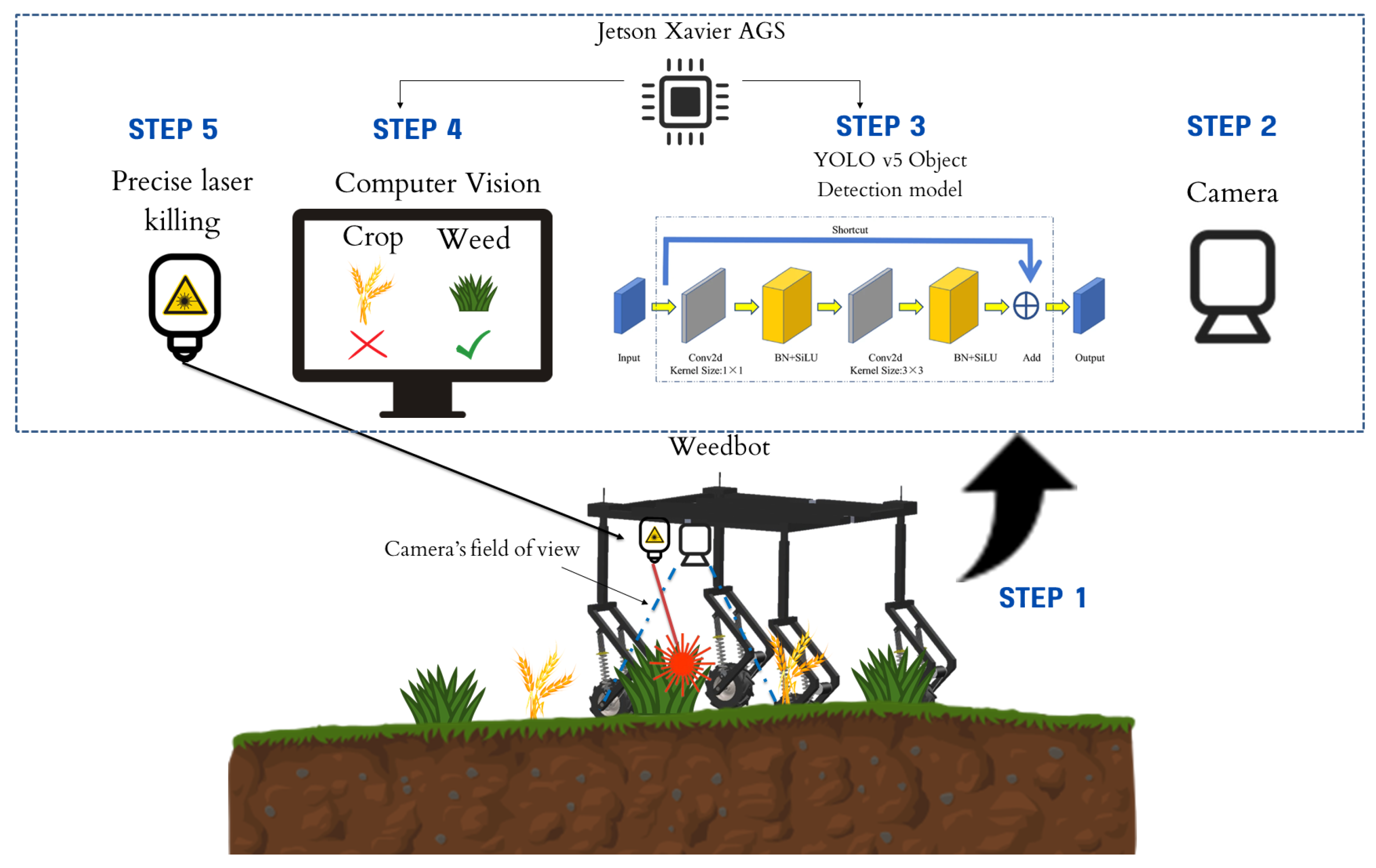

- A lightweight deep learning model is developed for a commercial autonomous laser weeding robot to kill weeds in real time.

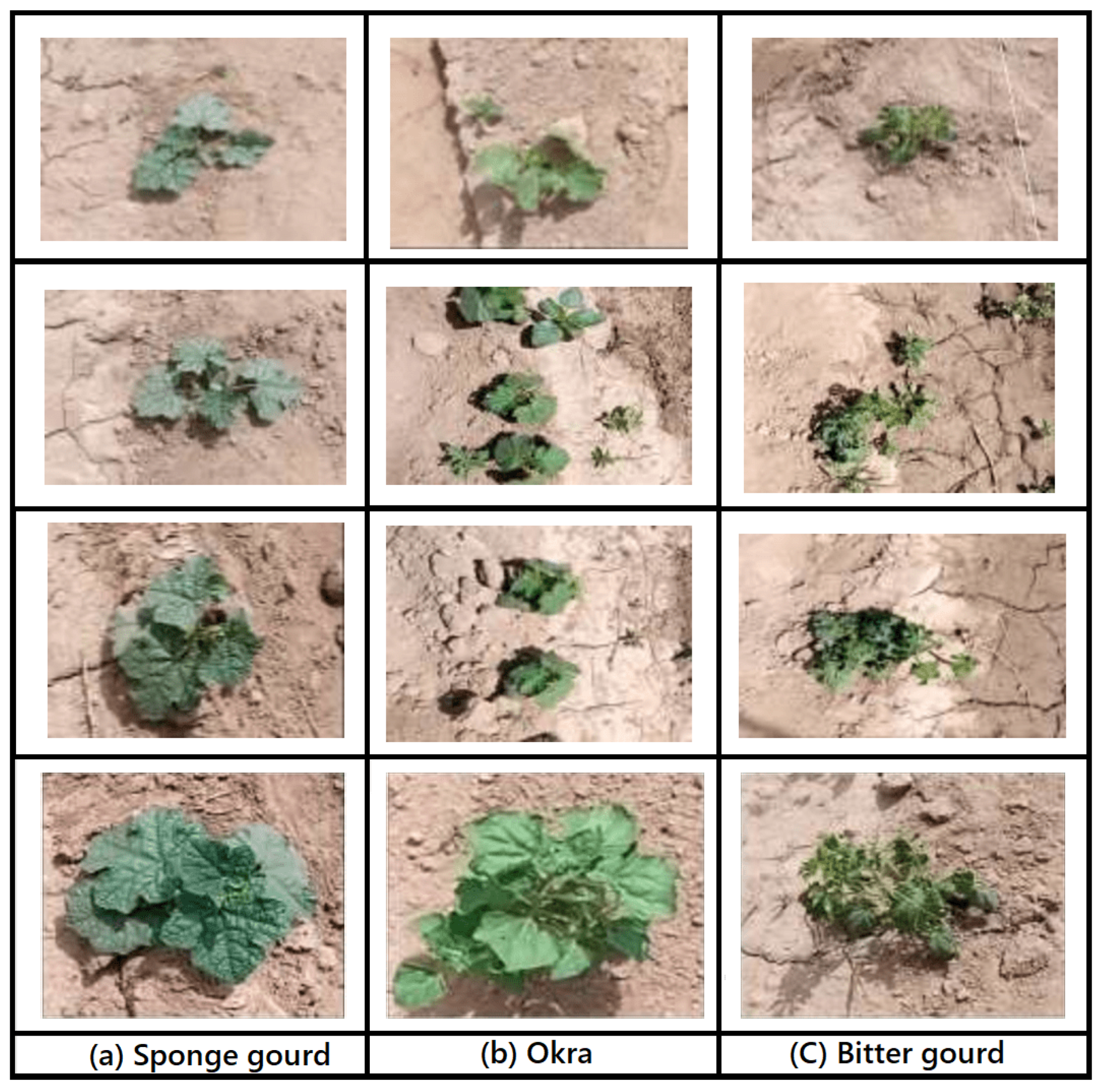

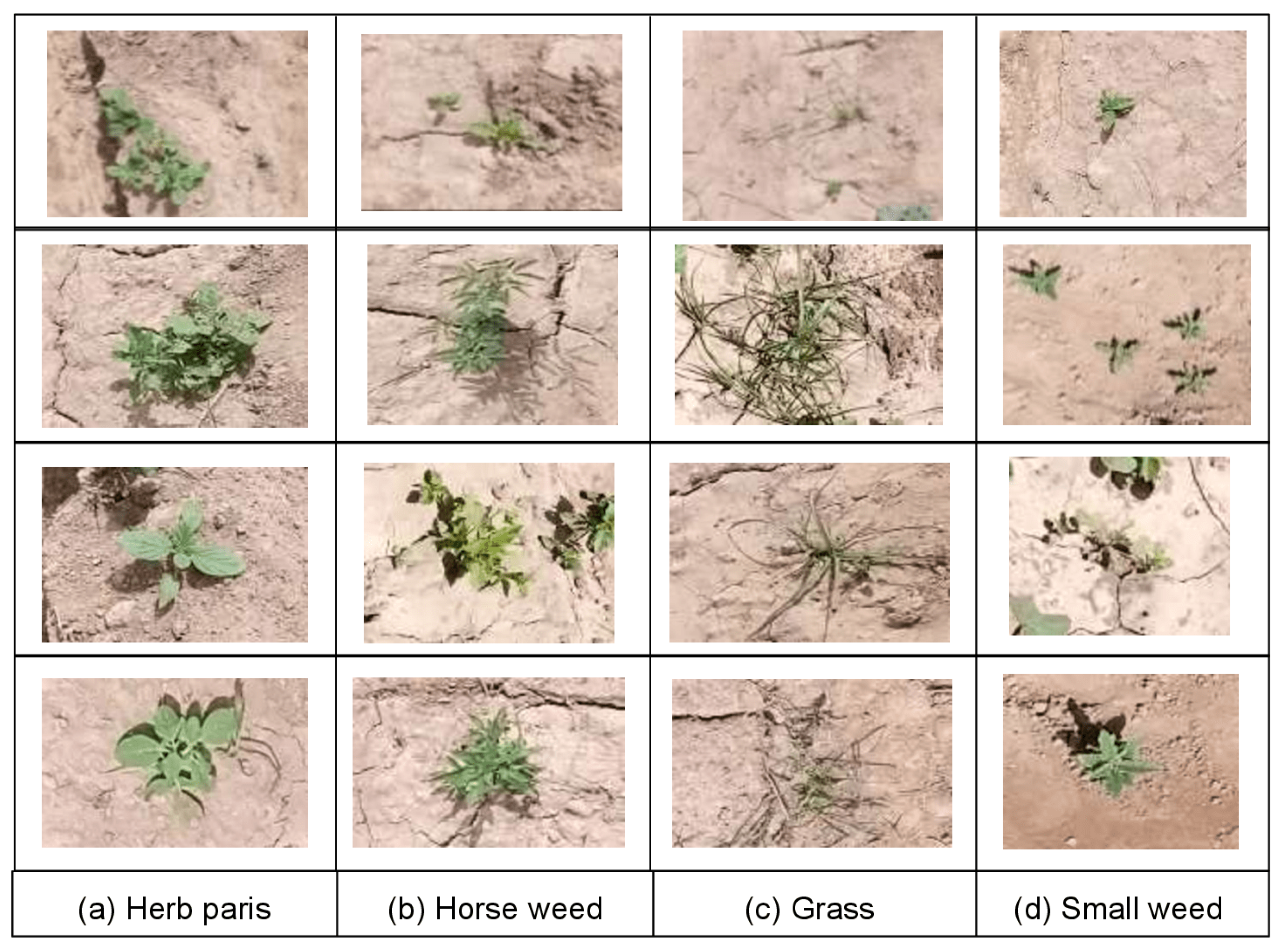

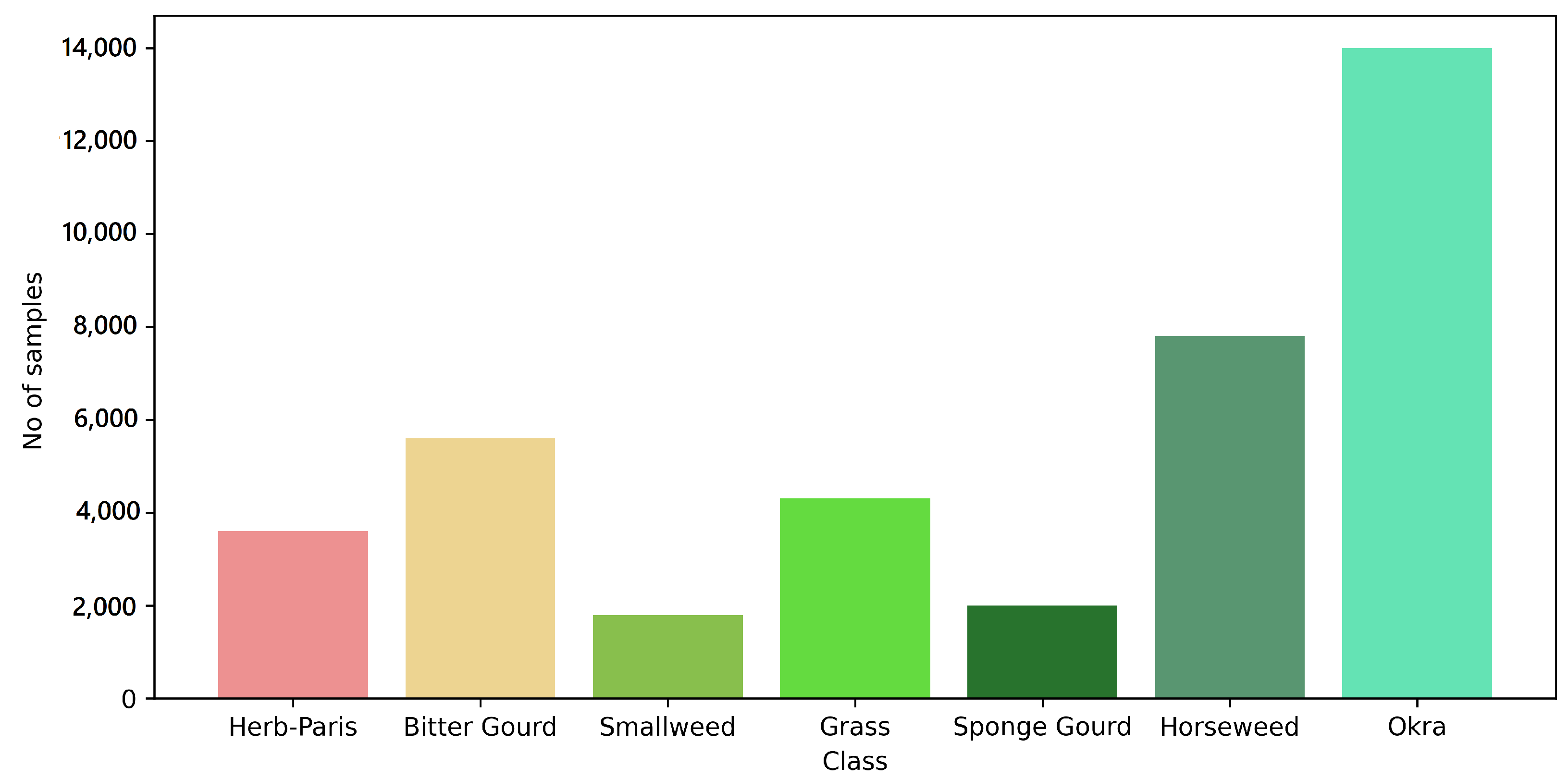

- A large dataset was collected from several farm fields in Gadap town, Karachi, covering the most common weeds and crops of the area. A model trained on this data is therefore more robust for these fields.

- Two single-shot object detection models, YOLOv5 and SSD-ResNet, are used to detect and classify crops and weeds. The short per-frame inference time of YOLOv5 makes it well suited to real-time weed detection systems.

- The model is deployed on an NVIDIA Jetson AGX Xavier embedded device [28], yielding a high-performance, low-power standalone detection system.
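As a rough illustration of how the output of a single-shot detector could be turned into weeding targets, the sketch below keeps only confident weed detections and returns the centers of their boxes. The `Detection` type, the labels `"weed"` and `"crop"`, and the 0.5 confidence threshold are assumptions made for this example; they are not taken from the authors' implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """One detector output (hypothetical type): label, confidence, pixel box (x1, y1, x2, y2)."""
    label: str
    confidence: float
    box: Tuple[float, float, float, float]


def weed_targets(detections: List[Detection], min_conf: float = 0.5) -> List[Tuple[float, float]]:
    """Return box centers of detections labelled "weed" with confidence >= min_conf."""
    targets = []
    for det in detections:
        # Crops and low-confidence weeds are skipped so they are never targeted.
        if det.label == "weed" and det.confidence >= min_conf:
            x1, y1, x2, y2 = det.box
            targets.append(((x1 + x2) / 2.0, (y1 + y2) / 2.0))
    return targets


# Made-up detections for a single frame.
frame = [
    Detection("weed", 0.91, (10, 20, 50, 60)),
    Detection("crop", 0.88, (100, 100, 200, 220)),
    Detection("weed", 0.32, (300, 40, 340, 90)),
]
print(weed_targets(frame))
```

In a real system the threshold would likely be tuned against the cost of hitting a crop versus missing a weed.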

2. Literature Review

3. Methodology

3.1. Data Acquisition

Establishment of the Real-Time Experimental Setup for Data Acquisition

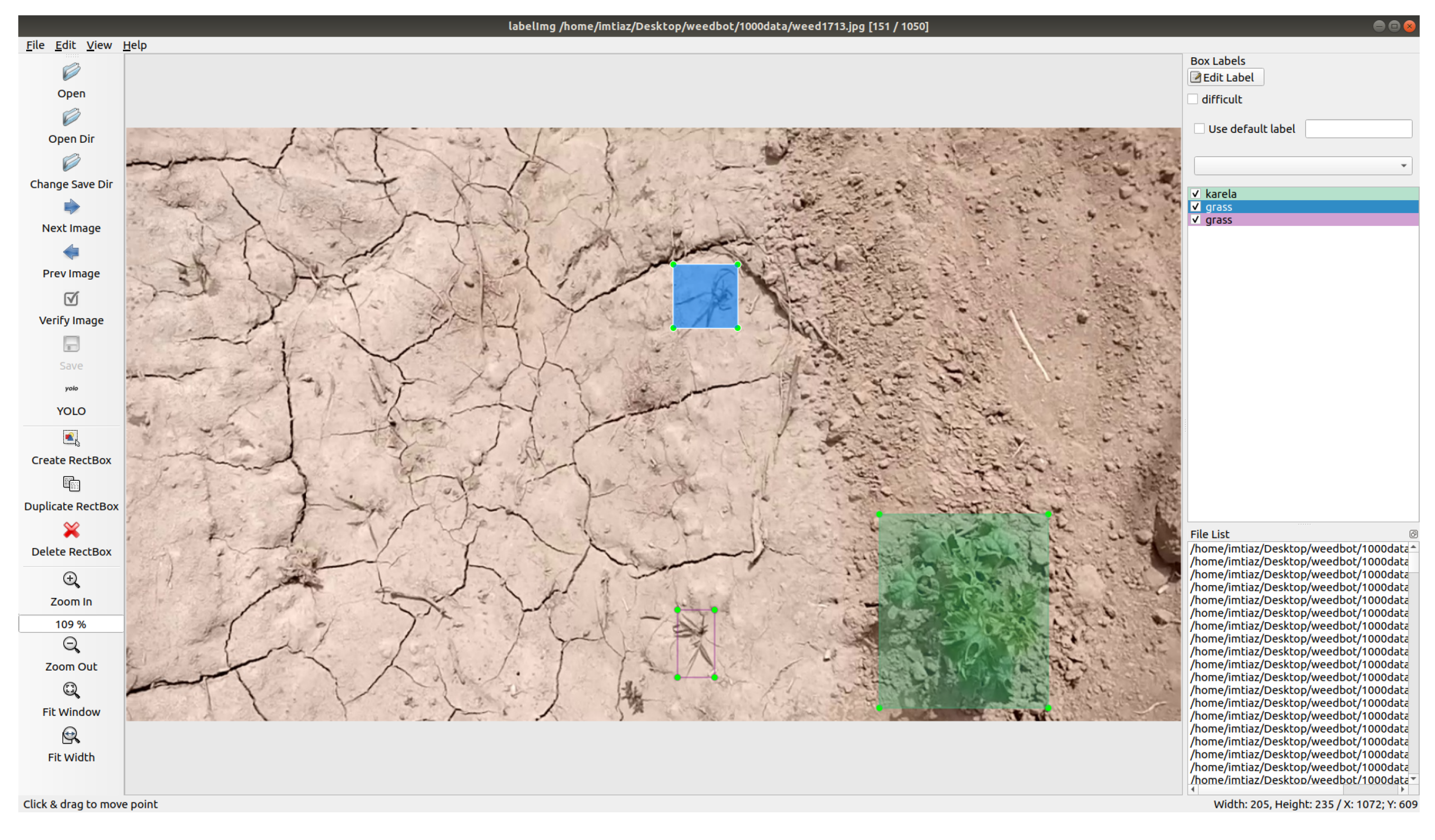

3.2. Data Preprocessing and Annotation

3.3. Model Development

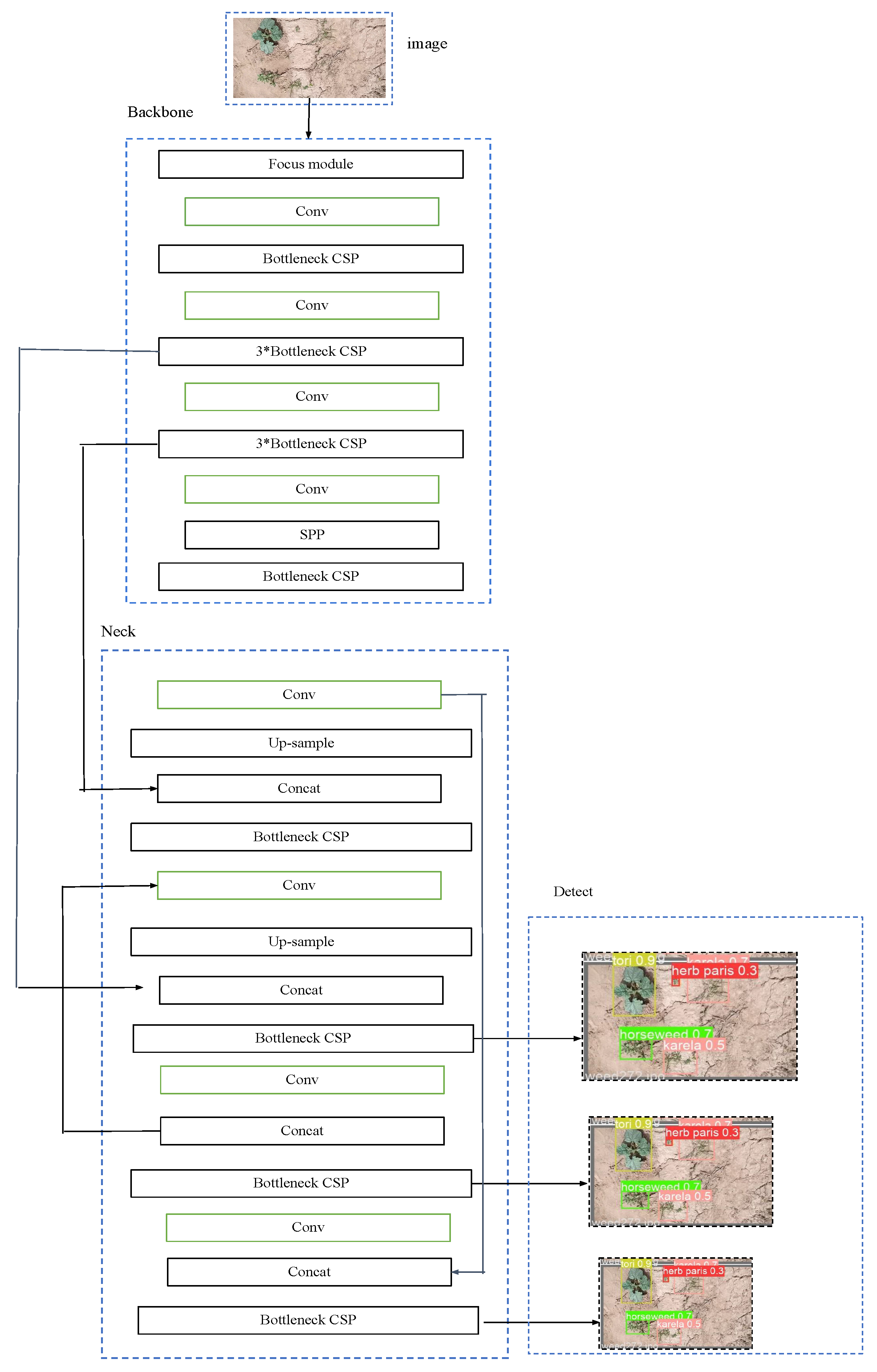

3.4. YOLOv5 Network

3.5. SSD-ResNet Network

3.6. Performance Metrics

3.6.1. Precision

3.6.2. Recall

3.6.3. Average Precision (AP) and Mean Average Precision (mAP)
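The three metrics named in this section can be sketched in a few lines. The code below uses the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN)) and the all-point interpolated form of AP; which AP variant the evaluation actually used is an assumption here, and all function names are illustrative.

```python
from typing import Dict, List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall from true-positive, false-positive, and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(ranked_hits: List[bool], num_ground_truth: int) -> float:
    """All-point interpolated AP for one class.

    ranked_hits: detections sorted by descending confidence, True for a true positive.
    num_ground_truth: number of ground-truth objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    precisions, recalls = [], []
    tp = fp = 0
    for hit in ranked_hits:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the resulting step-shaped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


print(precision_recall(tp=8, fp=2, fn=2))
print(average_precision([True, False, True], num_ground_truth=2))
```

Whether a detection counts as a true positive depends on the chosen IoU threshold, which is why mAP is reported at a stated threshold.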

4. Results

4.1. Model Training Setup

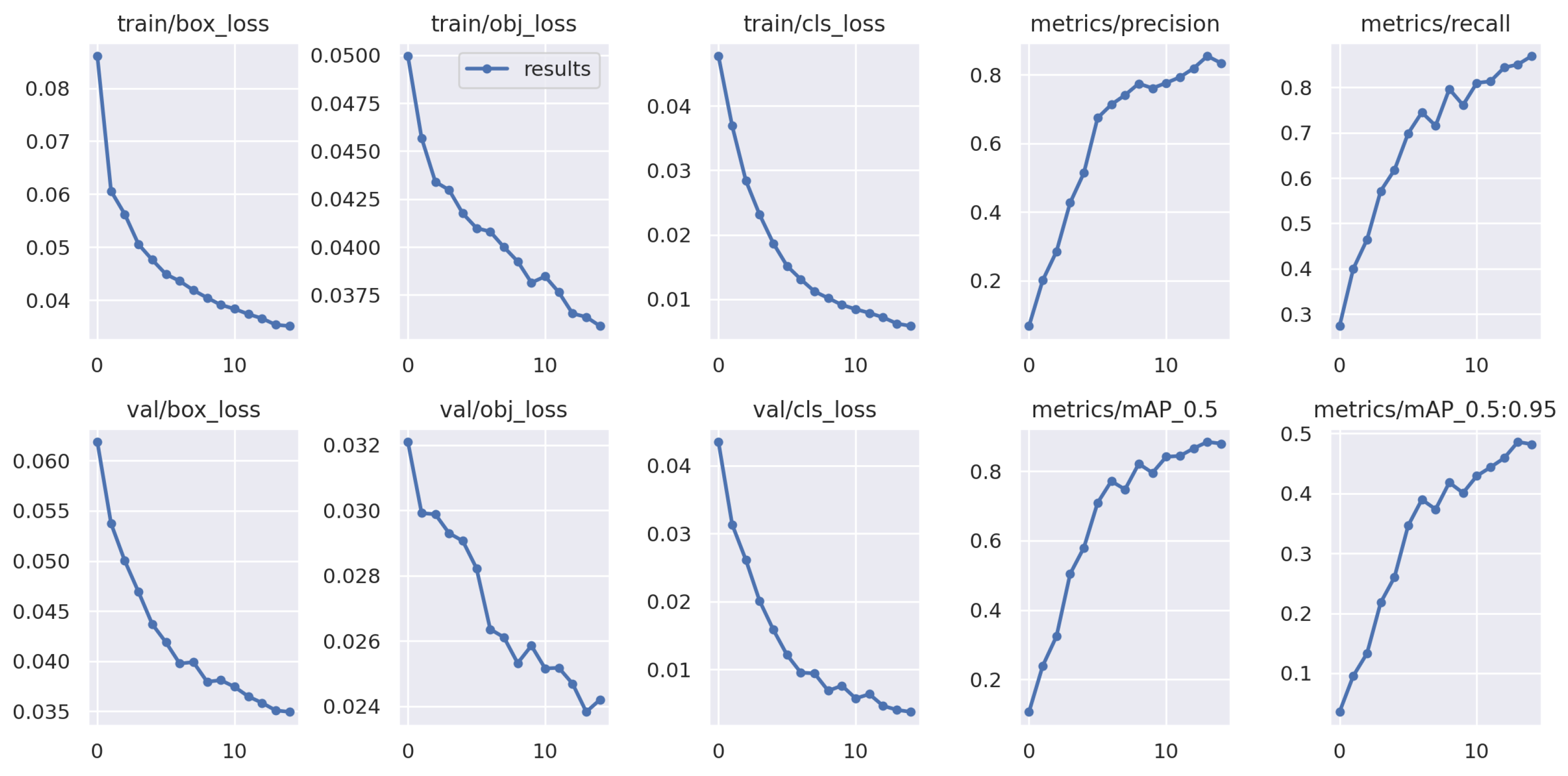

4.2. Training Results

4.3. Test Results

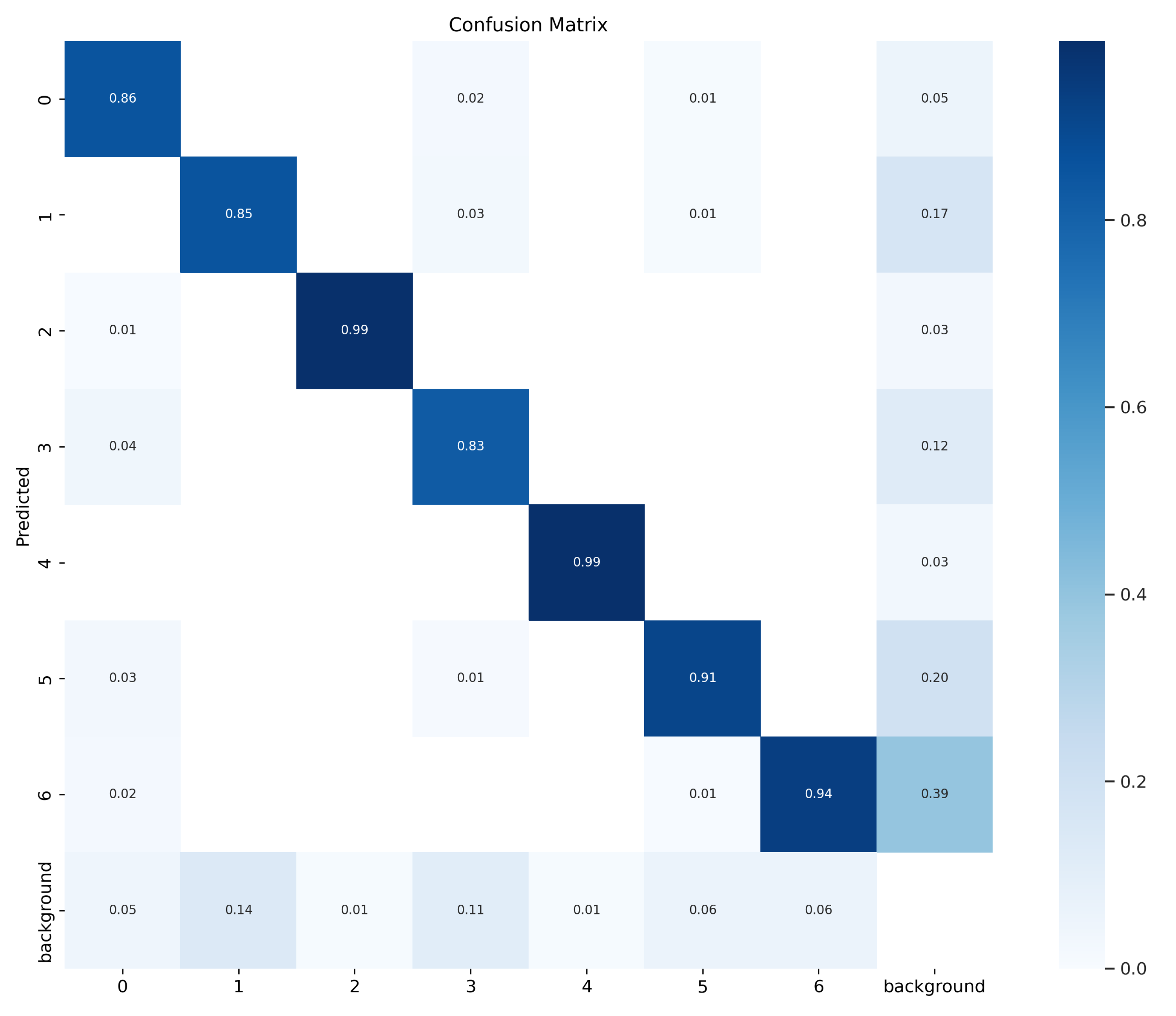

Confusion Matrix

4.4. Comparison of the Models, YOLOv5 and SSD-ResNet

4.5. Deployment on the Standalone Embedded Device

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Distribution of Gross Domestic Product (GDP) across Economics Sector 2020. 15 February 2022. Available online: https://www.statista.com/statistics/383256/pakistan-gdp-distribution-across-economic-sectors/ (accessed on 19 August 2022).

- Ali, H.H.; Peerzada, A.M.; Hanif, Z.; Hashim, S.; Chauhan, B.S. Weed management using crop competition in Pakistan: A review. Crop Prot. 2017, 95, 22–30.

- Fennimore, S.A.; Slaughter, D.C.; Siemens, M.C.; Leon, R.G.; Saber, M.N. Technology for automation of weed control in specialty crops. Weed Technol. 2016, 30, 823–837.

- Chauhan, B.S. Grand challenges in weed management. Front. Agron. 2020, 1, 3.

- Weeds Cause Losses Amounting to Rs65b Annually. 20 July 2017. Available online: https://tribune.com.pk/story/1461870/weeds-cause-losses-amounting-rs65b-annually (accessed on 19 August 2022).

- Bai, S.H.; Ogbourne, S.M. Glyphosate: Environmental contamination, toxicity and potential risks to human health via food contamination. Environ. Sci. Pollut. Res. 2016, 23, 18988–19001.

- Chauhan, B.S. Weed ecology and weed management strategies for dry-seeded rice in Asia. Weed Technol. 2012, 26, 1–13.

- Bronson, K. Smart Farming: Including Rights Holders for Responsible Agricultural Innovation. Technol. Innov. Manag. Rev. 2018, 8, 7–14.

- Dammer, K.H. Real-time variable-rate herbicide application for weed control in carrots. Weed Res. 2016, 56, 237–246.

- Dar, M.A.; Kaushik, G.; Chiu, J.F.V. Pollution status and biodegradation of organophosphate pesticides in the environment. In Abatement of Environmental Pollutants; Elsevier: Amsterdam, The Netherlands, 2020; pp. 25–66.

- Westwood, J.H.; Charudattan, R.; Duke, S.O.; Fennimore, S.A.; Marrone, P.; Slaughter, D.C.; Swanton, C.; Zollinger, R. Weed management in 2050: Perspectives on the future of weed science. Weed Sci. 2018, 66, 275–285.

- Mhlanga, D. Artificial intelligence in the industry 4.0, and its impact on poverty, innovation, infrastructure development, and the sustainable development goals: Lessons from emerging economies? Sustainability 2021, 13, 5788.

- Ali, M.M.; Bachik, N.A.; Muhadi, N.A.; Yusof, T.N.T.; Gomes, C. Non-destructive techniques of detecting plant diseases: A review. Physiol. Mol. Plant Pathol. 2019, 108, 101426.

- Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine vision systems in precision agriculture for crop farming. J. Imaging 2019, 5, 89.

- Eli-Chukwu, N.C. Applications of artificial intelligence in agriculture: A review. Eng. Technol. Appl. Sci. Res. 2019, 9, 4377–4383.

- Di Cicco, M.; Potena, C.; Grisetti, G.; Pretto, A. Automatic model based dataset generation for fast and accurate crop and weeds detection. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 5188–5195.

- Hasan, A.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067.

- Firmansyah, E.; Suparyanto, T.; Hidayat, A.A.; Pardamean, B. Real-time Weed Identification Using Machine Learning and Image Processing in Oil Palm Plantations. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2022.

- Pushpanathan, K.; Hanafi, M.; Mashohor, S.; Fazlil Ilahi, W.F. Machine learning in medicinal plants recognition. Artif. Intell. Rev. 2021, 54, 305–327.

- Cope, J.S.; Corney, D.; Clark, J.Y.; Remagnino, P.; Wilkin, P. Plant species identification using digital morphometrics: A review. Expert Syst. Appl. 2012, 39, 7562–7573.

- Le-Khac, P.H.; Healy, G.; Smeaton, A.F. Contrastive representation learning: A framework and review. IEEE Access 2020, 8, 193907–193934.

- Lee, S.H.; Chan, C.S.; Mayo, S.J.; Remagnino, P. How deep learning extracts and learns leaf features for plant classification. Pattern Recognit. 2017, 71, 1–13.

- Chang, J.; Sitzmann, V.; Dun, X.; Heidrich, W.; Wetzstein, G. Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification. Sci. Rep. 2018, 8, 12324.

- Sultana, F.; Sufian, A.; Dutta, P. A review of object detection models based on convolutional neural network. In Intelligent Computing: Image Processing Based Applications; Springer: Berlin, Germany, 2020; pp. 1–16.

- Chen, W.; Huang, H.; Peng, S.; Zhou, C.; Zhang, C. YOLO-face: A real-time face detector. Vis. Comput. 2021, 37, 805–813.

- Tan, Y.; Cai, R.; Li, J.; Chen, P.; Wang, M. Automatic detection of sewer defects based on improved you only look once algorithm. Autom. Constr. 2021, 131, 103912.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Deploy AI-Powered Autonomous Machines at Scale. Available online: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-agx-xavier/ (accessed on 31 October 2022).

- Sethia, G.; Guragol, H.K.S.; Sandhya, S.; Shruthi, J.; Rashmi, N.; Sairam, H.V. Automated Computer Vision based Weed Removal Bot. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020.

- Smith, L.N.; Byrne, A.; Hansen, M.F.; Zhang, W.; Smith, M.L. Weed classification in grasslands using convolutional neural networks. Appl. Mach. Learn. 2019, 11139, 334–344.

- Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160.

- Alam, M.; Alam, M.S.; Roman, M.; Tufail, M.; Khan, M.U.; Khan, M.T. Real-time machine-learning based crop/weed detection and classification for variable-rate spraying in precision agriculture. In Proceedings of the 2020 7th International Conference on Electrical and Electronics Engineering (ICEEE), Antalya, Turkey, 14–16 April 2020.

- Quan, L.; Feng, H.; Lv, Y.; Wang, Q.; Zhang, C.; Liu, J.; Yuan, Z. Maize seedling detection under different growth stages and complex field environments based on an improved Faster R–CNN. Biosyst. Eng. 2019, 184, 1–23.

- Wang, Z.; Liu, J. A review of object detection based on convolutional neural network. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 11104–11109.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Abdulsalam, M.; Aouf, N. Deep weed detector/classifier network for precision agriculture. In Proceedings of the 2020 28th Mediterranean Conference on Control and Automation (MED), Saint-Raphaël, France, 15–18 September 2020.

- Sanchez, P.R.; Zhang, H.; Ho, S.S.; De Padua, E. Comparison of one-stage object detection models for weed detection in mulched onions. In Proceedings of the 2021 IEEE International Conference on Imaging Systems and Techniques (IST), Kaohsiung, Taiwan, 24–26 August 2021.

- Jin, X.; Sun, Y.; Che, J.; Bagavathiannan, M.; Yu, J.; Chen, Y. A novel deep learning-based method for detection of weeds in vegetables. Pest Manag. Sci. 2022, 78, 1861–1869.

- Veeranampalayam Sivakumar, A.N.; Li, J.; Scott, S.; Psota, E.; Jhala, A.J.; Luck, J.D.; Shi, Y. Comparison of object detection and patch-based classification deep learning models on mid-to late-season weed detection in UAV imagery. Remote Sens. 2020, 12, 2136.

- Olaniyi, O.M.; Daniya, E.; Abdullahi, I.M.; Bala, J.A.; Olanrewaju, E. Weed recognition system for low-land rice precision farming using deep learning approach. In Proceedings of the International Conference on Artificial Intelligence & Industrial Applications, Meknes, Morocco, 19–20 March 2020; pp. 385–402.

- YOLOv5: The Friendliest AI Architecture You’ll Ever Use. 2022. Available online: https://ultralytics.com/yolov5 (accessed on 22 November 2022).

- Zhou, L.; Pan, S.; Wang, J.; Vasilakos, A.V. Machine learning on big data: Opportunities and challenges. Neurocomputing 2017, 237, 350–361.

- Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A forest fire detection system based on ensemble learning. Forests 2021, 12, 217.

- Lu, X.; Kang, X.; Nishide, S.; Ren, F. Object detection based on SSD-ResNet. In Proceedings of the 2019 IEEE 6th International Conference on Cloud Computing and Intelligence Systems (CCIS), Singapore, 19–21 December 2019.

- Confusion Matrix, Accuracy, Precision, Recall, F1 Score. 2019. Available online: https://medium.com/analytics-vidhya/confusion-matrix-accuracy-precision-recall-f1-score-ade299cf63cd (accessed on 10 December 2019).

- Padilla, R.; Netto, S.L.; Da Silva, E.A. A survey on performance metrics for object-detection algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020.

| mAP@IOU 0.5 | mAP@IOU 0.95 | Precision | Recall |
|---|---|---|---|
| 0.88 | 0.48 | 0.83 | 0.86 |
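The IoU thresholds in the column headers refer to intersection over union, the overlap ratio between a predicted box and a ground-truth box that decides whether a detection counts as a true positive. A minimal sketch follows, assuming axis-aligned boxes in `(x1, y1, x2, y2)` pixel format; the function name and box format are illustrative choices, not taken from the paper.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 >= x1 and y2 >= y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (empty if the boxes do not overlap).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


predicted = (0.0, 0.0, 10.0, 10.0)
ground_truth = (5.0, 0.0, 15.0, 10.0)
overlap = iou(predicted, ground_truth)
# With a 0.5 threshold this prediction would not count as a match.
print(round(overlap, 3), overlap >= 0.5)
```

A stricter threshold demands tighter localization, which is consistent with the lower score reported in the second column.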

| Model | mAP@IOU 0.5 | mAP@IOU 0.95 | FPS (Frames per Second) |
|---|---|---|---|
| YOLOv5 | 0.88 | 0.48 | 40 |
| SSD-ResNet | 0.53 | 0.25 | 30 |
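For a real-time system the frame rates in the table are easier to reason about as a per-frame time budget. The short sketch below converts the reported FPS values into milliseconds per frame; it assumes frames are processed one after another, so that latency is simply the reciprocal of throughput, which may not hold for a pipelined deployment.

```python
def frame_latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds, assuming sequential processing."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# FPS values as reported in the comparison table.
reported_fps = {"YOLOv5": 40, "SSD-ResNet": 30}

for model, fps in reported_fps.items():
    print(f"{model}: {frame_latency_ms(fps):.1f} ms per frame")

# Relative throughput of YOLOv5 over SSD-ResNet.
speedup = reported_fps["YOLOv5"] / reported_fps["SSD-ResNet"]
print(f"YOLOv5 processes {speedup:.2f}x as many frames per second")
```

Under this assumption, 40 FPS corresponds to 25 ms per frame and 30 FPS to roughly 33 ms per frame.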

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fatima, H.S.; ul Hassan, I.; Hasan, S.; Khurram, M.; Stricker, D.; Afzal, M.Z. Formation of a Lightweight, Deep Learning-Based Weed Detection System for a Commercial Autonomous Laser Weeding Robot. Appl. Sci. 2023, 13, 3997. https://doi.org/10.3390/app13063997