FPGA Implementation of a Deep Learning Acceleration Core Architecture for Image Target Detection

Abstract

1. Introduction

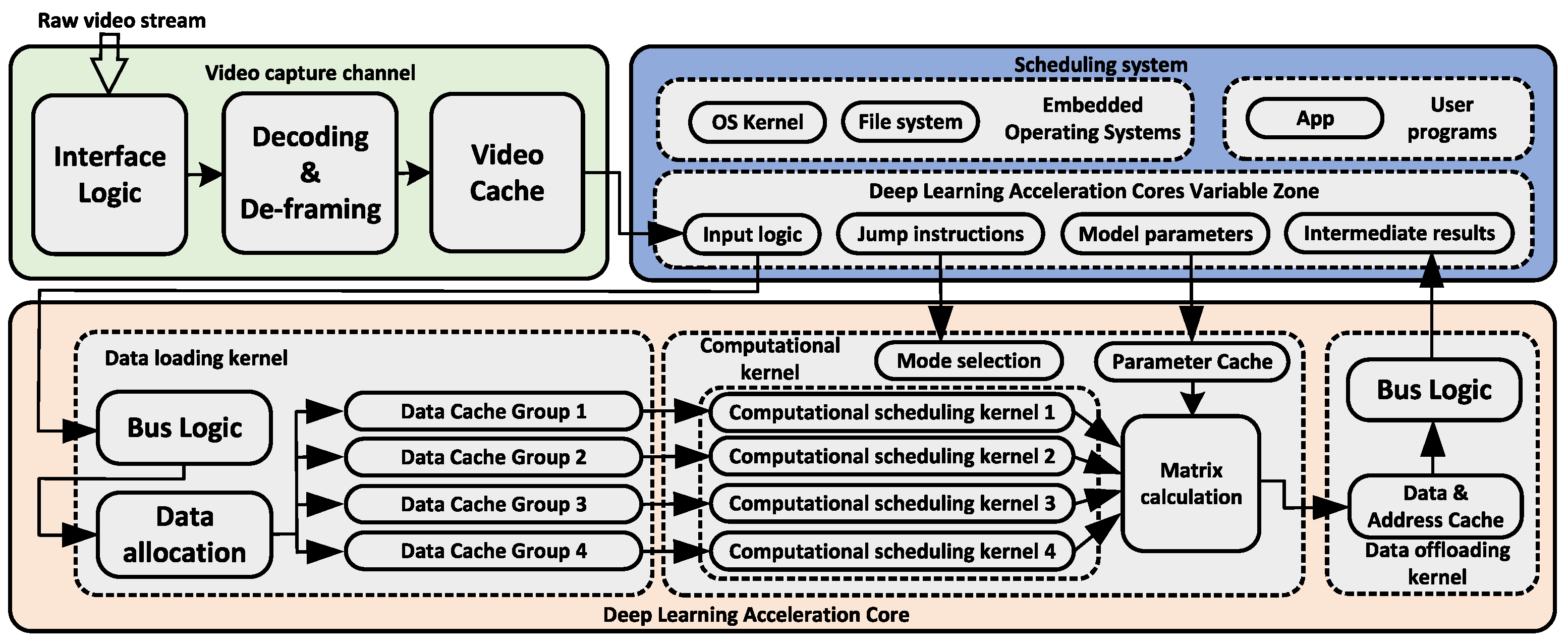

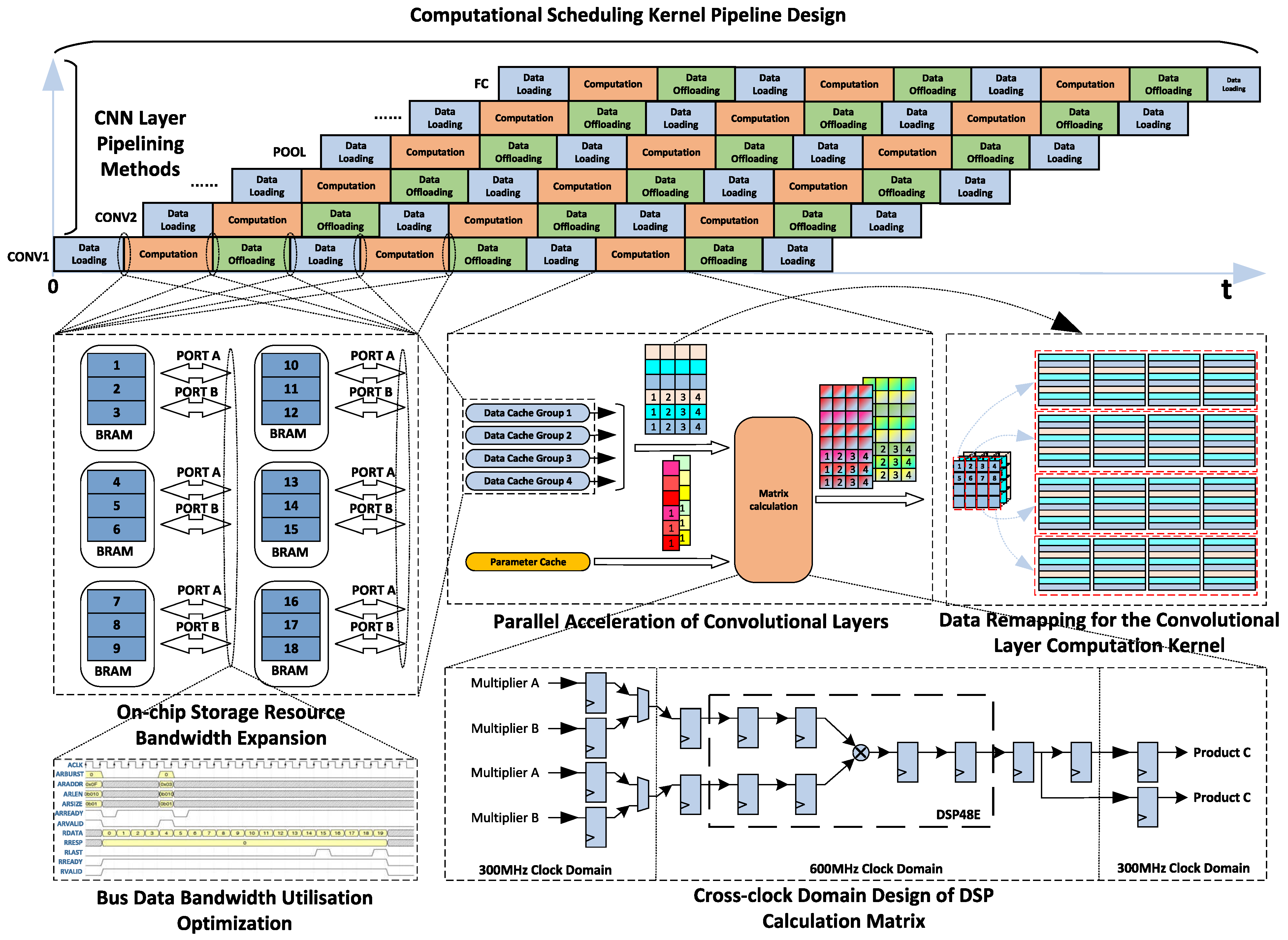

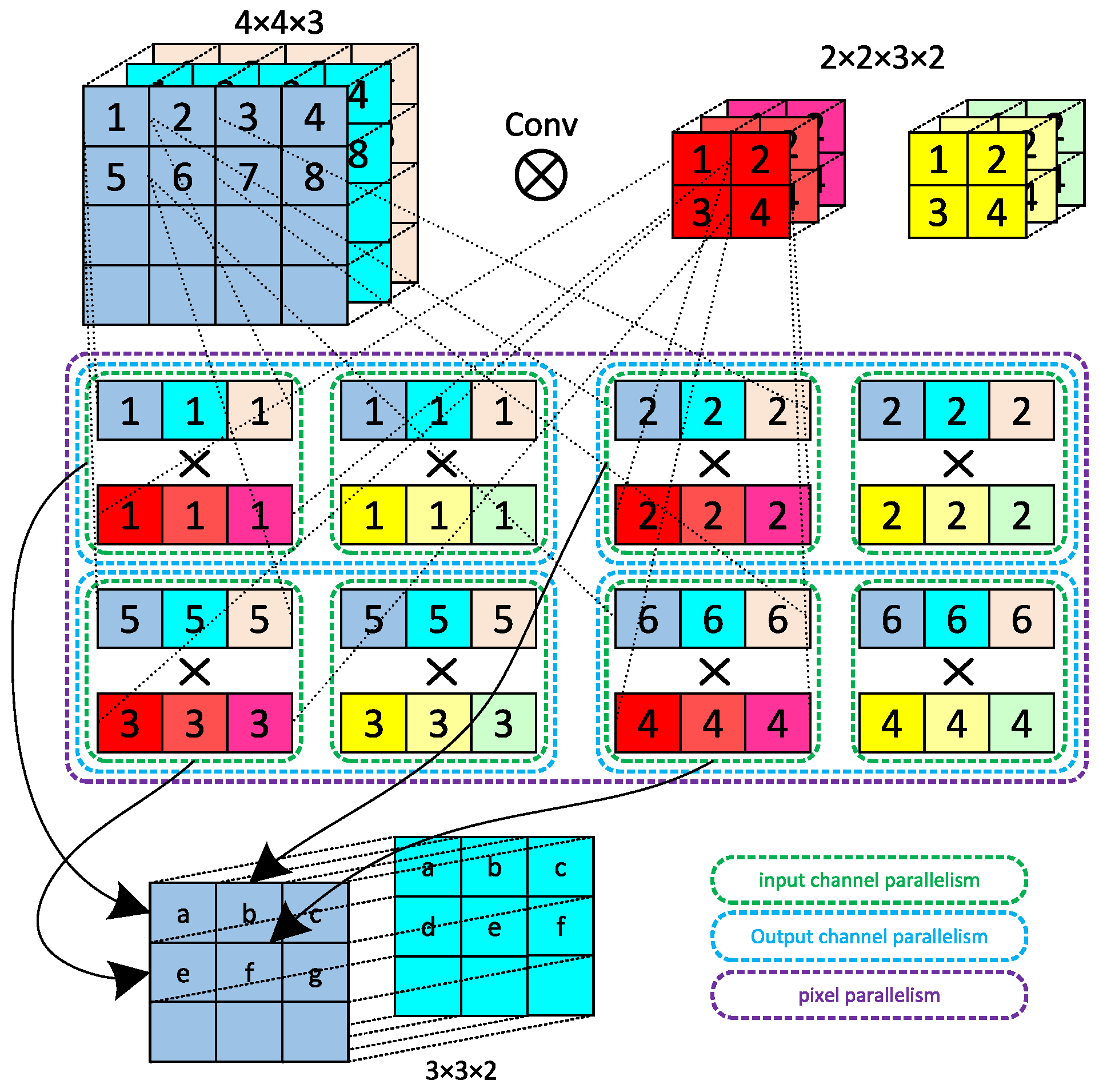

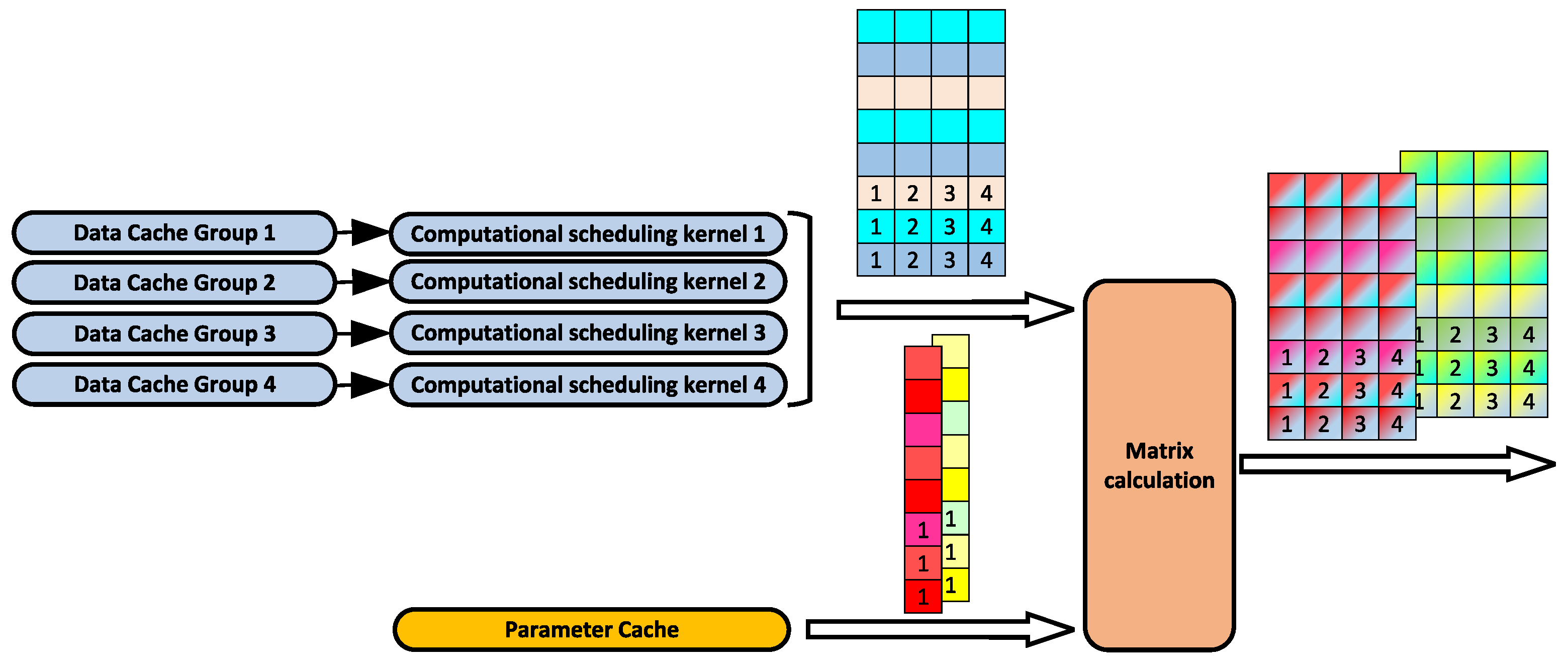

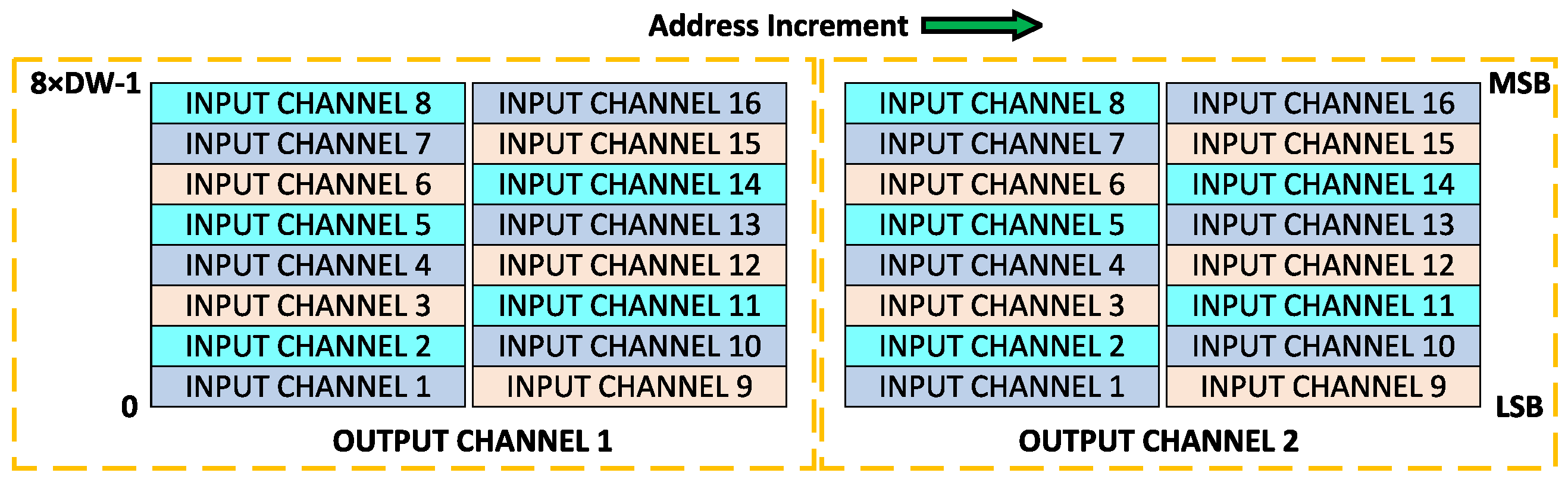

- We propose a parallel acceleration scheme for the three main CNN operators: the convolutional layer, the pooling layer, and the fully connected layer. CNN inference is accelerated along three dimensions: input channel parallelism, output channel parallelism, and pixel parallelism. A data address remapping strategy matches the data layout to the bandwidth that parallel computation on the FPGA requires.

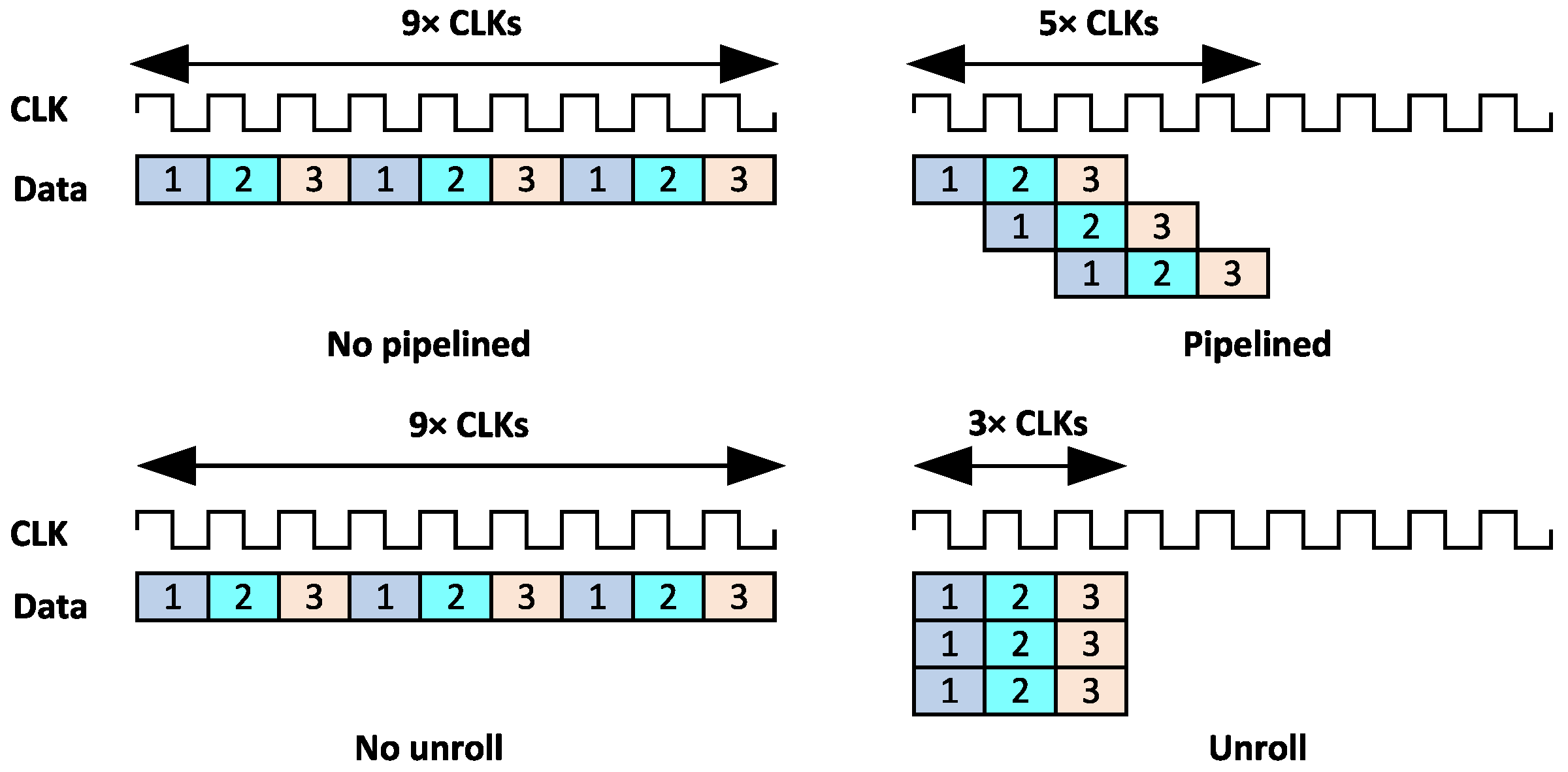

- We propose an architectural optimization strategy for the deep learning acceleration core that improves the efficiency of data stream transfer. Task scheduling is pipelined, so data access and parallel computation proceed simultaneously and data movement does not reduce the computational efficiency of the parallel acceleration core.

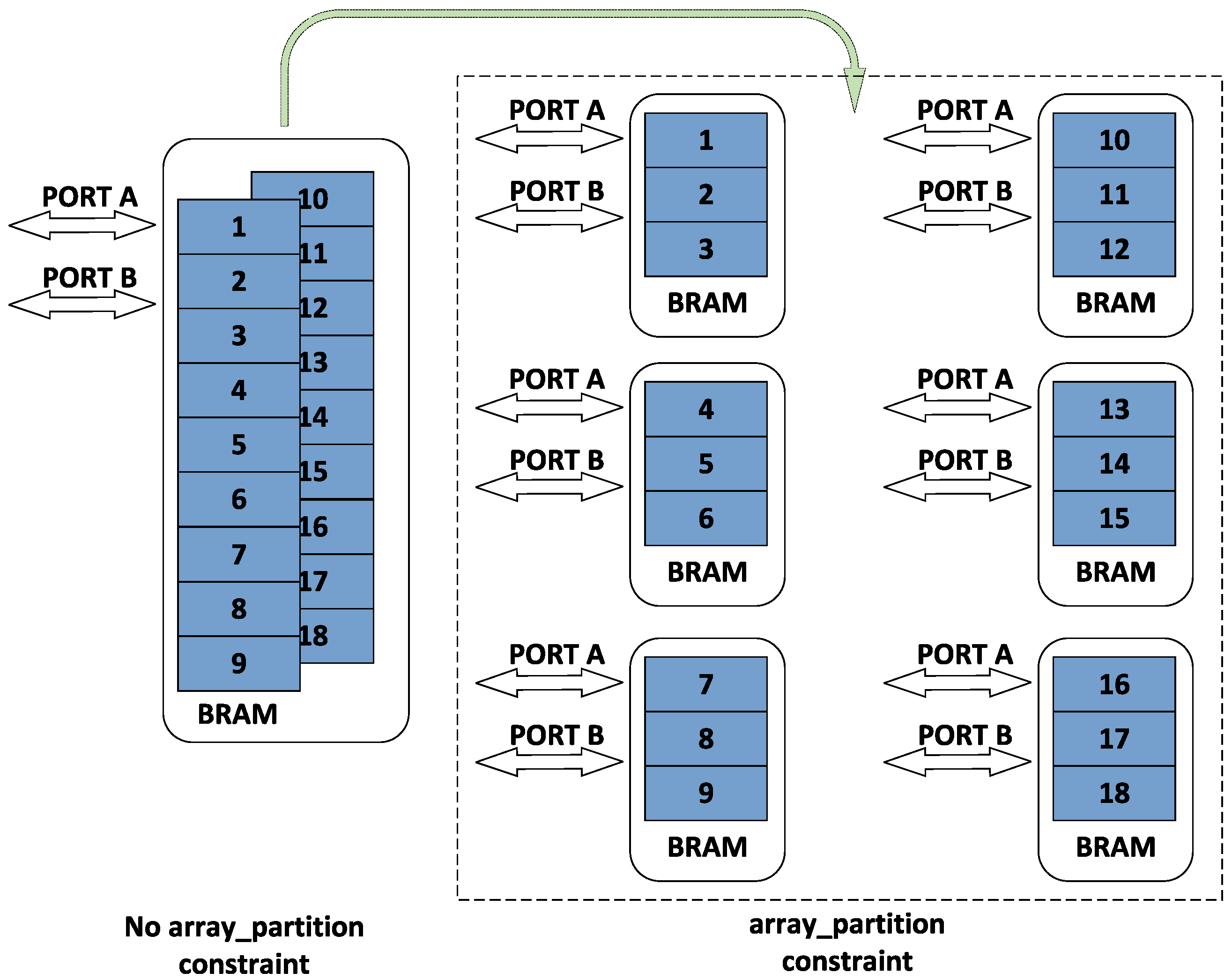

- We propose a three-level data cache structure consisting of off-chip storage, on-chip storage, and registers. The on-chip storage is sliced to provide sufficient data access bandwidth for the parallel computing units. The computation matrix is implemented across clock domains, which halves the DSP resource occupation and improves the utilization of DSP resources on the FPGA.
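The slicing of on-chip storage named in the last contribution can be illustrated with a small software model. This is a minimal sketch under stated assumptions, not the authors' implementation: one logical buffer is split cyclically into `kBanks` independent memories so that `kBanks` consecutive words can be fetched together. The class `BankedBuffer`, the constant `kBanks`, and the choice of eight banks are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical bank count, picked to match eight-way channel parallelism.
constexpr std::size_t kBanks = 8;

// Software model of a logical buffer sliced cyclically across kBanks memories.
// Word `addr` lives in bank (addr % kBanks) at row (addr / kBanks).
class BankedBuffer {
public:
    explicit BankedBuffer(std::size_t words)
        : banks_(kBanks,
                 std::vector<std::int16_t>((words + kBanks - 1) / kBanks, 0)) {}

    void write(std::size_t addr, std::int16_t value) {
        banks_[addr % kBanks].at(addr / kBanks) = value;
    }

    // Reads kBanks consecutive words starting at a bank-aligned address.
    // Every word comes from a different bank, so a hardware version could
    // serve the whole group in one cycle.
    std::array<std::int16_t, kBanks> read_group(std::size_t base) const {
        assert(base % kBanks == 0 && "group reads must be bank-aligned");
        std::array<std::int16_t, kBanks> out{};
        for (std::size_t b = 0; b < kBanks; ++b) {
            out[b] = banks_[b].at(base / kBanks);
        }
        return out;
    }

private:
    std::vector<std::vector<std::int16_t>> banks_;
};
```

In Vitis HLS a comparable layout is usually requested with the `ARRAY_PARTITION` pragma (cyclic partitioning) rather than written by hand; whether the paper uses that pragma is not stated in this section.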

2. Proposed Method

2.1. Parallel Acceleration of Computational Layers in CNN

2.1.1. Parallel Acceleration of Convolutional Layers

2.1.2. Parallel Acceleration of Pooling Layer and Fully Connected Layer

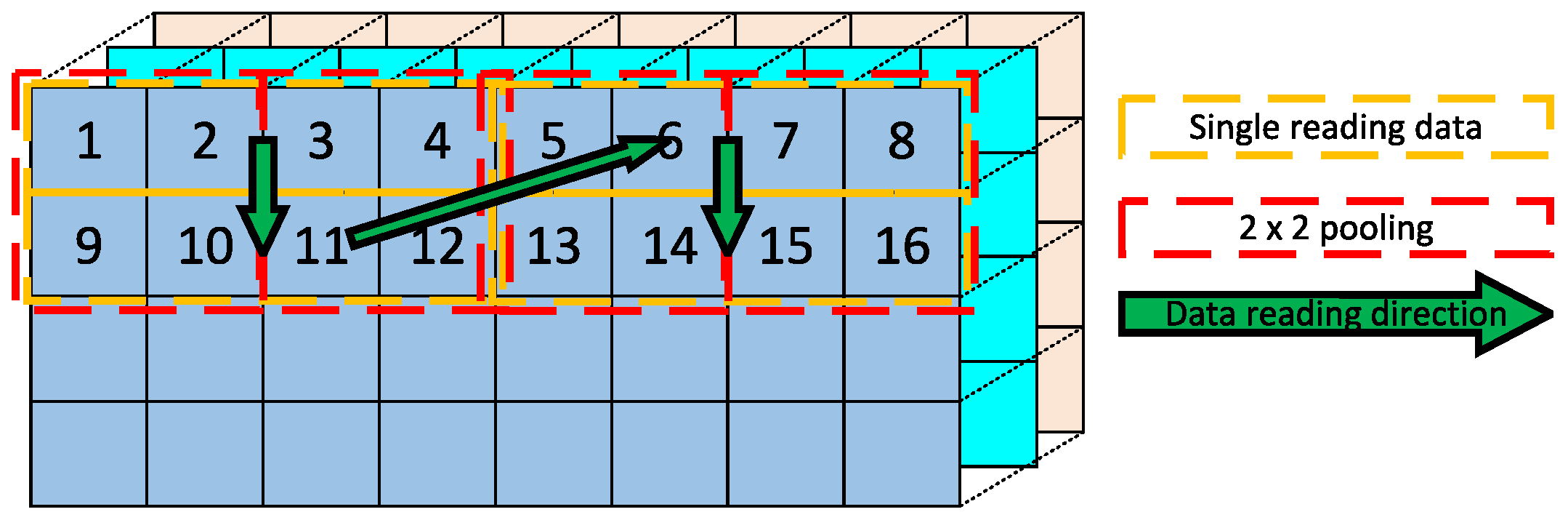

- The input data mapping is the same as for the convolutional layer: input channel parallelism is combined with pixel parallelism, so the pooling kernel accesses eight input channels and four pixels at the same time.

- Unlike the convolutional layer, which must iterate over the height and width of the convolution kernel at every pixel, the pooling layer visits each pixel only once. Therefore, after reading 4 pixels from one row, we move to the next row and read the 4 pixels below them. The two reads together cover a 2 × 4 pixel block; taking the per-channel maximum over each 2 × 2 window yields two output pixels. The specific pooling layer parallelism method is shown in Figure 5.
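The pooling step described in the list above can be sketched in plain C++. The sketch assumes 2 × 2 max pooling with stride 2 and 16-bit data; the names `Access`, `PoolOut`, and `pool_step` are hypothetical, and only the access shape (four pixels by eight channels, two row reads) comes from the text.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstdint>

constexpr int kPix = 4;  // pixels read per access (from the text)
constexpr int kCh = 8;   // input channels read per access (from the text)

// One access: kPix horizontally adjacent pixels, kCh channels each.
using Access = std::array<std::array<std::int16_t, kCh>, kPix>;
// Result of one pooling step: two output pixels, kCh channels each.
using PoolOut = std::array<std::array<std::int16_t, kCh>, kPix / 2>;

// Assumed 2 x 2 max pooling with stride 2 over a 2 x 4 pixel block.
// row0 and row1 are the two consecutive row reads.
PoolOut pool_step(const Access& row0, const Access& row1) {
    PoolOut out{};
    for (int p = 0; p < kPix / 2; ++p) {   // output pixel within the block
        for (int c = 0; c < kCh; ++c) {    // channel lane
            const std::int16_t top = std::max(row0[2 * p][c], row0[2 * p + 1][c]);
            const std::int16_t bottom = std::max(row1[2 * p][c], row1[2 * p + 1][c]);
            out[p][c] = std::max(top, bottom);
        }
    }
    return out;
}
```

Both loops have fixed, small trip counts, which is what allows a hardware version to evaluate all sixteen comparisons trees side by side.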

2.1.3. Data Remapping for the Convolutional Layer Computation Kernel

2.2. Computational Scheduling Kernel Pipeline Design

2.2.1. Convolutional Layer, Pooling, Fully Connected Layer Pipelining Methods

| Algorithm 1: Convolutional layer loop nesting algorithm | |
|---|---|
| for each do | loop level 1: traversal of the rows of the input tensor |
| for each do | loop level 2: traversal of the columns of the input tensor |
| for each do | loop level 3: traversal of the output channels |
| for each do | loop level 4: traversal of the convolution kernel tensor rows |
| for each do | loop level 5: traversal of the convolution kernel tensor columns |
| for each do | loop level 6: traversal of the convolution kernel input channels |
| #pragma HLS pipeline | |
| for each do | loop level 7: loops of pixel parallelism |
| for each do | loop level 8: loops of output channel parallelism |
| for each do | loop level 9: loops of input channel parallelism |
| end for | one "end for" per loop level, closing levels 9 down to 1 |
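The loop order of Algorithm 1 can be written out as ordinary C++ to make the nesting concrete. This is a sketch under assumptions that the section does not state: stride 1, zero padding of `K / 2`, 16-bit data with 32-bit accumulation, row-major layouts, and an output channel parallelism of 8 (`kTo`). The function name `conv_tiled` and all tile constants are hypothetical. A plain C++ compiler ignores the HLS pragma.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr int kTp = 4;  // pixel parallelism (four pixels per access, per the text)
constexpr int kTi = 8;  // input channel parallelism (eight channels, per the text)
constexpr int kTo = 8;  // output channel parallelism (assumed value)

// in: H x W x Cin, w: K x K x Cin x Cout, result: H x W x Cout (row-major).
// Assumes stride 1, zero padding of K / 2, and W, Cin, Cout divisible by the tiles.
std::vector<std::int32_t> conv_tiled(const std::vector<std::int16_t>& in,
                                     const std::vector<std::int16_t>& w,
                                     int H, int W, int Cin, int Cout, int K) {
    assert(W % kTp == 0 && Cin % kTi == 0 && Cout % kTo == 0);
    std::vector<std::int32_t> out(static_cast<std::size_t>(H) * W * Cout, 0);
    const int pad = K / 2;
    for (int r = 0; r < H; ++r)                        // level 1: input rows
      for (int c = 0; c < W; c += kTp)                 // level 2: input columns
        for (int co = 0; co < Cout; co += kTo)         // level 3: output channels
          for (int kr = 0; kr < K; ++kr)               // level 4: kernel rows
            for (int kc = 0; kc < K; ++kc)             // level 5: kernel columns
              for (int ci = 0; ci < Cin; ci += kTi) {  // level 6: input channels
#pragma HLS pipeline
                for (int p = 0; p < kTp; ++p)          // level 7: pixel lanes
                  for (int o = 0; o < kTo; ++o)        // level 8: output channel lanes
                    for (int i = 0; i < kTi; ++i) {    // level 9: input channel lanes
                      const int y = r + kr - pad;
                      const int x = c + p + kc - pad;
                      if (y < 0 || y >= H || x < 0 || x >= W) continue;  // zero padding
                      out[(static_cast<std::size_t>(r) * W + c + p) * Cout + co + o] +=
                          in[(static_cast<std::size_t>(y) * W + x) * Cin + ci + i] *
                          w[((static_cast<std::size_t>(kr) * K + kc) * Cin + ci + i) * Cout + co + o];
                    }
              }
    return out;
}
```

With the pragma on loop level 6, an HLS tool would unroll levels 7 to 9, so each pipeline iteration performs `kTp * kTo * kTi` multiply-accumulates.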

| Algorithm 2: Pooling layer loop nesting algorithm | |
|---|---|
| for each do | loop level 1: traversal of the input tensor row direction |
| for each do | loop level 2: traversal of the input tensor column direction |
| for each do | loop level 3: traversal of the output channels |
| for each do | loop level 4: traversal of the pooling range in the row direction |
| for each do | loop level 5: traversal of the pooling range in the column direction |
| #pragma HLS pipeline | |
| for each do | loop level 6: pixel parallelism |
| for each do | loop level 7: output channel parallelism |
| end for | one "end for" per loop level, closing levels 7 down to 1 |

| Algorithm 3: Fully connected layer loop nesting algorithm | |
|---|---|
| for each do | loop level 1: traversal of the input tensor channels |
| for each do | loop level 2: traversal of the output tensor channels |
| #pragma HLS pipeline | |
| for each do | loop level 3: pixel parallelism |
| for each do | loop level 4: input channel parallelism |
| end for | one "end for" per loop level, closing levels 4 down to 1 |
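Algorithm 3 can be sketched the same way. The code below is an illustration under assumptions: the four pixel lanes and eight channel lanes are treated as 32 consecutive input elements, weights are stored row-major as `Nin x Nout`, and data is 16-bit with 32-bit accumulation. The names `fc_tiled`, `kPixLanes`, and `kChLanes` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr int kPixLanes = 4;  // lanes reused from pixel parallelism (assumed mapping)
constexpr int kChLanes = 8;   // lanes from input channel parallelism

// x: Nin inputs, w: Nin x Nout weights (row-major), result: Nout outputs.
std::vector<std::int32_t> fc_tiled(const std::vector<std::int16_t>& x,
                                   const std::vector<std::int16_t>& w,
                                   int Nin, int Nout) {
    constexpr int lanes = kPixLanes * kChLanes;
    assert(Nin % lanes == 0);
    std::vector<std::int32_t> y(static_cast<std::size_t>(Nout), 0);
    for (int base = 0; base < Nin; base += lanes) {  // level 1: input channels
        for (int o = 0; o < Nout; ++o) {             // level 2: output channels
#pragma HLS pipeline
            std::int32_t acc = 0;
            for (int p = 0; p < kPixLanes; ++p) {    // level 3: pixel lanes
                for (int i = 0; i < kChLanes; ++i) { // level 4: input channel lanes
                    const int n = base + p * kChLanes + i;
                    acc += x[n] * w[static_cast<std::size_t>(n) * Nout + o];
                }
            }
            y[o] += acc;  // partial sum for this group of 32 inputs
        }
    }
    return y;
}
```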

2.2.2. On-Chip Storage Resource Bandwidth Expansion

2.2.3. Partial Loading and Partial Calculation of Data within Layers

2.3. Bus Access and DSP Resource Optimization Strategies

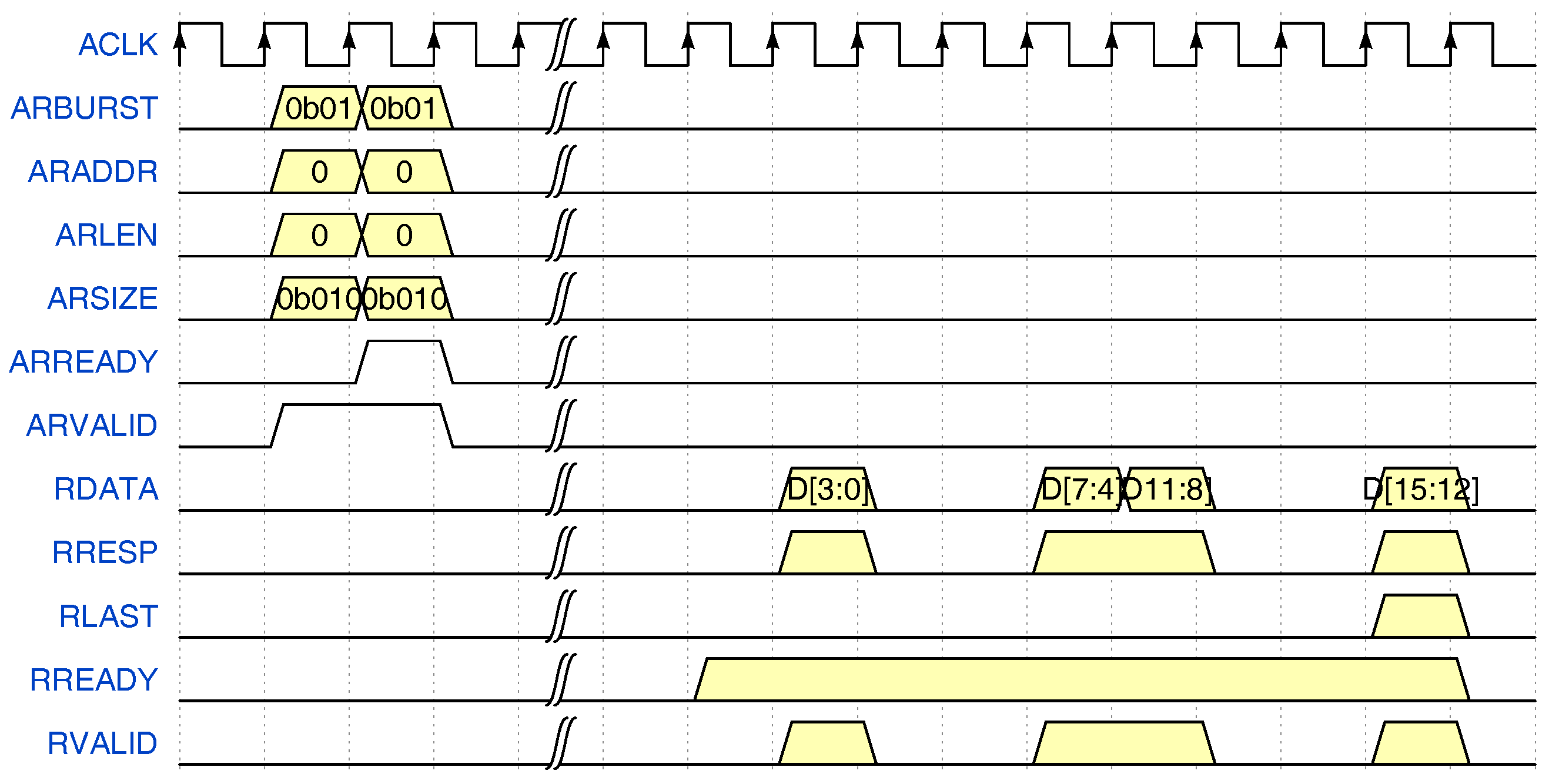

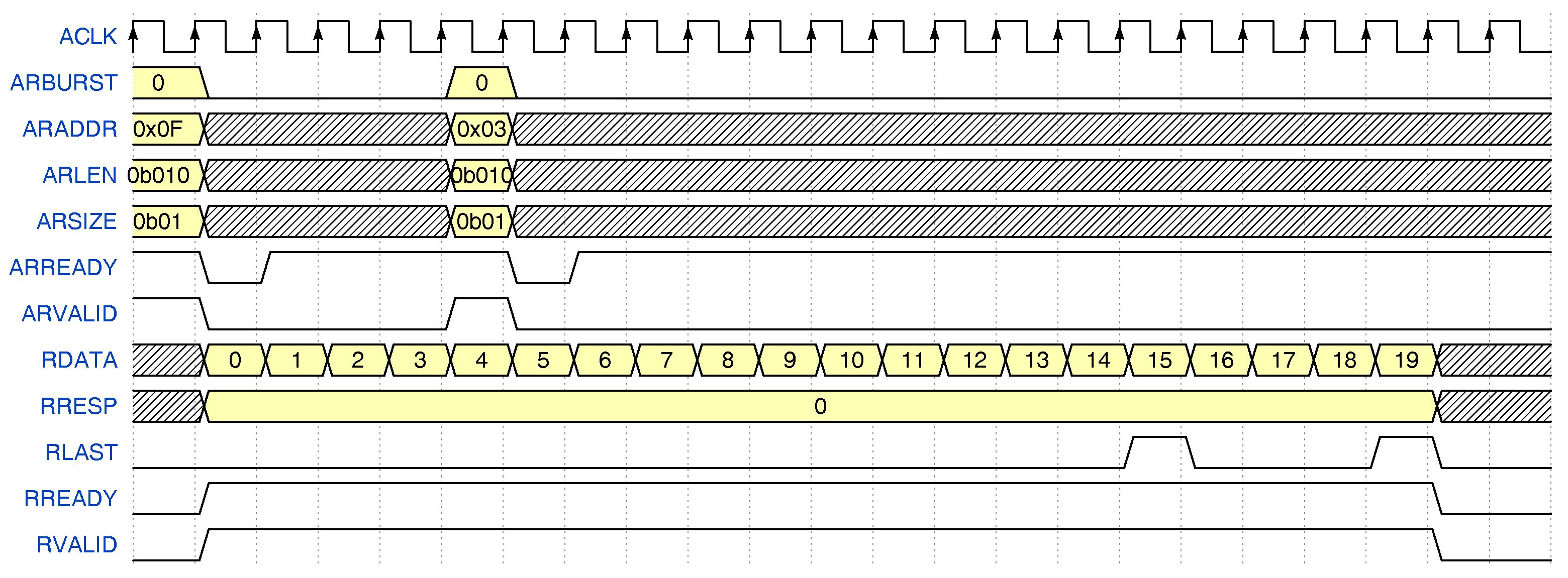

2.3.1. Bus Data Bandwidth Utilization Optimization

- Using burst mode to move more data per transaction and reduce the clock cycles spent on handshaking.

- Using outstanding mode to increase the number of read and write requests that the AXI master can issue before blocking.

- Increasing the pipeline depth to avoid stalls caused by AXI-MM read and write requests that have not yet returned.
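As an illustration of the first two points, the sketch below shows a common HLS coding pattern for burst reads: a pipelined loop over consecutive addresses that copies external memory into a local buffer. It is not taken from the paper. The function `load_burst`, the constant `kBurstWords`, the bundle name `gmem`, and the numeric values given for `max_read_burst_length` and `num_read_outstanding` are assumptions chosen for the example; a plain C++ compiler ignores the pragmas.

```cpp
#include <cassert>
#include <cstdint>

constexpr int kBurstWords = 64;  // hypothetical burst length in words

// Copies one burst of words from external memory into a local buffer.
// A pipelined loop over consecutive addresses is the usual way to let an
// HLS tool infer a single AXI burst instead of word-by-word transactions.
void load_burst(const std::int32_t* ddr, std::int32_t local[kBurstWords], int offset) {
#pragma HLS INTERFACE m_axi port=ddr offset=slave bundle=gmem max_read_burst_length=64 num_read_outstanding=16
    assert(offset >= 0);
    for (int i = 0; i < kBurstWords; ++i) {
#pragma HLS PIPELINE II=1
        local[i] = ddr[offset + i];
    }
}
```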

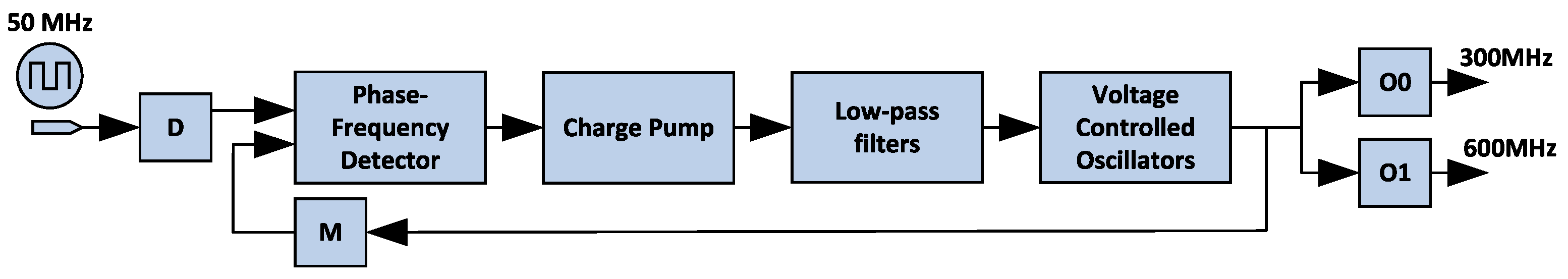

2.3.2. Cross-Clock Domain Design of DSP Calculation Matrix

3. Results and Discussion

- During network inference tasks, the proposed method is not interrupted by unordered scheduling instructions, which include parameter updates, recovery after video stream interruptions, and exception handling.

- The automatic optimization function of the synthesis tool is turned off because the proposed design has already been optimized manually; a second, automatic optimization pass would affect the results.

- When selecting methods from the literature for comparison, we prefer FPGA chips of the same architecture family, because chips of different architectures differ in internal structure, which would distort the evaluation of resource optimization.

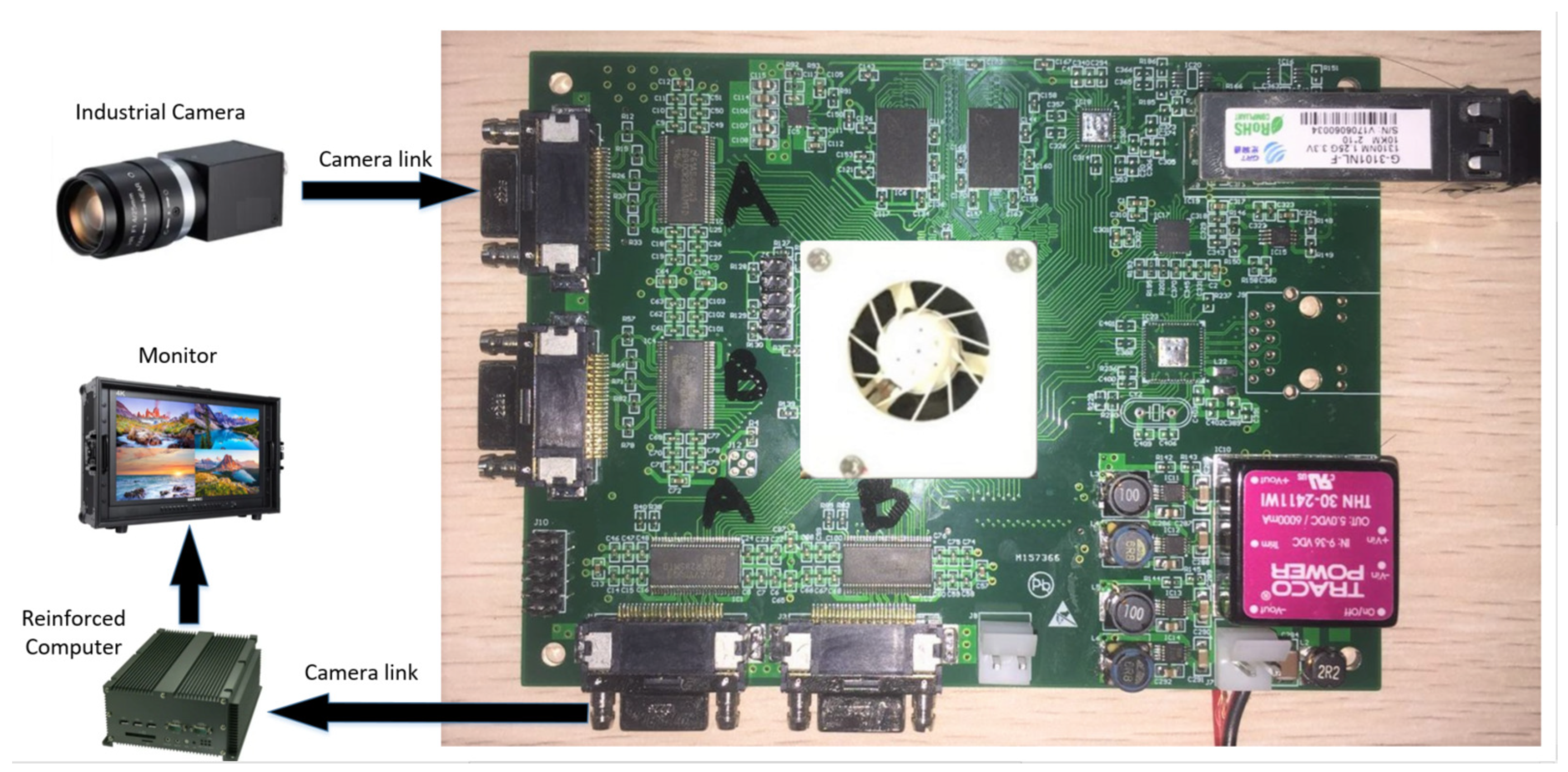

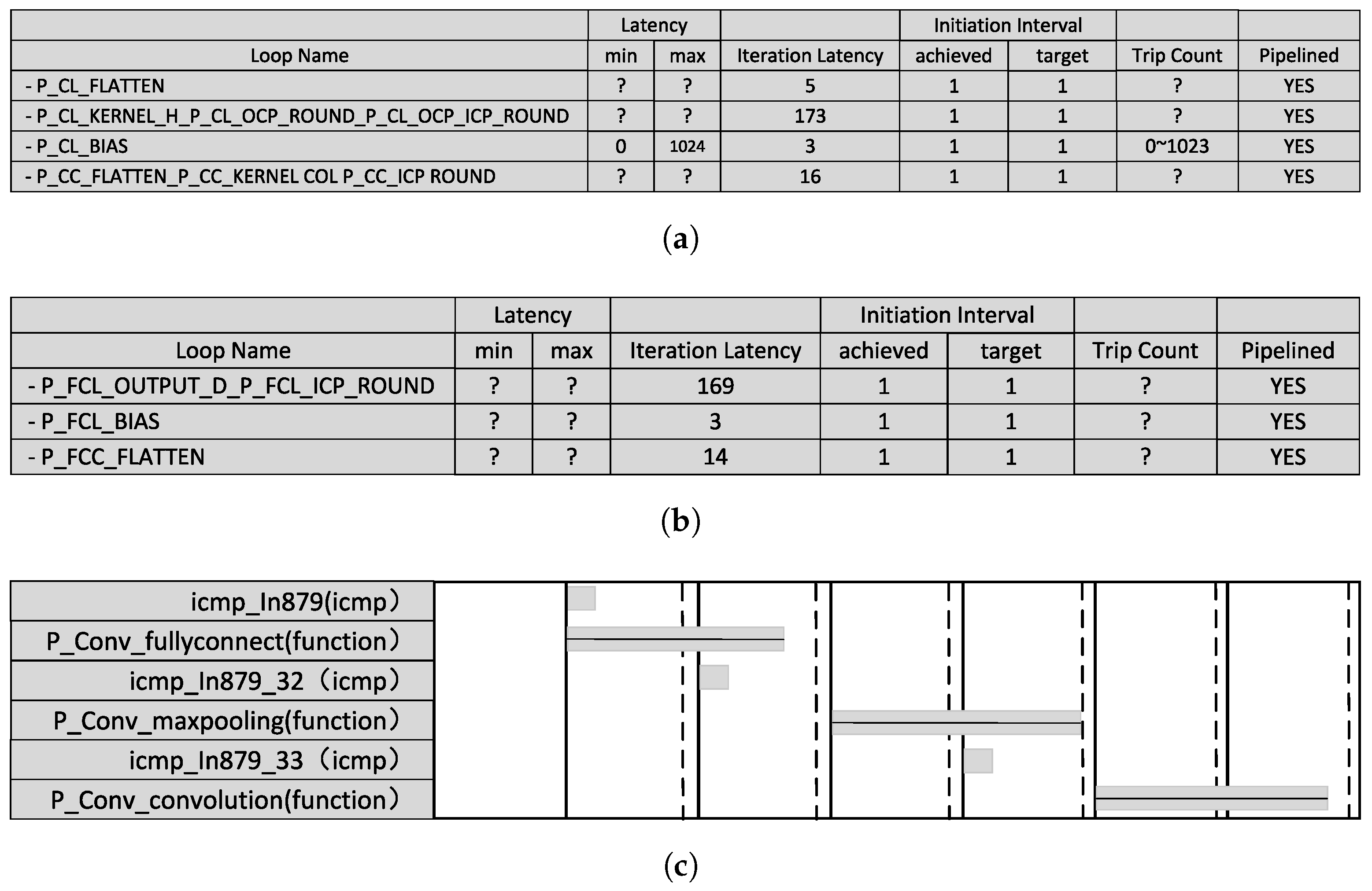

3.1. Computational Scheduling Kernel Implementation and Validation

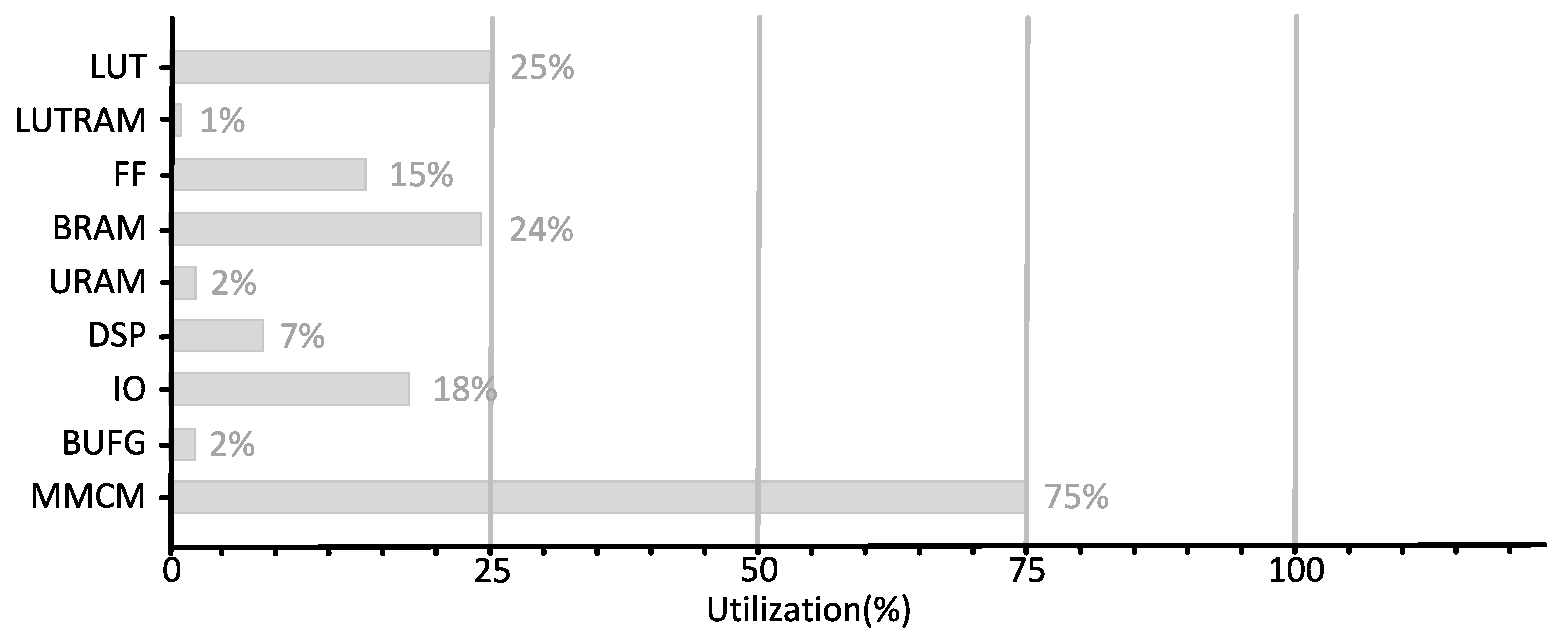

3.2. Deep Learning Acceleration Core Implementation and Verification

3.3. Performance Analysis of Deep Learning Acceleration Core Inference

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FPGA | Field-Programmable Gate Array |
| CNN | Convolutional Neural Networks |
| DSP | Digital Signal Processing |
| YOLO | You Only Look Once |
| Faster R-CNN | Faster Region-based Convolutional Neural Networks |
| SAR | Synthetic Aperture Radar |
| mAP | Mean Average Precision |
| AP | Average Precision |
| AR | Average Recall |
| UAV | Unmanned Aerial Vehicle |
| RES-YOLO | Residual YOLO |
| GPU | Graphics Processing Unit |
| DSPs | Digital Signal Processors |
| NPUs | Neural Processing Units |
| FPS | Frames Per Second |
| GOPS | Giga Operations Per Second |
| COCO | Microsoft Common Objects in Context |
| VOC | Visual Object Classes |
| RS | Row Stationary |
| VGG | Visual Geometry Group |
| HLS | High-Level Synthesis |
| AXI-MM | Advanced eXtensible Interface Memory-Mapped |
| BRAM | Block Random Access Memory |
| DRAM | Dynamic Random Access Memory |
| HDL | Hardware Description Language |
| MMCM | Mixed-Mode Clock Manager |
| PLL | Phase-Locked Loop |
| PR | Place and Route |
| BUFG | Global Buffer |
| FIFO | First In, First Out |
| DDR | Double Data Rate |
| HP | High Performance |
| PS | Processing System |
| KNN | k-Nearest Neighbors |
| RNN | Recurrent Neural Networks |
| GAN | Generative Adversarial Nets |
| MSRANet | Multi-scale Residual Aggregation Network |
| LSTM | Long Short-Term Memory |

References

1. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

2. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91.

3. Sun, B.; Wang, X.; Oad, A.; Pervez, A.; Dong, F. Automatic Ship Object Detection Model Based on YOLOv4 with Transformer Mechanism in Remote Sensing Images. Appl. Sci. 2023, 13, 2488.

4. Sun, Z.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. BiFA-YOLO: A novel YOLO-based method for arbitrary-oriented ship detection in high-resolution SAR images. Remote Sens. 2021, 13, 4209.

5. Hu, J.; Zhi, X.; Shi, T.; Zhang, W.; Cui, Y.; Zhao, S. PAG-YOLO: A portable attention-guided YOLO network for small ship detection. Remote Sens. 2021, 13, 3059.

6. Li, L.; Jiang, L.; Zhang, J.; Wang, S.; Chen, F. A complete YOLO-based ship detection method for thermal infrared remote sensing images under complex backgrounds. Remote Sens. 2022, 14, 1534.

7. Ye, J.; Yuan, Z.; Qian, C.; Li, X. CAA-YOLO: Combined-attention-augmented YOLO for infrared ocean ships detection. Sensors 2022, 22, 3782.

8. Lu, J.; Ma, C.; Li, L.; Xing, X.; Zhang, Y.; Wang, Z.; Xu, J. A vehicle detection method for aerial image based on YOLO. J. Comput. Commun. 2018, 6, 98–107.

9. Al-Batat, R.; Angelopoulou, A.; Premkumar, S.; Hemanth, J.; Kapetanios, E. An end-to-end automated license plate recognition system using YOLO based vehicle and license plate detection with vehicle classification. Sensors 2022, 22, 9477.

10. Zhang, Y.; Guo, Z.; Wu, J.; Tian, Y.; Tang, H.; Guo, X. Real-Time Vehicle Detection Based on Improved YOLO v5. Sustainability 2022, 14, 12274.

11. Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small object detection on unmanned aerial vehicle perspective. Sensors 2020, 20, 2238.

12. Li, Y.; Wang, J.; Huang, J.; Li, Y. Research on Deep Learning Automatic Vehicle Recognition Algorithm Based on RES-YOLO Model. Sensors 2022, 22, 3783.

13. Chen, L.; Weng, T.; Xing, J.; Pan, Z.; Yuan, Z.; Xing, X.; Zhang, P. A new deep learning network for automatic bridge detection from SAR images based on balanced and attention mechanism. Remote Sens. 2020, 12, 441.

14. Li, X.; Meng, Q.; Wei, M.; Sun, H.; Zhang, T.; Su, R. Identification of Underwater Structural Bridge Damage and BIM-Based Bridge Damage Management. Appl. Sci. 2023, 13, 1348.

15. Du, F.; Jiao, S.; Chu, K. Application research of bridge damage detection based on the improved lightweight convolutional neural network model. Appl. Sci. 2022, 12, 6225.

16. Lin, Y.C.; Chen, W.D. Automatic aircraft detection in very-high-resolution satellite imagery using a YOLOv3-based process. J. Appl. Remote Sens. 2021, 15, 018502.

17. Madasamy, K.; Shanmuganathan, V.; Kandasamy, V.; Lee, M.Y.; Thangadurai, M. OSDDY: Embedded system-based object surveillance detection system with small drone using deep YOLO. EURASIP J. Image Video Process. 2021, 2021, 1–14.

18. Jiang, C.; Ren, H.; Ye, X.; Zhu, J.; Zeng, H.; Nan, Y.; Sun, M.; Ren, X.; Huo, H. Object detection from UAV thermal infrared images and videos using YOLO models. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102912.

19. Artamonov, N.; Yakimov, P. Towards real-time traffic sign recognition via YOLO on a mobile GPU. J. Phys. Conf. Ser. 2018, 1096, 012086.

20. Güney, E.; Bayilmiş, C.; Cakan, B. An implementation of real-time traffic signs and road objects detection based on mobile GPU platforms. IEEE Access 2022, 10, 86191–86203.

21. Feng, W.; Zhu, Y.; Zheng, J.; Wang, H. Embedded YOLO: A real-time object detector for small intelligent trajectory cars. Math. Probl. Eng. 2021, 2021, 6555513.

22. Zhang, S.; Cao, J.; Zhang, Q.; Zhang, Q.; Zhang, Y.; Wang, Y. An FPGA-based reconfigurable CNN accelerator for YOLO. In Proceedings of the 2020 IEEE 3rd International Conference on Electronics Technology (ICET), Chengdu, China, 8–11 May 2020; pp. 74–78.

23. Babu, P.; Parthasarathy, E. Hardware acceleration for object detection using YOLOv4 algorithm on Xilinx Zynq platform. J. Real-Time Image Process. 2022, 19, 931–940.

24. Xiong, Q.; Liao, C.; Yang, Z.; Gao, W. A Method for Accelerating YOLO by Hybrid Computing Based on ARM and FPGA. In Proceedings of the 2021 4th International Conference on Algorithms, Computing and Artificial Intelligence, Sanya, China, 22–24 December 2021; pp. 1–7.

25. Chen, Y.H.; Emer, J.; Sze, V. Eyeriss: A spatial architecture for energy-efficient dataflow for convolutional neural networks. ACM SIGARCH Comput. Archit. News 2016, 44, 367–379.

26. Liu, Z.; Dou, Y.; Jiang, J.; Xu, J.; Li, S.; Zhou, Y.; Xu, Y. Throughput-optimized FPGA accelerator for deep convolutional neural networks. ACM Trans. Reconfigurable Technol. Syst. 2017, 10, 1–23.

27. Peemen, M.; Setio, A.A.; Mesman, B.; Corporaal, H. Memory-centric accelerator design for convolutional neural networks. In Proceedings of the 2013 IEEE 31st International Conference on Computer Design (ICCD), Asheville, NC, USA, 6–9 October 2013; pp. 13–19.

28. Zhang, C.; Sun, G.; Fang, Z.; Zhou, P.; Pan, P.; Cong, J. Caffeine: Toward uniformed representation and acceleration for deep convolutional neural networks. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2018, 38, 2072–2085.

29. Shen, Y.; Ferdman, M.; Milder, P. Maximizing CNN accelerator efficiency through resource partitioning. ACM SIGARCH Comput. Archit. News 2017, 45, 535–547.

30. Peng, H.; Chen, S.; Wang, Z.; Yang, J.; Weitze, S.A.; Geng, T.; Li, A.; Bi, J.; Song, M.; Jiang, W.; et al. Optimizing FPGA-based accelerator design for large-scale molecular similarity search (special session paper). In Proceedings of the 2021 IEEE/ACM International Conference On Computer Aided Design (ICCAD), Munich, Germany, 1–4 November 2021; pp. 1–7.

31. Azari, E.; Vrudhula, S. ELSA: A throughput-optimized design of an LSTM accelerator for energy-constrained devices. ACM Trans. Embed. Comput. Syst. 2020, 19, 1–21.

32. Gong, H.J. Research and Implementation of FPGA-Based Acceleration Method for Convolutional Neural Networks. Master’s Thesis, University of Chinese Academy of Sciences, National Space Science Center, Chinese Academy of Sciences, Beijing, China, 2021.

33. Guo, K.; Sui, L.; Qiu, J.; Yu, J.; Wang, J.; Yao, S.; Han, S.; Wang, Y.; Yang, H. Angel-eye: A complete design flow for mapping CNN onto embedded FPGA. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2017, 37, 35–47.

34. Liu, S.; Fan, H.; Niu, X.; Ng, H.C.; Chu, Y.; Luk, W. Optimizing CNN-based segmentation with deeply customized convolutional and deconvolutional architectures on FPGA. ACM Trans. Reconfigurable Technol. Syst. 2018, 11, 1–22.

35. Venieris, S.I.; Bouganis, C.S. fpgaConvNet: Mapping regular and irregular convolutional neural networks on FPGAs. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 326–342.

| Layer | Input Tensor / Output Tensor | Partially Loaded Input Tensor / Output Tensor | Data Loading Area Depth Occupied | Data Storage Area Depth Occupied | Parameter Storage Area Depth Occupied |
|---|---|---|---|---|---|
| CONV1 | 448 × 448 × 3 / 448 × 448 × 16 | 56 × 56 × 3 / 56 × 56 × 16 | 784 | 6272 | 18 |
| POOL2 | 448 × 448 × 16 / 224 × 224 × 16 | 56 × 56 × 16 / 28 × 28 × 16 | 1568 | 1568 | 0 |
| CONV3 | 224 × 224 × 16 / 224 × 224 × 32 | 28 × 28 × 32 / 28 × 28 × 32 | 784 | 3136 | 72 |
| POOL4 | 224 × 224 × 32 / 112 × 112 × 32 | 28 × 28 × 32 / 14 × 14 × 32 | 784 | 784 | 0 |
| CONV5 | 112 × 112 × 32 / 112 × 112 × 64 | 28 × 28 × 32 / 28 × 28 × 64 | 784 | 6272 | 288 |
| POOL6 | 112 × 112 × 64 / 56 × 56 × 64 | 28 × 28 × 64 / 14 × 14 × 64 | 1568 | 1568 | 0 |
| CONV7 | 56 × 56 × 64 / 56 × 56 × 128 | 14 × 14 × 64 / 14 × 14 × 128 | 392 | 3136 | 1152 |
| POOL8 | 56 × 56 × 128 / 28 × 28 × 128 | 14 × 14 × 128 / 7 × 7 × 128 | 784 | 784 | 0 |
| CONV9 | 28 × 28 × 128 / 28 × 28 × 256 | 14 × 14 × 128 / 14 × 14 × 64 | 784 | 1568 | 1152 |
| POOL10 | 28 × 28 × 256 / 14 × 14 × 256 | 14 × 14 × 256 / 7 × 7 × 256 | 1568 | 1568 | 0 |
| CONV11 | 14 × 14 × 256 / 14 × 14 × 512 | 14 × 14 × 256 / 14 × 14 × 32 | 1568 | 784 | 1152 |
| POOL12 | 14 × 14 × 512 / 7 × 7 × 512 | 2 × 2 × 512 / 1 × 1 × 512 | 128 | 64 | 0 |
| CONV13 | 7 × 7 × 512 / 7 × 7 × 1024 | 7 × 7 × 512 / 7 × 7 × 16 | 896 | 98 | 1152 |
| CONV14 | 7 × 7 × 1024 / 7 × 7 × 1024 | 7 × 7 × 1024 / 7 × 7 × 8 | 1792 | 49 | 1152 |
| CONV15 | 7 × 7 × 1024 / 7 × 7 × 1024 | 7 × 7 × 1024 / 7 × 7 × 8 | 1792 | 49 | 1152 |
| FC17 | 1 × 1 × 50,176 / 1 × 1 × 256 | 1 × 1 × 50,176 / 1 × 1 × 2 | 1568 | 1 | 1568 |
| FC18 | 1 × 1 × 256 / 1 × 1 × 4096 | 1 × 1 × 256 / 1 × 1 × 512 | 8 | 64 | 2048 |
| FC19 | 1 × 1 × 4096 / 1 × 1 × 1470 | 1 × 1 × 4096 / 1 × 1 × 32 | 128 | 4 | 2048 |

| | LUT | FF | DSP | BRAM |
|---|---|---|---|---|
| Computational scheduling kernel as a whole | 47739 | 33316 | 54 | 69.5 |
| Convolutional computing pipeline | 36847 | 25028 | 41 | 0 |
| Pooling computing pipeline | 2400 | 1864 | 9 | 0 |
| Fully connected computing pipeline | 7169 | 4756 | 4 | 0 |
| ZU15EG on-chip resources | 341280 | 682560 | 3528 | 744 |
| Computational scheduling kernel occupancy | 13.9% | 4.8% | 1.5% | 9.3% |

| | LUT | FF | DSP | BRAM |
|---|---|---|---|---|
| Computational scheduling kernel | 47739 | 33316 | 54 | 69.5 |
| Data storage kernel | 5563 | 5868 | 21 | 8 |
| Data loading kernel | 2619 | 3428 | 6 | 7.5 |
| Internal storage | 732 | 100 | 8 | 0 |
| DSP calculation matrix | 4994 | 31139 | 128 | 0 |
| Acceleration core overall | 61634 | 73761 | 209 | 165 |
| Overall occupancy | 18.0% | 10.8% | 5.9% | 22.1% |

| Layer | Input Tensor | Output Tensor | Number of Parameters | Data Volume | Calculated Volume |
|---|---|---|---|---|---|
| Input0 | | 448 × 448 × 3 | 0 | 1605632 | 0 |
| CONV1 | 448 × 448 × 3 | 448 × 448 × 16 | 1152 | 3211264 | 231211008 |
| POOL2 | 448 × 448 × 16 | 224 × 224 × 16 | 0 | 802816 | 3211264 |
| CONV3 | 224 × 224 × 16 | 224 × 224 × 32 | 4608 | 1605632 | 231211008 |
| POOL4 | 224 × 224 × 32 | 112 × 112 × 32 | 0 | 401408 | 1605632 |
| CONV5 | 112 × 112 × 32 | 112 × 112 × 64 | 18432 | 802816 | 231211008 |
| POOL6 | 112 × 112 × 64 | 56 × 56 × 64 | 0 | 200704 | 802816 |
| CONV7 | 56 × 56 × 64 | 56 × 56 × 128 | 73728 | 401408 | 231211008 |
| POOL8 | 56 × 56 × 128 | 28 × 28 × 128 | 0 | 100352 | 401408 |
| CONV9 | 28 × 28 × 128 | 28 × 28 × 256 | 294912 | 200704 | 231211008 |
| POOL10 | 28 × 28 × 256 | 14 × 14 × 256 | 0 | 50176 | 200704 |
| CONV11 | 14 × 14 × 256 | 14 × 14 × 512 | 1179648 | 100352 | 231211008 |
| POOL12 | 14 × 14 × 512 | 7 × 7 × 512 | 0 | 25088 | 100352 |
| CONV13 | 7 × 7 × 512 | 7 × 7 × 1024 | 4718592 | 50176 | 231211008 |
| CONV14 | 7 × 7 × 1024 | 7 × 7 × 1024 | 9437184 | 50176 | 462422016 |
| CONV15 | 7 × 7 × 1024 | 7 × 7 × 1024 | 9437184 | 50176 | 462422016 |
| FLAT16 | 7 × 7 × 1024 | 1 × 1 × 50,176 | 0 | 50176 | 0 |
| FC17 | 1 × 1 × 50,176 | 1 × 1 × 256 | 12845056 | 256 | 12845056 |
| FC18 | 1 × 1 × 256 | 1 × 1 × 4096 | 1048576 | 4096 | 1048576 |
| FC19 | 1 × 1 × 4096 | 1 × 1 × 1470 | 6021120 | 1470 | 6021120 |
| Total | | | 45080192 | 9664702 | 2569558016 |
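The parameter and calculated-volume columns for the convolutional layers can be reproduced with a short calculation. The sketch assumes 3 × 3 kernels, stride 1 with same-size output, one multiply-accumulate counted per weight per output pixel, and input channels rounded up to a multiple of 8; these assumptions are inferred from the listed numbers (for example, CONV1 lists 1152 parameters, which is 3 × 3 × 8 × 16) and are not stated in this section. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Rounds a channel count up to a multiple of 8 (assumed lane width).
inline std::int64_t pad8(std::int64_t c) {
    assert(c > 0);
    return (c + 7) / 8 * 8;
}

// Weights of a K x K convolution with input channels padded to a multiple of 8.
inline std::int64_t conv_params(int K, int Cin, int Cout) {
    return static_cast<std::int64_t>(K) * K * pad8(Cin) * Cout;
}

// Multiply-accumulates for an H x W output map (stride 1, same padding assumed).
inline std::int64_t conv_macs(int H, int W, int K, int Cin, int Cout) {
    return static_cast<std::int64_t>(H) * W * conv_params(K, Cin, Cout);
}
```

Because each pooling stage quarters the pixel count while the channel product quadruples, the count stays at 231,211,008 for CONV1 through CONV13 under these assumptions.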

| Layer | Data Loading Time | Data Storage Time | Parameter Loading Time | Calculation Time | Actual Time |
|---|---|---|---|---|---|
| CONV1 | 0.67 ms | 1.33 ms | 0.00 ms | 3.01 ms | 3.01 ms |
| CONV3 | 0.33 ms | 0.67 ms | 0.00 ms | 3.01 ms | 3.01 ms |
| CONV5 | 0.16 ms | 0.33 ms | 0.00 ms | 3.01 ms | 3.01 ms |
| CONV7 | 0.08 ms | 0.16 ms | 0.03 ms | 3.01 ms | 3.04 ms |
| CONV9 | 0.04 ms | 0.08 ms | 0.12 ms | 3.01 ms | 3.13 ms |
| CONV11 | 0.02 ms | 0.04 ms | 0.49 ms | 3.01 ms | 3.50 ms |
| CONV13 | 0.01 ms | 0.02 ms | 1.96 ms | 3.01 ms | 4.97 ms |
| CONV14 | 0.02 ms | 0.02 ms | 3.93 ms | 6.02 ms | 9.95 ms |
| CONV15 | 0.02 ms | 0.02 ms | 3.93 ms | 6.02 ms | 9.95 ms |
| Total | 1.35 ms | 2.67 ms | 10.46 ms | 33.11 ms | 43.57 ms |

| Layer | Data Loading Time | Data Storage Time | Parameter Loading Time | Calculation Time | Actual Time |
|---|---|---|---|---|---|
| FC17 | 0.02 ms | 0.00 ms | 5.35 ms | 1.33 ms | 6.68 ms |
| FC18 | 0.00 ms | 0.00 ms | 0.44 ms | 0.11 ms | 0.55 ms |
| FC19 | 0.00 ms | 0.00 ms | 2.50 ms | 0.63 ms | 3.13 ms |
| Total | 0.02 ms | 0.00 ms | 8.29 ms | 2.07 ms | 10.36 ms |
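The calculation times in the two timing tables are consistent with a simple throughput model. The sketch below assumes 256 multiply-accumulate lanes for convolution and 32 for the fully connected layers at the 300 MHz clock listed for this design; the lane counts are inferred from the listed times and are not stated in this section. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Ideal compute time in milliseconds for `macs` multiply-accumulates
// on `lanes` parallel MAC units clocked at `mhz` megahertz.
inline double compute_ms(double macs, double lanes, double mhz) {
    assert(lanes > 0.0 && mhz > 0.0);
    return macs / (lanes * mhz * 1e6) * 1e3;
}

// True when a and b differ by at most tol.
inline bool close_to(double a, double b, double tol) {
    return std::fabs(a - b) <= tol;
}
```

Under these assumptions, 256 lanes at 300 MHz with two operations per multiply-accumulate give 153.6 GOPS, the performance figure listed for this design in the comparison table.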

| Method | Ref. [30] | Ref. [31] | Ref. [29] | Ref. [32] | Ref. [33] | Ref. [34] | Ref. [35] | This Paper |
|---|---|---|---|---|---|---|---|---|
| Hardware platform | VC707 | VC707 | Stratix-V GSD8 | XC7Z020 | XC7Z045 | XC7Z045 | XC7Z045 | ZU15EG |
| Clock frequency | 100 MHz | 100 MHz | 120 MHz | 214 MHz | 225 MHz | 200 MHz | 125 MHz | 300 MHz |
| DSP count | 2800 | 2800 | 1963 | 220 | 576 | 900 | 900 | 209 |
| Performance (GOPS) | 85.2 | 61.62 | 72.4 | 84.3 | 45.13 | 107 | 124 | 153.60 |
| DSP efficiency (GOPS/DSP) | 0.030 | 0.022 | 0.037 | 0.38 | 0.078 | 0.12 | 0.14 | 0.734 |
| Power | 7.6 W | 6.6 W | 10.4 W | 3.5 W | 5.7 W | 9.6 W | 4.8 W | 5.3 W |
| Energy efficiency (GOPS/W) | 11.2 | 9.336 | 6.96 | 24.08 | 7.91 | 11.14 | 25.8 | 28.98 |
| Model | KNN | LSTM | CNN | CNN | VGG16 | U-Net | VGG | TinyYOLO |
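The two derived rows of the comparison table are plain ratios of the rows above them. As a small check, assuming DSP efficiency means performance divided by DSP count and energy efficiency means performance divided by power (the function names are hypothetical):

```cpp
#include <cassert>
#include <cmath>

// Performance per DSP slice, in GOPS per DSP.
inline double dsp_efficiency(double gops, int dsps) {
    assert(dsps > 0);
    return gops / dsps;
}

// Performance per watt, in GOPS/W.
inline double energy_efficiency(double gops, double watts) {
    assert(watts > 0.0);
    return gops / watts;
}
```

For this design, 153.60 GOPS over 209 DSPs gives about 0.735 GOPS per DSP, and 153.60 GOPS over 5.3 W gives about 28.98 GOPS/W; several listed values look truncated rather than rounded.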

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, X.; Zhuang, C.; Feng, W.; Yang, Z.; Wang, Q. FPGA Implementation of a Deep Learning Acceleration Core Architecture for Image Target Detection. Appl. Sci. 2023, 13, 4144. https://doi.org/10.3390/app13074144
