Fine-Tuning BERT-Based Pre-Trained Models for Arabic Dependency Parsing

Abstract

1. Introduction

- Examining the different aspects of fine-tuning BERT-based models for syntactic dependency analysis of the Arabic language.

- Identifying the models and methods that provide the best results and highlighting their weaknesses.

2. Background

2.1. Arabic Language

2.2. Sequence Labeling for Dependency Parsing

2.3. BERT Pre-Trained Language Models

3. Related Work

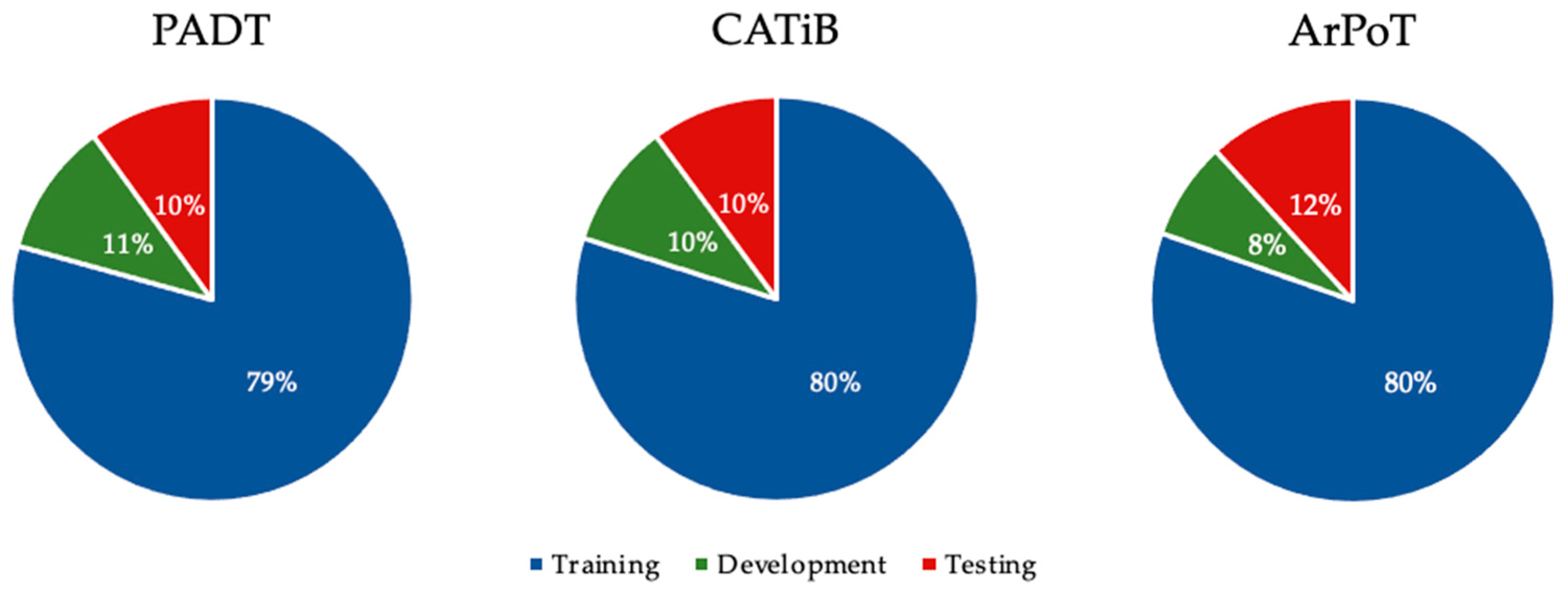

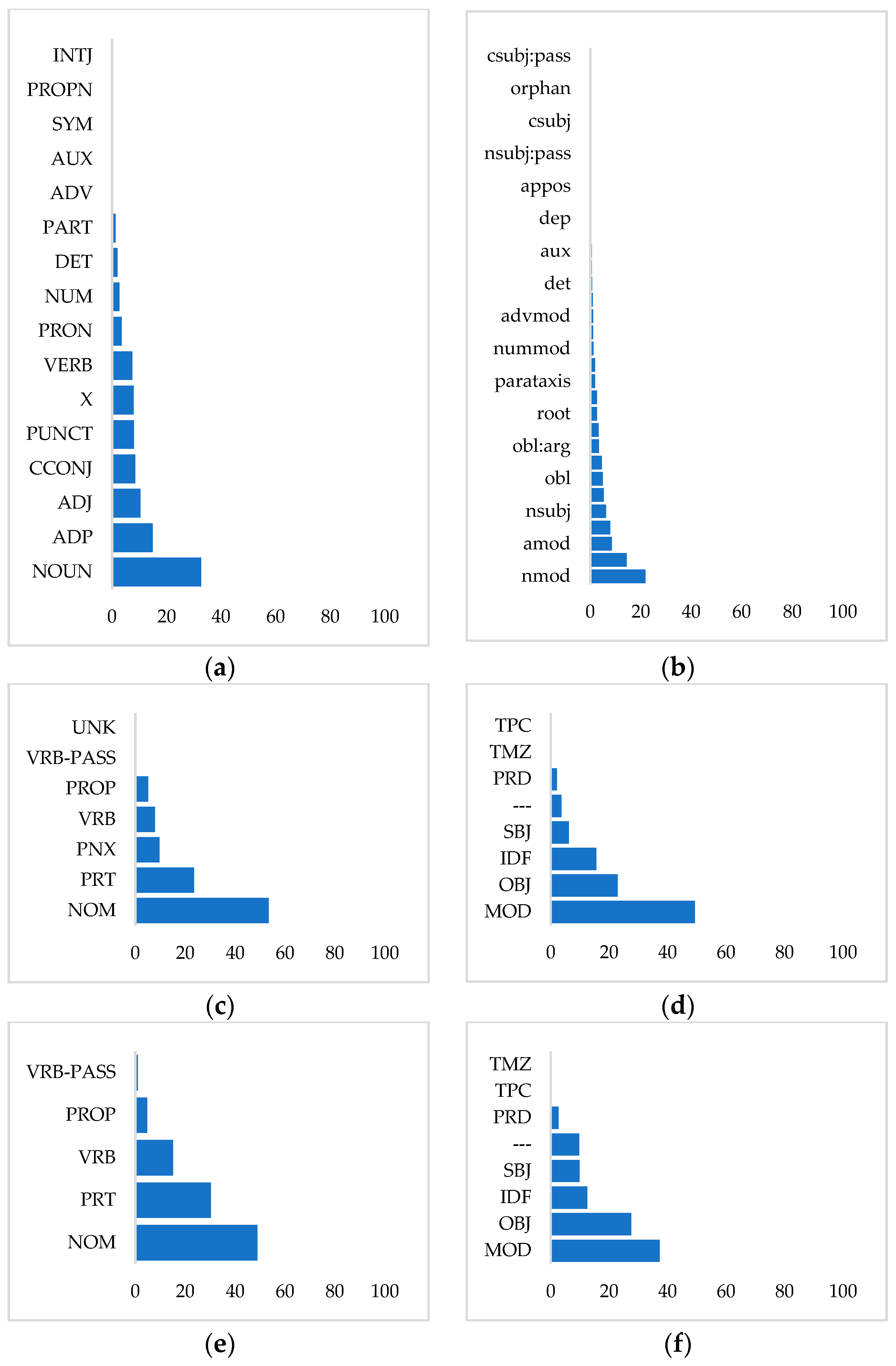

4. Corpora

Corpora Statistics

- Sentences: the number of sentences in the corpus.

- Max_L: the maximum sentence length in the corpus, measured in tokens.

- Avg_L: the average sentence length, i.e., the total number of words (tokens) in the corpus divided by the number of sentences.

- Max_DL: the maximum dependency length (distance between dependents and heads).

- Avg_DL: the average dependency length.

- TTR: the type–token ratio (TTR), which is the total number of unique words (types) divided by the total number of words (tokens) in a corpus.
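The statistics defined above can be computed directly from a treebank. The following is a minimal sketch, assuming each sentence is given as a list of (form, head) pairs with 1-based head indices and 0 for the root; the function name `corpus_statistics`, the toy data, and the decision to skip root arcs when measuring dependency length are illustrative assumptions, not details taken from the treebanks.

```python
from typing import Dict, List, Tuple

# A sentence is a list of (form, head) pairs; heads are 1-based token ids, 0 marks the root.
Sentence = List[Tuple[str, int]]


def corpus_statistics(corpus: List[Sentence]) -> Dict[str, float]:
    """Compute the corpus statistics listed above for a small in-memory treebank."""
    lengths = [len(sentence) for sentence in corpus]
    # Dependency length: distance between a dependent and its head.
    # Root arcs (head == 0) are skipped here, which is an assumption.
    dep_lengths = [
        abs(index - head)
        for sentence in corpus
        for index, (_, head) in enumerate(sentence, start=1)
        if head != 0
    ]
    tokens = [form for sentence in corpus for form, _ in sentence]
    return {
        "Sentences": len(corpus),
        "Max_L": max(lengths),
        "Avg_L": sum(lengths) / len(corpus),
        "Max_DL": max(dep_lengths),
        "Avg_DL": sum(dep_lengths) / len(dep_lengths),
        "TTR": len(set(tokens)) / len(tokens),
    }


# Toy corpus (hypothetical data, used only to exercise the function).
toy = [
    [("the", 2), ("cat", 3), ("sleeps", 0)],
    [("the", 2), ("dog", 3), ("sees", 0), ("the", 5), ("cat", 3)],
]
stats = corpus_statistics(toy)
print(stats)
```

Here TTR is returned as a fraction between 0 and 1; multiply by 100 if a percentage is preferred.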

5. Methodology

- The most effective head position encoding method for each treebank is identified in Section 6.1.

- The most accurate pre-trained model for the syntactic parsing task on each corpus was identified by fine-tuning all Arabic pre-trained models under study, using the head position encoding method that scored highest in the first experiment. In this process, the proportion of out-of-vocabulary (OOV) words and the average number of subword pieces per word (P/W) produced by each tokenizer were examined (see Section 6.2).

- A comparison of fine-tuning techniques is presented in Section 6.3. Based on the results of the preceding experiments, we used the best encoding method and pre-trained model for each treebank.
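The two tokenizer measures mentioned above, the OOV rate and P/W, can be illustrated with a small sketch. It assumes a greedy longest-match-first segmentation in the style of WordPiece and counts a word as OOV when no segmentation is possible and the tokenizer falls back to an unknown token; the toy vocabulary, the word list, and the function names are hypothetical, and the exact OOV definition used in the experiments may differ.

```python
from typing import Dict, List, Set

UNK = "[UNK]"


def wordpiece(word: str, vocab: Set[str]) -> List[str]:
    """Greedy longest-match-first segmentation in the style of WordPiece."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [UNK]  # no piece matches: the whole word becomes unknown
        pieces.append(piece)
        start = end
    return pieces


def tokenizer_statistics(words: List[str], vocab: Set[str]) -> Dict[str, float]:
    """Return the OOV percentage and the average number of pieces per word."""
    segmented = [wordpiece(word, vocab) for word in words]
    oov = sum(1 for pieces in segmented if pieces == [UNK])
    total_pieces = sum(len(pieces) for pieces in segmented)
    return {"OOV%": 100 * oov / len(words), "P/W": total_pieces / len(words)}


# Hypothetical vocabulary and words in Buckwalter-like transliteration.
vocab = {"ktb", "##A", "Al", "##ktAb", "w"}
words = ["ktb", "ktbA", "AlktAb", "w", "xyz"]
tok_stats = tokenizer_statistics(words, vocab)
print(tok_stats)
```

A lower P/W means the tokenizer keeps more words intact, which matters for sequence labeling because each word ultimately receives a single label.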

6. Evaluation and Discussion

6.1. Choosing the Best Encoding Method

6.2. Choosing the Best BERT Model

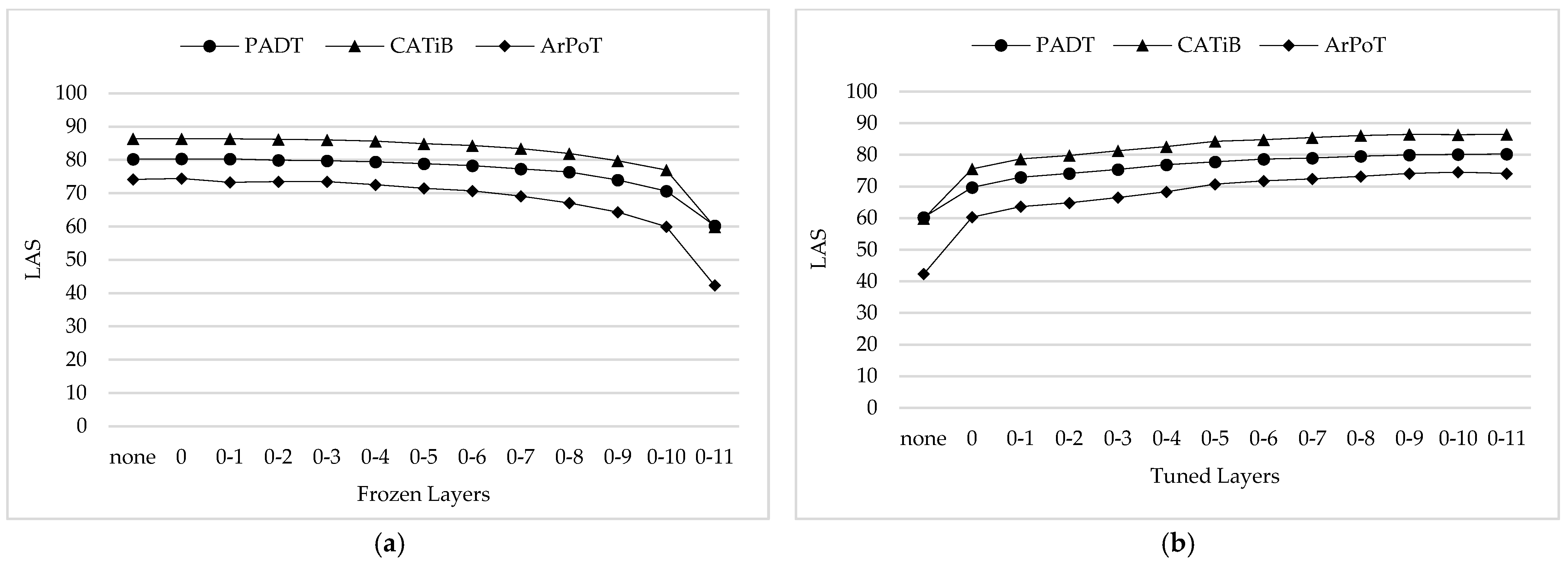

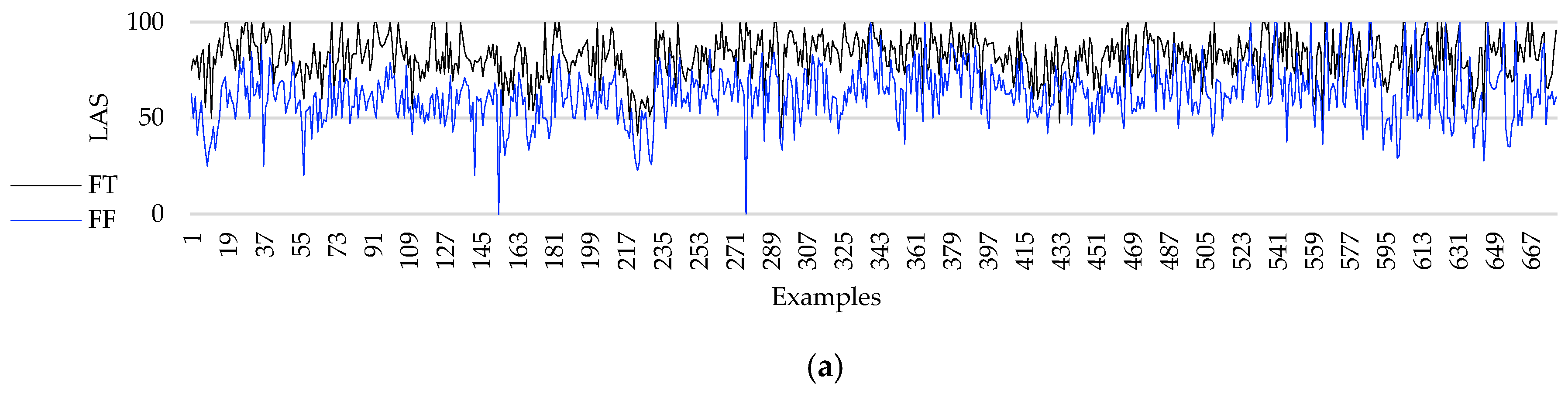

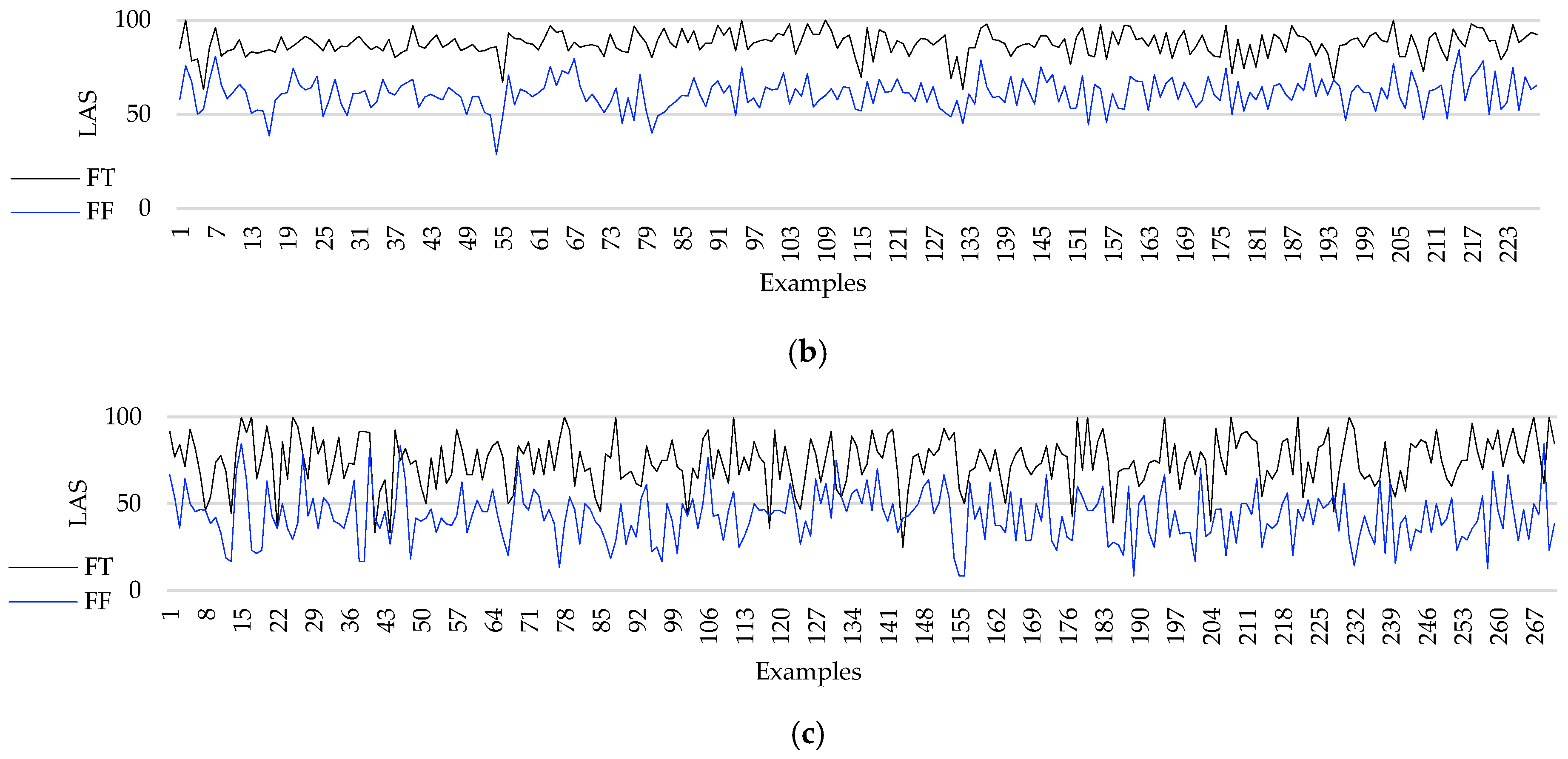

6.3. Evaluation of the Tuning Techniques

- Full Tuning (FT): Further train the entire architecture of the pre-trained model on the experimental datasets.

- Full Freezing (FF): All layers of the pre-trained model are frozen, and the model is then evaluated on the datasets under study. In this case, the weights of the 12 layers are not updated.

- Partial Freezing (PF): The weights of some layers of the pre-trained model are kept frozen, while other layers are retrained.
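The three techniques differ only in which parameters receive gradient updates. The sketch below selects trainable parameters by name, assuming the naming scheme commonly seen in BERT implementations (`encoder.layer.<i>.` for the 12 transformer layers, `embeddings` for the embedding block); the parameter names, the `classifier` head, and the choice of whether embeddings are frozen are assumptions made for illustration.

```python
import re
from typing import Iterable, List, Set

# Matches names such as "bert.encoder.layer.7.output.dense.weight" (assumed naming scheme).
LAYER_PATTERN = re.compile(r"encoder\.layer\.(\d+)\.")


def trainable_parameters(
    names: Iterable[str],
    frozen_layers: Set[int],
    freeze_embeddings: bool = False,
) -> List[str]:
    """Return the parameter names that stay trainable under a freezing scheme."""
    trainable = []
    for name in names:
        match = LAYER_PATTERN.search(name)
        if match:
            if int(match.group(1)) in frozen_layers:
                continue  # this transformer layer is frozen
        elif freeze_embeddings and "embeddings" in name:
            continue  # embedding block is frozen
        trainable.append(name)
    return trainable


# Hypothetical parameter names: embeddings, 12 encoder layers, and a labeling head.
names = (
    ["bert.embeddings.word_embeddings.weight"]
    + [f"bert.encoder.layer.{i}.output.dense.weight" for i in range(12)]
    + ["classifier.weight"]
)

full_tuning = trainable_parameters(names, frozen_layers=set())
full_freezing = trainable_parameters(names, set(range(12)), freeze_embeddings=True)
partial_freezing = trainable_parameters(names, set(range(6)))  # layers 0-5 frozen

print(len(full_tuning), full_freezing, len(partial_freezing))
```

In a PyTorch training script, the same decision would typically be applied by iterating over `model.named_parameters()` and setting `requires_grad` to `False` for every name that is not in the returned list.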

7. Error Analysis

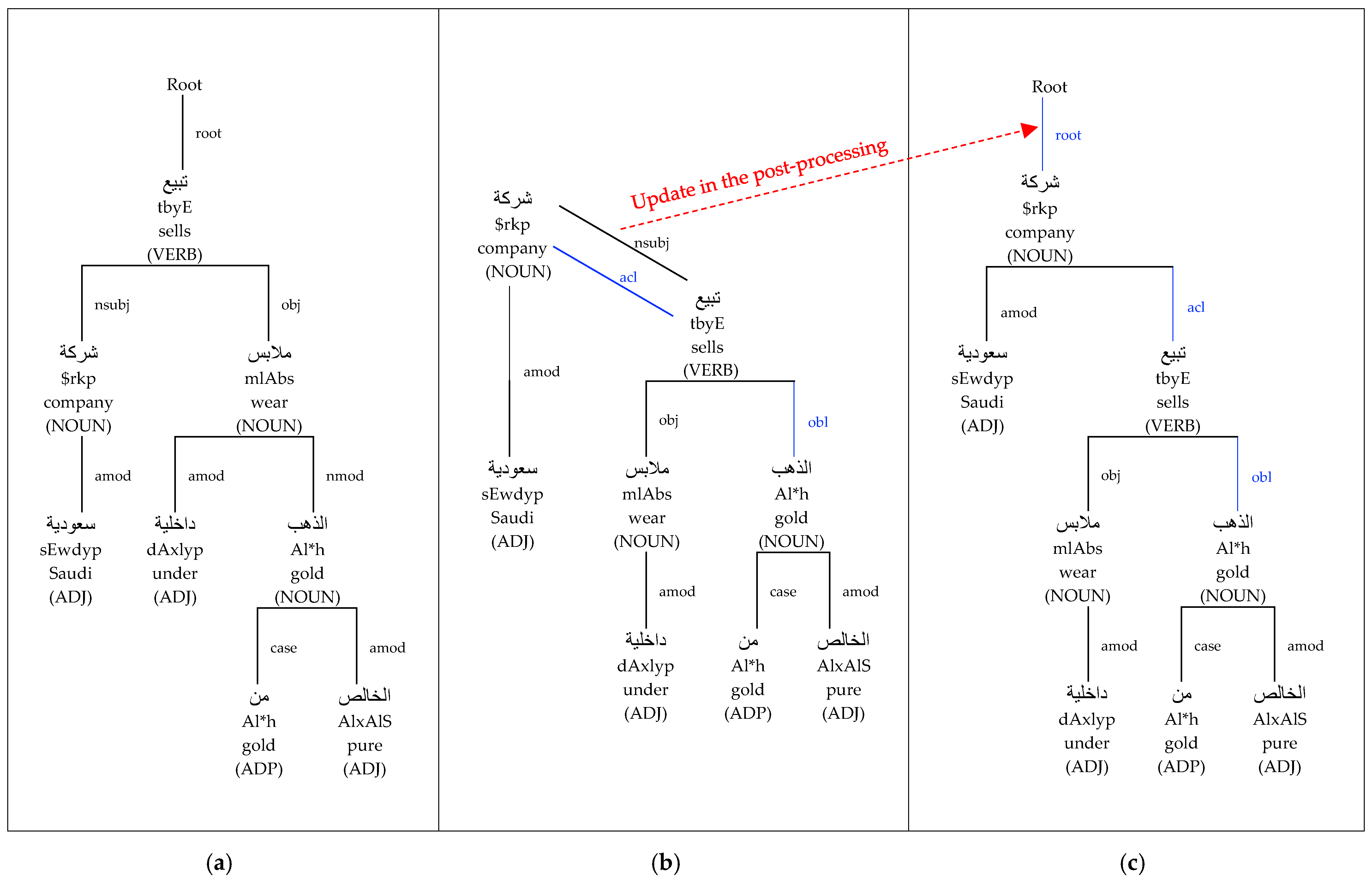

7.1. Parse Tree Post-Processing

7.2. Contextualized Embeddings

7.3. Erroneous POS Annotation

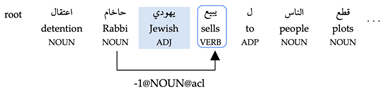

- The model correctly labeled the adjective word “اول/Awl/first” with (−1@NOUN@nmod), which means it should be headed by the preceding noun word “عقد/Eaqd/held”. The algorithm assigned “اول/Awl/first” to the root because the gold annotation contains no preceding token with a “NOUN” POS tag (see Figure 8a).

- The model correctly labeled the noun word “مناظرة/mnAZrp/debate” with (−1@ADJ@nmod), which means it should be headed by the preceding adjective word “اول/Awl/first”. The algorithm assigned “مناظرة/mnAZrp/debate” to the root because the gold annotation contains no preceding token with an “ADJ” POS tag (see Figure 8b).
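The fallback described in these two cases can be sketched as follows: a relative POS-based label is resolved by walking left or right until the requested number of tokens with the given POS tag has been seen, and the token is attached to the root when no such token exists. The function name `decode_head`, the POS tags in the example, and the convention that head 0 denotes the root are assumptions for illustration; they are not the gold annotation of the sentence discussed above.

```python
from typing import List, Tuple


def decode_head(index: int, label: str, pos_tags: List[str]) -> Tuple[int, str]:
    """Resolve a relative POS-based label such as "-1@NOUN@nmod".

    `index` is the 0-based position of the dependent. The returned head is
    1-based, and 0 means the token was attached to the root as a fallback.
    """
    # Accept both the ASCII hyphen and the typographic minus sign.
    offset_text, head_pos, relation = label.replace("\u2212", "-").split("@")
    offset = int(offset_text)
    step = -1 if offset < 0 else 1
    remaining = abs(offset)
    position = index + step
    while 0 <= position < len(pos_tags):
        if pos_tags[position] == head_pos:
            remaining -= 1
            if remaining == 0:
                return position + 1, relation
        position += step
    return 0, relation  # no matching token: attach to the root


# Illustrative POS tags for a three-token sentence (assumed, not gold data).
tags = ["VERB", "ADJ", "NOUN"]

# The label asks for the first NOUN to the left of token 2, but none exists.
fallback = decode_head(1, "\u22121@NOUN@nmod", tags)
# With a POS tag that does occur to the left, the head is found.
resolved = decode_head(1, "-1@VERB@nmod", tags)
print(fallback, resolved)
```

The first call shows why a correct label can still yield a wrong arc: decoding depends on the POS tags, so an erroneous tag on the intended head makes the label unresolvable.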

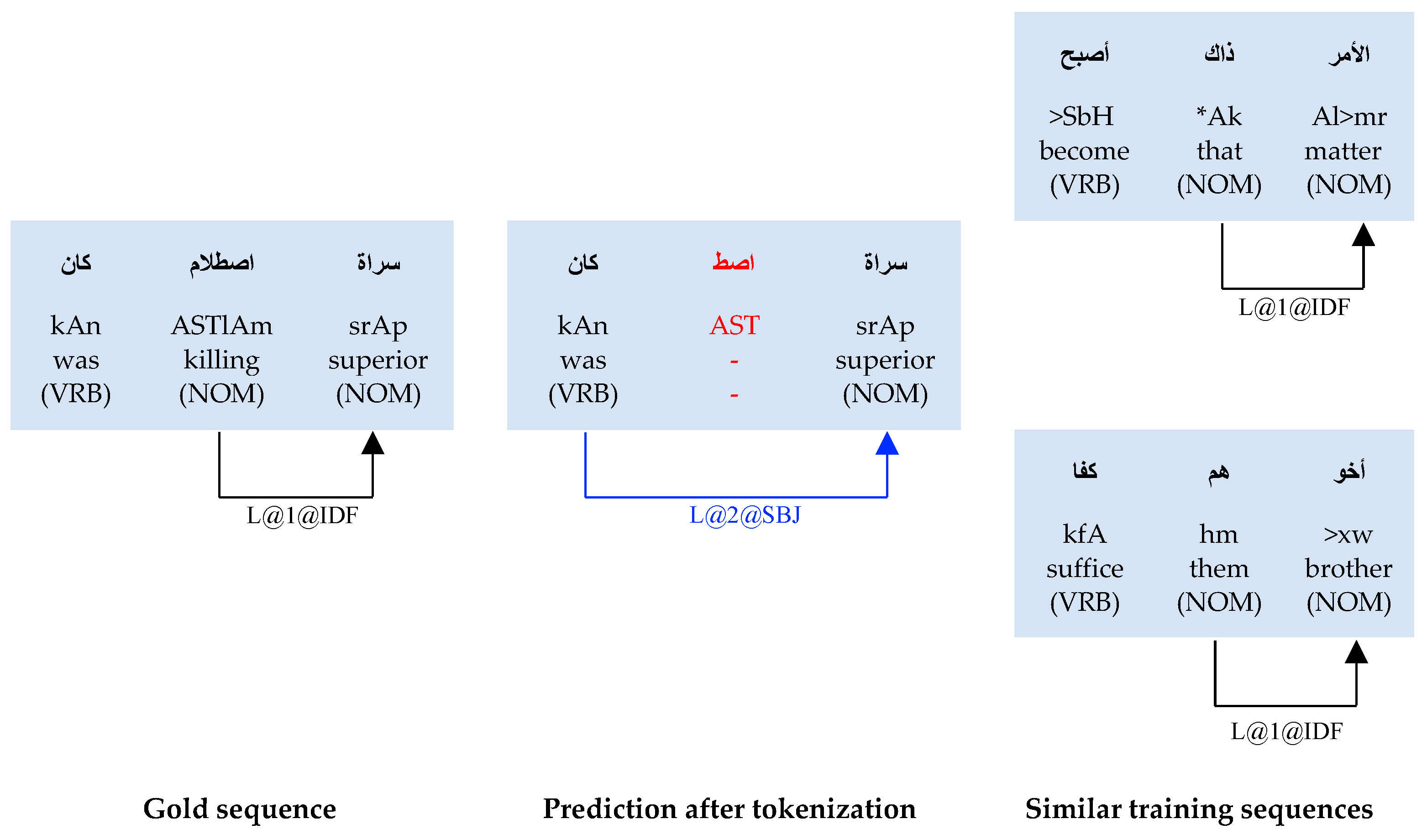

7.4. Erroneous Tokenization

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jurafsky, D.; Martin, J. Speech and Language Processing, 3rd ed.; Prentice Hall: Hoboken, NJ, USA, 2019.

2. Chowdhary, K. Natural Language Processing. In Fundamentals of Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2020; pp. 603–649.

3. Kübler, S.; McDonald, R.; Nivre, J. Dependency Parsing. Synth. Lect. Hum. Lang. Technol. 2009, 1, 1–127.

4. Zhang, M.; Li, Z.; Fu, G.; Zhang, M. Syntax-Enhanced Neural Machine Translation with Syntax-Aware Word Representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 1151–1161.

5. Sachan, D.S.; Zhang, Y.; Qi, P.; Hamilton, W.L. Do Syntax Trees Help Pre-Trained Transformers Extract Information? In Proceedings of the EACL, Online, 19–23 April 2021.

6. Guo, Q.; Qiu, X.; Xue, X.; Zhang, Z. Syntax-Guided Text Generation via Graph Neural Network. Sci. China Inf. Sci. 2021, 64, 152102.

7. Legrand, J.; Collobert, R. Deep Neural Networks for Syntactic Parsing of Morphologically Rich Languages. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Berlin, Germany, 7–12 August 2016; pp. 573–578.

8. Dehdari, J.; Tounsi, L.; van Genabith, J. Morphological Features for Parsing Morphologically-Rich Languages: A Case of Arabic. In Proceedings of the Second Workshop on Statistical Parsing of Morphologically Rich Languages, Dublin, Ireland, 6 October 2011; pp. 12–21.

9. Tsarfaty, R. Syntax and Parsing of Semitic Languages. In Natural Language Processing of Semitic Languages; Springer: Berlin/Heidelberg, Germany, 2014; pp. 67–128.

10. Nivre, J. Multilingual Dependency Parsing from Universal Dependencies to Sesame Street. In Proceedings of the International Conference on Text, Speech, and Dialogue, Brno, Czech Republic, 8–11 September 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 11–29.

11. Barry, J. Investigating Multilingual Approaches for Parsing Universal Dependencies. Ph.D. Thesis, Dublin City University, Dublin, Ireland, 2022.

12. Seyoum, B.E.; Miyao, Y.; Mekonnen, B.Y. Comparing Neural Network Parsers for a Less-Resourced and Morphologically-Rich Language: Amharic Dependency Parser. In Proceedings of the First Workshop on Resources for African Indigenous Languages, Marseille, France, 11–16 May 2020; pp. 25–30.

13. Kumar, C.A.; Maharana, A.; Murali, S.; Premjith, B.; Kp, S. BERT-Based Sequence Labelling Approach for Dependency Parsing in Tamil. In Proceedings of the Second Workshop on Speech and Language Technologies for Dravidian Languages, Dublin, Ireland, 26 May 2022; pp. 1–8.

14. Sarveswaran, K.; Dias, G. ThamizhiUDp: A Dependency Parser for Tamil. In Proceedings of the 17th International Conference on Natural Language Processing (ICON), Patna, India, 18–21 December 2020; pp. 200–207.

15. Prokopidis, P.; Piperidis, S. A Neural NLP Toolkit for Greek. In Proceedings of the 11th Hellenic Conference on Artificial Intelligence, Athens, Greece, 2–4 September 2020; pp. 125–128.

16. Fankhauser, P.; Do, B.-N.; Kupietz, M. Evaluating a Dependency Parser on DeReKo. In Proceedings of the 8th Workshop on Challenges in the Management of Large Corpora, Marseille, France, 11–16 May 2020; pp. 10–14.

17. Özateş, Ş.B.; Özgür, A.; Güngör, T.; Başaran, B.Ö. A Hybrid Deep Dependency Parsing Approach Enhanced With Rules and Morphology: A Case Study for Turkish. IEEE Access 2022, 10, 93867–93886.

18. Shaalan, K.; Siddiqui, S.; Alkhatib, M.; Abdel Monem, A. Challenges in Arabic Natural Language Processing. In Computational Linguistics, Speech and Image Processing for Arabic Language; World Scientific: Singapore, 2019; pp. 59–83.

19. Al-Ghamdi, S.; Al-Khalifa, H.; Al-Salman, A. A Dependency Treebank for Classical Arabic Poetry. In Proceedings of the Sixth International Conference on Dependency Linguistics (Depling, SyntaxFest 2021), Sofia, Bulgaria, 21–25 March 2021; pp. 1–9.

20. Strzyz, M.; Vilares, D.; Gómez-Rodríguez, C. Viable Dependency Parsing as Sequence Labeling. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 717–723.

21. Han, X.; Zhang, Z.; Ding, N.; Gu, Y.; Liu, X.; Huo, Y.; Qiu, J.; Yao, Y.; Zhang, A.; Zhang, L. Pre-Trained Models: Past, Present and Future. AI Open 2021, 2, 225–250.

22. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. Bert: Pre-Training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

23. Inoue, G.; Alhafni, B.; Baimukan, N.; Bouamor, H.; Habash, N. The Interplay of Variant, Size, and Task Type in Arabic Pre-Trained Language Models. In Proceedings of the Sixth Arabic Natural Language Processing Workshop, Kiev, Ukraine, 19 April 2021; pp. 92–104.

24. Safaya, A.; Abdullatif, M.; Yuret, D. Kuisail at Semeval-2020 Task 12: Bert-Cnn for Offensive Speech Identification in Social Media. In Proceedings of the Fourteenth Workshop on Semantic Evaluation, Online, 12–13 December 2020; pp. 2054–2059.

25. Antoun, W.; Baly, F.; Hajj, H. Arabert: Transformer-Based Model for Arabic Language Understanding. arXiv 2020, arXiv:2003.00104.

26. Abdul-Mageed, M.; Elmadany, A.; Nagoudi, E.M.B. ARBERT & MARBERT: Deep Bidirectional Transformers for Arabic. arXiv 2020, arXiv:2101.01785.

27. Lan, W.; Chen, Y.; Xu, W.; Ritter, A. Gigabert: Zero-Shot Transfer Learning from English to Arabic. In Proceedings of the 2020 Conference on Empirical Methods on Natural Language Processing (EMNLP), Online, 19–20 November 2020.

28. Vilares, D.; Strzyz, M.; Søgaard, A.; Gómez-Rodríguez, C. Parsing as Pretraining. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 9114–9121.

29. Alsaaran, N.; Alrabiah, M. Classical Arabic Named Entity Recognition Using Variant Deep Neural Network Architectures and BERT. IEEE Access 2021, 9, 91537–91547.

30. Merchant, A.; Rahimtoroghi, E.; Pavlick, E.; Tenney, I. What Happens to Bert Embeddings during Fine-Tuning? arXiv 2020, arXiv:2004.14448.

31. Rogers, A.; Kovaleva, O.; Rumshisky, A. A Primer in Bertology: What We Know about How Bert Works. Trans. Assoc. Comput. Linguist. 2020, 8, 842–866.

32. Wu, Z.; Liu, N.F.; Potts, C. Identifying the Limits of Cross-Domain Knowledge Transfer for Pretrained Models. arXiv 2021, arXiv:2104.08410.

33. Bouma, G.; Seddah, D.; Zeman, D. Overview of the IWPT 2020 Shared Task on Parsing into Enhanced Universal Dependencies. In Proceedings of the 16th International Conference on Parsing Technologies and the IWPT 2020 Shared Task on Parsing into Enhanced Universal Dependencies, Seattle, WA, USA, 9 July 2020; pp. 151–161.

34. Diab, M.; Habash, N.; Rambow, O.; Roth, R. LDC Arabic Treebanks and Associated Corpora: Data Divisions Manual. arXiv 2013, arXiv:1309.5652.

35. Habash, N.; Faraj, R.; Roth, R. Syntactic Annotation in the Columbia Arabic Treebank. In Proceedings of the MEDAR International Conference on Arabic Language Resources and Tools, Cairo, Egypt, 22–23 April 2009; Volume 83.

36. Richards, B. Type/Token Ratios: What Do They Really Tell Us? J. Child Lang. 1987, 14, 201–209.

37. Green, N. Dependency Parsing. In Proceedings of the WDS 2011, Proceedings of Contributed Papers, Matfyzpress, Prague, 31 May–3 June 2011; pp. 137–142.

38. Abramovich, F.; Pensky, M. Classification with Many Classes: Challenges and Pluses. J. Multivar. Anal. 2019, 174, 104536.

39. Van Thinh, T.; Duong, P.C.; Nasahara, K.N.; Tadono, T. How Does Land Use/Land Cover Map’s Accuracy Depend on Number of Classification Classes? Sola 2019, 15, 28–31.

40. Anil, R.; Ghazi, B.; Gupta, V.; Kumar, R.; Manurangsi, P. Large-Scale Differentially Private Bert. arXiv 2021, arXiv:2108.01624.

41. Graves, A. Long Short-Term Memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

42. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

43. Wiedemann, G.; Remus, S.; Chawla, A.; Biemann, C. Does BERT Make Any Sense? Interpretable Word Sense Disambiguation with Contextualized Embeddings. arXiv 2019, arXiv:1909.10430.

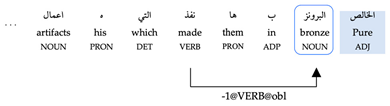

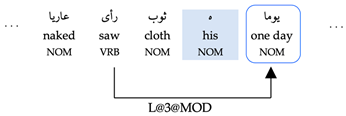

| Identifier | Encoding | Label | Label Meaning |
|---|---|---|---|
| E1 | Naive positional | 4@OBJ | The id of the head is 4, and the syntactic relation is OBJ |
| E2 | Relative positional | R@2@MOD | The head is the second token to the right (R), and the syntactic relation is MOD |
| E3 | Relative POS-based | −1@VRB@MOD | The head is the first token to the left that has the VRB POS tag, and the syntactic relation is MOD |
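To make the three encodings concrete, the sketch below turns a (dependent, head, relation) triple into an E1, E2, and E3 label following the label formats in the table. The `L` marker for heads to the left, the `+` sign for heads to the right in E3, the ASCII hyphen in place of the typographic minus, and the omission of root tokens are assumptions made for illustration; the function names are hypothetical.

```python
from typing import List


def encode_naive(head: int, relation: str) -> str:
    """E1: the absolute (1-based) id of the head."""
    return f"{head}@{relation}"


def encode_relative(index: int, head: int, relation: str) -> str:
    """E2: direction and distance from the dependent to the head."""
    direction = "R" if head > index else "L"
    return f"{direction}@{abs(head - index)}@{relation}"


def encode_relative_pos(index: int, head: int, relation: str, pos_tags: List[str]) -> str:
    """E3: the head is the n-th token with its POS tag to the left or right."""
    head_pos = pos_tags[head - 1]
    if head > index:
        # Tokens index+1 .. head (1-based), counting those that share the head's POS tag.
        count = pos_tags[index:head].count(head_pos)
        return f"+{count}@{head_pos}@{relation}"
    # Tokens head .. index-1 (1-based).
    count = pos_tags[head - 1:index - 1].count(head_pos)
    return f"-{count}@{head_pos}@{relation}"


# Illustrative sentence of four tokens; indices and heads are 1-based.
pos_tags = ["NOUN", "VRB", "ADJ", "NOUN"]

# Token 3 is headed by token 2 (a VRB) with relation MOD.
labels_left = (
    encode_naive(2, "MOD"),
    encode_relative(3, 2, "MOD"),
    encode_relative_pos(3, 2, "MOD", pos_tags),
)
# Token 1 is headed by token 4 (a NOUN) with relation OBJ.
labels_right = (
    encode_naive(4, "OBJ"),
    encode_relative(1, 4, "OBJ"),
    encode_relative_pos(1, 4, "OBJ", pos_tags),
)
print(labels_left, labels_right)
```

E1 creates a distinct label for every absolute position, whereas E2 and E3 reuse the same label across sentences of different lengths, so they tend to need a smaller label set.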

| Model | Name * | Variants | Ref. |
|---|---|---|---|
| Camel-MSA | CAMeL-Lab/bert-base-arabic-camelbert-msa | MSA | [23] |
| Camel-CA | CAMeL-Lab/bert-base-arabic-camelbert-ca | CA | [23] |
| Camel-mix | CAMeL-Lab/bert-base-arabic-camelbert-mix | MSA/DA/CA | [23] |
| multilingual | bert-base-multilingual-uncased | MSA | [22] |
| Arabic | asafaya/bert-base-arabic | MSA | [24] |
| AraBERTv1 | aubmindlab/bert-base-arabertv01 | MSA | [25] |
| AraBERTv2 | aubmindlab/bert-base-arabertv02 | MSA | [25] |
| ARBERT | UBC-NLP/ARBERT | MSA | [26] |
| GigaBERT | lanwuwei/GigaBERT-v4-Arabic-and-English | MSA | [27] |

| Treebank | All | Training | Development | Testing |
|---|---|---|---|---|
| PADT | 282,384 | 223,881 * | 30,239 | 28,264 |
| CATiB | 169,319 | 135,219 * | 16,972 * | 17,128 |
| ArPoT | 35,459 | 28,506 | 2771 | 4182 |

| Treebank | POS Tags | Dependency Relation Labels |
|---|---|---|
| PADT | 16 | 33 |
| CATiB | 6 * | 8 |
| ArPoT | 5 | 8 |

| Statistic | PADT | CATiB | ArPoT |
|---|---|---|---|
| Sentences | 7664 | 2591 | 2400 * |
| Max_L | 398 | 609 | 68 |
| Avg_L | 36.85 | 65.28 | 14.77 |
| Max_DL | 397 | 479 | 46 |
| Avg_DL | 4.07 | 4.21 | 2.07 |
| TTR | 9.34 | 9.5 | 28.26 |

| Treebank | E1 | E2 | E3 |
|---|---|---|---|
| PADT | 4513 | 2017 | 1793 |
| CATiB | 1923 | 563 | 297 |
| ArPoT | 305 | 164 | 173 |

| Treebank (Model) | DEV E1 | DEV E2 | DEV E3 | TEST E1 | TEST E2 | TEST E3 |
|---|---|---|---|---|---|---|
| PADT (Camel-MSA) | 64.25/60.65 | 81.79/77.46 | 83.12/79.00 | 85.48/55.40 | 82.18/77.77 | 83.06/79.17 |
| CATiB (Camel-MSA) | 46.88/45.89 | 83.89/82.40 | 86.35/85.23 | 44.26/43.07 | 83.75/82.20 | 86.52/85.33 |
| ArPoT (Camel-CA) | 64.63/57.77 | 78.74/70.77 | 75.47/70.28 | 64.39/58.38 | 78.91/72.39 | 76.70/72.06 |

TEST UAS/LAS

| Model | PADT (E3) | CATiB (E3) | ArPoT (E2) |
|---|---|---|---|
| Camel-MSA | 83.10/79.17 | 86.47/85.29 | 78.72/71.83 |
| Camel-CA | 80.78/76.60 | 84.95/83.32 | 79.65/72.21 |
| Camel-mix | 82.37/78.37 | 85.64/84.35 | 78.48/71.95 |
| multilingual | 74.02/68.54 | 76.22/72.50 | 72.33/60.71 |
| Arabic | 80.02/76.52 | 82.65/80.59 | 73.58/64.30 |
| AraBERTv1 | 82.76/78.82 | 86.76/85.57 | 77.76/70.95 |
| AraBERTv2 | 84.03/80.26 | 87.54/86.41 | 79.79/74.13 |
| ARBERT | 80.37/76.11 | 78.31/75.95 | 75.06/66.19 |
| GigaBERT | 80.41/76.06 | 83.29/81.28 | 73.39/62.31 |
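The scores in these tables are reported as UAS/LAS pairs. As a reminder of how the two metrics relate, the sketch below computes the unlabeled attachment score (share of tokens with the correct head) and the labeled attachment score (share of tokens with both the correct head and the correct relation). The function name and the toy annotations are hypothetical, and any evaluation detail beyond these standard definitions, such as the treatment of punctuation, is not assumed here.

```python
from typing import List, Tuple

Arc = Tuple[int, str]  # (head index, relation label)


def attachment_scores(gold: List[Arc], predicted: List[Arc]) -> Tuple[float, float]:
    """Return (UAS, LAS) as percentages over all tokens."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must cover the same tokens")
    total = len(gold)
    correct_heads = sum(1 for g, p in zip(gold, predicted) if g[0] == p[0])
    correct_arcs = sum(1 for g, p in zip(gold, predicted) if g == p)
    return 100 * correct_heads / total, 100 * correct_arcs / total


# Toy four-token sentence (hypothetical annotations).
gold = [(2, "nsubj"), (0, "root"), (2, "obj"), (3, "amod")]
predicted = [(2, "nsubj"), (0, "root"), (2, "nmod"), (2, "amod")]

uas, las = attachment_scores(gold, predicted)
print(f"{uas:.2f}/{las:.2f}")
```

Because LAS requires the head to be correct as well, it can never exceed UAS for the same set of predictions.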

| Source\Model | Camel-MSA | Camel-CA | Camel-mix | Multilingual | Arabic | AraBERTv1 | AraBERTv2 | ARBERT | GigaBERT |

|---|---|---|---|---|---|---|---|---|---|

| AR_Wiki |  |  |  |  |  |  |  | ||

| El-Khair |  |  |  |  |  | ||||

| OSIAN |  |  |  |  |  | ||||

| OpenITI |  |  | |||||||

| OSCAR 2019 |  |  | |||||||

| OSCAR 2020 |  |  |  |  | |||||

| Gigaword |  |  |  |  | |||||

| Dialectal Corpora |  | ||||||||

| Multi_Wiki |  | ||||||||

| News |  | ||||||||

| As-safir |  | ||||||||

| Hindawi |  | ||||||||

| Code-switch |  |

| Model | (MLM) Pre-Training Objective | Tokenizer | Batch Size | MSL | TD (Days) | Total Steps | Words | Data Size | Vocabulary Size |
|---|---|---|---|---|---|---|---|---|---|
| Camel-MSA | WWM + NSP | WP | 1024/256 | 128/512 | ~4.5 | 1 M | 12.6 B | 107 GB | 30 K |
| Camel-CA | WWM + NSP | WP | 1024/256 | 128/512 | ~4.5 | 1 M | 847 M | 6 GB | 30 K |
| Camel-mix | WWM + NSP | WP | 1024/256 | 128/512 | ~4.5 | 1 M | 17.3 B | 167 GB | 30 K |
| multilingual | SWM + NSP | WP | - | - | - | - | - | - | 120 K |
| Arabic | WWM + NSP | WP | 128 | - | - | 4 M | 8.2 B | 95 GB | 32 K |
| AraBERTv1 | WWM + NSP | SP | 512/128 | 128/512 | 4 | 1.25 M | 2.7 B | 23 GB | 60 K |
| AraBERTv2 | WWM + NSP | WP | 2560/384 | 128/512 | 36 | 3 M | 8.6 B | 77 GB | 60 K |
| ARBERT | WWM + NSP | WP | 256 | 128 | 16 | 8 M | 6.5 B | 61 GB | 100 K |
| GigaBERT | SWM + NSP | WP | 512 | 128/512 | - | 1.48 M | 10.4 B | - | 50 K |

| Treebank | Camel-MSA, CA, mix | Multilingual | Arabic | AraBERTv1 | AraBERTv2 | ARBERT | GigaBERT |
|---|---|---|---|---|---|---|---|
| PADT (E3) | 1.27 | 10.82 | 10.82 | 1.76 | 1.54 | 12.46 | 10.82 |
| CATiB (E3) | 0.15 | 17.10 | 14.71 | 2.59 | 0.32 | 18.42 | 14.71 |
| ArPoT (E2) | 0.00 | 3.43 | 3.43 | 0.00 | 0.00 | 3.43 | 3.43 |

| Treebank | Camel-MSA, CA, mix | Multilingual | Arabic | AraBERTv1 | AraBERTv2 | ARBERT | GigaBERT |
|---|---|---|---|---|---|---|---|
| PADT (E3) | 1.1 | 1.6 | 1.1 | 1.1 | 1.1 | 1.0 | 1.1 |
| CATiB (E3) | 1.2 | 1.5 | 1.1 | 1.1 | 1.1 | 1.1 | 1.1 |
| ArPoT (E2) | 1.3 | 1.7 | 1.2 | 1.4 | 1.2 | 1.1 | 1.2 |

| Treebank | FT | FT+LSTM | FT+GRU | FF | FF+LSTM | FF+GRU |
|---|---|---|---|---|---|---|
| PADT (E3) | 84.03/80.26 | 82.90/78.92 | 83.64/79.74 | 65.30/60.25 | 72.54/67.72 | 75.44/70.98 |
| CATiB (E3) | 87.54/86.41 | 85.92/84.82 | 87.50/86.39 | 62.88/59.88 | 77.29/74.74 | 79.76/77.66 |
| ArPoT (E2) | 79.79/74.13 | 76.88/70.83 | 79.51/73.63 | 56.84/42.32 | 69.87/60.93 | 72.64/64.73 |

| Frozen Layers | PADT (E3) | CATiB (E3) | ArPoT (LR) | Tuned Layers | PADT (E3) | CATiB (E3) | ArPoT (LR) |
|---|---|---|---|---|---|---|---|
| 0 | 84.01/80.34 | 87.41/86.32 | 80.27/74.41 | 0 | 74.11/69.72 | 77.92/75.54 | 69.58/60.28 |
| 0–1 | 83.85/80.28 | 87.39/86.32 | 79.48/73.27 | 0–1 | 77.08/72.93 | 80.83/78.72 | 71.66/63.61 |
| 0–2 | 83.68/79.93 | 87.24/86.15 | 79.63/73.48 | 0–2 | 78.41/74.12 | 81.56/79.76 | 73.08/64.83 |
| 0–3 | 83.58/79.74 | 87.13/86.02 | 79.48/73.46 | 0–3 | 79.49/75.37 | 82.95/81.29 | 74.03/66.48 |
| 0–4 | 83.18/79.40 | 86.71/85.62 | 78.86/72.55 | 0–4 | 80.92/76.88 | 84.12/82.62 | 75.59/68.29 |
| 0–5 | 82.65/78.85 | 86.02/84.86 | 78.00/71.47 | 0–5 | 81.80/77.78 | 85.51/84.27 | 76.83/70.73 |
| 0–6 | 82.06/78.28 | 85.48/84.32 | 77.21/70.73 | 0–6 | 82.59/78.64 | 86.01/84.77 | 77.79/71.76 |
| 0–7 | 81.07/77.32 | 84.59/83.41 | 75.63/69.11 | 0–7 | 82.97/79.01 | 86.62/85.46 | 78.55/72.36 |
| 0–8 | 80.17/76.35 | 83.21/81.94 | 73.58/67.07 | 0–8 | 83.48/79.57 | 87.21/86.09 | 78.89/73.19 |
| 0–9 | 77.89/73.99 | 81.28/79.73 | 71.64/64.32 | 0–9 | 83.83/79.98 | 87.65/86.49 | 80.01/74.10 |
| 0–10 | 74.54/70.66 | 78.75/76.95 | 67.86/59.97 | 0–10 | 84.00/80.15 | 87.46/86.39 | 80.32/74.53 |
| 0–11 | 65.30/60.25 | 62.88/59.88 | 56.84/42.32 | 0–11 | 84.03/80.26 | 87.54/86.41 | 79.79/74.13 |

| Treebank | Token | POS | TP (Train-Dev) | TP (Test) | Labels | Accuracy |
|---|---|---|---|---|---|---|
| PADT | % | SYM (UC) | 87.76 | 97.5 | 2 | 100 |
| PADT | . | PUNCT (C) | 33.79 | 34.92 | 159 | 60.53 |
| CATiB | مصر/mSr/Egypt | PROP (UC) | 4.81 | 3.75 | 16 | 100.0 |
| CATiB | و/w/and | PRT (C) | 25.69 | 26.73 | 130 | 70.86 |
| ArPoT | الله/Allh/God | PROP (UC) | 4.63 | 6.8 | 8 | 92.86 |
| ArPoT | و/w/and | PRT (C) | 22.43 | 21.28 | 28 | 62.19 |

| No | Corpus | Example | Transliteration | Translation |
|---|---|---|---|---|
| 1 | PADT | شركة سعودية تبيع ملابس داخلية من الذهب الخالص | `$rkp sEwdyp tbyE mlAbs dAxlyp mn Al*hb AlxAlS` | A Saudi company sells pure gold underwear |
| 2 | PADT | عقد اول مناظرة بين المرشحين الديمقراطيين ل الرئاسة فى الولايات المتحدة | `Eqd Awl mnAZrp byn Almr$Hyn AldymqrATyyn l Alr}Asp fy AlwlAyAt AlmtHdp AlAmrykyp` | Held the first debate between the Democratic candidates for the presidency in the United States |
| 3 | ArPoT | وفي ناتق كان اصطلام سراة هم ليالي أفنى القرح جل إياد | `w fy nAtq kAn ASTlAm srAp hm lyAly >fnY AlqrH jl <yAd` | Extermination of Ayad’s lofty was at Nateq’s nights when a surgeon killed most of them |

| Test Example | Training Example with Similar Words |  |
|---|---|---|
| 1 PADT |  |  |
|  |  |  |
|  |  |  |
| 3 ArPoT |  |  |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al-Ghamdi, S.; Al-Khalifa, H.; Al-Salman, A. Fine-Tuning BERT-Based Pre-Trained Models for Arabic Dependency Parsing. Appl. Sci. 2023, 13, 4225. https://doi.org/10.3390/app13074225
