1. Introduction

Detecting stain defects in images is challenging because the boundary of a stain defect is ambiguous, which makes it hard to delineate with image-processing-based methods. A stain defect is caused by an error in the manufacturing process, such as external contamination or uneven paint coating. It appears slightly brighter or darker than its local surroundings, and this contrast is difficult to detect with the naked eye.

Previous studies have detected stain defects using image processing techniques [1,2,3,4,5,6,7]. Kong et al. [1] proposed a method that calculates the variance of the inspection area image and judges it to be a stain defect when the variance exceeds a certain threshold. Zhang et al. [3] detected the stain defect by applying the watershed method in the forward and reverse directions of the image to find the boundary of the stain defect area and then calculating the Just Noticeable Difference (JND) value. Han and Shi [6] extracted a wavelet image of the input image using the wavelet transform and detected the stain defect by removing the background component with the difference image between the wavelet image and the input image. These methods model and remove the background component, and their disadvantage is that they require accurate modeling of the background component.

Studies using the Gabor filter to detect stain defects have been proposed to address this shortcoming [8,9,10,11]. The Gabor filter was presented as a model of the human visual system and is a filter that extracts texture features using a sine wave with a specific frequency and direction. In these studies, the Gabor filter models the texture feature of a background component that has the same continuous pattern, and the stain defect is detected by removing this background component from the input image. Bi et al. [8] extracted a Gabor filter image using the real part of the Gabor filter and created a binarized image based on a specific threshold to detect stain defects on liquid crystal displays (LCDs). Mao et al. [10] applied Gabor filters to training images, selected the Gabor filter parameters with high stain defect classification performance, and then detected the stain defect using the selected Gabor filter.

Recently, with the development of deep learning, several studies to detect defects using a CNN have been proposed [12,13,14,15,16,17,18,19,20,21,22,23,24]. Xiao et al. [12] proposed a hierarchical feature-based CNN (H-CNN) structure that generates Regions Of Interest (ROI) using region-based CNN (R-CNN) and detects the defect using a fully CNN (F-CNN). Singh et al. [14] compared the classification performance for stain defects on metal surfaces using CNNs such as ResNet [25] and YOLO [26]. Yang et al. [15] created a defect detection model by learning stain defects using the transfer learning technique of a deep CNN extractor trained in advance on the ImageNet Large Scale Visual Recognition Challenge 2012 (ILSVRC2012) dataset. Hou et al. [24] proposed a directional kernel to detect weak scratches.

Several studies have also detected defects using segmentation [27,28,29]. Segmentation can accurately capture the shape of a defect, but it takes a long time to compute. Tabernik et al. [27] first applied segmentation and then detected the defect based on the segmentation result. Huang et al. [28] introduced the MT dataset and detected defects using the MCuePush U-Net network.

Previous deep learning-based stain defect classification methods used a single inspection image as input [14,15]. Because the Gabor filter image is advantageous for image texture analysis, a performance improvement is expected when it is used as an input to a CNN. However, the inspection image and the Gabor filter image belong to different domains, so the CNN must be designed to extract features that are advantageous for stain defect classification from both.

This paper proposes a CNN for stain defect classification that uses inspection images and Gabor filter images to improve classification performance. First, Gabor filter images are created by applying Gabor filters to the inspection image obtained from the camera. A gray-level co-occurrence matrix (GLCM) is constructed for each Gabor filter image, and the Inverse Difference Moment (IDM), a local homogeneity feature, is extracted from the GLCM. Gabor filter images are selected based on the IDM, and the inspection image and the selected Gabor filter images are used as input to the CNN.

The contributions of this paper are as follows.

1. Gabor filter images were used to improve the accuracy of stain defect detection. Features for stain detection were added through the Gabor filter images, in contrast to methods that do not use them. Experiments confirmed that using the Gabor filter images improved the accuracy.

2. The stain defect classification performance was improved through a Dual-stream extraction based on the inspection image and Gabor filter images, compared to the existing Single-stream extraction. The images most suitable for stain defect classification were selected from the extracted Gabor filter images and used as an input to a Dual-stream extraction that connects two streams in parallel. In this way, the features of each input image were extracted independently and merged in a fully connected layer to improve the stain defect classification accuracy.

This paper is structured as follows. Section 2 describes the structure of the stain defect classification system proposed in this paper. Section 3 describes the Gabor filter image creation and selection process. Section 4 describes the structure of a Single-stream extraction and a Dual-stream extraction. Section 5 demonstrates the performance of the proposed method, the Dual-stream extraction, by conducting experiments on the MT and CCM datasets. Lastly, Section 6 presents the conclusion of this paper and future research.

2. Stain Defect Classification System

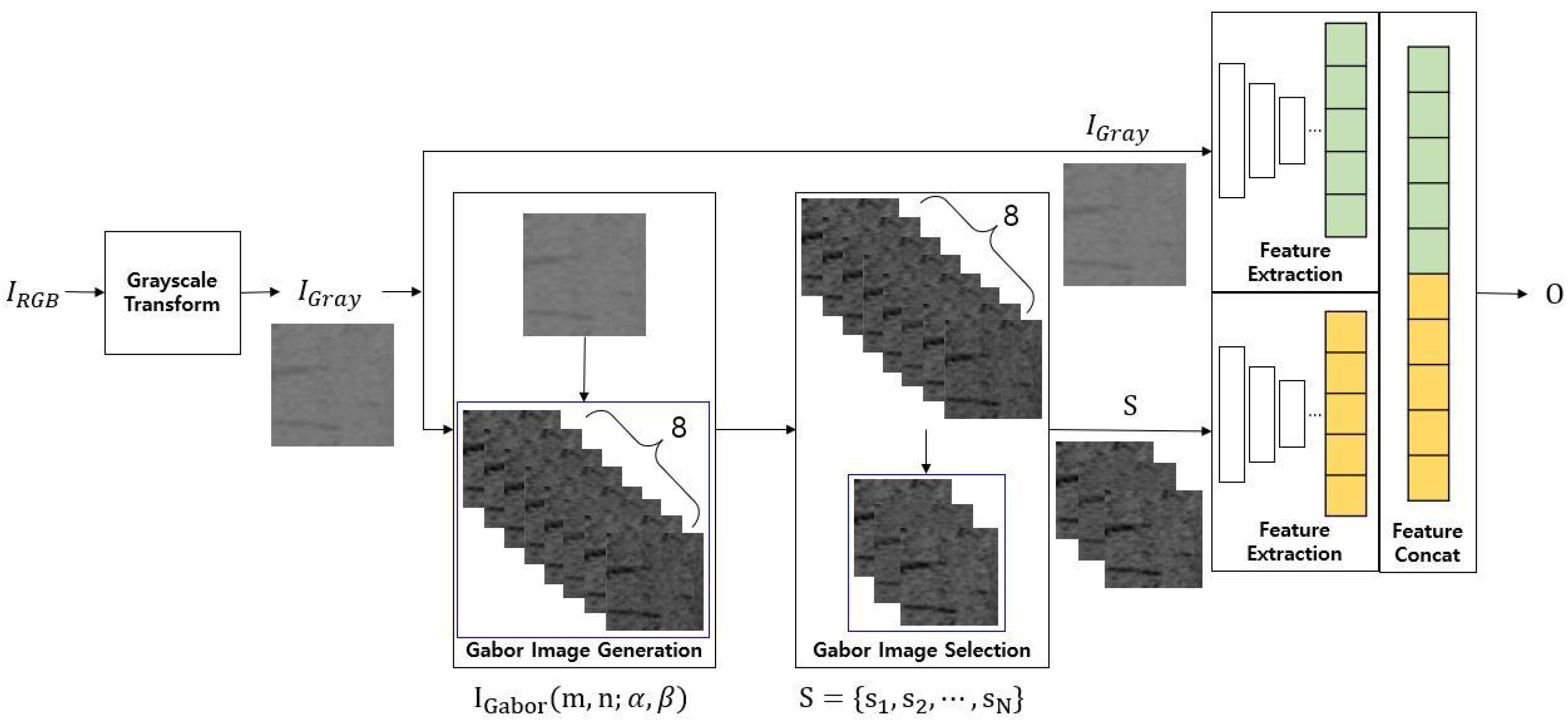

The structure of the stain defect classification system proposed in this paper is shown in Figure 1. The proposed method comprises gray-scale transform, Gabor image generation, Gabor image selection, and a CNN. The general process is as follows. The input inspection image is converted to a gray-scale inspection image through the gray-scale transform. After this, Gabor images are generated from the input image and selected. Finally, the classification result O is obtained using a CNN with the selected Gabor images and the gray-scale inspection image.

The gray-scale inspection image is obtained from the red, green, and blue channels of the input image, where M and N are the height and width of the image, respectively.
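As a minimal sketch of the gray-scale transform, the function below combines the three color channels with a weighted sum. The luminance weights (0.299, 0.587, 0.114) and the name `to_grayscale` are assumptions made for illustration; the weights used in the paper are not stated in this text.

```python
def to_grayscale(red, green, blue, weights=(0.299, 0.587, 0.114)):
    """Combine three M x N channels (lists of rows) into one M x N gray-scale image.

    The default weights are an assumed luminance weighting, not the paper's.
    """
    wr, wg, wb = weights
    return [
        [wr * r + wg * g + wb * b for r, g, b in zip(row_r, row_g, row_b)]
        for row_r, row_g, row_b in zip(red, green, blue)
    ]


# A 2 x 3 example image: M = 2 rows (height), N = 3 columns (width).
red = [[255, 0, 0], [10, 20, 30]]
green = [[255, 0, 0], [10, 20, 30]]
blue = [[255, 0, 0], [10, 20, 30]]
gray = to_grayscale(red, green, blue)
```

Because the assumed weights sum to one, a pixel whose three channels are equal keeps its value after conversion.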

The Gabor image generation step obtains a Gabor filter image for each phase. The Gabor filter transform uses a complex kernel with real and imaginary parts.

In the Gabor filter image selection step, Gabor filter images with features useful for stain defect classification are selected from the generated Gabor filter images. The gray-scale inspection image and the selected Gabor filter images are then input into the CNN, which outputs the classification result O for the inspection image.

3. Gabor Image from Stain Defect Image

The Gabor filter is used in image processing because its response resembles that of the human visual system. It is a Gaussian filter modulated by a sine function, and the Gabor filter image is the result of a convolution operation between the Gabor filter and the image. Because the response of the Gabor filter depends on its direction and frequency, it can reveal which features exist in the input image in a specific direction and frequency band.

Let x and y be the pixel coordinates of the Gabor filter. The Gabor filter is defined by five parameters: a variable that determines the width of the Gabor filter kernel, a variable that determines the oscillation period of the kernel, a variable that determines the direction of the kernel, a shift (phase offset) variable of the filter, and a variable that adjusts the horizontal-to-vertical ratio of the filter. The filter is evaluated at the x and y coordinates rotated by the direction variable.
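For reference, a commonly used complex form of the Gabor filter that matches the parameter descriptions above is sketched below. The symbols $\sigma$ (kernel width), $\lambda$ (oscillation period), $\theta$ (direction), $\psi$ (phase offset), and $\gamma$ (aspect ratio) are assumed here for illustration and may differ from the notation and exact form used in the paper.

```latex
g(x, y) = \exp\!\left(-\frac{x'^{2} + \gamma^{2} y'^{2}}{2\sigma^{2}}\right)
          \exp\!\left(i\left(2\pi\frac{x'}{\lambda} + \psi\right)\right),
\qquad
x' = x\cos\theta + y\sin\theta, \quad
y' = -x\sin\theta + y\cos\theta
```

The real part of this expression is a cosine-modulated Gaussian and the imaginary part is a sine-modulated Gaussian, which corresponds to the complex kernel with real and imaginary parts mentioned in Section 2.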

The Gabor filter produces different results depending on its internal parameters. To select, among these results, the images that improve the stain defect classification performance, the GLCM and a Haralick texture feature based on it were used. The GLCM transformation is an algorithm that maps the values of two neighboring pixels in an image to the coordinates of an M × N matrix and counts how often each pair of values occurs. Let Δm and Δn be the offsets in the width and height directions, respectively. The GLCM result matrix is defined for each offset (Δm, Δn).

GLCM matrices for four directions can be obtained according to Δm and Δn. The (Δm, Δn) values for the four directions are (0,1), (1,0), (−1,1), and (−1,−1), respectively. As the stain defect has an unspecified texture, features for all four directions should be used. Therefore, the GLCM matrices for the four directions were obtained and averaged to give the average matrix P.
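The construction of the four directional GLCMs and their average can be sketched as follows. This is an illustrative sketch, not the paper's implementation: the function names, the quantization of the image to `levels` integer gray levels, and the normalization of each directional matrix before averaging are assumptions.

```python
def glcm(image, dm, dn, levels):
    """Count co-occurrences of gray levels for the offset (dm, dn).

    image is a list of rows of integers in [0, levels); dm is the offset
    along the width and dn is the offset along the height.
    """
    height, width = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for n in range(height):
        for m in range(width):
            n2, m2 = n + dn, m + dm
            if 0 <= n2 < height and 0 <= m2 < width:
                counts[image[n][m]][image[n2][m2]] += 1
    return counts


# The four (dm, dn) offsets listed in the text.
OFFSETS = [(0, 1), (1, 0), (-1, 1), (-1, -1)]


def average_glcm(image, levels):
    """Average the four directional GLCMs, each normalized to sum to one."""
    avg = [[0.0] * levels for _ in range(levels)]
    for dm, dn in OFFSETS:
        counts = glcm(image, dm, dn, levels)
        total = sum(map(sum, counts)) or 1  # avoid division by zero
        for i in range(levels):
            for j in range(levels):
                avg[i][j] += counts[i][j] / total / len(OFFSETS)
    return avg


# A tiny two-level checkerboard as an example input.
P = average_glcm([[0, 1], [1, 0]], levels=2)
```

Normalizing each direction first keeps a direction with fewer valid pixel pairs, such as a diagonal near the image border, from being underweighted in the average.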

This paper used the Inverse Difference Moment (IDM), one of the Haralick texture features, as the selection criterion for the generated Gabor filter images. The IDM is an index indicating the local homogeneity of an image. Let W and H be the width and height of the GLCM over which the IDM is computed, where W and H have the same value (W = H).

The IDM value was used to select the Gabor filter images for stain defect classification. On the main diagonal of the GLCM, where neighboring pixels have the same brightness value, the denominator of the IDM equation becomes 1, so these entries contribute the most to the IDM value. As the distance from the diagonal increases, the denominator grows and the contribution decreases. In other words, a large IDM value indicates many neighboring pixels with similar brightness, and a small IDM value indicates many neighboring pixels that differ in brightness. Therefore, the smaller the IDM value, the lower the local homogeneity of the image, and the more clearly the image can be said to show the boundary of the stain defect.
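The behavior described above can be checked with a short sketch. It assumes the standard Haralick form of the IDM, in which each GLCM entry P(i, j) is divided by 1 + (i − j)² and the results are summed; the function name `idm` and the two example matrices are illustrative only.

```python
def idm(P):
    """Inverse Difference Moment of a square co-occurrence matrix P.

    Assumes the standard Haralick form: sum of P[i][j] / (1 + (i - j)^2).
    """
    size = len(P)
    return sum(
        P[i][j] / (1 + (i - j) ** 2)
        for i in range(size)
        for j in range(size)
    )


# All probability mass on the diagonal: neighbors always have equal values.
homogeneous = [[0.5, 0.0], [0.0, 0.5]]
# All probability mass off the diagonal: neighbors always differ.
contrasting = [[0.0, 0.5], [0.5, 0.0]]

idm_homogeneous = idm(homogeneous)
idm_contrasting = idm(contrasting)
```

The matrix with its mass on the diagonal yields the larger IDM, so the image with stronger differences between neighboring pixels is the one with the smaller IDM.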

3.1. Gabor Image Generation

To extract stain defect features for various frequency bands and directions, a total of 64 Gabor filters are generated from eight amplitudes and eight phases. The size of each Gabor filter is 3 × 3. The Gabor filter images are created through a convolution operation between the inspection image and the generated Gabor filters.
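The following sketch builds a bank of 64 kernels of size 3 × 3 and convolves an image with one of them. It is only an illustration under stated assumptions: it uses the real part of a standard Gabor form, and it varies a hypothetical grid of eight oscillation periods and eight directions, which may not correspond to the eight amplitudes and eight phases used in the paper. All function names and parameter values are made up for the example.

```python
import math


def gabor_kernel(sigma, lam, theta, psi=0.0, gamma=1.0, size=3):
    """Real part of a Gabor kernel sampled on a size x size grid (assumed form)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Coordinates rotated by the direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / lam + psi))
        kernel.append(row)
    return kernel


def convolve(image, kernel):
    """2-D convolution with zero padding; the output has the image's size."""
    h, w, half = len(image), len(image[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for dr in range(-half, half + 1):
                for dc in range(-half, half + 1):
                    rr, cc = r - dr, c - dc
                    if 0 <= rr < h and 0 <= cc < w:
                        out[r][c] += image[rr][cc] * kernel[dr + half][dc + half]
    return out


# Hypothetical 8 x 8 parameter grid, giving 64 filters.
periods = [2.0 + k for k in range(8)]
directions = [k * math.pi / 8 for k in range(8)]
bank = [gabor_kernel(1.0, lam, th) for lam in periods for th in directions]

# Convolving a unit impulse with a kernel reproduces the kernel itself.
impulse = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
response = convolve(impulse, bank[5])
```

A 3 × 3 kernel samples only one pixel on each side of the center, so long oscillation periods are barely resolved at this size; the grid values above are placeholders rather than recommended settings.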

3.2. Gabor Image Selection

Among the eight per-phase Gabor images obtained from the filters in Section 3.1, the N images with the smallest IDM values are selected. An image with a small IDM is an image with large changes in value between neighboring pixels, so the selected Gabor images allow efficient feature extraction for stain detection. The Gabor image selection consists of the following steps.

Step (1) For the Gabor images created in Section 3.1, create an average image for each phase.

Step (2) Normalize each Gabor filter image.

Step (3) According to Equations (6) and (7), transform each Gabor filter image into GLCMs and obtain the average matrix.

Step (4) Extract the IDM feature value from each average matrix.

Step (5) Select the N Gabor filter images with the smallest IDM feature values. The Gabor filter image selection proceeds as shown in Algorithm 1.

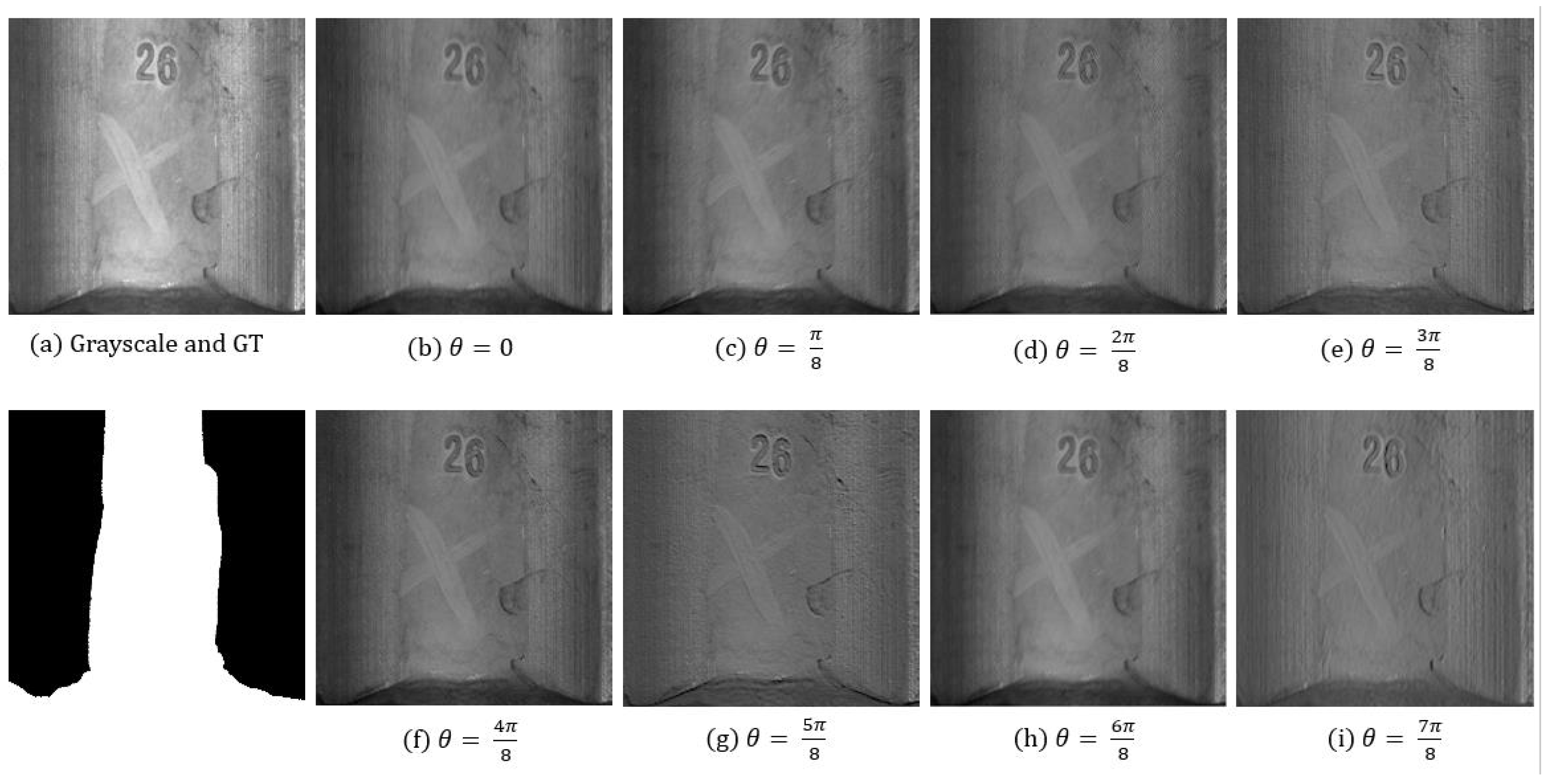

Figure 2 and Figure 3 show the Gabor filter images for each phase for stain defect images from the MT dataset and the CCM dataset, respectively. Figure 2a and Figure 3a show the gray-scale inspection image and the ground truth image indicating the location of the stain defect. Figure 2b–i and Figure 3b–i are the Gabor filter images for each phase, which are selected for CNN model creation according to their IDM values. How clearly the boundary between the stain defect and the non-defect area appears depends on the phase of the Gabor filter. For the MT dataset in Figure 2, the IDM values increase in the order of (b), (c), (h), (d), (f), (i), (e), and (g), and for the CCM dataset in Figure 3, the IDM values increase in the order of (b), (c), (d), (i), (f), (h), (e), and (g).

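| Algorithm 1 Select N Gabor filter images |
| --- |
| Require: Gabor filter images, IDM values F |
| Ensure: Selected Gabor filter image set S |
| for each of the N smallest IDM values in F do |
| add the corresponding Gabor filter image to S |
| end for |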
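The selection step can be sketched in a few lines, assuming the criterion stated in Section 3.2 that the N images with the smallest IDM values are kept. The function name `select_gabor_images`, the panel labels used as stand-ins for images, and the IDM values are hypothetical and chosen only to reproduce the ordering reported for Figure 2.

```python
def select_gabor_images(images, idm_values, n):
    """Return the n images with the smallest IDM values, in ascending IDM order."""
    order = sorted(range(len(images)), key=lambda k: idm_values[k])
    return [images[k] for k in order[:n]]


# Panel labels stand in for the eight per-phase Gabor images.
panels = ["b", "c", "d", "e", "f", "g", "h", "i"]
# Made-up IDM values whose ordering matches b, c, h, d, f, i, e, g.
idm_values = [0.10, 0.20, 0.40, 0.70, 0.50, 0.80, 0.30, 0.60]

selected = select_gabor_images(panels, idm_values, n=3)
```

With these illustrative values and N = 3, the selection keeps panels (b), (c), and (h), the three images with the lowest local homogeneity.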

4. Feature Vector Extraction Based CNN

According to the previous process, the input of the CNN is a gray-scale inspection image and a set of Gabor filter images. In general, a CNN has one stream and takes a single image with N+1 channels, formed by combining the gray-scale inspection image and the N Gabor filter images. However, a Gabor filter image is generated through a Gabor filter and therefore belongs to a different domain from the gray-scale inspection image. When features are extracted from images of different domains with a Single-stream CNN, the features unique to each domain may not be extracted well. In this case, constructing a CNN with multiple streams that analyze the features of each domain separately and then combine them can increase the detection accuracy. This section describes the structures of a Single-stream extraction and a Dual-stream extraction.

4.1. Single-Stream Extraction

Single-stream extraction is the most common type of CNN structure and has been used in many CNNs, such as GoogLeNet. It has a funnel-shaped structure in which layers are stacked one after another.

The CNN used in this paper was designed based on the Res_Block in Figure 4. The Res_Block consists of three convolution layers and was devised in ResNet [25], which uses the residual learning method. The Res_Block simplifies the loss function with a shortcut structure between the input and output and is designed to alleviate the vanishing gradient problem that occurs when designing a deep CNN. The Res_Block was applied to both the Single-stream and Dual-stream extraction structures.

Figure 4 shows the structure of the Single-stream extraction used in this paper. The input is constructed by stacking the N Gabor filter image channels and the gray-scale inspection image along the channel dimension, and this stacked image is used as the input to the CNN. A feature map with 64 channels is extracted through a convolution layer using a 7 × 7 kernel and downsampled to a size of 112 × 112 using max pooling. After that, the feature map is passed through 4 Res_Blocks, with max pooling applied between consecutive Res_Blocks. The numbers in parentheses of each Res_Block denote three parameters of the Res_Block and the number of repetitions of the Res_Block, respectively. The number at the bottom of each Res_Block denotes the width and height of the feature map, which is the intermediate result of the layer. A feature vector V_Merge of size 2048 × 1 is output from the last Res_Block, and when this vector passes through the fully connected layer, it is converted to the classification result O. The output O consists of confidence scores for OK and stain defect.
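The construction of the Single-stream input can be illustrated with a small sketch that stacks the gray-scale image and the N selected Gabor images into one (N+1)-channel array. The function name `stack_channels` and the channel order, with the gray-scale image first, are assumptions; the text does not specify the order.

```python
def stack_channels(gray, gabor_images):
    """Stack a gray-scale image and N Gabor images into an (N+1)-channel input.

    Every image is a list of rows; the result is indexed as [channel][row][col].
    """
    height, width = len(gray), len(gray[0])
    for img in gabor_images:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all channels must share the same height and width")
    return [gray] + list(gabor_images)


gray = [[0.1, 0.2], [0.3, 0.4]]
gabor_images = [
    [[0.0, 1.0], [1.0, 0.0]],
    [[0.5, 0.5], [0.5, 0.5]],
]
single_stream_input = stack_channels(gray, gabor_images)  # N = 2, so 3 channels
```

All channels of this stacked input pass through the same convolution layers, which is why the two image domains are processed with shared weights in the Single-stream structure.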

4.2. Dual-Stream Extraction

A stream is an independent layer structure before the feature maps merge in a CNN. A Single-stream extraction has only one stream because there is no merging process. The Gabor filter image and the gray-scale inspection image pass through the same stream, so their features are mixed during training, which may decrease the classification accuracy.

In contrast, a Dual-stream extraction is composed of two streams. In this study, separate streams were designed for the gray-scale inspection image and the Gabor filter image to avoid this decrease in classification accuracy. Through the separate streams, a CNN was constructed that extracts features independently from the gray-scale inspection image and from the Gabor filter image.

Figure 5 shows the Dual-stream extraction of the network proposed in this paper. The Dual-stream extraction has two streams that extract features from the input gray-scale inspection image and the Gabor filter image, and each stream can be configured separately. The upper stream extracts a feature vector of size 2048 × 1 for the gray-scale inspection image through the convolution layer, pooling layer, and Res_Blocks. At the same time, the lower stream, which has the same structure as the upper stream but different weights, extracts a feature vector of size 2048 × 1 for the Gabor filter image through the convolution layer, pooling layer, and Res_Blocks. The two feature vectors are concatenated into a feature vector of size 4096 × 1, which the fully connected layer converts to the classification result O. The two streams do not share weights, so their features are preserved independently without mixing.
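The merging step at the end of the two streams can be sketched as below. The two 2048-element vectors are random stand-ins for the stream outputs, the fully connected weights are random rather than trained, and the use of a softmax to turn the two outputs into confidence scores is an assumption; only the vector sizes and the concatenation follow the text.

```python
import math
import random


def fully_connected(vector, weights, bias):
    """One dense layer: weights holds one row of coefficients per output class."""
    return [
        sum(w * v for w, v in zip(row, vector)) + b
        for row, b in zip(weights, bias)
    ]


def softmax(scores):
    """Convert raw scores into confidence scores that sum to one."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
v_gray = [rng.gauss(0, 1) for _ in range(2048)]   # stand-in for the upper stream output
v_gabor = [rng.gauss(0, 1) for _ in range(2048)]  # stand-in for the lower stream output

v_merge = v_gray + v_gabor  # concatenation into a 4096-element vector
weights = [[rng.gauss(0, 0.01) for _ in range(4096)] for _ in range(2)]
bias = [0.0, 0.0]
O = softmax(fully_connected(v_merge, weights, bias))  # scores for OK and stain defect
```

Because the two streams only meet at this concatenation, each half of the fully connected weights is tied to one image domain, which is how the features stay separate until the final layer.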

Table 1 shows the Dual-stream extraction structure. The first stream is the first flow in Figure 5 and takes gray-scale images as input; its structure is ResNet50. The second stream is the second flow in Figure 5 and takes the selected Gabor images as input; it uses ResNet50 in the same way. The feature vectors output by the two flows in Figure 5 are merged, and the classification result is output through a fully connected layer. In this paper, ResNet is used as an example, but other networks may be used for feature extraction. The proposal concerns improving classification performance by using the inspection image and Gabor images as inputs, and it does not depend on a particular network.

6. Conclusions

This paper proposed a Dual-stream network using a Gabor filter image and gray-scale inspection image as input to detect stain defects. The Gabor filter image was selected according to the IDM score based on the GLCM and used as the input of the Dual-stream network for stain defect classification.

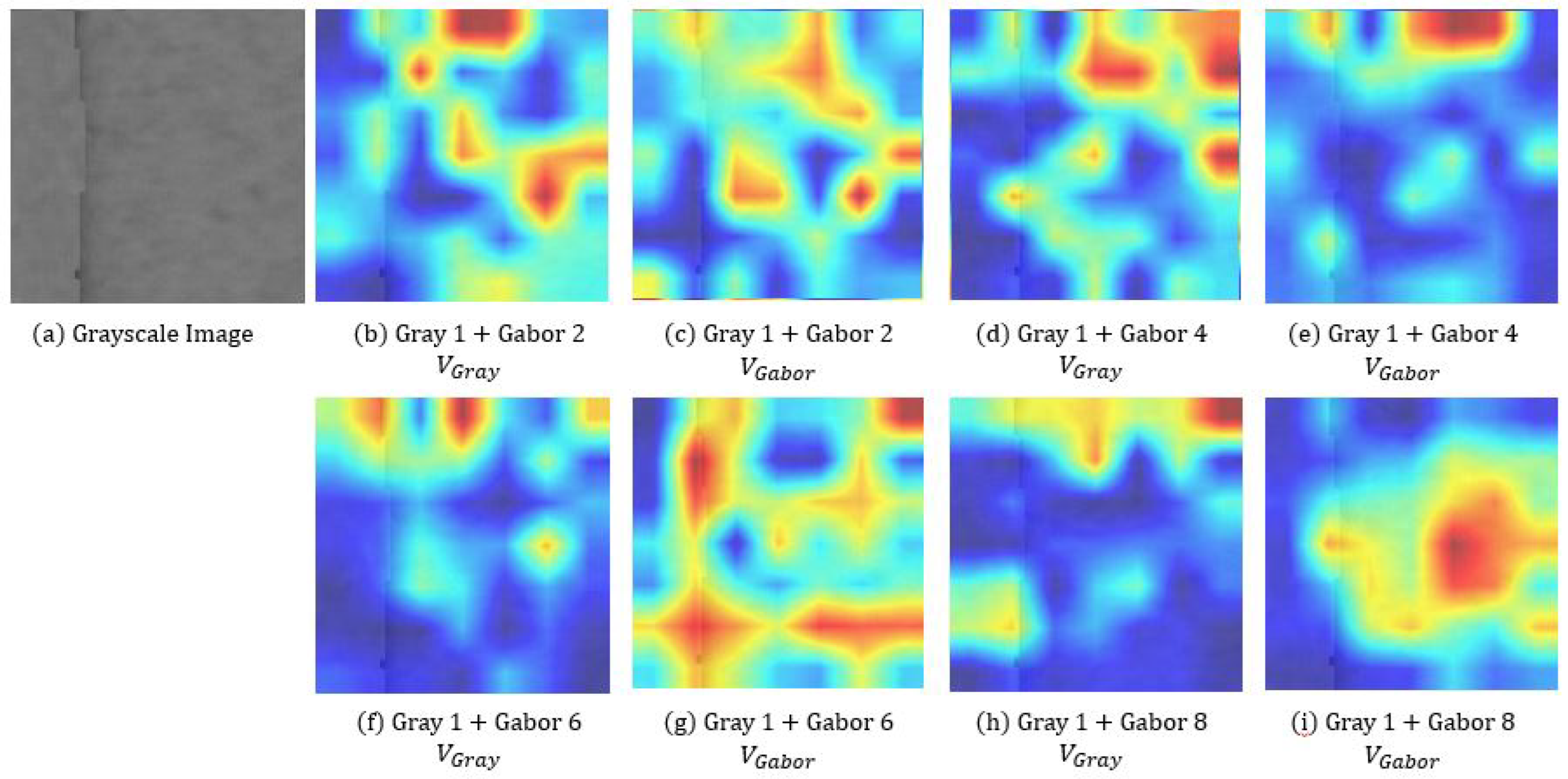

In the experiments, the methods using Gabor filter images were more accurate than the method without them. The Single-stream structure had higher accuracy on the MT dataset, and the Dual-stream structure had higher accuracy on the CCM dataset. This indicates that the performance of the Single-stream and Dual-stream structures can differ depending on the characteristics of the dataset (background, degree of stain). In addition, selecting N Gabor filter images based on the IDM value was more accurate than using all eight images without selection. This confirms that some Gabor filter images carry important information, while others lower the accuracy because of noise. Finally, the CAM images confirmed that the stream receiving the Gabor image as input locates the stain.

The disadvantage of the proposed method is that the number N of Gabor filter images to select depends on the dataset. Although using Gabor filter images was confirmed to be more accurate than not using them, the value of N must be determined for each dataset by directly comparing training and evaluation results. A method that always finds a good value would be preferable, but at present N has to be found through such comparisons.

Developing a method that computes optimized Gabor filter parameters for defect classification on a given dataset through deep learning, instead of using static Gabor filter parameters, is planned as a future study. In addition, a study on the structure of a CNN that effectively detects stain defects will be conducted.