Multirobot Task Planning Method Based on the Energy Penalty Strategy

Abstract

1. Introduction

2. Problem and Algorithm Description

3. Algorithm Model

4. GA for Multirobot Task Planning

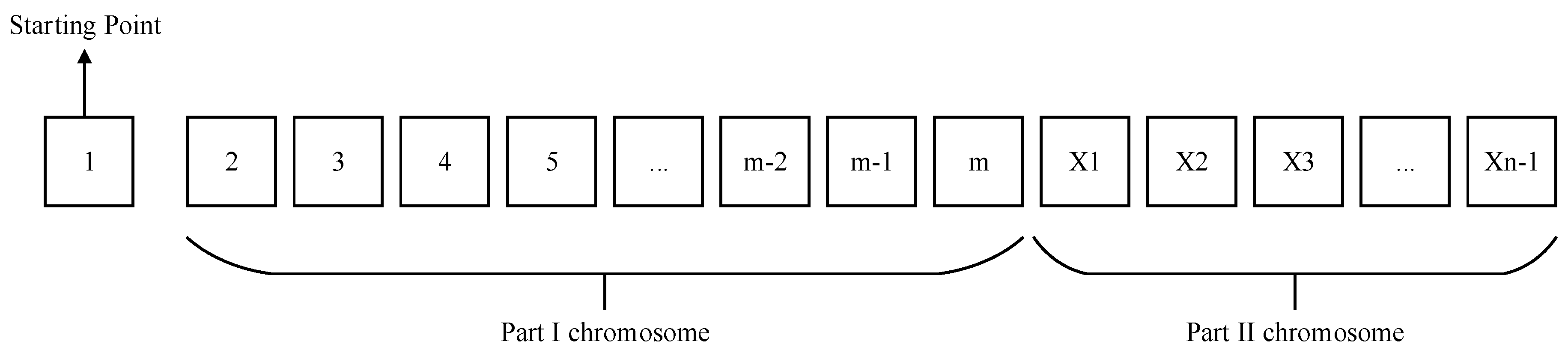

4.1. Coding Method

4.2. Population Initialization and Fitness Function

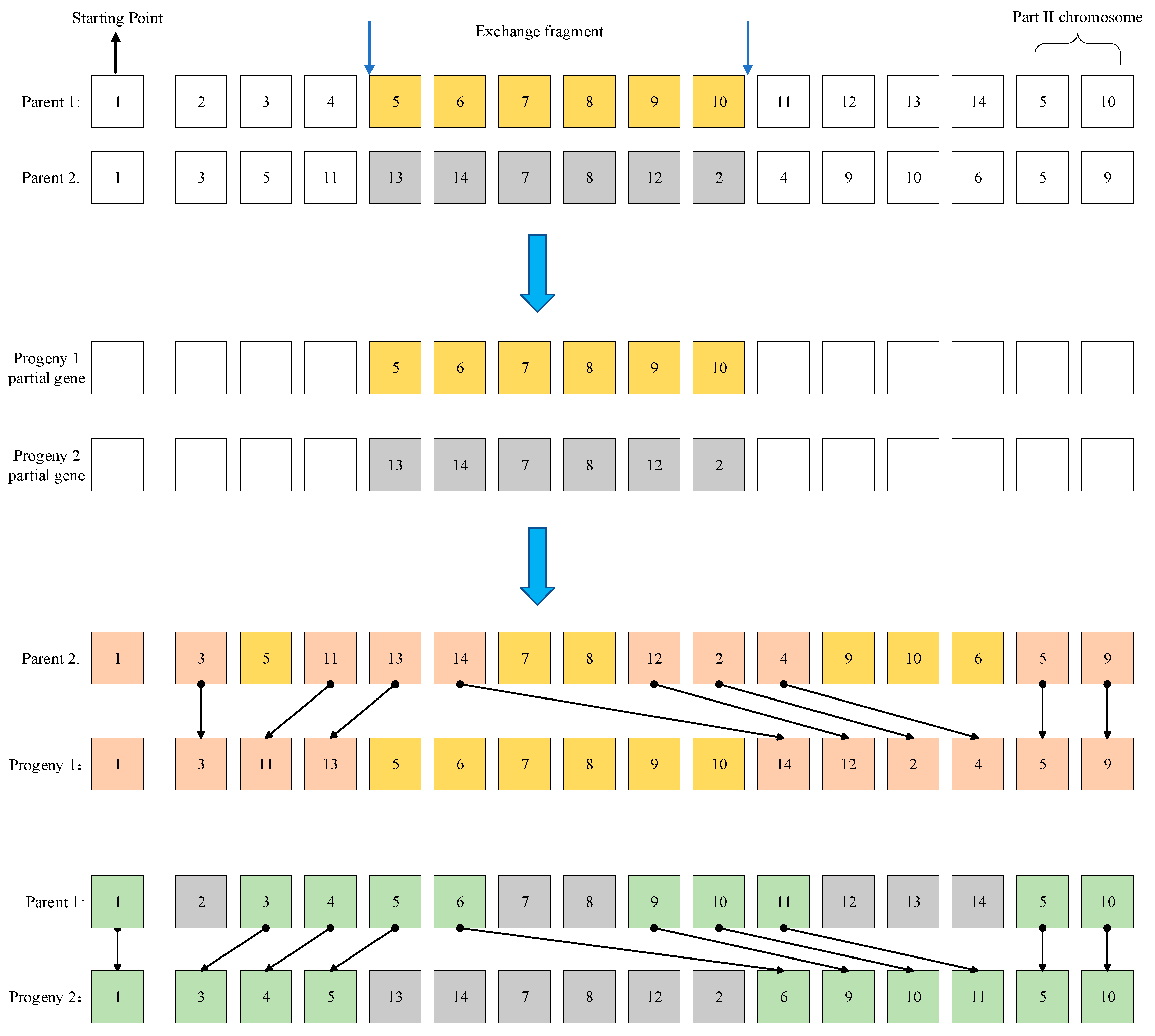

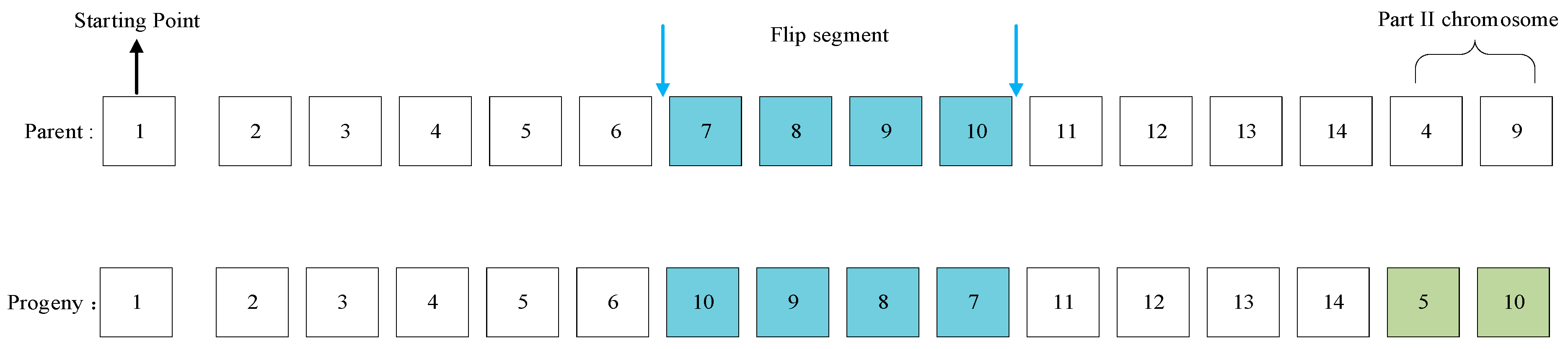

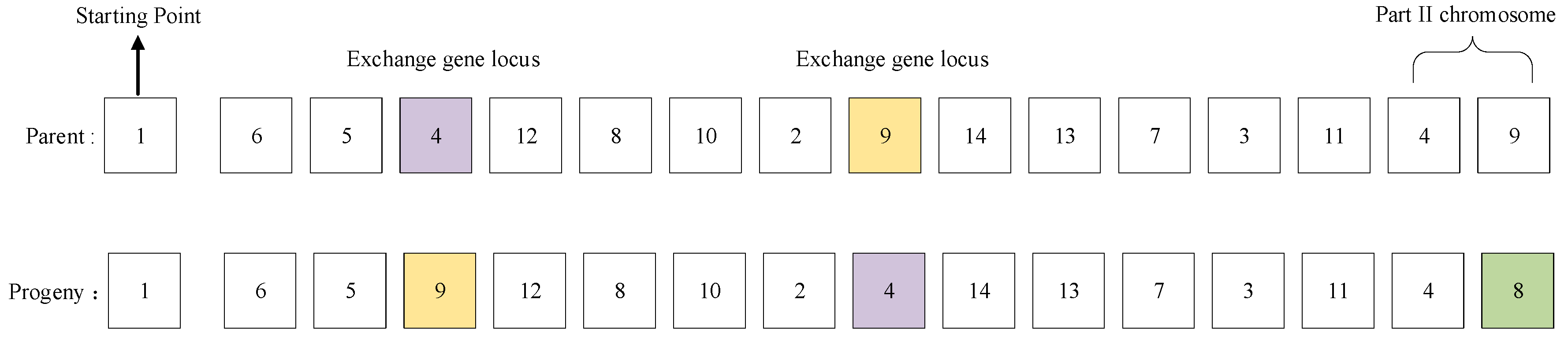

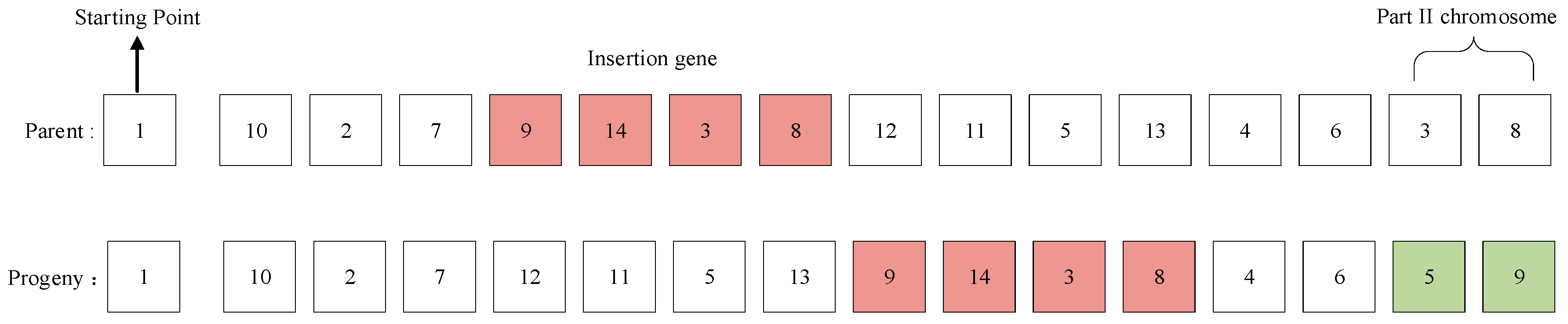

4.3. Genetic Manipulation

4.4. Algorithm Implementation

5. Simulation Experiment Analysis

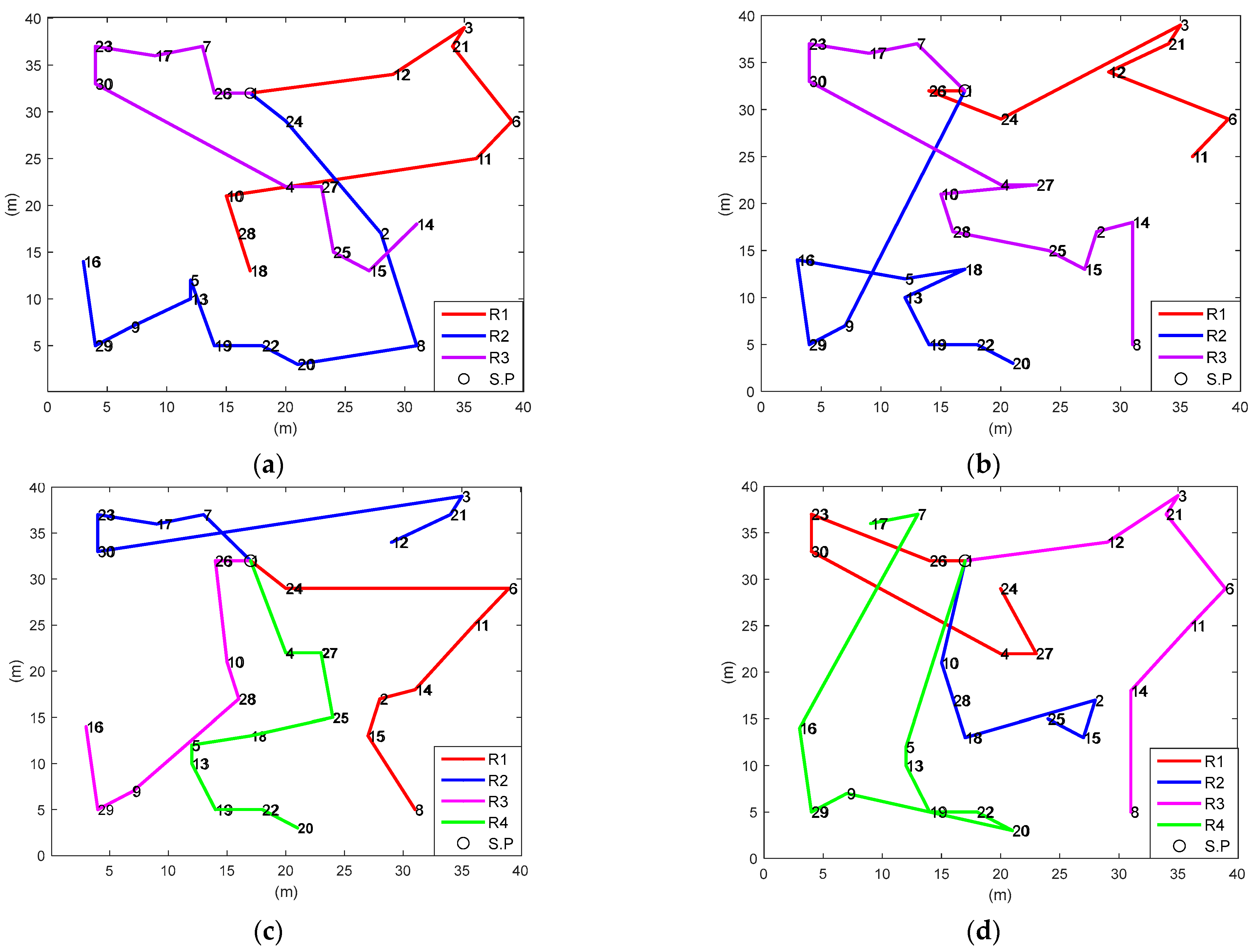

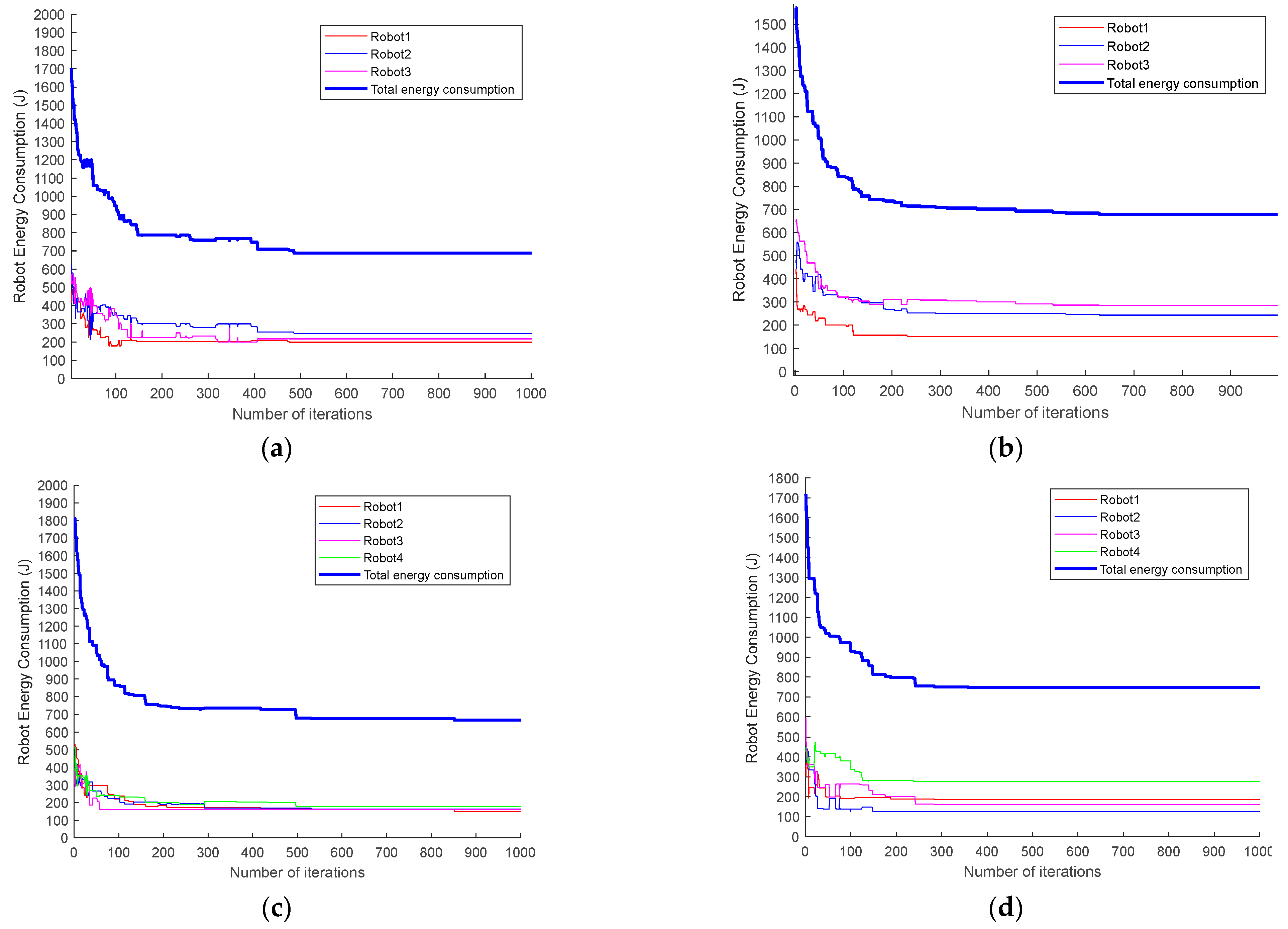

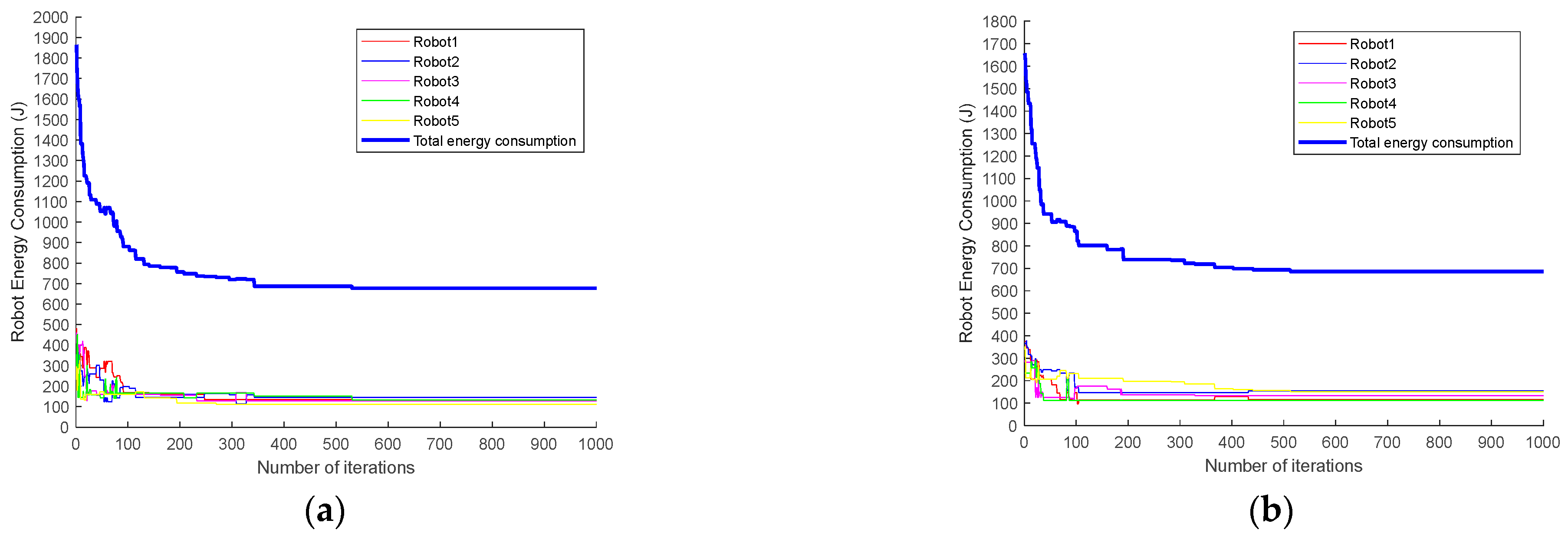

5.1. MRTA Optimization

5.2. Task Sequence Optimization of A* + GA

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MRTA | Multirobot task allocation |
| TSP | Traveling Salesman Problem |
| MTSP | Multiple Traveling Salesman Problem |
| VRP | Vehicle Routing Problem |
| GA | Genetic Algorithm |
| PE | Penalty Energy |
| OX | Order Cross |
| CX | Circular Cross |
| A* | A-STAR |
| TS | Task Sequence |
| TN | Task Number |

References

1. Zhang, F.; Lin, L. Architecture and Related Problems of Multi-Mobile Robot Coordination System. Robots 2001, 23, 554–558.
2. Zhang, Z.; Gong, S.; Xu, D.; Meng, Y.; Li, X.; Feng, G. Multi-Robot Task Assignment and Path Planning Algorithm. J. Harbin Eng. Univ. 2019, 40, 1753–1759.
3. Xiong, Q.; Dong, C.; Hong, Q. Multi-robot Task Allocation Method for Raw Material Supply Link in Intelligent Factory. Minicomicter Syst. 2022, 43, 1625–1630.
4. Lei, B.; Jin, Y.; Wang, Z.; Zhao, R.; Hu, F. Present Situation and Development of Warehouse Logistics Robot Technology. Mod. Manuf. Eng. 2021, 12, 143–153.
5. Song, W.; Gao, Y.; Shen, L.; Zhang, Y. A Task Allocation Algorithm for Multiple Robots Based on Near Field Subset Partitioning. Robots 2021, 43, 629–640.
6. Xu, S.; Wang, Z. Research Progress and Key Technologies of Fire Rescue Multi-Robot System. Sci. Technol. Innov. Appl. 2022, 12, 155–157+161.
7. Siwek, M.; Panasiuk, J.; Baranowski, L.; Kaczmarek, W.; Prusaczyk, P.; Borys, S. Identification of Differential Drive Robot Dynamic Model Parameters. Materials 2023, 16, 683.
8. Zhou, Z.; Zhu, P.; Zeng, Z.; Xiao, J.; Lu, H.; Zhou, Z. Robot navigation in a crowd by integrating deep reinforcement learning and online planning. Appl. Intell. 2022, 52, 15600–15616.
9. Fox, D.; Ko, J.; Konolige, K.; Limketkai, B.; Schulz, D.; Stewart, B. Distributed Multirobot Exploration and Mapping. Proc. IEEE 2006, 94, 1325–1339.
10. Vasconcelos, J.V.R.; Brandão, A.S.; Sarcinelli-Filho, M. Real-Time Path Planning for Strategic Missions. Appl. Sci. 2020, 10, 7773.
11. Hart, P.E.; Nilsson, N.J.; Raphael, B. A Formal Basis for the Heuristic Determination of Minimum Cost Paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.
12. Chaimowicz, L.; Sugar, T.; Kumar, V.; Campos, M.F.M. An Architecture for Tightly Coupled Multi-Robot Cooperation. In Proceedings of the 2001 ICRA IEEE International Conference on Robotics and Automation (Cat. No. 01CH37164), Seoul, Republic of Korea, 21–26 May 2001; Volume 3, pp. 2992–2997.
13. Liu, C.; Kroll, A. A Centralized Multi-Robot Task Allocation for Industrial Plant Inspection by Using A* and Genetic Algorithms. In Proceedings of the 11th International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 29 April–3 May 2012.
14. Turner, J.; Meng, Q.; Schaefer, G.; Whitbrook, A.; Soltoggio, A. Distributed Task Rescheduling with Time Constraints for the Optimization of Total Task Allocations in a Multirobot System. IEEE Trans. Cybern. 2018, 48, 2583–2597.
15. Zlot, R.; Stentz, A. Complex Task Allocation for Multiple Robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Barcelona, Spain, 18–22 April 2005; pp. 1515–1522.
16. Luo, L.; Chakraborty, N.; Sycara, K. Provably-Good Distributed Algorithm for Constrained Multi-Robot Task Assignment for Grouped Tasks. IEEE Trans. Robot. 2015, 31, 19–30.
17. Odili, J.B.; Noraziah, A.; Sidek, R.M. Swarm Intelligence Algorithms’ Solutions to the Travelling Salesman’s Problem. IOP Conf. Ser. Mater. Sci. Eng. 2020, 769, 1–10.
18. Jin, L.; Li, S.; La, H.M.; Zhang, X.; Hu, B. Dynamic Task Allocation in Multi-Robot Coordination for Moving Target Tracking: A distributed approach. Automatica 2019, 100, 75–81.
19. Jiang, G.; Lam, S.; Ning, F.; He, P.; Xie, J. Peak-Hour Vehicle Routing for First-Mile Transportation: Problem Formulation and Algorithms. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3308–3321.
20. Al-Dulaimi, B.F.; Ali, H.A. Enhanced Traveling Salesman Problem Solving by Genetic Algorithm Technique (TSPGA). Int. J. Math. Comput. Sci. 2008, 2, 123–129.
21. Zhang, C.; Zhou, Y.; Li, Y. Review of Multi-Robot Collaborative Navigation Technology. Unmanned Syst. Technol. 2020, 13, 26–34.
22. Yuan, Q.; Yi, G.; Hong, B.; Meng, X. Multi-Robot Task Allocation Using CNP Combines with Neural Network. Neural Comput. Appl. 2013, 23, 1909–1914.
23. Cao, R.; Li, S.; Ji, Y.; Xu, H.; Zhang, M.; Li, M. Task Planning of Multi-Machine Cooperative Work Based on Ant Colony Algorithm. Trans. Agric. Mach. Soc. 2019, 50, 34–39.
24. Shuai, H.; Huang, Z.; Wang, L.; Rui, Z.; Yu, W. An Optimal Task Decision Method for a Warehouse Robot with Multiple Tasks Based on Linear Temporal Logic. In Proceedings of the 2017 IEEE International Conference on Systems, Man and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1453–1458.
25. Yi, Z.; Wang, F.; Fu, F.; Su, Z. Multi-AGV Path Planning for Indoor Factory by Using Prioritized Planning and Improved Ant Algorithm. J. Eng. Technol. Sci. 2018, 50, 534–547.
26. Weerakoon, T.; Ishii, K.; Nassiraei, A. An Artificial Potential Field Based Mobile Robot Navigation Method to Prevent from Deadlock. J. Artif. Intell. Soft Comput. Res. 2015, 5, 189–203.
27. Tuazon, J.; Prado, K.; Cabial, N.; Enriquez, R.; Rivera, F.; Serrano, K. An Improved Collision Avoidance Scheme Using Artificial Potential Field with Fuzzy Logic. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 291–296.
28. Zhang, D.; Sun, X.; Fu, S.; Zheng, B. Multi-Robot Collaborative Path Planning Method in Intelligent Warehouse. Comput. Integr. Manuf. Syst. 2018, 24, 410–418.
29. Yuan, Y.; Ye, F.; Lai, Y.; Zhan, Y. Multi-AGV Path Planning Combined with Load Balancing and A* Algorithm. Comput. Eng. Appl. 2020, 56, 251–256.
30. Zhao, J.; Zhang, Y.; Ma, Z. Improvement and Validation of A-Star Algorithm for AGV Path Planning. Comput. Eng. Appl. 2018, 54, 222–228.
31. Zhang, Y.; Tan, Z.; Zhou, W.; Fang, D. Research on Stochastic Path Optimization Based on Shortest Distance Fitness. Intell. Comput. Appl. 2021, 11, 153–156.
32. Wu, Y.; Jiang, L.; Liu, Q. Multi-Travel Salesman Problem Solving Based on Parallel Genetic Algorithm. Microcomput. Appl. 2011, 27, 45–47+71.
33. Yu, D.; Yang, Y. Optimal Genetic Algorithm for Emergency Rescue Vehicle Scheduling at multiple disaster Sites. Comput. Syst. Appl. 2016, 25, 201–207.
34. Gao, X.; Huang, D.; Ding, S. PID Parameter Optimization Based on Penalty Function and Genetic Algorithm. J. Jilin Inst. Chem. Technol. 2021, 38, 57–60.
35. Zhu, G. C++ Implementation of Genetic Algorithm and Roulette Choice. J. Dongguan Univ. Technol. 2007, 14, 70–74.

m = 30, n = 3

| Algorithm | E(R1) (J) | E(R2) (J) | E(R3) (J) | Sum(E) (J) | Std. dev. of E (J) | T (s) |
|---|---|---|---|---|---|---|
| This paper | 199.2 | 246.4 | 217.1 | 662.7 | 23.8 | 10.4 |
| Basic GA | 150.0 | 243.4 | 285.1 | 678.5 | 69.2 | 7.6 |

m = 30, n = 4

| Algorithm | E(R1) (J) | E(R2) (J) | E(R3) (J) | E(R4) (J) | Sum(E) (J) | Std. dev. of E (J) | T (s) |
|---|---|---|---|---|---|---|---|
| This paper | 151.3 | 164.6 | 160.9 | 176.3 | 653.1 | 10.3 | 11.5 |
| Basic GA | 184.6 | 124.0 | 161.5 | 276.9 | 747.0 | 65.1 | 9.7 |

m = 40, n = 3

| Algorithm | E(R1) (J) | E(R2) (J) | E(R3) (J) | Sum(E) (J) | Std. dev. of E (J) | T (s) |
|---|---|---|---|---|---|---|
| This paper | 657.6 | 743.7 | 732.8 | 2134.1 | 46.9 | 19.2 |
| Basic GA | 762.3 | 614.9 | 850.4 | 2227.6 | 119.0 | 16.4 |

m = 40, n = 4

| Algorithm | E(R1) (J) | E(R2) (J) | E(R3) (J) | E(R4) (J) | Sum(E) (J) | Std. dev. of E (J) | T (s) |
|---|---|---|---|---|---|---|---|
| This paper | 632.3 | 570.1 | 558.0 | 568.0 | 2328.4 | 33.9 | 26.3 |
| Basic GA | 453.1 | 528.8 | 608.0 | 797.1 | 2387.0 | 147.8 | 22.6 |

| Algorithm | E(R1) (J) | E(R2) (J) | E(R3) (J) | E(R4) (J) | E(R5) (J) | Sum(E) (J) | Std. dev. of E (J) | T (s) |
|---|---|---|---|---|---|---|---|---|
| This paper | 146.0 | 145.0 | 128.3 | 132.8 | 112.2 | 664.3 | 13.8 | 13.6 |
| Paper [34] | 115.3 | 155.2 | 133.0 | 112.2 | 150.4 | 666.1 | 19.6 | 15.4 |

| Robot No. | L(R) (m) | E(R) (J) | P (%) |
|---|---|---|---|
| Robot1 | 202 | 840.75 | - |
| Robot2 | 232 | 957.45 | 5.56% |
| Robot3 | 186 | 922.95 | 1.75% |
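The P (%) entries in the table above are numerically consistent with the percentage by which a robot's energy exceeds the mean energy of the three robots, with "-" marking a robot at or below the mean. This reading is inferred from the tabulated numbers only, not from a definition in the text, so the sketch below should be taken as a plausibility check under that assumption; the function name `excess_over_mean` is hypothetical.

```python
def excess_over_mean(energies):
    """Percentage by which each energy exceeds the team mean.

    Assumption: robots at or below the mean get None, which
    corresponds to the "-" entries in the table.
    """
    mean = sum(energies) / len(energies)
    return [
        round(100.0 * (e - mean) / mean, 2) if e > mean else None
        for e in energies
    ]


# E(R) values for Robot1..Robot3, taken from the table above.
energies = [840.75, 957.45, 922.95]
print(excess_over_mean(energies))  # [None, 5.56, 1.75]
```

Under this assumption Robot2 and Robot3 lie 5.56% and 1.75% above the mean of 907.05 J, which matches the tabulated values.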

| Task Optimization | TS(R2) | L(R2) (m) | E(R2) (J) |
|---|---|---|---|
| Before | {(1),20,35,6,21,3,26,16,31,23,24,22,29,5,18} | 232 | 957.45 |
| After | {(1),29,20,35,6,21,3,26,16,31,23,24,22,5,18} | 224 | 936.15 |

| Task Optimization | E(R1) (J) | E(R2) (J) | E(R3) (J) | Sum(E) (J) | Std. dev. of E (J) |
|---|---|---|---|---|---|
| Before | 840.75 | 957.45 | 922.95 | 2721.15 | 60.0 |
| After | 840.75 | 936.15 | 922.95 | 2699.85 | 51.69 |
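The last two columns of the table above can be reproduced from the per-robot energies: Sum(E) is their total, and the final column agrees with their sample standard deviation (n - 1 in the denominator). The second identification is an inference from the numbers rather than a statement in the text. A minimal check, with `summarize` as a hypothetical helper name:

```python
import statistics


def summarize(energies):
    """Return (total energy, sample standard deviation), rounded to 2 decimals."""
    total = round(sum(energies), 2)
    # statistics.stdev uses the sample (n - 1) definition.
    spread = round(statistics.stdev(energies), 2)
    return total, spread


# Per-robot energies (J) before and after task-sequence optimization.
before = [840.75, 957.45, 922.95]
after = [840.75, 936.15, 922.95]

print(summarize(before))  # (2721.15, 59.95); the table lists 60.0
print(summarize(after))   # (2699.85, 51.69)
```

Only E(R2) changes between the two rows, so the drop in both the total and the spread comes entirely from reordering Robot2's task sequence.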

| Robot No. | L(R) (m) | E(R) (J) | P (%) |
|---|---|---|---|
| Robot1 | 86 | 307.80 | - |
| Robot2 | 102 | 343.05 | 10.71% |
| Robot3 | 91 | 278.70 | - |

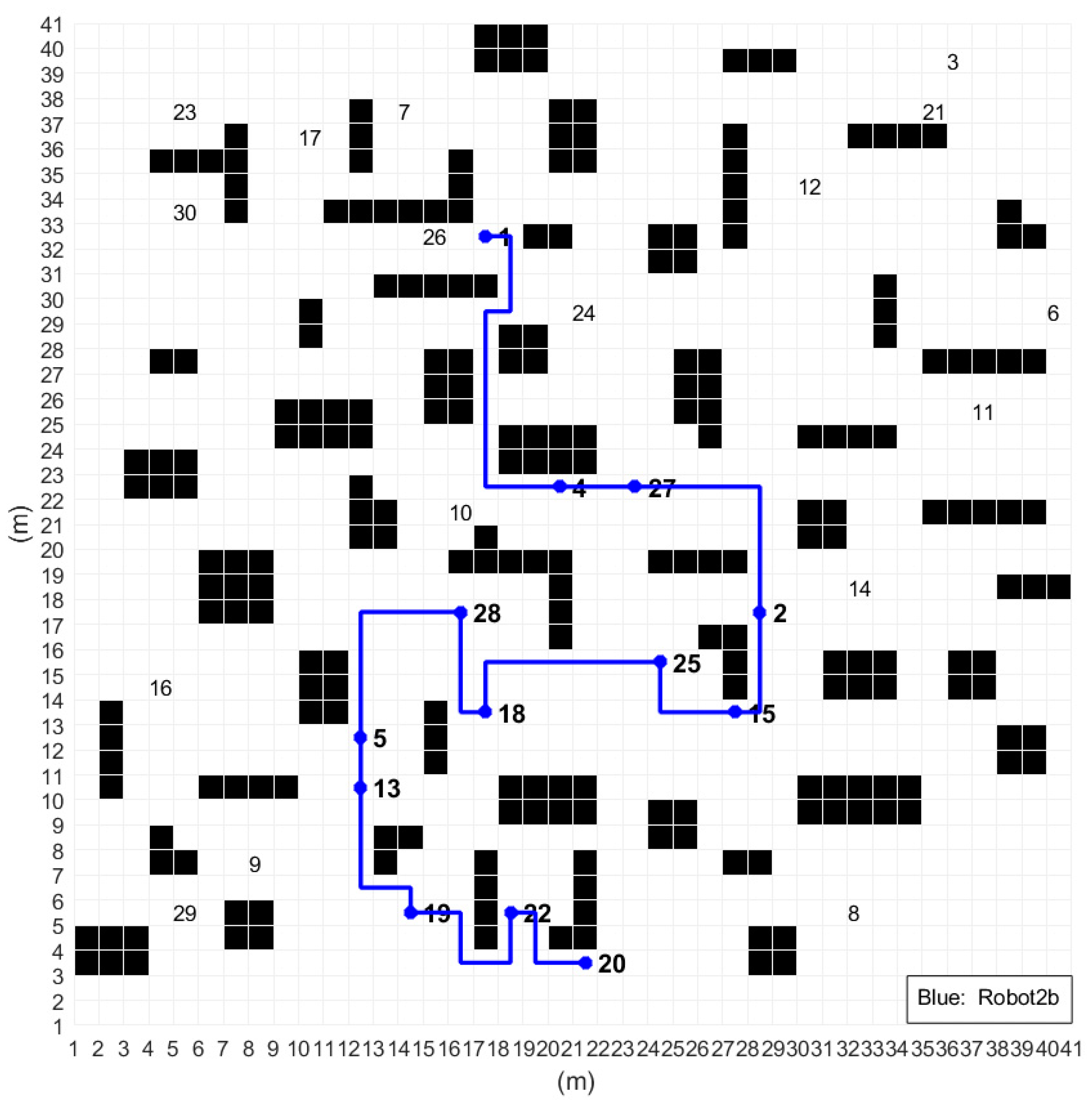

| Task Optimization | TS(R2) | L(R2) (m) | E(R2) (J) |
|---|---|---|---|
| Before | {(1),2,25,15,20,22,19,13,5,18,28,4,27} | 102 | 343.05 |
| After | {(1),4,27,2,15,25,18,28,5,13,19,22,20} | 83 | 283.50 |

| Task Optimization | E(R1) (J) | E(R2) (J) | E(R3) (J) | Sum(E) (J) | Std. dev. of E (J) |
|---|---|---|---|---|---|
| Before | 307.80 | 343.05 | 278.70 | 929.55 | 32.22 |
| After | 307.80 | 283.50 | 278.70 | 870.00 | 15.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liang, L.; Zhu, L.; Jia, W.; Cheng, X. Multirobot Task Planning Method Based on the Energy Penalty Strategy. Appl. Sci. 2023, 13, 4887. https://doi.org/10.3390/app13084887
