An Efficient Bidirectional Point Pyramid Attention Network for 3D Point Cloud Completion

Abstract

1. Introduction

- (1)

- We offer an encoder–decoder network for point cloud completion. A set of experiments proved the effectiveness and robustness of the proposed method on several challenging datasets.

- (2)

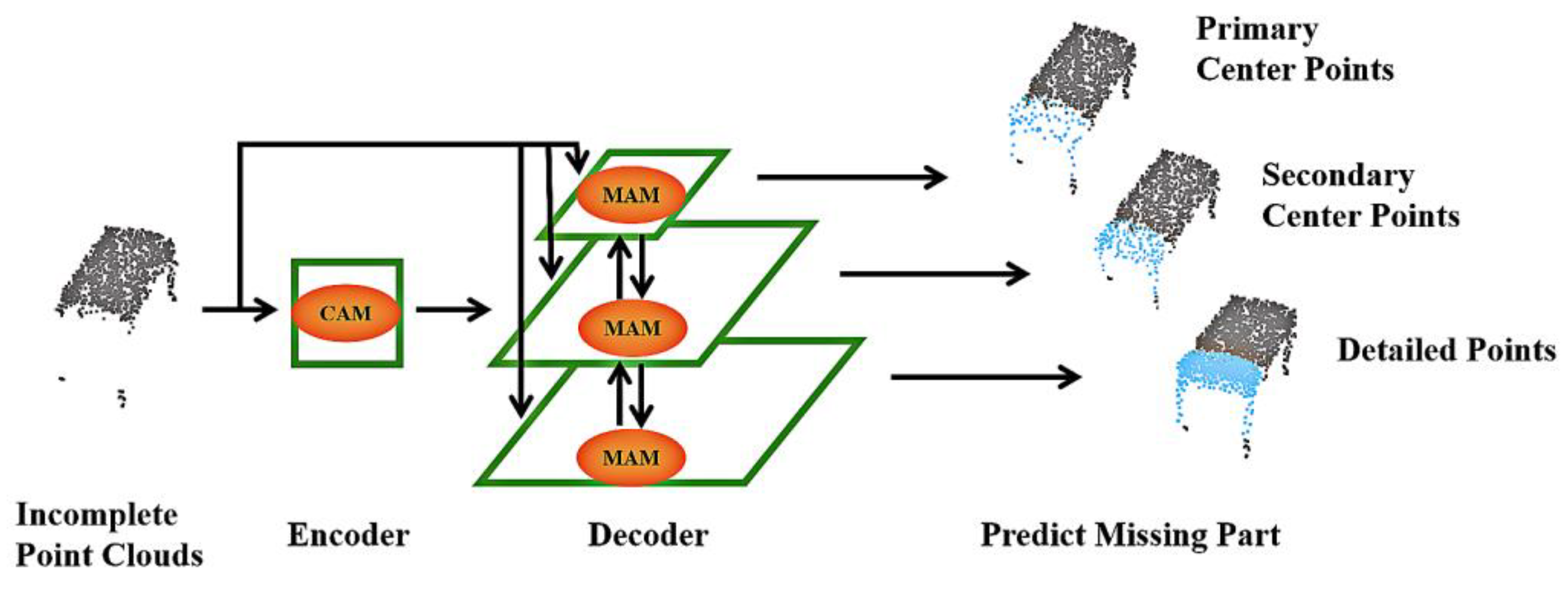

- We applied a Channelwise Attention Module (CAM) and a Mixed Attention Module (MAM) to introduce a reasonable weight to learn the importance of distinct features. While fusing different input features, the contributions of the fused output features are not equal. The attention mechanism allows for the network to infer the missing regions from incomplete point clouds, exploiting more effective geometric information.

- (3)

- We designed a simple and highly effective decoder that consists of skip connections and a Bidirectional Point Pyramid Attention Network (BiPPAN). BiPPAN applies top–down and bottom–up multiscale feature fusion. In fact, a multilayer pyramid network model was used on PF-Net [14]. However, a point cloud generation method propagating low-level features to improve the entire feature hierarchy has not yet been invented.
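The ideas behind contributions (2) and (3) can be illustrated with a minimal NumPy sketch: channel-wise attention in the squeeze-and-excitation style, followed by a top–down and then bottom–up fusion over three feature levels. This is not the authors' implementation. The function names `channel_attention` and `bidirectional_fusion`, the layer sizes, the random weights, and the use of plain addition for fusion are all assumptions made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """SE-style channel attention for per-point features of shape (N, C)."""
    squeezed = feat.mean(axis=0)             # (C,): average each channel over all points
    hidden = np.maximum(squeezed @ w1, 0.0)  # (C // r,): bottleneck layer with ReLU
    weights = sigmoid(hidden @ w2)           # (C,): one weight in (0, 1) per channel
    return feat * weights                    # rescale every point's channels


def bidirectional_fusion(levels):
    """Top-down then bottom-up additive fusion over same-shaped feature arrays.

    levels[0] is treated as the lowest level and levels[-1] as the top level
    (an assumption of this sketch).
    """
    n = len(levels)
    top_down = [None] * n
    top_down[-1] = levels[-1]
    for i in range(n - 2, -1, -1):           # top-down: pass high-level context downward
        top_down[i] = levels[i] + top_down[i + 1]
    fused = [top_down[0]]
    for i in range(1, n):                    # bottom-up: pass low-level detail upward
        fused.append(top_down[i] + fused[i - 1])
    return fused


rng = np.random.default_rng(0)
feat = rng.normal(size=(128, 64))            # 128 points, 64 channels (illustrative sizes)
w1 = rng.normal(size=(64, 16)) * 0.1         # random stand-ins for learned weights
w2 = rng.normal(size=(16, 64)) * 0.1
attended = channel_attention(feat, w1, w2)
print(attended.shape)                        # (128, 64)

levels = [np.full(4, 1.0), np.full(4, 2.0), np.full(4, 3.0)]
print([float(f[0]) for f in bidirectional_fusion(levels)])  # [6.0, 11.0, 14.0]
```

In the toy fusion, every output level ends up containing the lowest-level feature, which is the sense in which low-level information reaches the whole hierarchy. A trained network would replace the plain additions with learned, attention-weighted combinations.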

2. Related Work

2.1. 3D Shape Completion

2.2. Multiscale Feature Representations

3. Methods

3.1. Mixed Attention Module

3.2. Point Feature Encoder

3.3. Point Pyramid Decoder

3.4. Loss Function

4. Experiments

4.1. Data Generation and Model Training

4.2. Completion Results on ShapeNet

4.3. Ablation Study

4.4. Robustness Test

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wen, X.; Han, Z.; Cao, Y.-P.; Wan, P.; Zheng, W.; Liu, Y. Cycle4completion: Unpaired point cloud completion using cycle transformation with missing region coding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13080–13089.

- Sun, Y.; Wang, Y.; Liu, Z.; Siegel, J.E.; Sarma, S.E. PointGrow: Autoregressively Learned Point Cloud Generation with Self-Attention. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020.

- Chen, C.; Li, O.; Tao, D.; Barnett, A.; Rudin, C.; Su, J.K. This looks like that: Deep learning for interpretable image recognition. In Advances in Neural Information Processing Systems; NeurIPS: San Diego, CA, USA, 2019; Volume 32.

- Santhanavijayan, A.; Kumar, D.N.; Deepak, G. A semantic-aware strategy for automatic speech recognition incorporating deep learning models. In Intelligent System Design; Springer: Singapore, 2021; pp. 247–254.

- Pandey, B.; Pandey, D.K.; Mishra, B.P.; Rhmann, W. A comprehensive survey of deep learning in the field of medical imaging and medical natural language processing: Challenges and research directions. J. King Saud Univ. Comput. Inf. Sci. 2021, 34, 5083–5099.

- Zhang, W.; Li, H.; Li, Y.; Liu, H.; Chen, Y.; Ding, X. Application of deep learning algorithms in geotechnical engineering: A short critical review. Artif. Intell. Rev. 2021, 54, 5633–5673.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

- Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. Shapenet: An information-rich 3d model repository. Comput. Sci. 2015.

- Cui, Y.; Chen, R.; Chu, W.; Chen, L.; Tian, D.; Li, Y.; Cao, D. Deep learning for image and point cloud fusion in autonomous driving: A review. IEEE Trans. Intell. Transp. Syst. 2021, 23, 722–739.

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3d point clouds: A survey. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE: Piscataway Township, NJ, USA, 2020; Volume 43, pp. 4338–4364.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Alliegro, A.; Valsesia, D.; Fracastoro, G.; Magli, E.; Tommasi, T. Denoise and contrast for category agnostic shape completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4629–4638.

- Xiang, P.; Wen, X.; Liu, Y.-S.; Cao, Y.-P.; Wan, P.; Zheng, W.; Han, Z. Snowflakenet: Point cloud completion by snowflake point deconvolution with skip-transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 5499–5509.

- Huang, Z.; Yu, Y.; Xu, J.; Ni, F.; Le, X. PF-Net: Point Fractal Network for 3D Point Cloud Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Yuan, W.; Khot, T.; Held, D.; Mertz, C.; Hebert, M. PCN: Point Completion Network. In Proceedings of the International Conference on 3D Vision, Verona, Italy, 5–8 September 2018.

- Sarmad, M.; Lee, H.J.; Kim, Y.M. RL-GAN-Net: A Reinforcement Learning Agent Controlled GAN Network for Real-Time Point Cloud Shape Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Hu, T.; Han, Z.; Shrivastava, A.; Zwicker, M. Render4Completion: Synthesizing Multi-View Depth Maps for 3D Shape Completion. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop, Seoul, Korea, 27–28 October 2019.

- Huang, T.; Zou, H.; Cui, J.; Yang, X.; Wang, M.; Zhao, X.; Zhang, J.; Yuan, Y.; Xu, Y.; Liu, Y. Rfnet: Recurrent forward network for dense point cloud completion. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12508–12517.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Miculicich, L.; Ram, D.; Pappas, N.; Henderson, J. Document-level neural machine translation with hierarchical attention networks. In Empirical Methods in Natural Language Processing; Association for Computational Linguistics: Brussels, Belgium, 2018.

- Guo, M.-H.; Xu, T.-X.; Liu, J.-J.; Liu, Z.-N.; Jiang, P.-T.; Mu, T.-J.; Zhang, S.H.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Wen, X.; Li, T.; Han, Z.; Liu, Y.S. Point Cloud Completion by Skip-attention Network with Hierarchical Folding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Yu, X.; Rao, Y.; Wang, Z.; Liu, Z.; Lu, J.; Zhou, J. Pointr: Diverse point cloud completion with geometry-aware transformers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Montreal, QC, Canada, 10–17 October 2021; pp. 12498–12507.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE: Piscataway Township, NJ, USA, 2018.

- Fan, H.; Su, H.; Guibas, L. A Point Set Generation Network for 3D Object Reconstruction from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Yi, L.; Kim, V.G.; Ceylan, D.; Shen, I.; Yan, M.; Su, H.; Lu, C.; Huang, Q.; Sheffer, A.; Guibas, L. A scalable active framework for region annotation in 3D shape collections. ACM Trans. Graph. 2016, 35, 1–12.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning Representations and Generative Models for 3D Point Clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Zhao, Y.; Birdal, T.; Deng, H.; Tombari, F. 3D Point Capsule Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Wang, J.; Cui, Y.; Guo, D.; Li, J.; Liu, Q.; Shen, C. Pointattn: You only need attention for point cloud completion. arXiv 2022, arXiv:2203.08485.

- Bai, Y.; Wang, X.; Ang, M.H., Jr.; Rus, D. BIMS-PU: Bi-Directional and Multi-Scale Point Cloud Upsampling. In IEEE Robotics and Automation Letters; IEEE: Piscataway Township, NJ, USA, 2022; pp. 7447–7454.

| Category | LGAN-AE | PCN | 3D Capsule | PF-Net | BiPPAN |
|---|---|---|---|---|---|
| Airplane | 3.357/1.130 | 5.060/1.243 | 2.676/1.401 | 1.091/1.070 | 0.964/0.875 |
| Bag | 5.707/5.303 | 3.251/4.314 | 5.228/4.202 | 3.929/3.768 | 2.864/2.823 |
| Cap | 8.968/4.608 | 7.015/4.240 | 11.04/4.739 | 5.290/4.800 | 4.371/3.489 |
| Car | 4.531/2.518 | 2.741/2.123 | 5.944/3.508 | 2.489/1.839 | 2.28/1.681 |
| Chair | 7.359/2.339 | 3.952/2.301 | 3.049/2.207 | 2.074/1.824 | 1.758/1.442 |
| Guitar | 0.838/0.536 | 1.419/0.689 | 0.625/0.662 | 0.456/0.429 | 0.377/0.376 |
| Lamp | 8.464/3.627 | 11.61/7.139 | 9.912/5.847 | 5.122/3.460 | 3.731/2.513 |
| Laptop | 7.649/1.413 | 3.070/1.422 | 2.129/1.733 | 1.247/0.997 | 1.118/0.864 |
| Motorbike | 4.914/2.036 | 4.962/1.922 | 8.617/2.708 | 2.206/1.775 | 1.931/1.562 |
| Mug | 6.139/4.735 | 3.590/3.591 | 5.155/5.168 | 3.138/3.238 | 3.036/2.914 |
| Pistol | 3.944/1.424 | 4.484/1.414 | 5.980/1.782 | 1.122/1.055 | 0.929/0.831 |
| Skateboard | 5.613/1.683 | 3.025/1.740 | 11.49/2.044 | 1.136/1.337 | 1.007/1.029 |
| Table | 2.658/2.484 | 2.503/2.452 | 3.929/3.098 | 2.235/1.934 | 1.744/1.626 |
| Mean | 5.395/2.603 | 4.360/2.661 | 5.829/3.008 | 2.426/2.117 | 2.008/1.694 |

| Category | PF-Net: Pred → GT | PF-Net: GT → Pred | BiPPAN: Pred → GT | BiPPAN: GT → Pred |
|---|---|---|---|---|
| Airplane | 1.084 | 1.119 | 0.963 | 0.872 |
| Bag | 3.979 | 4.668 | 2.83 | 2.624 |
| Cap | 5.254 | 4.897 | 4.004 | 3.614 |
| Car | 2.548 | 1.914 | 2.316 | 1.634 |
| Chair | 2.154 | 2.019 | 1.738 | 1.428 |
| Earphone | 6.003 | 8.058 | 3.194 | 3.690 |
| Guitar | 0.464 | 0.546 | 0.377 | 0.382 |
| Knife | 0.555 | 0.563 | 0.464 | 0.465 |
| Lamp | 4.943 | 3.883 | 3.863 | 2.557 |
| Laptop | 1.309 | 1.072 | 1.135 | 0.888 |
| Motorbike | 2.328 | 1.836 | 1.944 | 1.581 |
| Mug | 3.080 | 3.580 | 3.147 | 2.893 |
| Pistol | 1.284 | 1.053 | 0.920 | 0.811 |
| Rocket | 1.052 | 0.762 | 0.853 | 0.620 |
| Skateboard | 1.196 | 1.362 | 1.025 | 1.004 |
| Table | 2.305 | 2.123 | 1.760 | 1.629 |
| Mean | 2.471 | 2.466 | 1.908 | 1.668 |
| Params (×10⁶) | 76.571 | | 11.763 | |
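As a quick arithmetic check on the table above, the short script below recomputes the BiPPAN "Mean" row as the unweighted average of the 16 per-category values and compares the two parameter counts. The numbers are copied from the table. The variable names are illustrative, and treating the mean as a plain arithmetic average is an assumption that the reported values happen to agree with.

```python
# BiPPAN per-category errors copied from the table, Airplane through Table.
pred_to_gt = [0.963, 2.83, 4.004, 2.316, 1.738, 3.194, 0.377, 0.464,
              3.863, 1.135, 1.944, 3.147, 0.920, 0.853, 1.025, 1.760]
gt_to_pred = [0.872, 2.624, 3.614, 1.634, 1.428, 3.690, 0.382, 0.465,
              2.557, 0.888, 1.581, 2.893, 0.811, 0.620, 1.004, 1.629]

mean_pred_to_gt = sum(pred_to_gt) / len(pred_to_gt)
mean_gt_to_pred = sum(gt_to_pred) / len(gt_to_pred)

# Parameter counts in millions, from the last row of the table.
pfnet_params, bippan_params = 76.571, 11.763
param_ratio = pfnet_params / bippan_params

print(round(mean_pred_to_gt, 3))  # 1.908
print(round(mean_gt_to_pred, 3))  # 1.668
print(round(param_ratio, 2))      # 6.51
```

Both recomputed means match the reported row, and the parameter counts imply that PF-Net is about 6.5 times larger than BiPPAN.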

| Model | Table (Simple) | Chair (Simple) | Airplane (Simple) | Bed (Moderate) | Camera (Moderate) | Rifle (Moderate) | Birdhouse (Hard) | Bag (Hard) | Keyboard (Hard) | CD-S | CD-M | CD-H | CD-Ave |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PF-Net | 1.963/1.882 | 2.101/1.975 | 1.162/1.414 | 3.327/4.742 | 4.827/5.613 | 1.235/1.029 | 3.689/3.650 | 3.896/3.107 | 1.911/1.006 | 1.845/1.737 | 2.464/2.142 | 2.744/2.362 | 2.463/2.150 |
| BiPPAN | 1.386/1.156 | 1.591/1.177 | 0.884/0.874 | 2.741/1.987 | 3.258/2.321 | 0.541/0.488 | 2.015/2.770 | 1.889/1.289 | 0.766/0.594 | 1.449/1.134 | 1.801/1.301 | 1.647/1.245 | 1.738/1.274 |

| Model | Modules enabled (AM, BiPPN, Skip-C) | Pred → GT (×10³) | GT → Pred (×10³) |
|---|---|---|---|
| A | none | 2.498 | 2.300 |
| B | two of three | 2.125 | 1.869 |
| C | two of three | 2.242 | 1.837 |
| D | two of three | 2.004 | 2.011 |
| BiPPAN | all three | 1.908 | 1.668 |

| CD (×10³) by missing ratio | 25%: Pred → GT | 25%: GT → Pred | 50%: Pred → GT | 50%: GT → Pred | 75%: Pred → GT | 75%: GT → Pred |
|---|---|---|---|---|---|---|
| Airplane | 0.963 | 0.872 | 0.971 | 0.809 | 0.968 | 0.896 |
| Car | 2.316 | 1.634 | 2.494 | 1.786 | 2.479 | 1.841 |
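The "Pred → GT" and "GT → Pred" columns in the tables are the two directed terms of the Chamfer distance (CD). A minimal sketch of how such directed errors can be computed follows. It assumes squared Euclidean nearest-neighbor distances averaged over the source points, which is a common convention but is not confirmed by this section. The function name `directed_cd` and the toy point sets are hypothetical.

```python
import numpy as np


def directed_cd(src, dst):
    """Mean squared distance from each point in src to its nearest point in dst."""
    diff = src[:, None, :] - dst[None, :, :]  # (N, M, 3) pairwise differences
    sq_dist = np.sum(diff ** 2, axis=-1)      # (N, M) squared distances
    return sq_dist.min(axis=1).mean()         # nearest neighbor per src point, then average


# Toy example: the prediction misses one ground-truth point.
pred = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])

pred_to_gt = directed_cd(pred, gt)  # every predicted point lies on the ground truth
gt_to_pred = directed_cd(gt, pred)  # the uncovered ground-truth point adds error

print(pred_to_gt)            # 0.0
print(round(gt_to_pred, 4))  # 1.3333
```

In this toy case Pred → GT is zero because every predicted point is accurate, while GT → Pred is positive because part of the ground truth is not covered. Reporting both directions separates the accuracy of the predicted points from how completely they cover the target shape.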

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Xiao, Y.; Gang, J.; Yu, Q. An Efficient Bidirectional Point Pyramid Attention Network for 3D Point Cloud Completion. Appl. Sci. 2023, 13, 4897. https://doi.org/10.3390/app13084897