AR Long-Term Tracking Combining Multi-Attention and Template Updating

Abstract

1. Introduction

2. Related Work

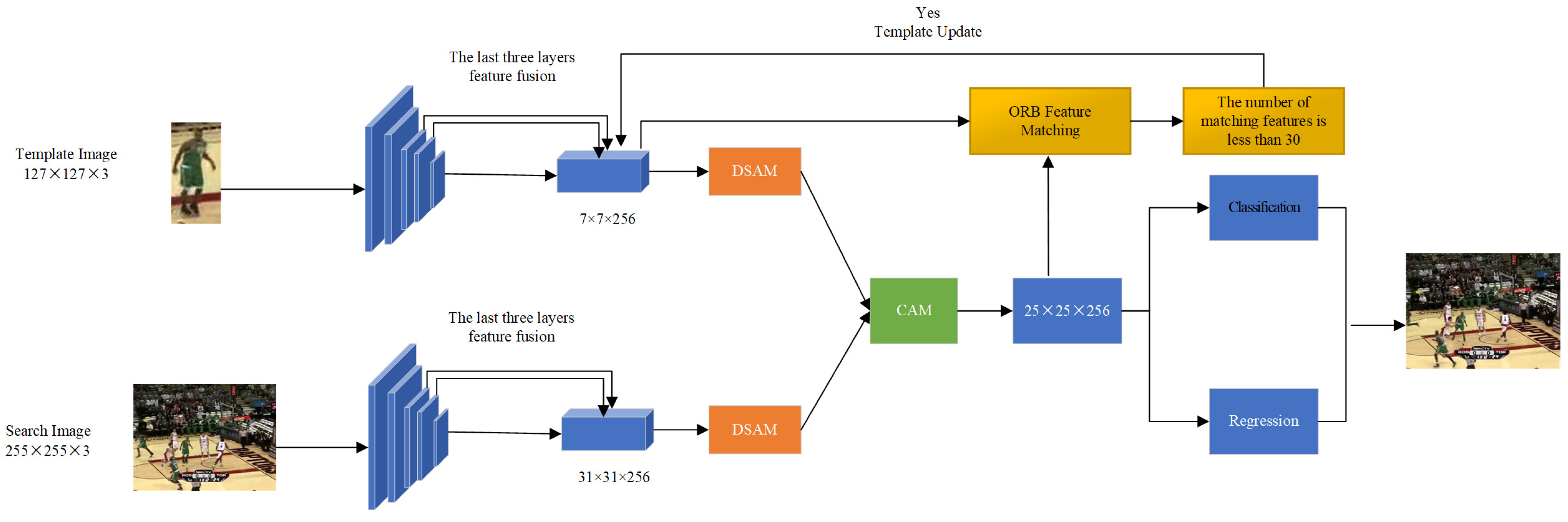

3. Methods

3.1. Backbone

- The effective stride of Res4 and Res5 is reduced to 8 pixels.

- To obtain a higher feature resolution, we set the stride of the downsampling convolution in the last stage of ResNet-50 to 1.

- A 1 × 1 convolution reduces each output to 256 channels, which lowers the number of parameters and gives all outputs the same channel dimension.

- Res3, Res4, and Res5 are fused successively, so that shallow and deep features are combined layer by layer, yielding more refined features and improving the accuracy of subsequent tracking.
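The stride and channel arithmetic behind these changes can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the standard ResNet-50 layout (a stem with total stride 4, stage strides 1, 2, 2, 2 for Res2 to Res5, and output widths of 512, 1024, and 2048 channels for Res3 to Res5), none of which is stated in the text. The function names are hypothetical, and the 1 × 1 convolution is assumed to have no bias.

```python
def effective_strides(stem_stride, stage_strides):
    """Return the cumulative stride at the output of each residual stage."""
    strides = []
    total = stem_stride
    for s in stage_strides:
        total *= s
        strides.append(total)
    return strides


def pointwise_conv_params(in_channels, out_channels=256):
    """Weight count of a bias-free 1 x 1 convolution (assumption: no bias)."""
    return in_channels * out_channels


# Assumed standard ResNet-50: 7x7 conv (stride 2) + max-pool (stride 2) = stride 4.
STEM_STRIDE = 4

# Res2..Res5 strides in the standard network versus the modified backbone,
# where the downsampling in Res4 and Res5 is removed.
standard = effective_strides(STEM_STRIDE, [1, 2, 2, 2])
modified = effective_strides(STEM_STRIDE, [1, 2, 1, 1])
print(standard)  # [4, 8, 16, 32]
print(modified)  # [4, 8, 8, 8]

# Assumed ResNet-50 output widths for the three fused stages.
RESNET50_CHANNELS = {"Res3": 512, "Res4": 1024, "Res5": 2048}
for name, channels in RESNET50_CHANNELS.items():
    print(name, pointwise_conv_params(channels))
```

With every fused stage at stride 8 and 256 channels, the three feature maps share the same spatial size and depth, which is what makes layer-by-layer fusion straightforward.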

3.2. Feature Fusion

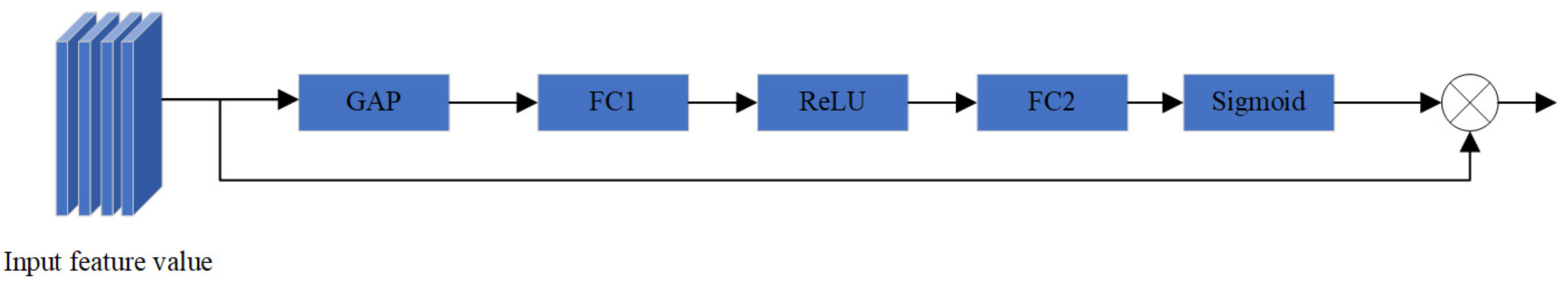

3.2.1. Dual Self-Attention Module

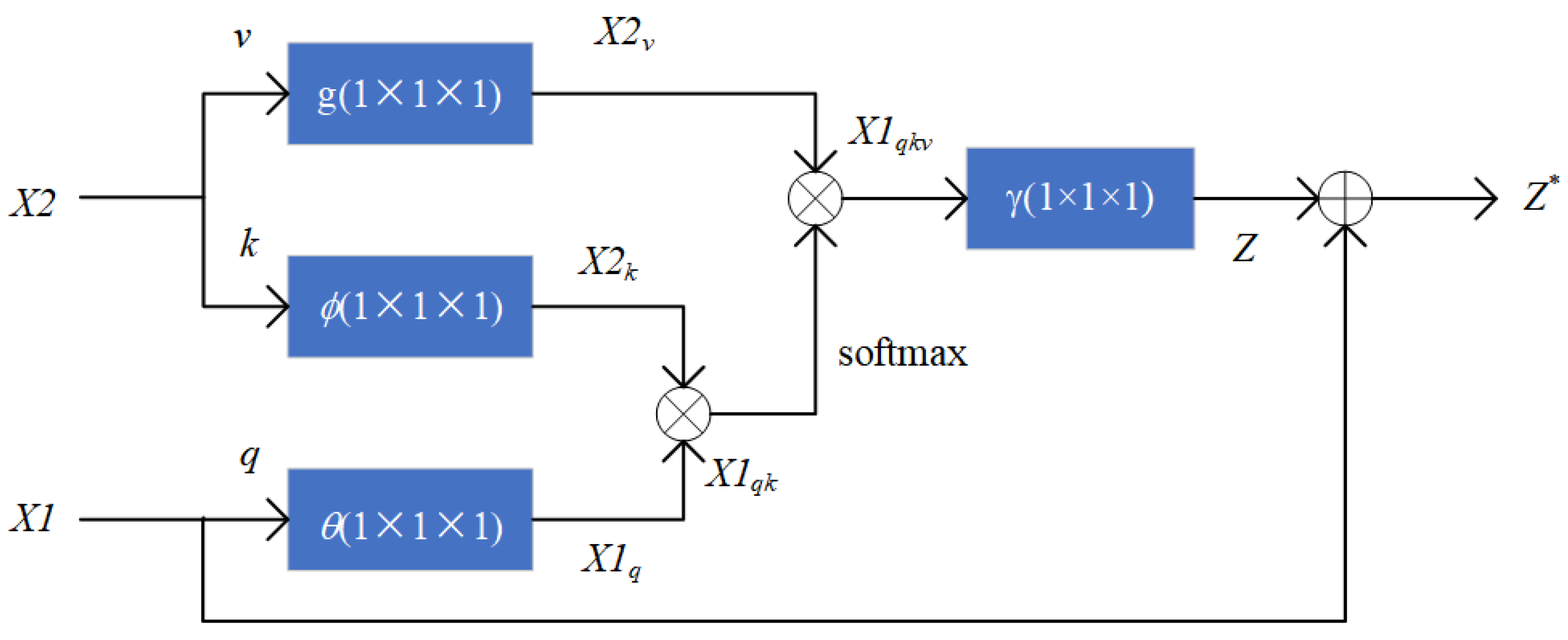

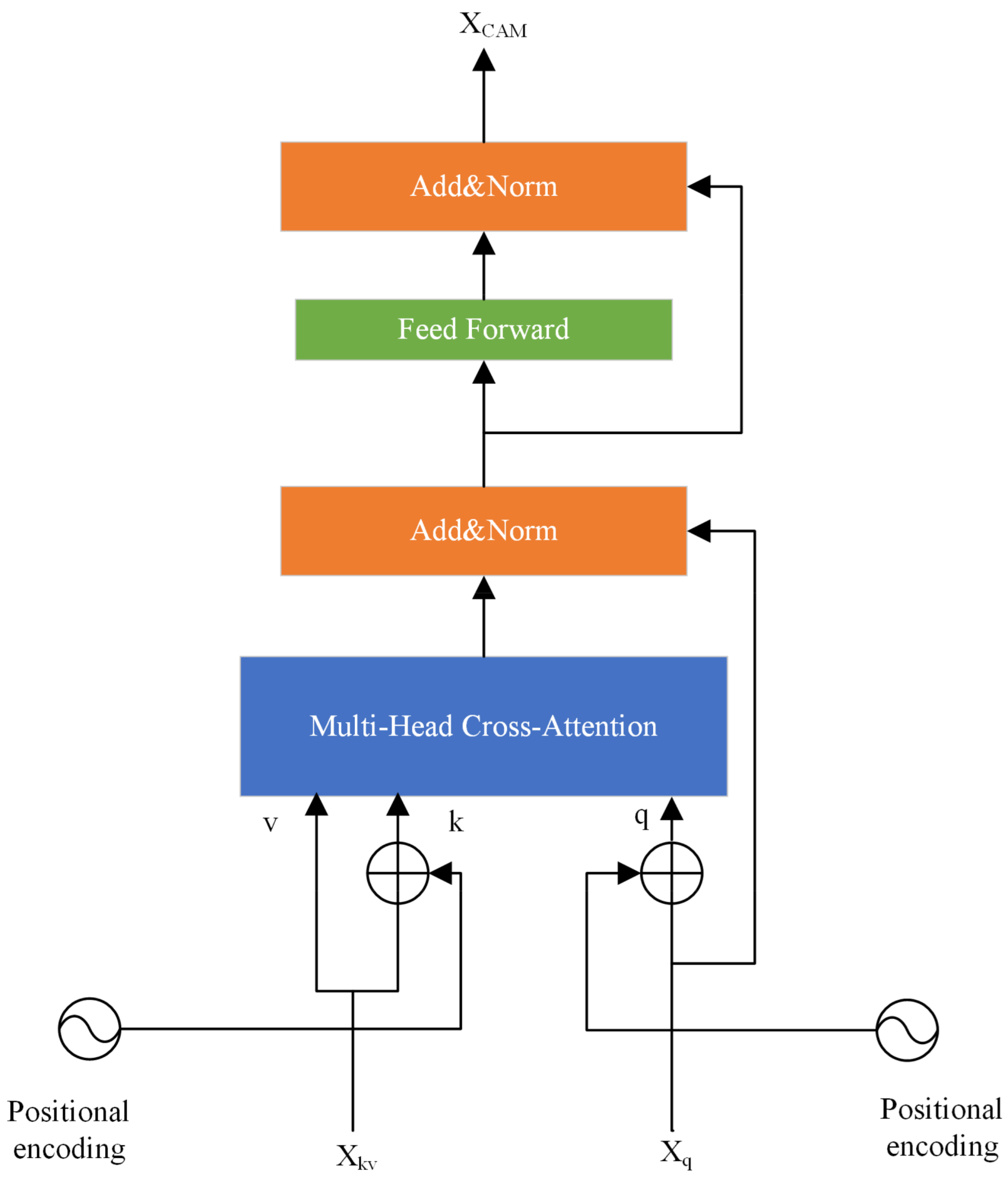

3.2.2. Cross-Attention Module

3.3. Template Updating Based on a Matching Feature Point Threshold

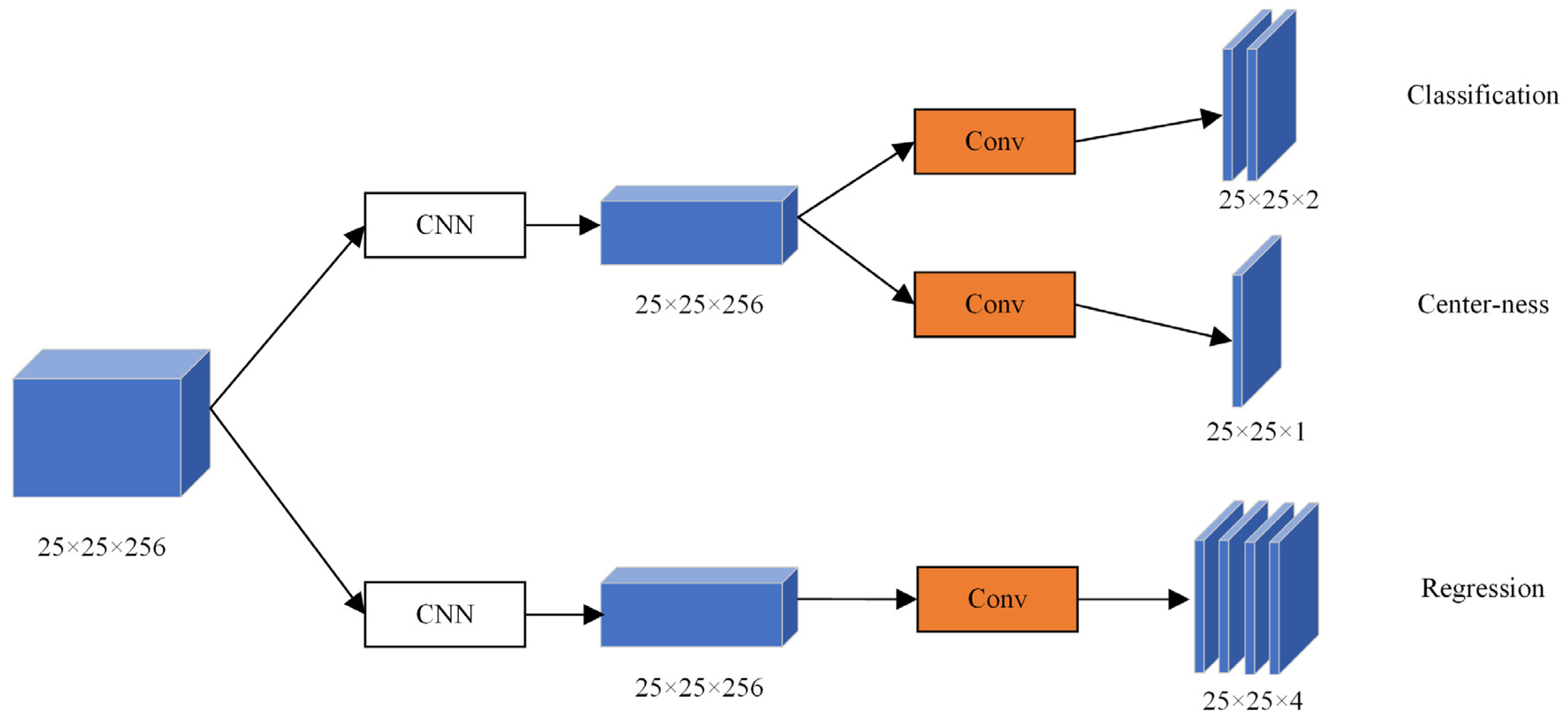

3.4. Classification and Regression Network

3.5. Loss

4. Experimental Analysis

4.1. Implementation Details

4.2. Analysis of Results

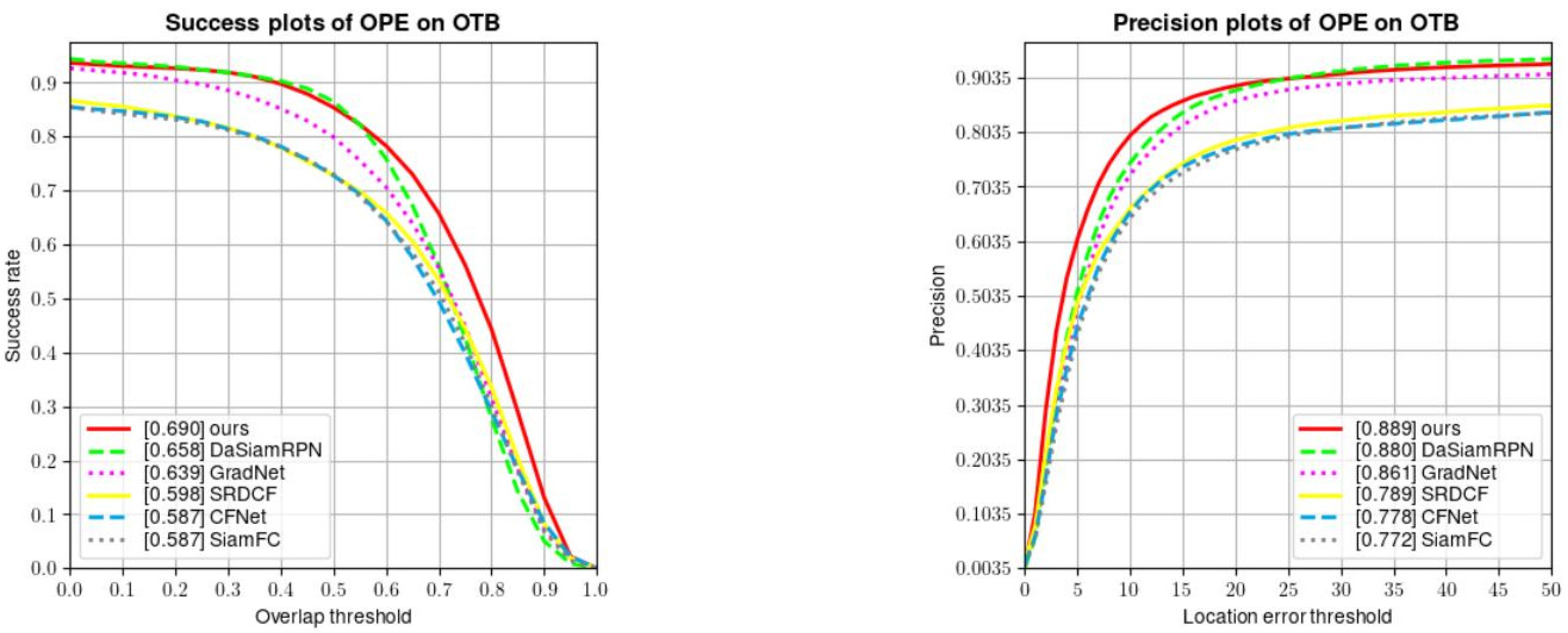

4.2.1. Quantitative Analysis

4.2.2. Visualization of the Tracking Effect

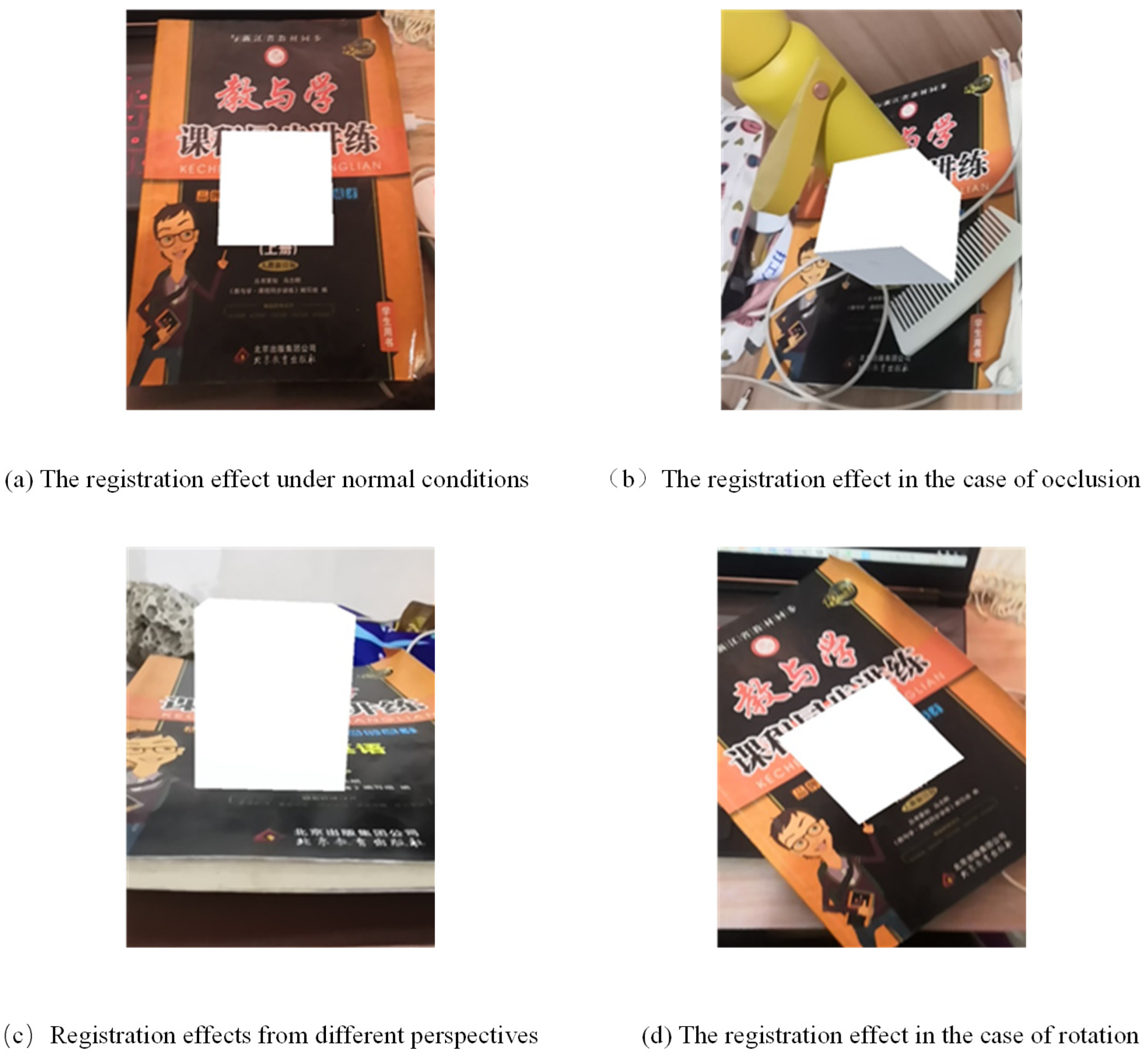

4.2.3. Tracking Registration Experiment

4.2.4. Ablation Study

4.2.5. Speed, FLOPs, and Params

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Caudell, T.P.; Mizell, D.W. Augmented reality: An application of heads-up display technology to manual manufacturing processes. In Proceedings of the Hawaii International Conference on System Sciences, Kauai, HI, USA, 7–10 January 1992; Volume 2.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. Lect. Notes Comput. Sci. 2006, 3951, 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

- Danelljan, M.; Häger, G.; Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014; BMVA Press: Durham, UK, 2014.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.

- Liu, C.; Chen, X.F.; Bo, C.J.; Wang, D. Long-term visual tracking: Review and experimental comparison. Mach. Intell. Res. 2022, 19, 512–530.

- Li, X.; Hu, W.; Shen, C.; Zhang, Z.; Dick, A.; Hengel, A.V.D. A survey of appearance models in visual object tracking. ACM Trans. Intell. Syst. Technol. 2013, 4, 1–48.

- Ondrašovič, M.; Tarábek, P. Siamese visual object tracking: A survey. IEEE Access 2021, 9, 110149–110172.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-convolutional siamese networks for object tracking. In Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 850–865.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6269–6277.

- Yu, Y.; Xiong, Y.; Huang, W.; Scott, M.R. Deformable siamese attention networks for visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6728–6737.

- Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

- Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware siamese networks for visual object tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 101–117.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Tao, R.; Gavves, E.; Smeulders, A.W.M. Siamese instance search for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1420–1429.

- Zhang, Z.; Peng, H. Deeper and wider siamese networks for real-time visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4591–4600.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014.

- Muller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; Ghanem, B. TrackingNet: A large-scale dataset and benchmark for object tracking in the wild. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 300–317.

- Fan, H.; Bai, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Huang, M.; Liu, J.; Xu, Y.; et al. LaSOT: A high-quality large-scale single object tracking benchmark. Int. J. Comput. Vis. 2021, 129, 439–461.

- Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

- Wu, Y.; Lim, J.; Yang, M.H. Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

- Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2805–2813.

- Li, P.; Chen, B.; Ouyang, W.; Wang, D.; Yang, X.; Lu, H. GradNet: Gradient-guided network for visual object tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6162–6171.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H. Staple: Complementary learners for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

- Yan, B.; Peng, H.; Fu, J.; Wang, D.; Lu, H. Learning spatio-temporal transformer for visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10448–10457.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate tracking by overlap maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4660–4669.

- Yan, B.; Zhao, H.; Wang, D.; Lu, H.; Yang, X. ‘Skimming-Perusal’ Tracking: A framework for real-time and robust long-term tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Dai, K.; Zhang, Y.; Wang, D.; Li, J.; Lu, H.; Yang, X. High-performance long-term tracking with meta-updater. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Kiani Galoogahi, H.; Fagg, A.; Lucey, S. Learning background-aware correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1135–1143.

- Zhu, G.; Porikli, F.; Li, H. Beyond local search: Tracking objects everywhere with instance-specific proposals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 943–951.

- Ma, C.; Yang, X.; Zhang, C.; Qi, J.; Lu, H. Long-term correlation tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5388–5396.

- Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-learning-detection. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 1409–1422.

- Zhang, Y.; Wang, L.; Wang, D.; Qi, J.; Lu, H. Learning regression and verification networks for robust long-term tracking. Int. J. Comput. Vis. 2021, 129, 2536–2547.

- Huang, L.; Zhao, X.; Huang, K. GlobalTrack: A simple and strong baseline for long-term tracking. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11037–11044.

| Trackers | Success | Precision |
|---|---|---|
| KCF [6] | 0.477 | 0.696 |
| SiamFC [12] | 0.587 | 0.772 |
| CFNet [32] | 0.587 | 0.778 |
| SiamDW [20] | 0.627 | 0.828 |
| SiamRPN [13] | 0.629 | 0.847 |
| GradNet [33] | 0.639 | 0.861 |
| DaSiamRPN [18] | 0.658 | 0.880 |
| ours | 0.690 | 0.889 |

| Trackers | Illumination Variation | Occlusion | Deformation | Motion Blur | Fast Motion | Out-of-View | Background Clutters |
|---|---|---|---|---|---|---|---|
| KCF | 0.728 | 0.749 | 0.740 | 0.650 | 0.602 | 0.650 | 0.753 |
| SiamFC | 0.709 | 0.802 | 0.744 | 0.700 | 0.721 | 0.777 | 0.732 |
| SiamRPN | 0.817 | 0.790 | 0.810 | 0.777 | 0.831 | 0.824 | 0.813 |
| CFNet | 0.743 | 0.768 | 0.669 | 0.676 | 0.718 | 0.721 | 0.785 |
| Staple [34] | 0.727 | 0.775 | 0.788 | 0.670 | 0.642 | 0.669 | 0.730 |
| DaSiamRPN | 0.848 | 0.838 | 0.900 | 0.786 | 0.791 | 0.757 | 0.868 |
| SiamDW | 0.841 | 0.855 | 0.906 | 0.716 | 0.732 | 0.734 | 0.847 |
| ours | 0.888 | 0.884 | 0.860 | 0.946 | 0.959 | 0.805 | 0.869 |

| Trackers | Illumination Variation | Occlusion | Deformation | Motion Blur | Fast Motion | Out-of-View | Background Clutters |
|---|---|---|---|---|---|---|---|
| KCF | 0.581 | 0.618 | 0.671 | 0.595 | 0.557 | 0.650 | 0.672 |
| SiamFC | 0.679 | 0.769 | 0.705 | 0.666 | 0.699 | 0.754 | 0.705 |
| SiamRPN | 0.757 | 0.736 | 0.719 | 0.715 | 0.784 | 0.824 | 0.777 |
| CFNet | 0.688 | 0.727 | 0.628 | 0.618 | 0.680 | 0.706 | 0.725 |
| Staple | 0.692 | 0.732 | 0.774 | 0.628 | 0.605 | 0.586 | 0.698 |
| DaSiamRPN | 0.822 | 0.817 | 0.877 | 0.731 | 0.758 | 0.761 | 0.847 |
| SiamDW | 0.772 | 0.808 | 0.846 | 0.699 | 0.705 | 0.726 | 0.775 |
| ours | 0.771 | 0.845 | 0.852 | 0.804 | 0.844 | 0.776 | 0.848 |

| Trackers | Success | Norm Precision | Precision |
|---|---|---|---|
| KCF | 0.178 | 0.190 | 0.166 |
| Staple | 0.243 | 0.27 | 0.239 |
| SiamFC | 0.336 | 0.420 | 0.339 |
| SiamDW | 0.347 | 0.437 | 0.329 |
| SiamRPN++ | 0.495 | 0.570 | 0.493 |
| ATOM | 0.499 | 0.570 | 0.497 |
| DaSiamRPN | 0.515 | 0.605 | 0.529 |
| STARK | 0.671 | 0.770 | 0.713 |
| ours | 0.651 | 0.740 | 0.692 |

| Trackers | ROT | DEF | IV | OV | SV | BC | ARC |
|---|---|---|---|---|---|---|---|
| KCF | 0.198 | 0.256 | 0.179 | 0.345 | 0.278 | 0.197 | 0.252 |
| Staple | 0.256 | 0.287 | 0.367 | 0.268 | 0.398 | 0.376 | 0.296 |
| SiamFC | 0.348 | 0.395 | 0.486 | 0.269 | 0.365 | 0.285 | 0.342 |
| SiamDW | 0.375 | 0.435 | 0.414 | 0.376 | 0.459 | 0.398 | 0.452 |
| SiamRPN++ | 0.469 | 0.548 | 0.534 | 0.598 | 0.497 | 0.398 | 0.586 |
| ATOM [36] | 0.496 | 0.534 | 0.601 | 0.576 | 0.612 | 0.614 | 0.409 |
| DaSiamRPN | 0.565 | 0.676 | 0.698 | 0.549 | 0.538 | 0.659 | 0.711 |
| STARK [35] | 0.790 | 0.765 | 0.734 | 0.854 | 0.789 | 0.613 | 0.654 |
| ours | 0.658 | 0.787 | 0.790 | 0.804 | 0.817 | 0.657 | 0.531 |

| Trackers | F-Score | Pr | Re |
|---|---|---|---|
| ADiMPLT | 0.501 | 0.489 | 0.514 |
| SuperDiMP | 0.503 | 0.510 | 0.496 |
| CoCoLoT | 0.584 | 0.591 | 0.577 |
| SiamDW_LT | 0.601 | 0.618 | 0.585 |
| SPLT [37] | 0.635 | 0.587 | 0.544 |
| CLGS | 0.640 | 0.689 | 0.598 |
| LTMU_B [38] | 0.650 | 0.665 | 0.635 |
| STARK-ST50 | 0.702 | 0.710 | 0.695 |
| ours | 0.695 | 0.678 | 0.713 |
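The F-Score column appears to follow the usual long-term tracking convention, in which the F-score is the harmonic mean of tracking precision (Pr) and recall (Re). Assuming that definition, the short sketch below recomputes the value for two rows of the table; the function name `f_score` is illustrative and not taken from the paper.

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (Pr, Re) pairs copied from the table above.
rows = {
    "STARK-ST50": (0.710, 0.695),
    "ours": (0.678, 0.713),
}

for name, (pr, re) in rows.items():
    print(f"{name}: F = {f_score(pr, re):.3f}")
# STARK-ST50: F = 0.702
# ours: F = 0.695
```

Because the harmonic mean penalizes imbalance between its two inputs, a tracker with higher recall but lower precision can still end up with a slightly lower F-score.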

| Trackers | MaxGM | TPR | TNR |
|---|---|---|---|
| Staple | 0.261 | 0.273 | - |
| BACF [39] | 0.281 | 0.316 | - |
| EBT [40] | 0.283 | 0.321 | - |
| SiamFC | 0.313 | 0.391 | - |
| ECO-HC | 0.314 | 0.395 | - |
| LCT [41] | 0.396 | 0.292 | 0.537 |
| DaSiam_LT | 0.415 | 0.689 | - |
| TLD [42] | 0.431 | 0.208 | 0.895 |
| MBMD [43] | 0.544 | 0.609 | 0.485 |
| GlobalTrack [44] | 0.603 | 0.574 | 0.633 |
| SPLT [37] | 0.622 | 0.498 | 0.776 |
| STARK | 0.782 | 0.841 | 0.727 |
| ours | 0.733 | 0.723 | 0.743 |
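The MaxGM values are consistent with the maximum geometric mean commonly used for long-term benchmarks with target absence: the geometric mean of TPR and TNR, maximized over a probability p of randomly switching a "present" prediction to "absent". The sketch below assumes that definition and treats a "-" entry as TNR = 0 (a tracker that never reports absence). The function name and the grid search over p are illustrative choices, not the authors' code.

```python
from math import sqrt


def max_gm(tpr, tnr, steps=1000):
    """Maximum over p in [0, 1] of sqrt(((1 - p) * TPR) * ((1 - p) * TNR + p))."""
    best = 0.0
    for i in range(steps + 1):
        p = i / steps
        gm = sqrt((1 - p) * tpr * ((1 - p) * tnr + p))
        best = max(best, gm)
    return best


# (TPR, TNR) pairs copied from the table above; "-" is assumed to mean TNR = 0.
rows = {
    "SiamFC": (0.391, 0.0),
    "ours": (0.723, 0.743),
}

for name, (tpr, tnr) in rows.items():
    print(f"{name}: MaxGM = {max_gm(tpr, tnr):.3f}")
# SiamFC: MaxGM = 0.313
# ours: MaxGM = 0.733
```

When TNR exceeds 0.5 the maximum is reached at p = 0, so MaxGM reduces to the plain geometric mean sqrt(TPR · TNR). When TNR is 0, the optimum is p = 0.5, which is why trackers without absence prediction still receive a non-zero score.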

| Method | DSAM | CAM | Template Update | Success | PNorm | P |
|---|---|---|---|---|---|---|
| ours | ✓ | ✓ | ✓ | 0.651 | 0.740 | 0.692 |
| ours | ✓ | ✓ | 0.524 | 0.621 | 0.534 | |
| ours | ✓ | ✓ | 0.623 | 0.697 | 0.649 | |
| ours | ✓ | ✓ | 0.576 | 0.637 | 0.590 |

| Trackers | Speed (fps) | Params (M) | FLOPs (G) |
|---|---|---|---|
| SiamFC | 68.2 | 6.596 | 9.562 |
| ATOM | 55.7 | 18.965 | 50.623 |
| SiamRPN | 38.5 | 22.633 | 36.790 |
| SiamRPN++ | 35.4 | 54.355 | 48.900 |
| STARK-ST50 | 41.8 | 28.238 | 12.812 |
| ours | 51.9 | 12.659 | 10.723 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, M.; Chen, Q. AR Long-Term Tracking Combining Multi-Attention and Template Updating. Appl. Sci. 2023, 13, 5015. https://doi.org/10.3390/app13085015
