Exploring Bi-Directional Context for Improved Chatbot Response Generation Using Deep Reinforcement Learning

Abstract

1. Introduction

- Using the conversation history in the Seq2Seq model improves consistency across turns. When this history is combined with future contextual information, the model can evaluate the current response against the long-term objectives accumulated by the Reinforcement Learning (RL) algorithm, which keeps the conversation on track toward a specific goal. Our aim is to develop a new conversational model that exploits this bi-directional context to improve the coherence and consistency of a conversation.

- RL techniques require a forward-looking function that scores the quality of each response. To better steer training toward the desired goal, we introduce two additional forward-looking functions.

- We train conversational agents with deep reinforcement learning algorithms whose action spaces are obtained by simulating conversations between two pre-trained virtual agents.
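The idea of accumulating a long-term objective over future turns can be illustrated with a discounted sum of per-turn rewards. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `discounted_return`, the discount factor `gamma`, and the example reward values are all introduced here for illustration.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.9) -> float:
    """Fold per-turn rewards into a single long-term score.

    rewards[0] scores the current response; later entries score simulated
    future turns. gamma (an assumed hyperparameter) down-weights distant turns.
    """
    total = 0.0
    # Walk backwards so each step adds the already-discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical rewards for one response and two simulated follow-up turns.
score = discounted_return([1.0, 0.5, 0.25], gamma=0.5)
print(score)
```

With `gamma` close to 0 the score depends almost entirely on the current response; values closer to 1 give simulated future turns more influence.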

2. Related Work

3. The Proposed Model

3.1. Left-Context with BERT-Based Model

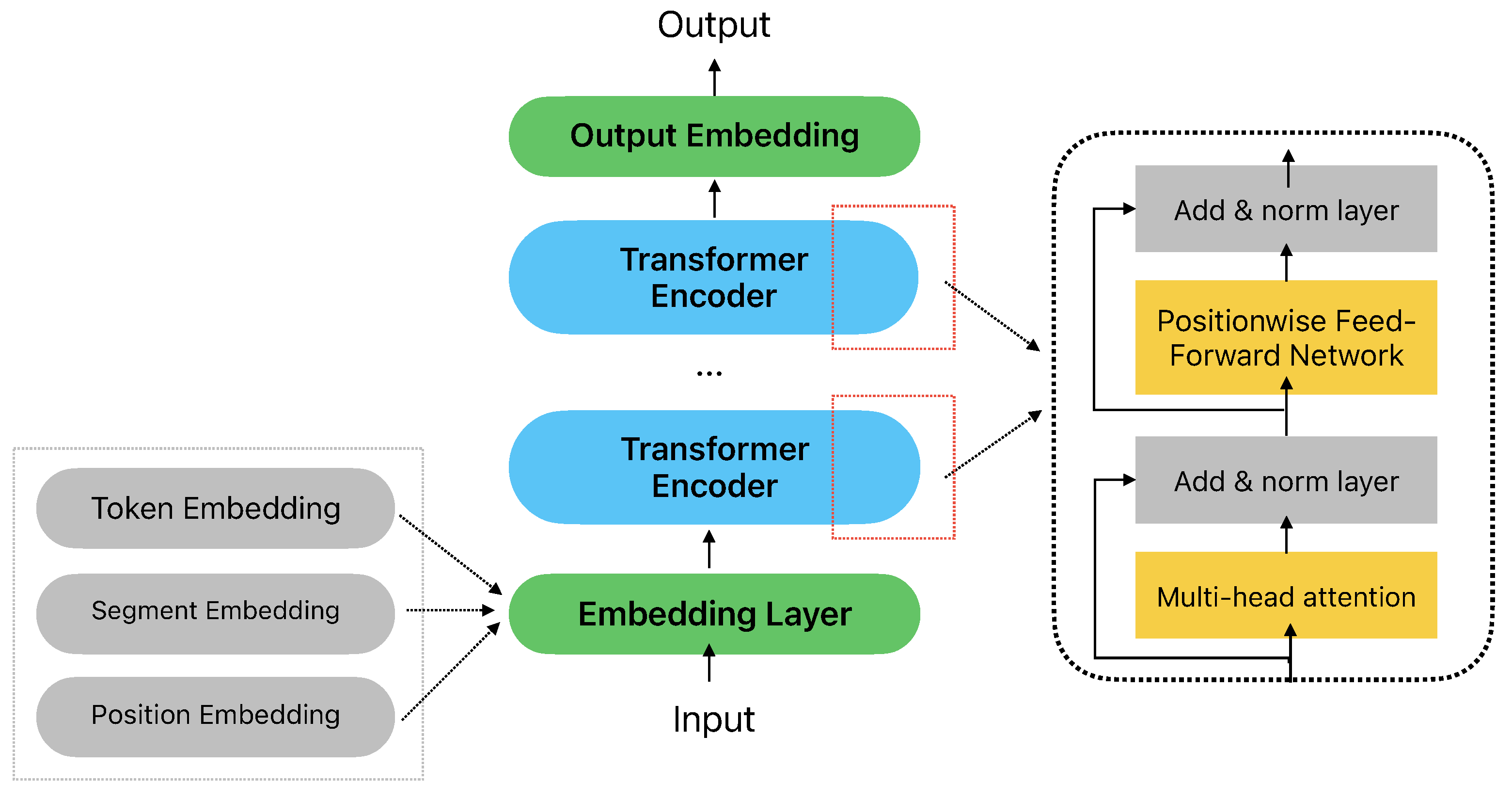

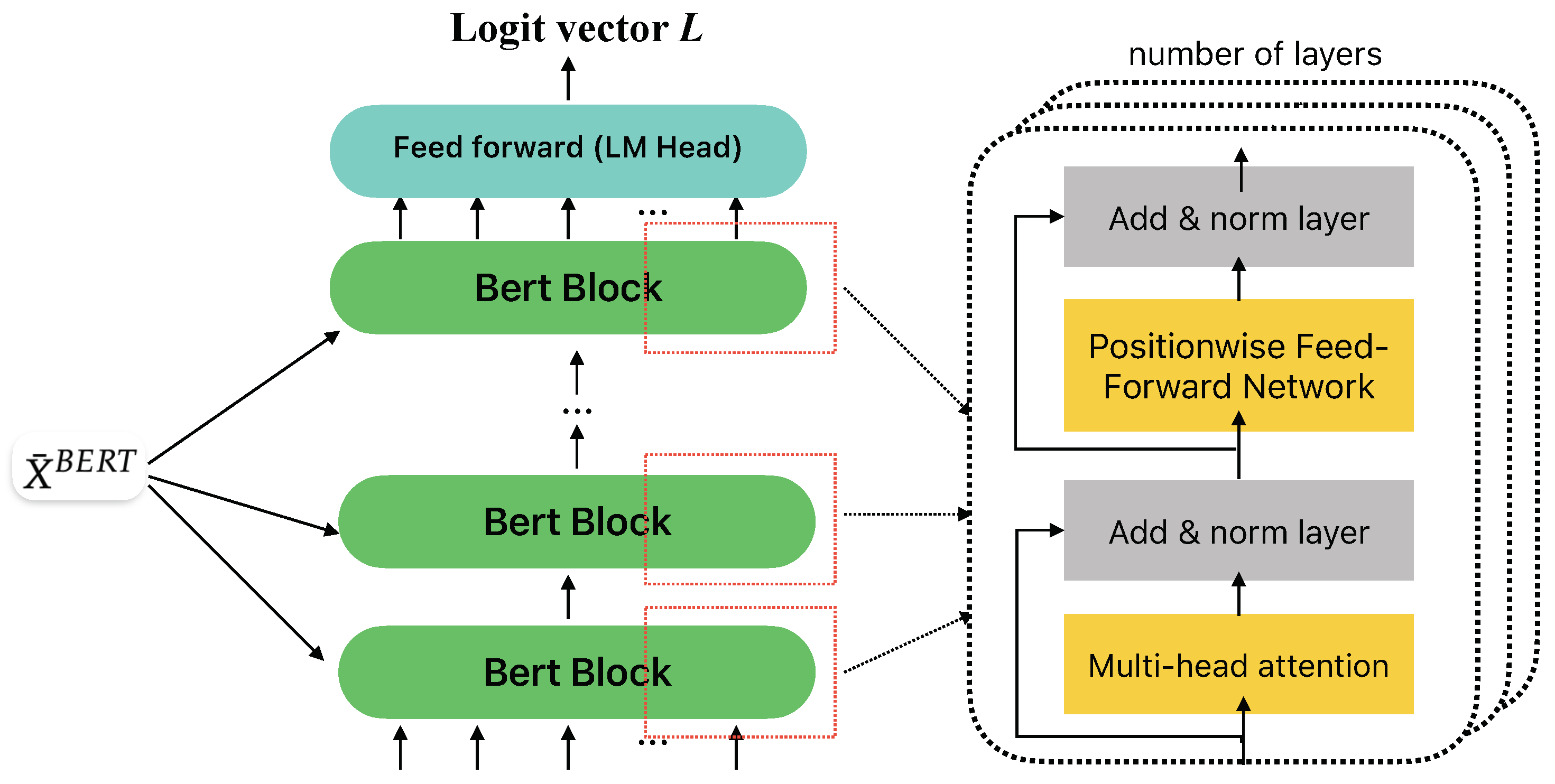

3.1.1. BERT Pre-Trained Model

3.1.2. Integrating Left-Context with BERT2BERT

3.2. Bi-Directional Context Using Deep Reinforcement Learning

3.2.1. Reward Definition

3.2.2. Conversation Simulation

| Algorithm 1 DRL-Chat |
|---|
| Require: Input sequence (X), ground-truth output sequence (Y), and conversation history (). |
| ▹ Pre-training Policy with Left-context: |
| ▹ Fine-tuning Policy with Right-context: |
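The two phases named in Algorithm 1 (pre-training, then fine-tuning a policy) can be pictured with a toy example. The sketch below is not the DRL-Chat procedure: it assumes a tiny softmax policy over three hypothetical candidate responses, uses maximum-likelihood updates for the first phase, and uses a REINFORCE-style update for the second. The reward table stands in for whatever right-context reward the model defines, and every name and hyperparameter is an assumption.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a small list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def pretrain_mle(logits: List[float], target: int, steps: int, lr: float) -> None:
    """Phase 1 (toy): gradient ascent on log p(target), i.e. the MLE objective."""
    for _ in range(steps):
        probs = softmax(logits)
        for i in range(len(logits)):
            indicator = 1.0 if i == target else 0.0
            logits[i] += lr * (indicator - probs[i])


def finetune_reinforce(logits: List[float], rewards: List[float],
                       steps: int, lr: float, rng: random.Random) -> None:
    """Phase 2 (toy): sample an action, then scale grad log p(action) by its reward."""
    actions = list(range(len(logits)))
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(actions, weights=probs, k=1)[0]
        for i in actions:
            indicator = 1.0 if i == action else 0.0
            logits[i] += lr * rewards[action] * (indicator - probs[i])


rng = random.Random(0)
logits = [0.0, 0.0, 0.0]            # three hypothetical candidate responses
pretrain_mle(logits, target=0, steps=10, lr=0.5)
probs_after_mle = softmax(logits)

future_rewards = [0.0, 0.2, 1.0]    # assumed stand-in for right-context rewards
finetune_reinforce(logits, future_rewards, steps=3000, lr=0.1, rng=rng)
probs_after_rl = softmax(logits)
print(probs_after_mle, probs_after_rl)
```

After the first phase the policy prefers the ground-truth candidate; the second phase then shifts probability toward the candidate with the highest assumed future reward.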

4. Experiment and Discussion

4.1. Description of Dataset

4.2. Quantitative Evaluation

4.3. Designing and Evaluating Experimental Models

- BERT LC (only left-context based on BERT): Traditional generative models produce a response from the current sentence alone, ignoring previous conversation turns. This model addresses that shortcoming by reusing contextual information from prior turns when predicting the response.

- BERT RC (only right-context with BERT and reinforcement learning): Encoder–decoder architectures yield good results in text generation tasks, but they cannot account for the impact of a response on the future of the conversation. To address this limitation, this approach uses the BERT2BERT method to capture the semantic information of the previous conversation and combines it with reinforcement learning to manage the information flow for future turns.

- BERT FC (full context with BERT and reinforcement learning): This model combines the left-context and the right-context to take advantage of both. The left-context lets the model use the information already exchanged in the conversation, while the right-context helps it capture the influence of the current response on future turns.

- We report BLEU and ROUGE scores as automatic proxies for human judgment of the generated responses. All three of our models improve the BLEU score over the baseline model. However, the difference in BLEU score between the baseline and the proposed model with only left-context is slight. BERT is a state-of-the-art language model that uses a transformer-based architecture to learn contextual relationships between words in a text corpus, and one of its main advantages is its ability to integrate contextual information into its understanding of text. Adding more contextual information may therefore yield only limited further gains in the model’s effectiveness.

- When a single context is used, the left-context-based model improves the average BLEU score by 5% and the average ROUGE score by 7% compared to the baseline, while the right-context-based model improves them by 8% and 10%, respectively. When both the left-context and the right-context are used, the RL-based model generates more coherent outputs than the baseline model, improving the average BLEU score by 12% and the average ROUGE score by 13%.
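BLEU-n scores such as those discussed above are built on clipped (modified) n-gram precision. The sketch below shows only that component and omits the brevity penalty and the geometric mean over n-gram orders, so it is not a full BLEU implementation. Whitespace tokenization, the function names, and the candidate sentence are assumptions made for illustration, not the authors' evaluation setup.

```python
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: List[str], reference: List[str], n: int) -> float:
    """Clipped n-gram precision of a candidate against a single reference."""
    cand_counts = ngrams(candidate, n)
    ref_counts = ngrams(reference, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it occurs in the reference.
    clipped = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / total


reference = "we can take a bus".split()
candidate = "we can take the bus".split()   # hypothetical model output
p1 = modified_precision(candidate, reference, 1)
p2 = modified_precision(candidate, reference, 2)
print(p1, p2)
```

Here four of the five candidate unigrams and two of the four candidate bigrams appear in the reference, which is why precision drops as the n-gram order grows.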

5. Conclusions and Future Work

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIML | Artificial Intelligence Markup Language |
| ALICE | Artificial Linguistic Internet Computer Entity |
| BERT | Bidirectional Encoder Representations from Transformers |
| BLEU | Bilingual Evaluation Understudy |
| CNN | Convolutional Neural Network |
| DRL | Deep Reinforcement Learning |
| LLM | Large Language Model |
| LSTM | Long Short-Term Memory |
| MLE | Maximum-Likelihood Estimation |
| MDP | Markov Decision Process |
| NLP | Natural Language Processing |
| POMDP | Partially Observable Markov Decision Process |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| RL | Reinforcement Learning |
| Seq2Seq | Sequence-to-Sequence |
| SMT | Statistical Machine Translation |

References

- Wallace, R. The Anatomy of A.L.I.C.E. In Parsing the Turing Test; Springer: Dordrecht, The Netherlands, 2009; pp. 181–210.

- Jafarpour, S.; Burges, C.; Ritter, A. Filter, Rank, and Transfer the Knowledge: Learning to Chat. Adv. Rank. 2010, 10, 2329–9290.

- Yan, Z.; Duan, N.; Bao, J.; Chen, P.; Zhou, M.; Li, Z.; Zhou, J. DocChat: An Information Retrieval Approach for Chatbot Engines Using Unstructured Documents. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; Association for Computational Linguistics: Berlin, Germany, 2016; pp. 516–525.

- Zhong, H.; Dou, Z.; Zhu, Y.; Qian, H.; Wen, J.R. Less is More: Learning to Refine Dialogue History for Personalized Dialogue Generation. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Seattle, WA, USA, 2022; pp. 5808–5820.

- Serban, I.V.; Sordoni, A.; Bengio, Y.; Courville, A.; Pineau, J. Building End-to-End Dialogue Systems Using Generative Hierarchical Neural Network Models. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 3776–3783.

- Mou, L.; Song, Y.; Yan, R.; Li, G.; Zhang, L.; Jin, Z. Sequence to Backward and Forward Sequences: A Content-Introducing Approach to Generative Short-Text Conversation. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016; The COLING 2016 Organizing Committee: Osaka, Japan, 2016; pp. 3349–3358.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; MIT Press: Cambridge, MA, USA, 2014; Volume 2, NIPS’14. pp. 3104–3112.

- Sordoni, A.; Galley, M.; Auli, M.; Brockett, C.; Ji, Y.; Mitchell, M.; Nie, J.Y.; Gao, J.; Dolan, B. A Neural Network Approach to Context-Sensitive Generation of Conversational Responses. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015; Association for Computational Linguistics: Denver, CO, USA, 2015; pp. 196–205.

- Xu, H.D.; Mao, X.L.; Chi, Z.; Sun, F.; Zhu, J.; Huang, H. Generating Informative Dialogue Responses with Keywords-Guided Networks. In Proceedings of the Natural Language Processing and Chinese Computing: 10th CCF International Conference, NLPCC 2021, Qingdao, China, 13–17 October 2021; Proceedings, Part II. Springer: Berlin/Heidelberg, Germany, 2021; pp. 179–192.

- Ismail, J.; Ahmed, A.; Ouaazizi Aziza, E. Improving a Sequence-to-sequence NLP Model using a Reinforcement Learning Policy Algorithm. In Proceedings of the Artificial Intelligence, Soft Computing and Applications. Academy and Industry Research Collaboration Center (AIRCC), Copenhagen, Denmark, 29–30 January 2022.

- Csaky, R. Deep Learning Based Chatbot Models. arXiv 2019, arXiv:1908.08835.

- Cai, P.; Wan, H.; Liu, F.; Yu, M.; Yu, H.; Joshi, S. Learning as Conversation: Dialogue Systems Reinforced for Information Acquisition. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Seattle, WA, USA, 2022; pp. 4781–4796.

- Levin, E.; Pieraccini, R.; Eckert, W. A stochastic model of human-machine interaction for learning dialog strategies. IEEE Trans. Speech Audio Process. 2000, 8, 11–23.

- Pieraccini, R.; Suendermann, D.; Dayanidhi, K.; Liscombe, J. Are We There Yet? Research in Commercial Spoken Dialog Systems. In Text, Speech and Dialogue; Springer: Berlin/Heidelberg, Germany, 2009; pp. 3–13.

- Yang, M.; Huang, W.; Tu, W.; Qu, Q.; Shen, Y.; Lei, K. Multitask Learning and Reinforcement Learning for Personalized Dialog Generation: An Empirical Study. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 49–62.

- Weizenbaum, J. ELIZA—A Computer Program for the Study of Natural Language Communication between Man and Machine. Commun. ACM 1966, 9, 36–45.

- Parkison, R.C.; Colby, K.M.; Faught, W.S. Conversational Language Comprehension Using Integrated Pattern-Matching and Parsing. In Readings in Natural Language Processing; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1986; pp. 551–562.

- Ritter, A.; Cherry, C.; Dolan, W.B. Data-Driven Response Generation in Social Media. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, Edinburgh, UK, 27–31 July 2011; Association for Computational Linguistics: Edinburgh, UK, 2011; pp. 583–593.

- Wu, Y.; Schuster, M.; Chen, Z.; Le, Q.V.; Norouzi, M.; Macherey, W.; Krikun, M.; Cao, Y.; Gao, Q.; Macherey, K.; et al. Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. arXiv 2016, arXiv:1609.08144.

- Shen, S.; Cheng, Y.; He, Z.; He, W.; Wu, H.; Sun, M.; Liu, Y. Minimum Risk Training for Neural Machine Translation. arXiv 2015, arXiv:1512.02433.

- Vaswani, A.; Bengio, S.; Brevdo, E.; Chollet, F.; Gomez, A.N.; Gouws, S.; Jones, L.; Kaiser, L.; Kalchbrenner, N.; Parmar, N.; et al. Tensor2Tensor for Neural Machine Translation. arXiv 2018, arXiv:1803.07416.

- Nallapati, R.; Xiang, B.; Zhou, B. Sequence-to-Sequence RNNs for Text Summarization. arXiv 2016, arXiv:1602.06023.

- Nallapati, R.; Zhai, F.; Zhou, B. SummaRuNNer: A Recurrent Neural Network based Sequence Model for Extractive Summarization of Documents. arXiv 2016, arXiv:1611.04230.

- Paulus, R.; Xiong, C.; Socher, R. A Deep Reinforced Model for Abstractive Summarization. arXiv 2017, arXiv:1705.04304.

- Pamungkas, E.W. Emotionally-Aware Chatbots: A Survey. arXiv 2019, arXiv:1906.09774.

- Li, J.; Monroe, W.; Ritter, A.; Jurafsky, D.; Galley, M.; Gao, J. Deep Reinforcement Learning for Dialogue Generation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; Association for Computational Linguistics: Austin, TX, USA, 2016; pp. 1192–1202.

- Li, J.; Galley, M.; Brockett, C.; Gao, J.; Dolan, B. A Diversity-Promoting Objective Function for Neural Conversation Models. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; Association for Computational Linguistics: San Diego, CA, USA, 2016; pp. 110–119.

- Uc-Cetina, V.; Navarro-Guerrero, N.; Martín-González, A.; Weber, C.; Wermter, S. Survey on reinforcement learning for language processing. Artif. Intell. Rev. 2023, 56, 1543–1573.

- Gašić, M.; Breslin, C.; Henderson, M.; Kim, D.; Szummer, M.; Thomson, B.; Tsiakoulis, P.; Young, S. POMDP-based dialogue manager adaptation to extended domains. In Proceedings of the SIGDIAL 2013 Conference, Metz, France, 22–24 August 2013; Association for Computational Linguistics: Metz, France, 2013; pp. 214–222.

- Young, S.; Gašić, M.; Thomson, B.; Williams, J.D. POMDP-Based Statistical Spoken Dialog Systems: A Review. Proc. IEEE 2013, 101, 1160–1179.

- Xiang, X.; Foo, S. Recent Advances in Deep Reinforcement Learning Applications for Solving Partially Observable Markov Decision Processes (POMDP) Problems: Part 1—Fundamentals and Applications in Games, Robotics and Natural Language Processing. Mach. Learn. Knowl. Extr. 2021, 3, 554–581.

- Hsueh, Y.L.; Chou, T.L. A Task-Oriented Chatbot Based on LSTM and Reinforcement Learning. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2022, 22, 1–27.

- Chen, Z.; Chen, L.; Liu, X.; Yu, K. Distributed Structured Actor-Critic Reinforcement Learning for Universal Dialogue Management. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2400–2411.

- Ultes, S.; Rojas-Barahona, L.M.; Su, P.H.; Vandyke, D.; Kim, D.; Casanueva, I.; Budzianowski, P.; Mrkšić, N.; Wen, T.H.; Gašić, M.; et al. PyDial: A Multi-domain Statistical Dialogue System Toolkit. In Proceedings of the ACL 2017, System Demonstrations, Vancouver, BC, Canada, 30 July–4 August 2017; Association for Computational Linguistics: Vancouver, BC, Canada, 2017; pp. 73–78.

- Verma, S.; Fu, J.; Yang, S.; Levine, S. CHAI: A CHatbot AI for Task-Oriented Dialogue with Offline Reinforcement Learning. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Association for Computational Linguistics: Seattle, WA, USA, 2022; pp. 4471–4491.

- Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- De Coster, M.; Dambre, J. Leveraging Frozen Pretrained Written Language Models for Neural Sign Language Translation. Information 2022, 13, 220.

- Yan, R.; Li, J.; Su, X.; Wang, X.; Gao, G. Boosting the Transformer with the BERT Supervision in Low-Resource Machine Translation. Appl. Sci. 2022, 12, 7195.

- Kurtic, E.; Campos, D.; Nguyen, T.; Frantar, E.; Kurtz, M.; Fineran, B.; Goin, M.; Alistarh, D. The Optimal BERT Surgeon: Scalable and Accurate Second-Order Pruning for Large Language Models. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Association for Computational Linguistics: Abu Dhabi, United Arab Emirates, 2022; pp. 4163–4181.

- Shen, T.; Li, J.; Bouadjenek, M.R.; Mai, Z.; Sanner, S. Towards understanding and mitigating unintended biases in language model-driven conversational recommendation. Inf. Process. Manag. 2023, 60, 103139.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7871–7880.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 5485–5551.

- Rothe, S.; Narayan, S.; Severyn, A. Leveraging Pre-trained Checkpoints for Sequence Generation Tasks. Trans. Assoc. Comput. Linguist. 2020, 8, 264–280.

- Chen, C.; Yin, Y.; Shang, L.; Jiang, X.; Qin, Y.; Wang, F.; Wang, Z.; Chen, X.; Liu, Z.; Liu, Q. bert2BERT: Towards Reusable Pretrained Language Models. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; Association for Computational Linguistics: Dublin, Ireland, 2022; pp. 2134–2148.

- Naous, T.; Bassyouni, Z.; Mousi, B.; Hajj, H.; Hajj, W.E.; Shaban, K. Open-Domain Response Generation in Low-Resource Settings Using Self-Supervised Pre-Training of Warm-Started Transformers. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–12.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; Volume 1, (Long and Short Papers). pp. 4171–4186.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; A Bradford Book: Cambridge, MA, USA, 2018.

- Williams, R.J. Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning. Mach. Learn. 1992, 8, 229–256.

- Zaremba, W.; Sutskever, I. Reinforcement Learning Neural Turing Machines. arXiv 2015, arXiv:1505.00521.

- Li, Y.; Su, H.; Shen, X.; Li, W.; Cao, Z.; Niu, S. DailyDialog: A Manually Labelled Multi-turn Dialogue Dataset. In Proceedings of the Eighth International Joint Conference on Natural Language Processing, Taipei, Taiwan, 27 November–1 December 2017; Asian Federation of Natural Language Processing: Taipei, Taiwan, 2017; Volume 1: Long Papers, pp. 986–995.

- Wang, H.; Lu, Z.; Li, H.; Chen, E. A Dataset for Research on Short-Text Conversations. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; Association for Computational Linguistics: Seattle, WA, USA, 2013; pp. 935–945.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; Association for Computational Linguistics: Philadelphia, PA, USA, 2002. ACL’02. pp. 311–318.

- Vinyals, O.; Le, Q.V. A Neural Conversational Model. In Proceedings of the ICML, Lille, France, 6–11 July 2015.

- Kapočiūtė-Dzikienė, J. A Domain-Specific Generative Chatbot Trained from Little Data. Appl. Sci. 2020, 10, 2221.

- Sordoni, A.; Bengio, Y.; Vahabi, H.; Lioma, C.; Grue Simonsen, J.; Nie, J.Y. A Hierarchical Recurrent Encoder-Decoder for Generative Context-Aware Query Suggestion. In Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, New York, NY, USA, 18–23 October 2015; Association for Computing Machinery: New York, NY, USA, 2015. CIKM ’15. pp. 553–562.

- Zhou, H.; Huang, M.; Zhang, T.; Zhu, X.; Liu, B. Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; AAAI Press: New Orleans, LA, USA, 2018.

- Bao, S.; He, H.; Wang, F.; Wu, H.; Wang, H. PLATO: Pre-trained Dialogue Generation Model with Discrete Latent Variable. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Association for Computational Linguistics, Online, 5–10 July 2020; pp. 85–96.

- Fan, J.; Yuan, L.; Song, H.; Tang, H.; Yang, R. NLP Final Project: A Dialogue System; Hong Kong University of Science and Technology (HKUST): Hong Kong, China, 2020.

| Turn | Utterance |
|---|---|
| Turn 1 | Mom, how can we get to the supermarket? |
| Turn 2 | We can take a bus. |
| Turn 3 | Does this bus go there? |
| Turn 4 | I can’t see clearly. |
| Turn 5 | Let’s step in. |
| Turn 6 | No, it’s not right. |
| Turn 7 | Mom! What’s bus number? |
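Taking the dialogue above as an example, a left-context input of the kind described for BERT LC could be assembled by joining recent history turns with the current utterance. This is a sketch under stated assumptions: the helper `build_left_context_input`, the `max_turns` window, and the use of BERT's `[SEP]` token as the joiner are illustrative choices, not the authors' documented preprocessing.

```python
from typing import List


def build_left_context_input(history: List[str], current: str,
                             max_turns: int = 3, sep: str = " [SEP] ") -> str:
    """Join the last `max_turns` history utterances with the current one."""
    if max_turns < 0:
        raise ValueError("max_turns must be non-negative")
    # A window of 0 means the model sees only the current utterance.
    recent = history[-max_turns:] if max_turns > 0 else []
    return sep.join(recent + [current])


history = [
    "Mom, how can we get to the supermarket?",
    "We can take a bus.",
]
current = "Does this bus go there?"

full_context = build_left_context_input(history, current)
last_turn_only = build_left_context_input(history, current, max_turns=1)
print(full_context)
print(last_turn_only)
```

A larger window exposes more of the conversation to the encoder at the cost of a longer input sequence, which matters because BERT-style encoders have a fixed maximum input length.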

| Model | BLEU 1 | BLEU 2 | BLEU 3 | BLEU 4 | ROUGE Precision | ROUGE Recall | ROUGE F-measure |
|---|---|---|---|---|---|---|---|
| BERT (Baseline) | 0.452 | 0.328 | 0.269 | 0.207 | 0.315 | 0.279 | 0.279 |
| BERT LC (only left-context) | 0.458 | 0.345 | 0.287 | 0.226 | 0.332 | 0.302 | 0.301 |
| BERT RC (only right-context with 1 turn) | 0.466 | 0.357 | 0.297 | 0.235 | 0.337 | 0.313 | 0.309 |
| BERT FC (bi-directional context with 1 turn) | 0.488 | 0.367 | 0.308 | 0.246 | 0.345 | 0.325 | 0.320 |
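The relative improvements quoted in Section 4.3 can be reproduced from the rows above. The sketch below assumes that "average BLEU" is the mean of BLEU 1 to BLEU 4 and that "average ROUGE" is the mean of precision, recall, and F-measure; the paper's text does not spell out this averaging, although the resulting figures round to the reported 5%, 8%, and 12% (BLEU) and 7%, 10%, and 13% (ROUGE).

```python
from statistics import mean

# (BLEU 1..4, ROUGE precision/recall/F-measure) copied from the table above.
scores = {
    "BERT (Baseline)": ([0.452, 0.328, 0.269, 0.207], [0.315, 0.279, 0.279]),
    "BERT LC": ([0.458, 0.345, 0.287, 0.226], [0.332, 0.302, 0.301]),
    "BERT RC": ([0.466, 0.357, 0.297, 0.235], [0.337, 0.313, 0.309]),
    "BERT FC": ([0.488, 0.367, 0.308, 0.246], [0.345, 0.325, 0.320]),
}


def relative_gain(value: float, baseline: float) -> float:
    """Percentage increase of `value` over `baseline`."""
    return 100.0 * (value - baseline) / baseline


base_bleu = mean(scores["BERT (Baseline)"][0])
base_rouge = mean(scores["BERT (Baseline)"][1])

# Map each model to its (BLEU gain %, ROUGE gain %) over the baseline.
gains = {
    name: (relative_gain(mean(bleu), base_bleu),
           relative_gain(mean(rouge), base_rouge))
    for name, (bleu, rouge) in scores.items()
}

for name, (bleu_gain, rouge_gain) in gains.items():
    print(f"{name}: BLEU {bleu_gain:+.1f}%, ROUGE {rouge_gain:+.1f}%")
```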

| Model | BLEU 1 | BLEU 2 | BLEU 3 | BLEU 4 | ROUGE Precision | ROUGE Recall | ROUGE F-measure |
|---|---|---|---|---|---|---|---|
| BERT FC (1 turn) | 0.488 | 0.367 | 0.308 | 0.246 | 0.345 | 0.325 | 0.320 |
| BERT FC (3 turns) | 0.492 | 0.374 | 0.316 | 0.256 | 0.362 | 0.336 | 0.334 |
| BERT FC (5 turns) | 0.518 | 0.403 | 0.348 | 0.289 | 0.389 | 0.371 | 0.366 |
| BERT FC (7 turns) | 0.500 | 0.390 | 0.337 | 0.281 | 0.381 | 0.366 | 0.362 |

| Model | BLEU 1 | BLEU 2 | BLEU 3 | BLEU 4 |
|---|---|---|---|---|
| Best proposed model | 0.518 | 0.403 | 0.348 | 0.289 |
| HRED [50] | 0.396 | 0.174 | 0.019 | 0.009 |
| COHA [50] | 0.379 | 0.156 | 0.018 | 0.066 |
| COHA + Attention [50] | 0.464 | 0.220 | 0.017 | 0.009 |
| Plato [58] | 0.486 | 0.389 | - | - |

| Model | Average BLEU | Best Proposed Model (Rate of Increase in BLEU Score) |
|---|---|---|
| BERT (baseline) | 0.314 | 0.389 (+24%) |
| HRED | 0.15 | 0.389 (+151%) |
| COHA | 0.15 | 0.389 (+151%) |
| COHA + Attention | 0.178 | 0.389 (+118%) |
| Plato | 0.438 | 0.461 (+5%) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tran, Q.-D.L.; Le, A.-C. Exploring Bi-Directional Context for Improved Chatbot Response Generation Using Deep Reinforcement Learning. Appl. Sci. 2023, 13, 5041. https://doi.org/10.3390/app13085041
