Three-Dimensional Human Pose Estimation with Spatial–Temporal Interaction Enhancement Transformer

Abstract

1. Introduction

- To estimate 3D human pose more accurately from monocular video, we designed a spatio-temporal interaction-enhanced Transformer network called STFormer. STFormer works in two stages: the first extracts features independently in the spatial and temporal domains, and the second exchanges information between the two domains to enrich the representations.

- For the second stage, we designed two blocks: Spatial Reconstruction and Temporal Refinement. The Spatial Reconstruction block injects temporal features into the spatial domain to adjust the spatial structural relationships. The reconstructed features are then passed to the Temporal Refinement block, which compensates for the weak intra-frame structural information in the temporal features.
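The two-stage data flow described above can be sketched in a few lines of plain Python. This is a minimal, weight-free illustration under stated assumptions, not the authors' implementation:

- Every attention layer uses identity query/key/value projections.
- The embedding, feed-forward, normalization and regression-head layers are omitted.
- Spatial Reconstruction and Temporal Refinement are modeled as residual cross-attention. Spatial queries attend over temporal features, then temporal queries attend over the reconstructed features. This is an assumption about how the "injection" works.
- All function names (`attend`, `per_frame`, `per_joint`, `stformer_like`) are hypothetical.

```python
import math
from typing import Callable, List

Tokens = List[List[float]]      # [num_tokens][channels]
Clip = List[List[List[float]]]  # [frames][joints][channels]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q: Tokens, kv: Tokens) -> Tokens:
    """Residual scaled dot-product attention with identity Q/K/V projections (no learned weights)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, k)) for k in kv])
        mixed = [sum(w * v[c] for w, v in zip(weights, kv)) for c in range(len(qi))]
        out.append([a + b for a, b in zip(qi, mixed)])  # residual connection
    return out


def per_frame(fn: Callable[..., Tokens], *clips: Clip) -> Clip:
    """Spatial domain: the joints of each frame are the tokens."""
    return [fn(*frames) for frames in zip(*clips)]


def per_joint(fn: Callable[..., Tokens], *clips: Clip) -> Clip:
    """Temporal domain: the frames of each joint are the tokens."""
    num_frames, num_joints = len(clips[0]), len(clips[0][0])
    cols = [fn(*[[clip[t][j] for t in range(num_frames)] for clip in clips])
            for j in range(num_joints)]
    return [[cols[j][t] for j in range(num_joints)] for t in range(num_frames)]


def stformer_like(x: Clip) -> Clip:
    # Stage 1: independent feature extraction in each domain.
    spatial = per_frame(lambda f: attend(f, f), x)
    temporal = per_joint(lambda s: attend(s, s), x)
    # Stage 2, hypothetical Spatial Reconstruction: spatial queries attend to temporal features.
    recon = per_frame(attend, spatial, temporal)
    # Stage 2, hypothetical Temporal Refinement: temporal queries attend to reconstructed features.
    return per_joint(attend, temporal, recon)


if __name__ == "__main__":
    # 4 frames x 3 joints x 2 channels (e.g. raw 2D keypoints; no embedding layer here)
    clip = [[[0.1 * (t + j), 0.2 * j] for j in range(3)] for t in range(4)]
    out = stformer_like(clip)
    print(len(out), len(out[0]), len(out[0][0]))  # 4 3 2
```

Features are laid out as frames × joints × channels. `per_frame` treats the joints of one frame as tokens (spatial domain), and `per_joint` treats the frames of one joint as tokens (temporal domain). In this sketch, the stages differ only in which tokens each query may attend to and in which domain supplies the keys and values.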

2. Related Work

2.1. Three-Dimensional Human Pose Estimation

2.2. Three-Dimensional Human Pose Estimation for Video under Lifting Method

2.3. Visual Transformer

3. Method

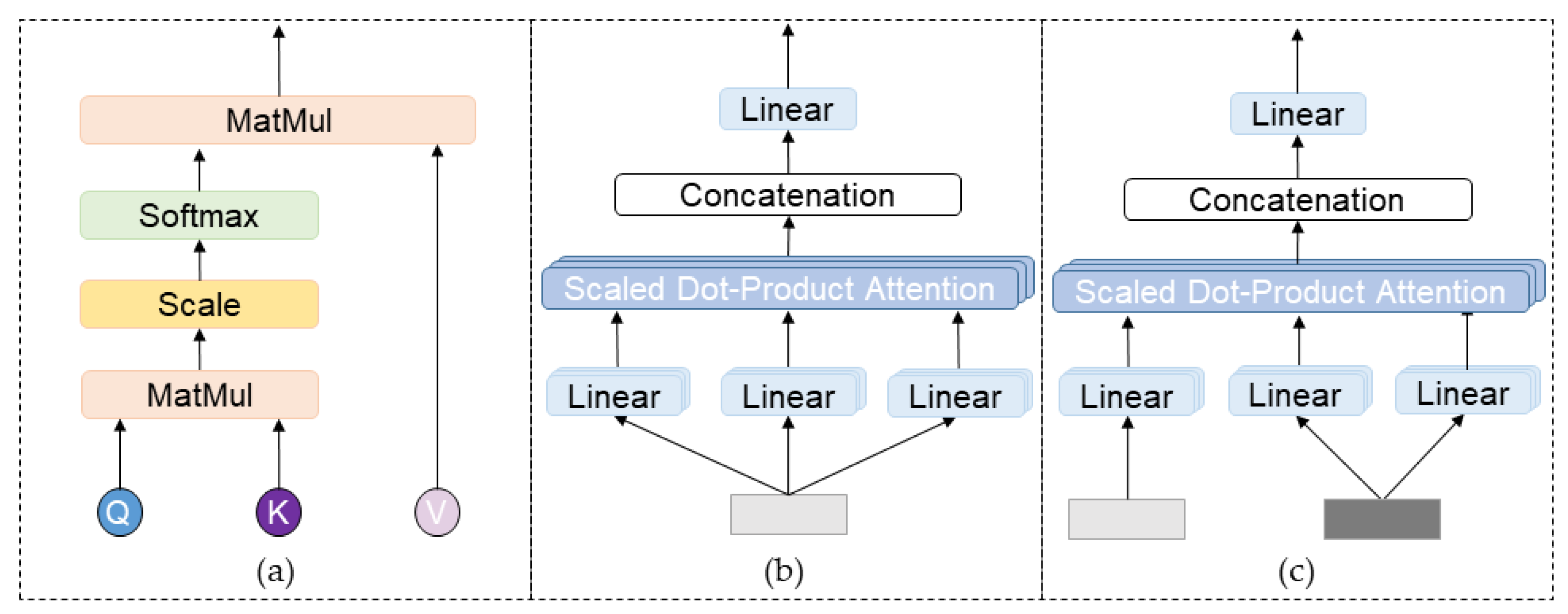

3.1. Preliminary

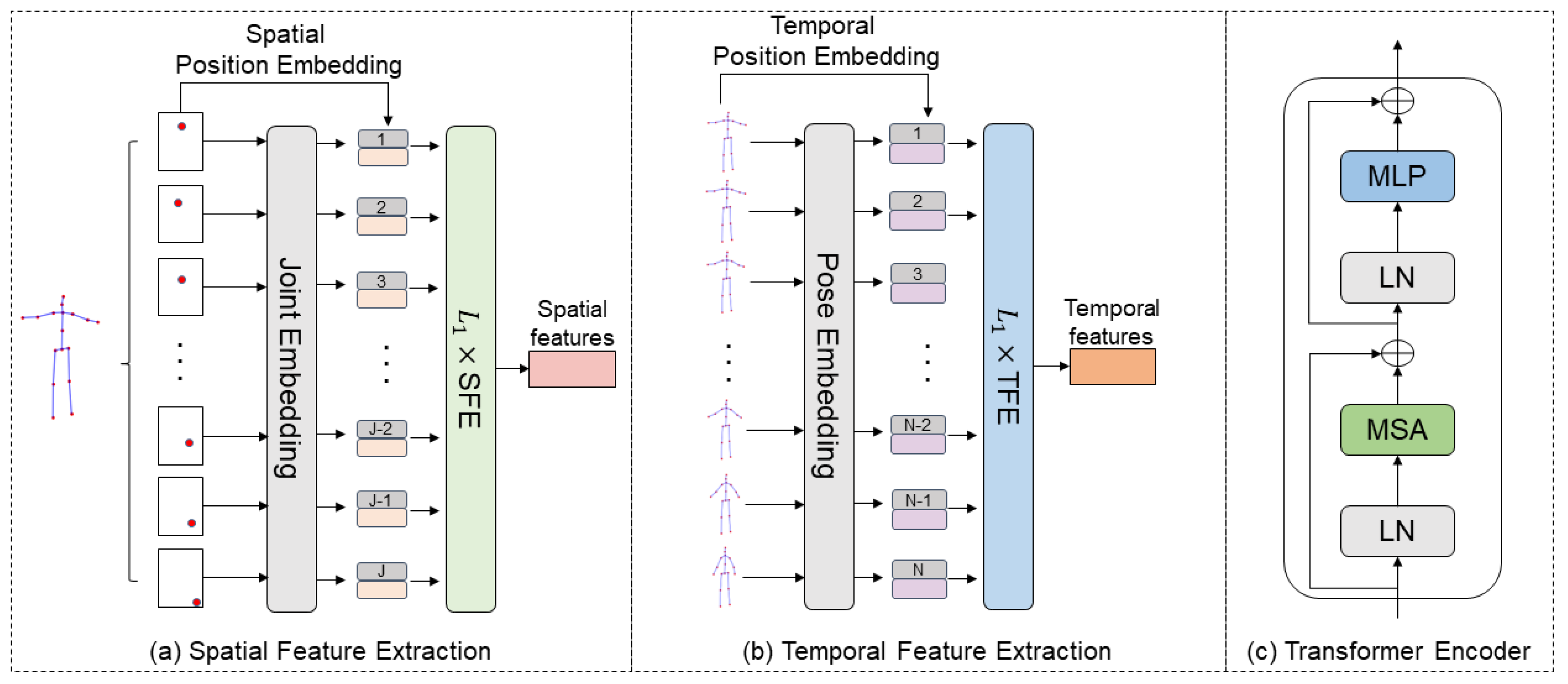

3.2. Feature Extraction

3.2.1. Spatial Feature Extraction Branch

3.2.2. Temporal Feature Extraction Branch

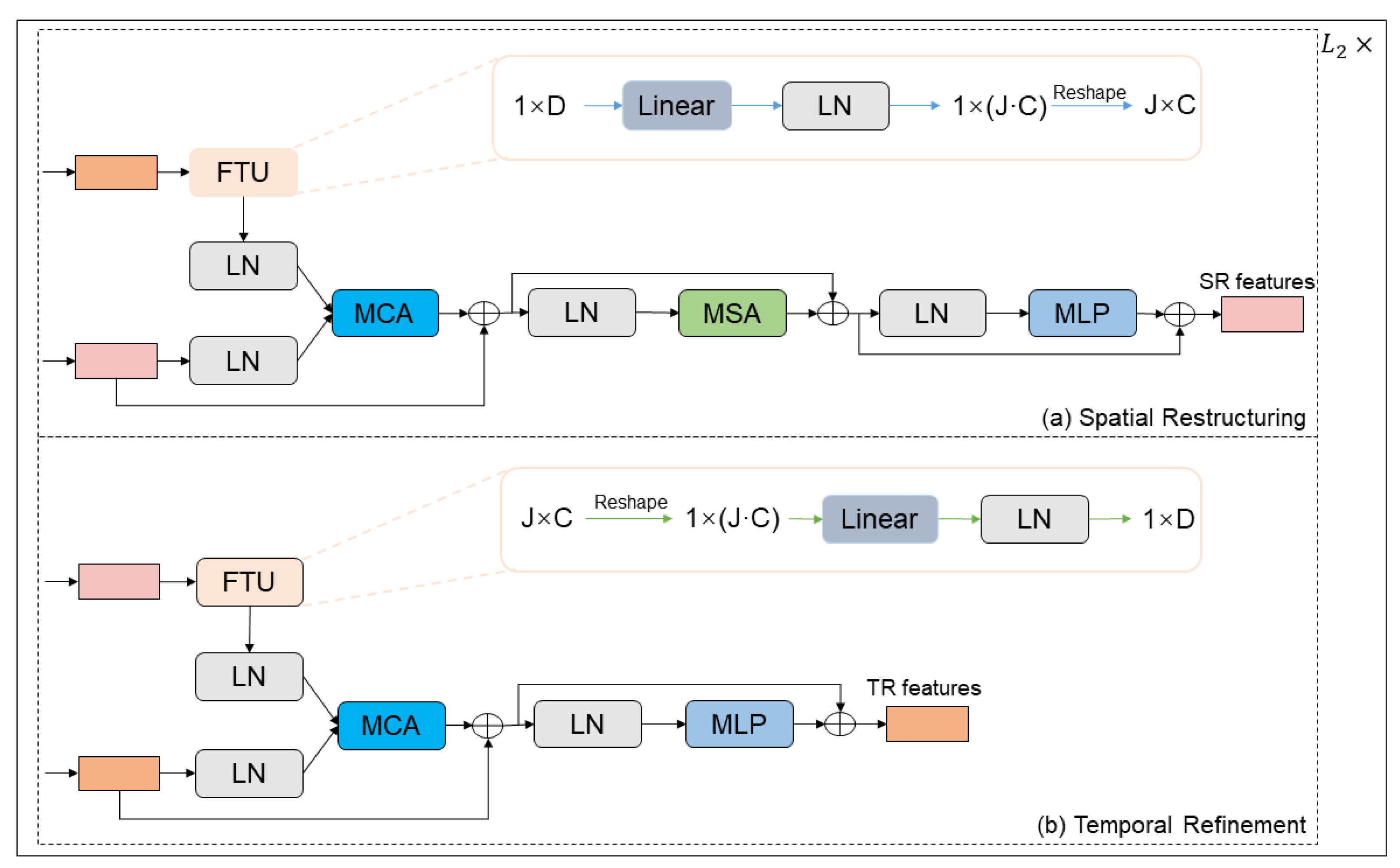

3.3. Cross-Domain Interaction

3.3.1. Spatial Reconstruction

3.3.2. Temporal Refinement

3.4. Regression Head

3.5. Loss Function

4. Experiments

4.1. Dataset and Evaluation Metrics

4.2. Implementation Details

4.3. Results

4.3.1. Results on Human3.6M

4.3.2. Computation Complexity Analysis

4.4. Ablation Study

4.4.1. Impact of Receptive Fields

4.4.2. Structure Analysis

4.4.3. Impact of Each Component

4.4.4. Parameter Setting Analysis

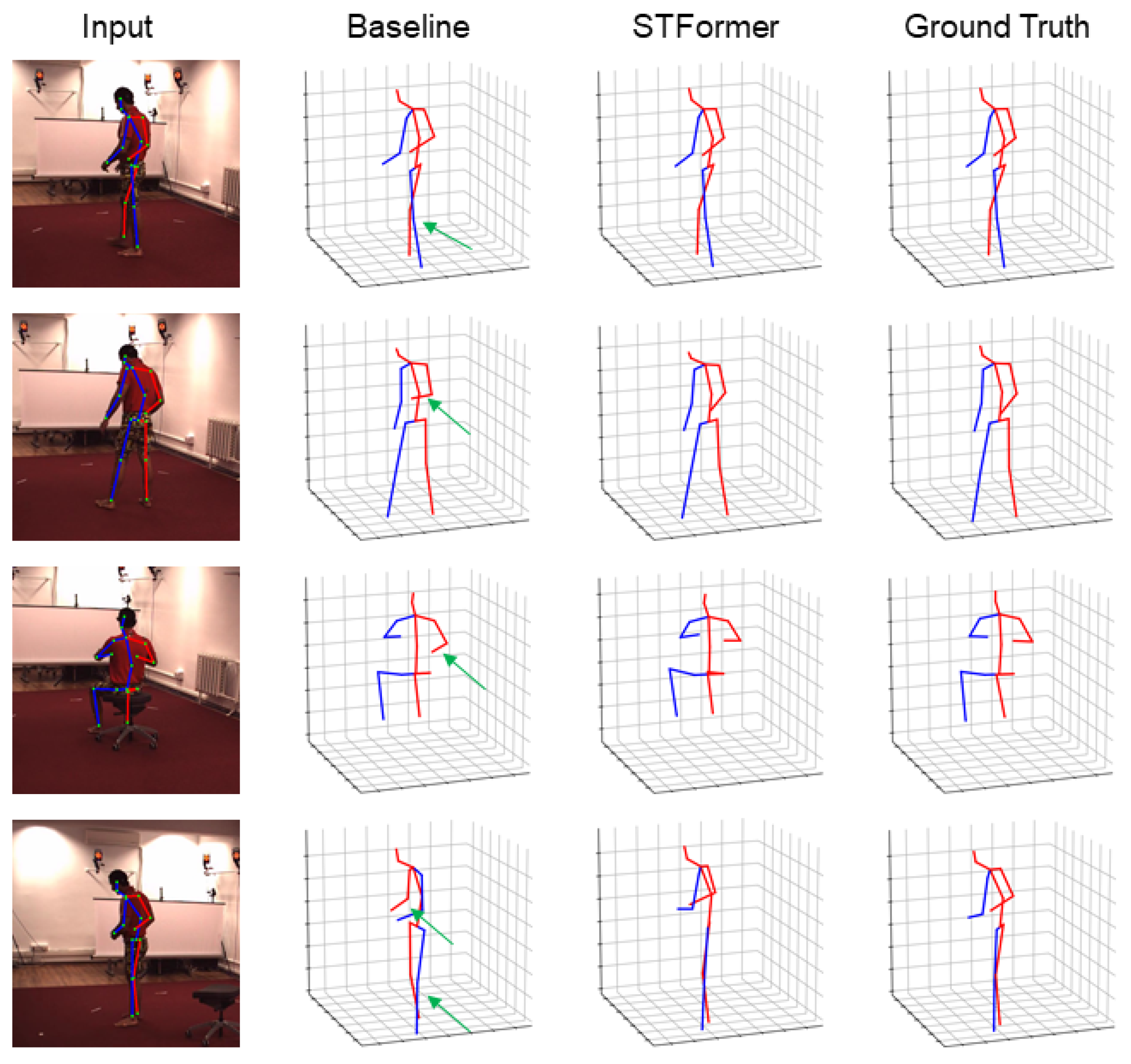

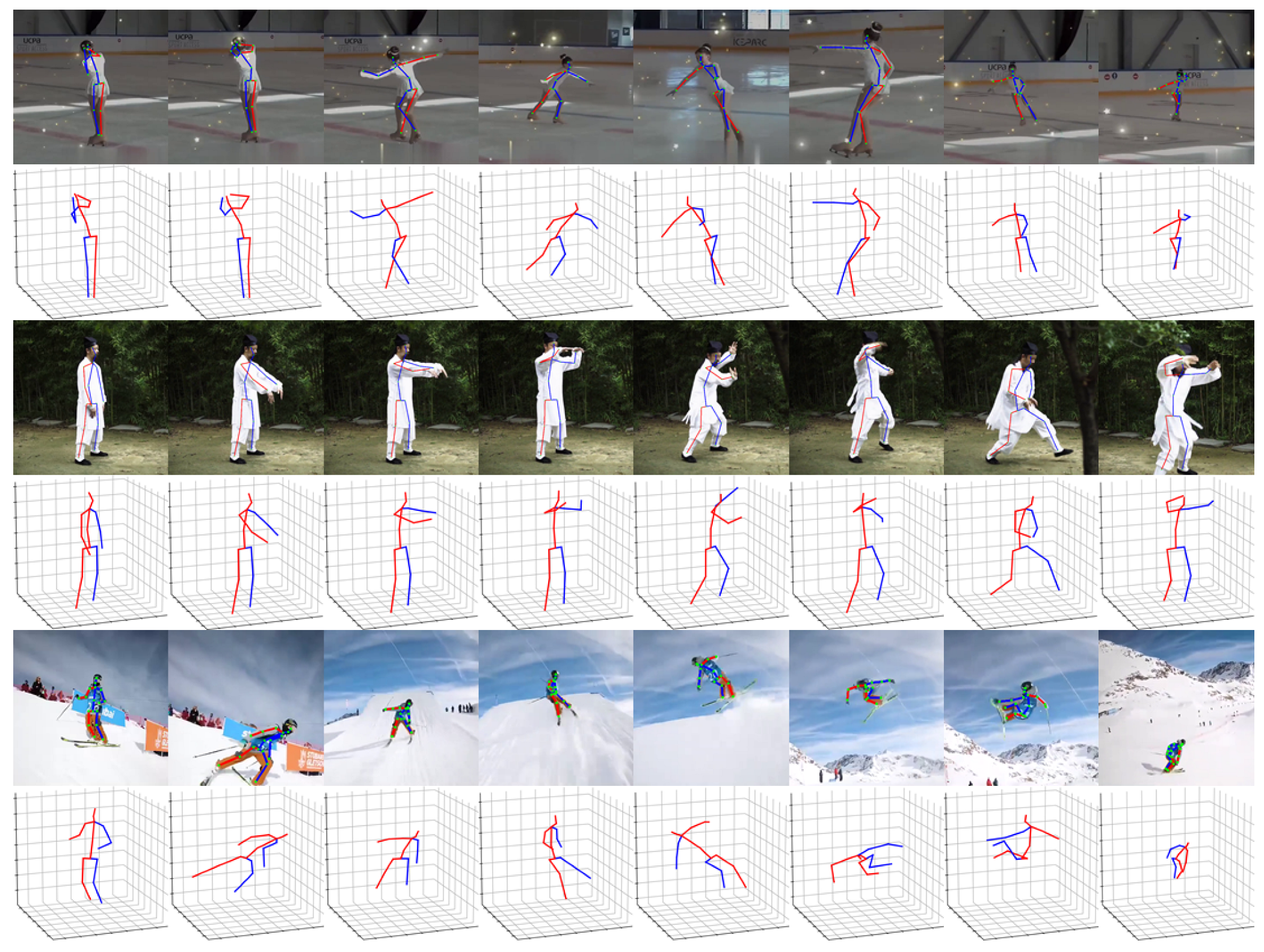

4.5. Qualitative Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Liu, M.; Liu, H.; Chen, C. Enhanced skeleton visualization for view invariant human action recognition. Pattern Recognit. 2017, 68, 346–362.

2. Liu, M.; Yuan, J. Recognizing human actions as the evolution of pose estimation maps. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1159–1168.

3. Wang, P.; Li, W.; Gao, Z.; Tang, C.; Ogunbona, P.O. Depth pooling based large-scale 3-D action recognition with convolutional neural networks. IEEE Trans. Multimed. 2018, 20, 1051–1061.

4. Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

5. Errity, A. Human–computer interaction. In An Introduction to Cyberpsychology; Routledge: Milton Park, UK, 2016; pp. 263–278.

6. Mehta, D.; Sridhar, S.; Sotnychenko, O.; Rhodin, H.; Shafiei, M.; Seidel, H.P.; Xu, W.; Casas, D.; Theobalt, C. VNect: Real-time 3D human pose estimation with a single RGB camera. ACM Trans. Graph. 2017, 36, 1–14.

7. Zheng, J.; Shi, X.; Gorban, A.; Mao, J.; Song, Y.; Qi, C.R.; Liu, T.; Chari, V.; Cornman, A.; Zhou, Y.; et al. Multi-modal 3D human pose estimation with 2D weak supervision in autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4478–4487.

8. Zheng, C.; Zhu, S.; Mendieta, M.; Yang, T.; Chen, C.; Ding, Z. 3D human pose estimation with spatial and temporal transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 11656–11665.

9. Wang, J.; Yan, S.; Xiong, Y.; Lin, D. Motion guided 3D pose estimation from videos. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 764–780.

10. Chen, T.; Fang, C.; Shen, X.; Zhu, Y.; Chen, Z.; Luo, J. Anatomy-aware 3D human pose estimation with bone-based pose decomposition. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 198–209.

11. Li, W.; Liu, H.; Ding, R.; Liu, M.; Wang, P.; Yang, W. Exploiting temporal contexts with strided Transformer for 3D human pose estimation. IEEE Trans. Multimed. 2022, 25, 1282–1293.

12. Iskakov, K.; Burkov, E.; Lempitsky, V.; Malkov, Y. Learnable triangulation of human pose. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7718–7727.

13. Qiu, H.; Wang, C.; Wang, J.; Wang, N.; Zeng, W. Cross view fusion for 3D human pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4342–4351.

14. Pavllo, D.; Feichtenhofer, C.; Grangier, D.; Auli, M. 3D human pose estimation in video with temporal convolutions and semi-supervised training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7753–7762.

15. Cai, Y.; Ge, L.; Liu, J.; Cai, J.; Cham, T.J.; Yuan, J.; Thalmann, N.M. Exploiting spatial-temporal relationships for 3D pose estimation via graph convolutional networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2272–2281.

16. Guo, M.H.; Lu, C.Z.; Liu, Z.N.; Cheng, M.M.; Hu, S.M. Visual attention network. arXiv 2022, arXiv:2202.09741.

17. Li, Y.; Chen, Y.; Wang, N.; Zhang, Z. Scale-aware trident networks for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6054–6063.

18. Kumar, D.; Kukreja, V. Early Recognition of Wheat Powdery Mildew Disease Based on Mask RCNN. In Proceedings of the 2022 International Conference on Data Analytics for Business and Industry (ICDABI), Online, 25–26 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 542–546.

19. Kumar, D.; Kukreja, V. MRISVM: A Object Detection and Feature Vector Machine Based Network for Brown Mite Variation in Wheat Plant. In Proceedings of the 2022 International Conference on Data Analytics for Business and Industry (ICDABI), Online, 25–26 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 707–711.

20. Kumar, D.; Kukreja, V. Application of PSPNET and Fuzzy Logic for Wheat Leaf Rust Disease and its Severity. In Proceedings of the 2022 International Conference on Data Analytics for Business and Industry (ICDABI), Online, 25–26 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 547–551.

21. Kumar, D.; Kukreja, V. A Symbiosis with Panicle-SEG Based CNN for Count the Number of Wheat Ears. In Proceedings of the 2022 10th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 13–14 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

22. Zeng, A.; Sun, X.; Yang, L.; Zhao, N.; Liu, M.; Xu, Q. Learning skeletal graph neural networks for hard 3D pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 11436–11445.

23. Liu, K.; Ding, R.; Zou, Z.; Wang, L.; Tang, W. A comprehensive study of weight sharing in graph networks for 3D human pose estimation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part X 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 318–334.

24. Moon, G.; Lee, K.M. I2L-MeshNet: Image-to-lixel prediction network for accurate 3D human pose and mesh estimation from a single RGB image. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part VII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 752–768.

25. Pavlakos, G.; Zhou, X.; Daniilidis, K. Ordinal depth supervision for 3D human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7307–7316.

26. Lin, K.; Wang, L.; Liu, Z. End-to-end human pose and mesh reconstruction with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1954–1963.

27. Pavlakos, G.; Zhou, X.; Derpanis, K.G.; Daniilidis, K. Coarse-to-fine volumetric prediction for single-image 3D human pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7025–7034.

28. Hossain, M.R.I.; Little, J.J. Exploiting temporal information for 3D human pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 68–84.

29. Martinez, J.; Hossain, R.; Romero, J.; Little, J.J. A simple yet effective baseline for 3D human pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2640–2649.

30. Hassanin, M.; Khamiss, A.; Bennamoun, M.; Boussaid, F.; Radwan, I. CrossFormer: Cross spatio-temporal Transformer for 3D human pose estimation. arXiv 2022, arXiv:2203.13387.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

32. He, S.; Luo, H.; Wang, P.; Wang, F.; Li, H.; Jiang, W. TransReID: Transformer-based object re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 15013–15022.

33. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision Transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

34. Yang, G.; Tang, H.; Ding, M.; Sebe, N.; Ricci, E. Transformer-based attention networks for continuous pixel-wise prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 16269–16279.

35. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

36. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part I 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

37. Li, Y.; Zhang, S.; Wang, Z.; Yang, S.; Yang, W.; Xia, S.T.; Zhou, E. TokenPose: Learning keypoint tokens for human pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 11313–11322.

38. Hendrycks, D.; Gimpel, K. Gaussian error linear units (GELUs). arXiv 2016, arXiv:1606.08415.

39. Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339.

40. Chen, Y.; Wang, Z.; Peng, Y.; Zhang, Z.; Yu, G.; Sun, J. Cascaded pyramid network for multi-person pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7103–7112.

41. Fang, H.S.; Xu, Y.; Wang, W.; Liu, X.; Zhu, S.C. Learning pose grammar to encode human body configuration for 3D pose estimation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

42. Lee, K.; Lee, I.; Lee, S. Propagating LSTM: 3D pose estimation based on joint interdependency. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 119–135.

43. Xu, T.; Takano, W. Graph stacked hourglass networks for 3D human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16105–16114.

44. Zou, Z.; Tang, W. Modulated graph convolutional network for 3D human pose estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 11477–11487.

45. Han, K.; Xiao, A.; Wu, E.; Guo, J.; Xu, C.; Wang, Y. Transformer in Transformer. Adv. Neural Inf. Process. Syst. 2021, 34, 15908–15919.

| Protocol 1 (MPJPE, mm) | Dir | Disc | Eat | Greet | Phone | Photo | Pose | Purch | Sit | SitD | Smoke | Wait | WalkD | Walk | WalkT | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Martinez et al. [29] | 51.8 | 56.2 | 58.1 | 59.0 | 69.5 | 78.4 | 55.2 | 58.1 | 74.0 | 94.6 | 62.3 | 59.1 | 65.1 | 49.5 | 52.4 | 62.9 |
| Fang et al. [41] | 50.1 | 54.3 | 57.0 | 57.1 | 66.6 | 73.3 | 53.4 | 55.7 | 72.8 | 88.6 | 60.3 | 57.7 | 62.7 | 47.5 | 50.6 | 60.4 |
| Hossain et al. [28] | 48.4 | 50.7 | 57.2 | 55.2 | 63.1 | 72.6 | 53.0 | 51.7 | 66.1 | 80.9 | 59.0 | 57.3 | 62.4 | 46.6 | 49.6 | 58.3 |
| Lee et al. [42] | 40.2 | 49.2 | 47.8 | 52.6 | 50.1 | 75.0 | 50.2 | 43.0 | 55.8 | 73.9 | 54.1 | 55.6 | 58.2 | 43.3 | 43.3 | 52.8 |
| Pavllo et al. [14] | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 49.8 |
| Cai et al. [15] | 44.6 | 47.4 | 45.6 | 48.8 | 50.8 | 59.0 | 47.2 | 43.9 | 57.9 | 61.9 | 49.7 | 46.6 | 51.3 | 37.1 | 39.4 | 48.8 |
| METRO [26] | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 54.0 |
| GraphSH [43] | 45.2 | 49.9 | 47.5 | 50.9 | 54.9 | 66.1 | 48.5 | 46.3 | 59.7 | 71.5 | 51.4 | 48.6 | 53.9 | 39.9 | 44.1 | 51.9 |
| PoseFormer [8] | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 49.9 |
| MGCN [44] | 45.4 | 49.2 | 45.7 | 49.4 | 50.4 | 58.2 | 47.9 | 46.0 | 57.5 | 63.0 | 49.7 | 46.6 | 52.2 | 38.9 | 40.8 | 49.4 |
| STFormer (Ours) | 43.6 | 47.3 | 45.3 | 46.5 | 48.3 | 56.5 | 44.7 | 43.3 | 57.3 | 66.9 | 48.4 | 44.7 | 49.9 | 35.6 | 38.6 | 47.8 |
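Protocol 1 in the table above reports MPJPE, the metric also used in the complexity and ablation tables. Under the usual Human3.6M convention, Protocol 2 reports P-MPJPE: the same error after a rigid similarity (Procrustes) alignment of each predicted pose to the ground truth. The sketch below covers Protocol 1 only. It assumes root-relative poses of equal size, uses the illustrative function name `mpjpe`, and does not implement the Procrustes step.

```python
import math
from typing import Sequence, Tuple

Joint = Tuple[float, float, float]  # (x, y, z), e.g. in millimetres


def mpjpe(pred: Sequence[Sequence[Joint]], gt: Sequence[Sequence[Joint]]) -> float:
    """Mean per-joint position error, averaged over all frames and joints.

    Assumes both pose sequences are already root-relative and have matching
    frame and joint counts; no Procrustes alignment is applied.
    """
    if len(pred) != len(gt):
        raise ValueError("pred and gt must contain the same number of frames")
    dists = []
    for pred_frame, gt_frame in zip(pred, gt):
        if len(pred_frame) != len(gt_frame):
            raise ValueError("frames must contain the same number of joints")
        dists.extend(math.dist(p, g) for p, g in zip(pred_frame, gt_frame))
    return sum(dists) / len(dists)


if __name__ == "__main__":
    gt = [[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]
    pred = [[(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]]  # second joint is 5 units off
    print(mpjpe(pred, gt))  # 2.5
```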

| Protocol 2 (P-MPJPE, mm) | Dir | Disc | Eat | Greet | Phone | Photo | Pose | Purch | Sit | SitD | Smoke | Wait | WalkD | Walk | WalkT | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Martinez et al. [29] | 39.5 | 43.2 | 46.4 | 47.0 | 51.0 | 56.0 | 41.4 | 40.6 | 56.5 | 49.4 | 49.2 | 45.0 | 49.5 | 38.0 | 43.1 | 47.7 |
| Fang et al. [41] | 38.2 | 41.7 | 43.7 | 44.9 | 48.5 | 55.3 | 40.2 | 38.2 | 54.5 | 64.4 | 47.2 | 44.3 | 47.3 | 36.7 | 41.7 | 45.7 |
| Hossain et al. [28] | 35.7 | 39.3 | 44.6 | 43.0 | 47.2 | 54.0 | 38.3 | 37.5 | 51.6 | 61.3 | 46.5 | 41.4 | 47.3 | 34.2 | 39.4 | 44.1 |
| Lee et al. [42] | 34.9 | 35.2 | 43.2 | 42.6 | 46.2 | 55.0 | 37.6 | 38.8 | 50.9 | 67.3 | 48.9 | 35.2 | 50.7 | 31.0 | 34.6 | 43.4 |
| Pavlakos et al. [25] | 34.7 | 39.8 | 41.8 | 38.6 | 42.5 | 47.5 | 38.0 | 36.6 | 50.7 | 56.8 | 42.6 | 39.6 | 43.9 | 32.1 | 36.5 | 41.8 |
| Cai et al. [15] | 35.7 | 37.8 | 36.9 | 40.7 | 39.6 | 45.2 | 37.4 | 34.5 | 46.9 | 50.1 | 40.5 | 36.1 | 41.0 | 29.6 | 33.2 | 39.0 |
| Liu et al. [23] | 35.9 | 40.0 | 38.0 | 41.5 | 42.5 | 51.4 | 37.8 | 36.0 | 48.6 | 56.6 | 41.8 | 38.3 | 42.7 | 31.7 | 36.2 | 41.2 |
| STFormer (Ours) | 33.5 | 36.9 | 36.2 | 37.6 | 37.7 | 43.3 | 34.0 | 32.9 | 46.4 | 52.9 | 39.1 | 34.1 | 39.4 | 27.5 | 31.7 | 37.6 |
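Assuming the Avg column is the unweighted mean over the 15 actions (the usual Human3.6M convention), it can be recomputed from the per-action entries. The short check below does this for the two STFormer rows, with values copied from the Protocol 1 and Protocol 2 tables. The helper name `action_average` is illustrative.

```python
# Per-action errors (mm) of the STFormer rows, in column order Dir ... WalkT.
STFORMER_P1 = [43.6, 47.3, 45.3, 46.5, 48.3, 56.5, 44.7, 43.3,
               57.3, 66.9, 48.4, 44.7, 49.9, 35.6, 38.6]
STFORMER_P2 = [33.5, 36.9, 36.2, 37.6, 37.7, 43.3, 34.0, 32.9,
               46.4, 52.9, 39.1, 34.1, 39.4, 27.5, 31.7]


def action_average(per_action):
    """Unweighted mean over actions (the assumed definition of the Avg column)."""
    return sum(per_action) / len(per_action)


print(round(action_average(STFORMER_P1), 1))  # 47.8
print(round(action_average(STFORMER_P2), 1))  # 37.5
```

The Protocol 1 result matches the reported 47.8 mm. The Protocol 2 result is 37.5 mm against a reported 37.6 mm. A 0.1 mm gap of this size is what one would expect if the published average was taken over unrounded per-action errors.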

| Method | f (frames) | Param (M) | FLOPs (M) | MPJPE (mm) | FPS |
|---|---|---|---|---|---|
| Hossain et al. [28] | - | 16.95 | 33.88 | 58.3 | - |
| Pavllo et al. [14] | 27 | 8.56 | 17 | 48.8 | 1492 |
| PoseFormer [8] | 9 | 9.58 | 150 | 49.9 | 320 |
| CrossFormer [30] | 9 | 9.93 | 163 | 48.5 | 284 |
| STFormer (Ours) | 9 | 7.21 | 137 | 47.8 | 155 |
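At the same receptive field (f = 9), the relative parameter and FLOP savings of STFormer over PoseFormer [8] work out from the table as follows:

$$
\frac{9.58 - 7.21}{9.58} \approx 24.7\%\ \text{fewer parameters}, \qquad
\frac{150 - 137}{150} \approx 8.7\%\ \text{fewer FLOPs}.
$$

Against CrossFormer [30] (9.93 M parameters, 163 M FLOPs), the corresponding figures are about 27.4% and 16.0%.

Throughput moves the other way. STFormer's 155 FPS is roughly 48% of PoseFormer's 320 FPS, so in this table the lower parameter and FLOP counts do not translate into faster inference.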

| Method | f (frames) | Param (M) | FLOPs (M) | MPJPE (mm) |
|---|---|---|---|---|
| STFormer | 3 | 7.210262 | 45.9 | 50.6 |
| STFormer | 5 | 7.211286 | 76.6 | 49.5 |
| STFormer | 7 | 7.212310 | 107.2 | 48.6 |
| STFormer | 9 | 7.213334 | 137.8 | 47.8 |
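The receptive-field table also shows how cost scales with the number of input frames f. The sketch below takes first differences between consecutive rows, using the values exactly as printed. The name `first_differences` is illustrative.

```python
# (frames f, parameters in millions, FLOPs in millions), copied from the table
ROWS = [(3, 7.210262, 45.9), (5, 7.211286, 76.6), (7, 7.212310, 107.2), (9, 7.213334, 137.8)]


def first_differences(rows):
    """Change in frames, parameter count (absolute) and FLOPs (M) between consecutive rows."""
    return [(f1 - f0, round((p1 - p0) * 1e6), round(c1 - c0, 1))
            for (f0, p0, c0), (f1, p1, c1) in zip(rows, rows[1:])]


for step in first_differences(ROWS):
    print(step)
# (2, 1024, 30.7)
# (2, 1024, 30.6)
# (2, 1024, 30.6)
```

Each additional pair of frames adds exactly 1024 parameters (512 per frame) and about 30.6 to 30.7 M FLOPs (roughly 15.3 M per frame). Over this range, FLOPs therefore grow approximately linearly in f while the parameter count stays essentially constant, and MPJPE falls from 50.6 mm at f = 3 to 47.8 mm at f = 9.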

| (a) | (b) | (c) | (d) |
|---|---|---|---|
| 47.8 | 48.7 | 50.2 | 51.0 |

| Method | SFE | TFE | SR | TR | MPJPE (mm) |
|---|---|---|---|---|---|
| SFE | ✓ | × | × | × | 57.9 |
| TFE | × | ✓ | × | × | 49.1 |
| FE-SR | ✓ | ✓ | ✓ | × | 48.2 |
| FE-TR | ✓ | ✓ | × | ✓ | 49.1 |
| STFormer | ✓ | ✓ | ✓ | ✓ | 47.8 |
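This reading takes SFE and TFE to be the spatial and temporal feature-extraction branches of Section 3.2, and SR and TR to be the Spatial Reconstruction and Temporal Refinement blocks of Section 3.3. On that basis, the ablation rows can be compared against the temporal-only baseline (49.1 mm). These differences are a reading of the table, not figures reported by the authors:

$$
\begin{aligned}
\Delta_{\text{FE-SR}} &= 48.2 - 49.1 = -0.9\ \text{mm}, \\
\Delta_{\text{FE-TR}} &= 49.1 - 49.1 = 0.0\ \text{mm}, \\
\Delta_{\text{STFormer}} &= 47.8 - 49.1 = -1.3\ \text{mm}, \\
\Delta_{\text{TR}\mid\text{SR}} &= 47.8 - 48.2 = -0.4\ \text{mm}.
\end{aligned}
$$

TR brings no gain on its own, but it removes a further 0.4 mm once SR is present. This fits the design in which TR receives the features reconstructed by SR. Note that the table has no row with both branches and no interaction block, so the deltas against TFE also fold in whatever SFE contributes.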

| | | | | MPJPE (mm) |
|---|---|---|---|---|
| 2 | 1 | 16 | 512 | 49.7 |
| 2 | 1 | 32 | 512 | 47.8 |
| 2 | 1 | 48 | 512 | 48.8 |
| 2 | 1 | 32 | 256 | 48.9 |
| 2 | 1 | 32 | 512 | 47.8 |
| 2 | 1 | 32 | 768 | 48.1 |
| 2 | 1 | 32 | 512 | 47.8 |
| 3 | 1 | 32 | 512 | 48.9 |
| 2 | 2 | 32 | 512 | 48.7 |
| 1 | 2 | 32 | 512 | 49.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, H.; Shi, Q.; Shan, B. Three-Dimensional Human Pose Estimation with Spatial–Temporal Interaction Enhancement Transformer. Appl. Sci. 2023, 13, 5093. https://doi.org/10.3390/app13085093

Wang H, Shi Q, Shan B. Three-Dimensional Human Pose Estimation with Spatial–Temporal Interaction Enhancement Transformer. Applied Sciences. 2023; 13(8):5093. https://doi.org/10.3390/app13085093

Chicago/Turabian Style: Wang, Haijian, Qingxuan Shi, and Beiguang Shan. 2023. "Three-Dimensional Human Pose Estimation with Spatial–Temporal Interaction Enhancement Transformer" Applied Sciences 13, no. 8: 5093. https://doi.org/10.3390/app13085093

APA Style: Wang, H., Shi, Q., & Shan, B. (2023). Three-Dimensional Human Pose Estimation with Spatial–Temporal Interaction Enhancement Transformer. Applied Sciences, 13(8), 5093. https://doi.org/10.3390/app13085093