Abstract

Effective denoising is essential in seismic data processing because seismic acquisition is inherently susceptible to interference such as random and coherent noise. For random noise, the residual neural network (Resnet) has been widely used because of its simplicity and strong noise-suppression performance. Despite these advances, the denoising ability of the conventional Resnet can still be improved. This paper therefore proposes an attention-based modification of Resnet aimed at overcoming these limitations and enhancing the seismic signal. Specifically, we add the convolutional block attention module (CBAM) after the convolutional layer of the residual module and add channel attention on the shortcut connections to filter out the disturbance. We also replace the commonly used ReLU activation function in the network with ELU, which is better suited to suppressing seismic noise. Experiments on both synthetic and real datasets show that the proposed method improves the denoising performance of Resnet and remains stable on seismic data with complex waveforms. The results indicate that the approach substantially increases the signal-to-noise ratio (SNR), enabling efficient and robust extraction of the underlying signal from noisy observations.

1. Introduction

Seismic data acquisition and processing are fraught with noise. The complexity and variability of the exploration environment compound the problem and further degrade the recorded data. As a consequence, the SNR of such recordings drops sharply, which severely impedes accurate imaging of the subsurface. Denoising is therefore a crucial step before subsequent processing and an important means of improving the SNR. Seismic noise falls into two categories: random noise and coherent noise. Random noise has no fixed frequency or propagation direction, which makes it difficult to separate from the useful signal. In response, researchers have proposed many effective methods [1,2,3]. Transform domain-based methods form one category of seismic signal enhancement techniques. They exploit the sparsity of the signal in a specific transform domain: a sparsity-promoting transform basis converts the seismic data to the sparse domain, a threshold is applied to the resulting coefficients, and the processed coefficients are inverse transformed to the original domain to yield the denoised signal. Examples include the Fourier transform, Radon transform [4], wavelet transform, curvelet transform, contourlet transform [5], shearlet transform [6], seislet transform [7], K-SVD, and the DDTF dictionary [8]. These methods can effectively extract salient signal features and separate them from noise.
Nonetheless, they require many iterative experiments to select the parameters that yield the best results. Decomposition-based methods, such as EMD [9], SVD [10], and SSA [11], decompose the seismic signal into its principal components and discard the residual components associated with noise, thereby restoring the original signal. To achieve this, however, the optimal number of decomposition layers must be determined so that the discarded components consist solely of noise.
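The transform-domain pipeline described above (forward transform, thresholding of the coefficients, inverse transform) can be sketched with a one-level Haar wavelet. This is only an illustration: the Haar basis, the soft-threshold rule, and the function names are assumptions chosen for brevity, not the settings used in the experiments of this paper.

```python
import math

def haar_forward(signal):
    """One-level orthonormal Haar transform of an even-length sequence."""
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail

def haar_inverse(approx, detail):
    """Inverse of haar_forward: rebuild the samples pair by pair."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out

def soft_threshold(coeffs, thr):
    """Shrink each coefficient toward zero by thr; small ones become zero."""
    return [math.copysign(max(abs(c) - thr, 0.0), c) for c in coeffs]

def haar_denoise(signal, thr):
    """Transform, threshold the detail coefficients, and transform back."""
    approx, detail = haar_forward(signal)
    return haar_inverse(approx, soft_threshold(detail, thr))
```

In practice the wavelet basis, the number of decomposition levels, and the threshold all have to be tuned, which is the parameter-selection burden noted above.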

Deep learning has been widely applied in seismic data processing as an effective feature extraction tool. For example, Kaur et al. [12] used generative adversarial networks (GAN) for seismic data interpolation; Tsai et al. [13] proposed a deep semi-supervised neural network for automatic seismic first break picking; Cao et al. [14] introduced long short-term memory (LSTM) networks to enhance the accuracy of inversion; and Jing et al. [15] used point-spread function-based convolution to generate synthetic samples for fault detection. In seismic data denoising, Yao et al. [16] applied the DnResNeXt architecture to suppress noise in seismic data collected from challenging desert areas, achieving better denoising performance than the DnCNN method. Similarly, Wang et al. [17] performed simultaneous denoising and interpolation with residual dense networks, significantly improving the SNR of oceanic data. In addition, Zhong et al. [18] integrated the residual structure with U-Net to remove desert seismic noise effectively. These techniques demonstrate the potential of deep learning in seismic data processing and analysis and open avenues for improved interpretation of subsurface geological structures.

Attention mechanisms, which help identify salient information while reducing the impact of irrelevant inputs, were first demonstrated in domains such as speech processing [19] and computer vision. They can be divided into soft attention, hard attention, and self-attention. Hard attention is not differentiable, and self-attention incurs a high computational cost. Soft attention, whose weights can be learned, has therefore been studied more widely. For example, Liu et al. [20] introduced a multiscale residual dense dual attention network for SAR image denoising, which addresses speckle noise through a straightforward pixel attention module; Wang et al. [21] proposed a novel channel–spatial attention module to improve the performance of U-Net networks. Given these advances in image processing, researchers have since brought attention mechanisms into seismic data processing to improve signal quality. For example, Dong et al. [22] suppressed noise in seismic data by combining multiscale processing with an attention mechanism for selective feature extraction, improving the overall signal quality; Ma et al. [23] merged attention mechanisms with neural networks to enhance the efficacy of tomographic detection; and Li et al. [24] proposed a seismic phase picking technique that integrates attention mechanisms with U-Net, which has notable potential to improve the accuracy and reliability of seismic phase identification.

Driven by this previous research, this study proposes ResATnet, a seismic data denoising method that integrates an attention mechanism into Resnet to improve its performance. In particular, we add an attention module after the second convolutional layer of each residual block in Resnet and incorporate channel attention (CA) into the shortcut connections of the residual blocks. Additionally, we replace the ReLU activation function used in the network with ELU. Further details on these modifications are given in Section 2.

The main contributions of this study consist of:

- This proposed technique for denoising seismic data synergistically integrates a residual neural network and attention mechanism, exhibiting potential for improving the accuracy and dependability of seismic data analysis.

- The validation of the proposed method, using synthetic and actual data, highlights its capacity as a reliable and robust technique for seismic data denoising.

- A network structure that synergistically integrates unconventional activation functions and attention mechanism is proposed as an effective solution.

The rest of the paper is organized as follows: Section 2 describes the methodology, the evaluation criteria, and the model used. Section 3 and Section 4 examine the experimental results of our approach on synthetic and real seismic data, respectively, and analyze the findings. Finally, Section 5 summarizes the main points of the paper, discusses the implications of our research, and outlines directions for future work.

2. Methods and Evaluation Index

2.1. ResATnet Denoising Principle

Noisy data is the sum of clean data and noise , and separating these two components is essential for accurate signal interpretation. Here is the calculation formula:

The foremost objective of deep learning is to reduce the divergence between the unpolluted signal and the approximated signal by creating intricate mappings that facilitate the recovery of a pristine, untainted signal from seismic data plagued by noise. The mathematical expressions are:

where stands for mapping, is the parameter of the neural network, and denotes the signal retrieved from the noise by the neural network, which constitutes an estimation of the pristine signal, . Once the mapping is established, the neural network is trained to traverse the loss function in reverse, with the objective of extracting meaningful clean signal features from the noisy data, which can be expressed as:

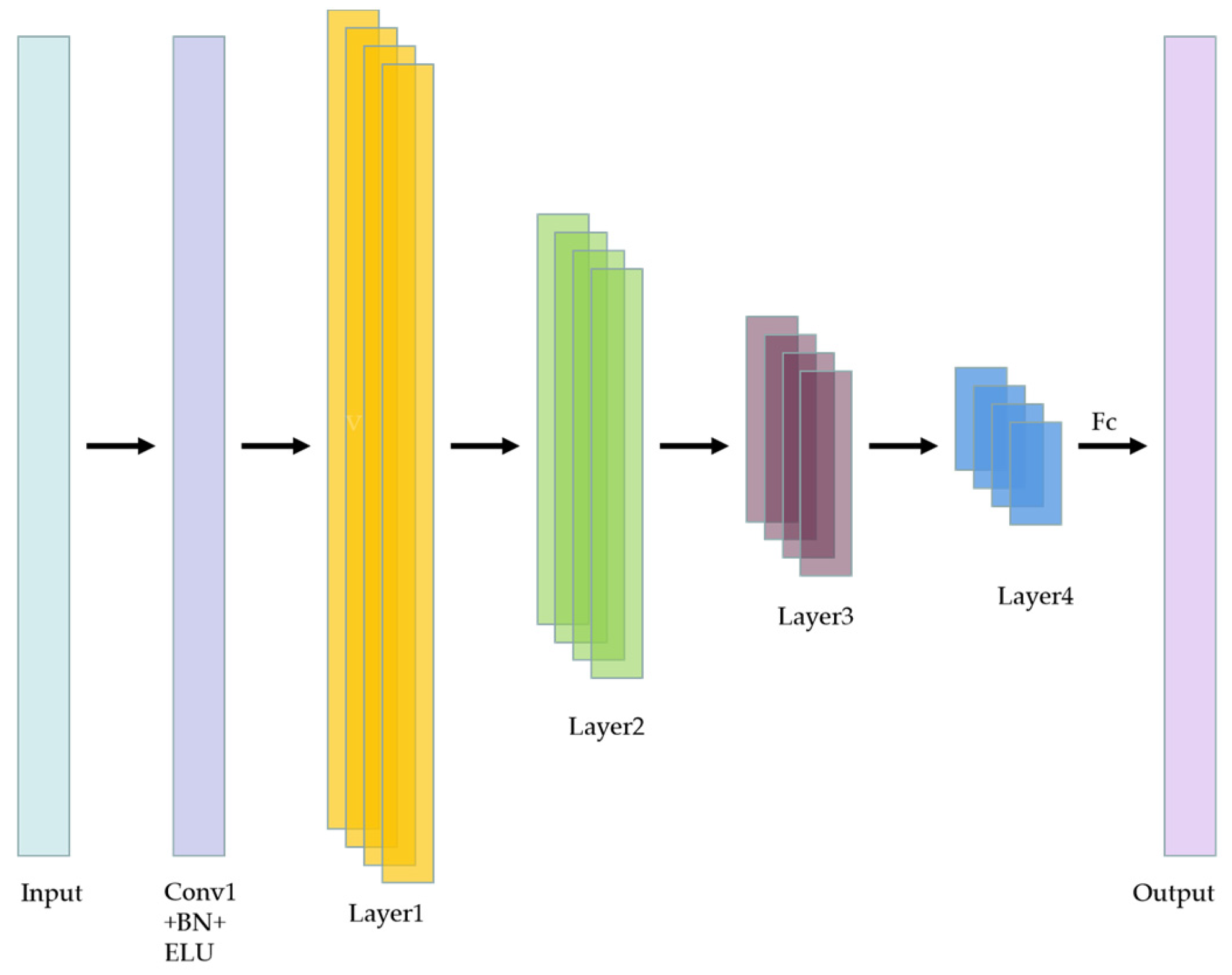

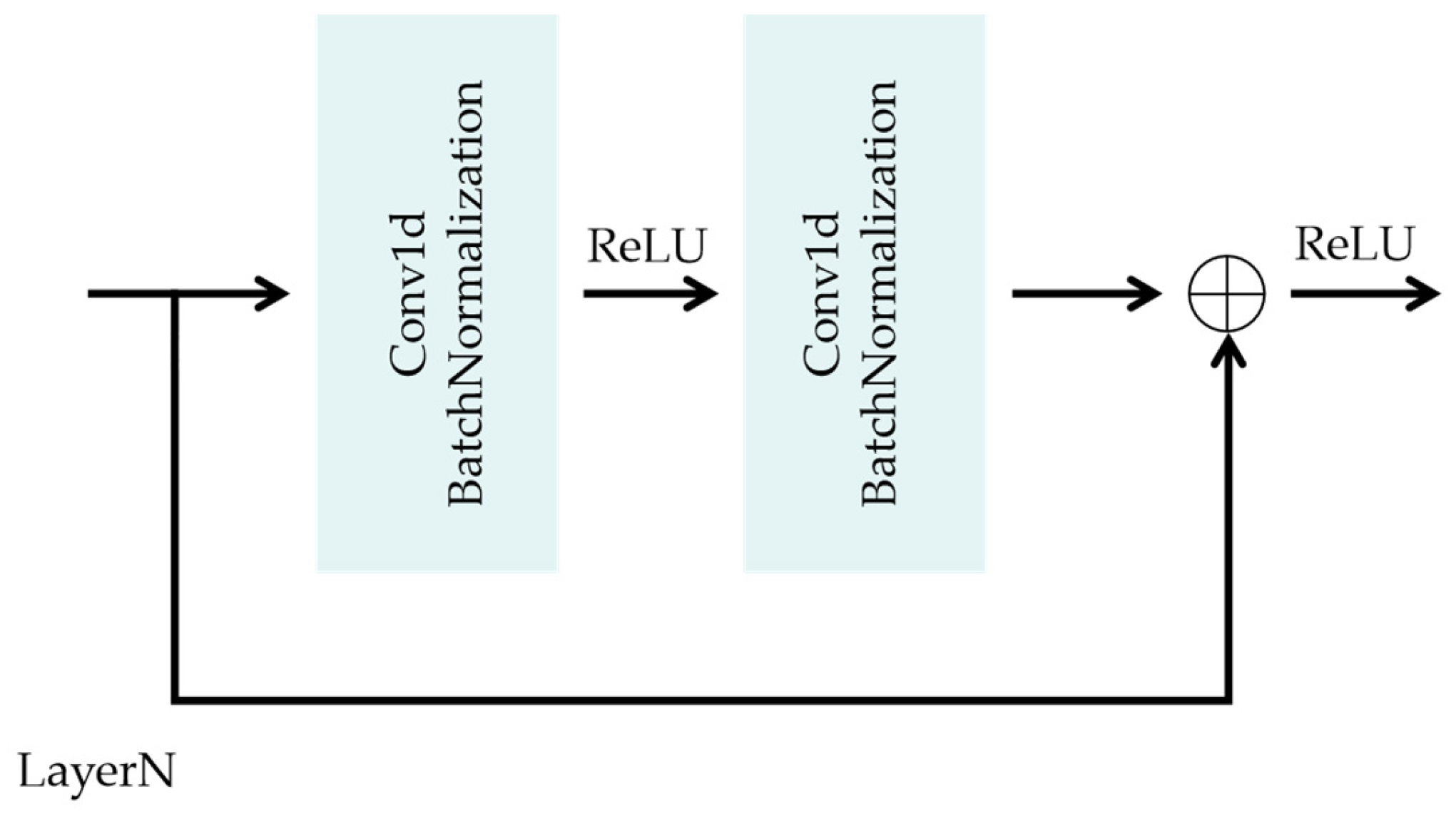

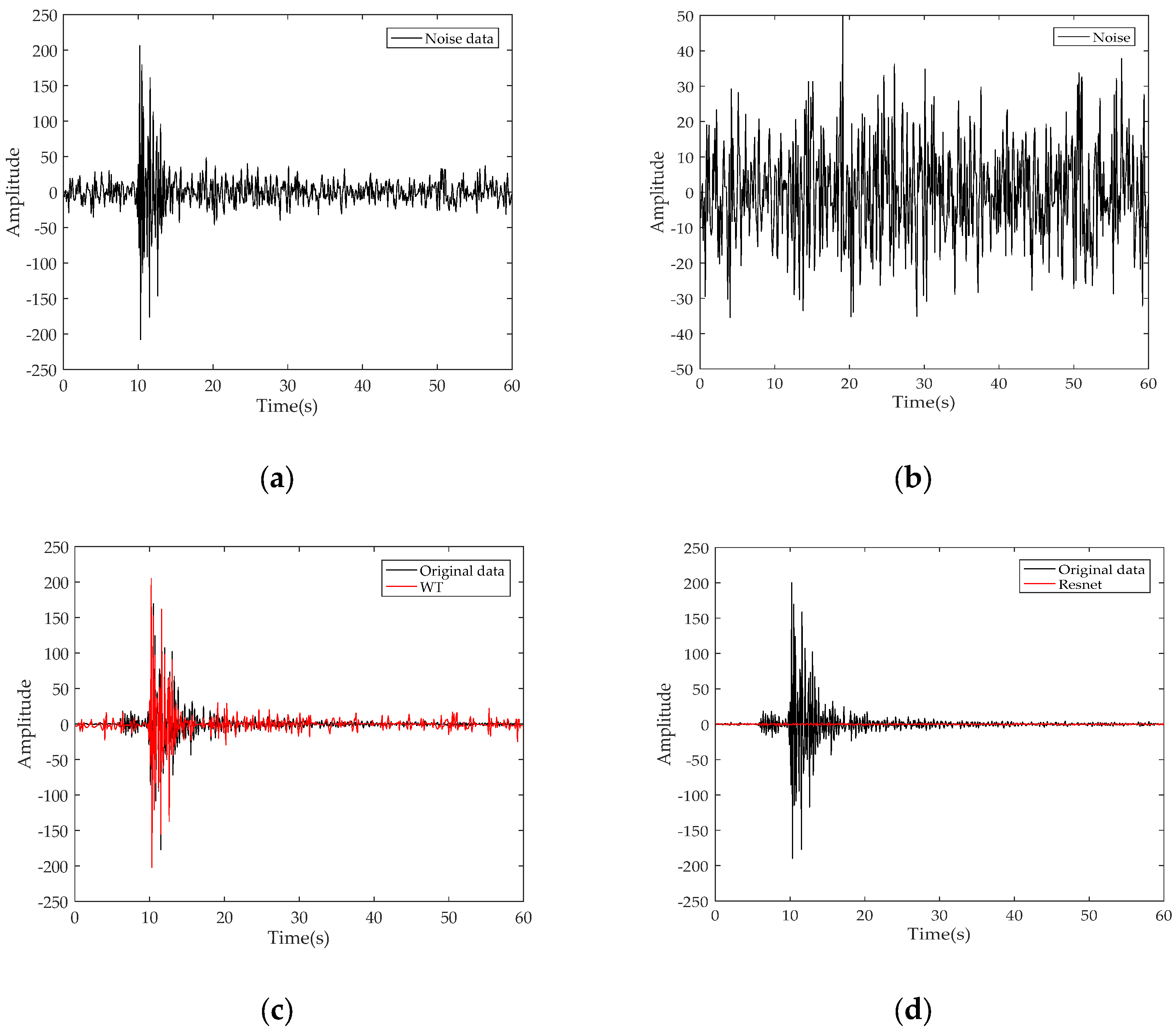

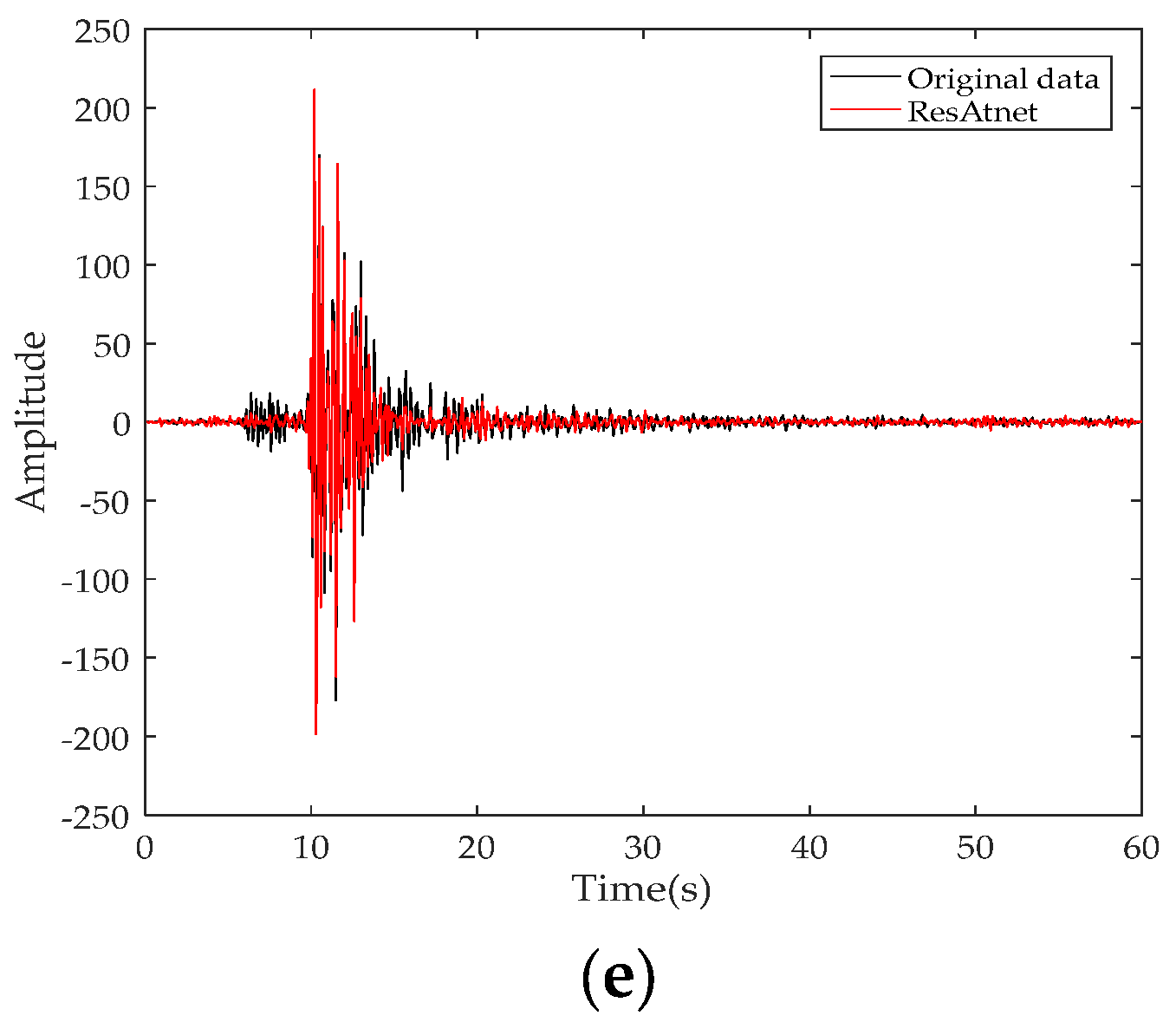

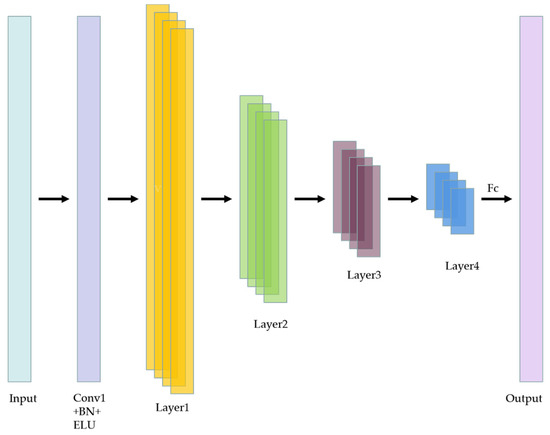

Where represents the loss function, and is the batch size. Figure 1 shows the network structure of ResATnet. The apparent resemblance of its structure to that of the Resnet18 network is noteworthy because the method proposed in this paper is an obvious modification on the residual block of Resnet18. The original Resnet18 network comprises residual blocks, consisting of a pair of weight layers with shortcut connections, which enables the network to circumvent the vanishing gradient problem and attain improved performance in training deep neural networks (Figure 2).
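A minimal sketch of the additive noise model and of a batch loss of the kind described above. The half mean squared error form is an assumption (a common choice for denoising networks, not confirmed by the text here), and the function names are illustrative.

```python
def add_noise(clean, noise):
    """Additive noise model: each noisy sample is clean sample plus noise."""
    return [x + n for x, n in zip(clean, noise)]

def batch_half_mse_loss(predicted_batch, clean_batch):
    """Squared L2 distance between estimate and clean trace, summed over the
    batch and divided by twice the batch size (assumed loss form)."""
    batch_size = len(clean_batch)
    total = 0.0
    for predicted, clean in zip(predicted_batch, clean_batch):
        total += sum((p - c) ** 2 for p, c in zip(predicted, clean))
    return total / (2 * batch_size)
```

During training, the network output for each noisy trace would take the place of `predicted_batch`, and the parameters would be updated to reduce this value.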

Figure 1.

The network structure of ResATnet.

Figure 2.

Resnet’s residual block.

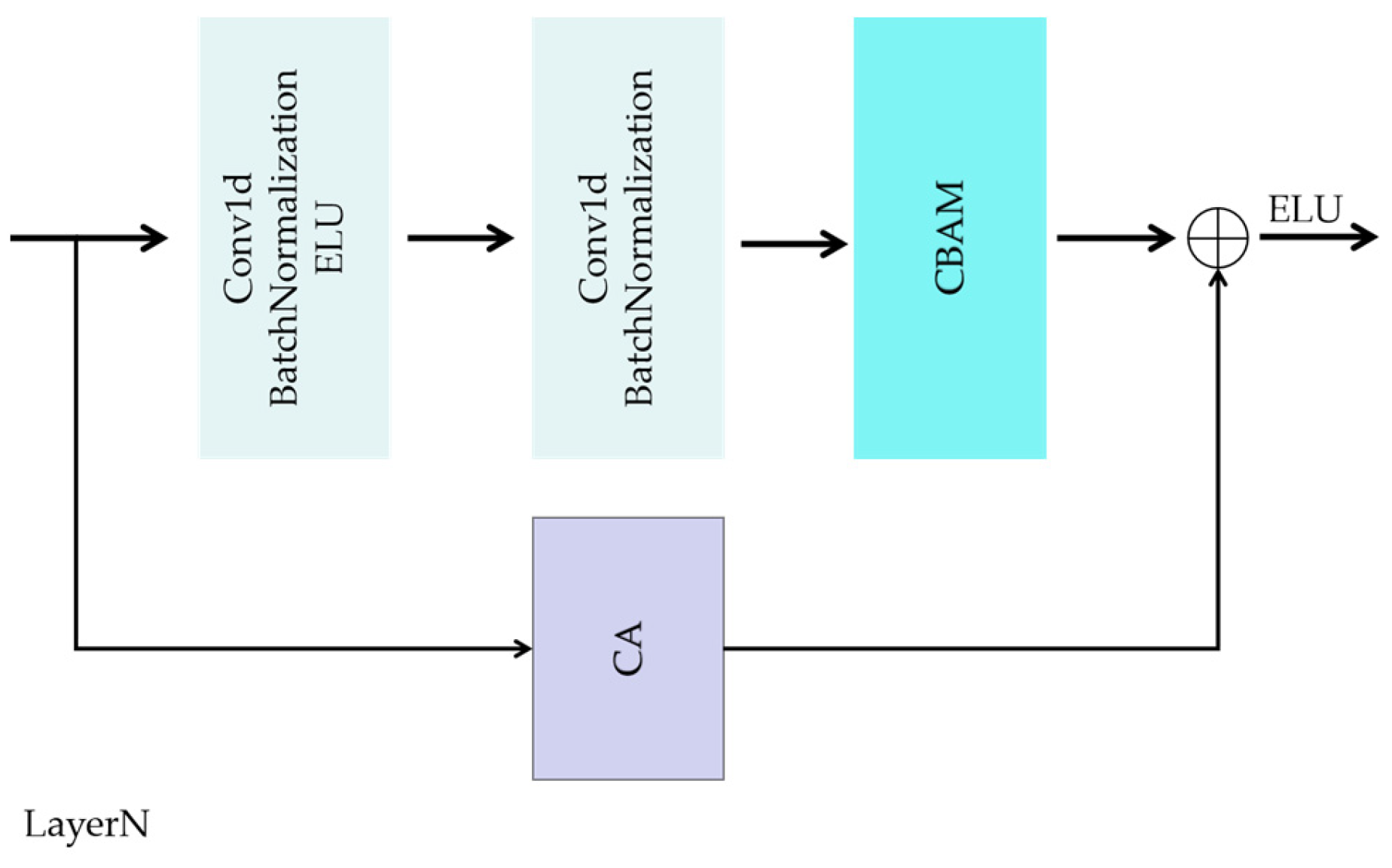

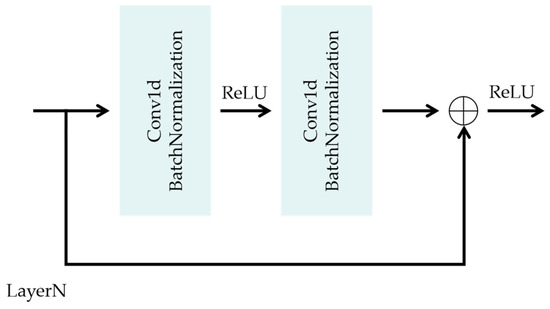

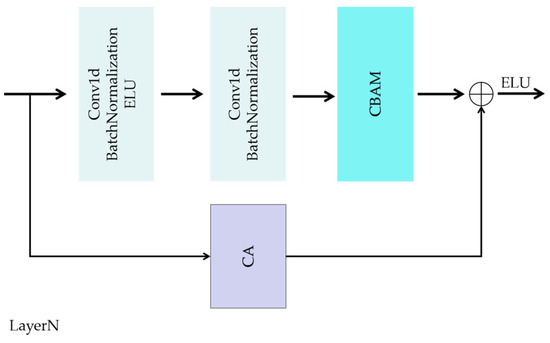

Shortcut connections add the feature map entering the current residual block directly to the features extracted by the weight layers. This practice propagates noise throughout the network and consequently impedes the denoising performance of the residual neural network. Motivated by the attention mechanism in [25], we introduce attention into the residual block of Resnet18 to suppress noise features and amplify informative signal features, thereby improving the denoising performance of the network. Specifically, the signal features extracted by the weight layers are enhanced using CBAM after the second weight layer of the residual block, and the noise on the shortcut connection is filtered using CA. The filtered features are then added to the features extracted by the weight layers and finally passed through the activation function (ReLU in the original Resnet18, replaced by ELU in our network as described later in this section) to obtain the output features (Figure 3).

Figure 3.

ResATnet’s residual block.

The convolutional block attention module, a soft attention module proposed by Woo et al. [26], is a straightforward yet powerful mechanism for selectively enhancing salient features within a neural network. Other soft attention modules, such as the spatial attention module and the channel attention module [27,28], also exist; CBAM is a hybrid module that combines both. In CBAM, the individual channels of a feature map are weighted first, and a spatial weighting is then applied to obtain the final weighted feature map. Specifically:

The channel attention module (CAM) extracts channel weights via pooling that compresses the spatial dimension while preserving the channel dimension. In the CAM architecture, the feature map is first passed through two parallel pooling layers, MaxPool and AvgPool, followed by compression and recovery operations in a shared multilayer perceptron (MLP), which uses the ReLU activation function. The two resulting features are then summed and passed through the sigmoid activation function to generate the final channel attention coefficients. These coefficients are applied to the original feature map to produce a channel-weighted feature map. The formula can be written as:

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) = \sigma\big(W_1(W_0(F_{avg})) + W_1(W_0(F_{max}))\big)$$

where $F$ is the feature map, $\sigma$ is the sigmoid function, and $W_0$, $W_1$ are the corresponding parameters of the MLP.
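A minimal sketch of the channel attention computation described above for 1D feature maps, written in plain Python so the data flow is visible. The weight matrices `w0` and `w1` stand for the two MLP layers; their shapes, the absence of bias terms, and all names are assumptions for illustration.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def shared_mlp(vec, w0, w1):
    """Compress with w0, apply ReLU, then recover the channel count with w1."""
    hidden = [max(h, 0.0) for h in matvec(w0, vec)]
    return matvec(w1, hidden)

def channel_attention(feature_map, w0, w1):
    """feature_map: list of C channels, each a list of L samples."""
    avg_desc = [sum(ch) / len(ch) for ch in feature_map]  # AvgPool over length
    max_desc = [max(ch) for ch in feature_map]            # MaxPool over length
    summed = [a + m for a, m in zip(shared_mlp(avg_desc, w0, w1),
                                    shared_mlp(max_desc, w0, w1))]
    weights = [sigmoid(s) for s in summed]                # one weight per channel
    scaled = [[w * v for v in ch] for w, ch in zip(weights, feature_map)]
    return weights, scaled
```

Both pooled descriptors pass through the same MLP, so the module adds only the parameters of `w0` and `w1`.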

Next, the spatial attention module (SAM) processes the feature map output by the channel attention module in two steps. MaxPool and AvgPool are applied along the channel dimension to obtain two feature maps, each with a single channel. These two feature maps are concatenated along the channel dimension and passed through a convolutional layer that compresses the number of channels back to 1. The result is input to a sigmoid function, yielding the spatial attention map. Finally, the spatial attention map is multiplied element-wise with the feature map that was input to the spatial attention module to generate the refined feature map. The formula can be written as:

$$M_s(F') = \sigma\big(f^{7}([\mathrm{AvgPool}(F'); \mathrm{MaxPool}(F')])\big)$$

where $F'$ is the feature map after channel processing, and $f^{7}$ is the convolution layer that reduces the number of channels, with a convolution kernel size of 7.
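The spatial attention step described above can be sketched for 1D data as follows. The zero padding that keeps the output length equal to the input length, the optional bias, and the names are assumptions; the kernel holds one row of taps for the average-pooled map and one for the max-pooled map.

```python
import math

def spatial_attention(feature_map, kernel, bias=0.0):
    """feature_map: C channels x L samples; kernel: 2 rows (avg, max) x K taps."""
    n_ch = len(feature_map)
    length = len(feature_map[0])
    # Pool across channels so that each pooled map has a single channel.
    avg_map = [sum(ch[t] for ch in feature_map) / n_ch for t in range(length)]
    max_map = [max(ch[t] for ch in feature_map) for t in range(length)]
    pad = len(kernel[0]) // 2                  # zero padding keeps length L
    weights = []
    for t in range(length):
        acc = bias
        for row, pooled in zip(kernel, (avg_map, max_map)):
            for k, w in enumerate(row):
                idx = t + k - pad
                if 0 <= idx < length:
                    acc += w * pooled[idx]
        weights.append(1.0 / (1.0 + math.exp(-acc)))  # sigmoid
    scaled = [[w * v for w, v in zip(weights, ch)] for ch in feature_map]
    return weights, scaled
```

With a kernel of 7 taps per row, each position's weight depends on a window of 7 neighbouring samples in the two pooled maps.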

To avoid the introduction of any superfluous channel attention mechanisms, the current study strategically employs the channel attention module that is embedded in CBAM for the residual block’s shortcut connections. This circumvents the need for an additional channel attention model, thereby ensuring streamlined architecture and efficient utilization of computational resources.

Another difference between our proposed model and the Resnet18 network lies in the activation function. Instead of the ReLU function conventionally used in the Resnet18 architecture, we adopt an activation function that is better suited to the requirements of our model.

The ELU [29] activation function combines desirable properties of both the sigmoid and ReLU functions, which makes it well suited to handling noise and converging quickly. This can be attributed to the fact that the output mean of ELU is close to zero, which promotes greater stability and faster convergence during training. We therefore use the ELU activation function in place of ReLU in both the Resnet18 and CBAM architectures to further improve the denoising capability of the residual network. The mathematical expression of the ELU activation function is:

$$\mathrm{ELU}(x) = \begin{cases} x, & x > 0 \\ \alpha\,(e^{x} - 1), & x \le 0 \end{cases}$$

where $\alpha$ is the hyperparameter and $x$ is the feature value input to the activation function.
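As a small illustration of the ELU definition, the sketch below evaluates it for scalar inputs. The default `alpha=1.0` is an assumption (a common default in deep learning libraries); the value used in this paper is not stated here.

```python
import math

def elu(x, alpha=1.0):
    """ELU: identity for positive inputs, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)
```

Unlike ReLU, negative inputs produce small negative outputs that saturate at `-alpha`, which is what pulls the mean activation toward zero.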

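After the above two changes, when the feature $x_{N-1}$ is input to the residual block in this paper, the block output can be written in abbreviated form as:

$$x_N = \mathrm{ELU}\big(\mathrm{CBAM}(\mathcal{R}(x_{N-1}, W_N)) + \mathrm{CA}(x_{N-1})\big)$$

where $x_N$ is the output feature of the residual block in the $N$th residual layer, $\mathcal{R}$ denotes the weight layers of the residual block, and $W_N$ is the weight layer parameter of the residual block.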
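The data flow of the modified residual block (weight layers, CBAM on the main branch, channel attention on the shortcut, addition, activation) can be sketched with the components passed in as callables. This is a schematic under the description given in this section, not the authors' implementation; all argument names are hypothetical.

```python
def resat_block(x, weight_layers, cbam, channel_attention, activation):
    """x: feature map as a list of channels; other arguments are callables.

    weight_layers, cbam and channel_attention map a feature map to a feature
    map of the same shape; activation maps a single value to a single value.
    """
    main = cbam(weight_layers(x))        # enhance features from the weight layers
    shortcut = channel_attention(x)      # filter the shortcut branch
    return [[activation(m + s) for m, s in zip(m_ch, s_ch)]
            for m_ch, s_ch in zip(main, shortcut)]
```

Replacing `channel_attention` with the identity and `cbam` with the identity recovers the data flow of an ordinary residual block.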

2.2. Denoising Performance Evaluation Index

SNR is a widely used metric for assessing the effectiveness of seismic data processing. In the present study, we use SNR as the evaluation criterion for the noise suppression capability of ResATnet. The SNR is computed as:

$$\mathrm{SNR} = 10 \log_{10} \frac{\sum_{t} x(t)^2}{\sum_{t} \big(x(t) - \hat{x}(t)\big)^2}$$

where $x$ is the clean signal and $\hat{x}$ is the signal being evaluated (the noisy input or the denoised output).
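A minimal sketch of an SNR computation in decibels, assuming the common definition as the ratio of clean-signal energy to the energy of the difference between the clean signal and the evaluated signal. The function name and the handling of a perfect match are illustrative choices.

```python
import math

def snr_db(clean, estimate):
    """SNR in dB of an estimate (noisy or denoised trace) against the clean trace."""
    signal_energy = sum(c * c for c in clean)
    error_energy = sum((c - e) ** 2 for c, e in zip(clean, estimate))
    if error_energy == 0.0:
        return math.inf  # identical traces: no residual noise
    return 10.0 * math.log10(signal_energy / error_energy)
```

Applying the same function to the noisy input and to the network output gives the input and output SNRs that are compared in the experiments.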

2.3. Training Model and Parameter Setting

Given that the neural network is primarily designed to process one-dimensional (1D) seismic data, the convolutional layer, pooling layer, and batch normalization are all customized to accommodate the specific properties of one-dimensional data. Furthermore, in order to optimize the architecture for one-dimensional seismic data, we have omitted the AvgPool layer of the original Resnet18 network. By streamlining the architecture to align with the unique demands of one-dimensional seismic data, we are able to improve the model’s overall performance and enhance its ability to accurately analyze and interpret seismic data.

In general, using a relatively large batch size may cause a decrease in the stability of the model’s loss, while a smaller batch size may improve the model’s generalization performance. Therefore, both the experimental hardware and the characteristics of the batch size should be taken into consideration when choosing it. For the neural network parameters, we set the batch size to 100. In addition, the initial learning rate interval is . Moreover, to facilitate effective training and prevent overfitting, the learning rate is reduced by 10% every 10 cycles of the network, which enables the model to converge gradually towards the optimal solution. The experiments in this paper were run on a machine equipped with an Intel(R) Xeon(R) CPU E5-2678 v3 @ 2.50 GHz and an NVIDIA GeForce RTX 2080 Ti.
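The learning rate schedule described above can be sketched as a step decay. Reading "reduced by 10% every 10 cycles" as multiplying the rate by 0.9 every 10 training cycles is an interpretation; any initial rate passed to the function (such as the 0.01 in the note below) is a hypothetical value, since the initial rate is not given in the text here.

```python
def step_decay_lr(initial_lr, epoch, drop=0.10, every=10):
    """Learning rate after `epoch` cycles with a `drop` fraction removed every `every` cycles."""
    return initial_lr * (1.0 - drop) ** (epoch // every)
```

For a hypothetical initial rate of 0.01, cycles 0 to 9 use 0.01, cycles 10 to 19 use 0.009, and cycles 20 to 29 use 0.0081.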

3. Synthetic Seismic Data Processing Results

To ascertain the efficacy and robustness of the proposed methodology, we have conducted an initial validation study using synthetic data. The synthetic seismic data employed in this study comprise 10,000 seismic traces with a sampling interval of 0.001 s. To facilitate the training and evaluation of the proposed methodology, the dataset was partitioned into a training set, a validation set, and a test set in an 8:1:1 ratio. Moreover, to augment the training data, a mirroring technique was utilized, thereby generating additional training samples and promoting greater model generalization. To further accelerate network convergence, the data were normalized separately before being fed into the network.
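The data preparation described above (8:1:1 split, mirroring augmentation of the training set, and normalization) can be sketched as follows. Interpreting mirroring as time reversal of each trace and normalization as division by the peak absolute amplitude of each trace are assumptions; the shuffling seed and function names are illustrative.

```python
import random

def split_811(traces, seed=0):
    """Shuffle and split traces into training, validation and test sets (8:1:1)."""
    idx = list(range(len(traces)))
    random.Random(seed).shuffle(idx)
    n_train = len(traces) * 8 // 10
    n_val = len(traces) // 10
    train = [traces[i] for i in idx[:n_train]]
    val = [traces[i] for i in idx[n_train:n_train + n_val]]
    test = [traces[i] for i in idx[n_train + n_val:]]
    return train, val, test

def mirror(trace):
    """Mirrored copy of a trace (assumed here to mean time reversal)."""
    return trace[::-1]

def augment_with_mirror(train):
    """Training set extended with one mirrored copy of every trace."""
    return train + [mirror(t) for t in train]

def normalize(trace):
    """Scale a trace by its peak absolute amplitude (assumed normalization)."""
    peak = max(abs(v) for v in trace)
    return [v / peak for v in trace] if peak > 0 else list(trace)
```

With 10,000 traces this yields 8000 training traces (16,000 after mirroring), 1000 validation traces, and 1000 test traces.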

To verify the denoising performance of the networks under different noise levels, we added noise of different intensities to the data. The resulting SNRs of the noisy data were −11.363 dB, −7.761 dB, −3.829 dB, 4.695 dB, 8.221 dB, and 18.138 dB.
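One way to produce noisy data at a prescribed SNR is to rescale a noise trace before adding it to the clean trace. The sketch below shows this under the energy-ratio definition of SNR; it illustrates the idea and is not necessarily how the datasets in this paper were generated.

```python
import math

def add_noise_at_snr(clean, noise, target_snr_db):
    """Scale `noise` so that clean + scaled noise has the requested SNR in dB."""
    signal_energy = sum(c * c for c in clean)
    noise_energy = sum(n * n for n in noise)
    # Required noise energy is signal_energy / 10^(SNR/10); solve for the gain.
    gain = math.sqrt(signal_energy / (noise_energy * 10.0 ** (target_snr_db / 10.0)))
    return [c + gain * n for c, n in zip(clean, noise)]
```

Calling the function with the same clean trace and different targets, for example −7.761 and 18.138, gives inputs at the corresponding noise levels.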

First, a series of ablation experiments was conducted on the −7.761 dB dataset to justify the chosen network architecture (Table 1). By selectively removing specific components, we isolate the elements that are critical to the network’s overall performance. As can be seen from Table 1, applying ELU, CBAM, or CA alone, or any two of them in combination, to the Resnet structure does not fully improve the denoising performance. Only when ELU, CBAM, and CA are used together is the performance further improved.

Table 1.

Ablation experiment of ResATnet network structure.

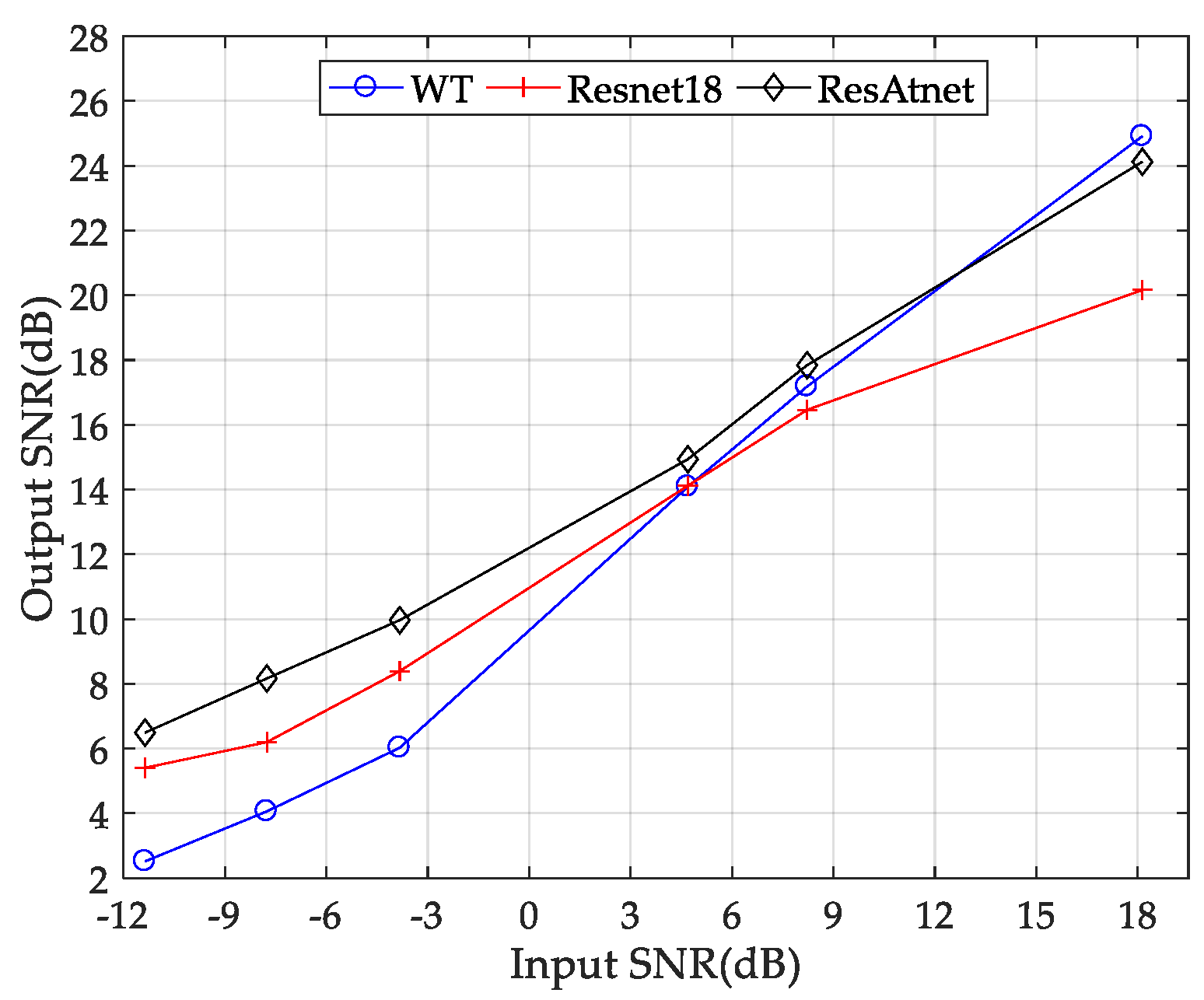

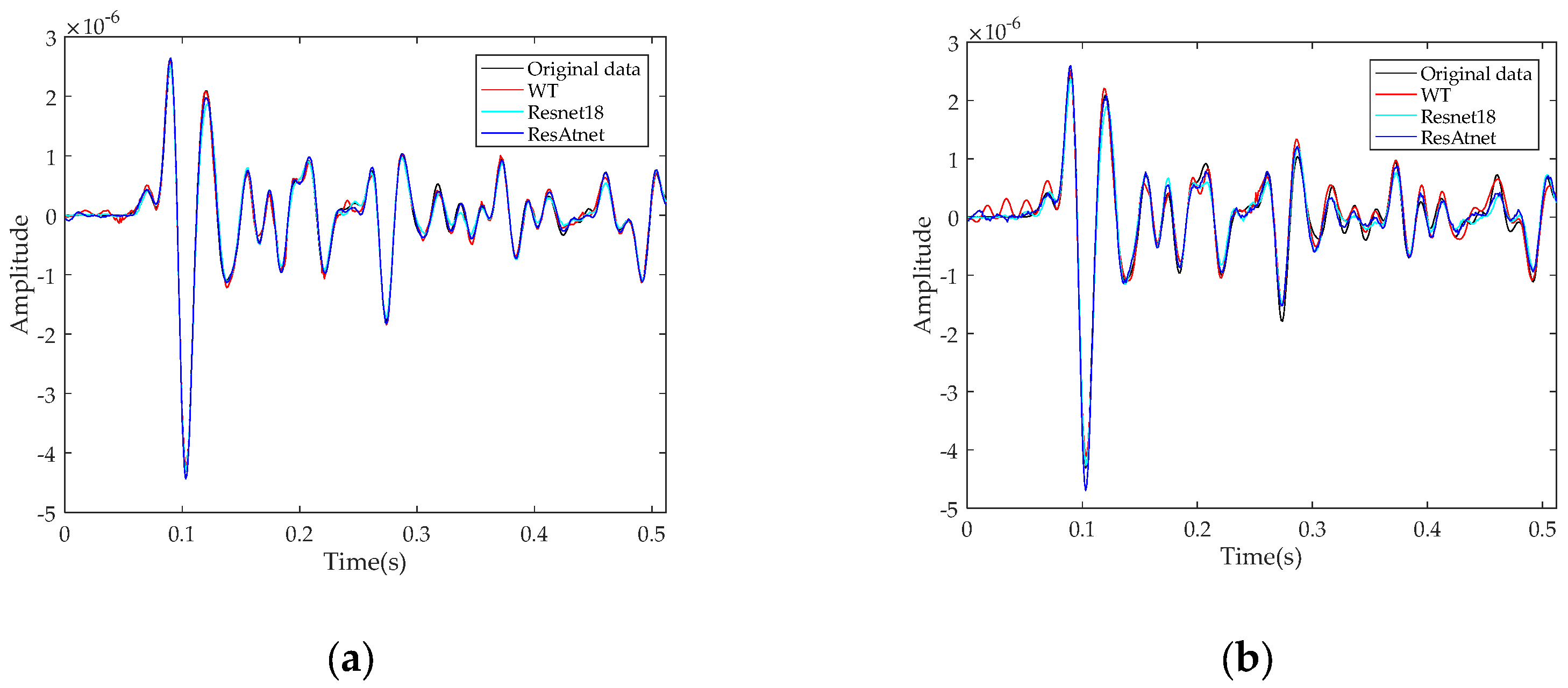

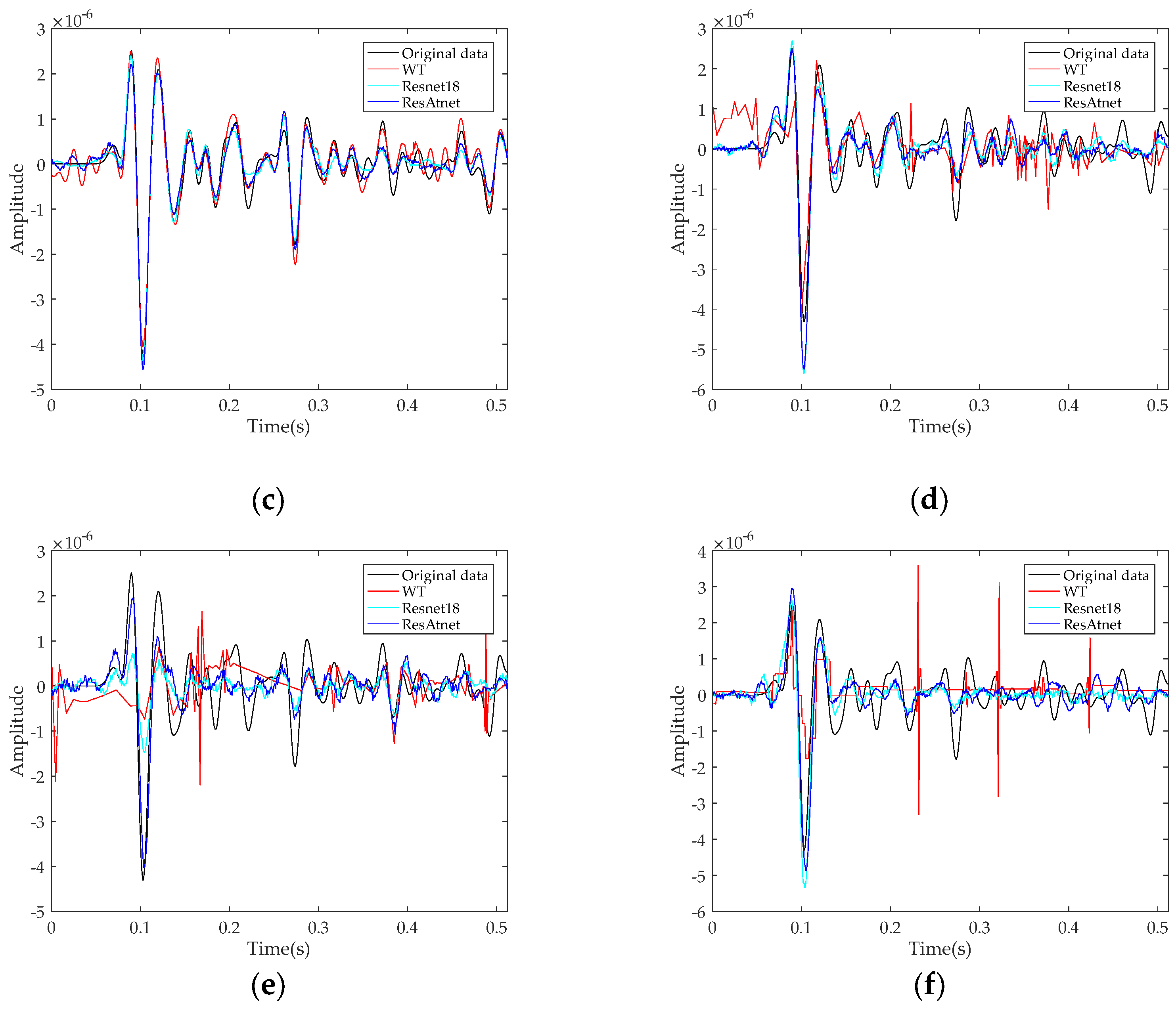

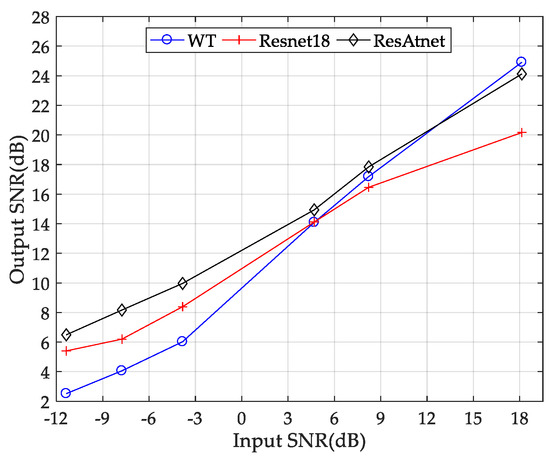

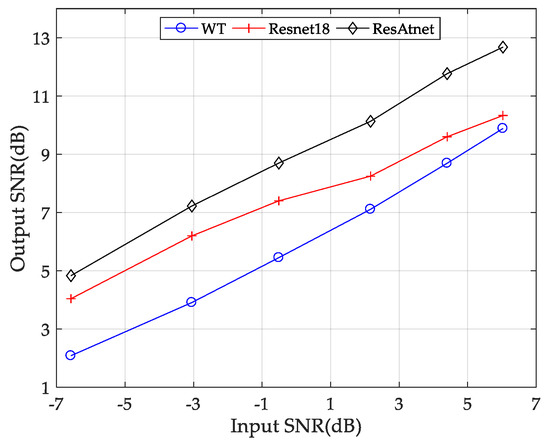

Then, to demonstrate the effectiveness of the proposed denoising method, we compared it with two other denoising methods: Resnet18 and wavelet threshold (WT). Table 2 and Figure 4 present the results of wavelet threshold, Resnet18, and ResATnet at various noise levels. The wavelet threshold exhibits only a marginal advantage over ResATnet at lower noise levels. As the noise level increases, ResATnet surpasses the wavelet threshold in denoising ability. At higher noise levels, Resnet18 gradually shows its effectiveness, although its performance is still inferior to that of ResATnet.

Table 2.

WT, Resnet18, and ResATnet denoising effects under different noise levels.

Figure 4.

Trend of the denoising results of WT, Resnet18, and ResATnet with varying SNRs of noisy seismic data.

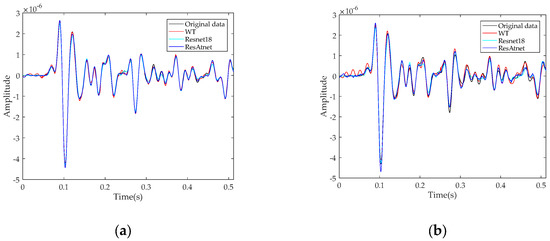

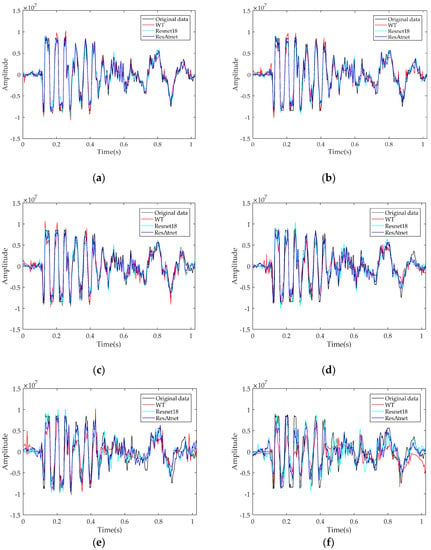

Comparing single-channel data extracted from the output signals at different noise levels gives a clear picture of the effectiveness of the three techniques (Figure 5). Note that “Original data” in the figures denotes clean data without noise.

Figure 5.

(a–f) illustrate the single-channel comparison of data denoised by the three methods at different noise levels.

At an SNR of −3.829 dB, wavelet threshold denoising essentially fails to capture the features of the original waveform. This failure can be attributed to the inability of the technique to separate the signal from the noise and to its low robustness at high noise levels. When the SNR is 18.138 dB, however, the wavelet threshold method achieves denoising results comparable to those of the neural network methods. Conversely, although the denoising performance of the neural network approach also declines as the noise level rises, it can still approximate the true signal with a fair degree of fidelity.

4. Real Seismic Data Processing Results

4.1. Ground-Based Seismic Data

The ground-based seismic data comprise 9356 seismic traces with a sampling interval of 0.002 s. As with the synthetic data, the dataset is partitioned into training, validation, and test sets in an 8:1:1 ratio; the training set is augmented, and all data are normalized before entering the network.

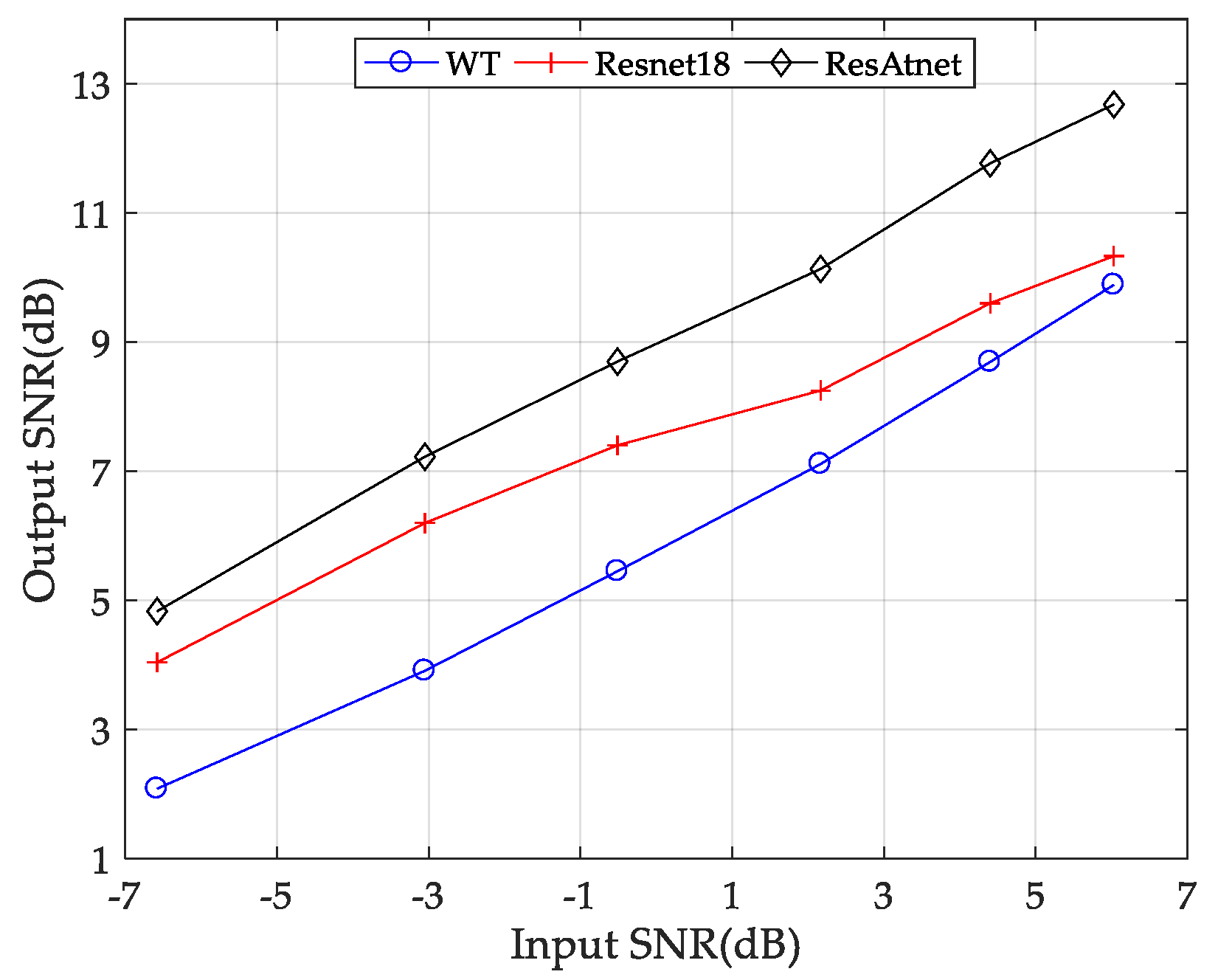

Compared with the synthetic seismic data, the ground-based seismic data are more complex, with larger waveform fluctuations. As shown in Table 3 and Figure 6, the neural network methods outperform wavelet thresholding in reducing noise in these data. ResATnet performs best, followed closely by Resnet18. Importantly, ResATnet improves the quality of data with low signal-to-noise ratios significantly more than Resnet18 does. However, although ResATnet denoises better than Resnet18, its performance degrades faster as the noise level increases, so the gap between the two methods gradually narrows. At an SNR of −6.581 dB, the difference in their results is only 0.791 dB. The proposed method therefore has limitations: when the noise level is high, the neural network’s performance remains compromised, and it cannot extract the intended signal accurately.

Table 3.

WT, Resnet18, and ResATnet denoising effects at different noise levels for ground-based seismic data.

Figure 6.

Trend of the denoising results of WT, Resnet18, and ResATnet with varying SNRs of ground-based noisy seismic data.

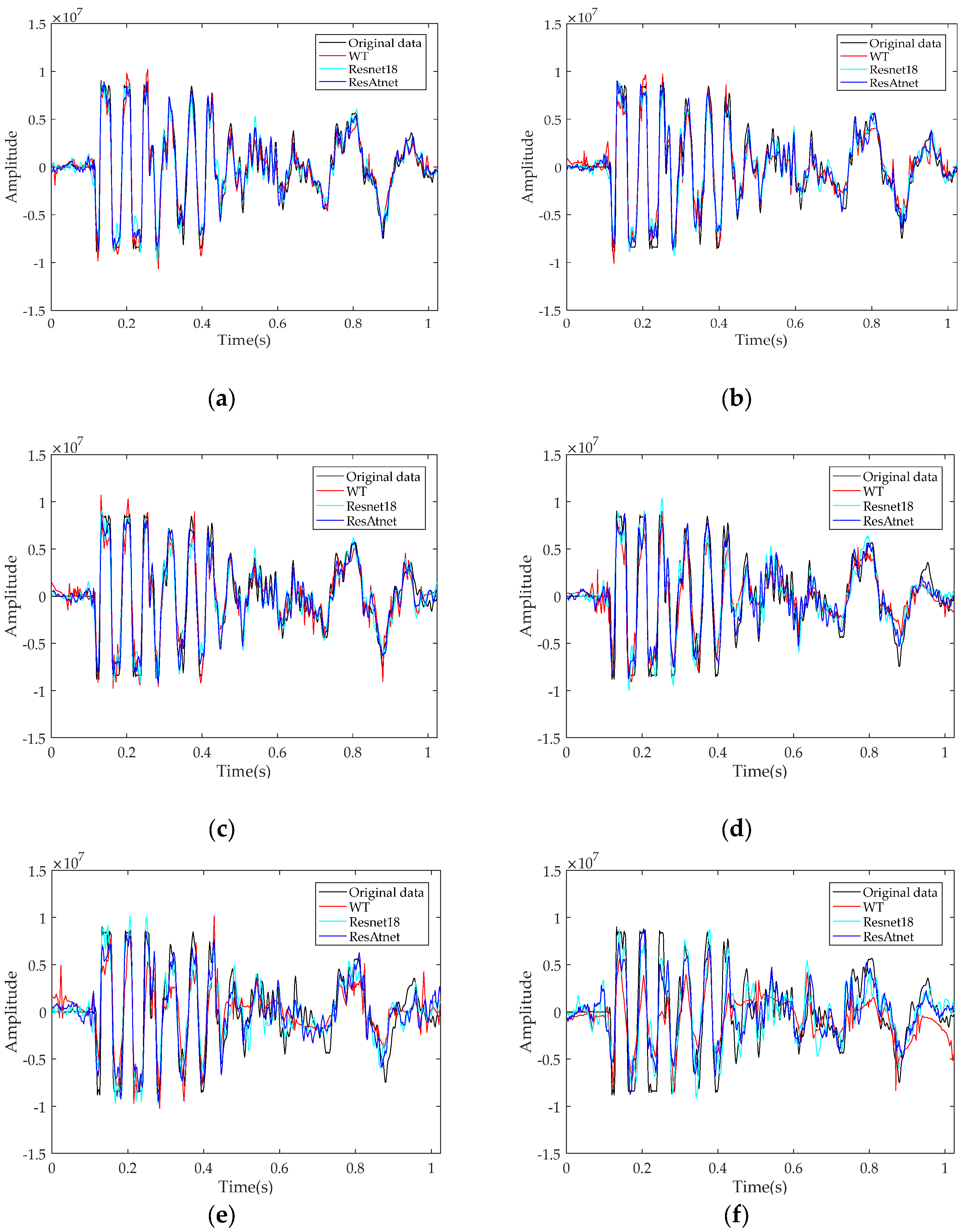

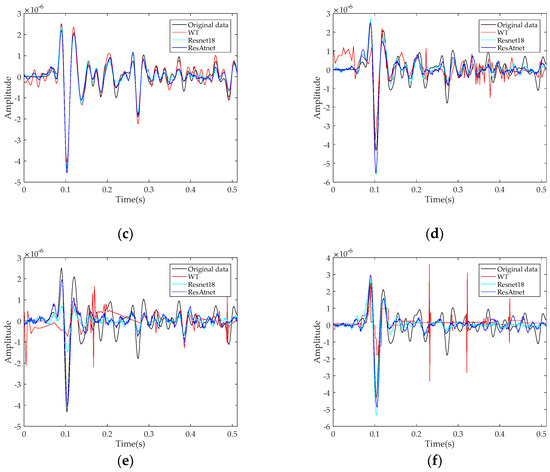

It can be observed in Figure 7 that, as the level of noise increases, the effectiveness of the wavelet threshold approach in fitting the pristine signal progressively diminishes. Additionally, the Resnet18 method gradually loses its efficacy in isolating the noise components from the signal of interest. The diminishing capacity of this approach in attenuating the unwanted perturbations is a clear indication of its limited ability to cope with high levels of noise. In contrast to the Resnet18 approach, the ResATnet method exhibits a greater degree of resilience against the deleterious effects of noise interference. This observation highlights the capacity of the ResATnet method to mitigate the impact of noise perturbations on the denoising performance, albeit not entirely impervious to the effects of increased noise levels. As the noise level intensifies, the ResATnet method’s denoising capability inevitably experiences a decrement, underscoring the inherent trade-off between noise reduction and signal preservation.

Figure 7.

(a–f) illustrate the single-channel comparison of ground-based data denoised by the three methods at different noise levels.

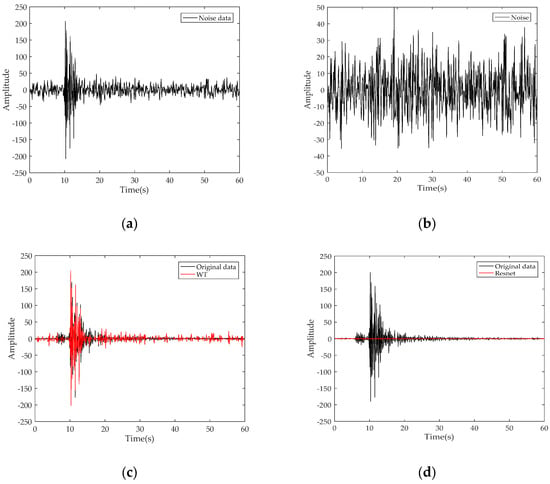

4.2. STanford EArthquake Dataset

In order to provide further substantiation of the effectiveness of the proposed method, the experimental data for the study is derived from the widely recognized and authoritative Stanford EArthquake Dataset [30]. This dataset serves as a robust and reliable benchmark for evaluating the performance of denoising techniques in the seismic data analysis domain.

The STanford EArthquake Dataset contains many types of seismic data. We select part of the seismic data with receiver type “EH”, where the band code E denotes an extremely short period and the instrument code H denotes a high-gain seismometer. The original seismic data comprise east, north, and vertical components. Because of the limitations of our experimental hardware, the volume of data had to be restricted to a manageable range. We therefore used only the vertical component and resampled it, reducing each trace from 6000 sampling points to 600. This reduced the computational load and resource requirements while preserving the key features of the original data. We use the random noise data from the STanford EArthquake Dataset to generate a noisy dataset. Specifically, we extract a segment of the noise, scale its amplitude within a fixed range, and superimpose it on the resampled signal. The dataset contains 30,000 seismic traces, partitioned into training, validation, and test sets in an 8:1:1 ratio. Data normalization was also applied. Because the dataset already covers a wide variety of signal types, we did not augment the training set. The SNR of the test data is 5.179 dB.
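The construction of the noisy dataset described above can be sketched as follows. Taking every tenth sample to go from 6000 to 600 points, picking the noise segment at a random offset, and the amplitude range passed as `scale_range` are all assumptions made for illustration; the text does not specify the resampling method or the range. Plain decimation without a low-pass filter can alias high frequencies, so a filtered resampler may be preferable in practice.

```python
import random

def decimate(trace, factor=10):
    """Keep every `factor`-th sample (assumed resampling scheme)."""
    return trace[::factor]

def make_noisy_trace(signal, noise_record, scale_range, rng):
    """Superimpose a randomly placed, amplitude-scaled noise segment on a signal."""
    start = rng.randrange(len(noise_record) - len(signal) + 1)
    segment = noise_record[start:start + len(signal)]
    scale = rng.uniform(*scale_range)   # amplitude scale drawn from a fixed range
    return [s + scale * n for s, n in zip(signal, segment)]
```

A typical call would decimate a 6000-sample vertical-component trace and then pass the 600-sample result, a noise record, and a hypothetical range such as `(0.5, 1.5)` to `make_noisy_trace`.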

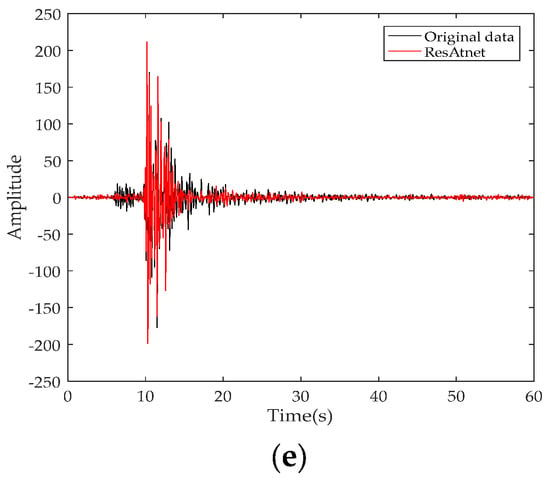

It can be seen that wavelet threshold denoising still works, with a denoising result of 7.682 dB (Figure 8). Resnet18 is unable to effectively remove the noise and can be said to have failed to learn the characteristics of a clean signal. Although it can be seen from the figure that ResATnet is weaker in fitting the weaker fluctuating seismic signals (i.e., before the 10th second and after the 15th second), the ResATnet denoising result is still excellent, reaching 9.277 dB, which is 1.595 dB higher than the wavelet threshold, and it fits the weak fluctuating part of the signal relatively well.

Figure 8.

(a) is noisy data, (b) is the noise in (a), which comes from the STanford EArthquake Dataset, and (c–e) illustrate the single-channel comparison of the three methods.

5. Conclusions and Discussion

In this paper, we present a novel approach to enhancing the performance of residual neural networks through the integration of an attention mechanism. By incorporating an attention mechanism into the residual neural network, we are able to selectively focus on the informative features of the data and effectively denoise both synthetic and actual datasets. To evaluate the effectiveness of our proposed method, we conducted extensive experiments on various datasets. From the experiments, we can find that, for synthetic seismic data with little waveform fluctuation, in contrast to traditional denoising methods, which are often limited in their ability to effectively filter out high levels of noise, our neural network denoising method excels in these challenging conditions. By leveraging the power of deep learning techniques, our method is able to capture the complex relationships between noisy and clean seismic data and effectively remove unwanted noise, even in the most difficult scenarios. As the seismic waveforms become increasingly complex, our proposed ResATnet method consistently outperformed traditional denoising methods, but Resnet can hardly learn effective information on the complex STanford EArthquake Dataset. This demonstrates the stability of the ResATnet method of denoising. Although wavelet thresholding methods also have stability, the conventional wavelet thresholding method requires a significant amount of time and effort to achieve optimal results. This is due in part to the need to manually select a suitable wavelet basis and perform multiple layers of decomposition. In our experiments on real data denoising, wavelet thresholds would need to be decomposed in seven or more layers under a suitable wavelet basis to obtain better denoising results, and this is a task that can be both tedious and time-consuming.

The complexity of the waveform structure, which in turn reflects the complexity of the subsurface structure, can affect the denoising performance of a neural network. In the real-data experiments, Resnet effectively denoised the ground-based seismic data but was unable to denoise the STanford EArthquake Dataset effectively. Similarly, the denoising performance of ResATnet degraded on the STanford EArthquake Dataset under similar input SNR conditions. Furthermore, ResATnet’s ability to distinguish signal from noise is limited when their amplitudes are similar, as shown in Figure 8e before the 10th second and after the 15th second. These factors, together with noise, cause ResATnet’s denoising performance to degrade faster than that of Resnet18 as the noise level increases. The original goal of this study was to identify a denoising method that performs well across a wide range of seismic data. ResATnet meets this expectation, but two limitations should be noted. The first is training efficiency: each cycle of ResATnet takes 5.043 s longer on average than that of Resnet. The increased number of network parameters reduces efficiency, a drawback that needs to be addressed in future versions. The second is that the ability of neural networks to extract effective features from the data is significantly hampered by high levels of noise. Devising effective methods to improve feature extraction from noisy data is a critical direction for future research.

Author Contributions

T.L.: conceptualization, methodology, software; L.H.: review and guidance, funding acquisition, supervision, investigation, project administration; Z.Z.: review and guidance, supervision, project administration; J.Z.: data curation, validation, visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant nos. 42074154 and 42130805).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The STanford EArthquake Dataset (STEAD) is available at https://github.com/smousavi05/STEAD (accessed on 10 April 2023).

Acknowledgments

The authors are very grateful for all constructive comments that helped us improve the original version of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mafakheri, J.; Kahoo, A.R.; Anvari, R.; Mohammadi, M.; Radad, M.; Monfared, M.S. Expand dimensional of seismic data and random noise attenuation using low-rank estimation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 2773–2781.

- Anvari, R.; Kahoo, A.R.; Monfared, M.S.; Mohammadi, M.; Omer, R.M.D.; Mohammed, A.H. Random Noise Attenuation in Seismic Data Using Hankel Sparse Low-Rank Approximation. Comput. Geosci. 2021, 153, 104802.

- Guan, X.Z.; Wang, J.X.; Wang, X.J.; Xue, D.; Sun, W.B. Research on intelligent denoising technology of marine seismic data based on Resnet. In Proceedings of the Chinese Petroleum Society 2021 Geophysical Exploration Technology Seminar, Chengdu, China, 27–29 September 2021; pp. 1004–1007.

- Latif, A.; Mousa, W.A. An Efficient Undersampled High-Resolution Radon Transform for Exploration Seismic Data Processing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1010–1024.

- Shan, H.; Ma, J.W.; Yang, H.Z. Comparisons of Wavelets, Contourlets and Curvelets in Seismic Denoising. J. Appl. Geophys. 2009, 69, 103–115.

- Kong, D.H.; Peng, Z.M. Seismic random noise attenuation using shearlet and total generalized variation. J. Geophys. Eng. 2015, 12, 1024–1035.

- Liu, Y.; Fomel, S.; Liu, C. Signal and noise separation in prestack seismic data using velocity-dependent seislet transform. Geophysics 2015, 80, WD117–WD128.

- Chen, Y.K.; Ma, J.W.; Fomel, S. Double-Sparsity Dictionary for Seismic Noise Attenuation. Geophysics 2016, 81, V103–V116.

- Chen, Y.K.; Fomel, S. EMD-seislet transform. Geophysics 2017, 83, A27–A32.

- Shen, H.Y.; Li, Q.C. New idea for seismic wave filed separation and denoising by singular value decomposition (SVD). Prog. Geophys. 2010, 25, 225–230.

- Lari, H.H.; Naghizadeh, M.; Sacchi, M.D.; Gholami, A. Adaptive singular spectrum analysis for seismic denoising and interpolation. Geophysics 2019, 84, V133–V142.

- Kaur, H.; Pham, N.; Fomel, S. Seismic data interpolation using deep learning with generative adversarial networks. Geophys. Prospect. 2021, 69, 307–326.

- Tsai, K.C.; Hu, W.Y.; Wu, X.Q.; Chen, J.F.; Han, Z. Automatic First Arrival Picking via Deep Learning With Human Interactive Learning. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1380–1391.

- Wei, C.; Guo, X.B.; Tian, F.; Shi, Y.; Wang, W.H.; Sun, H.R.; Ke, X. Seismic Velocity Inversion Based on CNN-LSTM Fusion Deep Neural Network. Appl. Geophys. 2021, 18, 499–514.

- Jing, J.K.; Yan, Z.; Zhang, Z.; Gu, H.; Han, B. Fault Detection Using a Convolutional Neural Network Trained with Point-Spread Function-Convolution-Based Samples. Geophysics 2023, 88, IM1–IM14.

- Yao, H.Y.; Ma, H.T.; Li, Y.; Feng, Q.K. Dnresnext network for desert seismic data denoising. IEEE Geosci. Remote Sens. Lett. 2020, 19, 7501105.

- Wang, X.T.; Yu, K.; Wu, S.X.; Gu, J.J.; Liu, Y.H.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 63–79.

- Zhong, T.; Cheng, M.; Dong, X.; Li, Y.; Wu, N. Seismic Random Noise Suppression by Using Deep Residual U-Net. J. Pet. Sci. Eng. 2022, 209, 109901.

- Zhang, J.F.; Xing, L.I.; Tan, Z.; Wang, H.S.; Wang, K.S. Multi-Head Attention Fusion Networks for Multi-Modal Speech Emotion Recognition. Comput. Ind. Eng. 2022, 168, 108078.

- Liu, S.Q.; Lei, Y.; Zhang, L.Y.; Li, B.; Hu, W.M.; Zhang, Y.D. MRDDANet: A Multiscale Residual Dense Dual Attention Network for SAR Image Denoising. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5214213.

- Wang, Y.; Song, X.; Chen, K. Channel and Space Attention Neural Network for Image Denoising. IEEE Signal Process. Lett. 2021, 28, 424–428.

- Dong, X.T.; Lin, J.; Lu, S.P.; Wang, H.Z.; Li, Y. Multiscale Spatial Attention Network for Seismic Data Denoising. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5915817.

- Ma, R.J.; Zhang, B.; Zhou, Y.C.; Li, Z.M.; Lei, F.Y. PID Controller-Guided Attention Neural Network Learning for Fast and Effective Real Photographs Denoising. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 3010–3023.

- Li, W.; Chakraborty, M.; Fenner, D.; Faber, J.; Zhou, K.; Rümpker, G.; Stöcker, H.; Srivastava, N. EPick: Attention-based multi-scale UNet for earthquake detection and seismic phase picking. Front. Earth Sci. 2022, 10, 953007.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. In Annual Conference on Advances in Neural Information Processing Systems 28, Proceedings of the 29th Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; pp. 2017–2025.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Yang, L.; Chen, W.; Liu, W.; Zha, B.; Zhu, L. Random noise attenuation based on residual convolutional neural network in seismic datasets. IEEE Access 2020, 8, 30271–30286.

- Mousavi, S.M.; Sheng, Y.X.; Zhu, W.Q.; Beroza, G.C. Stanford EArthquake Dataset (STEAD): A Global dataset of Seismic Signals for AI. IEEE Access 2019, 7, 179464–179476.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).