Abstract

Although driving simulators are commonly assumed to be very useful technological resources for instructing both novice and experienced drivers under risk control settings, the evidence addressing their actual effectiveness seems substantially limited. Therefore, this study aimed to analyze the existing original literature on driving simulators as a tool for driver training/instruction, considering study features, their quality, and the established degree of effectiveness of simulators for these purposes. Methods: This study covered a final number of 15 empirical studies, filtered and analyzed in the light of the PRISMA methodology for systematic reviews of the literature. Results: Among a considerably reduced set of original research studies assessing the effectiveness of driving simulators for training purposes, most sources assessing the issue provided reasonably good insights into their value for improving human-based road safety under risk control settings. On the other hand, there are common limitations which stand out, such as the use of very limited research samples, infrequent follow-up of the training outcomes, and reduced information about the limitations targeted during the simulator-based training processes. Conclusions: Despite the key shortcomings highlighted here, studies have commonly provided empirical support on the training value of simulators, as well as endorsed the need for further evaluations of their effectiveness. The data provided by the studies included in this systematic review and those to be carried out in the coming years might be of interest for the development and performance improvement of specific training programs using simulators for driver instruction.

1. Introduction

1.1. Simulators in Context

Since their appearance in the 1940s, simulators have been gaining ground and consolidating themselves as “efficient” tools for driver training under controlled risk conditions. In the beginning, given the large outlay involved, only government administrations were able to commission the development of simulators, and the United States Army was one of the first sponsors.

In the 1960s and 1970s, with the advent of the first digital computers and computer graphics, the U.S. Army commissioned the development of the first “full mission” simulator in history []. It was an aircraft flight simulator for training pilots that reproduced both the cockpit and a virtual scenario in which the aircraft flew. It was qualified as “full mission” because it allowed pilots to be trained from takeoff to landing.

With the passing of the years and the great advances in the technological field (microprocessors, screens, projection, controllers, etc.), costs fell and, in addition to government administrations, large companies began to develop their own simulators. This encouraged the appearance of simulators for all types of vehicles: flight [], ship [], submarine [,], car [,], truck [,], etc. As an example of this expansion, in the 1970s, 28 driving simulators had already been developed worldwide [].

Initially, simulators were used only for training in areas or situations that were either too dangerous for users to practice in real life or were much more expensive to reproduce in real life than the investment needed to develop a simulator. Today, this concept has expanded, with entertainment being one of the main niches in the field of simulation. On the other hand, there are also simulators intended exclusively for scientific use.

1.2. Simulator Classification

Currently, there are so many companies dedicated to the design, development, and manufacture of driving simulators that there are very different criteria used to classify them []. Some of these criteria are:

According to their purpose [], simulators can be classified into three groups: (i) training, when educational objectives are pursued or related to the prevention of risks and traffic accidents. These, in turn, can be divided into professional and amateur simulators. This is the group that we focus on in this study. Among other uses (outside the scope of this study), simulators are usually—albeit not only—applied for (ii) research (when the purpose of the simulator is to investigate a certain area of knowledge), and even (iii) entertainment (when the purpose of the simulator is to amuse and entertain).

Based on their physical characteristics, simulators are usually classified on the basis of their visualization system (e.g., field of view, projection system) []; pixel size resolution (i.e., better or worse feeling of user involvement and immersive experience) []; cockpit (having/not having a sensorized driving cab, which allows for driving as if in a real vehicle) []; sound system (e.g., two-way sound (stereo), surround systems, or “8.1” systems) []; force-feedback (small motors providing users with feedback) []; and motion platform (allowing the simulator to reproduce the accelerations that would be felt in a real vehicle) [].

In addition, according to their software-related characteristics, the most common simulator uses are procedural simulation (used for formative/training purposes) []; full-mission (used for professional driving training) []; games (generally intended for entertainment) []; and configurable settings (situations can be live-edited) [].

1.3. Simulators for Driving Training

This study covered the specific case of simulators intended to train drivers, which has an extensive background principally grounded on the concept of edutainment. It can be understood as “the combination of education and entertainment in a learning process” [,]. Buckingham and Scanlon [] previously stated that “edutainment is based on attracting and maintaining the attention of learners by using screens or animations to make learning fun”; in other words, it is based on the use of simulators. In fact, it has been shown [,,] that, thanks to the use of these new technologies, which often include various stimuli such as images, sounds, and videos, students are more likely to pay attention to the content and end up transferring it from short-term to long-term memory, thus becoming more entrenched in their knowledge. This is especially relevant in in-vehicle driving simulators, since, on the one hand, general skills are taught, and on the other hand, participants are trained in situations that rarely occur in real life, but for which one must be prepared.

Driving simulators are so widely used today that in some countries, individuals are even required to pass certain tests on simulators in order to obtain a driver’s license [,]. These countries include the United Kingdom, the Netherlands, Singapore, and Finland [,,]. One of the great advantages of using simulators is that they are able to measure, in an analytical way, everything that is happening with the simulated vehicle and whether the user is reacting correctly or not to the situations that arise. Another great advantage of simulators is that they allow the same situation to be recreated reliably over and over again (replicability), which makes it possible, on the one hand, to train complex situations without putting any user at risk, and, on the other hand, to make requirements for passing a test more objective, since all users can face exactly the same situation. In other countries, such as Saudi Arabia, where women were recently allowed to apply for driving licenses, a large number of requests for new driver training were received in a short period of time, and had it not been for the use of simulators, it probably would not have been possible to meet the high demand [].

1.4. Theories of Performance and Implications for Simulation-Based Training (SBT) Measurement

Salas et al. (2009) [] provided a review of the state of the science on simulation-based training (SBT) performance measurement systems. They state that training using traditional methods, such as lectures or conferences, is insufficient to meet the demands of many modern work environments or organizations. That is why organizations are resorting to the use of simulators to transfer this knowledge, which is usually very practical and oriented to situations that participants will encounter in the real world.

The effectiveness of all the aforementioned types of simulator requires a set of generic/standardized actions, such as a guided training plan and a continuous measurement of staff performance, so that their aptitude can be evaluated and the training can be adjusted on the basis of these measurements. If a performance measurement is not correctly adjusted for individual or teamwork use, it is likely to lead to a waste of time and money for both the trainee and the company providing the training.

There is currently a wide variety of theories on how performance should be measured and its implications in SBT-based systems, for both individual and collective learning (teamwork).

For the measurement of individual performance, the work of Campbell et al. [] stands out; they state that performance depends on three variables: declarative knowledge, i.e., the facts and knowledge necessary to complete a task (understanding the task requirements); procedural knowledge and skills, i.e., the combination of knowing what to do and how to do it correctly; and, finally, motivation, i.e., the combination of the expenditure, level, and persistence of effort required for learning.

Regarding the measurement of team performance, there is a wide discussion [,] on the definitions of performance and its effectiveness. This has resulted in the formation of different frameworks depending on the specific learning context. Training a flight crew is not the same as training a marketing or human resources team. Among the different existing theoretical frameworks for measuring team performance in SBT are input–process–output (IPO) [,,], shared mental models [,,], adaptability [,], the “big five” of teamwork [,,,,], and macrocognition/team cognition [].

These theories require certain methods for their application in measuring SBT performance and feedback. Salas classifies these methods according to whether they are qualitative or quantitative. Those of the former type are used to define the simulation system to be developed and the measurements to be made. These include protocol analysis [], the critical incident technique [], and conceptual maps []. The latter type consists of those which, based on the former, quantify the developed processes and provide feedback to the system to correct what is necessary. These include behaviorally anchored rating scales, or BARS []; behavioral observation scales, or BOS []; communication analyses []; event-based measurement, or EBAT []; structural behavioral assessment []; self-report measures; and, finally, automated performance recording and measurement [].

Simulation is a field that is increasingly incorporated into our daily lives. In recent years, research has emerged with new methods to measure simulation-based learning. Papakostas et al. [] proposed a novel and well-established model to measure the user experience and usability of a simulation-based training application. This method combines the perceived usefulness, the perceived ease of use, the behavioral intention to use the system, and two more external variables to determine the user acceptance of the simulation system. Some years before, the same authors proposed a similar method based on the evaluation of external variables considered to be “strong predictors” [].

As can be seen, the variability of measurement types for performance in simulation-based training is enormous. Therefore, this methodological review does not focus on any of them, but rather presents the different studies published to date, describing the results obtained and the methods used in each of them. INTRAS (Institute of Traffic and Road Safety) and IRTIC (Institute of Robotics and Information and Communication Technologies) of the University of Valencia have been working together for many years in the area of driving simulation. They have developed dozens of simulators and campaigns aimed at both professional and novice drivers, always attempting to objectify the results each simulator transmits to its users [,]. Similarly, the scientific community has spent many years studying the impact of each type of simulator on each type of training, and this has been the source of a large number of publications, some of them contradicting the results of others and questioning the effectiveness of certain simulators [].

Therefore, it is considered necessary to systematically review what has been published to date regarding the effectiveness of simulation systems for driver training. The following sections cover this need.

1.5. Study Aim

Considering the aforementioned issues, the core aim of this systematic review was to analyze the existing original literature on driving simulators as tools for driver training/instruction. We considered the features of the studies, their quality, and the established degree of effectiveness of the simulators for these purposes.

2. Materials and Methods

In order to follow a standardized structure and procedural criteria, systematic reviews commonly use a specific and transparent protocol to select articles that explain a specific topic or research question through comprehensive mapping of the scientific evidence []. The present systematic review was conducted following the steps and quality standards established by PRISMA 2020 [] and the recommendations of the Cochrane Review Group []. Four authors of the present manuscript conducted the search, selection, and registration of the data independently to guarantee the validity of the articles included in the review. Where discrepancies occurred, they were resolved by consensus.

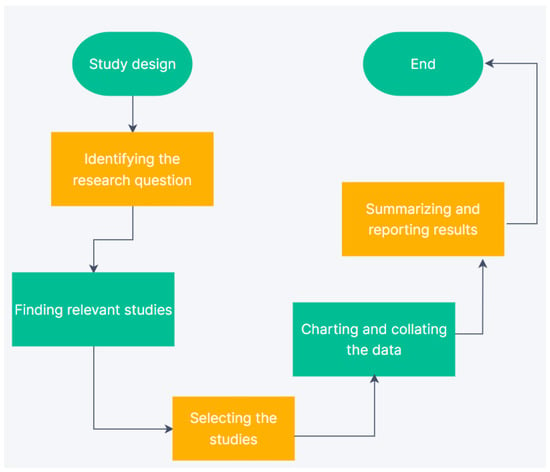

In the development of the systematic review, five steps were followed (Figure 1).

Figure 1.

Data flow chart (DFC) describing the methodological steps of the systematic review.

2.1. Step 1: Identifying the Research Question

The objective of the present systematic review was to identify scientific articles that have evaluated the effectiveness of driving simulators as tools for driver training and education. Therefore, articles that used driving simulators for other research purposes were discarded. Studies that analyzed drivers’ performance, the presence of certain variables specific to the driving task, or any other type of study whose objective was not the use of driving simulators in a training context were not included.

Accordingly, we sought to determine the degree of effectiveness of driving simulators for training, to collect and record the main findings evidenced by the scientific literature, and to identify the limitations that may have existed in the evaluation process. No comparisons were made. The results include a summary and a thematic analysis of all selected articles.

2.2. Step 2: Finding Relevant Studies

Initially, a scoping review of the literature was carried out to preliminarily evaluate the potential and scope of the research objectives, as well as to identify the key terms to be applied in the search strategies for the subsequent stages of the review process.

Once the scoping review had been carried out, four databases were selected to develop the search strategy for articles: Web of Science, Scopus, PubMed, and Cochrane Library. These bibliographic databases were chosen because of their recognition as reliable indicators of quality valued by the scientific community and, especially, by the field of research in which the thematic area of the review is framed. In addition, we searched the reference lists of various reviews for potentially eligible articles that did not appear when the search strategies were applied.

The search was conducted in the second week of August 2022. There were no exclusion criteria related to the year of publication. Therefore, for the present review, all the literature published from the beginning of the database to the date of the search was considered as potentially eligible before applying the systematic review filters.

In addition, the search strategy was carried out taking into account that the review covered research published in both English and Spanish. Therefore, we used the same Boolean search string in all the databases: “(evaluation OR evaluación OR effectiveness OR efficiency OR efectividad) AND (driving AND simulator OR simulador AND conducción) AND (training OR education OR formation OR research OR entrenamiento OR formación OR educación)”. Both the keywords for the search and the Boolean operators were agreed upon by the authors after the scoping review was performed.
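As an illustration of how a bilingual search string of this kind can be assembled reproducibly, the following minimal Python sketch builds it from three term groups. The helper names (`any_of`, `all_of`, `build_query`) are hypothetical, and the middle block is written as two explicit phrase pairs ("driving" with "simulator", "simulador" with "conducción"), which is an assumed reading of the quoted string rather than the authors' documented syntax. Individual databases may also require their own field tags, which are not shown.

```python
# Term groups based on the search string quoted above.
OUTCOME_TERMS = ["evaluation", "evaluación", "effectiveness",
                 "efficiency", "efectividad"]
# Assumption: the middle block pairs an English and a Spanish phrase.
TOOL_PHRASES = [["driving", "simulator"], ["simulador", "conducción"]]
CONTEXT_TERMS = ["training", "education", "formation", "research",
                 "entrenamiento", "formación", "educación"]


def any_of(terms):
    """Join alternatives with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def all_of(terms):
    """Join required terms with AND and wrap them in parentheses."""
    return "(" + " AND ".join(terms) + ")"


def build_query():
    """Hypothetical helper: combine the three blocks with AND."""
    tool_block = any_of([all_of(phrase) for phrase in TOOL_PHRASES])
    blocks = [any_of(OUTCOME_TERMS), tool_block, any_of(CONTEXT_TERMS)]
    return " AND ".join(blocks)


if __name__ == "__main__":
    print(build_query())
```

Making the parentheses explicit avoids relying on each database's operator precedence, which can differ between search interfaces.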

2.3. Step 3: Selecting the Studies

During this step, articles that did not specifically address the research objective were excluded. All authors initially and independently evaluated the manuscripts and subsequently met to discuss and resolve any discrepancies.

Only scientific articles were included, avoiding the inclusion of gray literature. Therefore, publications in the form of letters, doctoral dissertations, conferences/abstracts, editorials, case reports, protocols, or case series were not selected. Eligibility criteria were also restricted to articles for which the full text was accessible, either because they were available due to their open-access status or because they could be requested through the library system.

2.4. Step 4: Charting and Collating the Data

Articles that met the inclusion criteria were critically reviewed using the descriptive-analytic method of Arksey and O’Malley (2005) []. The extraction was recorded for each eligible article, including author(s), year of publication, country of study, objectives, method and sample, results (main outcomes), and key limitations.
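A charting form like the one described above could be represented as a simple record type. The sketch below is only illustrative: the class name, field names, and the `missing_fields` helper are assumptions, and the example values are placeholders rather than data from any reviewed study.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExtractionRecord:
    """One row of the charting table; field names are illustrative."""
    authors: str
    year: int
    country: str
    objectives: str
    method_and_sample: str
    main_outcomes: str
    key_limitations: List[str] = field(default_factory=list)

    def missing_fields(self):
        """Return the names of text fields left empty during extraction."""
        return [name for name, value in vars(self).items()
                if isinstance(value, str) and not value.strip()]


if __name__ == "__main__":
    # Placeholder values only, not taken from the review.
    record = ExtractionRecord(
        authors="Author A et al.",
        year=2020,
        country="",
        objectives="Placeholder objective",
        method_and_sample="Placeholder method",
        main_outcomes="Placeholder outcome",
    )
    print(record.missing_fields())
```

Checking for empty fields before tabulation is one way to make independent extractions by several reviewers easier to compare.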

2.5. Step 5: Summarizing and Reporting the Results

The data extraction was recorded in tabular form. The main findings were summarized and discussed, and the salient features of the selected articles were described. In addition, a quality assessment of the studies was performed to ensure that the results would not be significantly altered.

3. Results

3.1. Search Results

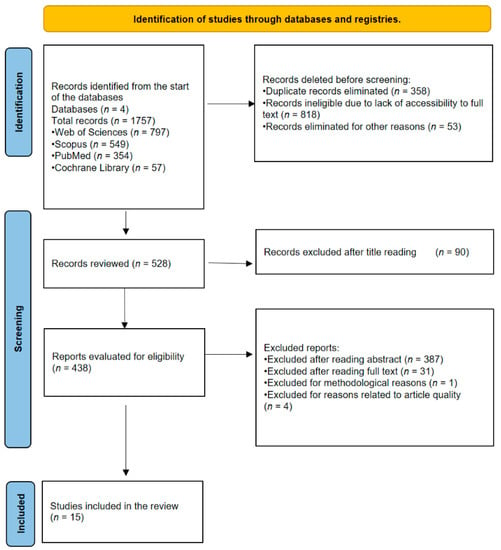

Figure 2 shows the process of searching and selecting data sources. Initially, the search strategy yielded a total of 1757 articles for analysis. Of these, 1229 were excluded as duplicates or as ineligible for various reasons. The remaining articles were then screened by title, abstract, and full text to determine whether they responded specifically to the objectives of the review and whether they had sufficient quality indexes to be included, according to the criteria established by the Critical Appraisal Skills Programme (CASP). After this process, 15 eligible articles were obtained and included in the study.

Figure 2.

PRISMA diagram for this systematic review.
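The selection counts reported in this section can be checked with simple arithmetic. The sketch below uses only the three figures stated in the text (1757 identified, 1229 excluded early, 15 included). The derived values assume that every record not excluded early went on to title, abstract, and full-text screening, which the text implies but does not state as a number. The function name `prisma_flow` is hypothetical.

```python
# Counts reported in Section 3.1.
IDENTIFIED = 1757       # records returned by the search strategy
EXCLUDED_EARLY = 1229   # duplicates and otherwise ineligible records
INCLUDED = 15           # studies kept in the review


def prisma_flow(identified, excluded_early, included):
    """Derive the intermediate counts of a simple selection flow."""
    screened = identified - excluded_early
    excluded_at_screening = screened - included
    if screened < 0 or excluded_at_screening < 0:
        raise ValueError("counts are inconsistent")
    return {
        "identified": identified,
        "screened": screened,
        "excluded_at_screening": excluded_at_screening,
        "included": included,
    }


if __name__ == "__main__":
    for stage, count in prisma_flow(IDENTIFIED, EXCLUDED_EARLY, INCLUDED).items():
        print(f"{stage}: {count}")
```

Under that assumption, 528 records entered screening and 513 were excluded during screening and quality appraisal.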

3.2. Characteristics of the Eligible Research Articles

Although the search was conducted for articles published in both Spanish and English, the 15 eligible articles were published in English. The selected papers were published between 2003 and 2021, which is in accordance with the recentness and novelty of the subject matter of the study.

In addition, the research was grouped into geographical clusters, defined in this study on a country basis. The studies were conducted in eight countries located on three continents. Specifically, the selected studies were distributed among the United States (n = 6), France (n = 3), Italy (n = 1), United Kingdom (n = 1), Canada (n = 1), Switzerland (n = 1), the Netherlands (n = 1), and Korea (n = 1).

After assessing the studies selected for the analysis phase of this systematic review, the studies were classified according to their core features, e.g., year of publication, objectives, methodological approach, main outcomes, and key acknowledged limitations, if applicable. These data were tabulated in order to determine their interpretability and comparability, as suggested by the PRISMA guidelines. Table 1 shows the general characteristics of the analyzed original research articles.

Table 1.

Records of the general characteristics of the chosen studies.

In relation to the methodologies used in the research on the effectiveness of driving simulators for driver training, several elements of interest can be observed. On the one hand, most of the studies employed an experimental methodology. Specifically, in 7 of the selected studies, which represented 46.7%, groups of participants who had been trained with a training program using a simulator were compared with control groups consisting of participants who had not been subjected to this training process, to determine the effectiveness of the simulator for this task. In other cases, different training programs were compared, either with and without simulators or with various types of simulators with different degrees of realism for the user, but, in all cases, without using any group as a control (n = 4, representing 26.7% of the studies). On the other hand, only one study (6.7%) used the same simulator training method for different groups of users to analyze the differences depending on their roles as drivers [].

The rest of the investigations (n = 5, representing 33.3%) employed a single group of subjects, so these were longitudinal studies in which intrasubject comparisons were performed, i.e., each subject was assigned all the conditions of the independent variable. Therefore, the magnitude of the extraneous variables was constant for each subject, and the variation observed in each subject was explained by the variation of the independent variable, which, in this case, was training with a driving simulator. However, the process was not identical in all studies of this type. In 2 studies (13.3% of the total number of studies selected), the same group was exposed to real road circumstances and to a simulator. In the other 3 studies (20.0%), the subjects were only evaluated before and after simulator training, without evaluating performance in real environments.

Regarding the results of the studies on the effectiveness of driving simulators for driver training, all but one of the studies observed an improvement in performance (n = 14, representing 93.3%). However, the effects were not equally strong in all studies. Thus, in 11 of them (73.3%), the improvements were significant in comparison with the subjects before receiving training or with the control groups that did not receive training. On the other hand, 4 studies (26.7%) observed that all the training programs they applied were beneficial for the improvement of the participants’ performance, regardless of whether or not a simulator was used and the degree of realism of the tool. In any case, 2 of them (13.3%) emphasized that using more realistic simulators positively affected driver performance compared to other training programs. In addition, there were 2 studies (13.3%) that pointed out the partial benefits of simulator training, as they observed improvements in some driving tasks, such as reduction in errors and violations, but no significant improvements in other specific actions []. Finally, only one study (6.7%) did not obtain evidence of the effectiveness of simulators in improving the execution of driving tasks. However, it did observe learning in the evaluated participants [].
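The percentages in this section are shares of the 15 included studies, rounded to one decimal place. A minimal sketch of that arithmetic follows. The dictionary labels are paraphrases introduced here for illustration, the counts are those stated in the paragraph above, and the categories are not assumed to be mutually exclusive.

```python
TOTAL_STUDIES = 15

# Study counts as stated in the text; labels are illustrative paraphrases.
REPORTED_COUNTS = {
    "improvement observed": 14,
    "significant improvement": 11,
    "all programs beneficial": 4,
    "partial benefits": 2,
    "no evidence of effectiveness": 1,
}


def share(n, total=TOTAL_STUDIES):
    """Percentage of studies, rounded to one decimal place."""
    if not 0 <= n <= total:
        raise ValueError("count must lie between 0 and the total")
    return round(100 * n / total, 1)


if __name__ == "__main__":
    for label, n in REPORTED_COUNTS.items():
        print(f"{label}: n = {n} ({share(n)}%)")
```

For example, `share(2)` gives 13.3 and `share(4)` gives 26.7.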

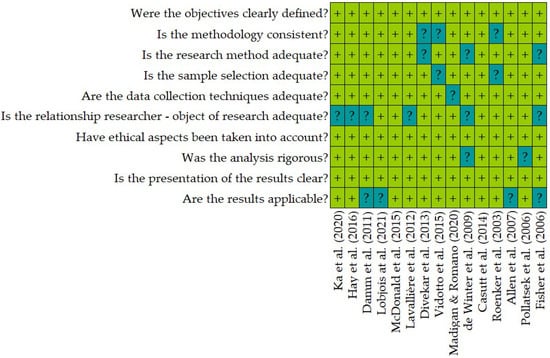

3.3. Evaluation of the Quality of the Selected Studies

The Critical Appraisal Skills Programme (CASP) quality assessment tool was applied to ensure that no selected study could interfere with or distort the conclusions of this systematic review. This instrument allows for evaluation of the rigor and relevance of a scientific publication by means of ten specific questions []. The results obtained from the evaluation of the selected articles are shown in Figure 3. They all present a low risk of bias and have, therefore, been included in this review. In this process, four articles selected in the screening process were eliminated, as shown in Figure 2.

Figure 3.

Evaluation of the quality of the selected articles using the “Critical Appraisal Skills Programme” tool [,,,,,,,,,,,,,,].
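A checklist-based appraisal of this kind can be tallied per article as sketched below. The ten-question length follows the description above, and the three answer options ("yes", "no", "can't tell") follow the usual CASP answer format. The inclusion cut-off `MIN_YES` is purely hypothetical, since the text does not report the threshold the authors applied, and the function name `appraise` is likewise an assumption.

```python
from collections import Counter

VALID_ANSWERS = {"yes", "no", "cant_tell"}
N_QUESTIONS = 10
MIN_YES = 8  # hypothetical cut-off, not taken from the review


def appraise(answers, min_yes=MIN_YES):
    """Tally checklist answers for one article and flag inclusion."""
    if len(answers) != N_QUESTIONS:
        raise ValueError(f"expected {N_QUESTIONS} answers")
    unknown = set(answers) - VALID_ANSWERS
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    tally = Counter(answers)
    return {
        "yes": tally["yes"],
        "no": tally["no"],
        "cant_tell": tally["cant_tell"],
        "include": tally["yes"] >= min_yes,
    }


if __name__ == "__main__":
    # Placeholder answers for a single hypothetical article.
    print(appraise(["yes"] * 9 + ["cant_tell"]))
```

A numeric cut-off is only one possible decision rule; reviewers may instead weigh specific questions more heavily.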

4. Discussion

The aim of this systematic review was to gather and analyze the existing scientific literature evaluating the effectiveness of driving simulators as tools for driver training and education. Given the relevance of simulators as a training tool, especially in recent years, the scarce number of research studies specifically focused on evaluating their efficacy for this purpose stands out []. Thus, in bibliographic databases, there are hundreds of scientific articles using driving simulators. However, they are mostly used to develop research in which the simulator analyzes some aspects of the user’s driving task [,]. These types of studies were discarded in the article selection process given that they did not cover the evaluation of the simulator; this tool was a means to evaluate other variables that are not the subject of the present review.

In this sense, analyzing the effectiveness of simulators is important to determine the degree to which they are useful for improving driver performance []. The selected studies employed different methodologies: either groups that had received training with simulators were compared with control groups that had not, or the performance of subjects before and after receiving these training programs was compared [,]. In practically all cases (the exception being McDonald et al. (2015) []), a significant improvement in the performance of the participants who received this type of training was evident. This suggests that all types of drivers can benefit from simulator training, since it allows them to experience complex situations in a safe and controlled environment.

Specifically, several studies indicated that these training programs were particularly useful for novice drivers [,,,] and older drivers []. These results contrast with other research conducted in these age groups, which has not presented evidence supporting the effectiveness of simulators for driver training []. For example, Hirsch and Bellavance (2017) [] did not observe differences in the accident rate between drivers who received simulator training and those who did not. For their part, Rosenbloom and Eldror (2014) [] also found no significant differences between novice drivers who had received a training program and those who had not. In any case, these studies were discarded from the article selection process because they presented important limitations that restricted the quality of the manuscripts. These limitations were mainly related to sample selection biases, biases linked to the unblinded self-assessment of the data, and the small sample size, factors which should, therefore, be partially taken into account when drawing conclusions.

In any case, the systematic review carried out herein did include research with homogeneous results that demonstrated the effectiveness of simulators for driver training. However, it should be noted that some variables may have interfered with learning or favored learning through simulators. According to the analyzed studies, one of the most relevant of these was the degree of realism of the utilized simulator. Allen et al. (2007) [] compared three groups of participants trained with different simulators, from a very basic one with a narrow field of vision to a complete one consisting of a combination of wide-angle projected screens. They reported that the best results were obtained when the simulator was adjusted to real conditions. Madigan and Romano (2020) [] shared these findings, observing that the group of participants trained on the simulator with the best video and projection quality obtained the best scores in the subsequent tests.

In this sense, the level of realism may influence whether the assessment of performance through the simulator is (or is not) different from the assessment of drivers in a real environment. In fact, van Paridon et al. (2021) [] stated that the cyclists who participated in their research had a better perception of road objects in the simulator than on the road. They attributed these differences to the fact that the virtual environment had fewer elements than the real environment, making it easier for the subjects to focus their attention on the indicated objects since there were fewer distracting elements. On the other hand, other research demonstrated that the workload reported by the participants in the simulators was higher than that which they had on the road. Further, Lobjois et al. (2021) [] performed physiological measurements in which they observed a higher blink frequency in virtual environments. In addition, participants self-reported more stress in the simulator compared to real driving. In both cases, the differences occurred because the subject was not fully immersed in a realistic environment, but was aware that he/she was in a simulator, which may have altered performance levels in some cases.

Consequently, and given the potential differences in performance in both environments, it would be beneficial to assess drivers in real-world conditions after their training in virtual environments and/or driving simulators. After all, regardless of whether a simulator is used, driver training is ultimately an intervention aimed at on-road performance; for this reason, some studies argue that even more reliable and valid assessment results might be obtained if an adequate balance between intra- and extra-simulator conditions were achieved [,,].

Another variable that influences performance is the prevalence of certain driving situations or actions in the training period. Divekar et al. (2013) [] note that, although driver training was effective for the trained tasks, it did not generalize to other driving actions. Therefore, to increase effectiveness, realistic simulator conditions are not sufficient; they must be supplemented with road situations that trigger multiple driving tasks to ensure the most complete training possible. However, if systematic errors are observed with certain specific actions, the tool can also be adapted to repeat those actions. Thus, training would concentrate on the tasks in which drivers commit more infractions and would devote less time to the actions that participants already perform correctly.

Additionally, the level of acceptability and confidence of the participants and their predisposition to use driving simulators can also influence the research results. Thus, Salvati et al. (2020) [] pointed out that some participants may have limitations or difficulties in understanding the simulator system, influencing their performance and ability to take advantage of the tool. In addition, this level of confidence is variable and may increase through practice, or, on the contrary, may decrease if critical situations are experienced.

These results are congruent with other research in which the perceived degree of security of these systems and the intention to use them are important variables for trust in technology, especially in a traffic context []. These are factors that can be influenced by the social and cultural context of the region in which these types of training programs are implemented. In addition, other studies recommend considering public acceptance as a relevant matter to strengthen alongside large-scale technological improvements, such as automated vehicles, advanced driving assistance systems (ADAS), and training driving simulators, given their ability to mark new technological trends with potential to increase drivers’ safety and welfare [,,].

Limitations of the Systematic Review

This systematic review was carried out following the PRISMA procedure in order to avoid possible biases in the data selection and/or recording. In addition, the inclusion/exclusion criterion was that the eligible articles were part of databases relevant to the scientific community; this was decided in order to guarantee an adequate selection of articles. Nevertheless, the present systematic review is not free of the limitations inherent to this type of study. Thus, the review may present publication bias, which occurs when research with “negative” or non-significant results is either published in lower-impact journals or not published at all. Consequently, such articles may not have been included in the review because they do not appear in high-impact databases [].

This bias could be related to the low final number of research papers that met the established eligibility criteria, a circumstance that could limit the breadth and scope of the research findings. In addition, it is possible that the non-indexed literature (although it may have methodological limitations or limitations in terms of the quality of the results) could provide further information of interest on this subject.

5. Conclusions

The results of this systematic review point to the problematic scarcity of literature on the formal evaluation of driving simulators for driver training, given the (overall) reduced number of high-quality studies meeting the PRISMA-based inclusion criteria. This means that, despite the large number of existing simulation tools, there is limited information on the actual effectiveness of these tools for training purposes. In addition, although most studies demonstrated good quality, many of them showed external validity issues as a consequence of typical shortcomings such as limited research samples and the absence of follow-up procedures to determine the training outcomes. Nevertheless, most sources assessing the issue provided good insights into the value of driving simulators in improving human-based road safety under risk control settings.

To fill these gaps, some of the analyzed sources suggested strengthening the current evaluation processes, aiming to target the degree of contextual effectiveness of various simulators and, if necessary, being able to apply further modifications and ergonomic improvements to increase their training value for both novice and current drivers.

Moreover, as evaluations become more common and frequent in this area, there will also be further technical improvements and ergonomic adaptations of simulators to allow for their more frequent use in driver training. Therefore, the knowledge provided by the existing evaluations included in this systematic review, as well as those to be carried out in the coming years, will provide data of interest for the design and development of specific training programs using simulators for driver instruction.

Author Contributions

Conceptualization, F.A. and S.A.U.; methodology, S.A.U. and M.F.; software, M.F.-M.; validation, F.A., J.V.R. and M.F.-M.; formal analysis, S.A.U. and M.F.; investigation, S.A.U. and M.F.; data curation, F.A., J.V.R. and M.F.; writing—original draft preparation, M.F. and S.A.U.; writing—review and editing, S.A.U. and M.F.; visualization, F.A. and M.F.-M.; funding acquisition, M.F.; supervision, S.A.U. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly supported by the research grant ACIF/2020/035 offered by the “Generalitat Valenciana” (Regional Government of the Valencian Community, Spain). The sponsoring body did not interfere with the study design; with the collection, analysis, or interpretation of the data; nor with the writing of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (or Ethics Committee) of the Research Institute on Traffic and Road Safety at the University of Valencia (IRB approval number: RE0002260522, from 26 May 2022).

Informed Consent Statement

Not applicable.

Data Availability Statement

This systematic review did not require the creation of new data; the selected articles are available in open access in the databases used (Web of Science, Scopus, PubMed, and Cochrane Library).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Woodruff, R.; Smith, J.F.; Fuller, J.R.; Weyer, D.C. Full Mission Simulation in Undergraduate Pilot Training: An Exploratory Study; Defense Technical Information Center, Department of Defense: Fort Belvoir, VA, USA, 1976.

- Rolfe, J.M.; Staples, K.J. Flight Simulation; No. 1; Cambridge University Press: Cambridge, UK, 1988.

- Spencer, S.N. Proceedings of the 14th ACM SIGGRAPH International Conference on Virtual Reality Continuum and Its Applications in Industry, Kobe, Japan 30 October–1 November 2015; ACM: New York, NY, USA, 2015.

- Huang, H.M.; Hira, R.; Feldman, P. A Submarine Simulator Driven by a Hierarchical Real-Time Control System Architecture; National Institute of Standards and Technology: Gaithersburg, MD, USA, 1992; NIST IR 4875.

- Lin, Z.; Feng, S.; Ying, L. The design of a submarine voyage training simulator. In Proceedings of the SMC’98 Conference Proceedings, Proceedings 1998 IEEE International Conference on Systems, Man, and Cybernetics (Cat. No. 98CH36218), San Diego, CA, USA, 14 October 1998; IEEE: Piscataway, NJ, USA, 1998; Volume 4, pp. 3720–3724.

- Allen, R.W.; Jex, H.R.; McRuer, D.T.; DiMarco, R.J. Alcohol effects on driving behavior and performance in a car simulator. IEEE Trans. Syst. Man Cybern. 1975, SMC-5, 498–505.

- Wymann, B.; Espié, E.; Guionneau, C.; Dimitrakakis, C.; Coulom, R.; Sumner, A. Torcs, the Open Racing Car Simulator. 2000. Available online: http://torcs.sourceforge.net (accessed on 7 January 2023).

- Robin, J.L.; Knipling, R.R.; Tidwell, S.A.; Derrickson, L.; Antonik, C.; McFann, J. Truck Simulator Validation (“SimVal”) Training Effectiveness Study; International Truck and Bus Safety and Security Symposium: Alexandria, VA, USA, 2005; Available online: https://trid.trb.org/view/1156437 (accessed on 9 December 2022).

- Gillberg, M.; Kecklund, G.; Åkerstedt, T. Sleepiness and performance of professional drivers in a truck simulator—Comparisons between day and night driving. J. Sleep Res. 1996, 5, 12–15.

- Hulbert, S.; Wojcik, C. Driving Task Simulation. Human Factors in Highway Traffic Safety Research; Michigan State University: East Lansing, MI, USA, 1972.

- Eryilmaz, U.; Tokmak, H.S.; Cagiltay, K.; Isler, V.; Eryilmaz, N.O. A novel classification method for driving simulators based on existing flight simulator classification standards. Transp. Res. Part C Emerg. Technol. 2014, 42, 132–146.

- Galloway, R.T. Model validation topics for real time simulator design courses. In Proceedings of the Society for Modeling and Simulation International, 2001 Summer Computer Simulation Conference, Orlando, FL, USA, 15–19 July 2001.

- Li, N.; Sun, N.; Cao, C.; Hou, S.; Gong, Y. Review on visualization technology in simulation training system for major natural disasters. Nat. Hazards 2022, 112, 1851–1882.

- Ng, T.S. Robotic Vehicles: Systems and Technology; Springer: Berlin/Heidelberg, Germany, 2021.

- Ploner-Bernard, H.; Sontacchi, A.; Lichtenegger, G.; Vössner, S.; Braunstingl, R. Sound-system design for a professional full-flight simulator. In Proceedings of the 8th International Conference on Digital Audio Effects (DAFx-05), Madrid, Spain, 20–22 September 2005; pp. 36–41.

- Mohellebi, H.; Kheddar, A.; Espié, S. Adaptive haptic feedback steering wheel for driving simulators. IEEE Trans. Veh. Technol. 2008, 58, 1654–1666.

- Mohellebi, H.; Espié, S.; Arioui, H.; Amouri, A.; Kheddar, A. Low cost motion platform for driving simulator. In Proceedings of the ICMA: International Conference on Machine Automation, Japan, November 2004; Volume 5, pp. 271–277.

- Seymour, N.E. VR to OR: A review of the evidence that virtual reality simulation improves operating room performance. World J. Surg. 2008, 32, 182–188.

- Parkes, A.M.; Reed, N.; Ride, N.M. Fuel efficiency training in a full-mission truck simulator. In Behavioural Research in Road Safety 2005: Fifteenth Seminar; Department for Transport: London, UK, 2005; pp. 135–146.

- Narayanasamy, V.; Wong, K.W.; Fung, C.C.; Rai, S. Distinguishing games and simulation games from simulators. Comput. Entertain. 2006, 4, 9-es.

- Corona, F.; Perrotta, F.; Polcini, E.T.; Cozzarelli, C. The new frontiers of edutainment: The development of an educational and socio-cultural phenomenon over time of globalization. J. Soc. Sci. 2011, 7, 408.

- Corona, F.; Cozzarelli, C.; Palumbo, C.; Sibilio, M. Information technology and edutainment: Education and entertainment in the age of interactivity. Int. J. Digit. Lit. Digit. Competence 2013, 4, 12–18.

- Buckingham, D.; Scanlon, M. Edutainment. J. Early Child. Lit. 2001, 1, 281–299.

- Backhaus, K.; Liff, J.P. Cognitive styles and approaches to studying in management education. J. Manag. Educ. 2007, 31, 445–466.

- Faus, M.; Alonso, F.; Esteban, C.; Useche, S. Are Adult Driver Education Programs Effective? A Systematic Review of Evaluations of Accident Prevention Training Courses. Int. J. Educ. Psychol. 2023, 12, 62–91.

- Valero-Mora, P.M.; Zacarés, J.J.; Sánchez-García, M.; Tormo-Lancero, M.T.; Faus, M. Conspiracy beliefs are related to the use of smartphones behind the wheel. Int. J. Environ. Res. Public Health 2021, 18, 7725.

- Straus, S.H. New, Improved, Comprehensive, and Automated Driver’s License Test and Vision Screening System; No. FHWA-AZ-04-559 (1); Arizona Department of Transportation: Phoenix, AZ, USA, 2005.

- Sætren, G.B.; Pedersen, P.A.; Robertsen, R.; Haukeberg, P.; Rasmussen, M.; Lindheim, C. Simulator training in driver education—Potential gains and challenges. In Safety and Reliability–Safe Societies in a Changing World; CRC Press: Boca Raton, FL, USA, 2018; pp. 2045–2049.

- Upahita, D.P.; Wong, Y.D.; Lum, K.M. Effect of driving experience and driving inactivity on young driver’s hazard mitigation skills. Transp. Res. F Traffic Psychol. Behav. 2018, 59, 286–297.

- Bro, T.; Lindblom, B. Strain out a gnat and swallow a camel?—Vision and driving in the Nordic countries. Acta Ophthalmol. 2018, 96, 623–630.

- Al-Garawi, N.; Dalhat, M.A.; Aga, O. Assessing the road traffic crashes among novice female drivers in Saudi Arabia. Sustainability 2021, 13, 8613.

- Salas, E.; Rosen, M.A.; Held, J.D.; Weissmuller, J.J. Performance measurement in simulation-based training: A review and best practices. Simul. Gaming 2009, 40, 328–376.

- Campbell, J.P.; McCloy, R.A.; Oppler, S.H.; Sager, E. A theory of performance. In Personnel Selection in Organizations; Schmitt, N., Borman, W., Eds.; Jossey-Bass: San Francisco, CA, USA, 1993; pp. 35–70.

- Kendall, D.L.; Salas, E. Measuring team performance: Review of current methods and consideration of future needs. In The Science and Simulation of Human Performance; Ness, J.W., Tepe, V., Ritzer, D., Eds.; Elsevier: Boston, MA, USA, 2004; pp. 307–326.

- MacBryde, J.; Mendibil, K. Designing performance measurement systems for teams: Theory and practice. Manag. Decis. 2003, 41, 722–733.

- Salas, E.; Stagl, K.C.; Burke, C.S. 25 years of team effectiveness in organizations: Research themes and emerging needs. Int. Rev. Ind. Organ. Psychol. 2004, 19, 47–91.

- Salas, E.; Priest, H.A.; Burke, C.S. Teamwork and team performance measurement. In Evaluation of Human Work, 3rd ed.; Wilson, J.R., Corlett, N., Eds.; Taylor & Francis: Boca Raton, FL, USA, 2005; pp. 793–808.

- Marks, M.A.; Mathieu, J.E.; Zaccaro, S.J. A temporally based framework and taxonomy of team processes. Acad. Manag. Rev. 2001, 26, 356–376.

- Klimoski, R.; Mohammed, S. Team mental model: Construct or metaphor? J. Manag. 1994, 20, 403–437.

- Cannon-Bowers, J.A.; Salas, E.; Converse, S. Shared mental models in expert team decision making. In Individual and Group Decision Making; Castellan, N.J.J., Ed.; Lawrence Erlbaum: Hillsdale, NJ, USA, 1993; pp. 221–246.

- Mathieu, J.E.; Heffner, T.S.; Goodwin, G.F.; Salas, E.; Cannon-Bowers, J. The influence of shared mental models on team process and performance. J. Appl. Psychol. 2000, 85, 273–283.

- Morgan, B.B., Jr.; Salas, E.; Glickman, A.S. An analysis of team evolution and maturation. J. Gen. Psychol. 1993, 120, 277–291.

- Burke, C.S.; Stagl, K.C.; Salas, E.; Pierce, L.; Kendall, D. Understanding team adaptation: A conceptual analysis & model. J. Appl. Psychol. 2006, 91, 1189–1207.

- Zaccaro, S.J.; Rittman, A.L.; Marks, M.A. Team leadership. Leadersh. Q. 2001, 12, 451–483.

- Burke, C.S.; Fiore, S.M.; Salas, E. The role of shared cognition in enabling shared leadership and team adaptability. In Shared Leadership: Reframing the Hows and Whys of Leadership; Pearce, C.L., Conger, J.A., Eds.; Sage: Thousand Oaks, CA, USA, 2004; pp. 103–121.

- Porter, C.O.; Hollenbeck, J.R.; Ilgen, D.R.; Ellis, A.P.; West, B.J.; Moon, H. Backing up behaviors in teams: The role of personality and legitimacy of need. J. Appl. Psychol. 2003, 88, 391–403.

- Bandow, D. Time to create sound teamwork. J. Qual. Particip. 2001, 24, 41–47.

- Mohammed, S.; Klimoski, R.; Rentsch, J.R. The measurement of team mental models: We have no shared schema. Organ. Res. Methods 2000, 3, 123–165.

- Shadbolt, N. Eliciting expertise. In Evaluation of Human Work; Wilson, J.R., Corlett, N., Eds.; Taylor & Francis: Boca Raton, FL, USA, 2005; pp. 185–218.

- Flanagan, J.C. The critical incident technique. Psychol. Bull. 1954, 51, 327–358.

- Cooke, N.J.; Salas, E.; Kiekel, P.A.; Bell, B. Advances in measuring team cognition. In Team Cognition: Understanding the Factors that Drive Process and Performance; Salas, E., Fiore, S.M., Eds.; American Psychological Association: Washington, DC, USA, 2004; pp. 83–106.

- Smith, P.C.; Kendall, L.M. Retranslation of expectations: An approach to the construction of unambiguous anchors for rating scales. J. Appl. Psychol. 1963, 47, 149–155.

- Baddeley, A.D.; Hitch, G. The recency effect: Implicit learning with explicit retrieval? Mem. Cogn. 1993, 21, 146–155.

- Kiekel, P.A.; Cooke, N.J.; Foltz, P.W.; Shope, S.M. Automating measurement of team cognition through analysis of communication data. In Usability Evaluation and Interface Design; Smith, M.J., Salvendy, G., Harris, D., Koubek, R.J., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2001; pp. 1382–1386.

- Rosen, M.A.; Salas, E.; Silvestri, S.; Wu, T.; Lazzara, E.H. A measurement tool for simulation-based training in emergency medicine: The Simulation Module for Assessment of Resident Targeted Event Responses (SMARTER) approach. Simul. Healthc. 2008, 3, 170–179.

- Papakostas, C.; Troussas, C.; Krouska, A.; Sgouropoulou, C. Measuring User Experience, Usability and Interactivity of a Personalized Mobile Augmented Reality Training System. Sensors 2021, 21, 3888.

- Papakostas, C.; Troussas, C.; Krouska, A.; Sgouropoulou, C. User acceptance of augmented reality welding simulator in engineering training. Educ. Inf. Technol. 2021, 27, 791–817.

- Faus, M.; Alonso, F.; Javadinejad, A.; Useche, S.A. Are social networks effective in promoting healthy behaviors? A systematic review of evaluations of public health campaigns broadcast on Twitter. Front. Public Health 2022, 10, 1045645.

- Alonso, F.; Faus, M.; Tormo, M.T.; Useche, S.A. Could technology and intelligent transport systems help improve mobility in an emerging country? Challenges, opportunities, gaps and other evidence from the caribbean. Appl. Sci. 2022, 12, 4759.

- Rother, E.T. Systematic literature review X narrative review. Acta Paul. Enferm. 2007, 20, v–vi.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Moher, D. Updating guidance for reporting systematic reviews: Development of the PRISMA 2020 statement. J. Clin. Epidemiol. 2021, 134, 103–112.

- Lundh, A.; Gøtzsche, P.C. Recommendations by Cochrane Review Groups for assessment of the risk of bias in studies. BMC Med. Res. Methodol. 2008, 8, 22.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Ka, E.; Kim, D.G.; Hong, J.; Lee, C. Implementing surrogate safety measures in driving simulator and evaluating the safety effects of simulator-based training on risky driving behaviors. J. Adv. Transp. 2020, 2020, 7525721.

- Hay, M.; Adam, N.; Bocca, M.L.; Gabaude, C. Effectiveness of two cognitive training programs on the performance of older drivers with a cognitive self-assessment bias. Eur. Transp. Res. Rev. 2016, 8, 20.

- Damm, L.; Nachtergaële, C.; Meskali, M.; Berthelon, C. The evaluation of traditional and early driver training with simulated accident scenarios. Hum. Factors 2011, 53, 323–337.

- Lobjois, R.; Faure, V.; Désiré, L.; Benguigui, N. Behavioral and workload measures in real and simulated driving: Do they tell us the same thing about the validity of driving simulation? Saf. Sci. 2021, 134, 105046.

- McDonald, C.C.; Kandadai, V.; Loeb, H.; Seacrist, T.; Lee, Y.-C.; Bonfiglio, D.; Fisher, D.L.; Winston, F.K. Evaluation of a risk awareness perception training program on novice teen driver behavior at left-turn intersections. Transp. Res. Rec. 2015, 2516, 15–21.

- Lavallière, M.; Simoneau, M.; Tremblay, M.; Laurendeau, D.; Teasdale, N. Active training and driving-specific feedback improve older drivers’ visual search prior to lane changes. BMC Geriatr. 2012, 12, 5.

- Divekar, G.; Pradhan, A.K.; Masserang, K.M.; Reagan, I.; Pollatsek, A.; Fisher, D.L. A simulator evaluation of the effects of attention maintenance training on glance distributions of younger novice drivers inside and outside the vehicle. Transp. Res. F Traffic Psychol. Behav. 2013, 20, 154–169.

- Vidotto, G.; Tagliabue, M.; Tira, M.D. Long-lasting virtual motorcycle-riding trainer effectiveness. Front. Psychol. 2015, 6, 1653.

- Madigan, R.; Romano, R. Does the use of a head mounted display increase the success of risk awareness and perception training (RAPT) for drivers? Appl. Ergon. 2020, 85, 103076.

- de Winter, J.C.; De Groot, S.; Mulder, M.; Wieringa, P.A.; Dankelman, J.; Mulder, J.A. Relationships between driving simulator performance and driving test results. Ergonomics 2009, 52, 137–153.

- Casutt, G.; Theill, N.; Martin, M.; Keller, M.; Jäncke, L. The drive-wise project: Driving simulator training increases real driving performance in healthy older drivers. Front. Aging Neurosci. 2014, 6, 85.

- Roenker, D.L.; Cissell, G.M.; Ball, K.K.; Wadley, V.G.; Edwards, J.D. Speed-of-processing and driving simulator training result in improved driving performance. Hum. Factors 2003, 45, 218–233.

- Allen, R.W.; Park, G.D.; Cook, M.L.; Fiorentino, D. The effect of driving simulator fidelity on training effectiveness. In Proceedings of the Driving Simulation Conference, North America 2007 (DSC-NA 2007), Iowa City, IA, USA, 12–14 September 2007.

- Pollatsek, A.; Narayanaan, V.; Pradhan, A.; Fisher, D.L. Using eye movements to evaluate a PC-based risk awareness and perception training program on a driving simulator. Hum. Factors 2006, 48, 447–464.

- Fisher, D.L.; Pollatsek, A.P.; Pradhan, A. Can novice drivers be trained to scan for information that will reduce their likelihood of a crash? Inj. Prev. 2006, 12 (Suppl. 1), i25–i29.

- Long, H.A.; French, D.P.; Brooks, J.M. Optimising the value of the critical appraisal skills programme (CASP) tool for quality appraisal in qualitative evidence synthesis. Res. Methods Med. Health Sci. 2020, 1, 31–42.

- Wang, Z.; Liao, X.; Wang, C.; Oswald, D.; Wu, G.; Boriboonsomsin, K.; Barth, M.J.; Han, K.; Kim, B.; Tiwari, P. Driver behavior modeling using game engine and real vehicle: A learning-based approach. IEEE Trans. Intell. Veh. 2020, 5, 738–749.

- Carsten, O.; Jamson, A.H. Driving simulators as research tools in traffic psychology. In Handbook of Traffic Psychology; Academic Press: Cambridge, MA, USA, 2011; pp. 87–96.

- Wynne, R.A.; Beanland, V.; Salmon, P.M. Systematic review of driving simulator validation studies. Saf. Sci. 2019, 117, 138–151.

- Zhao, X.; Xu, W.; Ma, J.; Li, H.; Chen, Y. An analysis of the relationship between driver characteristics and driving safety using structural equation models. Transp. Res. F Traffic Psychol. Behav. 2019, 62, 529–545.

- Martín-de los Reyes, L.M.; Jiménez-Mejías, E.; Martínez-Ruiz, V.; Moreno-Roldán, E.; Molina-Soberanes, D.; Lardelli-Claret, P. Efficacy of training with driving simulators in improving safety in young novice or learner drivers: A systematic review. Transp. Res. F Traffic Psychol. Behav. 2019, 62, 58–65.

- Hirsch, P.; Bellavance, F. Transfer of skills learned on a driving simulator to on-road driving behavior. Transp. Res. Rec. 2017, 2660, 1–6.

- Rosenbloom, T.; Eldror, E. Effectiveness evaluation of simulative workshops for newly licensed drivers. Accid. Anal. Prev. 2014, 63, 30–36.

- van Paridon, K.; Timmis, M.A.; Sadeghi Esfahlani, S. Development and evaluation of a virtual environment to assess cycling hazard perception skills. Sensors 2021, 21, 5499.

- Salvati, L.; d’Amore, M.; Fiorentino, A.; Pellegrino, A.; Sena, P.; Villecco, F. Development and Testing of a Methodology for the Assessment of Acceptability of LKA Systems. Machines 2020, 8, 47.

- Alonso, F.; Faus, M.; Esteban, C.; Useche, S.A. Is there a predisposition towards the use of new technologies within the traffic field of emerging countries? The case of the Dominican Republic. Electronics 2021, 10, 1208.

- Useche, S.A.; Peñaranda-Ortega, M.; Gonzalez-Marin, A.; Llamazares, F.J. Assessing the Effect of Drivers’ Gender on Their Intention to Use Fully Automated Vehicles. Appl. Sci. 2022, 12, 103.

- Lijarcio, I.; Useche, S.A.; Llamazares, J.; Montoro, L. Availability, Demand, Perceived Constraints and Disuse of ADAS Technologies in Spain: Findings from a National Study. IEEE Access 2019, 7, 129862–129873.

- Mlinarić, A.; Horvat, M.; Šupak Smolčić, V. Dealing with the positive publication bias: Why you should really publish your negative results. Biochem. Med. 2017, 27, 447–452.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).