Spare Parts Forecasting and Lumpiness Classification Using Neural Network Model and Its Impact on Aviation Safety

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

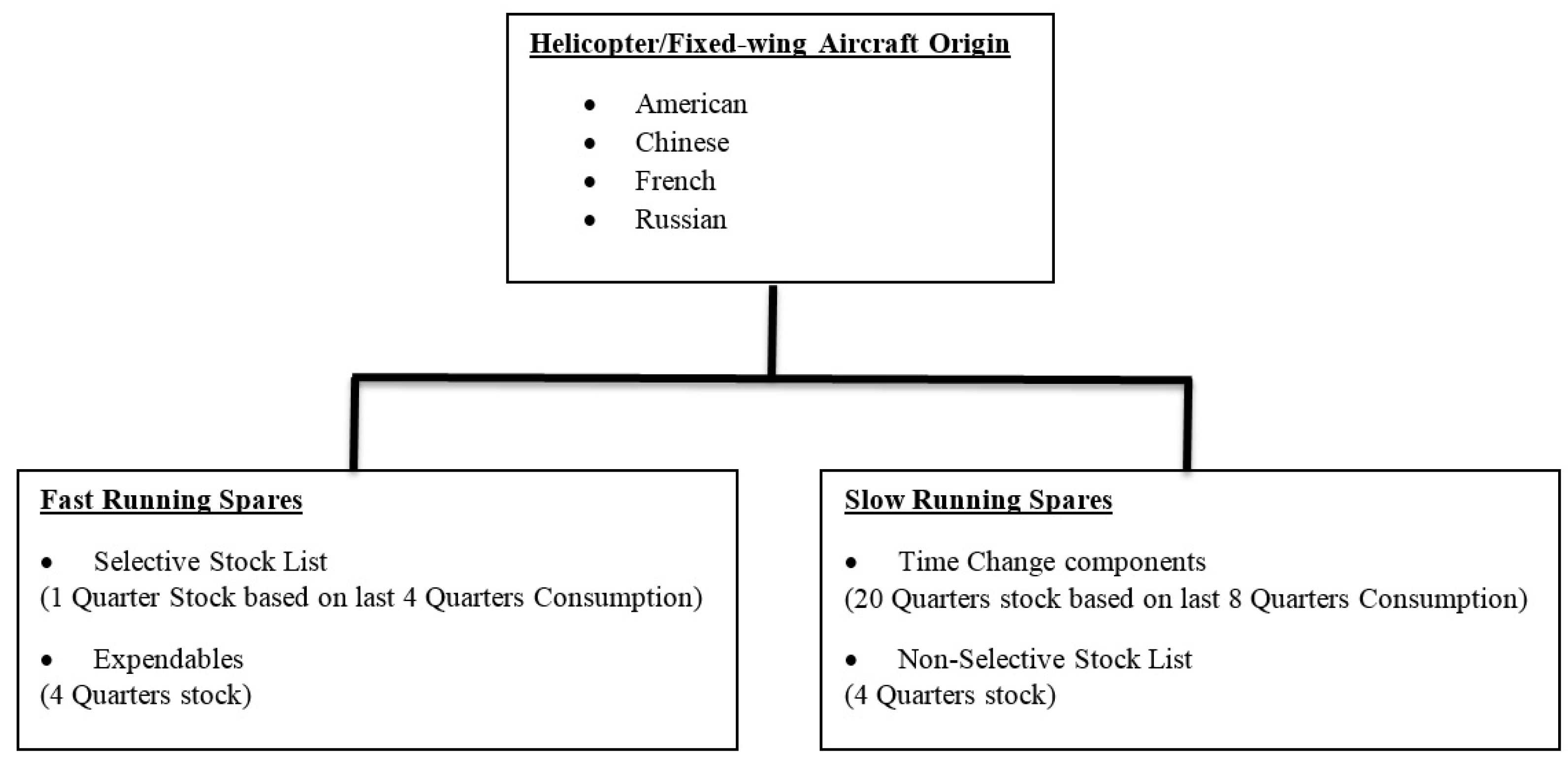

3.1. Proposed Lumpiness Classification

- Intervals between the demands;

- Demand size;

- Relationship between intervals and sizes.
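The three criteria above can be made concrete with the ADI/CV² scheme of Syntetos and Boylan, which is widely used to classify intermittent demand. The average inter-demand interval (ADI) summarizes the intervals, and the squared coefficient of variation (CV²) of the non-zero demand sizes summarizes size variability. The sketch below assumes the conventional cut-offs ADI = 1.32 and CV² = 0.49. It is an illustrative stand-in, not the lumpiness factor defined in this study, and the function name `classify_demand` is hypothetical.

```python
from statistics import mean, pstdev


def classify_demand(series, adi_cut=1.32, cv2_cut=0.49):
    """Classify a per-period demand series with the ADI/CV^2 scheme.

    Assumptions: ADI = number of periods / number of non-zero periods, and
    CV^2 uses the population standard deviation of the non-zero sizes.
    Returns (category, adi, cv2).
    """
    sizes = [d for d in series if d > 0]          # demand sizes only
    if not sizes:
        raise ValueError("series contains no non-zero demand")
    adi = len(series) / len(sizes)                # interval information
    cv2 = (pstdev(sizes) / mean(sizes)) ** 2      # size information
    if adi < adi_cut:
        category = "smooth" if cv2 < cv2_cut else "erratic"
    else:
        category = "intermittent" if cv2 < cv2_cut else "lumpy"
    return category, adi, cv2


# Hypothetical quarterly demand for one part (not data from this study)
print(classify_demand([0, 0, 5, 0, 1, 0, 0, 12]))
```

The two cut-offs split the (ADI, CV²) plane into four quadrants, so the relationship between intervals and sizes is captured by which quadrant a part falls into.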

3.2. Croston Method
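Croston's method [22] splits an intermittent series into non-zero demand sizes and inter-demand intervals, smooths each with simple exponential smoothing only in periods where demand occurs, and forecasts the per-period demand as the ratio of the two. The sketch below assumes a single smoothing constant `alpha` for both components and initializes them with the first observed demand and its interval; other initializations are also common.

```python
def croston(series, alpha=0.1):
    """Croston forecasts: the value after period t is the forecast for t + 1.

    z: smoothed non-zero demand size, p: smoothed inter-demand interval.
    Both are updated only in periods with non-zero demand.
    """
    z = p = None
    q = 1                                # periods since the last demand, counting this one
    forecasts = []
    for d in series:
        if d > 0:
            if z is None:                # initialize on the first observed demand
                z, p = float(d), float(q)
            else:
                z += alpha * (d - z)
                p += alpha * (q - p)
            q = 1
        else:
            q += 1
        forecasts.append(z / p if z is not None else 0.0)
    return forecasts


print(croston([0, 3, 0, 0, 6], alpha=0.5))  # [0.0, 1.5, 1.5, 1.5, 1.8]
```

Because the forecast changes only when a demand occurs, it stays flat through runs of zero demand, which is the behavior that makes the method suitable for intermittent series.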

3.3. Auto-ARIMA

3.4. Simple Exponential Smoothing
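Simple exponential smoothing keeps a single level that is moved toward each new observation by a fraction `alpha` of the forecast error; the flat forecast for all future periods is the last level. The sketch below assumes the level is initialized with the first observation, which is one of several common choices.

```python
def ses(series, alpha=0.3, initial_level=None):
    """Simple exponential smoothing: l_t = l_{t-1} + alpha * (y_t - l_{t-1}).

    Returns (one-step-ahead fitted values, next-period forecast).
    Assumption: the level is initialized with the first observation.
    """
    level = series[0] if initial_level is None else initial_level
    fitted = []
    for y in series:
        fitted.append(level)                 # forecast made before observing y
        level = level + alpha * (y - level)  # update the level with the new error
    return fitted, level


fitted, next_forecast = ses([10, 12, 11], alpha=0.5)
print(fitted, next_forecast)  # [10, 10.0, 11.0] 11.0
```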

3.5. Artificial Neural Network Modeling

- Number of input neurons;

- Number of hidden neurons;

- Number of output neurons.
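These three choices fix the size of a fully connected feed-forward network. The sketch below assumes one hidden layer with sigmoid activations on both the hidden and output neurons (compatible with targets scaled into [0.1, 0.9]) and shows a forward pass together with the resulting number of trainable parameters. The weights are hypothetical placeholders, not trained values from this study.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass of an n_in-H-1 network with sigmoid activations."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


def n_parameters(n_in, n_hidden, n_out):
    """Weights plus biases of a single-hidden-layer network."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out


# Hypothetical 3-4-1 network: three inputs, four hidden neurons, one output
w_hidden = [[0.2, -0.1, 0.4], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.1], [0.1, 0.1, 0.1]]
b_hidden = [0.0, 0.1, -0.1, 0.05]
w_out, b_out = [0.6, -0.4, 0.3, 0.2], 0.0
print(forward([0.9, 0.1, 0.1], w_hidden, b_hidden, w_out, b_out))
print(n_parameters(3, 4, 1))  # 21
```

The parameter count grows with the number of hidden neurons, which is why that choice is usually tuned against the size of the training set.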

3.6. Recurrent Neural Network Modeling
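A recurrent network differs from the feed-forward network of Section 3.5 by feeding its hidden state back at every time step, so a sequence of past quarterly demands can be summarized in that state. The sketch below is a minimal Elman-style RNN forward pass, assuming tanh hidden units and a linear output; the function name and all weights are hypothetical.

```python
import math


def elman_forecast(series, w_x, w_h, b_h, w_y, b_y):
    """Run a univariate series through an Elman RNN and return the read-out.

    h_t = tanh(w_x * x_t + W_h h_{t-1} + b_h);  y = w_y . h_T + b_y
    w_x, b_h, w_y: lists of length H; w_h: H x H nested list.
    """
    h = [0.0] * len(w_x)                      # initial hidden state
    for x in series:
        # the comprehension reads the previous h before it is rebound
        h = [math.tanh(w_x[i] * x
                       + sum(w_h[i][j] * h[j] for j in range(len(h)))
                       + b_h[i])
             for i in range(len(h))]
    return sum(wy * hi for wy, hi in zip(w_y, h)) + b_y   # linear output


# Hypothetical 2-unit network applied to a normalized demand sequence
print(elman_forecast([0.1, 0.9, 0.1, 0.7],
                     w_x=[0.5, -0.3], w_h=[[0.2, 0.1], [-0.1, 0.3]],
                     b_h=[0.0, 0.1], w_y=[0.8, 0.4], b_y=0.1))
```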

3.7. Long Short-Term Memory Modeling
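The LSTM replaces the plain recurrent unit with a memory cell whose contents are controlled by input, forget, and output gates, which helps the network retain information across long gaps between demands. The sketch below is one step of a single-unit LSTM in its standard formulation; using scalar weights instead of matrices, and the parameter names in `p`, are hypothetical simplifications.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h, c, p):
    """One step of a single-unit LSTM cell; p maps names to scalar weights."""
    i = sigmoid(p["w_i"] * x + p["u_i"] * h + p["b_i"])    # input gate
    f = sigmoid(p["w_f"] * x + p["u_f"] * h + p["b_f"])    # forget gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h + p["b_o"])    # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h + p["b_g"])  # candidate cell value
    c_new = f * c + i * g                                  # keep part of old memory, add new
    h_new = o * math.tanh(c_new)
    return h_new, c_new


# Hypothetical parameters: every weight 0.5, every bias 0
params = {f"{k}_{gate}": (0.0 if k == "b" else 0.5)
          for k in ("w", "u", "b") for gate in "ifog"}
h, c = 0.0, 0.0
for demand in [0.1, 0.9, 0.1, 0.7]:     # normalized quarterly demand
    h, c = lstm_step(demand, h, c, params)
print(h, c)
```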

3.8. Calibration

3.9. Implementation Methodology

- Filtering the critical spare parts (CSP), i.e., those with the highest demand activity and a lumpy demand pattern, from the whole collection of aviation spare parts [58];

- Identifying the influential factors, i.e., the variables most strongly predictive of the outcome [41];

- Using the neural network model to forecast the unknown future demand (consumption) values.

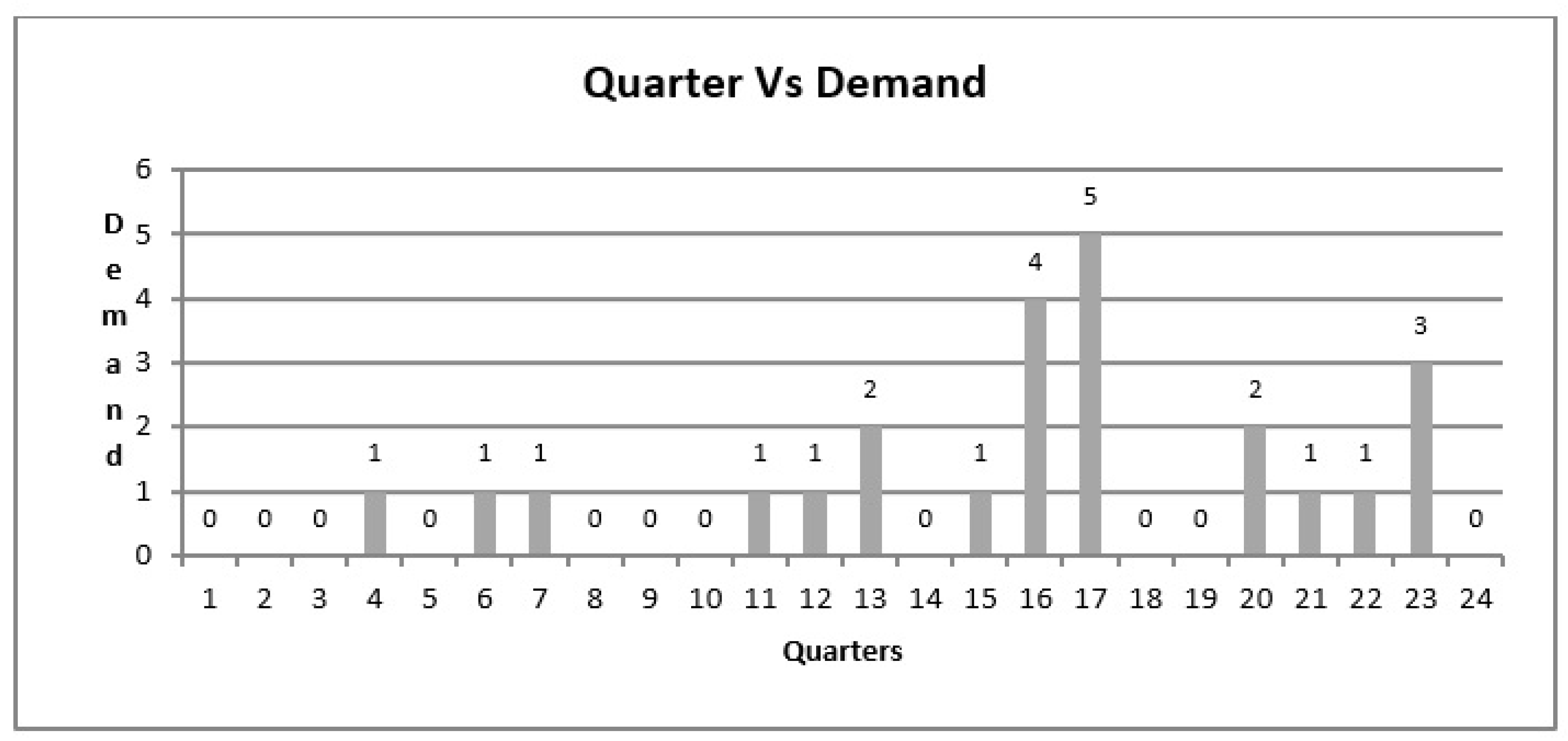

3.9.1. Lumpiness Factor Calculation

3.9.2. Calculation of Size Information

3.9.3. Calculation of Interval Information

3.9.4. Neural Network Training

- Training set (80%);

- Validation set (20% of the training set, a proportion chosen on the basis of the literature review);

- Testing set (20%).
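The proportions above can be applied as in the sketch below. It assumes the samples are split in time order, with the most recent observations held out, which is the usual practice in forecasting; the text does not state whether the data were shuffled, and `chronological_split` is a hypothetical helper. Under this scheme the final training set is 64% of the data.

```python
def chronological_split(samples, test_frac=0.2, val_frac=0.2):
    """Split time-ordered samples into train/validation/test without shuffling.

    test_frac is a share of all samples; val_frac is a share of the
    training portion, mirroring the 80/20 and 20%-of-training scheme above.
    """
    n_test = round(len(samples) * test_frac)
    train_full, test = samples[:len(samples) - n_test], samples[len(samples) - n_test:]
    n_val = round(len(train_full) * val_frac)
    train, val = train_full[:len(train_full) - n_val], train_full[len(train_full) - n_val:]
    return train, val, test


train, val, test = chronological_split(list(range(20)))
print(len(train), len(val), len(test))  # 13 3 4
```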

- Average of the difference between the demand and its mean, for each of the four quarters over three years;

- Average demand for each of the four quarters over three years;

- Average inter-demand interval for each of the four quarters over three years.

4. Results and Discussion

4.1. Comparison with Other State-of-the-Art Models

4.2. Implications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ghobbar, A.A.; Friend, C.H. Evaluation of forecasting methods for intermittent parts demand in the field of aviation: A predictive model. Comput. Oper. Res. 2003, 30, 2097–2114.

2. Nasiri Pour, A.; Rostami-Tabar, B.; Rahimzadeh, A. A hybrid neural network and traditional approach for forecasting lumpy demand. Proc. World Acad. Sci. Eng. Technol. 2008, 2, 1028–1034.

3. Amin-Naseri, M.R.; Tabar, B.R. Neural network approach to lumpy demand forecasting for spare parts in process industries. In Proceedings of the 2008 International Conference on Computer and Communication Engineering, Kuala Lumpur, Malaysia, 13–15 May 2008; pp. 1378–1382.

4. Hua, Z.; Zhang, B.; Yang, J.; Tan, D. A new approach of forecasting intermittent demand for spare parts inventories in the process industries. J. Oper. Res. Soc. 2007, 58, 52–61.

5. Wang, W.; Syntetos, A.A. Spare parts demand: Linking forecasting to equipment maintenance. Transp. Res. Part E Logist. Transp. Rev. 2011, 47, 1194–1209.

6. Wang, L.; Zeng, Y.; Chen, T. Back propagation neural network with adaptive differential evolution algorithm for time series forecasting. Expert Syst. Appl. 2015, 42, 855–863.

7. Zhang, G.; Patuwo, B.E.; Hu, M.Y. Forecasting with artificial neural networks: The state of the art. Int. J. Forecast. 1998, 14, 35–62.

8. Syntetos, A.A.; Boylan, J.E. The accuracy of intermittent demand estimates. Int. J. Forecast. 2005, 21, 303–314.

9. Vaitkus, V.; Zylius, G.; Maskeliunas, R. Electrical spare parts demand forecasting. Elektron. Ir Elektrotechnika 2014, 20, 7–10.

10. Bacchetti, A.; Saccani, N. Spare parts classification and demand forecasting for stock control: Investigating the gap between research and practice. Omega 2012, 40, 722–737.

11. Costantino, F.; Di Gravio, G.; Patriarca, R.; Petrella, L. Spare parts management for irregular demand items. Omega 2018, 81, 57–66.

12. Gamberini, R.; Lolli, F.; Rimini, B.; Sgarbossa, F. Forecasting of sporadic demand patterns with seasonality and trend components: An empirical comparison between Holt-Winters and (S) ARIMA methods. Math. Probl. Eng. 2010, 2010, 579010.

13. Pai, P.F.; Lin, K.P.; Lin, C.S.; Chang, P.T. Time series forecasting by a seasonal support vector regression model. Expert Syst. Appl. 2010, 37, 4261–4265.

14. Fu, W.; Chien, C.F.; Lin, Z.H. A hybrid forecasting framework with neural network and time-series method for intermittent demand in semiconductor supply chain. In Proceedings of the IFIP International Conference on Advances in Production Management Systems, Seoul, Republic of Korea, 26–30 August 2018; pp. 65–72.

15. Nikolopoulos, K. We need to talk about intermittent demand forecasting. Eur. J. Oper. Res. 2021, 291, 549–559.

16. Rosienkiewicz, M. Artificial intelligence methods in spare parts demand forecasting. Logist. Transp. 2013, 18, 41–50.

17. Hua, Z.; Zhang, B. A hybrid support vector machines and logistic regression approach for forecasting intermittent demand of spare parts. Appl. Math. Comput. 2006, 181, 1035–1048.

18. Rustam, F.; Siddique, M.A.; Siddiqui, H.U.R.; Ullah, S.; Mehmood, A.; Ashraf, I.; Choi, G.S. Wireless capsule endoscopy bleeding images classification using CNN based model. IEEE Access 2021, 9, 33675–33688.

19. Siddiqui, H.U.R.; Shahzad, H.F.; Saleem, A.A.; Khan Khakwani, A.B.; Rustam, F.; Lee, E.; Ashraf, I.; Dudley, S. Respiration Based Non-Invasive Approach for Emotion Recognition Using Impulse Radio Ultra Wide Band Radar and Machine Learning. Sensors 2021, 21, 8336.

20. Shafi, I.; Hussain, I.; Ahmad, J.; Kim, P.W.; Choi, G.S.; Ashraf, I.; Din, S. License plate identification and recognition in a non-standard environment using neural pattern matching. Complex Intell. Syst. 2022, 8, 3627–3639.

21. Gutierrez, R.S.; Solis, A.O.; Mukhopadhyay, S. Lumpy demand forecasting using neural networks. Int. J. Prod. Econ. 2008, 111, 409–420.

22. Croston, J.D. Forecasting and stock control for intermittent demands. J. Oper. Res. Soc. 1972, 23, 289–303.

23. Willemain, T.R.; Smart, C.N.; Shockor, J.H.; DeSautels, P.A. Forecasting intermittent demand in manufacturing: A comparative evaluation of Croston’s method. Int. J. Forecast. 1994, 10, 529–538.

24. Syntetos, A.A.; Boylan, J.E. On the bias of intermittent demand estimates. Int. J. Prod. Econ. 2001, 71, 457–466.

25. Willemain, T.R.; Smart, C.N.; Schwarz, H.F. A new approach to forecasting intermittent demand for service parts inventories. Int. J. Forecast. 2004, 20, 375–387.

26. Levén, E.; Segerstedt, A. Inventory control with a modified Croston procedure and Erlang distribution. Int. J. Prod. Econ. 2004, 90, 361–367.

27. Fu, W.; Chien, C.F. UNISON data-driven intermittent demand forecast framework to empower supply chain resilience and an empirical study in electronics distribution. Comput. Ind. Eng. 2019, 135, 940–949.

28. Lee, K.; Kang, D.; Choi, H.; Park, B.; Cho, M.; Kim, D. Intermittent demand forecasting with a recurrent neural network model using IoT data. Int. J. Control Autom. 2018, 11, 153–168.

29. Doszyń, M. New forecasting technique for intermittent demand, based on stochastic simulation. An alternative to Croston’s method. Acta Univ. Lodz. Folia Oeconomica 2018, 5, 41–55.

30. Yilmaz, T.E.; Yapar, G.; Yavuz, İ. Comparison of Ata method and Croston based methods on forecasting of intermittent demand. Mugla J. Sci. Technol. 2019, 5, 49–55.

31. Babai, M.Z.; Dallery, Y.; Boubaker, S.; Kalai, R. A new method to forecast intermittent demand in the presence of inventory obsolescence. Int. J. Prod. Econ. 2019, 209, 30–41.

32. Aggarwal, A.; Rani, A.; Sharma, P.; Kumar, M.; Shankar, A.; Alazab, M. Prediction of landsliding using univariate forecasting models. Internet Technol. Lett. 2022, 5, e209.

33. Huifeng, W.; Shankar, A.; Vivekananda, G. Modelling and simulation of sprinters’ health promotion strategy based on sports biomechanics. Connect. Sci. 2021, 33, 1028–1046.

34. Liu, P. Intermittent demand forecasting for medical consumables with short life cycle using a dynamic neural network during the COVID-19 epidemic. Health Inform. J. 2020, 26, 3106–3122.

35. Kourentzes, N. Intermittent demand forecasts with neural networks. Int. J. Prod. Econ. 2013, 143, 198–206.

36. Lolli, F.; Gamberini, R.; Regattieri, A.; Balugani, E.; Gatos, T.; Gucci, S. Single-hidden layer neural networks for forecasting intermittent demand. Int. J. Prod. Econ. 2017, 183, 116–128.

37. Babai, M.Z.; Tsadiras, A.; Papadopoulos, C. On the empirical performance of some new neural network methods for forecasting intermittent demand. IMA J. Manag. Math. 2020, 31, 281–305.

38. Tian, X.; Wang, H.; Erjiang, E. Forecasting intermittent demand for inventory management by retailers: A new approach. J. Retail. Consum. Serv. 2021, 62, 102662.

39. Jiang, P.; Huang, Y.; Liu, X. Intermittent demand forecasting for spare parts in the heavy-duty vehicle industry: A support vector machine model. Int. J. Prod. Res. 2021, 59, 7423–7440.

40. Pennings, C.L.; Van Dalen, J.; van der Laan, E.A. Exploiting elapsed time for managing intermittent demand for spare parts. Eur. J. Oper. Res. 2017, 258, 958–969.

41. Regattieri, A.; Gamberi, M.; Gamberini, R.; Manzini, R. Managing lumpy demand for aircraft spare parts. J. Air Transp. Manag. 2005, 11, 426–431.

42. Abbasimehr, H.; Shabani, M.; Yousefi, M. An optimized model using LSTM network for demand forecasting. Comput. Ind. Eng. 2020, 143, 106435.

43. Dudek, G.; Pełka, P.; Smyl, S. A hybrid residual dilated LSTM and exponential smoothing model for midterm electric load forecasting. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 2879–2891.

44. Syntetos, A.A. A note on managing lumpy demand for aircraft spare parts. J. Air Transp. Manag. 2007, 13, 166–167.

45. Li, S.; Kuo, X. The inventory management system for automobile spare parts in a central warehouse. Expert Syst. Appl. 2008, 34, 1144–1153.

46. Teunter, R.; Sani, B. On the bias of Croston’s forecasting method. Eur. J. Oper. Res. 2009, 194, 177–183.

47. Solis, A.; Longo, F.; Mukhopadhyay, S.; Nicoletti, L.; Brasacchio, V. Approximate and exact corrections of the bias in Croston’s method when forecasting lumpy demand: Empirical evaluation. In Proceedings of the 13th International Conference on Modeling and Applied Simulation, MAS, Bordeaux, France, 10–12 September 2014; p. 205.

48. Yermal, L.; Balasubramanian, P. Application of auto ARIMA model for forecasting returns on minute wise amalgamated data in NSE. In Proceedings of the 2017 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), Coimbatore, TN, India, 14–16 December 2017; pp. 1–5.

49. Ostertagová, E.; Ostertag, O. The simple exponential smoothing model. In Proceedings of the 4th International Conference on Modelling of Mechanical and Mechatronic Systems, Herľany, Slovak Republic, 20–22 September 2011; Technical University of Košice: Košice, Slovak Republic, 2011; pp. 380–384.

50. Ostertagova, E.; Ostertag, O. Forecasting using simple exponential smoothing method. Acta Electrotech. Et Inform. 2012, 12, 62.

51. Truong, N.K.V.; Sangmun, S.; Nha, V.T.; Ichon, F. Intermittent Demand forecasting by using Neural Network with simulated data. In Proceedings of the 2011 International Conference on Industrial Engineering and Operations Management, Kuala Lumpur, Malaysia, 22–24 January 2011.

52. Kozik, P.; Sęp, J. Aircraft engine overhaul demand forecasting using ANN. Manag. Prod. Eng. Rev. 2012, 3, 21–26.

53. Huang, Y.; Sun, D.; Xing, G.; Chang, H. Criticality evaluation for spare parts based on BP neural network. In Proceedings of the 2010 International Conference on Artificial Intelligence and Computational Intelligence, Sanya, China, 23–24 October 2010; Volume 1, pp. 204–206.

54. Mitrea, C.; Lee, C.; Wu, Z. A comparison between neural networks and traditional forecasting methods: A case study. Int. J. Eng. Bus. Manag. 2009, 1, 11.

55. Hu, Y.; Liu, Y.; Wang, Z.; Wen, J.; Li, J.; Lu, J. A two-stage dynamic capacity planning approach for agricultural machinery maintenance service with demand uncertainty. Biosyst. Eng. 2020, 190, 201–217.

56. Tseng, F.M.; Yu, H.C.; Tzeng, G.H. Combining neural network model with seasonal time series ARIMA model. Technol. Forecast. Soc. Chang. 2002, 69, 71–87.

57. Chen, F.L.; Chen, Y.C. An investigation of forecasting critical spare parts requirement. In Proceedings of the 2009 WRI World Congress on Computer Science and Information Engineering, Los Angeles, CA, USA, 31 March–2 April 2009; Volume 4, pp. 225–230.

58. Ren, J.; Xiao, M.; Zhou, Z.; Zhang, F. Based on improved BP neural network to forecast demand for spare parts. In Proceedings of the 2009 Fifth International Joint Conference on INC, IMS and IDC, Seoul, Republic of Korea, 25–27 August 2009; pp. 1811–1814.

59. Ying, Z.; Hanbin, X. Study on the model of demand forecasting based on artificial neural network. In Proceedings of the 2010 Ninth International Symposium on Distributed Computing and Applications to Business, Engineering and Science, Hong Kong, China, 10–12 August 2010; pp. 382–386.

60. Tofallis, C. A better measure of relative prediction accuracy for model selection and model estimation. J. Oper. Res. Soc. 2015, 66, 1352–1362.

61. Hyndman, R.J.; Koehler, A.B. Another look at measures of forecast accuracy. Int. J. Forecast. 2006, 22, 679–688.

62. Kim, S.; Kim, H. A new metric of absolute percentage error for intermittent demand forecasts. Int. J. Forecast. 2016, 32, 669–679.

63. Makridakis, S. Accuracy measures: Theoretical and practical concerns. Int. J. Forecast. 1993, 9, 527–529.

64. Qu, S.; Sun, Z.; Fan, H.; Li, K. BP neural network for the prediction of urban building energy consumption based on Matlab and its application. In Proceedings of the 2010 Second International Conference on Computer Modeling and Simulation, Darmstadt, Germany, 15–18 November 2010; Volume 2, pp. 263–267.

65. Muhaimin, A.; Prastyo, D.D.; Lu, H.H.S. Forecasting with recurrent neural network in intermittent demand data. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; pp. 802–809.

66. Hewamalage, H.; Bergmeir, C.; Bandara, K. Recurrent neural networks for time series forecasting: Current status and future directions. Int. J. Forecast. 2021, 37, 388–427.

67. Kiefer, D.; Grimm, F.; Bauer, M.; Van Dinther, C. Demand forecasting intermittent and lumpy time series: Comparing statistical, machine learning and deep learning methods. In Proceedings of the 54th Annual Hawaii International Conference on System Sciences, Kauai, HI, USA, 5–8 January 2021.

68. Song, H.; Zhang, C.; Liu, G.; Zhao, W. Equipment spare parts demand forecasting model based on grey neural network. In Proceedings of the 2012 International Conference on Quality, Reliability, Risk, Maintenance, and Safety Engineering, Chengdu, China, 15–18 June 2012; pp. 1274–1277.

| Series Type | Ref. | Application | Forecasting Method | MAE | sMAPE | RMSE | MAD | MASE |
|---|---|---|---|---|---|---|---|---|
| Intermittent | 2021 [38] | Retail industry | SBA | × | × | 0.632 | × | × |
| | | | SES | × | × | 0.617 | × | × |
| | | | Croston | × | × | 0.630 | × | × |
| | | | Markov-combined method | 0.328 | 0.406 | 0.576 | × | × |
| | 2019 [27] | Electronics distribution | ARIMA | 12.39 | × | 16.90 | × | 1.108 |
| | | | Croston | 13.24 | × | 17.31 | × | 1.197 |
| | | | SVM | 10.11 | × | 16.72 | × | 0.901 |
| | | | RNN | 9.29 | × | 16.61 | × | 0.792 |
| | | | UNISON data-driven | 9.19 | × | 15.13 | × | 0.768 |
| | 2021 [39] | Vehicle industry | SVM | × | × | × | × | 0.830 |
| | | | ANN | × | × | × | × | 0.954 |
| | | | RNN | × | × | × | × | 0.999 |
| | 2017 [40] | Naval industry | SES | × | × | × | × | 1.796 |
| | | | SBA | × | × | × | × | 1.678 |
| | | | TSB | × | × | × | × | 1.700 |
| | | | Bootstrap | × | × | × | × | 1.981 |
| Lumpy | 2021 [39] | Vehicle industry | SVM | × | × | × | × | 0.821 |
| | | | ANN | × | × | × | × | 1.042 |
| | | | RNN | × | × | × | × | 1.069 |
| | 2005 [41] | Space industry | SES | × | × | × | 9.26 | × |
| | | | Croston | × | × | × | 8.77 | × |
| | 2008 [21] | Electronics | SBA | × | 138.36 | × | × | × |
| | | | NN | × | 128.20 | × | × | × |
| Smooth | 2021 [39] | Vehicle industry | SVM | × | × | × | × | 0.877 |
| | | | ANN | × | × | × | × | 0.930 |
| | | | RNN | × | × | × | × | 0.987 |
| | 2020 [42] | Furniture industry | ARIMA | × | 0.1616 | 3531 | × | × |
| | | | KNN | × | 0.1374 | 2913 | × | × |
| | | | RNN | × | 0.1333 | 2788 | × | × |
| | | | ANN | × | 0.1011 | 2115 | × | × |
| | | | SVM | × | 0.1101 | 1967 | × | × |
| | | | LSTM | × | 0.1072 | 2267 | × | × |
| | | | Dilated LSTM | × | 0.1023 | 2172 | × | × |
| | 2021 [43] | Electric power | ANN | × | 5.27 | 378 | × | × |
| | | | ARIMA | × | 5.65 | 463 | × | × |
| | | | GRNN | × | 5.01 | 350 | × | × |
| | | | LSTM | × | 6.11 | 431 | × | × |
| | | | ETS+RD-LSTM | × | 4.46 | 351 | × | × |
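The studies in this table report different error measures. The sketch below gives common textbook definitions of MAE, RMSE, sMAPE, and MASE, where MASE is scaled by the in-sample MAE of the one-step naive forecast, following Hyndman and Koehler. The cited studies may use variants (for instance, sMAPE reported as a fraction rather than a percentage), so these functions are illustrative and not necessarily the exact formulas behind each row. The forecast and history series in the usage example are hypothetical; only the four actual values come from the testing data of this study.

```python
import math


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root mean squared error."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def smape(actual, forecast):
    """Symmetric MAPE in percent; periods where actual and forecast are both 0 are skipped."""
    terms = [2 * abs(f - a) / (abs(a) + abs(f))
             for a, f in zip(actual, forecast) if abs(a) + abs(f) > 0]
    return 100 * sum(terms) / len(terms)


def mase(actual, forecast, train):
    """MAE scaled by the in-sample MAE of the one-step naive forecast on `train`."""
    naive_mae = sum(abs(b - a) for a, b in zip(train, train[1:])) / (len(train) - 1)
    return mae(actual, forecast) / naive_mae


# Hypothetical flat forecast against the four test quarters of this study
actual, forecast, history = [3, 2, 1, 3], [2, 2, 2, 2], [1, 3, 2, 4]
print(mae(actual, forecast), rmse(actual, forecast),
      smape(actual, forecast), mase(actual, forecast, history))
```

A MASE below 1 means the model beats the in-sample naive forecast on average, which makes it easier to compare across series with different demand levels than MAE or RMSE.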

| Part No. | Nomenclature | Quantity Demanded | Lumpiness Factor | Demand Categorization |
|---|---|---|---|---|
| 246-3904-000 | Tail rotor hub | 48 | 1.230240223 | Lumpy |
| 246-3925-00 | Tail rotor blade | 44 | 1.391716506 | Lumpy |
| LOPR-15875-2300-1 | Tail rotor chain | 41 | 1.543305508 | Lumpy |
| KAY-115AM | Hydraulic booster | 32 | 1.404591553 | Erratic |
| HP-3BM | Fuel control unit | 31 | 1.17969417 | Lumpy |
| TB3-117BM | Engine assembly | 30 | 2.228030758 | Lumpy |
| AK50T-1 | Air compressor | 27 | 1.404358296 | Lumpy |
| 8AT-1250-00-02 | Vibration damper assembly | 24 | 1.334541546 | Lumpy |
| 8AT-2710-000 | Main rotor blade set of 5 | 24 | 1.723303051 | Lumpy |
| 8-1930-00 | Main rotor hub | 22 | 1.423085356 | Lumpy |
| Grand Total | | 323 * | | |
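The selection of critical spare parts described in Section 3.9 can be reproduced from this table. The sketch below encodes the ten parts with their demand quantities and categories as listed, checks the grand total of 323, and keeps only the parts classified as lumpy, ranked by quantity demanded; the tuple layout is an illustrative choice.

```python
# (part number, nomenclature, quantity demanded, demand category) from the table
parts = [
    ("246-3904-000", "Tail rotor hub", 48, "Lumpy"),
    ("246-3925-00", "Tail rotor blade", 44, "Lumpy"),
    ("LOPR-15875-2300-1", "Tail rotor chain", 41, "Lumpy"),
    ("KAY-115AM", "Hydraulic booster", 32, "Erratic"),
    ("HP-3BM", "Fuel control unit", 31, "Lumpy"),
    ("TB3-117BM", "Engine assembly", 30, "Lumpy"),
    ("AK50T-1", "Air compressor", 27, "Lumpy"),
    ("8AT-1250-00-02", "Vibration damper assembly", 24, "Lumpy"),
    ("8AT-2710-000", "Main rotor blade set of 5", 24, "Lumpy"),
    ("8-1930-00", "Main rotor hub", 22, "Lumpy"),
]

total = sum(qty for _, _, qty, _ in parts)
assert total == 323  # matches the grand total in the table

# Keep lumpy parts only, highest demand first (stable sort keeps ties in table order)
lumpy = sorted((p for p in parts if p[3] == "Lumpy"), key=lambda p: p[2], reverse=True)
print(len(lumpy), sum(p[2] for p in lumpy))  # 9 291
for part_no, name, qty, _ in lumpy[:3]:
    print(part_no, name, qty)
```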

Testing Dataset Values

| Quarter | Input 1 | Input 2 | Input 3 | Actual Output |
|---|---|---|---|---|
| 1 | 2.8464 | 1 | 0.333333 | 3 |
| 2 | 1.833066667 | 3 | 1.666667 | 2 |
| 3 | 2.8464 | 1 | 0.333333 | 1 |
| 4 | 0.393066667 | 2.3333333 | 1.333333 | 3 |
| Actual total | | | | 9 |

Normalized Dataset Values

| Quarter | Input 1 | Input 2 | Input 3 |
|---|---|---|---|
| 1 | 0.9 | 0.1 | 0.1 |
| 2 | 0.569565217 | 0.9 | 0.9 |
| 3 | 0.9 | 0.1 | 0.1 |
| 4 | 0.1 | 0.6333333 | 0.7 |
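The normalized values in these tables are consistent with column-wise min-max scaling of each input onto the interval [0.1, 0.9], as the sketch below reproduces; this formula is inferred from the numbers rather than stated in the text. Keeping the scaled values away from 0 and 1 is a common choice when a sigmoid output neuron is used, since a sigmoid reaches its extremes only asymptotically. The helper names are hypothetical.

```python
def minmax_scale(column, lo=0.1, hi=0.9):
    """Map a column linearly so its minimum becomes lo and its maximum hi."""
    cmin, cmax = min(column), max(column)
    return [lo + (hi - lo) * (v - cmin) / (cmax - cmin) for v in column]


def minmax_inverse(scaled, cmin, cmax, lo=0.1, hi=0.9):
    """Undo minmax_scale given the original column minimum and maximum."""
    return [cmin + (s - lo) * (cmax - cmin) / (hi - lo) for s in scaled]


# Testing-set inputs for quarters 1-4, copied from the table
inputs = {
    "input_1": [2.8464, 1.833066667, 2.8464, 0.393066667],
    "input_2": [1, 3, 1, 2.3333333],
    "input_3": [0.333333, 1.666667, 0.333333, 1.333333],
}
normalized = {name: minmax_scale(col) for name, col in inputs.items()}
for name, col in normalized.items():
    print(name, [round(v, 6) for v in col])
```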

Forecasted Dataset Values

| Quarter | 1 | 2 | 3 | 4 | Forecasted Total |
|---|---|---|---|---|---|
| Normalized | 0.497869174 | 0.752308738 | 0.497869174 | 0.427485871 | |
| Descaled | 1.790411283 | 2.93538932 | 1.790411283 | 1.473686419 | 7.989898306 |
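The forecasted total in this table equals the sum of the four descaled quarterly forecasts, and the actual total of 9 equals the sum of the actual quarterly outputs in the testing data. The short computation below only re-derives these totals from numbers already in the tables and reports the resulting annual error.

```python
descaled = [1.790411283, 2.93538932, 1.790411283, 1.473686419]  # quarters 1-4
actual = [3, 2, 1, 3]                                           # testing-set outputs

forecast_total = sum(descaled)
actual_total = sum(actual)
abs_error = abs(actual_total - forecast_total)
print(f"forecast {forecast_total:.4f}, actual {actual_total}, "
      f"absolute error {abs_error:.4f}, relative error {abs_error / actual_total:.1%}")
# forecast 7.9899, actual 9, absolute error 1.0101, relative error 11.2%
```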

| Metric | RNN | Simple ANN | Croston | SES | ANN with Levenberg-Marquardt Training Algorithm | SVM | Adaptive Univariate SVM [39] | Proposed |
|---|---|---|---|---|---|---|---|---|
| MAE | 0.6 | 0.4 | 0.7 | 0.9 | 0.36 | 0.52 | 0.43 | 0.11 |

| S. No. | Model | MASE |
|---|---|---|
| 1 | Croston | 2.18 |
| 2 | Holt-Winters | 1.07 |
| 3 | Auto-ARIMA | 1.04 |
| 4 | Random Forest | 1.15 |
| 5 | XGBoost | 1.10 |
| 6 | Auto-SVR | 1.06 |
| 7 | MLP | 1.68 |
| 8 | LSTM | 0.96 |
| 9 | ANN | 0.821 |
| 10 | SVM | 1.042 |
| 11 | Adaptive univariate SVM [39] | 1.069 |
| 12 | Proposed approach | 0.613 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shafi, I.; Sohail, A.; Ahmad, J.; Espinosa, J.C.M.; López, L.A.D.; Thompson, E.B.; Ashraf, I. Spare Parts Forecasting and Lumpiness Classification Using Neural Network Model and Its Impact on Aviation Safety. Appl. Sci. 2023, 13, 5475. https://doi.org/10.3390/app13095475
