1. Introduction

Massive open online courses (MOOCs) have become popular recently. Learners can select MOOCs from different platforms based on their learning interests [1,2]. According to a report from Class Central, by December 2021, the number of MOOC learners reached 220 million, and the number of courses reached 19,400 [3].

Since 2011, Udacity, edX, and Coursera have become well-known international MOOC platforms. On the one hand, the number of courses continues to increase, making it difficult for learners to find suitable courses [4,5]. Some high-quality course resources are also difficult for target learners to find. On the other hand, the massive learning data generated by MOOC platforms contain rich learning information. Therefore, it is necessary to dig deeply into the characteristics of learners and courses so that the learning characteristics and learning experiences of MOOC learners can be incorporated into improved and personalized course recommendations.

Currently, MOOC recommendation algorithms usually focus on mining learners’ course ratings. However, learners’ course choices are also based on their personal interests, preferences, knowledge domains, and learning capabilities. For example, knowledge graph-enhanced recommendation algorithms were proposed to estimate learners’ potential interests automatically and iteratively [6]. In fact, learners’ interests, preferences, knowledge domains, and capabilities change dynamically over time [7,8]. Notably, owing to the large scale of data in the MOOC context, runtime efficiency is low when a collaborative filtering algorithm is used to measure learners’ similarities directly. Therefore, based on multidimensional item response theory in psychometrics and the Ebbinghaus forgetting curve, this study proposes a hybrid recommendation algorithm for MOOCs that integrates clustering and collaborative filtering. The algorithm mines learners’ preferences based on course ratings, course attributes, and multidimensional capabilities by integrating memory weights.

Coursera, an internationally renowned MOOC platform, was selected to verify the effectiveness of the proposed recommendation algorithm. On the theoretical side, this study can enrich and develop personalized recommendations in the research field of MOOCs. In practice, it can promote the effective knowledge dissemination of high-quality course resources to improve learners’ learning experiences in the MOOC context.

2. Literature Review on the Personalized Recommendation in the MOOC Context

Owing to developments in information technology, MOOCs have flourished since 2008. Especially during the COVID-19 pandemic, MOOC platforms provided learners with stable learning resources and environments. Moreover, owing to the expanding scale of MOOC platforms and learners, personalized recommendations for MOOCs have become a research focus recently [9]. The current mainstream recommendation algorithms can be summarized as content-based, collaborative filtering-based, machine learning model-based, and hybrid model-based.

2.1. Content-Based Recommendation Algorithms

Content-based recommendation algorithms primarily center on information screening and filtering mechanisms. They identify direct correlations between items or content and, based on user preferences and historical behavior records, recommend relevant items or content to users.

Content-based personalized recommendations on MOOC platforms are based on learners’ course preferences embedded in both the relevant content searched and course learning records. Courses with high relevance are then recommended to matched learners. An advantage of this recommendation algorithm is its easy implementation: it only requires a learner’s course information, learning records, and search content. Content-based MOOC recommendation algorithms have been shown to achieve a higher accuracy rate than random recommendations. Notably, the richer the course information, the more accurate the recommendations [10,11].

However, content-based recommendation algorithms have some obvious disadvantages. Recommendations based on learners’ historical behaviors are likely to be too consistent, resulting in a high degree of similarity between the recommended courses and the courses that learners have already studied. Consequently, it is difficult for learners to explore new interests through content-based recommendation.

2.2. Recommendation Algorithm Based on Collaborative Filtering

Collaborative filtering is another widely used method. It is mainly based on the assumption that similar things or people fit well together. Correspondingly, learners who like the same items are more likely to have the same interests and preferences in general [12,13]. At present, collaborative filtering algorithms are mainly of two types, namely, user- and item-based.

User-based collaborative filtering has been applied to MOOCs’ personalized recommendations to determine target learners’ preferences based on their course ratings to group together learners that are similar to some extent (hereafter referred to as similar learner groups). On this basis, personalized recommendations to target learners are provided according to the courses preferred by similar learner groups.

Item-based collaborative filtering (Item-CF) has been applied to personalized recommendations to find similar courses by modeling courses and calculating course similarities to recommend similar courses for learners. Thus, the recommendation algorithm based on collaborative filtering is easy to understand and implement. Personalized recommendation research in the MOOC context has shown that an adaptive recommendation scheme based on collaborative filtering and time series can improve the accuracy of personalized recommendations [14].

However, the essence of the collaborative filtering algorithm for MOOCs is to make recommendations based on learners’ historical data, leading to certain unavoidable disadvantages. For newly registered MOOC learners and newly started courses, there may be a cold-start problem, resulting in sparse data, thus affecting recommendation outcomes.

2.3. Recommendation Algorithms Based on Machine Learning Models

With the development of big data technology, recommendation algorithms based on machine learning models have been created to predict and rank learners’ interests and preferences. Common machine learning models include matrix decomposition, topic, clustering, and neural network models. Although the training time of recommendation algorithms based on machine learning models is longer, they have obvious advantages in feature extraction. The recommendation results are more accurate, especially when based on a deep learning model. Data can be accurately represented in a high-level form.

Recommendation algorithms based on machine learning models have been applied to personalized recommendation for MOOCs. For example, a deep learning personalized recommendation algorithm for MOOCs based on multiple attention mechanisms was proposed by analyzing learning behaviors [15]. A personalized recommendation algorithm for MOOCs based on deep belief networks was proposed for function approximation, feature extraction, and prediction classification [16]. A personalized recommendation algorithm for MOOCs based on Apriori clustering was proposed to reveal course association rules [17].

Although recommendation algorithms based on machine learning models have gained increasing attention owing to their high accuracy rate, they also have some disadvantages. First, because the recommendation process resembles a black box, the interpretability of the recommendation results is weak. Second, to obtain good recommendation results, manual intervention is required to screen the data attributes and to adjust and correct the parameters, which requires more time and resources.

2.4. Recommendation Algorithms Based on Hybrid Models

In practical applications, it is usually rare to directly use one algorithm alone for a recommendation because each of the aforementioned recommendation algorithms has certain shortcomings. Therefore, many personalized recommendation systems use a combination of different algorithms to obtain a more accurate recommendation performance.

Given the characteristics of MOOC platforms, a resource recommendation model constructed by integrating a deep belief network with the contrastive divergence algorithm achieved higher accuracy [18]. A matrix decomposition model of a multi-social network framework based on a joint probability distribution helps improve runtime efficiency [19]. Furthermore, a collaborative filtering algorithm based on a bipartite graph context can effectively solve the cold-start problem in collaborative filtering [20]. In addition, both the hybrid recommendation model integrating collaborative filtering and deep learning algorithms [21] and the personalized recommendation method based on deep learning and multi-view fusion [22] have achieved better recommendation results.

Consequently, recommendation algorithms based on hybrid models, which combine the advantages of multiple algorithms, have gradually become popular in the MOOC context.

2.5. Comments on Current Research

Collaborative filtering algorithms are still widely popular owing to their simplicity and efficiency. However, the problems of data sparsity and cold-start must be solved. Currently, hybrid recommendations are the mainstream recommendation algorithms. For example, in the movie dataset scenario, clustering users based on their personal attributes or their rating matrix [23,24] and then applying collaborative filtering helps improve the accuracy of collaborative filtering algorithms.

In the MOOC context, learners often choose courses according to the information provided by the platform. Accordingly, course information reflects learners’ interests in and preferences for a course to a certain extent. Although current recommendation algorithms for MOOCs focus on course ratings, they do not sufficiently consider learners’ knowledge domains and learning levels. Thus, this study expanded course rating data to learners’ course rating preferences, course attribute preferences, and multidimensional capabilities matched with course traits.

To reiterate, learners’ preferences and capabilities change dynamically over time in the MOOC context. Although some algorithms make recommendations based on learners’ learning sequences [14], current recommendation algorithms seldom consider dynamic changes in these attributes. Moreover, the runtime efficiency of the collaborative filtering algorithm is not high when learners’ similarities are measured directly in the MOOC context.

Therefore, this study proposes a hybrid recommendation algorithm for MOOCs by integrating clustering and collaborative filtering based on multidimensional item response theory (MIRT) and the Ebbinghaus forgetting curve. MIRT can be viewed as an extension of IRT for better interpreting the interaction between item characteristics and individual behaviors due to the focus on multidimensional traits [25]. By integrating memory weight into learners’ course rating preferences, course attribute preferences, and multidimensional capabilities that match course traits, the proposed recommendation algorithm aims to solve the data sparsity and singularity problems in the collaborative filtering algorithm.

3. Methods

Based on the general architecture of a personalized recommendation system, the hybrid recommendation model framework proposed in this study is presented in Figure 1.

3.1. Input Module

Data on the learners’ course ratings, course attribute preferences, and multidimensional capabilities were obtained from expanding course rating data. Then, based on multidimensional item response theory in psychometrics, the learners’ multidimensional capabilities that match course traits were acquired. Subsequently, the Ebbinghaus forgetting curve that incorporates memory weights was introduced in Step 1 to capture the dynamic changes in learners’ course ratings, course attribute preferences, and multidimensional capabilities. This summarizes the data preparation.

3.1.1. Learners’ Course Ratings with Memory Weight

The Ebbinghaus forgetting curve represents the capacity to forget and remember as a time-dependent function [26]. The rate at which people forget decreases until it becomes stable, as shown in Equation (1). Equation (1) expresses the memory retention ratio in terms of the initial value of memory, the interval between the initial memory time and the current time, and two control constants. According to repeated experiments, the amount of memory retained follows a logarithmic curve, with the control constants set to empirically fitted values [23].

Therefore, the memory-related characteristics of MOOC learners should be incorporated into the model [27]. According to the timeframes of learners’ course ratings, the memory retention ratio of a course should be incorporated as a factor in a learner’s course ratings.

First, a two-dimensional matrix of course ratings is constructed, in which each row corresponds to a MOOC learner, each column corresponds to a MOOC course, and each element represents the rating that the learner in that row gave to the course in that column. Then, the memory retention ratio in Equation (1) is introduced to construct the dynamic course rating preferences, as in Equation (2).
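The memory weighting step can be sketched in a few lines of Python. The sketch assumes the commonly cited logarithmic fit of the Ebbinghaus curve, retention = k / ((log10 t)^c + k) with t in minutes, k = 1.84, and c = 1.25; this functional form, the constants, and the names `retention_ratio` and `memory_weighted_ratings` are assumptions made for illustration and may differ from Equations (1) and (2).

```python
import math

# Assumed constants of the commonly cited Ebbinghaus fit (not taken from this paper).
K = 1.84
C = 1.25


def retention_ratio(minutes_elapsed: float) -> float:
    """Return the assumed memory retention ratio in (0, 1] after a delay."""
    # The logarithmic fit is only meaningful for delays above one minute,
    # so shorter delays are treated as full retention.
    if minutes_elapsed <= 1.0:
        return 1.0
    log_term = math.log10(minutes_elapsed) ** C
    return K / (log_term + K)


def memory_weighted_ratings(ratings, rated_at, now):
    """Scale each rating by the retention ratio of its age.

    ratings:  {learner: {course: rating}}
    rated_at: {learner: {course: timestamp in minutes}}
    now:      current timestamp in minutes
    """
    weighted = {}
    for learner, courses in ratings.items():
        weighted[learner] = {
            course: rating * retention_ratio(now - rated_at[learner][course])
            for course, rating in courses.items()
        }
    return weighted


# Hypothetical data: an old 5-star rating and a recent 4-star rating.
ratings = {"u1": {"c1": 5.0, "c2": 4.0}}
rated_at = {"u1": {"c1": 0.0, "c2": 999_000.0}}
weighted = memory_weighted_ratings(ratings, rated_at, now=1_000_000.0)
```

With these toy timestamps, the older 5-star rating ends up with a smaller weighted value than the more recent 4-star rating, which is the effect the memory weight is meant to capture.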

3.1.2. Learners’ Course Attribute Preferences with Memory Weight

First, the learners’ course ratings can be used to construct a two-dimensional matrix of course attributes. Each row of the matrix corresponds to a learner, each column corresponds to a course attribute, and each element denotes the average value of that course attribute over the courses rated by that learner, computed from the value of the attribute for each rated course. The course ratings with memory weights in Equation (2) are then introduced to construct the dynamic course attribute preferences, as in Equation (3).

3.1.3. Learners’ Multidimensional Capabilities with Memory Weight

The knowledge domain, teaching language (the necessary language requirement), and course level were treated as course traits in this study. Thus, multidimensional capabilities that match course traits were explored based on multidimensional item response theory (MIRT). Although MIRT is widely used in the assessment and evaluation of educational and psychological tests, it is seldom applied in the MOOC context [28]. In this study, MIRT was used to model learners’ multidimensional capabilities that match course traits.

First, the course trait ratings were obtained from the learners’ course rating data. The data were converted into a numerical matrix using one-hot coding to construct a learner-multidimensional capability matrix. Each row of the matrix corresponds to a learner, each column corresponds to a course trait, and each element represents the average value of that course trait over the courses rated by that learner, computed from the value of the trait for each rated course. Then, the course ratings with memory weight in Equation (2) are introduced to model the dynamic multidimensional capabilities that match course traits, as in Equation (4).
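One way to picture the learner-level matrices described above is the following Python sketch. It one-hot encodes a categorical course trait and averages course vectors over the courses a learner rated, using memory-weighted ratings as weights. The weighting scheme and the names `one_hot` and `learner_profile` are illustrative assumptions; the exact forms of Equations (3) and (4) may differ.

```python
def one_hot(value, categories):
    """Encode a categorical course trait as a 0/1 vector."""
    return [1.0 if value == category else 0.0 for category in categories]


def learner_profile(weighted_ratings, course_vectors):
    """Rating-weighted average of course vectors for one learner.

    weighted_ratings: {course: memory-weighted rating}
    course_vectors:   {course: list of numeric attribute or trait values}
    """
    total_weight = sum(weighted_ratings.values())
    if total_weight == 0:
        raise ValueError("learner has no positive memory-weighted ratings")
    width = len(next(iter(course_vectors.values())))
    profile = [0.0] * width
    for course, weight in weighted_ratings.items():
        for j, value in enumerate(course_vectors[course]):
            profile[j] += weight * value
    return [x / total_weight for x in profile]


LEVELS = ["beginner", "intermediate", "advanced"]
course_traits = {
    "c1": one_hot("beginner", LEVELS),
    "c2": one_hot("advanced", LEVELS),
}
# Hypothetical memory-weighted ratings of a single learner.
profile = learner_profile({"c1": 3.0, "c2": 1.0}, course_traits)
```

The same helper can be applied to numeric course attributes (for example, number of materials or reviews) instead of one-hot trait vectors.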

3.2. Recommending Module

In the MOOC context, course rating data are usually sparse. Therefore, in this study, learners were clustered by combining course attribute preferences and multidimensional capabilities. Then, for each learner group, a collaborative filtering algorithm was used. This algorithm comprehensively considers the similarity of course rating preferences, course attribute preferences, multidimensional capability matching course traits, and similarities among learners (thus creating similar learning groups). Consequently, the efficiency and accuracy of the recommendation can be improved.

3.2.1. Clustering Algorithm

As MOOCs have many course attributes, feature extraction and feature selection are required to reduce attribute redundancy so that course attributes can be better applied to course attribute preferences to improve the clustering effect. Therefore, principal component analysis (PCA) was employed to reduce the dimension of course attributes before clustering learners based on course attributes and multidimensional capabilities in Step 2.

As an unsupervised machine learning algorithm, the clustering algorithm divides the overall sample into several non-overlapping groups. It is based on a distance measurement method so that the individuals within each group are relatively more similar, and the individuals from different groups differ. Consequently, each group is clearly differentiated. Although there are many clustering algorithms, K-means has the advantage of being simple and efficient because it divides different individuals into different groups through iterative calculations, which is suitable in big data analysis. Therefore, by combining course attribute preferences and multidimensional capabilities that match course traits, this study selected K-means clustering to divide learners into K clusters (similar learning groups).
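The iterative assignment and update steps of K-means can be sketched with the Python standard library alone. This is a minimal illustration of Lloyd's algorithm on toy two-dimensional points, not the implementation used in the study; the PCA step is omitted, and the function names, the random initialization, and the sample data are assumptions.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=100, seed=0):
    """Plain Lloyd's algorithm; returns (labels, centers, sse)."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest center.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centers[c]))
            for p in points
        ]
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # keep the old center if a cluster becomes empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
        if new_labels == labels:
            break
        labels = new_labels
    # Within-cluster sum of squared distances, the quantity K-means minimizes.
    sse = sum(squared_distance(p, centers[lab]) for p, lab in zip(points, labels))
    return labels, centers, sse


# Hypothetical learner feature vectors forming two obvious groups.
points = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
labels, centers, sse = kmeans(points, k=2)
```

Running `kmeans` for several values of `k` and comparing the returned `sse` values is one simple way to judge how many clusters the data support.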

3.2.2. Collaborative Filtering Algorithm

As the learning choice of courses by MOOC learners is largely based on personal interests and capabilities, diversity is an important evaluation indicator in personalized recommendations. Therefore, in Step 3, for each cluster, a learner-based collaborative filtering algorithm (Learner-CF) is used to calculate the comprehensive similarity among learners using the k nearest neighbor algorithm.

- (1) Learner similarity in course rating preferences

The improved cosine similarity calculation of course ratings is shown in Equation (5). The larger the absolute value of this similarity, the higher the similarity of the course ratings between the two learners being compared. Equation (5) is computed over the intersection of courses rated by both learners, using each learner’s rating of every such course and the average rating of that course.

- (2) Learner similarity in course attribute preference

The cosine similarity calculation of the course attribute preferences is shown in Equation (6). The larger its value, the higher the similarity of course attribute preferences between the two learners being compared. Equation (6) is computed over the intersection of the attributes of the courses rated by both learners, using, for each attribute value k, the number of times each learner rated courses with that attribute value.

- (3) Learner similarity in multidimensional capabilities

The cosine similarity calculation of the multidimensional capabilities that match course traits is shown in Equation (7). The larger its value, the higher the similarity of the multidimensional capabilities between the two learners being compared. Equation (7) is computed over the intersection of the two learners’ multidimensional capabilities that match the course traits, using the value of each learner’s capability on every shared dimension.

- (4) Comprehensive similarity among learners

Equations (5)–(7) give the learner similarity of course rating preferences, the learner similarity of course attribute preferences, and the learner similarity of multidimensional capabilities that match course traits, respectively. The comprehensive similarity between two learners is shown in Equation (8) as a weighted combination of these three similarities. The three balance factors are in the range of [0, 1] and sum to 1, so that they balance the influence of similarity in learners’ course rating preferences, course attribute preferences, and multidimensional capabilities that match course traits. It is usually necessary to determine the optimal values of the balance factors by testing.

According to Equation (8), a symmetric square similarity matrix is formed by calculating the similarities among learners; its dimension equals the number of learners, and each element denotes the similarity between a pair of learners. For each target learner, the other learners are ranked according to their similarity to that learner. The top k most similar learners whose similarity exceeds a pre-specified criterion are selected as the nearest neighbors.
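The similarity steps above can be sketched as follows. The sketch assumes that the improved cosine similarity subtracts each course's average rating before taking the cosine, that the comprehensive similarity is a weighted sum whose weights (called `alpha`, `beta`, and `gamma` here) sum to 1, and that neighbors are filtered by a simple threshold; all function names and the sample data are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rating_similarity(ratings_u, ratings_v, course_means):
    """Cosine over co-rated courses after subtracting each course's mean rating."""
    common = sorted(set(ratings_u) & set(ratings_v))
    a = [ratings_u[c] - course_means[c] for c in common]
    b = [ratings_v[c] - course_means[c] for c in common]
    return cosine(a, b)


def comprehensive_similarity(sim_rating, sim_attr, sim_cap, alpha, beta, gamma):
    """Weighted sum of the three similarities; the weights must sum to 1."""
    if abs(alpha + beta + gamma - 1.0) > 1e-9:
        raise ValueError("alpha + beta + gamma must equal 1")
    return alpha * sim_rating + beta * sim_attr + gamma * sim_cap


def top_k_neighbors(target, similarities, k, threshold=0.0):
    """Return up to k learners most similar to target, above a threshold."""
    ranked = sorted(
        ((other, s) for other, s in similarities[target].items()
         if other != target and s > threshold),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return [other for other, _ in ranked[:k]]


# Hypothetical ratings of two courses by three learners.
ratings = {
    "u1": {"c1": 5, "c2": 1},
    "u2": {"c1": 4, "c2": 2},
    "u3": {"c1": 1, "c2": 5},
}
course_means = {"c1": 10 / 3, "c2": 8 / 3}
sim_12 = rating_similarity(ratings["u1"], ratings["u2"], course_means)
sim_13 = rating_similarity(ratings["u1"], ratings["u3"], course_means)
combined = comprehensive_similarity(1.0, 0.5, 0.5, alpha=0.8, beta=0.1, gamma=0.1)
neighbors = top_k_neighbors(
    "u1", {"u1": {"u1": 1.0, "u2": 0.9, "u3": -0.5, "u4": 0.3}}, k=2
)
```

In this toy data, learners `u1` and `u2` rate both courses on the same side of the course average, so their rating similarity is close to 1, whereas `u1` and `u3` disagree and obtain a similarity close to -1.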

3.3. Output Module

3.3.1. Prediction and Recommendation

First, the courses that the top k nearest neighbors have learned but that the target learner has not learned are taken as candidate courses.

Second, some learners rate courses conservatively, whereas others rate them generously. To keep learners’ course ratings comparable, the rating of an unlearned candidate course by the target learner is predicted as shown in Equation (9). Equation (9) uses the target learner’s average rating for learned courses; the set of the target learner’s top k nearest neighbors who rated the candidate course; each such neighbor’s rating of that course and average rating of learned courses; and the comprehensive similarity between the target learner and each neighbor from Equation (8). In this way, the predicted rating is calculated from the neighbors’ ratings while accounting for the similarity between the target learner and each neighbor.

Finally, the top n courses with the highest predicted ratings were recommended for the target learners in Step 4.
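The prediction and ranking steps can be illustrated with a short Python sketch. It assumes the standard mean-centered, similarity-weighted form of neighborhood prediction, which is consistent with the quantities listed for Equation (9) but is not confirmed to be its exact form; the function names and the toy data are hypothetical.

```python
def predict_rating(target, course, ratings, similarity, neighbors):
    """Mean-centered, similarity-weighted prediction for one unlearned course."""
    def mean_rating(learner):
        values = ratings[learner].values()
        return sum(values) / len(values)

    raters = [v for v in neighbors if course in ratings[v]]
    denominator = sum(abs(similarity[target][v]) for v in raters)
    if denominator == 0:
        return mean_rating(target)  # fall back to the learner's own average
    numerator = sum(
        similarity[target][v] * (ratings[v][course] - mean_rating(v))
        for v in raters
    )
    return mean_rating(target) + numerator / denominator


def recommend(target, ratings, similarity, neighbors, n):
    """Rank candidate courses by predicted rating, highest first."""
    candidates = {
        c for v in neighbors for c in ratings[v] if c not in ratings[target]
    }
    scored = [(predict_rating(target, c, ratings, similarity, neighbors), c)
              for c in candidates]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [c for _, c in scored[:n]]


# Hypothetical ratings: "u" is the target learner, "v" and "w" are neighbors.
ratings = {
    "u": {"a": 4.0, "b": 2.0},
    "v": {"a": 5.0, "x": 5.0, "y": 2.0},
    "w": {"b": 1.0, "x": 3.0},
}
similarity = {"u": {"v": 0.5, "w": 0.5}}
pred_x = predict_rating("u", "x", ratings, similarity, ["v", "w"])
pred_y = predict_rating("u", "y", ratings, similarity, ["v", "w"])
top_courses = recommend("u", ratings, similarity, ["v", "w"], n=2)
```

Subtracting each neighbor's own average before weighting is what compensates for conservative and generous raters: a 3 from a learner who averages 2 counts as a positive signal.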

3.3.2. Analysis of Performance Indicators

Offline evaluation is one of the most commonly adopted evaluation methods for recommendation systems. The holdout test is a basic offline evaluation method, which uses indicators of the precision rate, recall rate, F1 score, and mean absolute error (MAE) [14,18,19] to analyze and compare the performance of the recommendation results.

The precision rate indicates the proportion of correctly predicted positive samples to the total number of samples predicted to be positive, as shown in Equation (10). In this study, it describes the proportion of recommended courses that the learner actually rated. The recall rate is the proportion of correctly predicted positive samples to the actual positive samples, as shown in Equation (11); in this study, it describes the proportion of the learner’s rated courses that appear in the list of recommended courses.

Equations (10) and (11) are defined in terms of the list of recommended courses for learner u, the list of courses rated by that learner, the total number of learners, and the set of learners; they yield the overall recommendation precision rate and the overall recommendation recall rate, respectively.

To comprehensively reflect the recommendation performance, the F1 score reflects the precision rate and recall rate simultaneously, as shown in Equation (12).

Moreover, the mean absolute error (MAE) describes the error between the learners’ actual and predicted course ratings. As shown in Equation (13), it is computed from the learner’s actual course ratings, the predicted course ratings, and the number of actually rated courses.
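The four indicators can be computed as in the following Python sketch. It assumes that the overall precision and recall are averages of per-learner values over the set of learners, which matches the quantities listed for Equations (10) and (11) but may not be their exact form; the function names and the sample data are hypothetical.

```python
def precision_recall_f1(recommended, rated):
    """Average per-learner precision and recall, plus the resulting F1 score.

    recommended: {learner: list of recommended courses}
    rated:       {learner: set of courses the learner rated in the test data}
    """
    learners = list(recommended)
    if not learners:
        raise ValueError("no learners to evaluate")
    precision = recall = 0.0
    for u in learners:
        hits = len(set(recommended[u]) & rated[u])
        precision += hits / len(recommended[u]) if recommended[u] else 0.0
        recall += hits / len(rated[u]) if rated[u] else 0.0
    precision /= len(learners)
    recall /= len(learners)
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


def mean_absolute_error(actual, predicted):
    """Average absolute gap between actual and predicted ratings."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical recommendation lists and held-out rated courses.
recommended = {"u1": ["a", "b"], "u2": ["c", "d"]}
rated = {"u1": {"a", "x"}, "u2": {"c", "d", "e", "f"}}
precision, recall, f1 = precision_recall_f1(recommended, rated)
mae = mean_absolute_error([4, 3, 5], [3.5, 3, 4])
```

In this example, learner `u1` has one hit out of two recommendations and `u2` has two out of two, so the averaged precision is 0.75 while the averaged recall is 0.5.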

4. Results

4.1. Data Collection

Coursera is a world-renowned MOOC platform. As of December 2021, Coursera had over 92 million registered learners and over 250 university and industry partners. It offers more than 2000 instructional programs that provide hands-on learning and more than 6000 course resources covering the following 11 subject categories: arts and humanities, business, computer science, data science, information technology, health, math and logic, personal development, physical science and engineering, social science, and language learning. Each subject category is subdivided into multiple second-level categories. Moreover, course discussion and course review forums are set up for teachers and students to discuss and share information. Therefore, Coursera was selected as the research object, and course rating, course attribute, and course trait data were collected for the period from January 2016 to December 2021.

4.2. Data Preprocessing

The raw data were preprocessed in the following manner. First, fields and records with null values were eliminated. Second, the data items were normalized and unified. Third, to improve the efficiency of the proposed recommendation algorithm, the preprocessed dataset was screened for learners who had rated at least ten courses [29].

The data volumes of the original dataset, the preprocessed dataset, and the experiment dataset are listed in Table 1. There were 1849 learners who rated at least ten courses. They learned 2951 courses, accounting for 77% of the total number of courses following data preprocessing. The number of ratings and reviews of the 2951 experiment courses was 42,485 and 41,741, respectively.

Based on the experimental dataset, the statistics of 11 course attributes are shown in Table 2. The number of clicks and the number of views had the largest variances, followed by the number of registrations and the number of ratings. This indicates that courses on Coursera vary in popularity.

4.3. Recommendation Process on Coursera

4.3.1. Learners’ Dynamic Preferences and Multidimensional Capabilities

According to Step 1, as shown in Figure 1, Equations (1)–(4) with memory weight were applied to calculate learners’ course rating preferences, course attribute preferences, and multidimensional capabilities.

Table 3 shows a subset of data on learners’ course rating preferences with memory weight calculated by Equation (2). In this table, 0 means a learner did not rate any of the ten courses in the first row.

Table 4 shows a subset of data on learners’ course attribute preferences with memory weight calculated by Equation (3). It displays the average values of learners’ course attribute preferences. For example, courses rated by Learner 8164 had 54 materials, one teaching assistant, and 2554 reviews, on average.

Table 5 shows a subset of data on learners’ multidimensional capabilities (that match course traits) with memory weight calculated using Equation (4). Course traits on Coursera consist of teaching language (i.e., English, French, Russian, Spanish, and Portuguese), subject categories (i.e., computer science, data science, information technology, math and logic, business, personal development, life science, physical science and engineering, arts and humanities, social science, and language learning), and course-level (i.e., beginner, intermediate, and advanced). For example, Learner 8164 learned courses in English, French, Russian, and Spanish in the knowledge domain of computer science and data science at beginner and intermediate levels.

4.3.2. Feature Extraction of Course Attributes and Learner Clustering

Although course attribute preferences can be obtained, there may be a certain correlation between the 11 course attributes, as shown in Table 2. It is necessary to reduce the dimensions of the course attributes before clustering the learners. According to Step 2 shown in Figure 1, principal component analysis was applied.

The results of the KMO and Bartlett tests for course attribute preferences are presented in Table 6. The KMO measure was 0.877, which is greater than 0.8. The Bartlett spherical test was significant, at p < 0.05, indicating that the data were suitable for principal component analysis [30].

Based on the principal component analysis, the factors retained 86% of the component information. The principal components and the course attributes are listed in Table 7.

After dimension reduction and combining course attribute preferences with multidimensional capabilities that matched course traits, learners were clustered using the K-means clustering algorithm. The clustering results were evaluated by the distance between samples within each cluster: the smaller the sum of the squared distances between each cluster center and the sample points within that cluster, the more similar the cluster members. As the number of clusters increased, this error sum decreased until it reached a critical value and remained relatively stable; this point could therefore be considered the optimal number of clusters.

In this study, the elbow method, a heuristic used in determining the number of clusters in a dataset, was applied to find the appropriate inflection point quickly and effectively [31]. Accordingly, the optimal number of learner clusters K was determined from this inflection point. The characteristics of the clustering sample are shown in Table 8. The number of samples in each cluster was approximately balanced. The characteristics included course attributes and course traits based on course attribute preferences and multidimensional capabilities matching the course traits.

4.3.3. Calculating Learner Similarity

Clustering learners (into similar learning groups) using both the course attribute preferences and multidimensional capabilities yielded better clustering performance. The same weight was given to the course attribute preferences and multidimensional capabilities in Equation (8); that is, their two weight coefficients were set equal. Consequently, according to Step 3, shown in Figure 1, an example of the learner similarity matrix is illustrated in Table 9 below. The diagonal elements represent the similarity of each learner to himself/herself and thus take the value of 1. For example, the similarity between Learner 8064 and Learner 10,269 was 0.21.

Moreover, to calculate the comprehensive similarity of learners so that the top k similar learners in each cluster could be found, the optimal values of the course rating similarity weight coefficient in Equation (8) and the number of nearest neighbors k were determined through sensitivity analysis.

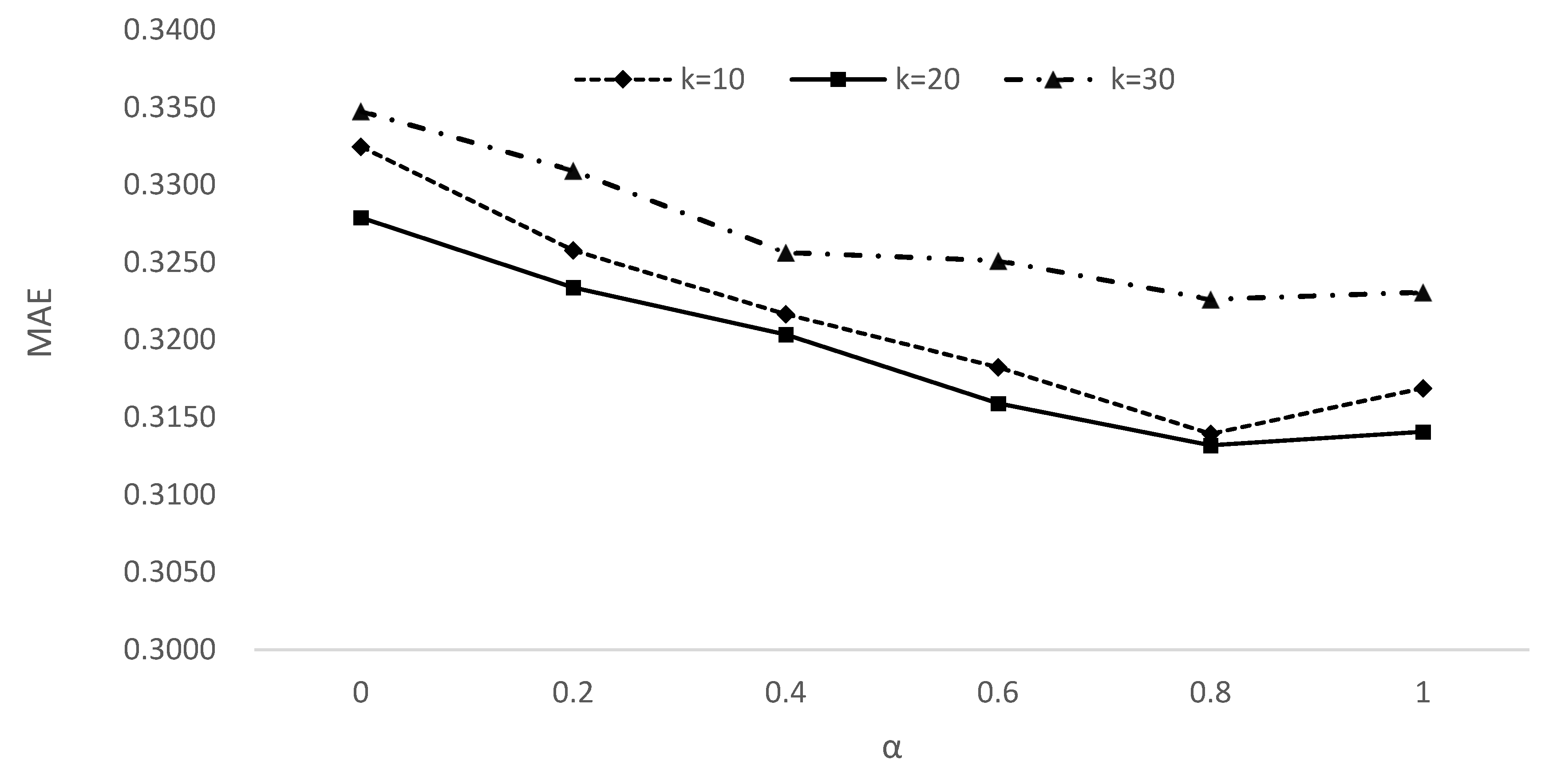

In this study, the mean absolute error (MAE) between predicted and actual course ratings was obtained for each value of the weight coefficient α while iterating over the number of nearest neighbors k. Usually, MAE changes smoothly with k, so the step size of k is generally set to 5 or 10 [26,32]. This study increased k stepwise from its starting value, and the change in MAE was observed, as shown in Figure 2.

As indicated in Figure 2, when the number of nearest neighbors k is fixed and the course rating similarity weight coefficient α increases from 0 to 1, MAE fluctuates: it decreases at first and then increases after reaching its minimum. When α is 0.8 and k is 20, the model obtains the smallest MAE. Therefore, the course rating similarity weight coefficient α and the number of nearest neighbors k were determined to be 0.8 and 20, respectively, through sensitivity analysis. Accordingly, based on Equation (8), both the course attribute similarity weight coefficient and the multidimensional capability similarity weight coefficient took the value of 0.1. Consequently, sorted by comprehensive similarity, the top 20 nearest neighbors were selected for target learners.
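A sensitivity analysis of this kind amounts to a small grid search. The Python sketch below assumes that the weight left over after the course rating weight is split evenly between the other two coefficients, as in the reported result (0.8, 0.1, 0.1). The names `grid_search`, `beta`, and `gamma` are illustrative, and `toy_mae` is a made-up stand-in for a real evaluation run, not a result from the study.

```python
def grid_search(evaluate_mae, alphas, ks):
    """Return (best_mae, alpha, beta, gamma, k) over a grid.

    evaluate_mae(alpha, beta, gamma, k) is a caller-supplied function that
    runs the recommender with these settings and returns its MAE.
    """
    best = None
    for k in ks:
        for alpha in alphas:
            # The remaining weight is split evenly between the attribute
            # and capability similarities, so the three weights sum to 1.
            beta = gamma = (1.0 - alpha) / 2.0
            mae = evaluate_mae(alpha, beta, gamma, k)
            if best is None or mae < best[0]:
                best = (mae, alpha, beta, gamma, k)
    return best


def toy_mae(alpha, beta, gamma, k):
    """Stand-in for a real evaluation; made up purely for this example."""
    return 0.7 + (alpha - 0.8) ** 2 + 0.0001 * (k - 20) ** 2


alphas = [i / 10 for i in range(11)]   # 0.0, 0.1, ..., 1.0
ks = range(5, 55, 5)                   # neighbor counts with a step size of 5
best_mae, alpha, beta, gamma, k = grid_search(toy_mae, alphas, ks)
```

In practice, `evaluate_mae` would train on the training split, predict the held-out ratings with the given weights and neighbor count, and return the resulting MAE.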

4.3.4. Prediction and Recommendation

According to Step 4 in Figure 1, a target learner’s predicted course rating, as shown in Equation (9), is obtained based on the top k nearest neighbors. Then the top n courses are recommended to the target learner in descending order of predicted rating.

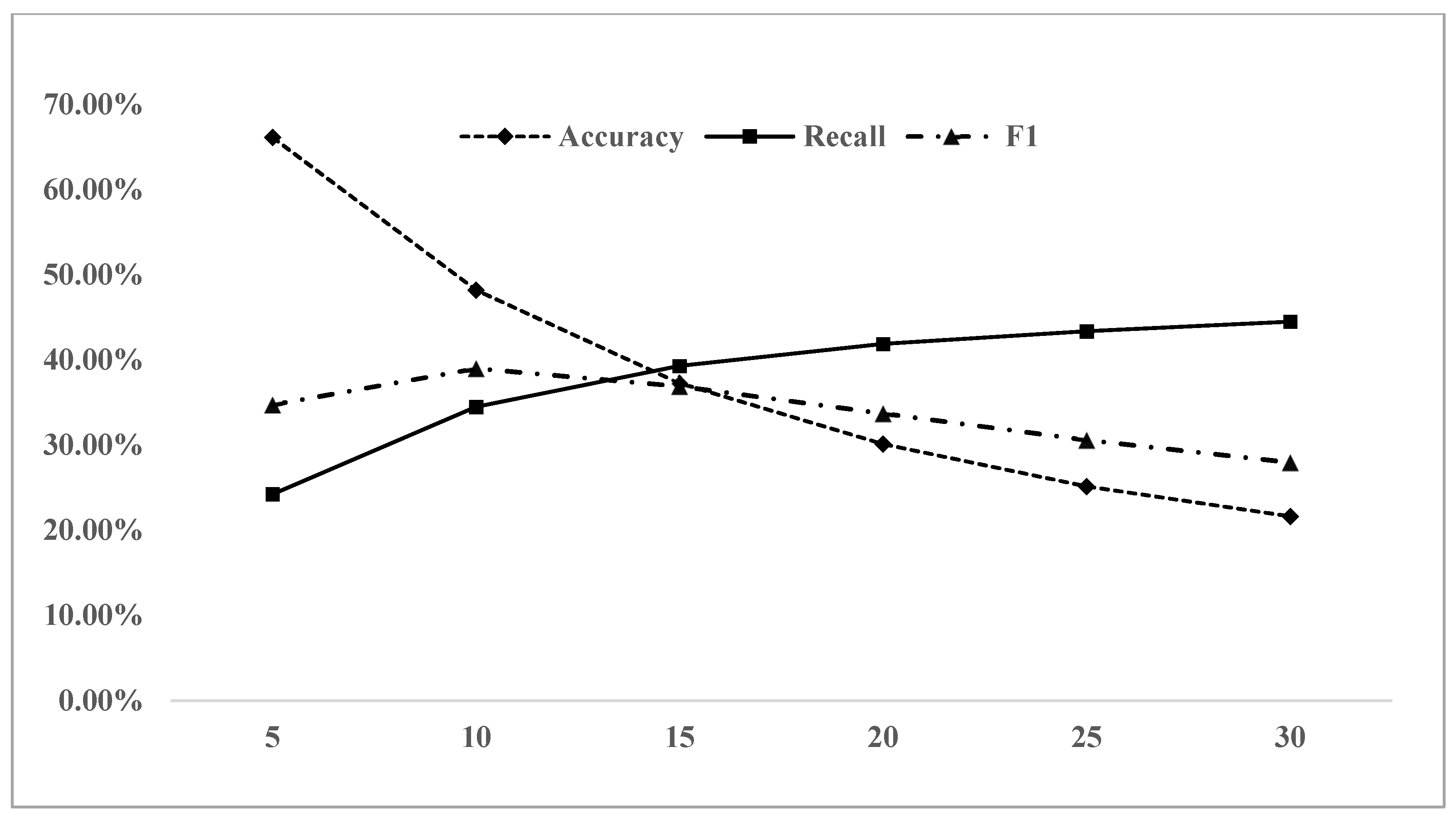

To obtain the optimal number of recommended courses, a sensitivity analysis was performed by changing the number of recommended courses, as shown in Figure 3. As the number of recommended courses increased from 5 to 30, the precision rate of the algorithm decreased from 66% to 22%, whereas the recall rate increased from 24% to 45%. Because precision and recall moved in opposite directions, the F1 score (Equation (12)) was introduced as the comprehensive evaluation criterion to determine the optimal number of recommended courses. The F1 score reached its maximum of 39% when the number of recommended courses was 10. Therefore, the target learner’s course ratings were predicted from the course ratings of the top 20 nearest neighbors, and the 10 courses with the highest predicted ratings were selected (recommended).
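The trade-off above can be checked with a short sketch that uses the standard definition of the F1 score as the harmonic mean of precision and recall. Equation (12) is assumed to follow this standard form; the function names and the set-based inputs are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def precision_recall_f1(recommended, relevant):
    """Evaluate one recommendation list against the courses a learner chose."""
    hits = len(set(recommended) & set(relevant))
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall, f1_score(precision, recall)

# Endpoints reported in the sensitivity analysis (Figure 3).
f1_at_5 = f1_score(0.66, 0.24)   # about 0.352
f1_at_30 = f1_score(0.22, 0.45)  # about 0.296
```

Both endpoint values fall below the reported maximum of 39% at 10 recommended courses, which is consistent with the optimum lying inside the tested range.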

4.4. Model Performance Evaluation

First, using the holdout method, 80% of the course rating dataset was randomly selected as the training dataset, and the remaining 20% was used as the testing dataset [33].
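A random 80/20 holdout split of this kind can be sketched as follows. The function name, the record layout, and the fixed seed are assumptions made for illustration; the source does not state how the random selection was implemented.

```python
import random

def holdout_split(records, train_fraction=0.8, seed=42):
    """Shuffle the rating records and split them into training and testing sets.

    The seed is a hypothetical choice that only makes the split reproducible.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]
```

Using a dedicated `random.Random` instance keeps the split independent of any other random state in the experiment.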

Second, the learner-based collaborative filtering algorithm (Learner-CF), the item-based collaborative filtering algorithm (Item-CF), and the proposed hybrid recommendation algorithm removing clustering (NoCluster-CF) were set as baseline algorithms. Then, the performance of the baseline algorithms was compared with the proposed hybrid recommendation algorithm (Cluster-CF).

Third, using the top 20 nearest neighbors and the top 10 recommended courses from the previous sensitivity analysis, the precision rate, recall rate, and F1 score of the four algorithms were compared and analyzed, as shown in Figure 4. Compared with Learner-CF, Item-CF, and NoCluster-CF, the precision and recall rates of the Cluster-CF proposed in this study improved. Specifically, the F1 score (the comprehensive evaluation index) increased by 12%, 17%, and 7%, respectively.

Although the F1 score of Cluster-CF improved by less than 10% over NoCluster-CF, its runtime efficiency improved by nearly 50%. This shows that Cluster-CF outperforms the baseline recommendation algorithms while also running more efficiently. This is because relatively homogeneous learners belonging to similar learning groups can be summarized by a single cluster representative, which enables data reduction.
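A simple counting argument illustrates why clustering can reduce the cost of measuring learners’ similarities. The sketch below is not a measurement from this study: the learner count and cluster sizes are hypothetical, and the cost of the clustering step itself is ignored.

```python
def pairwise_comparisons(n):
    """Number of learner pairs when every learner is compared with every other."""
    return n * (n - 1) // 2

def clustered_comparisons(cluster_sizes):
    """Number of learner pairs when similarities are computed only within clusters."""
    return sum(pairwise_comparisons(size) for size in cluster_sizes)

# Hypothetical example: 1000 learners, unclustered versus two equal clusters.
unclustered = pairwise_comparisons(1000)
two_clusters = clustered_comparisons([500, 500])
```

In this hypothetical case, the number of pairs drops from 499,500 to 249,500, and it drops further as learners are divided into more clusters.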

5. Discussion

The development of MOOCs is important for promoting equity and education. However, with the massive growth of MOOC platforms, learners are confronted with information overload when searching for learning resources or choosing courses. This hampers their learning efficiency and learning experiences. It also hampers the platforms’ knowledge sharing. Consequently, course recommendations are essential in the MOOC context.

At present, many recommendation algorithms focus mainly on learners’ ratings of previous courses. However, learners’ course choices depend not only on their previous course preferences but also on their course attribute preferences and on multidimensional capabilities that match course traits. Moreover, these course attribute preferences and multidimensional capabilities change dynamically over time. Therefore, based on multidimensional item response theory in psychometrics and the Ebbinghaus forgetting curve, this study addresses the need for better recommendation algorithms in the MOOC context. To this end, it mines course ratings, course attribute preferences, and multidimensional capabilities, all of which integrate memory weights. Moreover, to improve runtime efficiency when measuring learners’ similarities, learners are clustered before the collaborative filtering algorithm is applied. The hybrid recommendation algorithm proposed in this paper thus combines a clustering algorithm with collaborative filtering. Learners are divided into clusters, and similarity is calculated within each cluster, which addresses the problem of high-dimensional sparse data and further improves the performance of the recommendation algorithm.

Coursera, a world-renowned MOOC platform, was selected for this experiment. The learner-based collaborative filtering algorithm, the item-based collaborative filtering algorithm, and the proposed hybrid recommendation algorithm without clustering were set as baseline algorithms. According to the experimental results, the proposed algorithm is superior to the baseline recommendation algorithms and has better runtime efficiency.

6. Conclusions

Theoretically, this study contributes the following.

First, we used multidimensional item response theory in psychometrics to expand course rating data to learners’ course rating preferences, course attribute preferences, and multidimensional capabilities that match the course traits. This addresses the problem of a single, sparse data source that arises from using only course rating data in the MOOC context.

Second, integrating memory weights from the Ebbinghaus forgetting curve is useful for depicting the dynamic characteristics of learners’ course rating preferences, course attribute preferences, and multidimensional capabilities matching the course traits. Consequently, the performance and interpretability of the recommendation algorithm proposed in this study can be improved.

Third, Cluster-CF, which integrates a clustering algorithm with a collaborative filtering recommendation algorithm, improves the runtime efficiency of calculating learners’ similarities in the MOOC context, where the amount of data is large.

This study used a dataset from Coursera. Cluster-CF (the proposed hybrid recommendation algorithm) was compared to three baseline algorithms. Cluster-CF has improved accuracy and runtime efficiency. In terms of practice, this study makes the following contributions.

First, since learners’ course rating preferences, course attribute preferences, and multidimensional capabilities that match course traits are the basis for course recommendations, the basic course information and comments provided on MOOC platforms should be exhaustive and detailed.

Second, since learners’ course rating preferences, course attribute preferences, and multidimensional capabilities that match course traits have dynamic characteristics, courses provided on MOOC platforms should be diversified in language, knowledge domain, and knowledge levels. Consequently, diversified courses can better meet learners’ learning needs and competency development.

Third, Cluster-CF is recommended for MOOC platforms. This will help improve the dissemination of high-quality MOOC resources, knowledge sharing, knowledge exploration, and knowledge learning in the MOOC context.

In future research, other MOOC platforms can be selected, especially those capable of providing learners’ personal attribute information. This can make the data more exhaustive and diverse and thereby improve the recommendation algorithm. Moreover, the selection of the number of clusters, the similarity weight coefficients, and the number of recommended courses in the model experiments can be combined with other methods, such as parameter optimization techniques from machine learning, to make a more refined comparison and obtain the best combination of parameters.