A Survey on Datasets for Emotion Recognition from Vision: Limitations and In-the-Wild Applicability

Abstract

1. Introduction

- We review how the behavioral psychology literature describes emotion perception in humans and correlate this with current datasets (Section 2);

- We survey and list the datasets currently used to benchmark state-of-the-art techniques (Section 3);

- We explore the differences in the annotations of these datasets and how they could affect the training and evaluation of techniques (Section 3.2);

- We discuss annotations described using continuous models, such as the valence, arousal and dominance (VAD) model, and how they can harm the ability of a model to understand emotion (Section 3.3);

- We investigate the presence of nonverbal cues beyond facial expression in the datasets of the state-of-the-art techniques and propose experiments regarding their representativeness, visibility, and data quality (Section 3.4);

- We discuss possible application scenarios for emotion recognition, drawn from published works, and how the features of each dataset can affect these scenarios positively or negatively (Section 4).

2. Perceived Emotion in Humans

2.1. The Portrayal of Emotion

2.2. Association between Nonverbal Cues

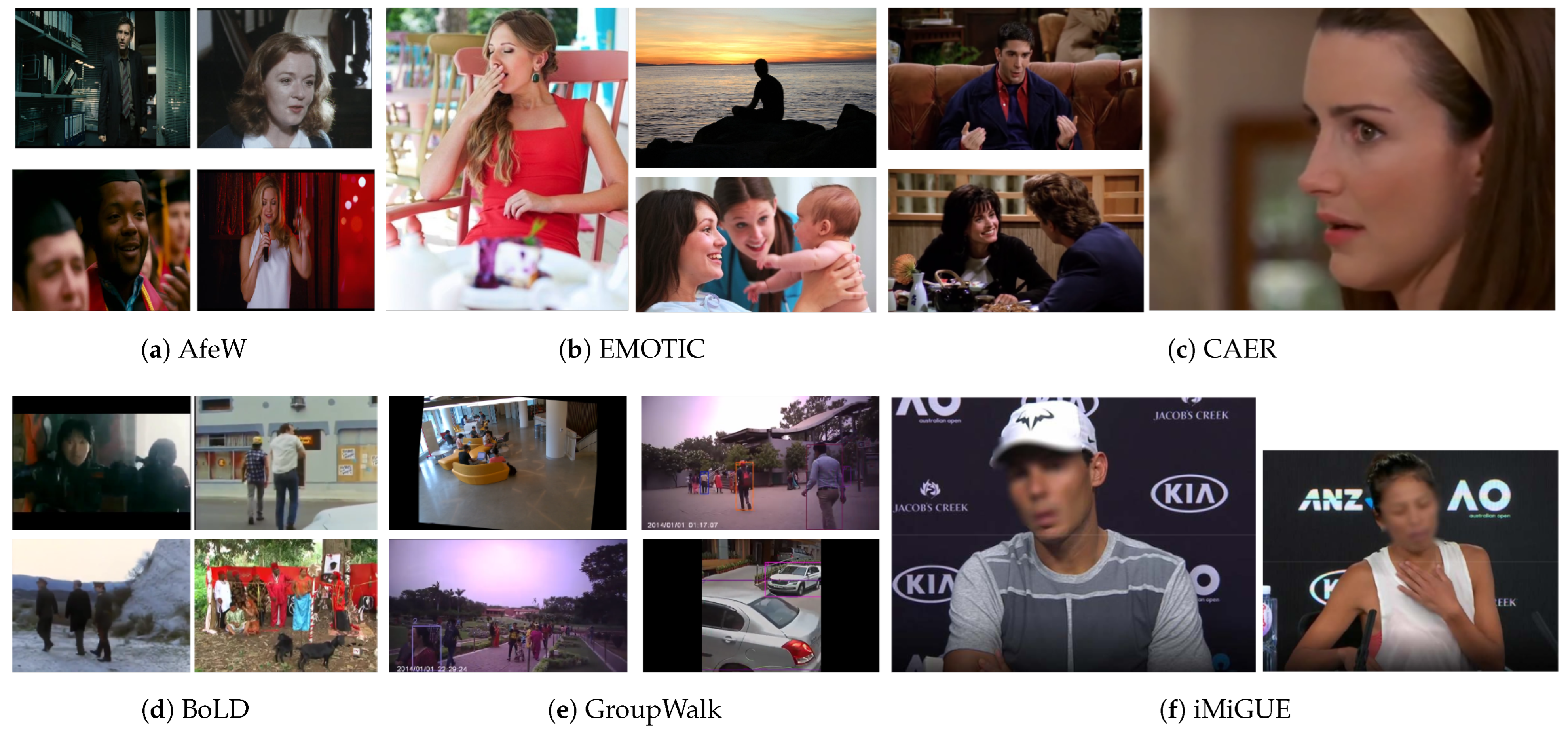

3. Datasets used in State-of-the-Art Techniques

| Dataset Name | Annotation Formats | Annotators | Samples | Demographics |
|---|---|---|---|---|
| EMOTIC [53] | Categorical: 26 classes; Continuous: VAD model | AMT² | 18,316 images | 23,788 people: 66% M and 34% F; 78% A, 11% T, and 11% C |
| EMOTIC¹ [16] | Categorical: 26 classes; Continuous: VAD model | AMT² | 23,571 images | 34,320 people: 66% M and 34% F; 83% A, 7% T, and 10% C |
| CAER and CAER-S [17] | Categorical: 6 classes + neutral | 6 annotators | 13,201 video clips (CAER); 70,000 images (CAER-S) | Unknown |
| iMiGUE [54] | Categorical: 2 classes | 5 (trained) annotators | 359 video clips | 72 adults: 50% M and 50% F |
| AFEW [51] | Categorical: 6 classes + neutral | 2 annotators | 1426 video clips | 330 subjects aged 1 to 70 years |
| AFEW-VA [52] | Continuous: VA model | 2 (trained) annotators | 600 video clips | 240 subjects aged 8 to 76 years; 52% female |
| BoLD [55] | Categorical: 26 classes; Continuous: VAD model | AMT² | 26,164 video clips | 48,037 instances: 71% M and 29% F; 90.7% A, 6.9% T, and 2.5% C |
| GroupWalk [56] | Categorical: 3 classes + neutral | 10 annotators | 45 video clips | 3544 people |

M, male; F, female; A, adults; T, teenagers; C, children; AMT, Amazon Mechanical Turk; VA, valence and arousal; VAD, valence, arousal, and dominance.

3.1. Techniques in the State of the Art

3.2. Emotion Categories and Annotations

Assessing Annotators’ Agreement
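
Agreement among several annotators who each assign one categorical label per sample is commonly measured with Fleiss' kappa, the statistic introduced in Fleiss [75]. The following is a minimal Python sketch of that statistic, assuming every sample was labeled by the same number of annotators; the function name `fleiss_kappa` and the count-matrix input layout are illustrative choices, not the survey's own implementation.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for items that were each labeled by the same number of raters.

    counts[i][j] is the number of raters who assigned item i to category j.
    """
    if not counts:
        raise ValueError("need at least one item")
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("every item needs the same number (>= 2) of raters")

    n_categories = len(counts[0])
    total = n_items * n_raters

    # p_j: share of all label assignments that went to category j
    p = [sum(row[j] for row in counts) / total for j in range(n_categories)]

    # P_i: fraction of rater pairs that agree on item i
    agreement = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]

    p_observed = sum(agreement) / n_items  # mean observed agreement
    p_chance = sum(pj * pj for pj in p)    # agreement expected by chance
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when all labels fall in one category")
    return (p_observed - p_chance) / (1.0 - p_chance)


# Samples rated by three annotators each, two classes (e.g. positive/negative).
print(fleiss_kappa([[3, 0], [0, 3], [3, 0]]))  # 1.0: perfect agreement
print(fleiss_kappa([[2, 1], [1, 2]]))          # negative: worse than chance
```

Fleiss' kappa assumes one label per annotator per sample. For multi-label annotations such as EMOTIC's 26 categories, one possible adaptation (an assumption here, not necessarily the procedure followed in this survey) is to compute one kappa per category, treating each category as a binary present/absent decision.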

3.3. Continuous Annotations
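
If only the averaged VAD value is kept as the training target, the disagreement among annotators on that sample is lost. A minimal way to keep it visible is to store, per sample, the mean and the spread of each dimension across annotators, as in the Python sketch below. The (valence, arousal, dominance) tuple layout and the name `summarize_vad` are hypothetical, and this is not claimed to be the analysis performed in the survey.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

# Hypothetical layout: one (valence, arousal, dominance) triple per annotator.
VAD = Tuple[float, float, float]


def summarize_vad(ratings: List[VAD]) -> Dict[str, Tuple[float, float]]:
    """Per-dimension (mean, population standard deviation) across annotators."""
    if not ratings:
        raise ValueError("need at least one rating")
    summary = {}
    for i, name in enumerate(("valence", "arousal", "dominance")):
        values = [r[i] for r in ratings]
        summary[name] = (mean(values), pstdev(values))
    return summary


# Two annotators who agree on arousal and dominance but not on valence.
print(summarize_vad([(5, 5, 5), (7, 5, 5)]))
```

A large standard deviation on a dimension flags a sample whose averaged value may be a poor summary of what the annotators perceived.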

3.4. Presence of Nonverbal Cues

3.4.1. Context Variability
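
One way to quantify how varied the contexts of a dataset are is the Shannon entropy of its scene-category distribution. The Python sketch below assumes each image has already received a single scene label, for instance from a scene classifier trained on Places [81]; the labels, the function names, and the choice of entropy as the variability measure are illustrative assumptions, not necessarily the measure used in this survey.

```python
import math
from collections import Counter
from typing import Iterable


def scene_entropy(scene_labels: Iterable[str]) -> float:
    """Shannon entropy, in bits, of the distribution of scene labels."""
    counts = Counter(scene_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no scene labels given")
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def normalized_scene_entropy(scene_labels: Iterable[str]) -> float:
    """Entropy divided by log2(number of distinct scenes); 1.0 means uniform."""
    labels = list(scene_labels)
    n_scenes = len(set(labels))
    if n_scenes < 2:
        return 0.0  # fewer than two scene types: no variability to measure
    return scene_entropy(labels) / math.log2(n_scenes)


# Hypothetical labels for a handful of images.
labels = ["living_room", "office", "street", "street", "office", "living_room"]
print(scene_entropy(labels))             # about 1.585 bits, i.e. log2(3)
print(normalized_scene_entropy(labels))  # about 1.0: the three scenes are equally frequent
```

Raw entropy grows with the number of scene classes the classifier can output, so comparisons between datasets are only meaningful when the same scene classifier and label set are used for all of them.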

3.4.2. Body Keypoints Visibility
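
Whether body language can be exploited depends on how much of the body is actually visible. A simple proxy, sketched below in Python, counts how often each joint is detected above a confidence threshold. It assumes a 2D pose estimator, such as the approach of [82] or MediaPipe [84], has already produced a fixed list of (x, y, confidence) triples per person; the 0.5 threshold, the toy joint layout, and the function names are hypothetical choices for illustration, not the survey's protocol.

```python
from typing import List, Sequence, Tuple

# Hypothetical layout: one (x, y, confidence) triple per body joint.
Keypoint = Tuple[float, float, float]


def visible_fraction(keypoints: Sequence[Keypoint], threshold: float = 0.5) -> float:
    """Fraction of one person's joints detected with confidence >= threshold."""
    if not keypoints:
        return 0.0
    visible = sum(1 for _, _, conf in keypoints if conf >= threshold)
    return visible / len(keypoints)


def per_joint_visibility(
    people: Sequence[Sequence[Keypoint]], threshold: float = 0.5
) -> List[float]:
    """For each joint index, the fraction of people in whom that joint is visible."""
    if not people:
        return []
    n_joints = len(people[0])
    if any(len(kps) != n_joints for kps in people):
        raise ValueError("all people must use the same joint layout")
    hits = [0] * n_joints
    for kps in people:
        for j, (_, _, conf) in enumerate(kps):
            if conf >= threshold:
                hits[j] += 1
    return [h / len(people) for h in hits]


# Two people with a toy 3-joint layout (e.g. nose, left wrist, left ankle).
people = [
    [(10.0, 5.0, 0.95), (4.0, 20.0, 0.80), (5.0, 40.0, 0.10)],
    [(30.0, 6.0, 0.90), (25.0, 22.0, 0.30), (26.0, 41.0, 0.05)],
]
print(visible_fraction(people[0]))   # 2 of 3 joints visible
print(per_joint_visibility(people))  # [1.0, 0.5, 0.0]
```

Aggregated over a dataset, consistently low values for lower-body joints would suggest that most samples crop the legs, which limits cues such as gait.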

4. Discussion

4.1. Application Scenarios

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sternglanz, R.W.; DePaulo, B.M. Reading nonverbal cues to emotions: The advantages and liabilities of relationship closeness. J. Nonverbal Behav. 2004, 28, 245–266.

2. Rouast, P.V.; Adam, M.T.; Chiong, R. Deep learning for human affect recognition: Insights and new developments. IEEE Trans. Affect. Comput. 2019, 12, 524–543.

3. Patel, D.S. Body Language: An Effective Communication Tool. IUP J. Engl. Stud. 2014, 9, 7.

4. Wallbott, H.G.; Scherer, K.R. Cues and channels in emotion recognition. J. Personal. Soc. Psychol. 1986, 51, 690.

5. Archer, D.; Akert, R.M. Words and everything else: Verbal and nonverbal cues in social interpretation. J. Personal. Soc. Psychol. 1977, 35, 443.

6. Barrett, L.F.; Kensinger, E.A. Context is routinely encoded during emotion perception. Psychol. Sci. 2010, 21, 595–599.

7. Barrett, L.F.; Mesquita, B.; Gendron, M. Context in emotion perception. Curr. Dir. Psychol. Sci. 2011, 20, 286–290.

8. Guthier, B.; Alharthi, R.; Abaalkhail, R.; El Saddik, A. Detection and visualization of emotions in an affect-aware city. In Proceedings of the 1st International Workshop on Emerging Multimedia Applications and Services for Smart Cities, Orlando, FL, USA, 7 November 2014; pp. 23–28.

9. Aerts, R.; Honnay, O.; Van Nieuwenhuyse, A. Biodiversity and human health: Mechanisms and evidence of the positive health effects of diversity in nature and green spaces. Br. Med. Bull. 2018, 127, 5–22.

10. Wei, H.; Hauer, R.J.; Chen, X.; He, X. Facial expressions of visitors in forests along the urbanization gradient: What can we learn from selfies on social networking services? Forests 2019, 10, 1049.

11. Wei, H.; Hauer, R.J.; Zhai, X. The relationship between the facial expression of people in university campus and host-city variables. Appl. Sci. 2020, 10, 1474.

12. Meng, Q.; Hu, X.; Kang, J.; Wu, Y. On the effectiveness of facial expression recognition for evaluation of urban sound perception. Sci. Total Environ. 2020, 710, 135484.

13. Dhall, A.; Goecke, R.; Joshi, J.; Hoey, J.; Gedeon, T. Emotiw 2016: Video and group-level emotion recognition challenges. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 427–432.

14. Su, W.; Zhang, H.; Su, Y.; Yu, J. Facial Expression Recognition with Confidence Guided Refined Horizontal Pyramid Network. IEEE Access 2021, 9, 50321–50331.

15. Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6897–6906.

16. Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. Context based emotion recognition using emotic dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2755–2766.

17. Lee, J.; Kim, S.; Kim, S.; Park, J.; Sohn, K. Context-aware emotion recognition networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10143–10152.

18. Le, N.; Nguyen, K.; Nguyen, A.; Le, B. Global-local attention for emotion recognition. Neural Comput. Appl. 2022, 34, 21625–21639.

19. Costa, W.; Macêdo, D.; Zanchettin, C.; Talavera, E.; Figueiredo, L.S.; Teichrieb, V. A Fast Multiple Cue Fusing Approach for Human Emotion Recognition. SSRN Preprint 4255748. 2022. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4255748 (accessed on 5 April 2023).

20. Chen, J.; Yang, T.; Huang, Z.; Wang, K.; Liu, M.; Lyu, C. Incorporating structured emotion commonsense knowledge and interpersonal relation into context-aware emotion recognition. Appl. Intell. 2022, 53, 4201–4217.

21. Saxena, A.; Khanna, A.; Gupta, D. Emotion recognition and detection methods: A comprehensive survey. J. Artif. Intell. Syst. 2020, 2, 53–79.

22. Zepf, S.; Hernandez, J.; Schmitt, A.; Minker, W.; Picard, R.W. Driver emotion recognition for intelligent vehicles: A survey. ACM Comput. Surv. (CSUR) 2020, 53, 1–30.

23. Canal, F.Z.; Müller, T.R.; Matias, J.C.; Scotton, G.G.; de Sa Junior, A.R.; Pozzebon, E.; Sobieranski, A.C. A survey on facial emotion recognition techniques: A state-of-the-art literature review. Inf. Sci. 2022, 582, 593–617.

24. Veltmeijer, E.A.; Gerritsen, C.; Hindriks, K. Automatic emotion recognition for groups: A review. IEEE Trans. Affect. Comput. 2021, 14, 89–107.

25. Khan, M.A.R.; Rostov, M.; Rahman, J.S.; Ahmed, K.A.; Hossain, M.Z. Assessing the Applicability of Machine Learning Models for Robotic Emotion Monitoring: A Survey. Appl. Sci. 2023, 13, 387.

26. Thanapattheerakul, T.; Mao, K.; Amoranto, J.; Chan, J.H. Emotion in a century: A review of emotion recognition. In Proceedings of the 10th International Conference on Advances in Information Technology, Bangkok, Thailand, 10–13 December 2018; pp. 1–8.

27. Birdwhistell, R.L. Introduction to Kinesics: An Annotation System for Analysis of Body Motion and Gesture; Department of State, Foreign Service Institute: Arlington, VA, USA, 1952.

28. Frank, L.K. Tactile Communication. ETC Rev. Gen. Semant. 1958, 16, 31–79.

29. Hall, E.T. A System for the Notation of Proxemic Behavior. Am. Anthropol. 1963, 65, 1003–1026.

30. Darwin, C. The Expression of the Emotions in Man and Animals; John Murray: London, UK, 1872.

31. Ekman, P. Facial expression and emotion. Am. Psychol. 1993, 48, 384.

32. Wallbott, H.G. Bodily expression of emotion. Eur. J. Soc. Psychol. 1998, 28, 879–896.

33. Tracy, J.L.; Matsumoto, D. The spontaneous expression of pride and shame: Evidence for biologically innate nonverbal displays. Proc. Natl. Acad. Sci. USA 2008, 105, 11655–11660.

34. Keltner, D. Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. J. Personal. Soc. Psychol. 1995, 68, 441.

35. Tassinary, L.G.; Cacioppo, J.T. Unobservable facial actions and emotion. Psychol. Sci. 1992, 3, 28–33.

36. Ekman, P.; Roper, G.; Hager, J.C. Deliberate facial movement. Child Dev. 1980, 51, 886–891.

37. Ekman, P.; O’Sullivan, M.; Friesen, W.V.; Scherer, K.R. Invited article: Face, voice, and body in detecting deceit. J. Nonverbal Behav. 1991, 15, 125–135.

38. Greenaway, K.H.; Kalokerinos, E.K.; Williams, L.A. Context is everything (in emotion research). Soc. Personal. Psychol. Compass 2018, 12, e12393.

39. Van Kleef, G.A.; Fischer, A.H. Emotional collectives: How groups shape emotions and emotions shape groups. Cogn. Emot. 2016, 30, 3–19.

40. Aviezer, H.; Hassin, R.R.; Ryan, J.; Grady, C.; Susskind, J.; Anderson, A.; Moscovitch, M.; Bentin, S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 2008, 19, 724–732.

41. Mollahosseini, A.; Hasani, B.; Mahoor, M.H. Affectnet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.

42. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the Neural Information Processing: 20th International Conference, ICONIP 2013, Proceedings, Part III 20, Daegu, Republic of Korea, 3–7 November 2013; Springer: Cham, Switzerland, 2013; pp. 117–124.

43. Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

44. Savchenko, A.V.; Savchenko, L.V.; Makarov, I. Classifying emotions and engagement in online learning based on a single facial expression recognition neural network. IEEE Trans. Affect. Comput. 2022, 13, 2132–2143.

45. Kollias, D.; Zafeiriou, S. Expression, affect, action unit recognition: Aff-wild2, multi-task learning and arcface. arXiv 2019, arXiv:1910.04855.

46. Wen, Z.; Lin, W.; Wang, T.; Xu, G. Distract your attention: Multi-head cross attention network for facial expression recognition. arXiv 2021, arXiv:2109.07270.

47. Antoniadis, P.; Filntisis, P.P.; Maragos, P. Exploiting Emotional Dependencies with Graph Convolutional Networks for Facial Expression Recognition. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021.

48. Ryumina, E.; Dresvyanskiy, D.; Karpov, A. In search of a robust facial expressions recognition model: A large-scale visual cross-corpus study. Neurocomputing 2022, 514, 435–450.

49. Aouayeb, M.; Hamidouche, W.; Soladie, C.; Kpalma, K.; Seguier, R. Learning vision transformer with squeeze and excitation for facial expression recognition. arXiv 2021, arXiv:2107.03107.

50. Meng, D.; Peng, X.; Wang, K.; Qiao, Y. Frame attention networks for facial expression recognition in videos. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3866–3870.

51. Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Acted Facial Expressions in the Wild Database; Technical Report TR-CS-11; Australian National University: Canberra, Australia, 2011; Volume 2, p. 1.

52. Kossaifi, J.; Tzimiropoulos, G.; Todorovic, S.; Pantic, M. AFEW-VA database for valence and arousal estimation in-the-wild. Image Vis. Comput. 2017, 65, 23–36.

53. Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. EMOTIC: Emotions in Context dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 61–69.

54. Liu, X.; Shi, H.; Chen, H.; Yu, Z.; Li, X.; Zhao, G. iMiGUE: An identity-free video dataset for micro-gesture understanding and emotion analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10631–10642.

55. Luo, Y.; Ye, J.; Adams, R.B.; Li, J.; Newman, M.G.; Wang, J.Z. ARBEE: Towards automated recognition of bodily expression of emotion in the wild. Int. J. Comput. Vis. 2020, 128, 1–25.

56. Mittal, T.; Guhan, P.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. Emoticon: Context-aware multimodal emotion recognition using frege’s principle. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14234–14243.

57. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Cham, Switzerland, 2014; pp. 740–755.

58. Zhou, B.; Zhao, H.; Puig, X.; Xiao, T.; Fidler, S.; Barriuso, A.; Torralba, A. Semantic understanding of scenes through the ade20k dataset. Int. J. Comput. Vis. 2019, 127, 302–321.

59. Zuckerman, M.; Hall, J.A.; DeFrank, R.S.; Rosenthal, R. Encoding and decoding of spontaneous and posed facial expressions. J. Personal. Soc. Psychol. 1976, 34, 966.

60. Gu, C.; Sun, C.; Ross, D.A.; Vondrick, C.; Pantofaru, C.; Li, Y.; Vijayanarasimhan, S.; Toderici, G.; Ricco, S.; Sukthankar, R.; et al. Ava: A video dataset of spatio-temporally localized atomic visual actions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6047–6056.

61. Dhall, A.; Goecke, R.; Joshi, J.; Wagner, M.; Gedeon, T. Emotion recognition in the wild challenge 2013. In Proceedings of the 15th ACM on International Conference on Multimodal Interaction, Sydney, Australia, 9–13 December 2013; pp. 509–516.

62. Wu, J.; Zhang, Y.; Ning, L. The Fusion Knowledge of Face, Body and Context for Emotion Recognition. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 108–113.

63. Zhang, M.; Liang, Y.; Ma, H. Context-aware affective graph reasoning for emotion recognition. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 151–156.

64. Thuseethan, S.; Rajasegarar, S.; Yearwood, J. Boosting emotion recognition in context using non-target subject information. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–7.

65. Peng, K.; Roitberg, A.; Schneider, D.; Koulakis, M.; Yang, K.; Stiefelhagen, R. Affect-DML: Context-Aware One-Shot Recognition of Human Affect using Deep Metric Learning. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021; pp. 1–8.

66. Wu, S.; Zhou, L.; Hu, Z.; Liu, J. Hierarchical Context-Based Emotion Recognition with Scene Graphs. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–15.

67. Yang, D.; Huang, S.; Wang, S.; Liu, Y.; Zhai, P.; Su, L.; Li, M.; Zhang, L. Emotion Recognition for Multiple Context Awareness. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022; Springer: Cham, Switzerland, 2022; pp. 144–162.

68. Gao, Q.; Zeng, H.; Li, G.; Tong, T. Graph reasoning-based emotion recognition network. IEEE Access 2021, 9, 6488–6497.

69. Zhao, Z.; Liu, Q.; Zhou, F. Robust lightweight facial expression recognition network with label distribution training. AAAI Conf. Artif. Intell. 2021, 35, 3510–3519.

70. Zhao, Z.; Liu, Q.; Wang, S. Learning deep global multi-scale and local attention features for facial expression recognition in the wild. IEEE Trans. Image Process. 2021, 30, 6544–6556.

71. Zhou, S.; Wu, X.; Jiang, F.; Huang, Q.; Huang, C. Emotion Recognition from Large-Scale Video Clips with Cross-Attention and Hybrid Feature Weighting Neural Networks. Int. J. Environ. Res. Public Health 2023, 20, 1400.

72. Ekman, P. An argument for basic emotions. Cogn. Emot. 1992, 6, 169–200.

73. Said, C.P.; Sebe, N.; Todorov, A. Structural resemblance to emotional expressions predicts evaluation of emotionally neutral faces. Emotion 2009, 9, 260.

74. Montepare, J.M.; Dobish, H. The contribution of emotion perceptions and their overgeneralizations to trait impressions. J. Nonverbal Behav. 2003, 27, 237–254.

75. Fleiss, J.L. Measuring nominal scale agreement among many raters. Psychol. Bull. 1971, 76, 378.

76. Mehrabian, A. Basic Dimensions for a General Psychological Theory: Implications for Personality, Social, Environmental, and Developmental Studies; Oelgeschlager, Gunn & Hain: Cambridge, MA, USA, 1980.

77. Kołakowska, A.; Szwoch, W.; Szwoch, M. A review of emotion recognition methods based on data acquired via smartphone sensors. Sensors 2020, 20, 6367.

78. Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting large, richly annotated facial-expression databases from movies. IEEE Multimed. 2012, 19, 34–41.

79. Pandey, R.; Purohit, H.; Castillo, C.; Shalin, V.L. Modeling and mitigating human annotation errors to design efficient stream processing systems with human-in-the-loop machine learning. Int. J. Hum. Comput. Stud. 2022, 160, 102772.

80. López-Cifuentes, A.; Escudero-Viñolo, M.; Bescós, J.; García-Martín, Á. Semantic-Aware Scene Recognition. Pattern Recognit. 2020, 102, 107256.

81. Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million image database for scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1452–1464.

82. Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 466–481.

83. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

84. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.; Lee, J.; et al. MediaPipe: A Framework for Perceiving and Processing Reality. In Proceedings of the Third Workshop on Computer Vision for AR/VR at IEEE Computer Vision and Pattern Recognition (CVPR) 2019, Long Beach, CA, USA, 17 June 2019.

85. Lima, J.P.; Roberto, R.; Figueiredo, L.; Simões, F.; Thomas, D.; Uchiyama, H.; Teichrieb, V. 3D pedestrian localization using multiple cameras: A generalizable approach. Mach. Vis. Appl. 2022, 33, 61.

86. Limbu, D.K.; Anthony, W.C.Y.; Adrian, T.H.J.; Dung, T.A.; Kee, T.Y.; Dat, T.H.; Alvin, W.H.Y.; Terence, N.W.Z.; Ridong, J.; Jun, L. Affective social interaction with CuDDler robot. In Proceedings of the 2013 6th IEEE Conference on Robotics, Automation and Mechatronics (RAM), Manila, Philippines, 12–15 November 2013; pp. 179–184.

87. Busch, A.B.; Sugarman, D.E.; Horvitz, L.E.; Greenfield, S.F. Telemedicine for treating mental health and substance use disorders: Reflections since the pandemic. Neuropsychopharmacology 2021, 46, 1068–1070.

88. Zoph, B.; Ghiasi, G.; Lin, T.Y.; Cui, Y.; Liu, H.; Cubuk, E.D.; Le, Q. Rethinking pre-training and self-training. Adv. Neural Inf. Process. Syst. 2020, 33, 3833–3845.

89. Li, D.; Zhang, H. Improved regularization and robustness for fine-tuning in neural networks. Adv. Neural Inf. Process. Syst. 2021, 34, 27249–27262.

90. Chen, X.; Wang, S.; Fu, B.; Long, M.; Wang, J. Catastrophic forgetting meets negative transfer: Batch spectral shrinkage for safe transfer learning. Adv. Neural Inf. Process. Syst. 2019, 32.

91. Xu, Y.; Zhong, X.; Yepes, A.J.J.; Lau, J.H. Forget me not: Reducing catastrophic forgetting for domain adaptation in reading comprehension. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

92. Hastings, J.; Ceusters, W.; Smith, B.; Mulligan, K. Dispositions and processes in the Emotion Ontology. In Proceedings of the 2nd International Conference on Biomedical Ontology, Buffalo, NY, USA, 26–30 July 2011.

| Emotion | Description of Body Movements |
|---|---|
| Joy | Various purposeless movements, jumping, dancing for joy, clapping of hands, stamping while laughing, head nods to and fro, during excessive laughter the whole body is thrown backward and shakes or is almost convulsed, body held erect and head upright (pp. 76, 196, 197, 200, 206, 210, 214) |
| Sadness | Motionless, passive, head hangs on contracted chest (p. 176) |
| Pride | Head and body held erect (p. 263) |
| Shame | Turning away the whole body, more especially the face, avert, bend down, awkward, nervous movements (pp. 320, 328, 329) |
| Fear | Head sinks between shoulders, motionless or crouches down (pp. 280, 290), convulsive movements, hands alternately clenched and opened with twitching movement, arms thrown wildly over the head, the whole body often turned away or shrinks, arms violently protruded as if to push away, raising both shoulders with the bent arms pressed closely against sides or chest (pp. 291, 305) |
| Anger/rage | Whole body trembles, intention to push or strike violently away, inanimate objects struck or dashed to the ground, gestures become purposeless or frantic, pacing up and down, shaking fist, head erect, chest well expanded, feet planted firmly on the ground, one or both elbows squared or arms rigidly suspended by the sides, fists are clenched, shoulders squared (pp. 74, 239, 243, 245, 271, 361) |
| Disgust | Gestures as if to push away or to guard oneself, spitting, arms pressed close to the sides, shoulders raised as when horror is experienced (pp. 257, 260) |
| Contempt | Turning away of the whole body, snapping one’s fingers (pp. 254, 255, 256) |

| Approach | Available From | Dataset |
|---|---|---|
| Wu et al. [62] | May 2019 | EMOTIC |
| Zhang et al. [63] | August 2019 | EMOTIC |
| Mittal et al. [56] | June 2020 | EMOTIC |
| Thuseethan et al. [64] | September 2021 | EMOTIC |
| Peng et al. [65] | December 2021 | EMOTIC |
| Wu et al. [66] | August 2022 | EMOTIC |
| Yang et al. [67] | October 2022 | EMOTIC |
| Lee et al. [17] | October 2019 | CAER |
| Lee et al. [17] | October 2019 | CAER-S |
| Gao et al. [68] | January 2021 | CAER-S |
| Zhao et al. [69] | May 2021 | CAER-S |
| Zhao et al. [70] | July 2021 | CAER-S |
| Le et al. [18] | December 2021 | CAER-S |
| Le et al. [18] | December 2021 | NCAER-S¹ |
| Wu et al. [66] | August 2022 | CAER-S |
| Chen et al. [20] | October 2022 | CAER-S |
| Costa et al. [19] | October 2022 | CAER-S |
| Zhou et al. [71] | January 2023 | CAER-S |

| Dataset Name | Facial Expression Cue | Context or Background Cue | Body Language Cue | Other Cues |
|---|---|---|---|---|
| EMOTIC [16,53] | Somewhat present | Present | Somewhat present | N/A |
| CAER/CAER-S [17] | Present | Present | Somewhat present | N/A |
| iMiGUE [54] | Present | Missing | Annotated | Annotated microgestures |
| AFEW/AFEW-VA [51,52] | Present | Somewhat present | Somewhat present | N/A |
| BoLD [55] | Present | Present | Annotated | N/A |
| GroupWalk [56] | Somewhat present | Somewhat present | Somewhat present | Gait is visible but not annotated |

| Dataset | Suggested Application Scenarios | Possible Limitations |
|---|---|---|
| EMOTIC [16,53] | Images in which context is highly representative and/or highly variable. | |
| CAER/CAER-S [17] | Images or videos in which the face will mostly be aligned to the camera. | |
| iMiGUE [54] | Spontaneous scenarios in which subtle cues need to be considered. | |
| AFEW/AFEW-VA [51,52] | Scenes with low context contribution. | |
| BoLD [55] | Images or videos with representative body language. | |
| GroupWalk [56] | Scenarios in which a distant emotional overview is sufficient. | |

| Dataset(s) | Emotion Categories |
|---|---|
| EMOTIC and BoLD | 1. Peace: well-being and relaxed; no worry; having positive thoughts or sensations; satisfied 2. Affection: fond feelings; love; tenderness 3. Esteem: feelings of favorable opinion or judgment; respect; admiration; gratefulness 4. Anticipation: state of looking forward; hoping for or getting prepared for possible future events 5. Engagement: paying attention to something; absorbed into something; curious; interested 6. Confidence: feeling of being certain; conviction that an outcome will be favorable; encouraged; proud 7. Happiness: feeling delighted; feeling enjoyment or amusement 8. Pleasure: feeling of delight in the senses 9. Excitement: feeling enthusiasm; stimulated; energetic 10. Surprise: sudden discovery of something unexpected 11. Sympathy: state of sharing others’ emotions, goals or troubles; supportive; compassionate 12. Doubt/Confusion: difficulty to understand or decide; thinking about different options 13. Disconnection: feeling not interested in the main event of the surroundings; indifferent; bored; distracted 14. Fatigue: weariness; tiredness; sleepy 15. Embarrassment: feeling ashamed or guilty 16. Yearning: strong desire to have something; jealous; envious; lust 17. Disapproval: feeling that something is wrong or reprehensible; contempt; hostile 18. Aversion: feeling disgust, dislike, repulsion; feeling hate 19. Annoyance: bothered by something or someone; irritated; impatient; frustrated 20. Anger: intense displeasure or rage; furious; resentful 21. Sensitivity: feeling of being physically or emotionally wounded; feeling delicate or vulnerable 22. Sadness: feeling unhappy, sorrow, disappointed, or discouraged 23. Disquietment: nervous; worried; upset; anxious; tense; pressured; alarmed 24. Fear: feeling suspicious or afraid of danger, threat, evil or pain; horror 25. Pain: physical suffering 26. Suffering: psychological or emotional pain; distressed; anguished |
| CAER and AFEW | 1. Anger 2. Disgust 3. Fear 4. Happiness (Happy) 5. Sadness (Sad) 6. Surprise 7. Neutral |
| iMiGUE | 1. Positive 2. Negative |
| GroupWalk | 1. Somewhat happy 2. Extremely happy 3. Somewhat sad 4. Extremely sad 5. Somewhat angry 6. Extremely angry 7. Neutral |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Costa, W.; Talavera, E.; Oliveira, R.; Figueiredo, L.; Teixeira, J.M.; Lima, J.P.; Teichrieb, V. A Survey on Datasets for Emotion Recognition from Vision: Limitations and In-the-Wild Applicability. Appl. Sci. 2023, 13, 5697. https://doi.org/10.3390/app13095697
