Measuring the Centrality of DNS Infrastructure in the Wild

Abstract

1. Introduction

- We propose a novel, lightweight resolver pool measurement approach based on NS chain reflection, which discovers the implicit resolver pool structure of a DNS service quickly and at low cost.

- We conduct a comprehensive quantitative analysis of DNS infrastructure centrality from both the client side and the server side along several dimensions: IP address, domain name, DNS service provider, and IP provider. Together, these give a multi-faceted view of DNS infrastructure centralization.

- We uncover several previously unreported findings about the centrality of DNS infrastructure. On the client side, more than 90% of FDNSes are backed by fewer than 5% of iRDNSes. On the server side, more than 98% of all domain names rely on a single name server provider, and the top 10 name server providers serve 48.5% of all domain names.
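
The client-side figure above is a coverage statistic: rank iRDNSes by the number of FDNSes they back, then find how small a fraction of iRDNSes covers 90% of FDNSes. The following is a minimal sketch of that calculation, assuming each FDNS maps to exactly one iRDNS (a simplification). The function name and the toy mapping are hypothetical and are not taken from the paper.

```python
from collections import Counter


def min_fraction_covering(fdns_to_irdns, target=0.90):
    """Return the smallest fraction of iRDNSes that backs >= target of FDNSes.

    Assumption: fdns_to_irdns maps each FDNS to a single iRDNS identifier.
    """
    counts = Counter(fdns_to_irdns.values())
    total = sum(counts.values())
    covered = 0
    # Walk iRDNSes from most-used to least-used and accumulate coverage.
    for rank, (_, n) in enumerate(counts.most_common(), start=1):
        covered += n
        if covered / total >= target:
            return rank / len(counts)
    return 1.0  # empty input: nothing to rank


# Toy data: one iRDNS backs 91 FDNSes, nine others back one FDNS each.
toy = {f"fdns{i}": "r0" for i in range(91)}
toy.update({f"fdns{i}": f"r{i}" for i in range(91, 100)})
print(min_fraction_covering(toy))  # 0.1
```

When an FDNS can be backed by several iRDNSes, the same question becomes a set-cover problem, and this simple ranking would only give an approximation.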

2. Related Work

2.1. DNS Measurement

2.2. Internet Centrality

3. Preliminaries

4. Methodology

4.1. CNAME Chain-Based Method

4.1.1. Experiment Setup

4.1.2. Results

4.2. Client-Side DNS Infrastructure Measurement

4.2.1. Measurement Scheme

4.2.2. Resolver Pool Discovery

4.2.3. Advantage and Disadvantage

4.3. Name Server Measurement

5. Resolver Centrality Analysis

5.1. Summary of Measurement

5.2. Centrality of iRDNS

5.3. Resolver Pool

5.4. Service Geo-Distribution

6. Name Server Centrality Analysis

6.1. Dataset

6.2. Service Provider

6.3. The Configuration and Behavior of Name Server

- (1) Placeholder. Providers apply special settings for specific purposes, such as domain parking and expired domains.

- (2) TLD typo. The domain owner unintentionally mistypes the TLD.

- (3) Nonexistent TLD. The owner configures a TLD that does not exist.

- (4) IP address. The owner configures an IP address as the NS record, which may be a normal, private, or invalid IP.

- (5) Public DNS. The owner uses a public DNS service as the NS record, which may fail to resolve the domain name.

- (6) Replaced or disabled NS. The owner replaces or shuts down the original NS but forgets to clean up the zone file, which may lead to security risks [39].
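
Categories (4) and (5) above can be checked mechanically from the NS record target alone. The sketch below shows one way to do so with Python's standard `ipaddress` module. The function name, the category labels, and the two-entry public DNS set are illustrative assumptions, not the classifier used in the paper.

```python
import ipaddress

# Hypothetical sample of public resolver addresses; a real list would be longer.
PUBLIC_DNS_IPS = {"8.8.8.8", "1.1.1.1"}


def classify_ns_target(target: str) -> str:
    """Classify an NS record target as a hostname or one of several IP kinds."""
    value = target.rstrip(".")  # drop the trailing root dot, if any
    try:
        ip = ipaddress.ip_address(value)
    except ValueError:
        labels = value.split(".")
        # Four all-numeric labels that do not parse look like a malformed IPv4.
        if len(labels) == 4 and all(label.isdigit() for label in labels):
            return "invalid-ip"
        return "hostname"
    if str(ip) in PUBLIC_DNS_IPS:
        return "public-dns"
    if ip.is_private:
        return "private-ip"
    return "normal-ip"


for t in ["ns1.example.com.", "192.168.1.1", "999.1.1.1", "8.8.8.8"]:
    print(t, classify_ns_target(t))
```

The remaining categories (placeholders, TLD typos, nonexistent TLDs, stale NS records) need external data such as the root zone TLD list or live resolution results, so they are outside this sketch.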

6.4. The Response of Name Server

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Arkko, J.; Trammell, B.; Nottingham, M.; Huitema, C.; Thomson, M.; Tantsura, J.; Ten Oever, N. Considerations on Internet Consolidation and the Internet Architecture. Technical Report, Internet Draft. 2019. Available online: https://tools.ietf.org/html/draft-arkko-iab-internet (accessed on 10 March 2023).

- DNS Resolver Centrality. 2019. Available online: https://blog.apnic.net/2019/09/23/dns-resolver-centrality/ (accessed on 9 April 2023).

- Radu, R.; Hausding, M. Consolidation in the DNS resolver market–how much, how fast, how dangerous? J. Cyber Policy 2020, 5, 46–64.

- Moura, G.C.; Castro, S.; Hardaker, W.; Wullink, M.; Hesselman, C. Clouding up the Internet: How centralized is DNS traffic becoming? In Proceedings of the ACM Internet Measurement Conference, Virtual Event, 27–29 October 2020; pp. 42–49.

- Consolidation in the Internet Economy. 2021. Available online: https://future.internetsociety.org/2019/ (accessed on 11 December 2021).

- Akamai Edge DNS Causes Massive Outage 2021. 2021. Available online: https://constellix.com/news/akamai-edge-dns-causes-massive-outage-2021 (accessed on 11 December 2021).

- Numerous Lessons We Can Learn from the Facebook Outage and Its Mistakes. 2021. Available online: https://circleid.com/posts/20211007-numerous-lessons-we-can-learn-from-the-facebook-outage-and-its-mistakes (accessed on 11 December 2021).

- EU Wants to Build Its Own DNS Infrastructure with Built-In Filtering Capabilities. 2022. Available online: https://therecord.media/eu-wants-to-build-its-own-dns-infrastructure-with-built-in-filtering-capabilities/ (accessed on 11 May 2022).

- De Vries, W.B.; van Rijswijk-Deij, R.; de Boer, P.T.; Pras, A. Passive observations of a large DNS service: 2.5 years in the life of Google. IEEE Trans. Netw. Serv. Manag. 2019, 17, 190–200.

- Allman, M. Comments on dns robustness. In Proceedings of the Internet Measurement Conference 2018, Boston, MA, USA, 31 October–2 November 2018; pp. 84–90.

- Park, J.; Khormali, A.; Mohaisen, M.; Mohaisen, A. Where are you taking me? Behavioral analysis of open dns resolvers. In Proceedings of the 2019 49th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), IEEE, Portland, OR, USA, 24–27 June 2019; pp. 493–504.

- Schomp, K.; Callahan, T.; Rabinovich, M.; Allman, M. On measuring the client-side DNS infrastructure. In Proceedings of the 2013 Internet Measurement Conference, IMC 2013, Barcelona, Spain, 23–25 October 2013; Papagiannaki, K., Gummadi, P.K., Partridge, C., Eds.; ACM: New York, NY, USA, 2013; pp. 77–90.

- Dagon, D.; Lee, C.; Lee, W.; Provos, N. Corrupted DNS resolution paths: The rise of a malicious resolution authority. In Proceedings of the Network and Distributed System Security Symposium, NDSS 2008, San Diego, CA, USA, 10–13 February 2008.

- Alzoubi, H.A.; Rabinovich, M.; Spatscheck, O. The anatomy of LDNS clusters: Findings and implications for web content delivery. In Proceedings of the 22nd International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013; pp. 83–94.

- Kührer, M.; Hupperich, T.; Bushart, J.; Rossow, C.; Holz, T. Going wild: Large-scale classification of open DNS resolvers. In Proceedings of the 2015 Internet Measurement Conference, Tokyo, Japan, 28–30 October 2015; pp. 355–368.

- Pearce, P.; Jones, B.; Li, F.; Ensafi, R.; Feamster, N.; Weaver, N.; Paxson, V. Global measurement of DNS manipulation. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Vancouver, BC, Canada, 16–18 August 2017; pp. 307–323.

- Liu, B.; Lu, C.; Duan, H.; Liu, Y.; Li, Z.; Hao, S.; Yang, M. Who is answering my queries: Understanding and characterizing interception of the DNS resolution path. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 1113–1128.

- Mao, Z.M.; Cranor, C.D.; Douglis, F.; Rabinovich, M.; Spatscheck, O.; Wang, J. A Precise and Efficient Evaluation of the Proximity Between Web Clients and Their Local DNS Servers. In Proceedings of the USENIX Annual Technical Conference, General Track, Berkeley, CA, USA, 10–15 June 2002; pp. 229–242.

- Gao, H.; Yegneswaran, V.; Jiang, J.; Chen, Y.; Porras, P.; Ghosh, S.; Duan, H. Reexamining DNS from a global recursive resolver perspective. IEEE/ACM Trans. Netw. 2014, 24, 43–57.

- Al-Dalky, R.; Schomp, K. Characterization of collaborative resolution in recursive DNS resolvers. In Proceedings of the International Conference on Passive and Active Network Measurement, Berlin, Germany, 26–27 March 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 146–157.

- Hao, S.; Wang, H.; Stavrou, A.; Smirni, E. On the DNS deployment of modern web services. In Proceedings of the 2015 IEEE 23rd International Conference on Network Protocols (ICNP), IEEE, San Francisco, CA, USA, 10–13 November 2015; pp. 100–110.

- Akiwate, G.; Savage, S.; Voelker, G.M.; Claffy, K.C. Risky BIZness: Risks Derived from Registrar Name Management. In Proceedings of the 21st ACM Internet Measurement Conference, Virtual Event, 2–4 November 2021; pp. 673–686.

- Arkko, J. Centralised Architectures in Internet Infrastructure. IETF Internet Draft 2019. Available online: https://datatracker.ietf.org/doc/html/draft-arkko-arch-infrastructure-centralisation-00 (accessed on 11 March 2023).

- DNS Resolver Centrality. 2021. Available online: https://labs.apnic.net/presentations/store/2021-09-10-hknog-resolver-centrality.pdf (accessed on 11 May 2022).

- Internet Centrality. 2021. Available online: https://labs.apnic.net/presentations/store/2021-07-19-centrality.pdf (accessed on 11 May 2022).

- Opinion: CDNs and Centrality. 2021. Available online: https://blog.apnic.net/2021/07/02/opinion-cdns-and-centrality/ (accessed on 11 May 2022).

- Shue, C.A.; Kalafut, A.J.; Gupta, M. The web is smaller than it seems. In Proceedings of the 7th ACM SIGCOMM Conference on Internet Measurement, San Diego, CA, USA, 24–26 October 2007; pp. 123–128.

- Zembruzki, L.; Jacobs, A.S.; Landtreter, G.S.; Granville, L.Z.; Moura, G.C. Measuring Centralization of DNS Infrastructure in the Wild. In Proceedings of the International Conference on Advanced Information Networking and Applications, Caserta, Italy, 15–17 April 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 871–882.

- Dell’Amico, M.; Bilge, L.; Kayyoor, A.; Efstathopoulos, P.; Vervier, P.A. Lean on me: Mining internet service dependencies from large-scale dns data. In Proceedings of the 33rd Annual Computer Security Applications Conference, Orlando, FL, USA, 4–8 December 2017; pp. 449–460.

- Kashaf, A.; Sekar, V.; Agarwal, Y. Analyzing third party service dependencies in modern web services: Have we learned from the mirai-dyn incident? In Proceedings of the ACM Internet Measurement Conference, Virtual Event, 27–29 October 2020; pp. 634–647.

- Public DNS Server List. 2022. Available online: https://public-dns.info/ (accessed on 11 May 2022).

- Durumeric, Z.; Wustrow, E.; Halderman, J.A. ZMap: Fast Internet-wide Scanning and Its Security Applications. In Proceedings of the 22nd USENIX Security Symposium (USENIX Security 13), Washington, DC, USA, 14–16 August 2013; pp. 605–620.

- Wan, G.; Izhikevich, L.; Adrian, D.; Yoshioka, K.; Holz, R.; Rossow, C.; Durumeric, Z. On the Origin of Scanning: The Impact of Location on Internet-Wide Scans. In Proceedings of the IMC ’20: ACM Internet Measurement Conference, Virtual Event, 27–29 October 2020; ACM: New York, NY, USA, 2020; pp. 662–679.

- ZDNS. 2021. Available online: https://github.com/zmap/zdns (accessed on 11 December 2021).

- Maxmind. 2021. Available online: https://www.maxmind.com/en/home (accessed on 11 December 2021).

- Warf, B. Geographies of global Internet censorship. GeoJournal 2011, 76, 1–23.

- Google Public Dns Resolver Pool. 2022. Available online: https://www.gstatic.com/ipranges/publicdns.json (accessed on 11 May 2022).

- Centralized Zone Data Service. 2021. Available online: https://czds.icann.org/home (accessed on 11 December 2021).

- The International Incident—Gaining Control of a .int Domain Name with DNS Trickery. 2021. Available online: https://thehackerblog.com/the-international-incident-gaining-control-of-a-int-domain-name-with-dns-trickery/index.html (accessed on 11 December 2021).

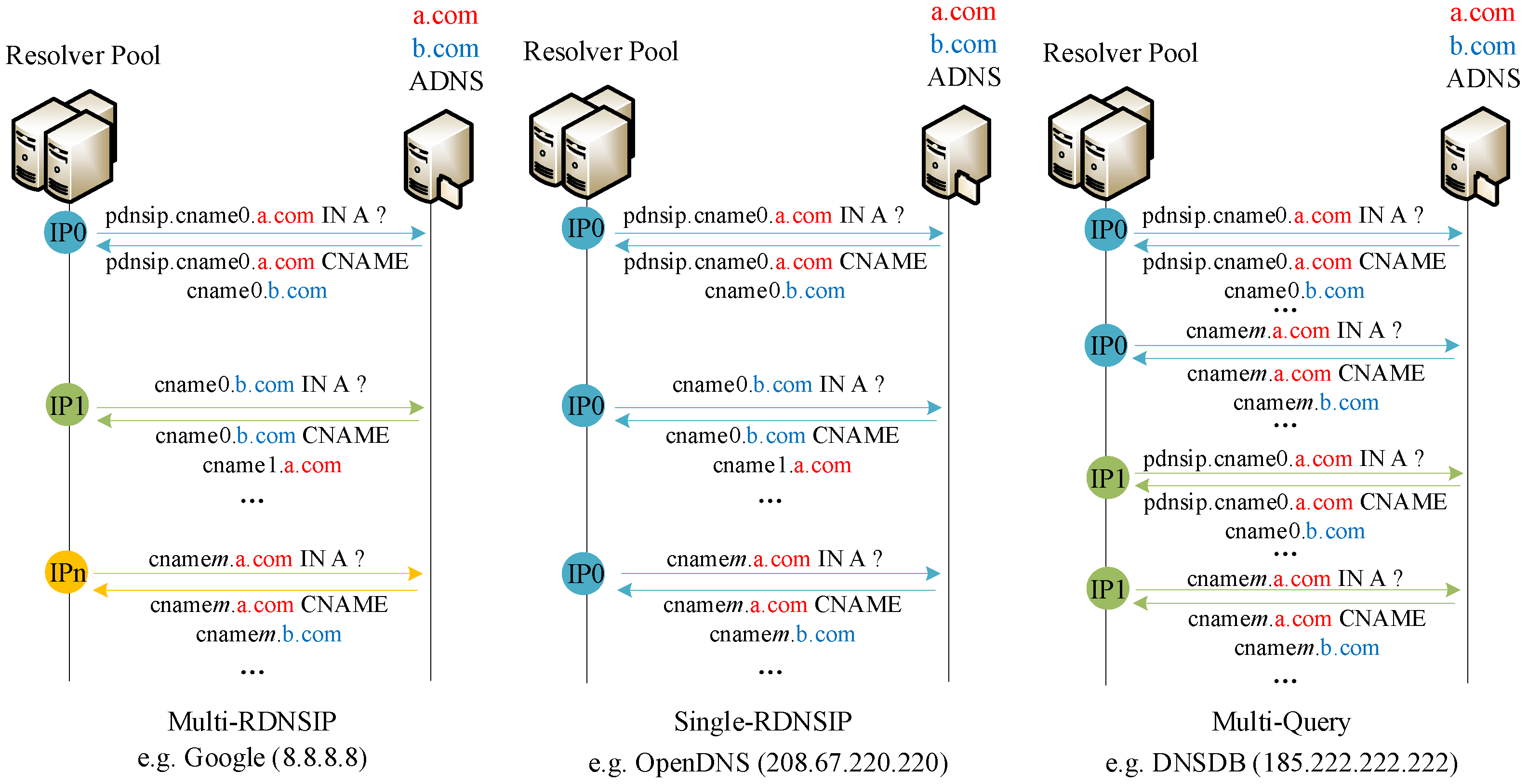

| Pattern | Public DNS |
|---|---|
| Multi-RDNSIP | Google, Level3 |
| Single-RDNS | OpenDNS, Cloudflare, Dyn, Verisign, Norton, 114DNS, DNS Watch, SafeDNS, Comodo, Clearbrowsing, Freenom World, DNS Advantage |
| Multi-Query | Quad9, Tencent, AliDNS, Yandex, AdGuard DNS, DNSDB |

| Methodology | Distributed Measurement Platform | CNAME Chain | Our Method |
|---|---|---|---|
| Mechanisms | Public DNS assigns various backend resolvers to users based on their geographical locations. | Public DNS initiates CNAME record queries using different backend resolvers. | Open forwarders carry the RD flag when forwarding requests. |
| Condition | Researchers need authorization from the measurement platform. | Researchers need to control authoritative name servers to construct CNAME chains. | Researchers need to control authoritative servers to build NS chains. |
| Measurement Scope | Public DNS. | Public DNS that meets specific conditions. | Public DNS. |
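
The mechanism listed for the NS chain method relies on the RD (Recursion Desired) flag being present in forwarded queries. As a rough illustration of what that flag looks like on the wire, the sketch below builds a DNS query by hand following the RFC 1035 header layout and reads the flag back. The function names and the probe name `probe-1.example.com` are hypothetical, and the sketch does not reproduce the paper's NS chain construction.

```python
import struct

RD_FLAG = 0x0100  # Recursion Desired bit in the 16-bit DNS header flags field


def build_query(qname: str, txid: int, rd: bool = True, qtype: int = 2) -> bytes:
    """Build a single-question DNS query (qtype 2 = NS, class 1 = IN)."""
    flags = RD_FLAG if rd else 0
    # Header: ID, flags, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!HHHHHH", txid, flags, 1, 0, 0, 0)
    # QNAME: length-prefixed labels terminated by a zero byte
    name = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in qname.rstrip(".").split(".")
    ) + b"\x00"
    return header + name + struct.pack("!HH", qtype, 1)


def has_rd(packet: bytes) -> bool:
    """Return True if the RD bit is set in a raw DNS message."""
    (flags,) = struct.unpack("!H", packet[2:4])
    return bool(flags & RD_FLAG)


query = build_query("probe-1.example.com", txid=0x1234)
print(has_rd(query))  # True
```

An authoritative server under the researcher's control could apply a check like `has_rd` to incoming queries to separate forwarded requests from iterative ones, under the assumption stated in the table.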

| Round | FDNS R2 # | FDNS R1 # | dRDNS R2 # | dRDNS R1 # | iRDNS R2 # | iRDNS R1 # |
|---|---|---|---|---|---|---|
| 1 | 2,422,618 | 5,582,383 | 51,223 | 58,028 | 55,109 | 64,136 |
| 2 | 2,045,257 | 5,309,418 | 42,471 | 55,116 | 51,978 | 63,410 |
| 3 | 2,327,287 | 5,217,285 | 48,324 | 54,712 | 54,698 | 63,988 |
| 4 | 2,331,808 | 5,250,101 | 47,498 | 54,969 | 54,007 | 63,535 |
| 5 | 2,370,049 | 5,266,898 | 49,224 | 55,805 | 54,640 | 63,692 |
| 6 | 2,256,663 | 5,205,243 | 44,935 | 55,009 | 53,250 | 64,128 |
| 7 | 2,288,812 | 5,129,975 | 47,156 | 54,139 | 54,017 | 63,185 |
| 8 | 2,376,106 | 5,293,396 | 46,327 | 55,387 | 53,732 | 63,917 |
| 9 | 2,350,699 | 5,117,815 | 44,914 | 52,881 | 53,150 | 63,071 |
| 10 | 2,339,042 | 5,179,783 | 48,873 | 55,071 | 54,535 | 63,376 |

| DNS SP | Resolver /24 # | Resolver # | Resolvers per /24 | FDNS # |
|---|---|---|---|---|
| | 110 | 2274 | 20.67 | 4,272,808 |
| 114DNS | 155 | 625 | 4.03 | 2,877,600 |
| Level3 | 74 | 937 | 12.66 | 306,539 |
| Cloudflare | 263 | 8593 | 32.67 | 284,188 |
| OpenDNS | 42 | 419 | 9.98 | 257,962 |
| Total | 644 | 12,848 | 19.95 | 7,646,226 |
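
The per-/24 column in the table above is the number of resolver addresses divided by the number of distinct /24 prefixes they fall into (for example, 625 / 155 ≈ 4.03 for 114DNS). The sketch below shows how such a figure could be derived from a raw list of IPv4 resolver addresses. The function name is hypothetical and the sample addresses come from documentation ranges, not from the measurement data.

```python
import ipaddress
from collections import defaultdict


def resolvers_per_slash24(resolver_ips):
    """Group IPv4 addresses by /24 and return (prefixes, addresses, ratio)."""
    groups = defaultdict(set)
    for ip in resolver_ips:
        # strict=False masks the host bits, giving the enclosing /24 network.
        net = ipaddress.ip_network(f"{ip}/24", strict=False)
        groups[net].add(ip)
    n_ips = sum(len(members) for members in groups.values())
    ratio = n_ips / len(groups) if groups else 0.0
    return len(groups), n_ips, ratio


sample = ["192.0.2.1", "192.0.2.9", "198.51.100.7"]
print(resolvers_per_slash24(sample))  # (2, 3, 1.5)
```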

| Data Type | # |
|---|---|
| domain name | 210,446,494 |
| name server | 2,786,562 |
| name server zone | 1,123,511 |
| name server IP | 754,404 |

| Name Server Provider | NS # | IP # | Domain Name # | Invalid Rate | ASO |
|---|---|---|---|---|---|
| GoDaddy | 180 | 97 | 50,807,891 | 0.44 | Host Europe GmbH; GO-DADDY |
| Cloudflare | 1500 | 2821 | 9,420,340 | 0.32 | CLOUDFLARENET |
| DNS.COM | 94 | 74 | 6,826,290 | 0.39 | |
| | 95 | 36 | 6,716,254 | 0.58 | |
| Namecheap | 307 | 132 | 6,713,315 | 0.26 | |
| HiChina | 70 | 113 | 5,700,130 | 0.40 | Hangzhou Alibaba Advertising Co., Ltd. |
| Wix | 139 | 12 | 5,463,090 | 0.03 | |
| IONOS | 607 | 568 | 4,067,655 | 0.08 | IONOS SE; Fasthosts Internet Limited |
| NameBright | 20 | 46 | 4,057,960 | 0.10 | Google; AMAZON-AES |
| Network Solutions | 108 | 24 | 2,472,561 | 0.07 | CLOUDFLARENET; NETWORK-SOLUTIONS-HOSTING |
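
The top-10 share quoted in the introduction can be cross-checked from the domain counts in the table above and the 210,446,494 domain names in the dataset summary. The sketch below does that arithmetic, assuming the dataset total is the intended denominator. The variable and function names are hypothetical.

```python
TOTAL_DOMAINS = 210_446_494  # "domain name" row of the dataset summary table

# Domain Name # column for the ten listed providers, in table order.
TOP10_DOMAIN_COUNTS = [
    50_807_891, 9_420_340, 6_826_290, 6_716_254, 6_713_315,
    5_700_130, 5_463_090, 4_067_655, 4_057_960, 2_472_561,
]


def top_k_share(counts, total, k=10):
    """Fraction of `total` accounted for by the k largest entries in `counts`."""
    return sum(sorted(counts, reverse=True)[:k]) / total


share = top_k_share(TOP10_DOMAIN_COUNTS, TOTAL_DOMAINS)
print(f"{share:.4f}")
```

The result falls between 0.485 and 0.486, which is consistent with the 48.5% figure in the introduction.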

| IP Provider | IP # | NS Provider # |
|---|---|---|
| OVH SAS | 55,693 | 70,097 |
| UNIFIEDLAYER-AS-1 | 45,629 | 40,423 |
| Hetzner Online GmbH | 28,441 | 32,507 |
| AMAZON-02 | 14,013 | 25,847 |
| DIGITALOCEAN-ASN | 13,468 | 18,649 |
| LIQUIDWEB | 12,298 | 12,751 |
| PRIVATESYSTEMS | 11,343 | 8036 |
| IONOS SE | 10,130 | 9874 |
| CLOUDFLARENET | 10,071 | 13,127 |
| Linode, LLC | 9329 | 11,199 |

| Combination of NS Providers | Domain Name # | Shared Name Server | Shared IP Provider |
|---|---|---|---|
| nsone.net; squarespacedns.com | 1,393,701 | - | - |
| dnsnw.com; nagor.cn; nagor.com.cn | 53,756 | DNSPOD.NET | - |
| dan.com; undeveloped.com | 29,444 | CLOUDFLARE.COM | AMAZON-02 |
| worldnic.com; register.com | 19,346 | - | NETWORK SOLUTIONS HOSTING |
| 72dns.com; idc1.cn | 15,882 | 72DNS.COM | WeiYi Network Technology Co.,Ltd |
| beonintermedia.com; jagoanweb.com; jagoanhosting.com | 14,258 | - | PT. Beon Intermedia |
| cdnhost.cn; dnsfamily.com | 12,246 | DNSFAMILY.COM | Chinanet |
| ultradns.{org; com; net; biz}; nsone.net | 12,023 | - | - |
| nsone.net; cloudflare.com | 11,798 | - | - |
| babies-fluffy.com; form-potato.com | 8818 | DNSV.JP | ARTERIA Networks Corporation |

| Rcode | # |
|---|---|
| 5 (REFUSED) | 545,941 |
| 0 (NOERROR) | 51,216 |
| 2 (SERVFAIL) | 12,095 |
| 3 (NXDOMAIN) | 3517 |
| 9 (NOTAUTH) | 481 |
| 4 (NOTIMP) | 42 |
| 10 (NotZone) | 4 |
| 1 (FORMERR) | 1 |
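
The Rcode counts above are easier to compare as shares of all responses. The sketch below converts the tabulated counts into fractions. The dictionary and function names are hypothetical, and the counts are copied from the table rather than recomputed from raw data.

```python
# Response counts per DNS Rcode, copied from the table above.
RCODE_COUNTS = {5: 545_941, 0: 51_216, 2: 12_095, 3: 3_517,
                9: 481, 4: 42, 10: 4, 1: 1}

# Standard Rcode mnemonics for the values that appear in the table.
RCODE_NAMES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL", 3: "NXDOMAIN",
               4: "NOTIMP", 5: "REFUSED", 9: "NOTAUTH", 10: "NOTZONE"}


def rcode_shares(counts):
    """Map each Rcode mnemonic to its fraction of all responses."""
    total = sum(counts.values())
    return {RCODE_NAMES.get(code, str(code)): n / total
            for code, n in counts.items()}


shares = rcode_shares(RCODE_COUNTS)
for name, frac in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:9s} {frac:.4%}")
```

With these counts, REFUSED accounts for roughly 89% of the 613,297 responses, and NOERROR for about 8%.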

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, C.; Zhang, Y.; Shi, F.; Shan, H.; Guo, B.; Li, Y.; Xue, P. Measuring the Centrality of DNS Infrastructure in the Wild. Appl. Sci. 2023, 13, 5739. https://doi.org/10.3390/app13095739
