Featured Application

Pepper, the social robot, offers various capabilities, and exploring its features and functionalities can provide valuable insights into its strengths and into the limitations that may require improvement. In this paper, five capabilities are investigated: mapping and navigation, speech, hearing, object detection, and face detection. All of them can be employed in various settings, such as schools, hospitals, and the service industry, to enhance the user experience.

Abstract

The use of social robots is increasing steadily owing to their various capabilities. In real settings, social robots have been successfully deployed in multiple domains, such as health, education, and the service industry. However, it is crucial to identify the strengths and limitations of a social robot before employing it in a real-life scenario. In this study, we explore and examine the capabilities of ‘Pepper’, a humanoid robot that can be programmed to interact with humans. The present paper investigates five capabilities of Pepper: mapping and navigation, speech, hearing, object detection, and face detection. We study each of these capabilities in depth through experiments conducted in the laboratory. Pepper’s sound and speech recognition capabilities yielded satisfactory results, even with various accents. On the other hand, Pepper’s built-in SLAM navigation is unreliable: the maps it generates make it difficult to reach destinations accurately. Moreover, its object and face detection capabilities delivered inconsistent outcomes. These results show that Pepper’s current capabilities leave room for improvement. Previous studies have shown that a social robot’s capabilities can be enhanced significantly by integrating artificial intelligence techniques. In the future, we will focus on such integration in the Pepper robot, and the present study will help establish a baseline understanding of Pepper’s built-in artificial intelligence. The findings of the present paper provide insights for researchers and practitioners planning to use the Pepper robot in their future work.

1. Introduction

Social robots play a valuable role in communicating with humans and helping them with daily tasks by understanding human intelligence and behavior. Robots can also be used for entertainment [1]. Achieving this competence requires the integration of various algorithms [2] and sensors [3]. Advances in robotics are enhancing robots’ capabilities for cognition and for interaction with people [4]. To adequately assist humans in social and work-related situations, robots must not only be cognitively sound but also able to recognize human emotions. They need a broad spectrum of social abilities and an understanding of human behavior based on implicit knowledge of how human brains work [5]. Humanoid robots are a group of technologically advanced social robots that resemble humans in shape or behavior to a certain extent [6]. The humanoid robot Pepper is popular in the field of social robotics.

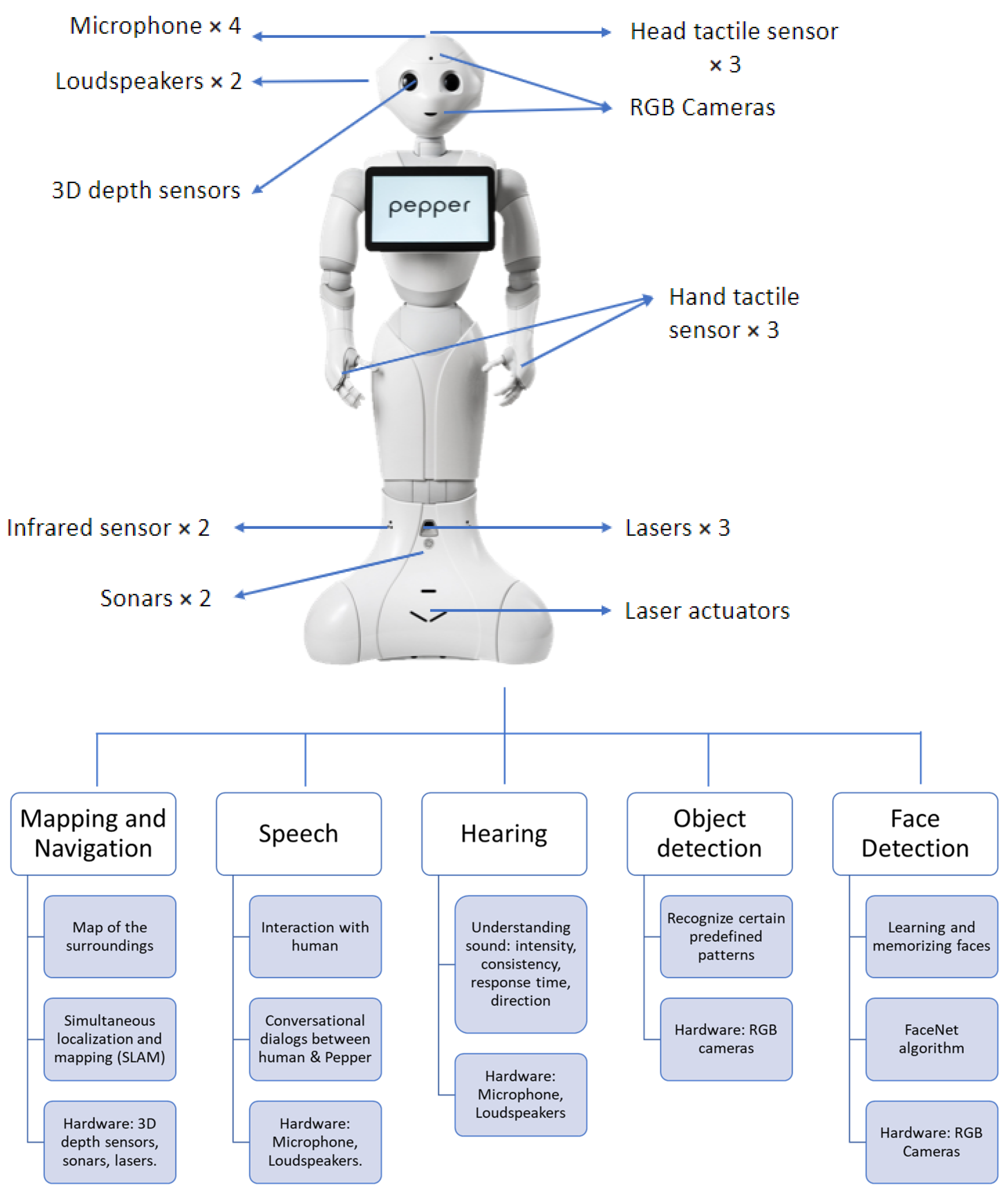

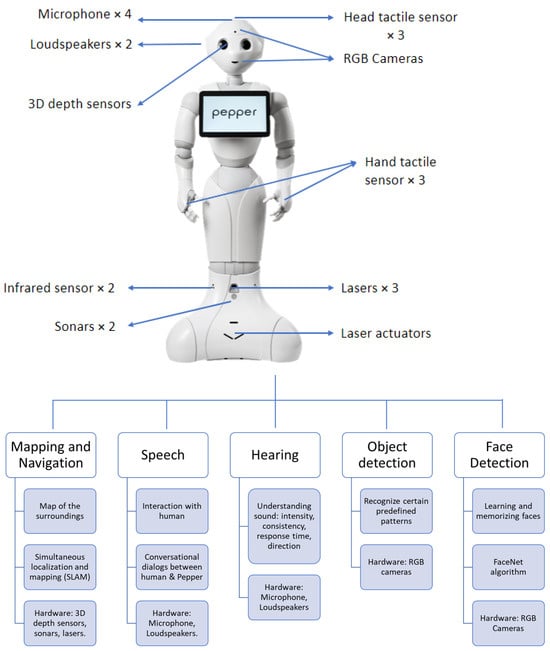

Pepper was developed by SoftBank Robotics in Paris in 2014 [7]. Pepper is 1.2 m tall and is equipped with three wheels and 17 joints. The wheels allow it to move smoothly, and the joints give it elegant, lifelike gestures. Its long-life battery allows it to work comfortably for 12 h, and it can be recharged when needed [7]. Pepper perceives its environment through various sensors, such as microphones and cameras, which underpin the functionalities it uses to assist humans. The five functionalities of Pepper covered in the present paper are shown in Figure 1.

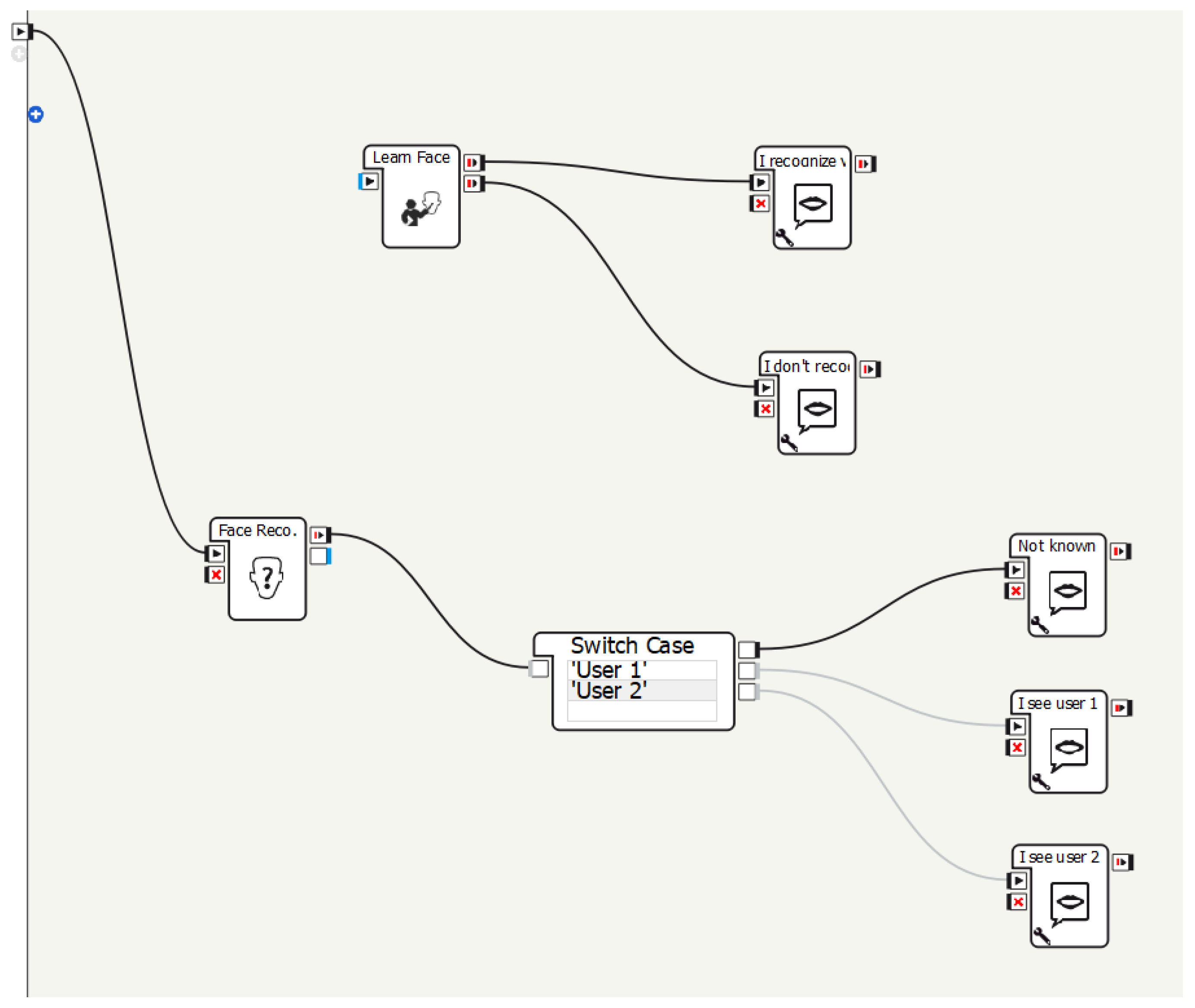

Figure 1.

Pepper’s functionalities and components.

Numerous studies have been conducted with Pepper since its release. Several of them report Pepper working successfully as a companion or guide in a variety of settings, such as museum guides [8], elderly care [9], automated tours [10], service robots [11], and education [12]. It can also support children’s education by engaging them in educational games [13]. These studies have demonstrated favorable outcomes and, to a certain extent, acceptance among users. Although Pepper has immense potential for real-world applications, this potential can be difficult to realize in a real setting, depending on the environment and on the limitations of Pepper’s functionalities. These functionalities can be combined for specific tasks. For example, Pepper as a teaching assistant or campus guide could roam around and help people through more personalized interactions. Pepper could also provide a supportive or compassionate message according to the user’s reactions.

To the best of our knowledge, only a few studies have examined the accuracy of several of Pepper’s functionalities together through experimentation. Ghita et al. [14] focused on various functionalities of Pepper, such as navigation and obstacle avoidance, speech, and dialogue management. Gardecki and Podpora [15] reported their experiences working with Pepper and compared it with the NAO robot; modules such as people perception, dialogue, and movement were explored with acceptable results, but the tests involved only simple movements and dialogues. Draghici et al. [8] described only the capabilities related to face and picture recognition and did not include a validation. This study therefore aims to provide a realistic assessment of Pepper’s features and their practicality in real-world scenarios. This paper reports the results of a series of experiments conducted with Pepper to evaluate the efficiency and response behavior of its functionalities, namely navigation, speech and hearing, and object and face detection.

2. Related Works

Previous studies have used various functionalities of Pepper, such as hearing and speech, navigation, and object and face detection. Hearing and speech are important for social robots because they help build a connection between a user and a robot. Pepper was designed to understand human speech through speech recognition and to respond accordingly, and it is equipped with microphones and loudspeakers. Pepper is also aware of its surroundings: when it hears a sound (a clap or a voice), it orients itself toward the person who made the sound and establishes and maintains eye contact with that person. Carros et al. [16] found that Pepper’s speech recognition was not always accurate with elderly people, and Ekstrom et al. [17] noted that it had difficulty interacting with children. Nevertheless, children were found to be motivated and engaged in Pepper’s presence [18]. Pande et al. [19] demonstrated challenges related to speech recognition and proposed integrating Pepper with Whisper (Version 1.0), a speech recognition system, as a potential aid for students struggling to understand a teacher’s accent.

Pepper’s navigation capabilities enable it to move around its environment, determine its position within a reference frame, and find safe routes to a specific destination. Most robot navigation systems use simultaneous localization and mapping (SLAM), which maps the environment and locates objects within it to produce a safety map [14]. Alhmiedat et al. [20] highlighted problems with Pepper’s navigation system. Gomez et al. [21] noted that, because of its short-range LIDARs and RGB camera, Pepper cannot self-localize in larger environments. Silva et al. [22] studied Pepper’s navigation with and without obstacle avoidance and showed that its success rate is higher without obstacles. Ardon et al. [23] combined SLAM with object recognition to enhance Pepper’s capabilities in indoor environments.

Pepper is equipped with 3D depth sensors and cameras, which support navigation, object recognition, and face recognition. Pepper can locate and identify predefined patterns called NAOMarks [24]. These are circular patterns, each encoding a unique identifier, and Pepper can execute commands based on the NAOMark it identifies. NAOMarks can be placed on specific items or at specific locations in the area Pepper is mapping, either to improve object detection or the robot’s self-localization, or to trigger a specific instruction. Examples include warnings about dangerous objects or areas to be avoided (edges, difficult terrain), objects to be sought, or corners, columns, and tables that may hinder Pepper’s mapping progress.

Pepper has a built-in face detection functionality. Each potential face is first captured by one of Pepper’s cameras and then transmitted to a local machine for detection. Pepper can also recognize various traits, with tools such as age detection and basic emotion recognition embedded in its framework. Gardecki et al. [25] reported that the built-in face detection is not useful in real scenarios. Other studies have combined Pepper with artificial intelligence techniques for face detection: Greco et al. [26] integrated a Jetson TX2 with Pepper to analyze facial emotions using deep learning, and, similarly, Reyes et al. [27] added a Jetson TX1 and a battery to Pepper to identify objects in images.

3. Methodology

Pepper’s functionalities (see Table 1) were programmed using Python and Choregraphe [28]. The tests were carried out in a research laboratory at NTNU using a laptop running Windows 10, with an Intel Core i5-10300H CPU @ 2.5 GHz, 8 GB RAM, and a 512 GB hard drive.

Table 1.

Services used to implement different functionalities of Pepper.

3.1. Mapping and Navigation

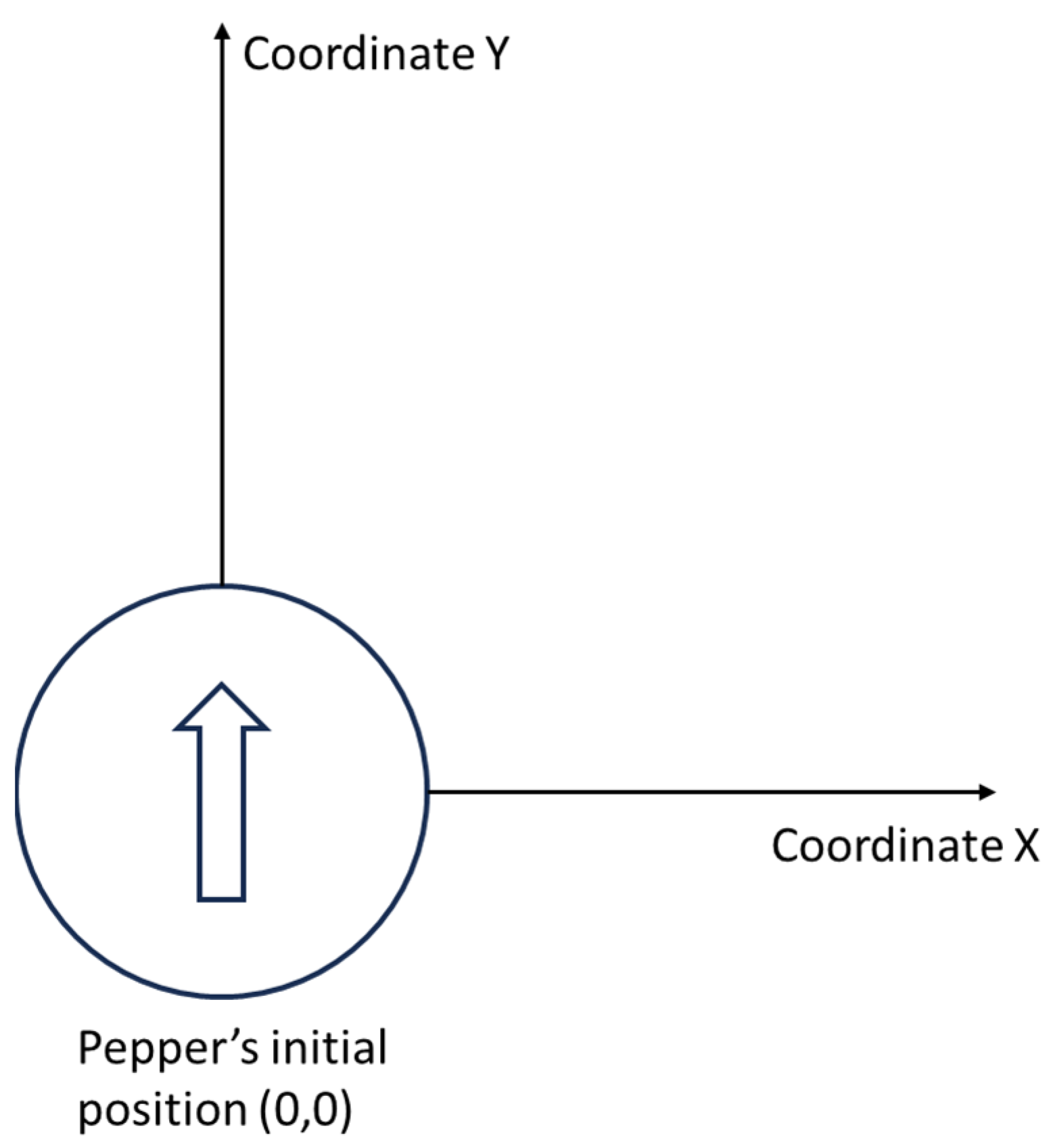

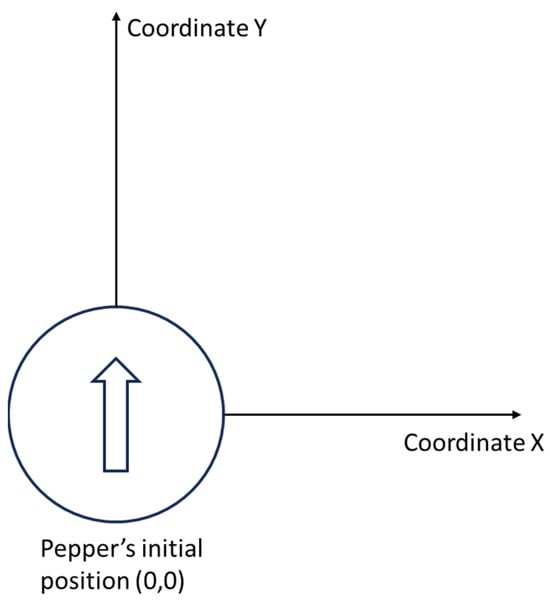

Pepper’s SLAM functionality is provided by the ALNavigation module, which has two components: the Exploration API and the Navigation API. The Exploration API uses Pepper’s odometry and lasers to perform SLAM. The only parameter that needs to be specified is the radius of the area to be explored. Within that radius, Pepper moves around and maps its surroundings, generating a safety map in the form of an array of triplets (X, Y, Obs). Pepper’s coordinate system is illustrated in Figure 2. The first two values of each triplet are the spatial coordinates (X, Y) of an explored point, and the third value (Obs) indicates the presence (1) or absence (0) of an obstacle. Pepper’s starting point becomes the origin (0, 0), and the orientation of the X and Y axes is defined by Pepper’s initial orientation before the exploration starts. This array can be used to generate an image of the mapped area.
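To make the (X, Y, Obs) format concrete, the sketch below rasterizes such an array into an occupancy grid and prints it as ASCII, which is one simple way to obtain the map image mentioned above. It assumes the samples are available in Python as (X, Y, Obs) tuples in metres with Obs in {0, 1}, and that the grid resolution is chosen by the user; `safety_map_to_grid` and `render` are hypothetical names, and no NAOqi call is involved.

```python
from typing import Iterable, List, Tuple

# One safety-map sample as described above: (X, Y, Obs), X and Y in metres,
# Obs = 1 for an obstacle and 0 for free space.
Cell = Tuple[float, float, int]


def safety_map_to_grid(
    cells: Iterable[Cell], resolution: float
) -> Tuple[List[List[int]], Tuple[int, int]]:
    """Rasterize (X, Y, Obs) samples into a 2D grid.

    Returns the grid (row 0 holds the largest Y, so +Y points up when printed)
    and the (row, col) index of Pepper's starting point (0, 0).
    Cells without any sample are marked -1 (unknown).
    """
    cells = list(cells)
    if not cells:
        raise ValueError("empty safety map")
    xs = [round(x / resolution) for x, _, _ in cells]
    ys = [round(y / resolution) for _, y, _ in cells]
    # include the origin so that Pepper's start always lies inside the grid
    min_x, max_x = min(xs + [0]), max(xs + [0])
    min_y, max_y = min(ys + [0]), max(ys + [0])
    grid = [[-1] * (max_x - min_x + 1) for _ in range(max_y - min_y + 1)]
    for ix, iy, (_, _, obs) in zip(xs, ys, cells):
        row, col = max_y - iy, ix - min_x
        # a cell counts as an obstacle if any sample falling in it reported one
        grid[row][col] = max(grid[row][col], int(obs))
    return grid, (max_y, -min_x)


def render(grid: List[List[int]]) -> str:
    """ASCII view of the grid: '#' obstacle, '.' free, ' ' unknown."""
    symbols = {-1: " ", 0: ".", 1: "#"}
    return "\n".join("".join(symbols[v] for v in row) for row in grid)
```

Writing the same grid to an image file instead of ASCII would only change `render`; the rasterization step stays the same.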

Figure 2.

Pepper’s coordinate system in 2D.

The Navigation API allows Pepper to localize itself and move along safe trajectories based on an obstacle map of its near environment (the safety map), rather than avoiding obstacles directly with its sensors. The navigation function, move_to(), takes as parameters the coordinates X and Y of the desired point in the map and a value θ, the desired final orientation.
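Because a goal placed on an obstacle or outside the explored area cannot be reached, it may help to check the target against the safety map before calling the navigation function. The following is a minimal sketch under stated assumptions: the map is held as a dictionary from integer grid cells to Obs values, `checked_goal` and `normalize_angle` are hypothetical helpers, and the returned triple is what one would pass to move_to(). It does not reproduce any check performed by the robot itself.

```python
import math
from typing import Dict, Tuple


def normalize_angle(theta: float) -> float:
    """Wrap an angle in radians into [-pi, pi)."""
    return (theta + math.pi) % (2 * math.pi) - math.pi


def checked_goal(
    x: float,
    y: float,
    theta: float,
    occupancy: Dict[Tuple[int, int], int],
    resolution: float,
) -> Tuple[float, float, float]:
    """Return (x, y, theta) only if the goal cell is explored and free.

    occupancy maps integer cells (round(X / resolution), round(Y / resolution))
    to the Obs value of the safety map (1 = obstacle, 0 = free).
    """
    cell = (round(x / resolution), round(y / resolution))
    state = occupancy.get(cell)
    if state is None:
        raise ValueError(f"goal cell {cell} is outside the explored area")
    if state == 1:
        raise ValueError(f"goal cell {cell} is marked as an obstacle")
    return x, y, normalize_angle(theta)
```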

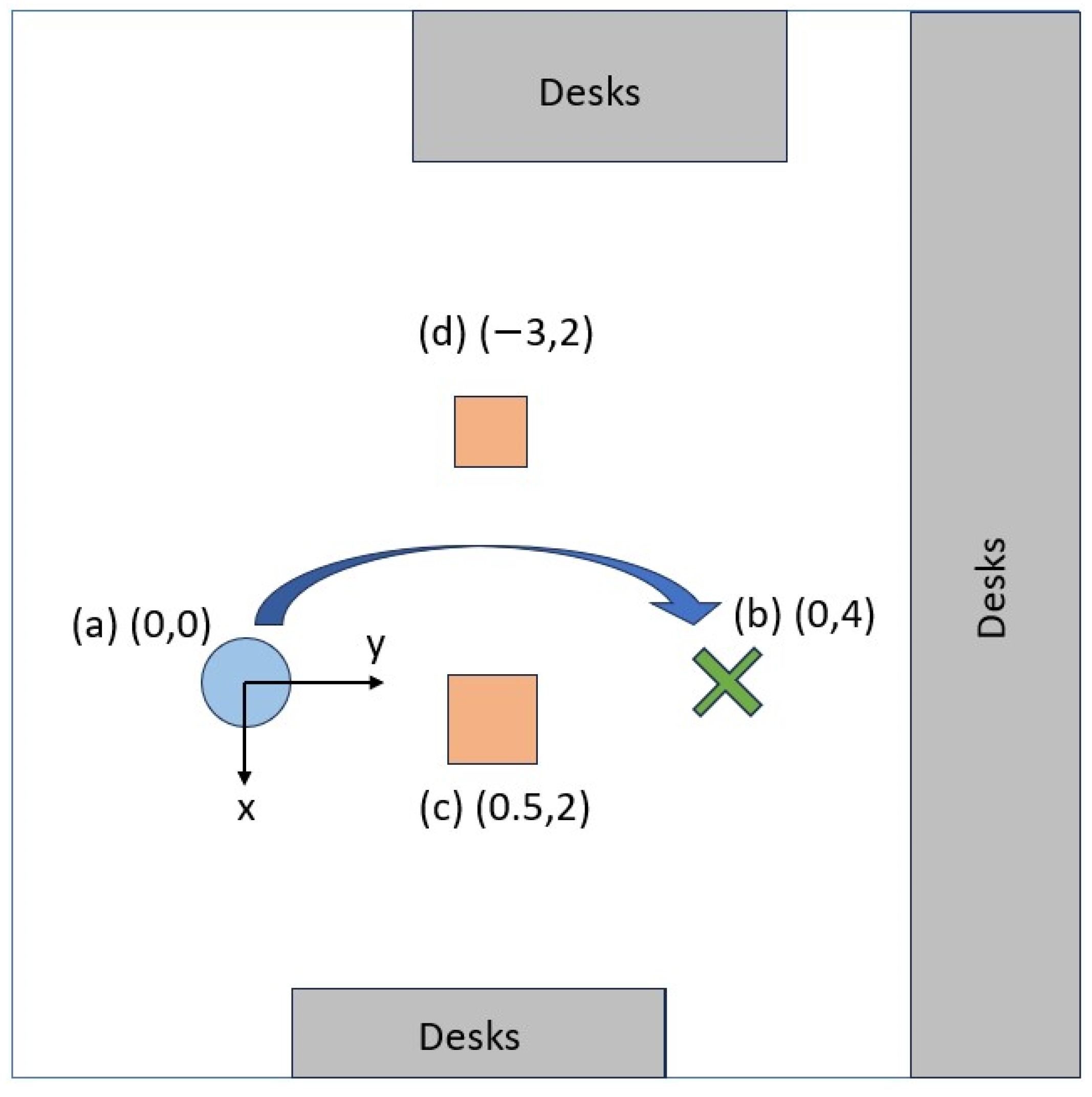

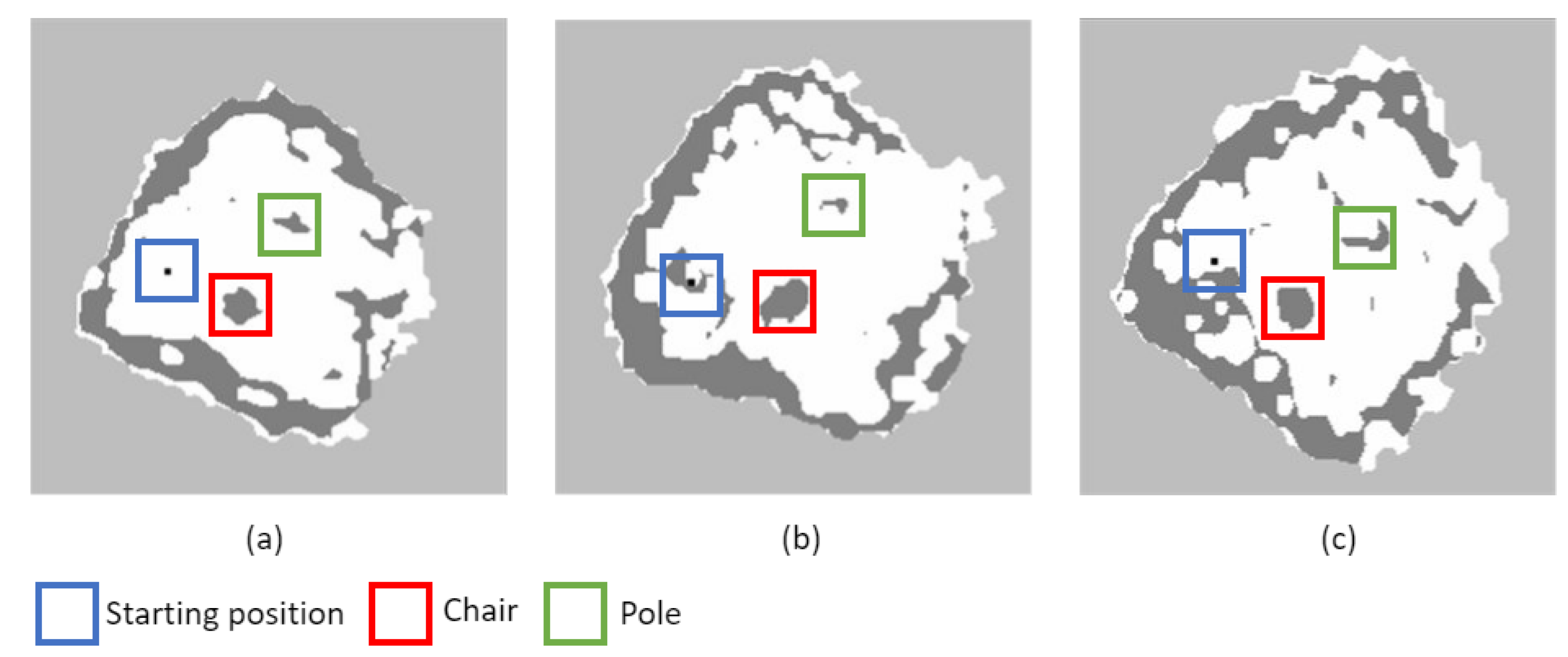

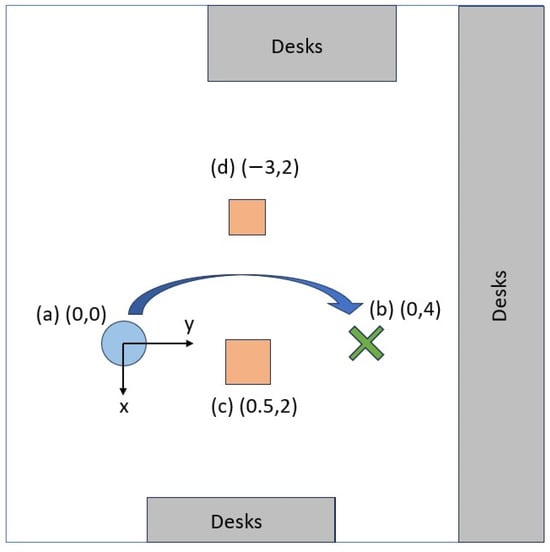

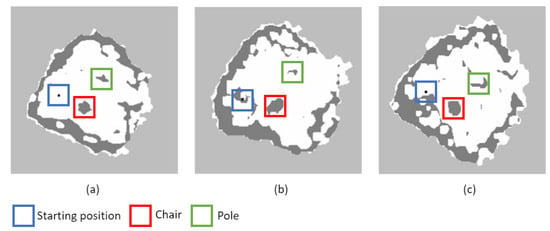

To test mapping and navigation functions, the experiment was conducted with Pepper in an 8 × 8 m room. The room had obstacles, such as desks and chairs next to the walls, one pole in the middle, and a chair that was placed about 2 m in front of Pepper. Figure 3 presents the initial setup of the laboratory where the experiment was conducted.

Figure 3.

Initial setup. (a) Pepper’s initial position, (b) Intended Final Position, (c) Chair, (d) Pole.

Each test evaluated Pepper’s navigation after the exploration function had been run. Exploration and navigation were each performed three times to compare the results. The exploration radius was set to 4 m, and a mark was painted on the floor so that Pepper started each run from the same position and orientation. All three tests were conducted under the same lighting conditions in a quiet, controlled room. Pepper’s destination was defined 4 m straight ahead (see Figure 3), and Pepper was asked to navigate to that coordinate using the generated maps. Note that this study uses and tests Pepper’s built-in SLAM module without any modifications.

3.2. Speech Capability of Pepper

Pepper’s speech capabilities were assessed through four tasks, each targeting a different aspect of its speech recognition and generation: handling simultaneous inputs, perceiving speech from varying distances, responding consistently, and understanding diverse accents.

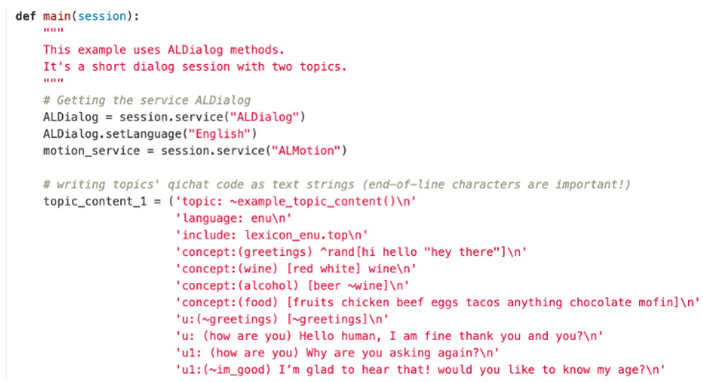

Speech capability was evaluated mainly using the ALDialog service. The session with Pepper was initiated by connecting to it via its IP address, and Python libraries such as qi, argparse, and sys were used. Two types of rules can be used to realize speech functionality. The first is the ‘User rule’, which links a specific user input to a robot output. The second is the ‘Proposal rule’, which triggers a specific robot output without any preceding user input. Both rule types are used in this study. The rules were further grouped into topics to manage the conversation. First, a short dialogue session was coded with two topics (each a collection of related information), described below. The ALDialog service was fetched and its language set to English. Topic 1 (example topic content) contains a basic conversation, including some concepts, with two levels of conversational depth (Appendix A).
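The difference between the two rule types can be illustrated with a toy model in plain Python. This is not QiChat syntax and not the ALDialog engine: the class `ToyTopic` and its methods are hypothetical. Concepts are expanded into regular-expression alternations, user rules answer a matching input, and proposals are queued for the robot to say on its own initiative.

```python
import re
from typing import Dict, List, Optional, Tuple


class ToyTopic:
    """Toy model of a dialogue topic (not QiChat, not ALDialog).

    User rules map a recognized input to a robot output; proposal rules
    are spoken on the robot's own initiative, without a preceding input.
    """

    def __init__(self) -> None:
        self.concepts: Dict[str, List[str]] = {}
        self.user_rules: List[Tuple[re.Pattern, str]] = []
        self.proposals: List[str] = []

    def concept(self, name: str, words: List[str]) -> None:
        self.concepts[name] = words

    def user_rule(self, pattern: str, response: str) -> None:
        # expand each "~name" into an alternation of that concept's words
        def expand(m: re.Match) -> str:
            return "(?:" + "|".join(re.escape(w) for w in self.concepts[m.group(1)]) + ")"

        regex = re.sub(r"~(\w+)", expand, pattern)
        self.user_rules.append((re.compile(f"^{regex}$", re.IGNORECASE), response))

    def proposal(self, text: str) -> None:
        self.proposals.append(text)

    def reply(self, utterance: str) -> Optional[str]:
        text = utterance.strip().rstrip("?!.")
        for rule, response in self.user_rules:
            if rule.match(text):
                return response
        return None  # no user rule matched, so the robot stays silent

    def next_proposal(self) -> Optional[str]:
        return self.proposals.pop(0) if self.proposals else None
```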

The dialogue engine was subscribed once at the beginning for all loaded topics using the ALDialog.subscribe function. The conversation ends when the ‘ENTER’ key is pressed, and the topics can then be deactivated and unloaded using ALDialog.deactivateTopic and ALDialog.unloadTopic, respectively. Additionally, we used the ALMotion service to control Pepper’s gestures and posture during general conversation, making it look natural and human-like.
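The load, activate, subscribe, and clean-up steps described above can be wrapped in a context manager so that the topics are released even if the script is interrupted. This is a sketch, assuming `dialog` is the ALDialog service object obtained from a qi session and that the topic files already exist on the robot. setLanguage, loadTopic, activateTopic, subscribe, unsubscribe, deactivateTopic, and unloadTopic are ALDialog methods, but their exact behaviour should be checked against the NAOqi version in use; `dialog_session` and the subscriber name are hypothetical. The code is written for Python 3; the older NAOqi 2.5 Python SDK targets Python 2.7, where the type hints would have to be removed.

```python
from contextlib import contextmanager
from typing import Iterator, List, Sequence


@contextmanager
def dialog_session(
    dialog,
    topic_paths: Sequence[str],
    subscriber: str = "speech_test",
    language: str = "English",
) -> Iterator[List[str]]:
    """Load, activate, and subscribe topics; always undo this on exit."""
    dialog.setLanguage(language)
    # loadTopic returns the topic name declared inside each .top file
    names = [dialog.loadTopic(path) for path in topic_paths]
    for name in names:
        dialog.activateTopic(name)
    dialog.subscribe(subscriber)  # one subscription serves all loaded topics
    try:
        yield names
    finally:
        dialog.unsubscribe(subscriber)
        for name in names:
            dialog.deactivateTopic(name)
            dialog.unloadTopic(name)
```

On the robot, the ENTER-to-stop behaviour could then be reproduced with something like `with dialog_session(session.service("ALDialog"), ["/home/nao/topic1.top"]): input("Press ENTER to stop")`, where the topic path is a placeholder.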

Topic 2: Touching. This dialogue embeds an event: when Pepper’s head is touched, a dance routine starts, and the topic then concludes with a brief conversation asking the user what they think of the movements. The touch is detected through an ‘OR’ over the events e:FrontTactilTouched, e:MiddleTactilTouched, and e:RearTactilTouched, which are triggered when the corresponding tactile sensors on Pepper’s head are touched. The body movements are defined in a script as three lists used by the ALMotion API: names (the joints to move, such as ‘LElbowRoll’), times (when each keyframe is reached), and keys (the joint angles at each keyframe). Based on the conversation, named actions are then called within the user-defined ‘dance’ function, which choreographs Pepper’s movements.
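The names/times/keys structure can be shown with a small, hypothetical wave gesture. In the sketch below, `wave_keyframes` and `dance` are illustrative names, the angles are example values that should be checked against Pepper’s joint limits before use, and `motion` is assumed to be the ALMotion service. ALMotion.angleInterpolation takes its arguments in the order names, angles, times, isAbsolute, so the keys list is passed before the times list; the script used in this study may have relied on a different ALMotion call.

```python
from typing import List, Tuple

Keyframes = Tuple[List[str], List[List[float]], List[List[float]]]


def wave_keyframes(repeats: int = 2, period: float = 1.0) -> Keyframes:
    """Hypothetical left-arm wave as (names, times, keys).

    times[i][j] is when joint names[i] reaches angle keys[i][j] (radians),
    in seconds from the start of the motion.
    """
    names = ["LShoulderPitch", "LElbowRoll"]
    shoulder_times, shoulder_keys = [period], [-0.5]  # raise the arm forward
    elbow_times: List[float] = []
    elbow_keys: List[float] = []
    for i in range(repeats):  # bend and straighten the elbow once per repeat
        elbow_times += [period * (i + 1), period * (i + 1.5)]
        elbow_keys += [-1.2, -0.4]
    return names, [shoulder_times, elbow_times], [shoulder_keys, elbow_keys]


def dance(motion, names, times, keys) -> None:
    """Validate the keyframes, then play them with absolute joint angles."""
    if not (len(names) == len(times) == len(keys)):
        raise ValueError("names, times and keys must have the same length")
    for name, t, k in zip(names, times, keys):
        if len(t) != len(k) or any(b <= a for a, b in zip(t, t[1:])):
            raise ValueError(f"invalid keyframes for {name}")
    # argument order: names, angle lists, time lists, isAbsolute
    motion.angleInterpolation(names, keys, times, True)
```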

The evaluation method comprised a structured series of tasks and interactions aimed at assessing Pepper’s speech capabilities. In each task, Pepper received specific questions or statements, with the number of repetitions logged to ensure evaluation consistency. Observations focused on various aspects of Pepper’s response, noting instances of no response or confusion, assessing response times to stimuli, analyzing consistency in Pepper’s replies across multiple trials, and observing its proficiency in managing simultaneous interactions or inputs. These observations provided a comprehensive understanding of Pepper’s performance in various speech-related scenarios.
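The observations listed above can be reduced to a few numbers per task. The sketch below shows one possible way to do so and is not the procedure used to produce Table 2: each trial records the prompt, Pepper’s reply (None for no response), and the response latency, and consistency is taken as the share of answers that match the most common answer to the same prompt. The `Trial` and `summarize` names and the example values in the test are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Trial:
    prompt: str                 # what was said to Pepper
    response: Optional[str]     # Pepper's reply, None if it did not respond
    latency_s: Optional[float]  # seconds until the reply, None if no reply


def summarize(trials: List[Trial]) -> Dict[str, Optional[float]]:
    answered = [t for t in trials if t.response is not None]
    latencies = [t.latency_s for t in answered if t.latency_s is not None]
    replies_by_prompt: Dict[str, List[str]] = {}
    for t in answered:
        replies_by_prompt.setdefault(t.prompt, []).append(t.response)
    # number of answers that agree with the most frequent answer to their prompt
    agreeing = sum(Counter(r).most_common(1)[0][1] for r in replies_by_prompt.values())
    return {
        "trials": len(trials),
        "response_rate": len(answered) / len(trials) if trials else None,
        "mean_latency_s": mean(latencies) if latencies else None,
        "consistency": agreeing / len(answered) if answered else None,
    }
```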

3.3. Hearing Capabilities of Pepper

Pepper’s hearing was evaluated through a structured sequence of activities and exchanges covering five tasks: multiple inputs, distance, consistency of response, response time, and elevation detection.

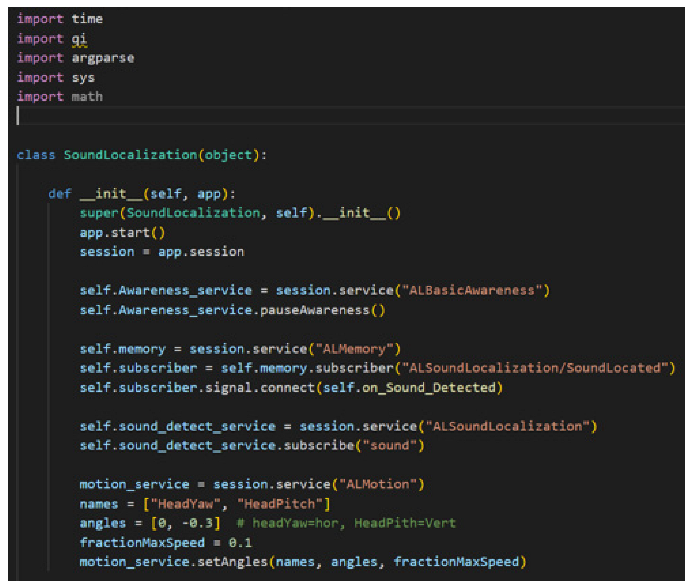

The experiment was designed to examine Pepper’s hearing, or sound perception. It involved estimating the origin of a sound, making Pepper rotate toward it, and then having Pepper move in that direction until it found an obstacle within the given radius.

First, the qi framework is initialized by giving the IP address and port in the connection URL. Then, a class is defined that implements the experimentation method (Appendix B). Pepper’s basic awareness makes it respond automatically to stimuli from its surrounding environment; for instance, Pepper uses this service to establish and maintain eye contact with people. For this test, therefore, the awareness is paused, and the ALMemory and ALSoundLocalization services are used to subscribe to the ‘sound located’ and ‘sound’ events. The ALMotion service is used to make Pepper move. The program runs until it is interrupted by a keyboard input, at which point it restores Pepper’s awareness via the service mentioned above. When a sound occurs, the callback onSoundDetected is triggered; it collects the coordinates of the sound origin and rotates Pepper by passing the corresponding angle as the theta parameter. If the rotation succeeds, it then guides Pepper toward the sound using the ALMotion service with the x and y parameters until Pepper encounters an obstacle within the given radius.
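The core of such a callback is converting the located sound into a rotation angle and a forward step. The function below is a hedged sketch of that step only. It assumes the event value follows the layout documented for ALSoundLocalization/SoundLocated, with value[1] holding [azimuth, elevation, confidence, energy] in radians, and it treats the azimuth as relative to the head, so the current head yaw is added (an assumption; the event also carries head poses that can be used to verify this). The confidence threshold and step length are arbitrary, and `plan_from_sound` is a hypothetical name.

```python
import math
from typing import Optional, Sequence, Tuple


def plan_from_sound(
    value: Sequence,
    head_yaw: float = 0.0,
    min_confidence: float = 0.5,
    step_m: float = 0.5,
) -> Optional[Tuple[float, float, float]]:
    """Convert a sound-location event into a turn and a forward step.

    Assumes value[1] == [azimuth, elevation, confidence, energy], angles in
    radians, azimuth measured from the head's heading (positive = left).
    Returns (theta, x, y), or None when the estimate is not confident enough.
    """
    azimuth, _elevation, confidence, _energy = value[1][:4]
    if confidence < min_confidence:
        return None
    bearing = azimuth + head_yaw  # direction relative to the robot base
    theta = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to (-pi, pi]
    # once rotated by theta, the source is straight ahead: step along +X
    return theta, step_m, 0.0
```

The resulting values could be passed to ALMotion.moveTo, first as moveTo(0, 0, theta) to turn and then as moveTo(x, y, 0) to advance.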

The evaluation method involved various experiments assessing Pepper’s response to different aspects of sound, including intensity, distance, consistency, response time, and elevation detection. These experiments aimed to determine Pepper’s accuracy, reliability, and speed in perceiving and responding to auditory stimuli from its surroundings.
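Direction accuracy in such experiments can be quantified by comparing the known bearing of each sound source with the direction Pepper turned to. A minimal sketch, assuming bearings are recorded in degrees in the robot’s frame and using an arbitrary 20° tolerance; the function names are hypothetical.

```python
from statistics import mean
from typing import Dict, Iterable, Tuple


def angular_error_deg(true_deg: float, est_deg: float) -> float:
    """Smallest absolute difference between two bearings, in degrees (0 to 180)."""
    return abs((est_deg - true_deg + 180.0) % 360.0 - 180.0)


def localization_summary(
    pairs: Iterable[Tuple[float, float]], tolerance_deg: float = 20.0
) -> Dict[str, float]:
    """pairs: (true bearing, bearing Pepper turned to) for each trial."""
    errors = [angular_error_deg(t, e) for t, e in pairs]
    return {
        "mean_error_deg": mean(errors),
        "max_error_deg": max(errors),
        "hit_rate": sum(e <= tolerance_deg for e in errors) / len(errors),
    }
```

Wrapping the difference matters: a source at 350° located at 10° is 20° off, not 340°.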

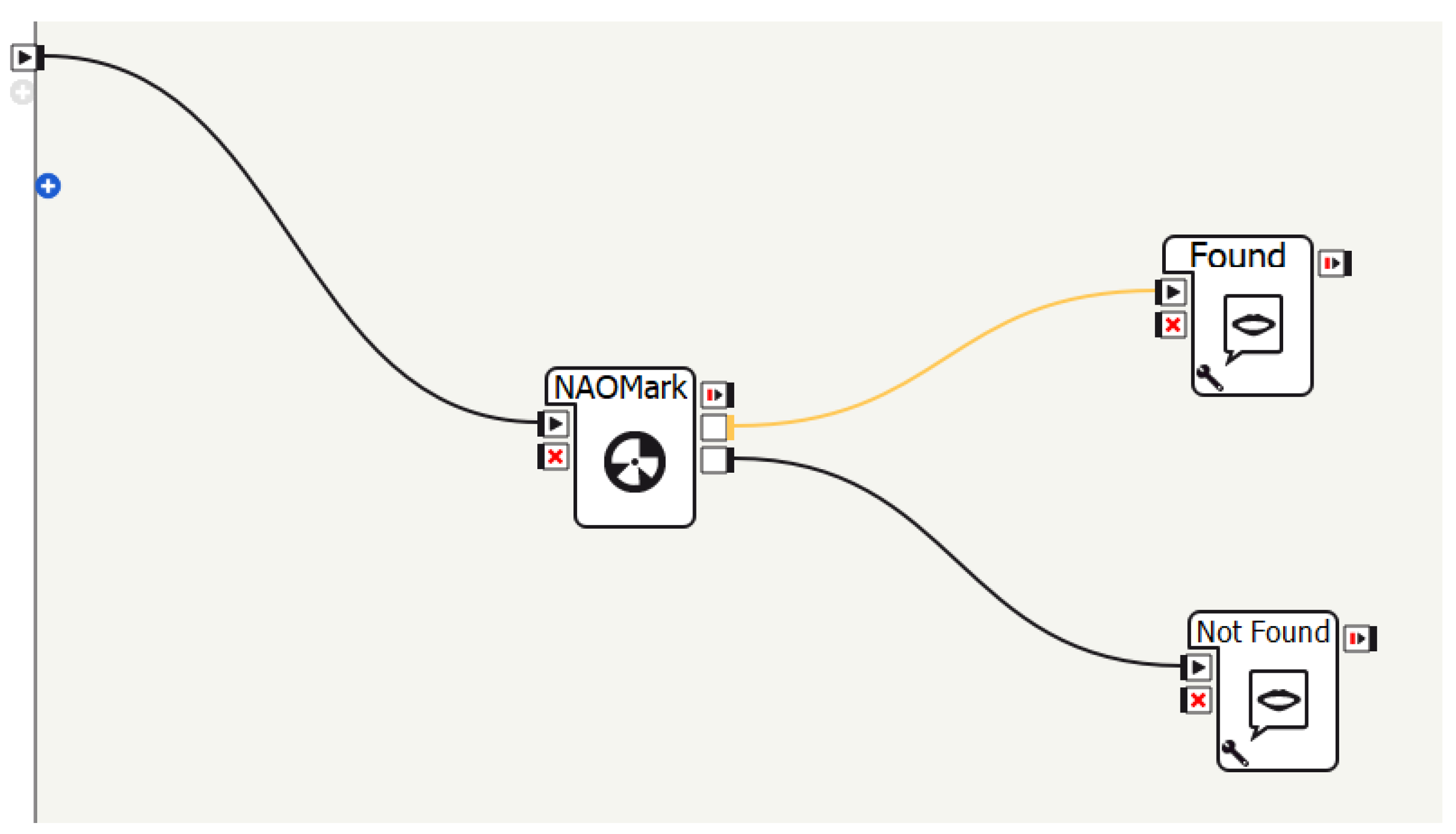

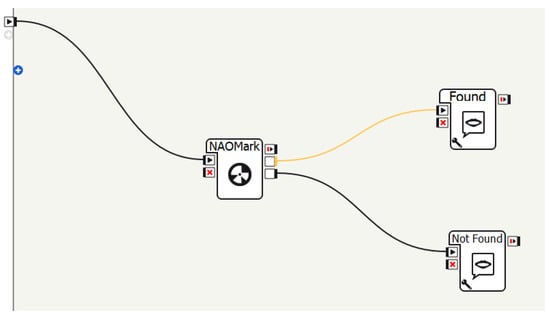

3.4. Pattern Recognition

The pattern recognition capability of Pepper was assessed using NAOMarks. Tasks were created to determine the appropriate distances for identifying NAOMarks of two different sizes. Detection relies mainly on the ALLandMarkDetection vision module, which is preinstalled in Pepper and can be accessed either through the Choregraphe and Monitor applications or directly from code. Testing the limitations of this module and reporting the maximum observed detection distance allows potential users to decide whether NAOMarks can be a viable asset in their work. To this end, two NAOMarks, with diameters of 5.5 cm and 9 cm, were placed in Pepper’s field of view at various distances, and the maximum and minimum distances at which Pepper could detect them and identify their codes were measured. The laboratory where the experiment was conducted is in the basement and has only partial windows, so it is dimly lit during the daytime without artificial light, and it becomes extremely dark when the window blinds are closed and no internal light sources are on. Artificial internal light sources were used to illuminate the laboratory, including the areas around the NAOMarks and Pepper. The experiments were performed under varying light conditions (no light, dim light, and strong light), including with the light source pointing directly at Pepper or at the NAOMarks. These experiments used only Choregraphe (Pepper’s GUI) and built-in libraries (Figure 4).
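The dependence of detection range on mark size follows from simple pinhole-camera geometry: a mark of diameter d at distance D subtends an angle of 2·atan(d / 2D), so a larger mark keeps a given apparent size out to a greater distance. The sketch below computes that angle and inverts it to estimate distance from an observed angular size. It assumes the mark faces the camera squarely and ignores lens distortion; the function names are hypothetical.

```python
import math


def angular_size(diameter_m: float, distance_m: float) -> float:
    """Angle in radians subtended by a mark of the given diameter."""
    return 2.0 * math.atan(diameter_m / (2.0 * distance_m))


def distance_from_angular_size(diameter_m: float, angle_rad: float) -> float:
    """Inverse of angular_size: camera-to-mark distance in metres."""
    return diameter_m / (2.0 * math.tan(angle_rad / 2.0))
```

Because these angles are small, the distance at which a mark shrinks to a fixed minimum apparent size is roughly proportional to its diameter under this idealized model; real detection ranges also depend on lighting and image resolution.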

Figure 4.

Example of a simple NAOMark detection implemented with Choregraphe.

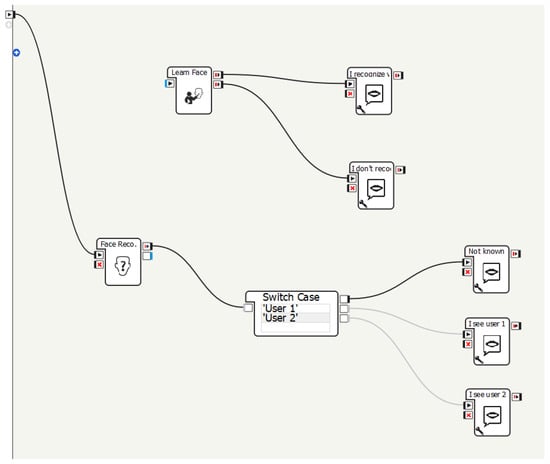

3.5. Face Detection by Pepper

To evaluate Pepper’s ability to learn and recognize user faces, a capability useful in various applications, we mainly tested its ability to identify users wearing facial accessories at various distances and angles. The experiments were performed using Choregraphe. Four volunteers were recruited for the investigation. To test Pepper’s range in terms of proximity, the volunteers were positioned opposite Pepper at different distances: two at close range and the other two at a long distance.

Pepper learned the users’ faces using Choregraphe’s integrated “learn face” mechanism (see Figure 5). The experiment began by executing “learn face” and learning each user’s face from different angles. Multiple shots of each user were needed for training so that Pepper could recognize every user at least once at close range, both with direct eye contact and at 25 degrees. Pepper could learn the same subject from different angles, but these images were stored under different names, which were assigned so as to protect each person’s identity. The volunteers stood in front of Pepper at close range (less than 1 m) and at different angles: first looking directly at it (0 degrees), then at 25 degrees, at 45 degrees, and finally at 90 degrees, on both sides. We evaluated Pepper’s ability to recognize the users at these angles and while wearing simple everyday accessories that cover part of the face: a beanie, a scarf under the nose (Scarf/UN), a scarf over the nose (Scarf/ON), and sunglasses.
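The protocol above amounts to a small factorial design. The sketch enumerates it as a run list and computes recognition rates per factor once the results are filled in. The user labels, the split of users between close and long range, and the `recognized` field are hypothetical placeholders; the angles and accessories are those listed above, with "none" added for the accessory-free condition.

```python
from itertools import product
from typing import Dict, List

ANGLES_DEG = [0, 25, -25, 45, -45, 90, -90]  # both sides of Pepper
ACCESSORIES = ["none", "beanie", "Scarf/UN", "Scarf/ON", "sunglasses"]


def build_run_plan(user_distance: Dict[str, str]) -> List[dict]:
    """One run per user, accessory, and angle; each user keeps one distance."""
    return [
        {"user": user, "distance": dist, "accessory": acc, "angle_deg": ang}
        for user, dist in user_distance.items()
        for acc, ang in product(ACCESSORIES, ANGLES_DEG)
    ]


def rate_by(results: List[dict], factor: str) -> Dict[str, float]:
    """Share of runs with recognized=True, grouped by one factor."""
    totals: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for r in results:
        key = r[factor]
        totals[key] = totals.get(key, 0) + 1
        hits[key] = hits.get(key, 0) + int(bool(r.get("recognized")))
    return {key: hits[key] / totals[key] for key in totals}
```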

Figure 5.

Example of a face learning/detection test in Choregraphe.

4. Results and Discussions

This section presents the results for the five capabilities.

4.1. Map and Navigation Functionality of Pepper

Pepper’s initial position was the same in each of the three tests; the generated map was slightly different in the first two cases and very different in the third case.

First test: The result of the first test indicated that Pepper can identify the positions of the chair and the pole but does not represent their shapes correctly, particularly the pole, whose cross-section is a perfect square. The surroundings, consisting of desks and chairs, are represented on the map with a different and more irregular shape than the real one; distances between objects are acceptable but not precise. There are also some dots on the map (Figure 6) representing obstacles in the room. In terms of navigation, the results using this map are not consistent. From a specified starting point, Pepper had to move straight ahead for 4 m; because a chair was in the middle of the route, Pepper had to find the best way to avoid it. In the first attempt, Pepper walked toward the pole and then returned to the starting point. In the second attempt, Pepper went around the chair directly toward the designated point but missed the goal by 0.5 m. In the third attempt, Pepper avoided the pole and the chair and went to the destination, missing the exact location by 0.6 m. None of the three attempts can be considered successful, because of the routes Pepper took and because the desired position was not reached.

Figure 6.

Results of Map and Navigation functionality of Pepper (a) first test, (b) second, and (c) third test.

Second test: The second map was similar to the first in how the shapes of the objects and the room’s configuration were represented; however, there were two significant differences. First, the coordinate system was rotated even though Pepper’s initial orientation was the same at the beginning of all tests, and we did not find a way to set Pepper’s orientation programmatically. Because of this random rotation of the coordinate system, it was impossible to know the coordinates of a given point in the room beforehand. Second, Pepper reported many nonexistent obstacles nearby; the cause of this problem is unknown, but it also affected the navigation test. Because Pepper was surrounded by these nonexistent obstacles on its map, it could not move when we tried to send it to specific points.
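If the rotation of the map frame relative to the room can be estimated, for example from one landmark whose position is known in both frames, a goal measured in the room can be converted into map coordinates. The following sketch assumes, as described in Section 3.1, that both frames share Pepper’s starting point as their origin and differ only by a rotation; `room_to_map` and `estimate_phi` are hypothetical helpers and were not part of the experiments reported here.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def room_to_map(p_room: Point, phi: float) -> Point:
    """Express a room-frame point in a map frame rotated by phi radians
    (counter-clockwise) about the shared origin at Pepper's start."""
    x, y = p_room
    c, s = math.cos(phi), math.sin(phi)
    return (c * x + s * y, -s * x + c * y)  # apply the inverse rotation R(-phi)


def estimate_phi(landmark_room: Point, landmark_map: Point) -> float:
    """Rotation between the frames from one landmark (not at the origin)
    whose position is known in both."""
    a_room = math.atan2(landmark_room[1], landmark_room[0])
    a_map = math.atan2(landmark_map[1], landmark_map[0])
    diff = a_room - a_map
    return math.atan2(math.sin(diff), math.cos(diff))  # wrap to (-pi, pi]
```

For instance, the chair placed about 2 m in front of Pepper could serve as the landmark if its position can be read from the generated map; with φ estimated, `room_to_map((4.0, 0.0), phi)` gives the goal 4 m ahead in map coordinates. A single landmark makes the estimate sensitive to mapping noise, so averaging over several landmarks would be preferable.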

Third test: The third map gave similar results regarding precision and the representation of objects. It also showed nonexistent objects close to Pepper, but this time Pepper was not surrounded by them. Navigation tests similar to those for the first map were conducted, and all three attempts failed, both in the route taken and in the final position reached. This is consistent with Alhmiedat et al. [20], who reported an average localization error of 0.51 m and 86% user acceptability.
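Navigation accuracy of the kind discussed here can be summarized as the Euclidean distance between the intended goal and the position actually reached. A minimal sketch, assuming positions are measured in metres in a common frame and using an arbitrary 0.2 m success tolerance; `navigation_summary` is a hypothetical name, the positions in the test are illustrative rather than measurements from this study, and `math.dist` requires Python 3.8 or later.

```python
import math
from statistics import mean
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def navigation_summary(
    goal: Point, reached: Sequence[Point], tolerance_m: float = 0.2
) -> Dict[str, object]:
    """Position error of each attempt and how many ended within tolerance."""
    errors: List[float] = [math.dist(goal, p) for p in reached]
    return {
        "errors_m": errors,
        "mean_error_m": mean(errors),
        "successes": sum(e <= tolerance_m for e in errors),
    }
```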

We found a lack of precision in orientation in all three tests. The parameter θ in the function move_to() did not reliably produce the requested final orientation, so a different approach is recommended, for instance, the one proposed by Silva et al. [22]. Additionally, since the SLAM exploration is a blocking call, Pepper cannot carry out other commands until the exploration is complete. This is problematic because the exploration cannot be followed while it runs, and no further feedback on the location of Pepper or the surrounding objects can be obtained in the meantime. The capabilities of the “vanilla” Pepper’s SLAM (simultaneous localization and mapping) were also described in [22]. The study [19] shows an example of a generated exploration map and an alternative method for addressing the lack of orientation. Although promising results were reported, these studies were conducted in constrained spaces, so further research is needed. Previous studies have also improved Pepper’s existing functionality, for example, locomotion using control theory [31]; 3D depth perception [32,33], including an approach combining monocular perception with the built-in 3D sensor; and navigation and localization using the Robot Operating System (ROS) [34], ORB-SLAM [21], and an improved version of ORB-SLAM2 [23].

4.2. Speech Capability of Pepper

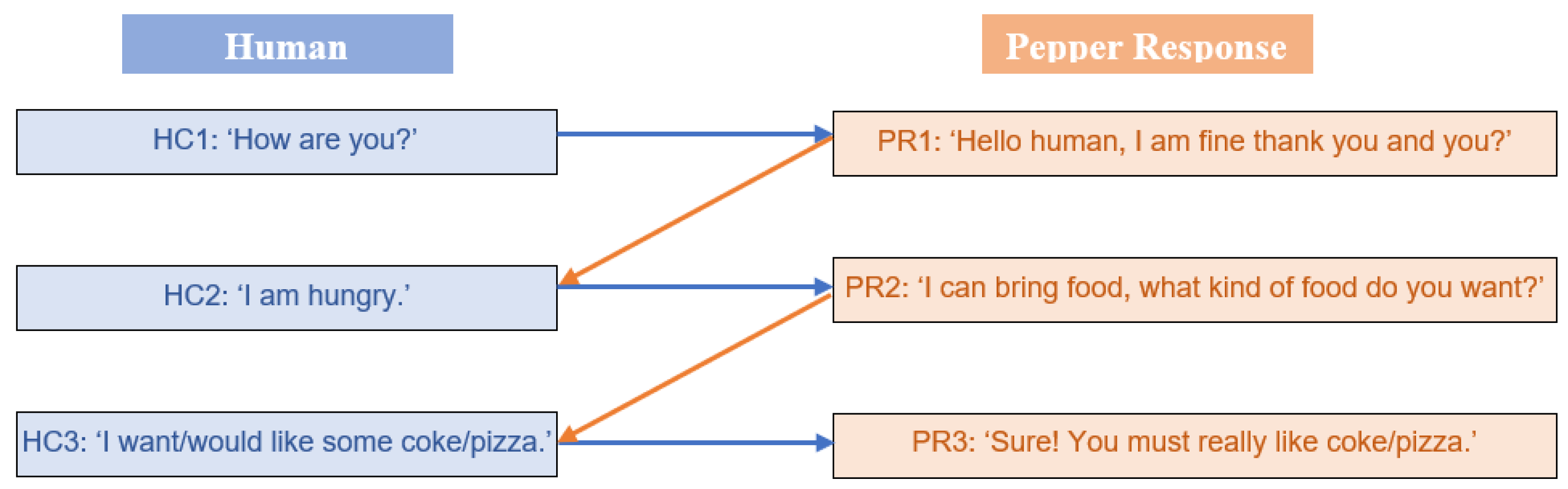

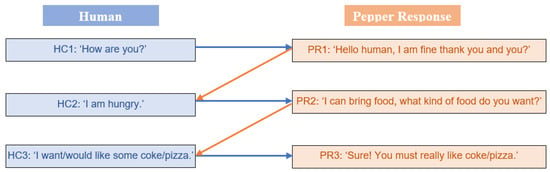

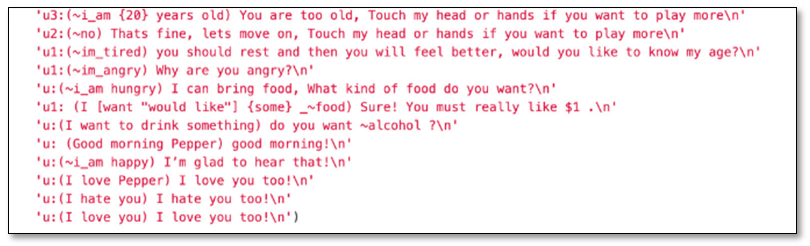

Conversations between humans and Pepper were established using dialogs. These conversations covered general topics, such as exchanging greetings and responses. One such dialog is shown in Figure 7. For instance, when Pepper was greeted with a phrase from the user-defined concept ‘∼greetings’, it was programmed to reply with any greeting selected from the same concept. When asked ‘How are you?’, it would say ‘Hello human, I am fine, thank you, and you?’. If the user repeated ‘∼I am hungry’, Pepper would then say ‘Why are you asking again?’. In this way, further conversational levels were defined based on user inputs to test the speech capabilities. Predefined concepts such as ‘∼I don’t feel good’, ‘∼I’m tired’, ‘∼I am hungry’, and ‘∼I am happy’, along with others, were used to build conversations with Pepper.

Figure 7.

Conversation chain between human and Pepper.

In another instance, if the user says ‘I am hungry’, Pepper replies ‘I can bring food; what kind of food do you want?’. If the user says ‘I want some pizza’ or ‘I would like some pizza’, it responds ‘You must like pizza’. Appendix C shows the code snippet used to build this conversation; it captures the user’s words as parameters while allowing variation in how the user phrases a response. As the code shows, users can say sentences such as ‘I want some Coke’, ‘I would like some pizza’, or ‘I want some pizza’; Pepper captures the user’s choice as a parameter and embeds it in its subsequent reply (‘Sure! You must like Coke.’ or ‘Sure! It would help if you really liked pizza’). Table 2 summarizes the results of these scenarios.
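The capture mechanism can be imitated in plain Python with a regular expression whose group plays the role of the captured parameter. For comparison, a QiChat rule of roughly the form `u:(I [want "would like"] some _[pizza Coke]) Sure! You must like $1` expresses the same idea; that rule is our illustration and is not the code in Appendix C, and the Python function `respond` is hypothetical.

```python
import re
from typing import Optional

# Python analogue of a rule with one captured word: the group (pizza|coke)
# plays the role of the captured parameter ($1 in QiChat).
RULE = re.compile(r"^i (?:want|would like) some (pizza|coke)\W*$", re.IGNORECASE)


def respond(utterance: str) -> Optional[str]:
    m = RULE.match(utterance.strip())
    if m is None:
        return None  # input not covered by this rule
    return f"Sure! You must like {m.group(1)}."
```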

Table 2.

Results of speech recognition and generation.

The results show that Pepper’s responses to speech stimuli were inconsistent. For instance, the same conversation held at different times did not always elicit the same response from Pepper. Pepper also remained unresponsive at times and sometimes responded slowly, and it became confused when multiple people were speaking to it. Stommel et al. [35] also reported miscommunication issues in a study in which older adults took part in surveys conducted by Pepper.

4.3. Hearing Capabilities of Pepper

Table 3 shows Pepper’s responses in the various experiments. Sound detection and the resulting rotation were not accurate. For example, if Pepper hears another sound while moving toward a first one, it turns away from the first sound and follows the direction of the second. This implies that Pepper tracks only the most recent sound event. Further studies are required to establish Pepper’s sensitivity to sound in terms of frequency, intensity, and distance, and whether there is a particular orientation of Pepper at which its hearing performs best.
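One way to avoid the deflection described above, which was not tested in this study, is to ignore new sound events while a movement toward an earlier one is in progress and for a short cool-down afterwards. The sketch assumes the event callback may run on a different thread from the movement and passes each accepted bearing to a user-supplied `move` function; `SoundFollower` and the cool-down value are hypothetical.

```python
import threading
import time
from typing import Callable


class SoundFollower:
    """Follow one sound at a time and drop events that arrive meanwhile."""

    def __init__(
        self,
        move: Callable[[float], None],
        cooldown_s: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._move = move              # e.g. rotate toward the bearing, then walk
        self._cooldown_s = cooldown_s  # quiet period after a completed move
        self._clock = clock
        self._lock = threading.Lock()
        self._last_done = float("-inf")

    def on_sound(self, bearing: float) -> bool:
        """Return True if this event triggered a movement."""
        if not self._lock.acquire(blocking=False):
            return False  # a move is already in progress
        try:
            if self._clock() - self._last_done < self._cooldown_s:
                return False  # still inside the cool-down window
            self._move(bearing)
            self._last_done = self._clock()
            return True
        finally:
            self._lock.release()
```

Using a non-blocking lock rather than a plain flag keeps the check-and-set atomic when events arrive on several threads at once.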

Table 3.

Results of hearing capabilities of Pepper.

4.4. Recognition of Patterns by Pepper

During the experiment, Pepper was exposed to lighting ranging from dim to brightly illuminated environments. It had no difficulty recognizing patterns under these varying conditions, with some exceptions. Notably, Pepper struggled when a direct light source, such as a phone flashlight, was pointed into its camera. It also struggled in a completely dark room, with no external or internal light sources or with the lights turned off. Moreover, even during the day, the laboratory was not bright enough for optimal performance without artificial light. Regarding the size of the NAOMarks and the distance, the experiment showed that Pepper detected a pattern accurately, without missing it or losing detection, within a range that depends mainly on the NAOMark size. As seen in Table 4, Pepper can detect a mark of 5.5 cm diameter from around 1 m away and a mark of 9 cm diameter from around 3 m.

Table 4.

Results of pattern recognition.

Our next step in pattern recognition will be human pattern recognition, particularly hand gestures, which will be translated into simple commands or behavior control. Gestures could direct behavior as an alternative to sound commands, with the robot detecting gestures such as thumbs up, thumbs down, or clapping. These could serve as starting points for basic automatic learning through external commands; for example, in other research Pepper could be trained to perform specific move sets or dialogues. The commands could be implemented with the aid of an external image recognition system [36], which would then have to be integrated with Pepper’s systems. Initially, simple commands such as ‘stop’ and ‘come’ will be tested, running in parallel with an existing movement function; once this step is successfully implemented, pointing gestures will be added, first simply left and right and later in more specific directions.

4.5. Face Detection by Pepper

The experiment varied distance, accessories, and viewing angle, as well as whether the user maintained eye contact with Pepper (Table 5). Initially, the users maintained eye contact with Pepper, looking at it directly and at 25° and 45° angles. Afterward, the users looked past the robot at 25°, 45°, and 90°.

Table 5. Results of face detection.

The long-range tests were initially conducted at 2 m, but the results were unsatisfactory. Face recognition took a long time, and Pepper was practically incapable of recognizing a face under any condition other than direct eye contact, and even then only with minimal accuracy. To obtain meaningful results, we moved the users closer to Pepper until we found a distance at which some recognition occurred at an angle. This revealed a limitation of Pepper’s recognition ability even in a well-lit room.

During the first phase, both users wore a beanie. After the second take, Pepper recognized one of the users as successfully as in the no-accessory phase, but it again failed to recognize the other user at every angle. Pepper had difficulty recognizing a user after a change in hair or eyewear, in other prominent facial characteristics, or even in location and environment. To counter these problems, the learning process must be repeated and the face re-entered into the database. After several days of learning, Pepper’s accuracy improved.
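Re-entering a face can be scripted rather than repeated by hand. The sketch below assumes a proxy object that exposes the `ALFaceDetection` methods `getLearnedFacesList()`, `learnFace(name)` and `reLearnFace(name)`, as we read the NAOqi 2.5 API documentation [29]. It treats their boolean return value as a success flag. The proxy is passed in rather than created inside the function, so the code can be exercised off the robot with a stand-in object. On a real robot, the proxy would come from the NAOqi SDK, for example via `ALProxy("ALFaceDetection", ip, port)` in the Python SDK. The retry count is an arbitrary choice.

```python
def refresh_face(face_proxy, name, attempts=3):
    """(Re)learn a user's face, retrying a few times.

    face_proxy: object exposing getLearnedFacesList(), learnFace(name) and
    reLearnFace(name), e.g. an ALFaceDetection proxy (assumption).
    Returns True as soon as one attempt reports success.
    """
    known = name in face_proxy.getLearnedFacesList()
    # reLearnFace updates an existing entry; learnFace creates a new one.
    learn = face_proxy.reLearnFace if known else face_proxy.learnFace
    for _ in range(attempts):
        # The user should face the camera with a neutral expression here.
        if learn(name):
            return True
    return False
```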

It is essential that the user’s face is fully visible and that the user maintains a neutral expression, because Pepper can struggle to recognize a face showing strong emotions. It is also worth mentioning that Pepper had difficulty learning the faces of some Asian participants, such as those from Cambodia and Nepal, sometimes even after multiple attempts. Our observations suggest that Pepper bases its recognition mainly on eye contact, distance, and the central part of the face. Lighting did not hinder face recognition or pattern detection as long as it was adequate for a human to see clearly. Moreover, on multiple occasions, users had to be told that minor difficulties might occur and that they might need to reposition themselves for Pepper to recognize them.

Another approach, should the need arise, would be to retrain Pepper on the same users wearing accessories they commonly use. However, these ‘new’ faces must be stored under different names, and the remaining code or Choregraphe boxes must be adapted accordingly. This approach is not recommended because it significantly increases costs and time for both users and developers, and it becomes useless when the accessories change (e.g., the user gets a new pair of glasses).
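If accessory-specific entries are used despite these costs, the rest of the program has to treat them as the same person. The sketch below assumes a hypothetical naming convention, `<user>__<variant>`, for the extra database entries (e.g., `alice__glasses`). It maps a recognized label back to the canonical user, so downstream code or Choregraphe boxes need only one branch per person.

```python
SEPARATOR = "__"  # hypothetical convention: "<user>__<variant>"


def variant_label(user, variant):
    """Name under which an accessory variant of a user's face is stored."""
    return user if not variant else user + SEPARATOR + variant


def canonical_user(label):
    """Map a recognized label (possibly a variant) back to the base user."""
    return label.split(SEPARATOR, 1)[0]


def variants_of(user, learned_labels):
    """All stored labels that belong to the given user."""
    return [label for label in learned_labels if canonical_user(label) == user]
```

The `variants_of` helper also makes the cost noted above visible. Every new accessory adds one more entry that must be learned and kept up to date.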

Other studies related to face detection have also shown mixed results. It has been reported that, although Pepper was able to adequately record and store people’s faces and later greet them with personalized messages, difficulties were sometimes encountered when faces were registered. Concerns were also raised about the security of the existing frameworks and whether they are prone to misidentification [37]. Another limitation of Pepper’s face recognition algorithm is that it lacks a 3D representation of the person. During recognition, it uses distances between key points, which change with every head movement. Five captures of the same face are needed to fully train the algorithm during the learning process. Notably, only the largest face in the image is recognized [29]. It has also been reported that Pepper’s camera suffers from radial distortion, especially with flat surfaces [32]. Another study [38] added a 3D camera to enhance Pepper’s capabilities. Tariq et al. [39] found that AI algorithms misclassified faces when analyzing South Asian participants, which is consistent with the current study’s finding that Pepper had difficulty identifying Asian participants’ faces.
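A toy calculation shows how sensitive 2D key-point distances are to head movement. The sketch below uses made-up 3D coordinates for three facial key points, not Pepper’s actual feature set. It rotates the head about the vertical axis (yaw), projects the points orthographically onto the image plane, and compares a simple distance ratio at several angles. With these coordinates, the ratio equals the cosine of the yaw angle. Any feature built only from such projected distances changes with pose, which is consistent with the need to capture several views of the same face.

```python
import math
from typing import Dict, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]

# Illustrative face key points in arbitrary units (x right, y up, z towards camera).
FACE: Dict[str, Point3] = {
    "left_eye": (-3.0, 0.0, 0.0),
    "right_eye": (3.0, 0.0, 0.0),
    "mouth": (0.0, -6.0, 0.0),
}


def yaw_and_project(p: Point3, yaw_rad: float) -> Point2:
    """Rotate a point about the vertical (y) axis, then drop z (orthographic view)."""
    x, y, z = p
    return (x * math.cos(yaw_rad) + z * math.sin(yaw_rad), y)


def _dist(a: Point2, b: Point2) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_to_mouth_ratio(yaw_deg: float) -> float:
    """Inter-ocular distance divided by eye-midpoint-to-mouth distance in the image."""
    yaw = math.radians(yaw_deg)
    l_eye, r_eye, mouth = (yaw_and_project(FACE[k], yaw)
                           for k in ("left_eye", "right_eye", "mouth"))
    mid = ((l_eye[0] + r_eye[0]) / 2.0, (l_eye[1] + r_eye[1]) / 2.0)
    return _dist(l_eye, r_eye) / _dist(mid, mouth)


for angle in (0, 15, 30, 45):
    print(angle, round(eye_to_mouth_ratio(angle), 3))
```

The ratio falls from 1.0 at 0° to about 0.71 at 45°, even though the face itself has not changed.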

Overall, we encountered several issues that indicate areas of weakness in the robot’s performance. The built-in SLAM capability for Pepper’s navigation is not reliable enough for practical purposes, and the generated maps do not always represent the actual physical environment. Furthermore, navigation can lead Pepper to take strange and unexpected routes, and in many cases, it does not reach the exact desired location. On the other hand, Pepper’s sound and speech recognition capabilities yielded reasonably acceptable results, and Pepper responded correctly to most accents. However, the evaluation of Pepper’s object and face detection capabilities yielded mixed results.

5. Conclusions

This study highlights the strengths and weaknesses of Pepper’s features and emphasizes the importance of continued research to improve the robot’s performance. The authors conducted a series of tests to evaluate five functionalities of Pepper. In summary, the present study found that Pepper’s built-in SLAM navigation has limitations that make it unreliable, and that the maps it generates are inaccurate. This can result in unexpected routes and difficulty in reaching precise locations. In contrast, Pepper’s sound and speech recognition perform well across different accents. Evaluations of its object and face detection capabilities, however, show mixed results, indicating variable effectiveness. Therefore, there is scope to improve Pepper’s capabilities by incorporating different algorithms.

In addition to the issues identified during experimentation, it is worth noting that Pepper supports only a limited range of Python versions. This can hinder feature implementation on Pepper because, in our case, the Python version supported by Pepper is an older one, which may not support functionalities that require a newer version of Python. Therefore, Python version support should be taken into account when using Pepper’s functionalities in real-world scenarios.
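One practical consequence is that scripts controlling the robot should fail early, with a clear message, when they are started under an unsupported interpreter. The sketch below assumes the NAOqi 2.5 Python SDK, which, as far as we know, is distributed for Python 2.7. The supported version is a parameter, so it should be set to whatever the installed SDK documents. The code uses only syntax that is valid in both Python 2.7 and Python 3.

```python
import sys

# Assumption: the NAOqi 2.5 Python SDK targets CPython 2.7.
SUPPORTED = (2, 7)


def sdk_compatible(version_info=None, supported=SUPPORTED):
    """True if the (major, minor) interpreter version matches the SDK's."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) == tuple(supported)


def require_sdk_python():
    """Exit with an explanatory message instead of failing later on import."""
    if not sdk_compatible():
        sys.exit("This script needs Python %d.%d for the NAOqi SDK; found %d.%d"
                 % (SUPPORTED + tuple(sys.version_info[:2])))
```

Code that needs a newer interpreter, such as recent machine learning libraries, could then run in a separate Python 3 process that exchanges messages with the process controlling the robot.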

It is worth noting that the researchers could not explore all the available APIs due to time constraints and the large number of functions available on Pepper. The authors intend to extend this work by exploring the capabilities of Pepper not addressed in this study and by improving Pepper’s functionality through the incorporation of artificial intelligence and machine learning algorithms.

Author Contributions

Conceptualization, D.M. and A.P.; methodology, G.A.R., B.N.B. and D.C.; formal analysis, G.A.R., B.N.B. and D.C.; investigation, G.A.R., B.N.B. and D.C.; writing—original draft preparation, D.M., A.P., G.A.R., B.N.B. and D.C.; writing—review and editing, D.M., A.P. and B.S.; Supervision, D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study aimed to evaluate five basic functionalities of the Pepper robot. Human subjects were required to assess three of these capabilities: speech detection (by creating dialogue), hearing (by clapping), and face detection. Human subjects from the authors’ team participated in the evaluation of these capabilities and consented to be part of the experiment. Due to the nature of the experiments, the results were collected in real time, and no data were stored or recorded for later processing. However, if required, the data can be reproduced. Therefore, approval was not required under institutional guidelines.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. However, they are not publicly available as the experiments were conducted for exploratory purposes, and the preliminary nature of the data necessitates controlled access to ensure accurate interpretation and ongoing research integrity. The original data were not stored; nevertheless, they can be reproduced through the programs used in the experiment. If anyone wishes, we are prepared to rerun the experiment and provide the necessary data upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Dialogue Establishment between Human and Pepper

Appendix B. Code Snippet for the Hearing Capability of Pepper

Appendix C. Code Snippet of Conversation between Human and Pepper

References

- Morris, K.J.; Samonin, V.; Baltes, J.; Anderson, J.; Lau, M.C. A robust interactive entertainment robot for robot magic performances. Appl. Intell. 2019, 49, 3834–3844.

- Anzalone, S.M.; Boucenna, S.; Ivaldi, S.; Chetouani, M. Evaluating the Engagement with Social Robots. Int. J. Soc. Robot. 2015, 7, 465–478.

- Yan, H.; Ang, M.H.; Poo, A.N. A Survey on Perception Methods for Human–Robot Interaction in Social Robots. Int. J. Soc. Robot. 2013, 6, 85–119.

- Dağlarlı, E.; Dağlarlı, S.F.; Günel, G.; Köse, H. Improving human-robot interaction based on joint attention. Appl. Intell. 2017, 47, 62–82.

- Breazeal, C.; Dautenhahn, K.; Kanda, T. Social Robotics. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 1935–1972.

- Alotaibi, S.B.; Manimurugan, S. Humanoid robotic system for social interaction using deep imitation learning in a smart city environment. Front. Sustain. Cities 2022, 4, 1076101.

- Pandey, A.K.; Gelin, R. A Mass-Produced Sociable Humanoid Robot: Pepper: The First Machine of Its Kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

- Draghici, B.G.; Dobre, A.E.; Misaros, M.; Stan, O.P. Development of a Human Service Robot Application Using Pepper Robot as a Museum Guide. In Proceedings of the 2022 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 10–21 May 2022.

- Miyagawa, M.; Yasuhara, Y.; Tanioka, T.; Locsin, R.; Kongsuwan, W.; Catangui, E.; Matsumoto, K. The optimization of humanoid robot’s dialog in improving communication between humanoid robot and older adults. Intell. Control Autom. 2019, 10, 118–127.

- Suddrey, G.; Jacobson, A.; Ward, B. Enabling a pepper robot to provide automated and interactive tours of a robotics laboratory. arXiv 2018, arXiv:1804.03288.

- Karar, A.; Said, S.; Beyrouthy, T. Pepper humanoid robot as a service robot: A customer approach. In Proceedings of the 2019 3rd International Conference on Bio-Engineering for Smart Technologies (BioSMART), Paris, France, 24–26 April 2019.

- Woo, H.; LeTendre, G.K.; Pham-Shouse, T.; Xiong, Y. The use of social robots in classrooms: A review of field-based studies. Educ. Res. Rev. 2021, 33, 100388.

- Mishra, D.; Parish, K.; Lugo, R.G.; Wang, H. A framework for using humanoid robots in the school learning environment. Electronics 2021, 10, 756.

- Ghita, A.S.; Gavril, A.F.; Nan, M.; Hoteit, B.; Awada, I.A.; Sorici, A.; Mocanu, I.G.; Florea, A.M. The AMIRO Social Robotics Framework: Deployment and Evaluation on the Pepper Robot. Sensors 2020, 20, 7271.

- Gardecki, A.; Podpora, M. Experience from the operation of the Pepper humanoid robots. In Proceedings of the 2017 Progress in Applied Electrical Engineering (PAEE), Koscielisko, Poland, 25–30 June 2017.

- Carros, F.; Meurer, J.; Löffler, D.; Unbehaun, D.; Matthies, S.; Koch, I.; Wieching, R.; Randall, D.; Hassenzahl, M.; Wulf, V. Exploring human-robot interaction with the elderly: Results from a ten-week case study in a care home. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020.

- Ekström, S.; Pareto, L. The dual role of humanoid robots in education: As didactic tools and social actors. Educ. Inf. Technol. 2022, 27, 12609–12644.

- Marku, D. Child-Robot Interaction in Language Acquisition Through Pepper Humanoid Robot; NTNU: Gjøvik, Norway, 2022.

- Pande, A.; Mishra, D. The Synergy between a Humanoid Robot and Whisper: Bridging a Gap in Education. Electronics 2023, 12, 3995.

- Alhmiedat, T.; Marei, A.M.; Messoudi, W.; Albelwi, S.; Bushnag, A.; Bassfar, Z.; Alnajjar, F.; Elfaki, A.O. A SLAM-based localization and navigation system for social robots: The pepper robot case. Machines 2023, 11, 158.

- Gómez, C.; Mattamala, M.; Resink, T.; Ruiz-Del-Solar, J. Visual slam-based localization and navigation for service robots: The pepper case. In RoboCup 2018: Robot World Cup XXII; Springer: Berlin/Heidelberg, Germany, 2018.

- Silva, J.R.; Simão, M.; Mendes, N.; Neto, P. Navigation and obstacle avoidance: A case study using Pepper robot. In Proceedings of the IECON 2019-45th Annual Conference of the IEEE Industrial Electronics Society, Lisbon, Portugal, 14–17 October 2019.

- Ardón, P.; Kushibar, K.; Peng, S. A hybrid SLAM and object recognition system for pepper robot. arXiv 2019, arXiv:1903.00675.

- Naoqi API Documentation—Vision. Softbank Robotics. 2018. Available online: http://doc.aldebaran.com/2-5/naoqi/vision/allandmarkdetection.html (accessed on 30 April 2023).

- Gardecki, A.; Podpora, M.; Beniak, R.; Klin, B. The Pepper Humanoid Robot in Front Desk Application. In Proceedings of the 2018 Progress in Applied Electrical Engineering (PAEE), Koscielisko, Poland, 18–22 June 2018.

- Greco, A.; Roberto, A.; Saggese, A.; Vento, M.; Vigilante, V. Emotion Analysis from Faces for Social Robotics. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; pp. 358–364.

- Reyes, E.; Gómez, C.; Norambuena, E.; Ruiz-del-Solar, J. Near Real-Time Object Recognition for Pepper Based on Deep Neural Networks Running on a Backpack. In RoboCup 2018: Robot World Cup XXII; Springer International Publishing: Cham, Switzerland, 2019.

- Subedi, A.; Pandey, D.; Mishra, D. Programming Nao as an Educational Agent: A Comparison Between Choregraphe and Python SDK. In Innovations in Smart Cities Applications Volume 5, Proceedings of the 6th International Conference on Smart City Applications, Safranbolu, Turkey, 27–29 November 2021; Springer: Berlin/Heidelberg, Germany, 2021.

- Naoqi API Documentation. Softbank Robotics. 2018. Available online: http://doc.aldebaran.com/2-5/naoqi/index.html (accessed on 30 April 2023).

- Choregraphe Suite. Softbank Robotics. 2018. Available online: http://doc.aldebaran.com/2-5/software/choregraphe/index.html (accessed on 30 April 2023).

- Lafaye, J.; Gouaillier, D.; Wieber, P.-B. Linear model predictive control of the locomotion of Pepper, a humanoid robot with omnidirectional wheels. In Proceedings of the 2014 IEEE-RAS International Conference on Humanoid Robots, Madrid, Spain, 18–20 November 2014.

- Bauer, Z.; Escalona, F.; Cruz, E.; Cazorla, M.; Gomez-Donoso, F. Refining the fusion of pepper robot and estimated depth maps method for improved 3d perception. IEEE Access 2019, 7, 185076–185085.

- Bauer, Z.; Escalona, F.; Cruz, E.; Cazorla, M.; Gomez-Donoso, F. Improving the 3D perception of the pepper robot using depth prediction from monocular frames. In Advances in Physical Agents: Proceedings of the 19th International Workshop of Physical Agents (WAF 2018), Madrid, Spain, 22–23 November 2018; Springer: Berlin/Heidelberg, Germany, 2018.

- Perera, V.; Pereira, T.; Connell, J.; Veloso, M. Setting up pepper for autonomous navigation and personalized interaction with users. arXiv 2017, arXiv:1704.04797.

- Stommel, W.; de Rijk, L.; Boumans, R. “Pepper, what do you mean?” Miscommunication and repair in robot-led survey interaction. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Napoli, Italy, 29 August–2 September 2022.

- Oudah, M.; Al-Naji, A.; Chahl, J. Hand Gesture Recognition Based on Computer Vision: A Review of Techniques. J. Imaging 2020, 6, 73.

- Pepper, R. Facial Recognition (Part of the Pepper Series). 2019. Available online: https://blogemtech.medium.com/pepper-facial-recognition-43e24b10cea2 (accessed on 30 April 2023).

- Caniot, M.; Bonnet, V.; Busy, M.; Labaye, T.; Besombes, M.; Courtois, S.; Lagrue, E. Adapted Pepper. arXiv 2020, arXiv:2009.03648.

- Tariq, S.; Jeon, S.; Woo, S.S. Evaluating Trustworthiness and Racial Bias in Face Recognition APIs Using Deepfakes. Computer 2023, 56, 51–61.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).