Palmprint Recognition: Extensive Exploration of Databases, Methodologies, Comparative Assessment, and Future Directions

Abstract

1. Introduction

- We provide a timely, thorough, and concise review of the literature on image-based palmprint recognition, including an analysis of both contact-based and contactless palmprint databases and the evaluation protocols used with them. The review covers 20 databases and more than 60 publications from late 2002 to 2023.

- Our goal is to guide emerging scholars by highlighting significant advances in the history of the field and pointing them to relevant references for in-depth study.

- We present a systematic taxonomy of palmprint feature extraction techniques, including contemporary methods based on deep learning. The taxonomy structures the existing literature on palmprint recognition approaches and provides a coherent framework for understanding the diverse methodologies used in the field.

- We provide an up-to-date, comprehensive survey of the contact-based and contactless databases used in palmprint recognition. We organize these databases along a chronological timeline that shows how they have evolved in terms of the number of individuals represented and the number of samples per individual.

- We examine existing deep learning-based methods, highlighting their strong performance on complex, unconstrained, and large-scale datasets. This examination gives researchers a comprehensive understanding of deep learning-based techniques, which have significantly transformed palmprint recognition since the mid-2010s.

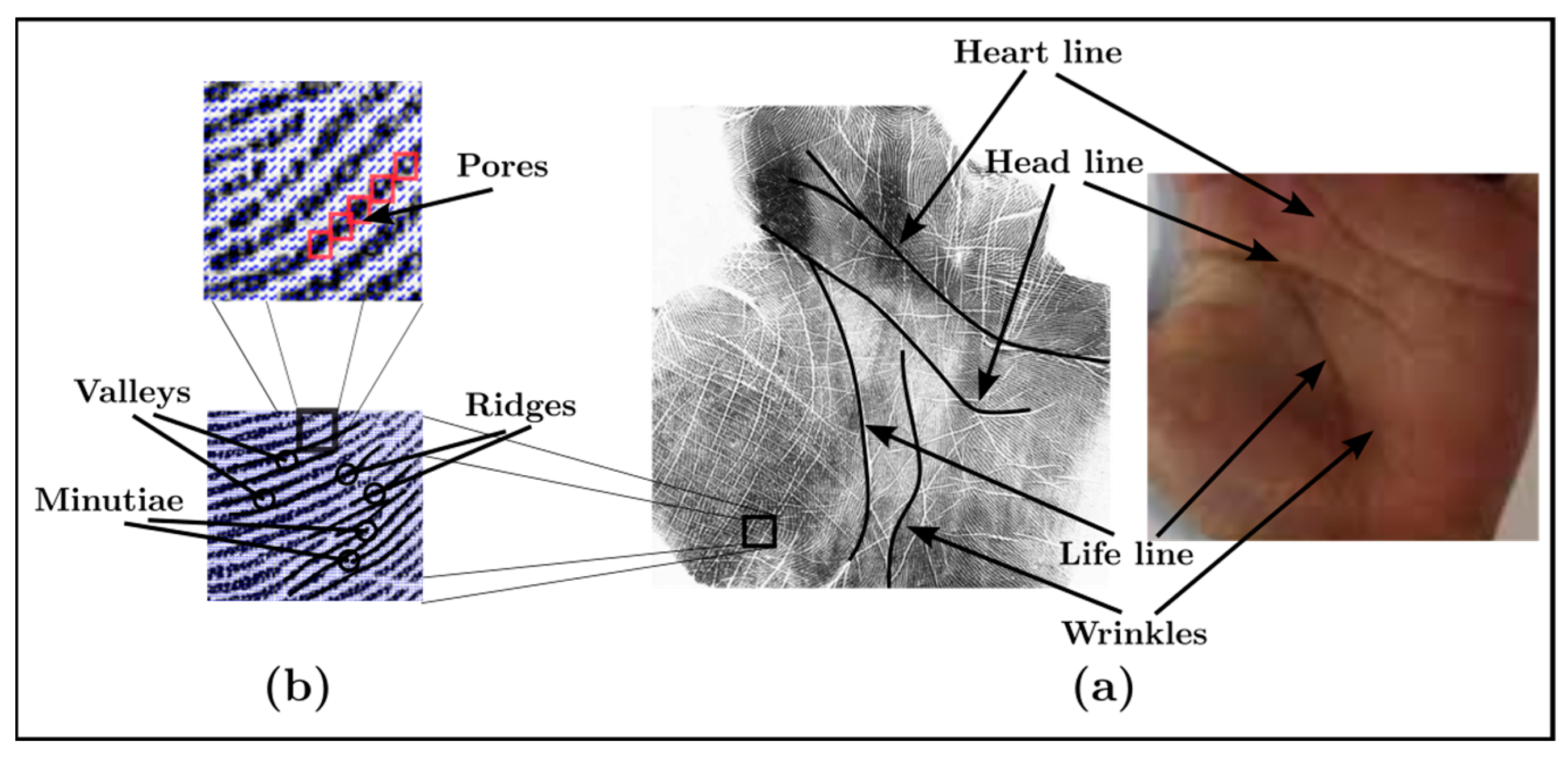

2. What Is a Palmprint?

3. Why Palmprint Recognition?

4. Structure of a Palmprint Recognition System

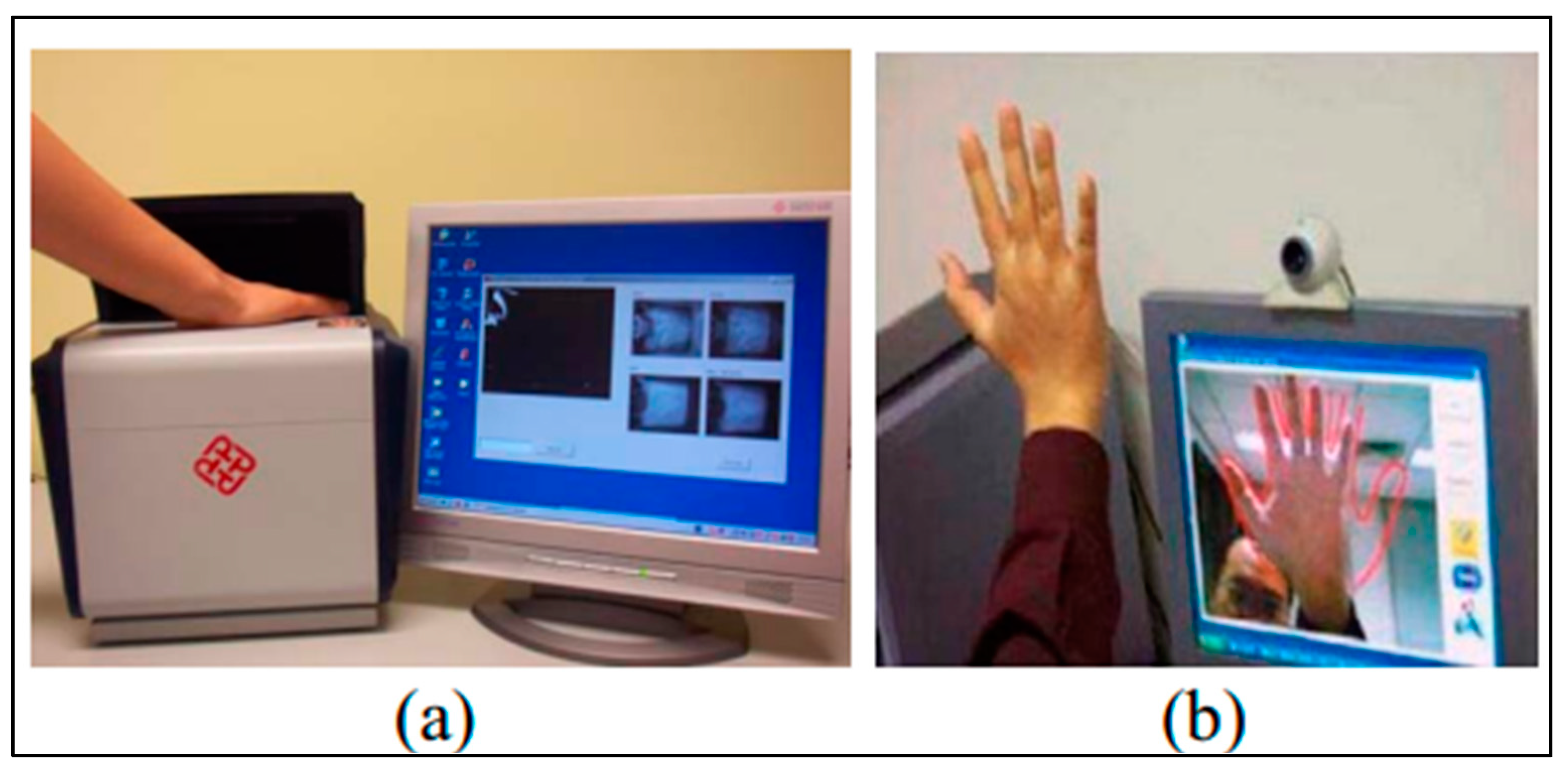

4.1. Image Acquisition

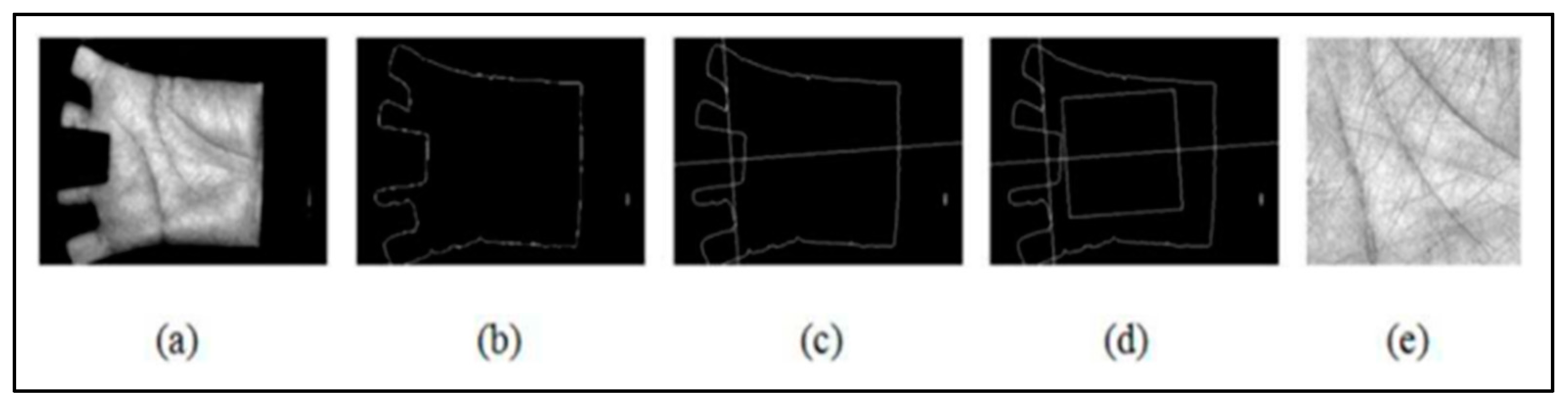

4.2. Preprocessing

4.3. Feature Extraction

4.4. Classification

4.5. Evaluation Performances

5. Palmprint Biometric Recognition Challenges

6. Databases

6.1. Contact-Based Palmprint Databases

6.1.1. PolyU (2003)

6.1.2. PolyU-MS (2009)

6.1.3. IIITDMJ (2015)

6.1.4. BJTU_PalmV1 (2019)

6.1.5. PV_790 (2020)

6.1.6. COEP

6.2. Contactless-Based Palmprint Databases

6.2.1. CASIA (2005)

6.2.2. CASIA-MS (2007)

6.2.3. IITD (2008)

6.2.4. GPDS (2011)

6.2.5. Cross-Sensor (2012)

6.2.6. REST (2016)

6.2.7. TJI (2017)

6.2.8. NTU-PI-v1 (2019)

6.2.9. NTU-CP-v1 (2019)

6.2.10. BJTU_PalmV2 (2019)

6.2.11. MPD (2020)

6.2.12. XJTU-UP (2021)

6.2.13. CrossDevice-A (2022)

6.2.14. CrossDevice-B (2022)

7. Feature Extraction Approaches

7.1. Line-Based Approaches

7.2. Subspace Learning-Based Approaches

7.3. Local Direction Encoding-Based Approaches

7.4. Texture-Based Approaches

7.5. Deep Learning-Based Approaches

7.6. Comparative Analysis

8. Future Directions

8.1. Enhancement of Palmprint Imagery through the Application of Generative Adversarial Networks

8.2. Enhancing Recognition Rates and Expediting Processing Time through the Exploitation of Soft Biometric Attributes

8.3. Utilizing Three-Dimensional Representations to Mitigate Image Acquisition Challenges

8.4. Exploring Liveness Detection and Mitigating Vulnerability to Spoofing Attacks

9. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kong, A.; Zhang, D.; Kamel, M. A Survey of Palmprint Recognition. Pattern Recognit. 2009, 42, 1408–1418.

2. Connie, T.; Jin, A.T.B.; Ong, M.G.K.; Ling, D.N.C. An Automated Palmprint Recognition System. Image Vis. Comput. 2005, 23, 501–515.

3. Zhong, D.; Du, X.; Zhong, K. Decade Progress of Palmprint Recognition: A Brief Survey. Neurocomputing 2019, 328, 16–28.

4. Trabelsi, S.; Samai, D.; Dornaika, F.; Benlamoudi, A.; Bensid, K.; Taleb-Ahmed, A. Efficient Palmprint Biometric Identification Systems Using Deep Learning and Feature Selection Methods. Neural Comput. Appl. 2022, 34, 12119–12141.

5. Zhang, D.; Zuo, W.; Yue, F. A Comparative Study of Palmprint Recognition Algorithms. ACM Comput. Surv. 2012, 44, 1–37.

6. Zhao, S.; Fei, L.; Wen, J. Multiview-Learning-Based Generic Palmprint Recognition: A Literature Review. Mathematics 2023, 11, 1261.

7. Shu, W.; Zhang, D. Automated Personal Identification by Palmprint. Opt. Eng. 1998, 37, 2359–2362.

8. Jia, W.; Huang, D.S.; Zhang, D. Palmprint Verification Based on Robust Line Orientation Code. Pattern Recognit. 2008, 41, 1504–1513.

9. Zhang, D.; Kong, W.K.; You, J.; Wong, M. Online Palmprint Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1041–1050.

10. Jain, A.K.; Feng, J. Latent Palmprint Matching. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 1032–1047.

11. Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, Present, and Future of Face Recognition: A Review. Electronics 2020, 9, 1188.

12. Ross, A.; Banerjee, S.; Chen, C.; Chowdhury, A.; Mirjalili, V.; Sharma, R.; Yadav, S. Some Research Problems in Biometrics: The Future Beckons. In Proceedings of the 2019 International Conference on Biometrics (ICB), Crete, Greece, 4–7 June 2019; pp. 1–8.

13. Alausa, D.W.; Adetiba, E.; Badejo, J.A.; Davidson, I.E.; Obiyemi, O.; Buraimoh, E.; Oshin, O. Contactless Palmprint Recognition System: A Survey. IEEE Access 2022, 10, 132483–132505.

14. Zhang, L.; Li, L.; Yang, A.; Shen, Y.; Yang, M. Towards Contactless Palmprint Recognition: A Novel Device, a New Benchmark, and a Collaborative Representation Based Identification Approach. Pattern Recognit. 2017, 69, 199–212.

15. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

16. Kong, W.K.; Zhang, D.; Li, W. Palmprint Feature Extraction Using 2-D Gabor Filters. Pattern Recognit. 2003, 36, 2339–2347.

17. Laadjel, M.; Kurugollu, F.; Bouridane, A.; Boussakta, S. Degraded Partial Palmprint Recognition for Forensic Investigations. In Proceedings of the 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 1513–1516.

18. Matkowski, W.M.; Chai, T.; Kong, A.W.K. Palmprint Recognition in Uncontrolled and Uncooperative Environment. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1601–1615.

19. Wu, X.; Zhang, D.; Wang, K.; Huang, B. Palmprint Classification Using Principal Lines. Pattern Recognit. 2004, 37, 1987–1998.

20. Fei, L.; Lu, G.; Jia, W.; Teng, S.; Zhang, D. Feature Extraction Methods for Palmprint Recognition: A Survey and Evaluation. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 346–363.

21. Fei, L.; Zhang, B.; Zhang, W.; Teng, S. Local Apparent and Latent Direction Extraction for Palmprint Recognition. Inf. Sci. 2019, 473, 59–72.

22. Xiao, Q.; Lu, J.; Jia, W.; Liu, X. Extracting Palmprint ROI from Whole Hand Image Using Straight Line Clusters. IEEE Access 2019, 7, 74327–74339.

23. Leng, L.; Liu, G.; Li, M.; Khan, M.K.; Al-Khouri, A.M. Logical Conjunction of Triple-Perpendicular-Directional Translation Residual for Contactless Palmprint Preprocessing. In Proceedings of the 11th International Conference on Information Technology: New Generations, Las Vegas, NV, USA, 7–9 April 2014; pp. 523–528.

24. Liu, Y.; Kumar, A. Contactless Palmprint Identification Using Deeply Learned Residual Features. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 172–181.

25. Ali, M.M.; Gaikwad, A.T. Multimodal Biometrics Enhancement Recognition System Based on Fusion of Fingerprint and Palmprint: A Review. Glob. J. Comput. Sci. Technol. 2016, 16, 13–26.

26. Dai, J.; Feng, J.; Zhou, J. Robust and Efficient Ridge-Based Palmprint Matching. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 1618–1632.

27. Benzaoui, A.; Khaldi, Y.; Bouaouina, R.; Amrouni, N.; Alshazly, H.; Ouahabi, A. A Comprehensive Survey on Ear Recognition: Databases, Approaches, Comparative Analysis, and Open Challenges. Neurocomputing 2023, 537, 236–270.

28. Zhang, D.; Guo, Z.; Lu, G.; Zhang, L.; Zuo, W. An Online System of Multispectral Palmprint Verification. IEEE Trans. Instrum. Meas. 2009, 59, 480–490.

29. Tamrakar, D.; Khanna, P. Occlusion Invariant Palmprint Recognition with ULBP Histograms. Procedia Comput. Sci. 2015, 54, 491–500.

30. Chai, T.; Prasad, S.; Wang, S. Boosting Palmprint Identification with Gender Information Using DeepNet. Future Gener. Comput. Syst. 2019, 99, 41–53.

31. Zhao, S.; Zhang, B. Learning Salient and Discriminative Descriptor for Palmprint Feature Extraction and Identification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5219–5230.

32. COEP Palmprint Database. Available online: https://www.coep.org.in/resources/coeppalmprintdatabase (accessed on 8 November 2023).

33. Sun, Z.; Tan, T.; Wang, Y.; Li, S.Z. Ordinal Palmprint Representation for Personal Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–26 June 2005.

34. Hao, Y.; Sun, Z.; Tan, T. Comparative Studies on Multispectral Palm Image Fusion for Biometrics. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2007; pp. 12–21.

35. Kumar, A. Incorporating Cohort Information for Reliable Palmprint Authentication. In Proceedings of the 6th Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 583–590.

36. GPDS Palmprint Image Database. Available online: https://gpds.ulpgc.es/ (accessed on 8 November 2023).

37. Jia, W.; Hu, R.X.; Gui, J.; Zhao, Y.; Ren, X.M. Palmprint Recognition across Different Devices. Sensors 2012, 12, 7938–7964.

38. Charfi, N.; Trichili, H.; Alimi, A.M.; Solaiman, B. Local Invariant Representation for Multi-Instance Touchless Palmprint Identification. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 003522–003527.

39. Zhang, Y.; Zhang, L.; Zhang, R.; Li, S.; Li, J.; Huang, F. Towards Palmprint Verification on Smartphones. arXiv 2020, arXiv:2003.13266.

40. Shao, H.; Zhong, D.; Du, X. Deep Distillation Hashing for Unconstrained Palmprint Recognition. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

41. Shen, L.; Zhang, Y.; Zhao, K.; Zhang, R.; Shen, W. Distribution Alignment for Cross-Device Palmprint Recognition. Pattern Recognit. 2022, 132, 108942.

42. Hassanat, A.; Al-Awadi, M.; Btoush, E.; Al-Btoush, A.; Alhasanat, E.A.; Altarawneh, G. New Mobile Phone and Webcam Hand Images Databases for Personal Authentication and Identification. Procedia Manuf. 2015, 3, 4060–4067.

43. Li, W.; Zhang, D.; Xu, Z. Palmprint Identification by Fourier Transform. Int. J. Pattern Recognit. Artif. Intell. 2002, 16, 417–432.

44. Jia, W.; Ling, B.; Chau, K.W.; Heutte, L. Palmprint Identification Using Restricted Fusion. Appl. Math. Comput. 2008, 205, 927–934.

45. Jia, W.; Hu, R.X.; Lei, Y.K.; Zhao, Y.; Gui, J. Histogram of Oriented Lines for Palmprint Recognition. IEEE Trans. Syst. Man Cybern. Syst. 2013, 44, 385–395.

46. Luo, Y.T.; Zhao, L.Y.; Zhang, B.; Jia, W.; Xue, F.; Lu, J.T.; Xu, B.Q. Local Line Directional Pattern for Palmprint Recognition. Pattern Recognit. 2016, 50, 26–44.

47. Mokni, R.; Drira, H.; Kherallah, M. Combining Shape Analysis and Texture Pattern for Palmprint Identification. Multimed. Tools Appl. 2017, 76, 23981–24008.

48. Gumaei, A.; Sammouda, R.; Al-Salman, A.M.; Alsanad, A. An Effective Palmprint Recognition Approach for Visible and Multispectral Sensor Images. Sensors 2018, 18, 1575.

49. Zhou, K.; Zhou, X.; Yu, L.; Shen, L.; Yu, S. Double Biologically Inspired Transform Network for Robust Palmprint Recognition. Neurocomputing 2019, 337, 24–45.

50. Wu, X.; Zhang, D.; Wang, K. Fisherpalms Based Palmprint Recognition. Pattern Recognit. Lett. 2003, 24, 2829–2838.

51. Hu, D.; Feng, G.; Zhou, Z. Two-Dimensional Locality Preserving Projections (2DLPP) with Its Application to Palmprint Recognition. Pattern Recognit. 2007, 40, 339–342.

52. Pan, X.; Ruan, Q.Q. Palmprint Recognition with Improved Two-Dimensional Locality Preserving Projections. Image Vis. Comput. 2008, 26, 1261–1268.

53. Lu, J.; Tan, Y.P. Improved Discriminant Locality Preserving Projections for Face and Palmprint Recognition. Neurocomputing 2011, 74, 3760–3767.

54. Rida, I.; Al-Maadeed, S.; Mahmood, A.; Bouridane, A.; Bakshi, S. Palmprint Identification Using an Ensemble of Sparse Representations. IEEE Access 2018, 6, 3241–3248.

55. Rida, I.; Herault, R.; Marcialis, G.L.; Gasso, G. Palmprint Recognition with an Efficient Data Driven Ensemble Classifier. Pattern Recognit. Lett. 2019, 126, 21–30.

56. Wan, M.; Chen, X.; Zhan, T.; Xu, C.; Yang, G.; Zhou, H. Sparse Fuzzy Two-Dimensional Discriminant Local Preserving Projection (SF2DDLPP) for Robust Image Feature Extraction. Inf. Sci. 2021, 563, 1–15.

57. Zhao, S.; Wu, J.; Fei, L.; Zhang, B.; Zhao, P. Double-Cohesion Learning Based Multiview and Discriminant Palmprint Recognition. Inf. Fusion 2022, 83, 96–109.

58. Wan, M.; Chen, X.; Zhan, T.; Yang, G.; Tan, H.; Zheng, H. Low-Rank 2D Local Discriminant Graph Embedding for Robust Image Feature Extraction. Pattern Recognit. 2023, 133, 109034.

59. Kumar, A.; Shen, H.C. Palmprint Identification Using Palmcodes. In Proceedings of the 3rd International Conference on Image and Graphics (ICIG’04), Hong Kong, China, 18–20 December 2004; pp. 258–261.

60. Kong, A.; Zhang, D.; Kamel, M. Palmprint Identification Using Feature-Level Fusion. Pattern Recognit. 2006, 39, 478–487.

61. Mansoor, A.B.; Masood, H.; Mumtaz, M.; Khan, S.A. A Feature Level Multimodal Approach for Palmprint Identification Using Directional Subband Energies. J. Netw. Comput. Appl. 2011, 34, 159–171.

62. Zhang, L.; Li, H.; Niu, J. Fragile Bits in Palmprint Recognition. IEEE Signal Process. Lett. 2012, 19, 663–666.

63. Zhang, S.; Gu, X. Palmprint Recognition Method Based on Score Level Fusion. Optik-Int. J. Light Electron Opt. 2013, 124, 3340–3344.

64. Li, H.; Zhang, J.; Wang, L. Robust Palmprint Identification Based on Directional Representations and Compressed Sensing. Multimed. Tools Appl. 2014, 70, 2331–2345.

65. Fei, L.; Xu, Y.; Tang, W.; Zhang, D. Double-Orientation Code and Nonlinear Matching Scheme for Palmprint Recognition. Pattern Recognit. 2016, 49, 89–101.

66. Xu, Y.; Fei, L.; Wen, J.; Zhang, D. Discriminative and Robust Competitive Code for Palmprint Recognition. IEEE Trans. Syst. Man Cybern. Syst. 2016, 48, 232–241.

67. Almaghtuf, J.; Khelifi, F.; Bouridane, A. Fast and Efficient Difference of Block Means Code for Palmprint Recognition. Mach. Vis. Appl. 2020, 31, 1–10.

68. Liang, L.; Chen, T.; Fei, L. Orientation Space Code and Multi-Feature Two-Phase Sparse Representation for Palmprint Recognition. Int. J. Mach. Learn. Cybern. 2020, 11, 1453–1461.

69. Hammami, M.; Ben Jemaa, S.; Ben-Abdallah, H. Selection of Discriminative Sub-Regions for Palmprint Recognition. Multimed. Tools Appl. 2014, 68, 1023–1050.

70. Raghavendra, R.; Busch, C. Texture Based Features for Robust Palmprint Recognition: A Comparative Study. EURASIP J. Inf. Secur. 2015, 2015, 5.

71. Tamrakar, D.; Khanna, P. Kernel Discriminant Analysis of Block-Wise Gaussian Derivative Phase Pattern Histogram for Palmprint Recognition. J. Vis. Commun. Image Represent. 2016, 40, 432–448.

72. Doghmane, H.; Bourouba, H.; Messaoudi, K.; Bouridane, A. Palmprint Recognition Based on Discriminant Multiscale Representation. J. Electron. Imaging 2018, 27, 053032.

73. Zhang, S.; Wang, H.; Huang, W.; Zhang, C. Combining Modified LBP and Weighted SRC for Palmprint Recognition. Signal Image Video Process. 2018, 12, 1035–1042.

74. El-Tarhouni, W.; Boubchir, L.; Elbendak, M.; Bouridane, A. Multispectral Palmprint Recognition Using Pascal Coefficients-Based LBP and PHOG Descriptors with Random Sampling. Neural Comput. Appl. 2019, 31, 593–603.

75. Attallah, B.; Serir, A.; Chahir, Y. Feature Extraction in Palmprint Recognition Using Spiral of Moment Skewness and Kurtosis Algorithm. Pattern Anal. Appl. 2019, 22, 1197–1205.

76. Chaudhary, G.; Srivastava, S. A Robust 2D-Cochlear Transform-Based Palmprint Recognition. Soft Comput. 2020, 24, 2311–2328.

77. Zhang, S.; Wang, H.; Huang, W. Palmprint Identification Combining Hierarchical Multi-Scale Complete LBP and Weighted SRC. Soft Comput. 2020, 24, 4041–4053.

78. Amrouni, N.; Benzaoui, A.; Bouaouina, R.; Khaldi, Y.; Adjabi, I.; Bouglimina, O. Contactless Palmprint Recognition Using Binarized Statistical Image Features-Based Multiresolution Analysis. Sensors 2022, 22, 9814.

79. Harrou, F.; Dairi, A.; Zeroual, A.; Sun, Y. Forecasting of Bicycle and Pedestrian Traffic Using Flexible and Efficient Hybrid Deep Learning Approach. Appl. Sci. 2022, 12, 4482.

80. Zeroual, A.; Harrou, F.; Dairi, A.; Sun, Y. Deep Learning Methods for Forecasting COVID-19 Time-Series Data: A Comparative Study. Chaos Solitons Fractals 2020, 140, 110121.

81. Izadpanahkakhk, M.; Razavi, S.M.; Taghipour-Gorjikolaie, M.; Zahiri, S.H.; Uncini, A. Deep Region of Interest and Feature Extraction Models for Palmprint Verification Using Convolutional Neural Networks Transfer Learning. Appl. Sci. 2018, 8, 1210.

82. Genovese, A.; Piuri, V.; Plataniotis, K.N.; Scotti, F. PalmNet: Gabor-PCA Convolutional Networks for Touchless Palmprint Recognition. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3160–3174.

83. Zhao, S.; Zhang, B. Deep Discriminative Representation for Generic Palmprint Recognition. Pattern Recognit. 2020, 98, 107071.

84. Liu, C.; Zhong, D.; Shao, H. Few-Shot Palmprint Recognition Based on Similarity Metric Hashing Network. Neurocomputing 2021, 456, 540–549.

85. Shao, H.; Zhong, D. Towards Open-Set Touchless Palmprint Recognition via Weight-Based Meta Metric Learning. Pattern Recognit. 2022, 121, 108247.

86. Türk, Ö.; Çalışkan, A.; Acar, E.; Ergen, B. Palmprint Recognition System Based on Deep Region of Interest Features with the Aid of Hybrid Approach. Signal Image Video Process. 2023, 17, 3837–3845.

87. Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative Adversarial Networks: An Overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

88. Yi, X.; Walia, E.; Babyn, P. Generative Adversarial Network in Medical Imaging: A Review. Med. Image Anal. 2019, 58, 101552.

89. Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A Review on Generative Adversarial Networks: Algorithms, Theory, and Applications. IEEE Trans. Knowl. Data Eng. 2021, 35, 3313–3332.

90. Khaldi, Y.; Benzaoui, A. A New Framework for Grayscale Ear Images Recognition Using Generative Adversarial Networks under Unconstrained Conditions. Evol. Syst. 2021, 12, 923–934.

91. Hassan, B.; Izquierdo, E.; Piatrik, T. Soft Biometrics: A Survey—Benchmark Analysis, Open Challenges, and Recommendations. Multimed. Tools Appl. 2021, 1–44.

92. Alonso-Fernandez, F.; Diaz, K.H.; Ramis, S.; Perales, F.J.; Bigun, J. Soft-Biometrics Estimation in the Era of Facial Masks. In Proceedings of the International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 16–18 September 2020; pp. 1–6.

93. Nixon, M.S.; Correia, P.L.; Nasrollahi, K.; Moeslund, T.B.; Hadid, A.; Tistarelli, M. On Soft Biometrics. Pattern Recognit. Lett. 2015, 68, 218–230.

94. Zhang, H.; Beveridge, J.R.; Draper, B.A.; Phillips, P.J. On the Effectiveness of Soft Biometrics for Increasing Face Verification Rates. Comput. Vis. Image Underst. 2015, 137, 50–62.

95. Jia, W.; Gao, J.; Xia, W.; Zhao, Y.; Min, H.; Lu, J.T. A Performance Evaluation of Classic Convolutional Neural Networks for 2D and 3D Palmprint and Palm Vein Recognition. Int. J. Autom. Comput. 2021, 18, 18–44.

96. Fei, L.; Zhang, B.; Xu, Y.; Jia, W.; Wen, J.; Wu, J. Precision Direction and Compact Surface Type Representation for 3D Palmprint Identification. Pattern Recognit. 2019, 87, 237–247.

97. Chaa, M.; Akhtar, Z.; Attia, A. 3D Palmprint Recognition Using Unsupervised Convolutional Deep Learning Network and SVM Classifier. IET Image Process. 2019, 13, 736–745.

98. Bai, X.; Meng, Z.; Gao, N.; Zhang, Z.; Zhang, D. 3D Palmprint Identification Using Blocked Histogram and Improved Sparse Representation-Based Classifier. Neural Comput. Appl. 2020, 32, 12547–12560.

99. Bhilare, S.; Kanhangad, V.; Chaudhari, N. A Study on Vulnerability and Presentation Attack Detection in Palmprint Verification System. Pattern Anal. Appl. 2018, 21, 769–782.

100. Li, X.; Bu, W.; Wu, X. Palmprint Liveness Detection by Combining Binarized Statistical Image Features and Image Quality Assessment. In Proceedings of the Biometric Recognition: 10th Chinese Conference, CCBR 2015, Tianjin, China, 13–15 November 2015; pp. 275–283.

101. Farmanbar, M.; Toygar, Ö. Spoof Detection on Face and Palmprint Biometrics. Signal Image Video Process. 2017, 11, 1253–1260.

102. Aydoğdu, Ö.; Sadreddini, Z.; Ekinci, M. A Study on Liveness Analysis for Palmprint Recognition System. In Proceedings of the 41st International Conference on Telecommunications and Signal Processing (TSP), Athens, Greece, 4–6 July 2018; pp. 1–4.

| Database | Year | Developer | #Imgs | #IDs | #Imgs/ID | Resolution | Norm. | Var. | Acquisition Device |
|---|---|---|---|---|---|---|---|---|---|
| PolyU [9] | 2003 | The Hong Kong Polytechnic University | 7752 | 386 | ≈20 | 384 × 284 | No | No | Scanner |
| PolyU-MS [28] | 2009 | The Hong Kong Polytechnic University | 6000 × 4 (red, green, blue, and NIR) | 500 × 4 | 12 | 700 × 500 | Yes | No | Scanner |
| IIITDMJ [29] | 2015 | Indian Institute of Information Technology, Design and Manufacturing, Jabalpur | 900 | 150 | 6 | 700 × 500 | No | Small | Scanner |
| BJTU_PalmV1 [30] | 2019 | Institute of Information Science, Beijing Jiaotong University | 2431 | 174 | From 8 to 10 | 1792 × 1200 | No | Large | 2 CCD cameras |
| PV_790 [31] | 2020 | University of Macau | 5180 | 518 | 10 | N/A | No | No | Scanner |
| COEP [32] | N/A | College of Engineering, Pune | 1344 | 168 | 8 | 1600 × 1200 | No | No | Scanner |

| Database | Year | Developer | #Imgs | #IDs | #Imgs/ID | Resolution | Norm. | Var. | Acquisition Device |
|---|---|---|---|---|---|---|---|---|---|
| CASIA [33] | 2005 | Chinese Academy of Sciences, Institute of Automation | 5502 | 624 | ≈8 | 640 × 480 | No | Small | Digital camera |
| CASIA-MS [34] | 2007 | Chinese Academy of Sciences, Institute of Automation | 1200 | 200 | 6 | 700 × 500 | No | Large | Digital camera |
| IITD [35] | 2008 | Indian Institute of Technology, Delhi | 2601 | 460 | ≈6 | 800 × 600 | Yes | Small | Digital camera |
| GPDS [36] | 2011 | Las Palmas de Gran Canaria University | 1000 | 100 | 10 | 800 × 600 | Yes | Small | 2 webcams |
| Cross-Sensor [37] | 2012 | Chinese Academy of Sciences | 12,000 | 200 | 60 | 816 × 612; 778 × 581; 816 × 612 | No | Large | 1 digital camera + 2 mobile phones |
| REST [38] | 2016 | Sfax University, Tunisia | 1945 | 358 | ≈5 | 2048 × 1536 | No | Small | Digital camera |
| TJI [14] | 2017 | Tongji University, China | 12,000 | 600 | 20 | 800 × 600 | No | Small | Developed device |
| NTU-PI-v1 [18] | 2019 | Nanyang Technological University, Singapore | 7781 | 2035 | ≈4 | From 30 × 30 to 1415 × 1415 | No | Large | From internet |
| NTU-CP-v1 [18] | 2019 | Nanyang Technological University, Singapore | 2478 | 655 | ≈4 | From 420 × 420 to 1977 × 1977 | No | Large | Digital camera |
| BJTU_PalmV2 [30] | 2019 | Institute of Information Science, Beijing Jiaotong University | 2663 | 296 | From 6 to 10 | 3264 × 2448 | No | Large | Several mobile phones |
| MPD [39] | 2020 | Tongji University, China | 16,000 | 400 | 40 | N/A | No | Large | Mobile phone |
| XJTU-UP [40] | 2021 | Xi’an Jiaotong University, China | >20,000 | 200 | ≈100 | From 3264 × 2448 to 5312 × 2988 | Yes | Large | 5 smartphone cameras |
| CrossDevice-A [41] | 2022 | Tencent Youtu Lab, China | 18,600 | 310 | 60 | N/A | No | Large | Mobile phone + IoT |
| CrossDevice-B [41] | 2022 | Tencent Youtu Lab, China | 6000 | 200 | 30 | N/A | No | Large | Mobile phone + webcam |
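
The #Imgs/ID column in the two database tables can be cross-checked against the #Imgs and #IDs columns, and the same figures give a simple chronological view of how the databases have grown. The following is a minimal sketch on a few rows copied from the tables; the tuple layout and the helper name `images_per_identity` are illustrative assumptions, not part of any database's tooling.

```python
# Rows copied from the database tables: (name, year, images, identities).
DATABASES = [
    ("PolyU", 2003, 7752, 386),
    ("CASIA", 2005, 5502, 624),
    ("IITD", 2008, 2601, 460),
    ("TJI", 2017, 12000, 600),
    ("MPD", 2020, 16000, 400),
]


def images_per_identity(n_images: int, n_identities: int) -> float:
    """Average number of images per identity (hypothetical helper)."""
    return n_images / n_identities


# Chronological view: (year, name, rounded images per identity).
timeline = sorted(
    (year, name, round(images_per_identity(imgs, ids), 1))
    for name, year, imgs, ids in DATABASES
)

for year, name, per_id in timeline:
    print(f"{year}  {name:<6} ~{per_id} images per identity")
```

The computed values are averages, so they can differ slightly from the rounded "≈" entries in the tables when identities contribute unequal numbers of images.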

| Year | Paper | Method | Dataset | #IDs | #Imgs | Evaluation Protocol | Acc. (%) |
|---|---|---|---|---|---|---|---|
| 2002 | Li et al. [43] | Fourier transform | Private | 500 | 3000 | 1 Img/sub (Rand) for training, remaining 5 Imgs for testing | 95.48 |
| 2008 | Jia et al. [44] | PL + LPP | PolyU | 100 | 600 | First session for training, second session for testing | 100.00 |
| 2014 | Jia et al. [45] | HOL | PolyU | 386 | 7752 | First 3 Imgs/sub from 1st session for training, all images from 2nd session for testing | 99.97 |
| | | | PolyU-MS | 500 | 6000 | Same as above | 100.00 |
| 2016 | Luo et al. [46] | LLDP | PolyU | 386 | 7752 | First 3 Imgs from 1st session for training, second session for testing | 100.00 |
| | | | PolyU-MS | 500 | 6000 | Same as above | 100.00 |
| | | | Cross-Sensor | 200 | 12,000 | Same as above | 98.45 |
| | | | IITD | 460 | 2601 | First Img/sub for training, remaining (4–6) for testing | 92.00 |
| 2017 | Mokni et al. [47] | Principal lines + texture pattern | PolyU | 386 | 7752 | All Imgs from 1st session for training, 5 Imgs (Rand) from second session for testing | 96.99 |
| | | | CASIA | 312 | 5502 | 5 Imgs/sub for training, 3 Imgs/sub for testing (only right hands) | 98.00 |
| | | | IITD | 460 | 2300 | 3 Imgs/sub for training, 2 Imgs/sub for testing | 97.98 |
| 2018 | Gumaei et al. [48] | HOG-SGF + AE | PolyU-MS | 500 | 6000 | First 3 Imgs from 1st session for training, 6 Imgs from second session for testing | 99.22 |
| | | | CASIA | 614 | 5502 | 6 Imgs/sub for training, remaining for testing (Rand) | 97.75 |
| | | | TJI | 600 | 12,000 | First session for training, second session for testing | 98.85 |
| 2019 | Zhou et al. [49] | DBIT | PolyU-MS | 500 | 6000 | 5-fold cross-validation | 99.83 |
| | | | PolyU | 386 | 7752 | Same as above | 98.85 |
| | | | CASIA | 614 | 5502 | Same as above | 97.02 |
| | | | IITD | 460 | 2601 | Same as above | 94.79 |
| | | | COEP | 168 | 1344 | Same as above | 97.64 |
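
Most entries in the Evaluation Protocol column describe a per-subject split: a fixed number of images per subject is used for training and the rest for testing, taken either in acquisition order ("First n Imgs/sub") or at random ("Rand"). The following is a minimal sketch of such a split, assuming that images are listed in acquisition order and that each image carries a subject label; the function name `split_per_subject` and its parameters are hypothetical and not taken from any of the cited papers.

```python
import random
from collections import defaultdict


def split_per_subject(labels, n_train, shuffle=False, seed=0):
    """Return (train_idx, test_idx) with n_train images per subject for
    training and that subject's remaining images for testing.

    labels[i] is the subject ID of image i. With shuffle=False the first
    n_train images of each subject are used ("First n" protocol); with
    shuffle=True they are drawn at random ("Rand" protocol).
    """
    by_subject = defaultdict(list)
    for idx, subject in enumerate(labels):
        by_subject[subject].append(idx)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for subject in sorted(by_subject):
        indices = list(by_subject[subject])
        if shuffle:
            rng.shuffle(indices)
        train_idx.extend(indices[:n_train])
        test_idx.extend(indices[n_train:])
    return train_idx, test_idx


# Toy example: 3 subjects with 6 images each (not a real database).
labels = [s for s in ("A", "B", "C") for _ in range(6)]
train, test = split_per_subject(labels, n_train=3)
print(len(train), len(test))  # 9 9
```

For protocols that repeat a random split several times (for example "Rand, 10 iterations"), the same function could be called with different `seed` values and the resulting accuracies averaged.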

| Year | Paper | Method | Dataset | #IDs | #Imgs | Evaluation Protocol | Acc. (%) |
|---|---|---|---|---|---|---|---|
| 2003 | Wu et al. [50] | Fisherpalms | Private | 300 | 3000 | 6 Imgs/sub (Rand) for training, remaining 4 Imgs/sub for testing | 99.75 |
| 2005 | Connie et al. [2] | WFDA | Private | 150 | 900 | 3 Imgs/sub for training, remaining 3 Imgs/sub for testing | 98.64 |
| 2007 | Hu et al. [51] | 2DLPP | PolyU | 100 | 600 | First session for training, second session for testing | 84.67 |
| 2008 | Pan and Ruan [52] | I2DLPPG | Private-1 | 40 | 400 | 5 Imgs/sub for training (Rand), remaining for testing | 99.50 |
| | | | Private-2 | 346 | 1730 | 5-fold cross-validation | 95.77 |
| 2011 | Lu and Tan [53] | W2D-DLPP | PolyU | 100 | 600 | 4 Imgs/sub for training (Rand, 20 iterations), remaining for testing | 94.90 |
| 2018 | Rida et al. [54] | SR | PolyU-MS | 500 | 6000 | 4 Imgs/sub for training, remaining for testing (Rand, 10 iterations) | 99.24 |
| | | | PolyU | 374 | 3740 | Same as above | 99.87 |
| 2019 | Rida et al. [55] | RSM | PolyU | 374 | 3740 | First 4 Imgs/sub for training, remaining for testing | 99.96 |
| | | | PolyU-MS | 500 | 6000 | Same as above | 99.15 |
| | | | Private | 400 | 8000 | Same as above | 94.50 |
| 2021 | Wan et al. [56] | SF2DDLPP | PolyU | 100 | 600 | 50% for training (Rand), 50% for testing | 95.18 |
| 2022 | Zhao et al. [57] | DC_MDPR | IITD | 460 | 2601 | 4 Imgs/sub for training, remaining for testing (Rand, 10 iterations) | 98.43 |
| | | | GPDS | 100 | 1000 | Same as above | 98.93 |
| | | | CASIA | 624 | 5500 | Same as above | 99.11 |
| | | | TJI | 600 | 12,000 | Same as above | 99.44 |
| | | | PV_790 | 418 | 4180 | Same as above | 99.76 |
| 2023 | Wan et al. [58] | LR-2DLDGE | PolyU | 100 | 600 | 50% for training (Rand, 10 iterations), 50% for testing | 93.87 |

| Year | Paper | Method | Dataset | #IDs | #Imgs | Evaluation Protocol | Acc. (%) |
|---|---|---|---|---|---|---|---|
| 2004 | Kumar and Shen [59] | PalmCode | Private | 40 | 800 | 1 Img/sub for training, remaining for testing (both palms) | 98.00 |
| 2006 | Kong et al. [60] | Fusion Code | Private | 488 | 9599 | First session for training, second session for testing | 98.26 |
| 2011 | Mansoor et al. [61] | CT + NSCT | PolyU | 386 | 7752 | 5 Imgs/sub for training, remaining 3 for testing | 88.91 |
| | | | GPDS | 50 | 500 | 3 Imgs/sub for training, remaining 7 for testing | 98.20 |
| 2012 | Zhang et al. [62] | E-BOCV | PolyU | 384 | 7752 | First session for training, second session for testing | EER = 0.03 |
| 2013 | Zhang and Gu [63] | Competitive Coding + TPTSR | PolyU-MS | 500 | 6000 | First 3 Imgs/sub for training, remaining 6 Imgs/sub for testing | 98.00 |
| 2014 | Li et al. [64] | DR + PCA | PolyU | 100 | 600 | First 3 Imgs/sub for training, remaining for testing | 97.00 |
| 2016 | Fei et al. [65] | DOC | PolyU | 374 | 3740 | First 2 Imgs/sub for training, remaining for testing | 99.27 |
| | | | PolyU-MS | 500 | 6000 | | 98.60 |
| | | | IITD | 460 | 2300 | | 78.70 |
| 2016 | Xu et al. [66] | DRCC | PolyU | 386 | 7752 | First 2 Imgs/sub for training, remaining for testing | 98.79 |
| | | | PolyU-MS | 500 | 6000 | | 98.67 |
| | | | IITD | 460 | 2600 | | 81.38 |
| 2020 | Almaghtuf et al. [67] | DBM | PolyU | 386 | 7752 | First 3 Imgs/sub for training, remaining 3 for testing | 100.00 |
| | | | PolyU-MS | 500 | 6000 | | 100.00 |
| | | | IITD | 230 | 3220 | | 95.40 |
| | | | CASIA | 624 | 5502 | First 2 Imgs/sub for training, remaining 2 for testing | 93.90 |
| 2020 | Liang et al. [68] | MTPSR | PolyU | 386 | 7752 | First 4 Imgs/sub for training, remaining for testing | 99.58 |
| | | | GPDS | 100 | 1000 | | 96.83 |
| | | | IITD | 460 | 2601 | | 97.11 |
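Many protocols in the table above take the form "first k Imgs/sub for training, remaining for testing". The following is a minimal sketch of how such a split and a rank-1 accuracy figure could be computed. It assumes each sample is a hypothetical `(subject_id, capture_index, feature)` tuple and uses plain nearest-neighbour matching on squared Euclidean distance; the function names and toy data are illustrative only, and the surveyed papers use their own feature extractors and matchers.

```python
from collections import defaultdict


def split_first_k(samples, k):
    """Per subject, send the first k captures to the gallery, the rest to probes."""
    by_subject = defaultdict(list)
    for subject_id, capture_index, feature in samples:
        by_subject[subject_id].append((capture_index, feature))
    gallery, probes = [], []
    for subject_id, captures in by_subject.items():
        captures.sort(key=lambda c: c[0])  # order by capture index
        for position, (_, feature) in enumerate(captures):
            target = gallery if position < k else probes
            target.append((subject_id, feature))
    return gallery, probes


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def rank1_accuracy(gallery, probes):
    """Percentage of probes whose nearest gallery sample has the same subject."""
    correct = 0
    for true_id, feature in probes:
        nearest_id, _ = min(gallery, key=lambda g: squared_distance(g[1], feature))
        correct += nearest_id == true_id
    return 100.0 * correct / len(probes)


# Toy data (hypothetical): two subjects, four captures each, 2-D features.
samples = [
    ("A", 1, [0.0, 0.0]), ("A", 2, [0.1, 0.0]),
    ("A", 3, [0.0, 0.2]), ("A", 4, [0.2, 0.1]),
    ("B", 1, [1.0, 1.0]), ("B", 2, [0.9, 1.0]),
    ("B", 3, [1.0, 0.8]), ("B", 4, [0.4, 0.4]),
]
gallery, probes = split_first_k(samples, k=2)
accuracy = rank1_accuracy(gallery, probes)
print(accuracy)  # 75.0: the last capture of subject B lies closer to subject A
```

Because the split depends only on capture order, results obtained with different values of k (or with random splits) are not directly comparable, which is worth keeping in mind when reading the accuracy column.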

| Year | Paper | Method | Dataset | #IDs | #Imgs | Evaluation Protocol | Acc. (%) |
|---|---|---|---|---|---|---|---|
| 2014 | Hammami et al. [69] | LBP + SFFS | CASIA | 564 | 4512 | 5 Imgs/sub for training, remaining 3 Imgs/sub for testing (Rand) | 97.53 |
| | | | PolyU | 386 | 7752 | First session for training, second session for testing | 95.35 |
| 2015 | Raghavendra and Busch [70] | B-BSIF | PolyU | 352 | 7040 | First 10 Imgs/sub for training, remaining 10 Imgs/sub for testing | EER = 4.06 |
| | | | IITD | 470 | 2350 | 4 Imgs/sub for training, remaining 1 Img/sub for testing (Rand, 10 iterations) | EER = 0.42 |
| | | | PolyU-MS | 500 | 6000 | First session for training, second session for testing | EER = 0.00 |
| 2016 | Tamrakar and Khanna [71] | BGDPPH + KDA | PolyU | 400 | 8000 | 4-fold cross-validation | 99.98 |
| | | | CASIA | 624 | 5335 | 3-fold cross-validation | 99.22 |
| | | | IITD | 430 | 2400 | | 99.19 |
| | | | IIITDMJ | 150 | 900 | | 100.00 |
| | | | PolyU-MS | 500 | 6000 | 2-fold cross-validation | 100.00 |
| | | | CASIA-MS | 200 | 1200 | | 98.99 |
| 2018 | Doghmane et al. [72] | DGLSPH | PolyU | 386 | 7752 | First 3 Imgs/sub for training, remaining for testing | 99.95 |
| | | | PolyU 2D/3D | 400 | 8000 | | 99.95 |
| | | | IITD | 460 | 2601 | | 99.57 |
| 2018 | Zhang et al. [73] | WACS-LBP + WSRC | PolyU | 386 | 7752 | First 10 Imgs/sub for training, remaining 10 Imgs/sub for testing | 99.14 |
| | | | CASIA | 624 | 5502 | 7 Imgs/sub for training, remaining for testing (50 Rand iterations) | 98.06 |
| 2019 | El-Tarhouni et al. [74] | PCMLBP + PHOG | PolyU | 500 | 6000 | First session for training, second session for testing | 99.34 |
| 2019 | Attallah et al. [75] | LBP + Spiral features + mRMR | PolyU | 352 | 7040 | First session for training, second session for testing | ≈97.00 |
| | | | IITD | 470 | 2350 | 4 Imgs/sub for training, remaining for testing | ≈98.00 |
| | | | PolyU-MS | 500 | 6000 | First session for training, second session for testing | ≈99.00 |
| 2020 | Chaudhary and Srivastava [76] | 2DCT | IITD | 200 | 1200 | 50% for training (first Imgs), 50% for testing | 97.85 |
| | | | CASIA | 312 | 2496 | | 98.66 |
| | | | PolyU | 386 | 7752 | | 99.80 |
| 2020 | Zhang et al. [77] | HMS-CLBP + WSRC | PolyU | 386 | 7752 | 5-fold cross-validation (50 iterations) | 99.68 |
| | | | CASIA | 312 | 5335 | | 97.13 |
| 2022 | Amrouni et al. [78] | BSIF + DWT | IITD | 460 | 2601 | First 3 Imgs/sub for training, remaining for testing | 98.77 |
| | | | CASIA | 624 | 4992 | First 4 Imgs/sub for training, remaining for testing | 98.10 |
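Some entries above report an equal error rate (EER) rather than accuracy. As a rough sketch of what that figure measures, the snippet below sweeps a decision threshold over matching distances and returns the point where the false acceptance rate (FAR) and false rejection rate (FRR) are closest. The function name, the "lower distance means more similar" convention, and the toy scores are assumptions for illustration; the cited papers may interpolate the ROC curve or use similarity scores instead.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER (%) from matching distances (lower = more similar).

    Every observed distance is tried as a threshold; the EER is taken as the
    mean of FAR and FRR at the threshold where the two rates are closest.
    """
    best_gap, eer = None, None
    for threshold in sorted(set(genuine) | set(impostor)):
        # False rejection: a genuine pair whose distance exceeds the threshold.
        frr = sum(d > threshold for d in genuine) / len(genuine)
        # False acceptance: an impostor pair at or below the threshold.
        far = sum(d <= threshold for d in impostor) / len(impostor)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return 100.0 * eer


# Toy distances (hypothetical): one genuine pair overlaps the impostor range.
genuine_scores = [0.10, 0.15, 0.20, 0.45]
impostor_scores = [0.30, 0.50, 0.60, 0.70]
print(equal_error_rate(genuine_scores, impostor_scores))  # 25.0

# Perfectly separated distributions give an EER of zero.
print(equal_error_rate([0.1, 0.2], [0.5, 0.6]))  # 0.0
```

A lower EER is better, so an entry such as "EER = 0.00" corresponds to fully separated genuine and impostor score distributions on that dataset and protocol.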

| Year | Paper | Method | Dataset | #IDs | #Imgs | Evaluation Protocol | Acc. (%) |
|---|---|---|---|---|---|---|---|
| 2018 | Izadpanahkakhk et al. [81] | Fast CNN | PolyU | 386 | 7752 | First 4 Imgs/sub for training, remaining for testing | 99.40 |
| | | | Private | 354 | 3540 | | 98.30 |
| | | | IITD | 460 | 2600 | | 94.70 |
| 2019 | Matkowski et al. [18] | EE-PRnet | CASIA | 618 | 5502 | First 4 Imgs/sub for training, remaining for testing | 97.65 |
| | | | IITD | 460 | 2601 | | 99.61 |
| | | | PolyU | 177 | 1770 | 50% for training, 50% for testing (Rand) | 99.77 |
| | | | NTU-CP-v1 | 655 | 2478 | | 95.34 |
| | | | NTU-PI-v1 | 2035 | 7881 | | 41.92 |
| 2019 | Chai et al. [30] | PalmNet + GenderNet | PolyU 2D/3D | 177 | 1770 | 50% for training, 50% for testing | 98.98 |
| | | | IITD | 460 | 2601 | | 99.01 |
| | | | TJI | 600 | 12,000 | | 99.50 |
| | | | CASIA | 620 | 5502 | | 99.18 |
| | | | BJTU_PalmV1 | 347 | 2431 | | 100.00 |
| | | | BJTU_PalmV2 | 296 | 2663 | | 95.16 |
| 2019 | Genovese et al. [82] | PalmNet-GaborPCA | CASIA | 624 | 5455 | 2-fold cross-validation, 5 permutations (Rand) | 99.77 |
| | | | IITD | 467 | 2669 | | 99.37 |
| | | | REST | 358 | 1937 | | 97.16 |
| | | | TJI | 600 | 5182 | | 99.83 |
| 2020 | Zhao and Zhang [83] | DDR | CASIA | 624 | 5500 | 4 Imgs/sub for training, remaining for testing (Rand, 5 iterations) | 99.41 |
| | | | IITD | 460 | 2600 | | 98.70 |
| | | | PolyU-MS | 500 | 6000 | | 99.95 |
| 2020 | Liu and Kumar [24] | RFN | IITD | 230 | 1150 | 5 left Imgs for training, 5 right Imgs for testing | 99.20 |
| 2021 | Liu et al. [84] | SMHNet | PolyU-MS | 500 | 6000 | 5-way 1-shot recognition | 98.94 |
| | | | XJTU-UP | N/A | N/A | | 89.73 |
| | | | TJI | 600 | 12,000 | | 97.36 |
| 2022 | Shen et al. [41] | ArcPalm-IR50 + PTD | CASIA | 624 | 5502 | 5-fold cross-validation (4/5 for training, 1/5 for testing) | 99.85 |
| | | | IITD | 460 | 2601 | | 100.00 |
| | | | PolyU | 114 | 1140 | | 100.00 |
| | | | TJI | 600 | 12,000 | | 100.00 |
| | | | MPD | 400 | 16,000 | | 99.78 |
| | | | CrossDevice-A | 310 | 18,600 | | 99.19 |
| | | | CrossDevice-B | 200 | 6000 | | 71.20 |
| 2022 | Shao and Zhong [85] | W2ML | XJTU-UP | 200 | 2000 | 50% for training (first half), 50% for testing | 91.63 |
| | | | TJI | 600 | 12,000 | | 93.39 |
| | | | MPD | 400 | 16,000 | | 71.74 |
| | | | IITD | 460 | 2600 | | 94.02 |
| 2023 | Türk et al. [86] | CNN deep features + SVM | PolyU | 386 | 7752 | Monte Carlo cross-validation (5 iterations) | 99.72 |

| Method category | Strengths | Weaknesses |
|---|---|---|
| Line-based methods | These methods demonstrate the ability to acquire data-driven representations and exhibit strong discriminative abilities. | The training data must represent the classes under consideration comprehensively and accurately. |
| Subspace learning-based methods | These methods combine high descriptive capability with low computational cost. | They are sensitive to the size of the subspace, which must be chosen carefully. |
| Local direction encoding-based methods | These methods have a high discriminatory capability and stable characteristics. | They require significant computing resources and incur high computational costs. |
| Texture-based methods | Characteristics can be extracted from lower-resolution images, and these techniques exhibit consistent and stable characteristics. | They are highly susceptible to noise interference. |
| Deep learning-based methods | These methods demonstrate exceptional robustness when applied to large and complex datasets. | They require a significant amount of training data and incur high computational costs. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Amrouni, N.; Benzaoui, A.; Zeroual, A. Palmprint Recognition: Extensive Exploration of Databases, Methodologies, Comparative Assessment, and Future Directions. Appl. Sci. 2024, 14, 153. https://doi.org/10.3390/app14010153
