Estimating Riparian Vegetation Volume in the River by 3D Point Cloud from UAV Imagery and Alpha Shape

Abstract

1. Introduction

2. Materials and Methods

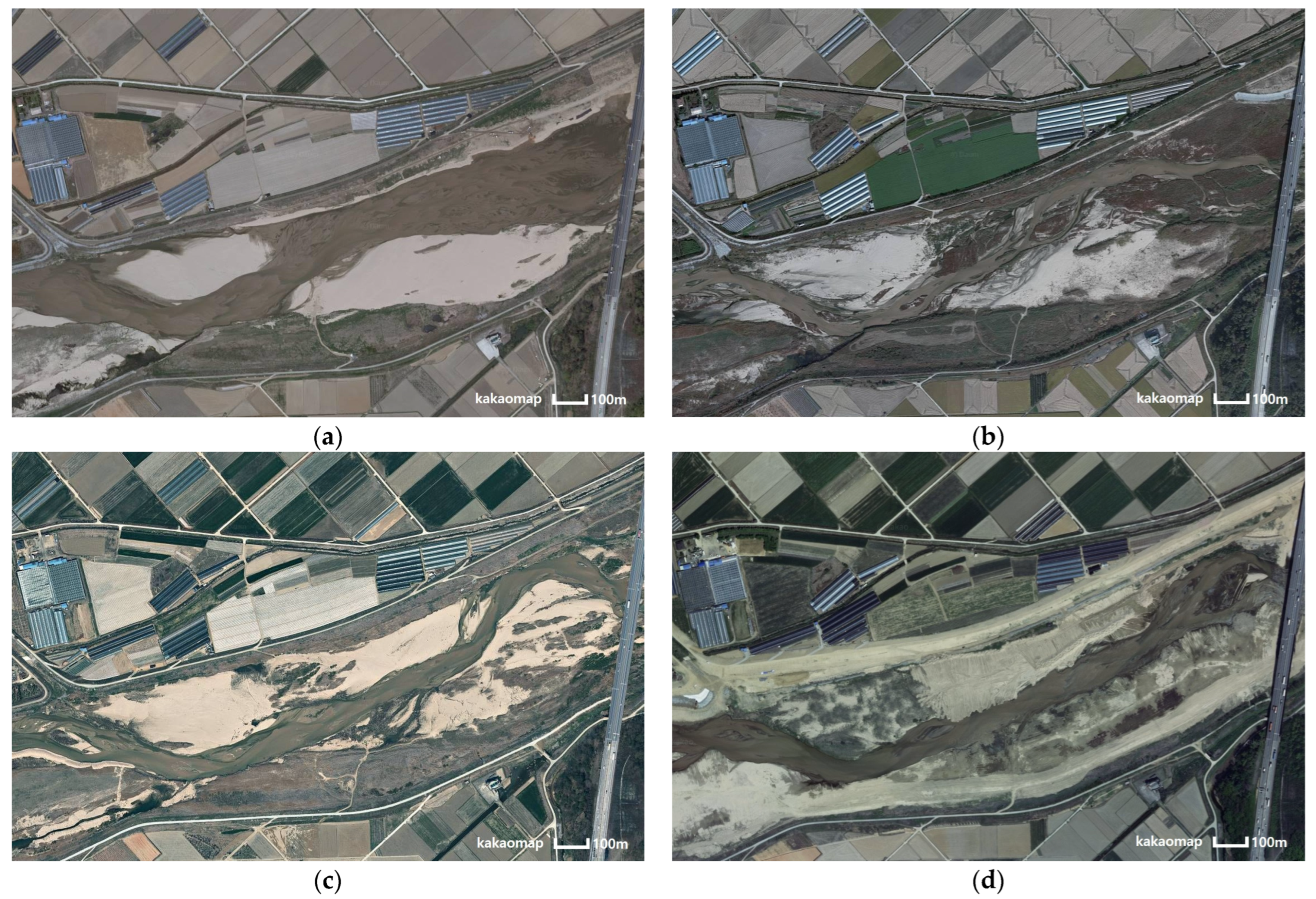

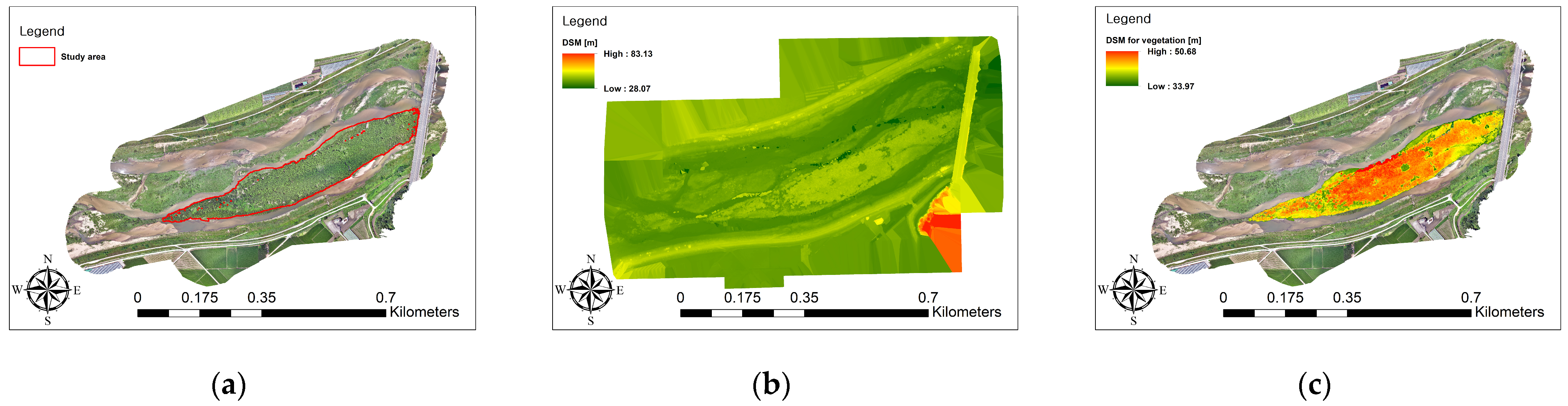

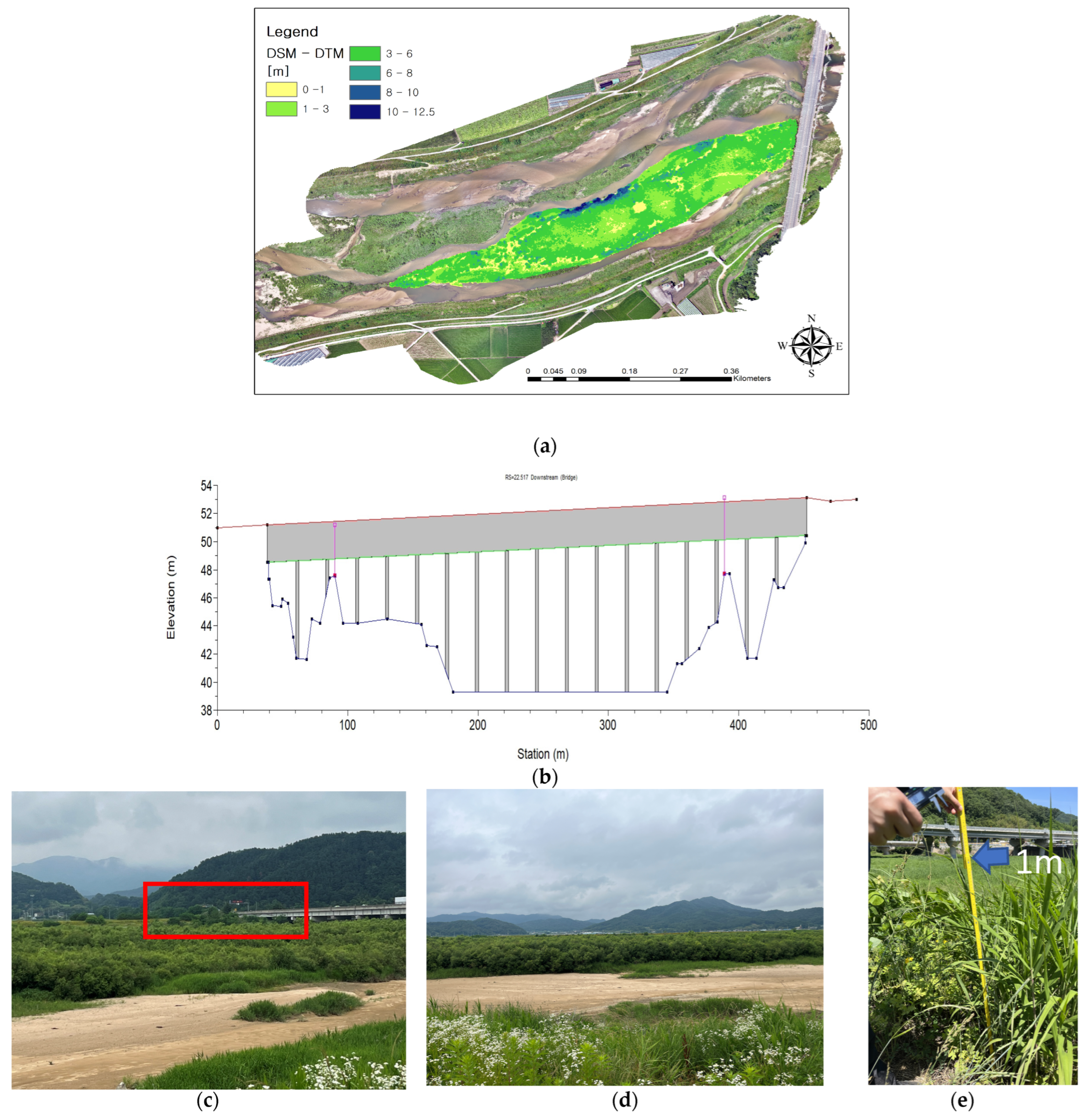

2.1. Target Section and Field Survey

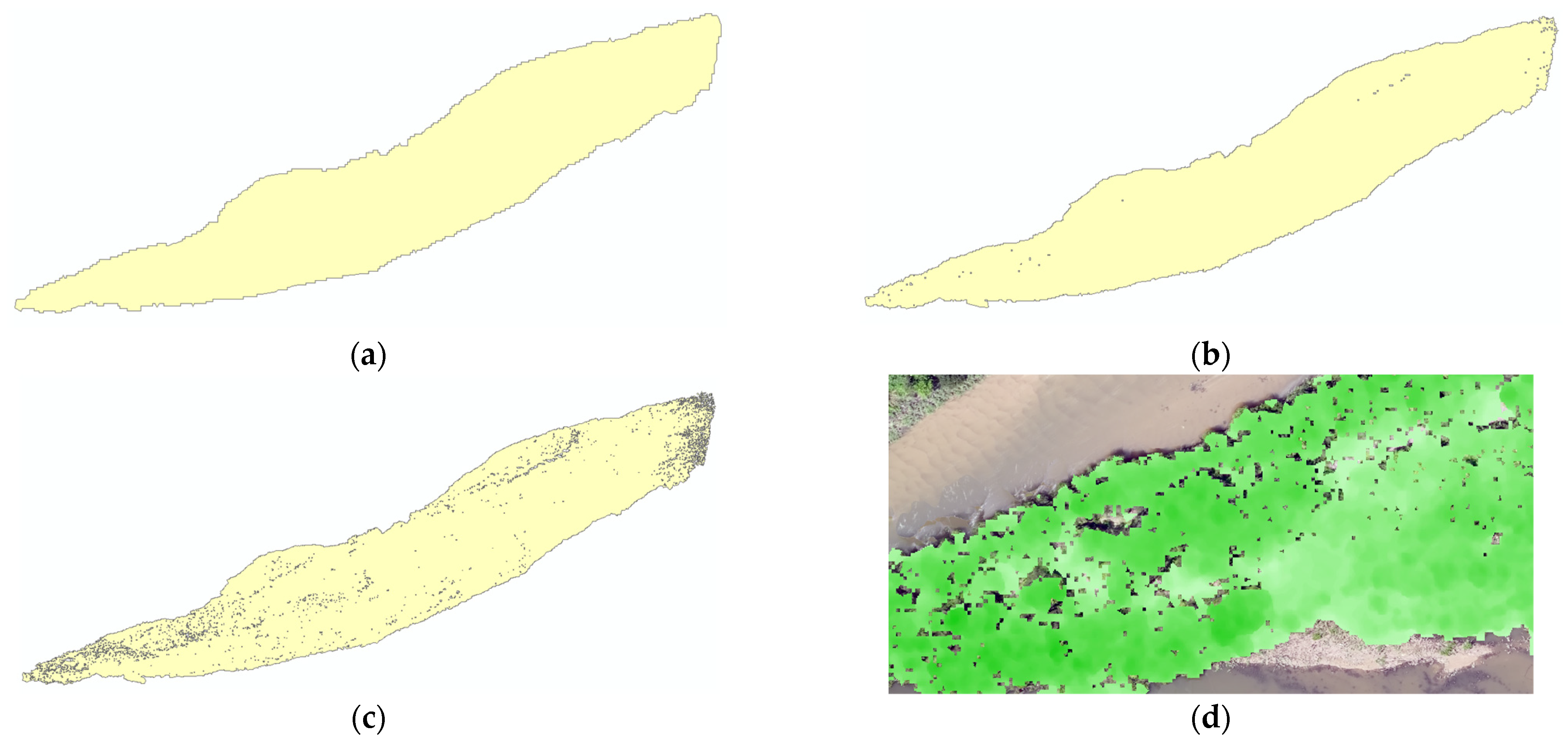

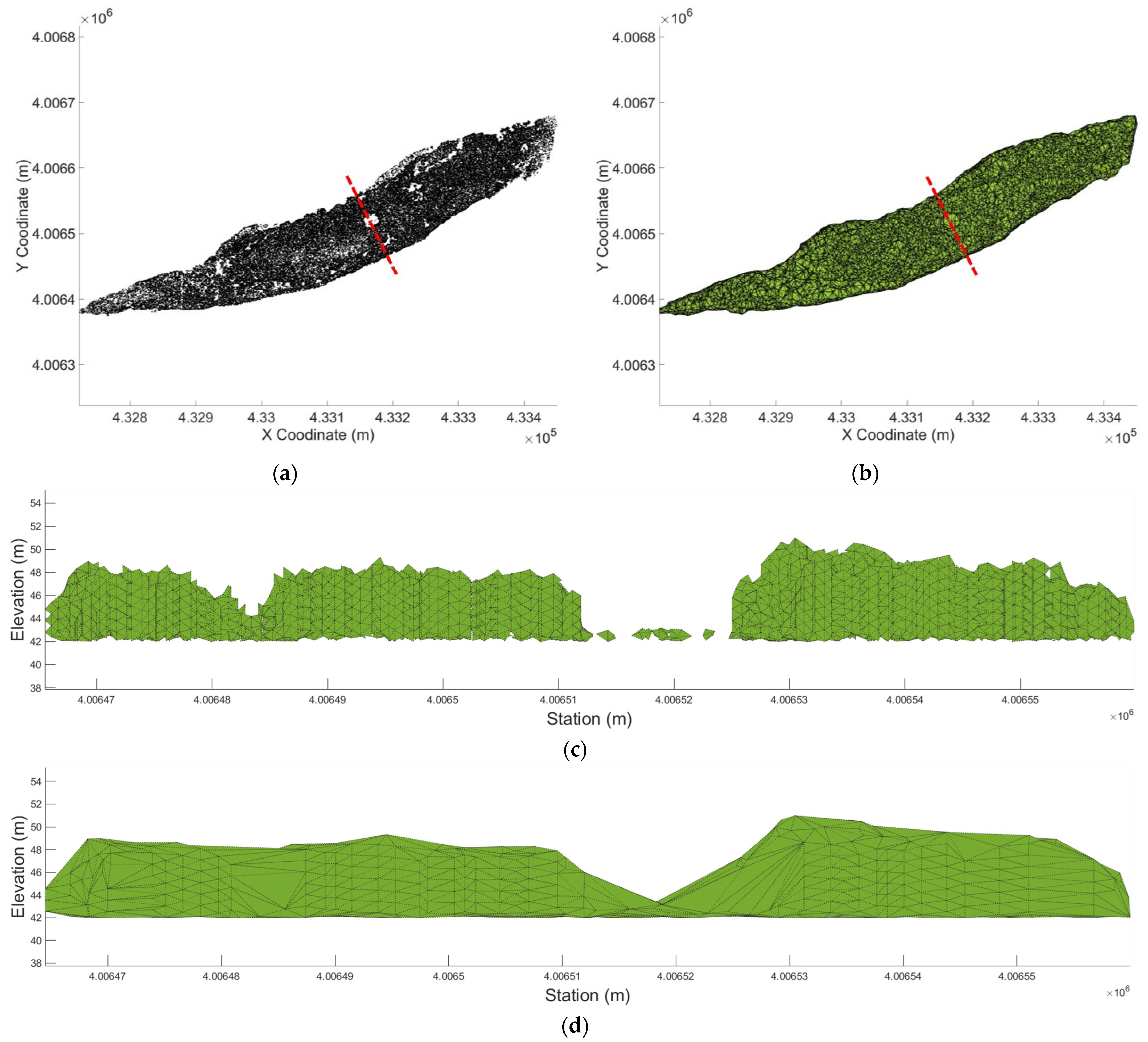

2.2. 3D Point Cloud Editing
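The point-count table for this study lists three successive SOR (Statistical Outlier Removal) passes applied to the raw cloud. A minimal sketch of the usual SOR rule, as described for instance in CloudCompare's documentation, follows: for each point, average the distances to its `k` nearest neighbours, then drop points whose average exceeds the global mean plus `n_sigma` standard deviations. The function name, the values of `k` and `n_sigma`, and the use of the population standard deviation are illustrative assumptions, not the settings used in the study. Neighbour search is brute force for clarity; a cloud of about 27 million points would need a spatial index such as a k-d tree.

```python
import heapq
import math
import statistics

Point = tuple[float, float, float]


def sor_filter(points: list[Point], k: int = 6, n_sigma: float = 1.0) -> list[Point]:
    """One pass of Statistical Outlier Removal (brute-force neighbour search).

    Hypothetical helper: k and n_sigma are illustrative, not the study's values.
    """
    if len(points) <= k:
        return list(points)  # too few points for every point to have k neighbours
    mean_dists = []
    for i, p in enumerate(points):
        # Distances from p to its k nearest neighbours (p itself excluded).
        nearest = heapq.nsmallest(
            k, (math.dist(p, q) for j, q in enumerate(points) if j != i)
        )
        mean_dists.append(sum(nearest) / k)
    # Global statistics of the per-point mean neighbour distance.
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)  # population std (assumption)
    threshold = mu + n_sigma * sigma
    # Keep points whose mean neighbour distance does not exceed the threshold.
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# Example: a 5 x 5 planar grid (1 m spacing) plus one distant outlier.
grid = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cloud = grid + [(100.0, 100.0, 100.0)]
filtered = sor_filter(cloud, k=6, n_sigma=1.0)
print(len(cloud), "->", len(filtered))  # 26 -> 25

# A further pass (cf. the "SOR 2nd" and "SOR 3rd" rows) re-applies the filter
# to its own output, recomputing the statistics on the remaining points.
second_pass = sor_filter(filtered)
```

Because every pass recomputes the mean and standard deviation on what is left, repeated passes can keep removing points even from an already clean cloud; this is one possible reason point counts fall monotonically from pass to pass.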

2.3. Alpha Shape
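An alpha shape generalizes the convex hull of a point set: for very large α it coincides with the convex hull, while smaller α carves out concavities and gaps. One common way to turn a 3D alpha shape into a volume is to start from a Delaunay tetrahedralization of the points (not shown here; `scipy.spatial.Delaunay` is one option), keep the tetrahedra whose circumscribed sphere has radius at most α, and sum their volumes. The sketch below implements only that selection-and-sum step and is not necessarily the authors' implementation. It treats α as a radius; libraries differ in how they parameterize α, so values are not interchangeable between tools. All function names are hypothetical.

```python
import math
from itertools import permutations

Vec = tuple[float, float, float]
Tetra = tuple[Vec, Vec, Vec, Vec]


def _sub(p: Vec, q: Vec) -> Vec:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _dot(p: Vec, q: Vec) -> float:
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def _cross(p: Vec, q: Vec) -> Vec:
    return (p[1] * q[2] - p[2] * q[1], p[2] * q[0] - p[0] * q[2], p[0] * q[1] - p[1] * q[0])


def tetra_volume(a: Vec, b: Vec, c: Vec, d: Vec) -> float:
    # |det[b - a, c - a, d - a]| / 6
    return abs(_dot(_sub(b, a), _cross(_sub(c, a), _sub(d, a)))) / 6.0


def circumradius(a: Vec, b: Vec, c: Vec, d: Vec) -> float:
    u, v, w = _sub(b, a), _sub(c, a), _sub(d, a)
    det = _dot(u, _cross(v, w))
    if abs(det) < 1e-12:
        return math.inf  # flat (degenerate) tetrahedron: never kept
    vw, wu, uv = _cross(v, w), _cross(w, u), _cross(u, v)
    # Circumcentre relative to a:
    # (|u|^2 cross(v, w) + |v|^2 cross(w, u) + |w|^2 cross(u, v)) / (2 det)
    centre = [
        (_dot(u, u) * vw[i] + _dot(v, v) * wu[i] + _dot(w, w) * uv[i]) / (2.0 * det)
        for i in range(3)
    ]
    return math.sqrt(sum(x * x for x in centre))


def alpha_shape_volume(tetrahedra: list[Tetra], alpha: float) -> float:
    """Sum the volumes of Delaunay tetrahedra whose circumradius is <= alpha."""
    return sum((tetra_volume(*t) for t in tetrahedra if circumradius(*t) <= alpha), 0.0)


def unit_cube_tetrahedra() -> list[Tetra]:
    """Split the unit cube into 6 tetrahedra (Kuhn split) as a toy input."""
    tets = []
    for order in permutations(range(3)):
        corner = [0.0, 0.0, 0.0]
        verts = [tuple(corner)]
        for axis in order:
            corner[axis] = 1.0
            verts.append(tuple(corner))
        tets.append(tuple(verts))
    return tets


cube = unit_cube_tetrahedra()
print(round(alpha_shape_volume(cube, alpha=1.0), 6))  # 1.0
print(alpha_shape_volume(cube, alpha=0.5))            # 0.0
```

The cube's corners all lie on one sphere of radius √3/2 ≈ 0.866, so every tetrahedron of the split shares that circumradius (and the split is a valid Delaunay tetrahedralization). With α = 1.0 the whole cube is kept; with α = 0.5 nothing is, which illustrates how strongly the estimated volume depends on the choice of α.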

3. Results

3.1. Vegetation Volume Estimation Results Based on DSM and DTM

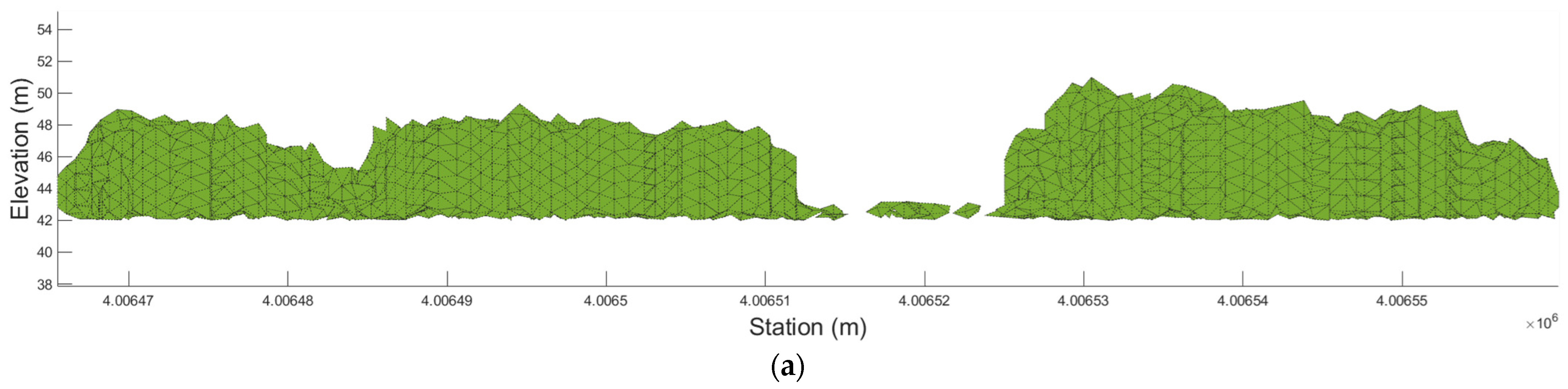
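The DSM-DTM volume table (cell sizes 0.56, 1.2 and 2.4) reports a mean height difference, its standard deviation, the covered area and a volume. The volumes are consistent with volume = mean(DSM - DTM) × area; for the 0.56 row, 3.567 m × 66,380.4 m² ≈ 236,779 m³. A minimal sketch of that computation on gridded rasters is shown below. It assumes both rasters share one grid, that `None` marks missing cells, and that `cell_size` is in metres; the function name is hypothetical, and whether negative differences should be clipped to zero is left open because the study's handling is not stated here.

```python
import statistics
from typing import Optional

Grid = list[list[Optional[float]]]


def dsm_dtm_volume(dsm: Grid, dtm: Grid, cell_size: float) -> tuple[float, float, float, float]:
    """Return (mean height, std of height, covered area, volume) from DSM - DTM.

    Cells missing (None) in either raster are skipped. Heights are not clipped
    at zero (an assumption of this sketch).
    """
    heights = [
        s - t
        for row_s, row_t in zip(dsm, dtm)
        for s, t in zip(row_s, row_t)
        if s is not None and t is not None
    ]
    if not heights:
        return 0.0, 0.0, 0.0, 0.0
    mean_h = statistics.fmean(heights)
    std_h = statistics.pstdev(heights)  # population std (assumption)
    area = len(heights) * cell_size ** 2
    volume = mean_h * area  # same as summing h * cell_size**2 over valid cells
    return mean_h, std_h, area, volume


# Toy 2 x 2 rasters with 0.5 m cells (hypothetical values).
dsm = [[12.0, 13.0], [11.0, 10.0]]
dtm = [[10.0, 10.0], [10.0, 10.0]]
mean_h, std_h, area, volume = dsm_dtm_volume(dsm, dtm, cell_size=0.5)
print(mean_h, area, volume)  # 1.5 1.0 1.5

# Cross-check against the 0.56 cell-size row of the table.
print(round(3.567 * 66_380.4))  # 236779
```

Computing volume as mean height times area, rather than summing per-cell volumes directly, mirrors the table's columns; the two are algebraically identical when the area is the count of valid cells times the cell area.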

3.2. Results of Volume Estimation by the Alpha Shape Method

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | X (m) | Y (m) | Z (m) | Name | X (m) | Y (m) | Z (m) |
|---|---|---|---|---|---|---|---|
| GCP1 | 313,137.79 | 401,026.08 | 48.44 | GCP7 | 312,052.35 | 401,082.40 | 49.20 |
| GCP2 | 312,954.72 | 400,941.34 | 48.7 | GCP8 | 312,388.18 | 401,180.32 | 46.36 |
| GCP3 | 312,791.71 | 400,912.27 | 44.94 | GCP9 | 312,642.63 | 401,176.64 | 44.58 |
| GCP4 | 312,378.46 | 400,837.23 | 49.33 | GCP10 | 313,027.94 | 401,321.81 | 44.70 |
| GCP5 | 311,511.35 | 400,776.99 | 49.86 | GCP11 | 313,087.25 | 401,376.73 | 48.47 |
| GCP6 | 311,731.34 | 400,927.81 | 49.43 | | | | |

| Filtering Step | Raw Data (Surface Only) | Sampling by Space (Surface Only) | Filled Point Data |
|---|---|---|---|
| Raw data | 27,004,538 | - | - |
| SOR 1st | 23,882,816 | 97,361 | 446,050 |
| SOR 2nd | 20,599,446 | 90,762 | 414,791 |
| SOR 3rd | 18,255,112 | 85,151 | 386,791 |
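The "Sampling by Space (Surface Only)" column of the point-count table reports far fewer points than the SOR-filtered clouds. Assuming this corresponds to minimum-distance ("space") subsampling, as offered by point-cloud tools such as CloudCompare, the idea can be sketched as follows: visit points in order and keep a point only if no previously kept point lies closer than `min_dist`. The function name and the `min_dist` value are hypothetical; the spacing used in the study is not given here. A hash grid with cell edge `min_dist` restricts each check to the 27 surrounding cells.

```python
import math
from collections import defaultdict

Point = tuple[float, float, float]


def subsample_by_space(points: list[Point], min_dist: float) -> list[Point]:
    """Greedy minimum-distance subsampling in input order (hypothetical helper)."""
    if min_dist <= 0:
        return list(points)
    # Hash grid with cell edge = min_dist: any kept point closer than min_dist
    # to p must sit in p's cell or one of its 26 neighbouring cells.
    cells: defaultdict[tuple[int, int, int], list[Point]] = defaultdict(list)
    kept: list[Point] = []
    for p in points:
        cx, cy, cz = (math.floor(c / min_dist) for c in p)
        neighbours = (
            q
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
            for dz in (-1, 0, 1)
            for q in cells.get((cx + dx, cy + dy, cz + dz), ())
        )
        if any(math.dist(p, q) < min_dist for q in neighbours):
            continue  # too close to a point already kept
        cells[(cx, cy, cz)].append(p)
        kept.append(p)
    return kept


# Example: 11 points 1 m apart along the x axis, thinned to >= 2.5 m spacing.
line = [(float(x), 0.0, 0.0) for x in range(11)]
thinned = subsample_by_space(line, min_dist=2.5)
print([p[0] for p in thinned])  # [0.0, 3.0, 6.0, 9.0]
```

The greedy pass is order-dependent: a different input order can keep a different, but equally valid, set of points, each pair of which is at least `min_dist` apart.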

| Cell Size | Mean Elevation of DSM-DTM (m) | Standard Deviation of DSM-DTM (m) | Area of Study Site (m²) | Volume (m³) |
|---|---|---|---|---|
| 0.56 | 3.567 | 1.78 | 66,380.4 | 236,779 |
| 1.2 | 3.545 | 1.77 | 68,545.9 | 242,995 |
| 2.4 | 3.542 | 1.75 | 70,011.8 | 247,981 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jang, E.; Kang, W. Estimating Riparian Vegetation Volume in the River by 3D Point Cloud from UAV Imagery and Alpha Shape. Appl. Sci. 2024, 14, 20. https://doi.org/10.3390/app14010020


Jang, Eunkyung, and Woochul Kang. 2024. "Estimating Riparian Vegetation Volume in the River by 3D Point Cloud from UAV Imagery and Alpha Shape" Applied Sciences 14, no. 1: 20. https://doi.org/10.3390/app14010020