Abstract

Scientific and technological developments in recent years have led to an explosion of videos available on the web, increasing the need for fast and effective video analysis and summarization. Video summarization methods aim to generate a synopsis by selecting the most informative parts of the video content. Because the expected results often depend on the user’s personal preferences, these preferences should be taken into account in video summaries. In this paper, we provide the first comprehensive survey of personalized video summarization, covering the techniques and datasets used. In this context, we classify and review personalized video summarization techniques according to the type of personalized summary, the criteria, the video domain, the source of information, the time of summarization, and the machine learning technique. Depending on the methodology used to produce the summary, we also classify the techniques into five major categories: feature-based video summarization, keyframe selection, shot selection-based approaches, video summarization using trajectory analysis, and personalized video summarization using clustering. We also compare personalized video summarization methods and present 37 datasets used to evaluate them. Finally, we analyze opportunities and challenges in the field and suggest innovative research lines.

1. Introduction

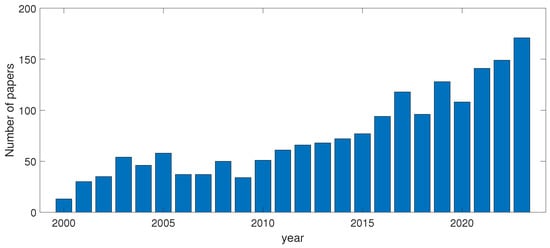

In recent years, the rapid advancement of technology has led to the integration of camcorders into many devices. As the number of camcorders increases, so does the number of recorded videos []. This results in a huge increase in the number of videos uploaded daily to the Internet []. Daily activities and special moments are recorded, producing large amounts of video []. Widespread access to multimedia creation, mainly through mobile devices, together with the tendency to share content through social networks [], has caused an explosion of videos available on the Web. Searching for and categorizing specific video content is generally time-consuming. The traditional representation of a video file as a sequence of numerous consecutive frames, each corresponding to a constant time interval, is adequate for viewing a file in movie mode but has a number of limitations for emerging multimedia services such as content-based search, retrieval, navigation, and video browsing []. The need for efficient time management led to the development of automatic video content summarization [] and indexing/clustering [] techniques that facilitate access, content search, and automatic categorization (tagging/labeling), as well as action recognition [] and common action detection in videos []. Figure 1 depicts the number of papers per year whose title contains the phrase “video summarization” according to Google Scholar.

Figure 1.

The number of papers per year containing the phrase “video summarization” in their title, according to Google Scholar.

Many studies have been devoted to developing tools that can create videos shorter than the original while reflecting its most important visual and semantic content [,]. As the user base grows, so does its diversity. A summary that is uniform for all users may not suit everyone’s needs: each user may consider different sections important according to their interests, needs, and the time they are willing to spend. Therefore, the focus should shift from a generic video summary to a personalized one []. User preferences, which often shape the expected results, should be taken into account in video summaries []. It is therefore important to adapt the video summary to the user’s interests and preferences, creating a personalized video summary, while retaining the important semantic content of the original video [].

Video summaries that reflect individual users’ understanding of the video content should be personalized in an intuitive way based on individual needs. A personalized video summary is thus tailored to the individual user’s understanding of the video content, whereas a non-personalized summary is not customized to the individual user in any way. Summaries can be personalized in several ways. For example, personalization can involve pre-filtering content via user profiles before showing it to the user, creating summaries tailored to the user’s behavior and movements during video capture, and customizing summaries according to the user’s unique browsing and access habits [].

Several studies on personalized video summarization have appeared in the literature. In [], Tseng et al. (2001) introduced personalized video summarization for mobile devices. Two years later, Tseng et al. (2003) [] used context information to generate hierarchical video summaries. Lie and Hsu (2008) [] proposed a personalized video summarization framework based on semantic features extracted from each frame. Shafeian and Bhanu (2012) [] proposed a personalized system for video summarization and retrieval. Zhang et al. (2013) [] proposed an interactive, sketch-based personalized video summarization method. Panagiotakis et al. (2020) [] provided personalized video summarization through a recommender system whose output is personalized rankings of video segments. A rough classification of the current research follows:

- Many works produced a personalized video summary in real time. Valdés and Martínez (2010) [] introduced an application for interactive, on-the-fly video summarization in real time. Chen and Vleeschouwer (2010) [] produced personalized basketball video summaries in real time from multistream data.

- Several works were based on queries. Kannan et al. (2015) [] proposed a system that creates personalized movie summaries through a query interface. Garcia (2016) [] proposed a system that, given a semantic query, can find relevant digital memories and perform personalized summarization. Huang and Worring (2020) [] created a dataset of query–video pairs.

- In a few works, information was extracted from humans using sensors. Katti et al. (2011) [] used eye gaze and pupillary dilation to generate storyboard personalized video summaries. Qayyum et al. (2019) [] used electroencephalography to detect viewer emotion.

- Few studies have focused on egocentric personalized video summarization. Varini et al. (2015) [] proposed a personalized egocentric video summarization framework. The most recent study, by Nagar et al. (2021) [], presented an unsupervised reinforcement learning framework for daylong egocentric videos.

- Many studies have used machine learning to develop video summarization techniques. Park and Cho (2011) [] proposed the summarization of personalized live video logs from a multicamera system using machine learning. Peng et al. (2011) [] proposed personalized video summarization by supervised machine learning using a classifier. Ul et al. (2019) [] used a deep CNN model to recognize facial expressions. Zhou et al. (2019) [] proposed a Character-Oriented Video Summarization framework. Fei et al. (2021) [] proposed a triplet deep-ranking model for personalized video summarization. Mujtaba et al. (2022) [] proposed a framework for personalized video summarization using a 2D CNN. Köprü and Erzin (2022) [] used affective visual information for human-centric video summarization. Ul et al. (2022) [] presented a personalized video summarization framework based on the Object of Interest (OoI).

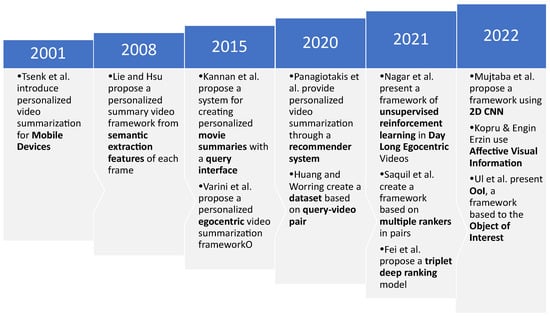

Figure 2 presents milestone works in personalized video summarization. It also shows the first paper published on personalized video summarization and the long interval before such works began to be published in large numbers.

Figure 2.

Milestones in personalized video summarization ([] (2001), [] (2008), [,] (2015), [,] (2020), [,,] (2021), [,,] (2022)).

The remainder of this paper is structured as follows. Section 2 introduces the main applications of personalized video summarization. Section 3 presents approaches to personalized video summarization, organized by the audiovisual cues they use. Section 4 classifies personalized video summarization techniques into six categories according to the type of personalized summary, criteria, video domain, source of information, time of summarization, and machine learning technique. Section 5 classifies the techniques into five main categories according to the methodology used to produce the summary. Section 6 describes the datasets suitable for personalized video summarization. Section 7 describes the evaluation of personalized video summarization methods. Section 8 presents a quantitative comparison of personalized video summarization approaches on the most prevalent datasets. Section 9 concludes this work by briefly describing the main results of the study and analyzes opportunities and challenges in the field, along with suggested innovative research lines.

2. Applications of Personalized Video Summarization

Nowadays, as video recorders become increasingly affordable, the number of recorded videos has grown. Personalized video summarization helps people manage their videos effectively by providing personalized summaries and is useful for a number of purposes. The main applications of personalized video summarization are listed below.

2.1. Automated Personalized Trailers of Series, Movies, Documentaries, and TV Broadcasts

Users’ summarization preferences for the same film can differ greatly, so a personalized movie summarization system is needed []. Personalized video summarization is useful for creating series and movie trailers that are more engaging for each viewer, because they focus on that viewer’s interests. Examples of such works are presented below.

Darabi and Ghinea [] proposed a framework that produces personalized video summaries based on ratings assigned to video frames by video experts; their second method showed good results for the movie category. Kannan et al. [] proposed a system that creates semantically meaningful summaries of the same movie tailored to each user’s preferences and interests, which are collected through a query interface. Fei et al. [] proposed an event detection-based framework and extended it to create personalized summaries of movies and soccer videos. Ul et al. [] presented a personalized movie summarization scheme using deep CNN-assisted recognition of facial expressions. Mujtaba et al. [] proposed a lightweight, client-driven personalized video summarization framework that aims to create condensed subsets of long videos, such as documentaries and movies.

2.2. Personalized Sport Highlights

Sports highlights are usually produced manually: editing requires experience and is performed with video editing tools []. Personalized video summarization is useful for creating sports highlights so that each viewer can watch the moments of a sporting event that interest them. Examples of such works are presented below.

Chen and Vleeschouwer [] proposed a flexible framework that produces personalized basketball video summaries in real time by integrating general production principles, narrative user preferences on story patterns, and contextual information. Hannon et al. [] generated video highlights by summarizing full-length matches. Chen et al. [] proposed a fully autonomous production framework for personalized basketball video summaries based on multisensor data from multiple cameras. Olsen and Moon [] described how the interactions of previous viewers can be used to create a personalized video summary by identifying the more interesting plays in a game. Chen et al. [,] produced a hybrid personalized video summarization framework for broadcast soccer videos that combines content truncation and adaptive fast forwarding. Kao et al. proposed a personal video summarization system for marathon events that integrates global positioning system (GPS) and radio frequency identification (RFID) information []. Sukhwani and Kothari [] presented an approach that can be applied to multiple matches to create complex summaries, or to a single match to summarize it. Tejero-de-Pablos et al. [] proposed a neural network-based framework that generates personalized video summaries and can be applied to any sport in which games consist of a sequence of actions. To select highlights from the original video, they used the players’ actions as cues. The method was evaluated on Kendo videos.

2.3. Personalized Indoor Video Summary

Creating a personalized home video summary is very important, as each user can view events that interest them according to their preferences without having to watch the entire video. Niu et al. [] presented an interactive mobile-based real-time home video summarization application that reflects user preferences. Park and Cho [] presented a method by which the video summarization of life logs from multiple sources is performed in an office environment. Peng et al. [] presented a system to automatically generate personalized home video summaries based on user behavior.

2.4. Personalized Video Search Engines

The Internet contains a large amount of video content. Consider a scenario in which a user is looking for videos on a specific topic: search engines present the user with numerous video results []. Search engines can use the user’s interests to present video topics in the form of short video clips []. Sharghi et al. [] proposed SH-DPP to generate query-focused video summaries that are useful to search engines, for example when displaying video clips.

2.5. Personalized Egocentric Video Summarization

The proliferation of egocentric cameras has led to the daily production of thousands of egocentric videos. Many of these videos are hours long, so personalized video summarization is needed so that each viewer can watch the moments that interest them. Watching egocentric videos from start to finish is difficult because of severe camera shake and largely redundant content, so summarization tools are needed to make them consumable. However, traditional summarization techniques developed for static surveillance videos and sports videos cannot be directly adapted to egocentric videos, while specialized summarization techniques developed for egocentric videos are limited by their focus on important people and objects []. To summarize day-long egocentric videos, Nagar et al. [] proposed an unsupervised learning framework that generates summaries personalized in terms of both content and length.

3. Approaches for Summarizing Personalized Videos

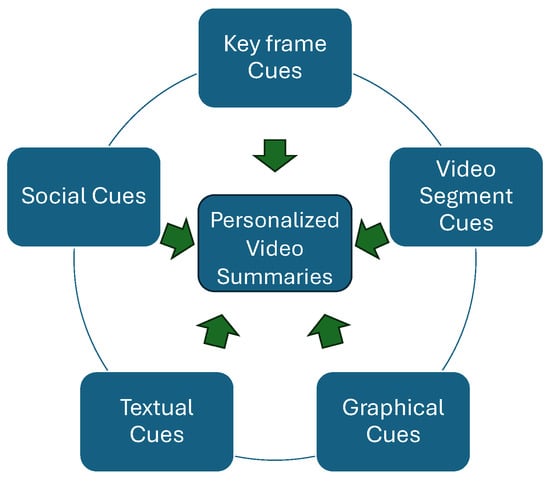

To present the content of a video stream concisely, video summaries rely on several audiovisual cues []. Each personalized video summary aims to keep intact the audiovisual elements it incorporates. The audiovisual cues used in personalized video summaries, shown in Figure 3, are classified as follows:

Figure 3.

Audiovisual cues used for personalized video summaries.

- Keyframe cues are the most representative and important frames, or still images, drawn from a video stream in temporal order []. For example, Köprü and Erzin [] formulate personalized video summarization as keyframe selection that maximizes a scoring function based on affective features.

- Video segment cues are a dynamic extension of keyframe cues []. They consist of the most important parts of a video stream, and video summaries produced with these dynamic elements preserve both video and audio []. Because they retain the sound and motion of the video, such summaries are more attractive to the user. A major drawback is that users take longer to understand the content of these summaries []. Sharghi et al. [] proposed a framework that decides whether to include a shot in the summary based on both its importance in the context of the video and its relevance to the user’s query.

- Graphical cues complement other cues with syntactic and visual elements []. Combining syntax with visual cues adds an extra layer of detail: thanks to embedded widgets, users perceive the content of a video summary in more detail than other methods allow []. This is illustrated by Tseng and Smith, who presented a method in which an annotation tool learns semantic concepts either from other sources or from the same video sequence [].

- Textual cues use text captions or textual descriptors to summarize content []. For example, the multilayered Probabilistic Latent Semantic Analysis (PLSA) model [] underlies a personalized video summarization framework based on time-synchronized feedback from multiple users.

- Social cues exploit the user’s images and connections on social networks. Yin et al. [] proposed an automatic video summarization framework in which user interests are extracted from the users’ image collections on social networks.
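The keyframe cue, where a storyboard is formed by selecting frames that maximize a scoring function, can be illustrated with a minimal sketch. It assumes that each frame already has a scalar score (for example, from an affect or importance model, which is not shown) and uses a greedy top-k selection with a minimum temporal gap. The function name `select_keyframes` and the `min_gap` parameter are hypothetical, and this is a generic illustration rather than the method of Köprü and Erzin.

```python
from typing import List, Sequence


def select_keyframes(scores: Sequence[float], k: int, min_gap: int) -> List[int]:
    """Hypothetical helper: greedily pick up to k frame indices with the highest
    scores, skipping frames closer than min_gap to an already chosen frame.

    Returns the chosen indices in temporal (storyboard) order."""
    # Visit frames from highest to lowest score; ties go to the earlier frame.
    order = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    chosen: List[int] = []
    for i in order:
        if len(chosen) == k:
            break
        # Enforce temporal diversity so that near-duplicate neighbouring
        # frames do not dominate the storyboard.
        if all(abs(i - j) >= min_gap for j in chosen):
            chosen.append(i)
    return sorted(chosen)


if __name__ == "__main__":
    frame_scores = [0.1, 0.9, 0.85, 0.2, 0.3, 0.8, 0.05, 0.7]
    print(select_keyframes(frame_scores, k=3, min_gap=2))  # [1, 5, 7]
```

Frame 2 is skipped despite its high score because it is adjacent to frame 1; the `min_gap` constraint is one simple way to trade raw score for coverage.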

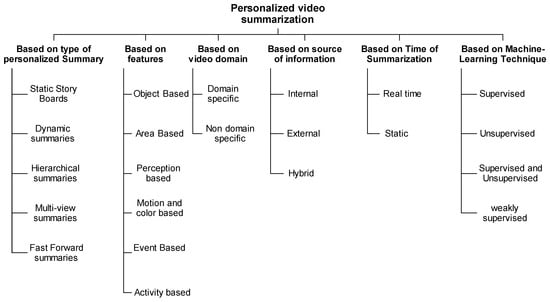

4. Classification of Personalized Video Summarization Techniques

Many techniques have emerged for creating personalized video summaries while preserving the essential content of the original video. Based on their characteristics and properties, the techniques are classified into the categories depicted in Figure 4.

Figure 4.

Categories of personalized video summarization techniques.

4.1. Type of Personalized Summary

Video summarization is a technique used to generate a concise overview of a video, which can consist of a sequence of still images (keyframes) or moving images (video skims) []. Personalized video summarization creates a personalized summary of a specific video, and the type of summary it produces also serves as a basis for categorizing personalized video summarization techniques. Different outputs can be considered when approaching the problem of personalized summarization, such as the following:

- Static storyboards, also called static summaries, consist of a set of static images that capture the highlights and are presented like a photo album [,,,,,,,,,,,,,,,,,,]. These summaries are generated by extracting keyframes according to the user’s preferences and the summary criteria.

- Dynamic summaries are also called dynamic video skims. Personalized video summarization is performed by selecting a subset of consecutive frames from all frames of the original video that includes the most relevant subshots that represent the original video according to the user’s preferences as well as the summary criteria [,,,,,,,,,,,,,,,,,,,,,,,,,,,,,].

- A hierarchical summary is a multilevel, scalable summary. It consists of several abstraction layers: the lowest layer contains the largest number of keyframes and the most detail, while the highest layer contains the fewest keyframes. Hierarchical video summaries offer the user several levels of summary, which makes it easier for users to determine what is appropriate []. Based on each user’s preferences, the video summary presents an appropriate overview of the original video. To accurately identify the concept related to a frame, Sachan and Keshaveni [] proposed a hierarchical mechanism for classifying images, in which the hierarchical classification system categorizes a frame in depth against a predefined classification. Tseng and Smith [] suggested a summarization algorithm that uses server metadata descriptions, contextual information, user preferences, and user interface statements to produce hierarchical video summaries.

- Multiview summaries are created from videos recorded simultaneously by multiple cameras. They are useful for sports videos, which are recorded by many cameras. Multiview summarization faces challenges arising from overlapping and redundant content, as well as from differing lighting conditions and fields of view across the views. As a result, keyframe extraction for static summaries and shot boundary detection for video skims become difficult, and conventional techniques for video skimming and keyframe extraction from single-camera videos cannot be applied directly []. In personalized video summarization, the multiview summary is determined by each user’s preferences and the summary criteria [,,,].

- Fast forward summaries: When users watch a video segment that is not informative or interesting, they often play it fast or skip ahead []. Therefore, in personalized video summarization, the segments that are not of interest to the user can be fast-forwarded. In [], Yin et al. play the less important parts in fast-forward mode so that users still receive the context. Chen et al. [] proposed an adaptive fast-forward personalized video summarization framework that performs clip-level fast forwarding by choosing playback speeds from a set of discrete options, which includes content truncation as the special case of infinite playback speed. Fast forwarding is used for personalized summaries of sports videos, where each viewer wants to watch the phases of the match that interest them while also having a brief overview of the remaining, less interesting phases. Chen and Vleeschouwer [] proposed a personalized video summarization framework for broadcast soccer videos with adaptive fast forwarding, in which an efficient resource allocation scheme selects the optimal combination of candidate summaries.
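Clip-level adaptive fast forwarding, where each clip is played at a speed chosen from a discrete set and an infinite speed means the clip is cut, can be viewed as a resource allocation problem under a time budget. The sketch below solves a simplified version with Lagrangian relaxation: each clip picks the speed that maximizes its benefit minus λ times its playback time, and λ is found by bisection so that the total time fits the budget. The speed set, the benefit model (importance times duration divided by the square root of the speed), and all function names are hypothetical choices for illustration, not the formulation used by Chen et al.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical candidate playback speeds; math.inf means the clip is dropped.
SPEEDS: Tuple[float, ...] = (1.0, 2.0, 4.0, math.inf)


def clip_choice(importance: float, duration: float, lam: float) -> Tuple[float, float]:
    """Return (speed, playback_time) maximizing benefit - lam * time for one clip.

    Assumed benefit model: importance * duration / sqrt(speed), i.e. keeping
    more of a clip helps, but with diminishing returns."""
    best_speed, best_time, best_value = math.inf, 0.0, 0.0  # start from "drop"
    for speed in SPEEDS:
        if math.isinf(speed):
            continue
        time = duration / speed
        value = importance * duration / math.sqrt(speed) - lam * time
        if value > best_value:
            best_speed, best_time, best_value = speed, time, value
    return best_speed, best_time


def allocate_speeds(importance: Sequence[float], durations: Sequence[float],
                    budget: float, iters: int = 60) -> List[float]:
    """Pick one speed per clip so that the total playback time fits the budget.

    A larger multiplier lam penalizes playback time more, so the total time
    never increases with lam; bisection finds a small feasible lam."""
    def total_time(lam: float) -> float:
        return sum(clip_choice(i, d, lam)[1] for i, d in zip(importance, durations))

    max_speed = max(s for s in SPEEDS if not math.isinf(s))
    lo = 0.0
    # Above importance * sqrt(max_speed), every option scores below zero,
    # so every clip is dropped and the budget is trivially met.
    hi = max(importance, default=0.0) * math.sqrt(max_speed) + 1.0
    if total_time(lo) <= budget:
        hi = lo  # the full-speed summary already fits
    for _ in range(iters):
        mid = (lo + hi) / 2
        if total_time(mid) > budget:
            lo = mid
        else:
            hi = mid
    return [clip_choice(i, d, hi)[0] for i, d in zip(importance, durations)]


if __name__ == "__main__":
    speeds = allocate_speeds([0.9, 0.2, 0.6], [10.0, 10.0, 10.0], budget=12.0)
    print(speeds)  # [2.0, inf, 4.0]
```

With three 10-second clips and a 12-second budget, the most important clip is played at double speed, the least important one is truncated, and the middle one is played at four times normal speed, mirroring the mix of truncation and fast forwarding described above.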

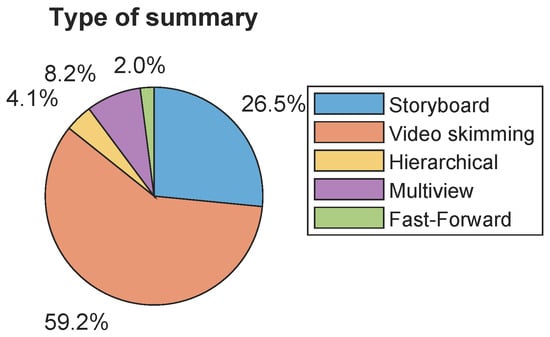

Figure 5 shows the distribution of the papers reported in Table 1 according to the type of personalized summary. Around 59% of the works produce video skims, followed by storyboard summaries at around 27%. Multiview summaries account for around 8% of the works and hierarchical summaries for around 4%, half the share of multiview summaries. Fast-forward summaries are the least common, at only around 2%.

Figure 5.

Distribution of the papers reported in Table 1 according to the type of personalized summary technique.

4.2. Features

Each video includes several features that are useful for creating a personalized video summary that correctly represents the content of the original video. Users focus on one or more video features, such as events, activities, or objects, and therefore adopt summarization techniques based on the features selected according to their preferences. The techniques based on these features are described below.

4.2.1. Object Based

Object-based techniques focus on specific objects present in the video. These techniques are useful for detecting one or more objects, such as a table, a dog, or a person. Some object-based summaries include graphics or text that simplify the selection of video segments or keyframes, or that represent the objects contained in the video summary based on their detection []. Video summarization can be performed by collecting all frames of the video that contain the desired object. If the video does not contain the desired object, the method still runs but is not effective [].
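The simplest object-based summary described above, collecting every frame in which a desired object appears, can be sketched in a few lines. The sketch assumes that per-frame detections are already available as sets of labels from some object detector (not shown); `collect_object_frames` is a hypothetical name. An empty result corresponds to the case in which the video lacks the requested object.

```python
from typing import List, Sequence, Set


def collect_object_frames(detections: Sequence[Set[str]], wanted: Set[str]) -> List[int]:
    """Hypothetical helper: indices of frames whose detected labels include
    any of the wanted objects.

    detections[i] is the (assumed precomputed) set of labels for frame i.
    An empty list means the video does not contain the requested objects,
    so an object-based summary would not be meaningful."""
    return [i for i, labels in enumerate(detections) if labels & wanted]


if __name__ == "__main__":
    frames = [{"table"}, {"dog", "person"}, set(), {"person"}, {"car"}]
    print(collect_object_frames(frames, {"person"}))  # [1, 3]
    print(collect_object_frames(frames, {"cat"}))     # []
```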

Several studies in the literature create personalized video summaries based on the user’s query [,,,,,,,,,,,,]. Sachan and Keshaveni [] proposed a personalized video summarization approach using classification-based entity identification. They were able to create personalized summaries of desired lengths thanks to the compression, recognition, and specialized classification of the macros present in the video. After video segmentation, the method of Otani et al. [] selects video segments based on their objects; each video segment is represented by object tags together with their meanings. Gunawardena et al. [] defined the Object of Interest (OoI) as the user’s features of interest and proposed an algorithm that summarizes a video with a focus on a given OoI, selecting keyframes oriented to the user’s interest. Ul et al. [] proposed a framework that uses deep learning to detect frames containing OoIs, which are then combined to produce a video digest; this framework can detect one or more objects in the video. Nagar et al. [] proposed an egocentric video summarization framework based on unsupervised reinforcement learning and 3D convolutional neural networks (CNNs) that interactively incorporates user preferences, such as places and faces, allowing users to include or exclude that type of content. Zhang et al. [] proposed a method that generates personalized video summaries through sketch selection, based on a graph built from keyframes. The generated graph represents the features of the sketches, and the algorithm interactively selects the frames related to the same person, enabling direct user interaction with the video content. Interaction is performed through gestures, according to each user’s requirements.

The method of Tejero-de-Pablos et al. [] relies on body joint features, which vary little with human appearance and represent human movement well. This neural network-based method models the players’ actions by combining holistic and body joint features so that the most important moments can be extracted from the original video. Two types of action-related features are extracted and then classified as interesting or uninteresting. The personalized video summaries presented in [,,,,,,,,,,,] are also object-based.

4.2.2. Area Based

Area-based techniques are useful for detecting one or more areas, such as indoor or outdoor settings, as well as specific scenes within an area, such as mountains, nature, or a skyline. Lie and Hsu [] proposed a video summarization system that takes into account user preferences based on events, objects, and area; through a user-friendly interface, the user can adjust the number of frames or the time limit. Kannan et al. [] proposed a personalized video summarization system that includes 25 detectors for semantic concepts based on objects, events, activities, and areas. Each shot of the video is assigned a relevance score according to the set of semantic concepts, and the summary is generated from the shots most relevant to the user’s preferences within the given time period. Tseng et al. [] proposed a three-layer server-middleware-client system that produces a video summary according to user preferences based on objects, events, and scenes in the area. Several other studies [,,,,] also base personalized video summarization on area.
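The concept-detector pipeline described for Kannan et al., in which detectors score each shot and the summary keeps the shots most relevant to the user within a time limit, can be sketched as follows. The `Shot` structure, the weighted-sum relevance, and the greedy fill under the time limit are assumptions made for illustration; the cited system’s exact scoring and selection are not reproduced here, and detector outputs are taken as given.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Shot:
    start: float                # seconds
    duration: float             # seconds
    concepts: Dict[str, float]  # detector confidence per semantic concept (assumed given)


def relevance(shot: Shot, prefs: Dict[str, float]) -> float:
    """Hypothetical relevance: concept confidences weighted by user preferences."""
    return sum(w * shot.concepts.get(c, 0.0) for c, w in prefs.items())


def personalized_summary(shots: List[Shot], prefs: Dict[str, float],
                         time_limit: float) -> List[Shot]:
    """Greedily keep the most relevant shots that fit in time_limit,
    returned in temporal order."""
    ranked = sorted(shots, key=lambda s: relevance(s, prefs), reverse=True)
    chosen: List[Shot] = []
    used = 0.0
    for shot in ranked:
        if relevance(shot, prefs) <= 0.0:
            break  # the remaining shots are irrelevant to this user
        if used + shot.duration <= time_limit:
            chosen.append(shot)
            used += shot.duration
    return sorted(chosen, key=lambda s: s.start)


if __name__ == "__main__":
    shots = [Shot(0, 5, {"beach": 0.9}), Shot(5, 4, {"dog": 0.8, "beach": 0.2}),
             Shot(9, 6, {"car": 0.7}), Shot(15, 3, {"dog": 0.6})]
    prefs = {"dog": 1.0, "beach": 0.5}
    print([s.start for s in personalized_summary(shots, prefs, time_limit=10)])  # [5, 15]
```

A greedy fill is only an approximation of the underlying knapsack-style selection; it is used here because it keeps the sketch short and easy to follow.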

4.2.3. Perception Based

Perception-based video summaries address how the user perceives the content of a video, represented by high-level concepts. Relevant examples include the level of importance the user associates with the video content, the degree of excitement the user may feel while watching the video, how distracting the user may find the video, and the kind and intensity of emotions the user may perceive from the content. Other research areas apply theories such as semiotic theory, human perception theory, and user-attention theories to interpret the semantics of video content and achieve high-level abstraction. Therefore, unlike event- and object-based video summaries, which identify tangible events and objects in the video content, user perception-based summaries focus on extracting how the user perceives, or may perceive, the content of the video []. Perception-based video summarization methods are presented below.

Miniakhmetova and Zymbler [] proposed a framework that generates a personalized video summary taking into account the user’s ratings of previously watched videos. The rating can be “like”, “neutral”, or “dislike”. The scenes that affect the user most are collected, and their sequence forms the personalized video summary. Varini et al. [] presented a behavior pattern classifier based on 3D convolutional neural networks (3D CNNs) that uses visual evaluation features and 3D motion. Elements are selected based on narrative, visual, and semantic criteria, together with the user’s preferences and attention behavior. Dong et al. [,] proposed a video summarization system based on the user’s intentions, expressed through a set of predefined idioms, and on the expected duration. Ul et al. [] proposed a framework for summarizing movies based on people’s emotional moments, detected through facial expression recognition (FER). The recognized emotions are disgust, surprise, happiness, sadness, neutral, anger, and fear. Köprü and Erzin [] proposed a human-centered video summarization framework based on emotion-related information extracted from visual data. Emotional information is first extracted with recurrent convolutional neural networks, and emotional attention mechanisms and this information are then used to enrich the video summary. Katti et al. [] presented a semi-automated, eye gaze-based method for affective video analysis. The behavioral signal captured from the user is pupil dilation (PD), which is used to assess user engagement and arousal. In addition to discovering regions of interest (ROIs) and emotional video segments, the method fuses content-based features with gaze analysis. Peng et al. [] proposed the Interest Meter (IM) to measure user interest through spontaneous reactions. A fuzzy fusion scheme combines emotion and attention features, converting viewing behaviors into quantitative interest scores, and the video summary is produced by combining the parts of the video considered interesting. Yoshitaka and Sawada [] proposed a video summarization framework based on the observer’s behavior while watching the video content, detected from the observer’s operation of the video remote control and eye movements. Olsen and Moon [] proposed the DOI function to obtain a set of features for each video based on the viewers’ interactive behavior.
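The Interest Meter of Peng et al. fuses attention and emotion features with a fuzzy scheme to obtain interest scores. The sketch below illustrates the general idea with a tiny zero-order Sugeno-style rule base. The membership functions, the three rules, the 0.5 threshold, and the assumption that both inputs are already normalized to [0, 1] are hypothetical choices for illustration, not the published fusion scheme.

```python
from typing import List, Sequence


def high(x: float) -> float:
    """Membership of x in 'high' (linear ramp clipped to [0, 1])."""
    return min(max(x, 0.0), 1.0)


def low(x: float) -> float:
    """Membership of x in 'low' (complement of 'high')."""
    return 1.0 - high(x)


def interest_score(attention: float, emotion: float) -> float:
    """Zero-order Sugeno inference with three hypothetical rules:
      high attention AND high emotion -> interest 1.0
      high attention AND low emotion  -> interest 0.5
      low attention                   -> interest 0.0
    AND is min; the output is the firing-strength-weighted average."""
    rules = [
        (min(high(attention), high(emotion)), 1.0),
        (min(high(attention), low(emotion)), 0.5),
        (low(attention), 0.0),
    ]
    total = sum(w for w, _ in rules)
    return sum(w * z for w, z in rules) / total if total > 0 else 0.0


def interesting_segments(attention: Sequence[float], emotion: Sequence[float],
                         threshold: float = 0.5) -> List[int]:
    """Indices of segments whose fused interest score exceeds the threshold."""
    return [i for i, (a, e) in enumerate(zip(attention, emotion))
            if interest_score(a, e) > threshold]


if __name__ == "__main__":
    print(interesting_segments([0.8, 0.5, 0.2, 1.0], [0.9, 0.5, 0.9, 0.0]))  # [0]
```

The selected segments would then be concatenated to form the summary, as in the paragraph above.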

4.2.4. Motion and Color Based

Producing a video summary is difficult when it is based on motion, especially when the camera itself moves []. Sukhwani and Kothari [] proposed a framework for creating personalized video summaries in which color cues are used to identify football clips as event segments. To isolate football events from the video, they modeled the players’ activity and movement, describing player actions with dense trajectory descriptors. To identify players in moving frames, they used a deep learning-based player identification method, and to model the football field, they used a Gaussian mixture model (GMM) for background subtraction. Darabi et al. [] used AVCutty to detect scene boundaries, that is, the points where a scene change occurs, from the motion and color features of the frames. The study by Varini et al. [] is also motion-based.
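Darabi et al. relied on AVCutty to find scene changes from motion and color features. AVCutty’s internals are not described here, so the following is only a generic color-histogram boundary detector of the kind such tools commonly build on. For simplicity it assumes frames are given as lists of 8-bit grayscale intensities, uses a hypothetical 8-bin histogram, and declares a boundary when the L1 distance between consecutive normalized histograms exceeds a threshold; motion features are omitted.

```python
from typing import List, Sequence


def histogram(frame: Sequence[int], bins: int = 8) -> List[float]:
    """Normalized intensity histogram of a frame given as 0-255 pixel values."""
    counts = [0] * bins
    for p in frame:
        counts[min(p * bins // 256, bins - 1)] += 1
    n = len(frame) or 1
    return [c / n for c in counts]


def scene_boundaries(frames: Sequence[Sequence[int]], threshold: float = 0.5,
                     bins: int = 8) -> List[int]:
    """Indices i where a new scene is assumed to start, i.e. where frame i's
    histogram differs strongly from frame i-1's.

    The L1 distance between two normalized histograms lies in [0, 2]."""
    hists = [histogram(f, bins) for f in frames]
    return [i for i in range(1, len(hists))
            if sum(abs(a - b) for a, b in zip(hists[i - 1], hists[i])) > threshold]


if __name__ == "__main__":
    # Two dark frames followed by two bright frames: one cut at index 2.
    clip = [[10] * 100, [12] * 100, [200] * 100, [205] * 100]
    print(scene_boundaries(clip))  # [2]
```

Histogram differences are robust to small motion within a shot, which is why they are a common first cue; gradual transitions such as fades usually need additional logic.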

4.2.5. Event Based

Event-based approaches are useful for detecting both abnormal and normal events in videos. Examples include terrorism, mobile hijacking, robbery scenes, and the recognition and monitoring of sudden changes in the environment, in which anomalous or suspicious features are observed using detection models. To produce the video digest, the frames containing abnormal scenes are joined using a summarization algorithm []. We present such event-based approaches below.

Valdés and Martínez [] described a method for creating video skims with an algorithm that dynamically builds a skimming tree, and they presented an implementation that extracts different features through online analysis. Park and Cho [] presented a study in which a single sequence of events is generated from multiple sequences and a personalized video summary is produced using fuzzy TSK rules. Chen et al. [] described a study in which metadata are acquired by detecting events and objects in the video. Using these metadata, the video is divided into segments, each covering a self-contained period of a basketball game. Fei et al. [] proposed two methods for event detection: the first combines convolutional neural networks with a support vector machine (CNNs-SVM), and the second uses an optimized summary network (SumNet). Lei et al. [] proposed an unsupervised method that produces a personalized video summary based on interesting events. Ji et al. [] proposed the unsupervised Multigraph Fusion (MGF) method to automatically find events relevant to the query. Mujtaba et al. [] proposed the LTC-SUM method, which uses a 2D CNN model to detect individual events from thumbnails. This model addresses privacy and computation problems on resource-constrained end-user devices, and because it substantially reduces computational complexity, it also improves storage and communication efficiency. Hannon et al. [] developed PASSEV to create personalized real-time video summaries by detecting events from Web data. Chung et al. [] proposed a PLSA-based model for video summarization based on user preferences and events. Chen and Vleeschouwer proposed a video segmentation method based on clock events [].
More specifically, it relies on the basketball rule that each team has 24 s to attempt a shot. Many events, such as fouls, shots, and interceptions, are immediately reflected in the start, stop, and restart of the clock. Many other studies [,,,,,,,,,,,,] also base personalized video summarization on events.
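To make the clock-event idea concrete, the sketch below splits a game into segments at every shot-clock reset. It assumes that shot-clock readings are already available as (game time, clock value) pairs from some earlier recognition step, which is not reproduced here, and that a reset shows up as the clock value increasing between consecutive readings. The function name and the example readings are hypothetical; this is not Chen and Vleeschouwer's implementation.

```python
from typing import List, Tuple


def segment_by_shot_clock(readings: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Split a game into (start, end) segments at every shot-clock reset.

    readings: (game_time_s, shot_clock_s) pairs sorted by game_time_s.
    A reset is assumed whenever the shot clock increases between consecutive readings;
    a stopped clock (equal values) does not start a new segment.
    """
    segments = []
    start = readings[0][0]
    for (_, c_prev), (t, c) in zip(readings, readings[1:]):
        if c > c_prev:  # clock jumped back up: a new attack starts here
            segments.append((start, t))
            start = t
    segments.append((start, readings[-1][0]))
    return segments


# Hypothetical readings: resets at t = 12 s and t = 21 s
readings = [(0, 24), (5, 19), (10, 14), (12, 24), (20, 16), (21, 24), (30, 15)]
print(segment_by_shot_clock(readings))  # → [(0, 12), (12, 21), (21, 30)]
```

Each resulting segment corresponds to one attack, which is a natural unit for attaching events such as fouls or shots when building a personalized summary.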

4.2.6. Activity Based

Activity-based techniques focus on specific activities present in the video, such as lunch at the office, sunset at the beach, drinks after work, friends playing tennis, bowling, or cooking. Ghinea et al. [] proposed a summarization system that can be used with any kind of video in any domain. From each keyframe, 25 semantic concepts are detected, covering visual scene categories such as people, areas, settings, objects, events, and activities; together, these are sufficient to represent a keyframe in a semantic space. For each video, relevance scores are assigned to a set of semantic concepts, where each score expresses the relevance between a particular semantic concept and a shot. Garcia [] proposed a system that accepts an image, text, or video query from the user, retrieves short subshots from the memories stored in the database, and produces the summary according to the user's activity preferences. The studies in [,,,] are also activity-based.
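The concept-relevance idea can be sketched as follows, assuming a matrix of per-shot concept relevance scores (one column per semantic concept) and a vector of non-negative user preference weights over the same concepts. Weighting the concept scores linearly and keeping the top-k shots are illustrative assumptions, not the scoring used by Ghinea et al., and `rank_shots` is a hypothetical name.

```python
import numpy as np


def rank_shots(concept_scores: np.ndarray, preferences: np.ndarray, k: int) -> list:
    """Pick the k shots that best match the user's concept preferences.

    concept_scores: (num_shots, num_concepts) relevance of each concept to each shot.
    preferences: (num_concepts,) non-negative user weights.
    Returns shot indices in temporal order.
    """
    w = preferences / preferences.sum()       # normalize preference weights
    shot_scores = concept_scores @ w          # weighted relevance per shot
    top = np.argsort(-shot_scores, kind="stable")[:k]
    return sorted(top.tolist())


# Hypothetical concepts: [people, beach, sports]; the user cares about people and beach
scores = np.array([[0.9, 0.1, 0.0],
                   [0.1, 0.8, 0.1],
                   [0.2, 0.2, 0.9],
                   [0.7, 0.6, 0.0]])
prefs = np.array([1.0, 1.0, 0.0])
print(rank_shots(scores, prefs, 2))  # → [0, 3]
```

Returning the chosen shots sorted by index keeps the summary in chronological order, which is usually preferable to playing them in score order.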

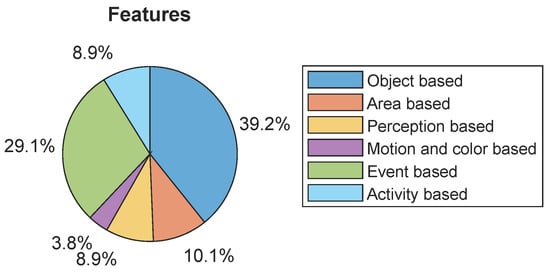

Figure 6 shows the distribution of the papers reported in Table 1 according to the type of features used for the personalized summary. Object-based personalized summarization is the most common, at around 39%. Event-based summaries follow at around 29%, 10 percentage points lower. Area-based summaries come next, at around 10%. Perception-based and activity-based summaries share fourth place, each at around 9%. Motion- and color-based summaries account for the smallest share, at around 4%.

Figure 6.

Distribution of the papers reported in Table 1 according to the type of features of the personalized summary.

Table 1.

Classification of personalized summarization techniques.

| Paper | Type of Summary | Features | Domain | Time | Method | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Storyboard | Video Skimming | Hierarchical | Multiview | Fast Forward | Object Based | Area Based | Perception Based | Motion and Color Based | Event Based | Activity Based | Domain Specific | Non-Domain Specific | Real Time | Static | Supervised | Weakly Supervised | Unsupervised | |

| Tseng et al., 2001 [] | x | x | x | x | ||||||||||||||

| Tseng and Smith, 2003 [] | x | x | x | x | x | x | ||||||||||||

| Tseng et al., 2004 [] | x | x | x | x | x | x | x | |||||||||||

| Lie and Hsu, 2008 [] | x | x | x | x | x | x | x | |||||||||||

| Chen and Vleeschouwer, 2010 [] | x | x | x | x | x | |||||||||||||

| Valdés and Martínez, 2010 [] | x | x | x | x | x | x | ||||||||||||

| Chen et al., 2011 [] | x | x | x | x | x | x | x | |||||||||||

| Hannon et al., 2011 [] | x | x | x | x | ||||||||||||||

| Katti et al., 2011 [] | x | x | x | x | ||||||||||||||

| Park and Cho, 2011 [] | x | x | x | x | x | x | ||||||||||||

| Peng et al., 2011 [] | x | x | x | x | x | |||||||||||||

| Yoshitaka and Sawada, 2012 [] | x | x | x | x | ||||||||||||||

| Kannan et al., 2013 [] | x | x | x | x | x | x | x | |||||||||||

| Niu et al., 2013 [] | x | x | x | x | x | x | ||||||||||||

| Zhang et al., 2013 [] | x | x | x | x | x | |||||||||||||

| Chung et al., 2014 [] | x | x | x | x | x | |||||||||||||

| Darabi et al., 2014 [] | x | x | x | x | ||||||||||||||

| Ghinea et al., 2014 [] | x | x | x | x | x | x | x | |||||||||||

| Kannan et al., 2015 [] | x | x | x | x | x | x | x | |||||||||||

| Garcia, 2016 [] | x | x | x | x | ||||||||||||||

| Sharghi et al., 2016 [] | x | x | x | x | x | x | ||||||||||||

| Garcia del Molino et al., 2017 [] | x | x | x | x | x | x | ||||||||||||

| Otani et al., 2017 [] | x | x | x | x | x | |||||||||||||

| Sachan and Keshaveni, 2017 [] | x | x | x | x | ||||||||||||||

| Sukhwani and Kothari, 2017 [] | x | x | x | x | x | x | ||||||||||||

| Varini et al., 2017 [] | x | x | x | x | x | x | x | x | x | |||||||||

| Fei et al., 2018 [] | x | x | x | x | x | |||||||||||||

| Tejero-de-Pablos et al., 2018 [] | x | x | x | x | x | |||||||||||||

| Zhang et al., 2018 [] | x | x | x | x | x | |||||||||||||

| Dong et al., 2019 [] | x | x | x | x | x | x | x | x | ||||||||||

| Dong et al., 2019 [] | x | x | x | x | x | x | x | x | ||||||||||

| Gunawardena et al., 2019 [] | x | x | x | x | x | |||||||||||||

| Jiang and Han, 2019 [] | x | x | x | x | x | |||||||||||||

| Ji et al., 2019 [] | x | x | x | x | x | |||||||||||||

| Lei et al., 2019 [] | x | x | x | x | x | |||||||||||||

| Ul et al., 2019 [] | x | x | x | x | x | |||||||||||||

| Zhang et al., 2019 [] | x | x | x | x | x | |||||||||||||

| Baghel et al., 2020 [] | x | x | x | x | x | |||||||||||||

| Xiao et al., 2020 [] | x | x | x | x | x | x | ||||||||||||

| Fei et al., 2021 [] | x | x | x | x | x | |||||||||||||

| Nagar et al., 2021 [] | x | x | x | x | x | x | ||||||||||||

| Narasimhan et al., 2021 [] | x | x | x | x | x | x | x | |||||||||||

| Mujtaba et al., 2022 [] | x | x | x | x | x | |||||||||||||

| Ul et al., 2022 [] | x | x | x | x | x | |||||||||||||

| Cizmeciler et al., 2022 [] | x | x | x | x | x | |||||||||||||

4.3. Video Domain

The techniques can be divided into two categories: those whose analysis is domain specific and those whose analysis is not domain specific.

- In domain-specific techniques, video summarization is performed within a particular domain. Common content domains include home videos, news, music, and sports. Focusing on a specific domain reduces ambiguity when analyzing video content []. The video summary must be tailored to the domain, because the criteria for a good summary vary dramatically across domains [].

- Unlike domain-specific techniques, non-domain-specific techniques perform video summarization for any domain, so there is no restriction on the choice of video. The system proposed by Kannan et al. [] can generate a video summary without relying on any specific domain. The summaries presented in [,,,,,,,,,,,,,,,,,,,,,,,,,,,,,] are not domain specific. The domains found in the literature are personal videos [,], movies/serial clips [,,,,], sports videos [,,,,,,,,,], cultural heritage [], zoo videos [], and office videos [].

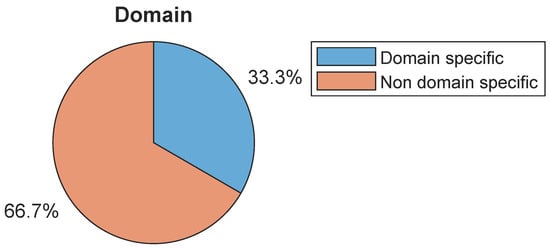

Table 2 classifies the works by domain type, and Figure 7 shows the distribution of the papers by domain of the personalized summary. As Figure 7 shows, most works (around 67%) produce non-domain-specific personalized summaries, while around 33% produce domain-specific ones.

Table 2.

Classification of domains.

Figure 7.

Distribution of the papers reported in Table 1 by domain of the personalized summary.

4.4. Source of Information

The video content life cycle includes three stages. The first is the capture stage, during which the video is recorded. The second is the production stage, during which the video is edited into a format that conveys a message or story. The third is the viewing stage, during which the video is shown to a target audience. Across these stages, the video content is desemanticized, and the various audiovisual elements are then extracted []. Based on the source of information, personalized video summarization techniques can be divided into three categories: internal personalized summarization techniques, external personalized summarization techniques, and hybrid personalized summarization techniques.

4.4.1. Internal Personalized Summarization Techniques

Internal personalized summarization techniques analyze and use information that is internal to the content of the video stream. They apply to the produced video content, that is, to the second stage of the video life cycle. These techniques automatically parse low-level text, audio, and image features from the video stream into abstract semantics suitable for video summarization [].

- Image features capture changes in the motion, shape, texture, and color of objects in the video image stream. These changes can be used to segment the video into shots by identifying fades or cuts (hard boundaries). Fades are identified by slow changes in image features, whereas cuts are identified by sharp changes. Analyzing image features can also detect specific objects and, for videos with a known structure, increase the depth of summarization. Sports videos are suitable for event detection because of their rigid structure. Event and object detection can also be achieved in other content areas with a rigid structure, such as news videos, which start with an overview of the headlines, then show a series of reports, and finally return to the anchor's face [].

- Audio features are associated with the video stream and appear in the audio stream in different forms, including music, speech, silence, and sounds. They can help identify segments that are candidates for inclusion in a video summary, and domain-specific knowledge can be used to increase the depth of the summary [].

- Text features appear in the video as text captions or subtitles. Rather than forming a separate stream, text captions are "burned" into the video's image stream. Text may carry detailed information related to the content of the video stream and can thus be an important source of information []. For example, during a live football broadcast, captions show the team names, the score, the possession percentage, the shots on target so far, and so on. As with image and audio features, events can also be identified from text. Otani et al. [] proposed a text-based method in which the text of a video blog post is used to guide the video summary. First, the video is segmented, and each segment is assigned a priority for the summary according to its relevance to the input text. Then, a subset of segments whose content is similar to the input text is selected. Therefore, a different input text produces a different video summary.
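A minimal sketch of text-guided segment selection is given below. It assumes that segment features and the input-text feature already live in a shared embedding space (the encoders are not reproduced), scores relevance with cosine similarity, and fills a duration budget greedily. These choices and the name `select_segments` are illustrative assumptions, not Otani et al.'s actual formulation.

```python
import numpy as np


def select_segments(seg_feats: np.ndarray, text_feat: np.ndarray,
                    durations, budget: float) -> list:
    """Greedily pick the segments most similar to the input text within a duration budget."""
    seg = seg_feats / np.linalg.norm(seg_feats, axis=1, keepdims=True)
    txt = text_feat / np.linalg.norm(text_feat)
    relevance = seg @ txt                      # cosine similarity per segment
    chosen, used = [], 0.0
    for i in np.argsort(-relevance):           # most relevant segments first
        if used + durations[i] <= budget:
            chosen.append(int(i))
            used += durations[i]
    return sorted(chosen)                      # play back in temporal order


# Hypothetical 2-D embeddings for four 4-second segments and one text query
seg_feats = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.1]])
text_feat = np.array([1.0, 0.0])
durations = [4.0, 4.0, 4.0, 4.0]
print(select_segments(seg_feats, text_feat, durations, 8.0))  # → [0, 3]
```

Changing `text_feat` changes the ranking and hence the summary, which mirrors the observation that a different input text yields a different summary.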

4.4.2. External Personalized Summarization Techniques

External personalized summarization techniques analyze and use information that is external to the content of the video stream, in the form of metadata. External techniques can analyze information from every stage of the video life cycle []. One external source of information is contextual information, which is additional information that comes neither from the video stream nor from the user []. The method presented by Katti et al. [] combines gaze analysis with video content features to discover regions of interest (ROIs) and emotional segments. First, eye tracking records pupil dilation and eye movement. Then, the first fixation after each pupil dilation peak is determined, and the corresponding video frames are marked as keyframes. Linking the keyframes creates a storyboard sequence.
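The keyframe-marking step described above can be sketched as follows. The sketch assumes a sampled pupil-dilation signal, a list of fixation start times from an eye tracker, and a known frame rate; the simple local-maximum peak detector, the minimum peak height, and the function names are assumptions, not the procedure used by Katti et al.

```python
from bisect import bisect_left
from typing import List


def pd_peaks(times: List[float], pupil: List[float], min_height: float) -> List[float]:
    """Times of local maxima in the pupil-dilation signal that exceed min_height."""
    return [times[i] for i in range(1, len(pupil) - 1)
            if pupil[i] > pupil[i - 1] and pupil[i] >= pupil[i + 1] and pupil[i] > min_height]


def keyframes_from_gaze(times, pupil, fixation_starts, fps, min_height):
    """Mark the frame at the first fixation following each pupil-dilation peak as a keyframe."""
    fixation_starts = sorted(fixation_starts)
    keyframes = []
    for t_peak in pd_peaks(times, pupil, min_height):
        j = bisect_left(fixation_starts, t_peak)   # first fixation at or after the peak
        if j < len(fixation_starts):
            frame = int(fixation_starts[j] * fps)
            if frame not in keyframes:
                keyframes.append(frame)
    return keyframes  # chronological order, i.e. the storyboard sequence


# Hypothetical recording: PD sampled once per second, fixations in seconds, 20 fps video
times = [0, 1, 2, 3, 4, 5, 6]
pupil = [3.0, 3.2, 4.1, 3.5, 3.4, 4.5, 3.9]
fixations = [0.5, 2.5, 4.75, 5.25]
print(keyframes_from_gaze(times, pupil, fixations, 20, 4.0))  # → [50, 105]
```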

4.4.3. Hybrid Personalized Summarization Techniques

Hybrid personalized summarization techniques analyze and use information that is both external and internal to the content of the video stream, drawing on information from every stage of the video life cycle. Any combination of external and internal summarization techniques can form a hybrid technique. Each approach tries to capitalize on its strengths while minimizing its weaknesses, to make video summaries as effective as possible []. Combining text metadata with image-level features of video frames can help improve summary performance []. Furthermore, hybrid approaches have proven useful for non-domain-specific techniques [].

4.5. Based on Time of Summarization

Depending on whether the personalized summary is produced live or from a pre-recorded video, the techniques can be divided into two categories: real-time and static. Both are presented below.

- In real-time techniques, the personalized summary is produced during playback of the video stream. Real-time production is difficult because the output must be delivered very quickly; in real-time systems, a delayed output is an incorrect output []. Sharghi et al. [] developed the Sequential and Hierarchical Determinantal Point Process (SH-DPP), a probabilistic model that produces extractive summaries from streaming or long-form video. The personalized video summaries presented in [,,,,,,,,,,] are produced in real time.

- In static techniques, the personalized summary is produced from a recorded video. Most studies are static [,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,,].
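To illustrate why determinantal point processes suit extractive summarization, the sketch below runs plain greedy MAP inference on a small DPP kernel built from item quality and pairwise similarity: adding a near-duplicate barely increases the determinant, so diverse, high-quality items are preferred. This is a basic DPP, not the sequential and hierarchical SH-DPP of Sharghi et al.; the kernel construction, the example features, and `greedy_dpp` are assumptions.

```python
import numpy as np


def greedy_dpp(L: np.ndarray, k: int) -> list:
    """Greedy MAP inference for a DPP with PSD kernel L.

    Repeatedly adds the item that maximizes log det(L_S) of the selected subset S.
    """
    selected = []
    for _ in range(k):
        best, best_val = None, -np.inf
        for i in range(L.shape[0]):
            if i in selected:
                continue
            idx = selected + [i]
            sign, logdet = np.linalg.slogdet(L[np.ix_(idx, idx)])
            if sign > 0 and logdet > best_val:
                best, best_val = i, logdet
        if best is None:          # no item keeps the determinant positive
            break
        selected.append(best)
    return sorted(selected)


# Hypothetical shots: 0 and 1 are near-duplicates, 2 is different but slightly lower quality
F = np.array([[1.0, 0.0], [0.99, 0.141], [0.0, 1.0]])
F /= np.linalg.norm(F, axis=1, keepdims=True)
quality = np.array([1.0, 0.9, 0.8])
L = np.outer(quality, quality) * (F @ F.T)   # L_ij = q_i * q_j * similarity_ij
print(greedy_dpp(L, 2))  # → [0, 2]
```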

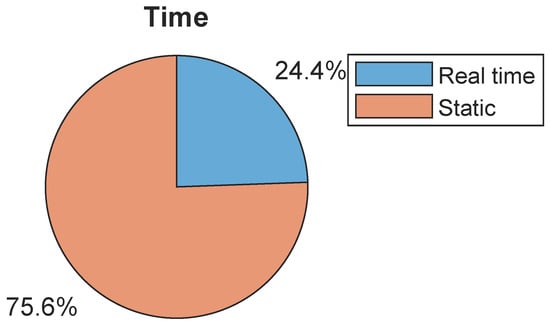

Figure 8 presents the distribution of the papers reported in Table 1 by time of the personalized summary. Papers with static personalized summaries dominate, at around 76%, compared with around 24% for papers whose summaries are produced in real time.

Figure 8.

Distribution of the papers reported in Table 1 by time of the personalized summary.

4.6. Based on Machine Learning

Machine learning techniques are developed to identify objects, areas, events, and so on, and various machine learning algorithms are applied to generate personalized video summaries. Based on the algorithm applied, the techniques are divided into supervised, weakly supervised, and unsupervised categories. Many methods in these categories use convolutional neural networks (CNNs).

CNNs have been very successful in large-scale video and image recognition. They learn high-level features progressively and obtain the best representation of the original image []. CNNs can extract features for holistic action recognition that are more general and reliable than hand-crafted features, and they have therefore outperformed traditional methods []. Nagar et al. [] used the Faster R-CNN model for face detection. Varini et al. [] proposed a 10-layer 3D CNN whose layers are trained on frame features and visual motion. The success of deep neural networks (DNNs) in learning complex frame and video representations has paved the way for both supervised and unsupervised summarization techniques []. Memory networks are used to model attention schemes flexibly, and they are also used for visual question answering and question answering [].

Through deep learning based on artificial neural networks, computers learn to process data as a human would. Trained on large amounts of data, these models learn their own features, leaving room for optimization. Based on the algorithm applied, the techniques are divided into the following categories.

4.6.1. Supervised

In supervised techniques, a model is first trained on labeled data, and the video summary is then produced with that model. We present supervised approaches below. Sukhwani and Kothari [] used deep learning for player detection. To address the multiscale matching problem in person search, Zhou et al. [] used the Cross-Level Semantic Alignment (CLSA) model, an end-to-end model that learns the most discriminative representations from identity features. Namitha et al. [] used the Deep-SORT algorithm to track objects. Huang and Worring [] proposed an end-to-end deep learning method that generates query-based video summaries in a visual-text embedding space; it consists of a video digest generator, a video digest controller, and a video digest output module. Ul et al. [] performed object-of-interest (OoI) detection using YOLOv3, which applies a single neural network to each full frame: the frame is divided into regions, and the network predicts bounding boxes and class probabilities for each region. Choi et al. [] trained a deep architecture to efficiently learn semantic embeddings of video frames by progressively exploiting image caption data. Their algorithm then selects the segments of the original video stream that are semantically relevant to the sentence or text description provided by the user. Studies [,,,,,,,,,,,,,] also used supervised techniques to produce a summary.

Garcia del Molino et al. [] proposed Active Video Summarization (AVS), which repeatedly asks the user questions to learn their preferences about the video and updates the summary online. Object recognition is performed on each extracted frame with a neural network, and the summary is generated with a line search algorithm. Fei et al. [] introduced a video summarization framework that uses a deep ranking model trained on large-scale collections of Flickr images to identify user interests and automatically create meaningful video summaries. Rather than relying only on hand-crafted features, the framework learns the similarity between a set of web images and a frame.

Tejero-de-Pablos et al. [] proposed a method with two separate neural networks, one taking the joint positions of the human body and the other taking the RGB frames of the video stream as input. To identify highlights, the two streams are merged into a single representation. The network is trained from the lower to the upper layers using the UGSV dataset. Depending on the type of summary, the personalized summarization process can be modeled in many ways.

- Keyframe selection: The goal is to identify the most representative and diverse content (frames) in a video for brief summaries. Keyframes represent the significant information in the video [], and the keyframe-based approach chooses a limited set of frames from the original video to provide an approximate visual representation []. Baghel et al. [] proposed a method in which user preference is given as an image query. The method relies on object recognition with automatic keyframe detection: important frames are selected from the input video, and the output video is produced from them. Each frame receives a score based on its similarity to the query image, and these scores form a selection table. A threshold is applied to the selection score: a frame whose score exceeds the threshold is a keyframe; otherwise, it is discarded.

- Keyshot selection: Keyshots are short continuous video segments extracted from the full-length video, each shorter than the original. Keyshot-based techniques generate excerpts from short videos (such as user-created TikToks and news) or long videos (such as full-length movies and soccer games) []. Mujtaba et al. [] presented the LTC-SUM method to produce personalized keyshot summaries that minimize the distance between the semantic side information and the selected video frames. A supervised encoder–decoder network measures the importance of the frame sequence. Zhang et al. [] proposed a mapping network (MapNet) that expresses how strongly a shot is associated with a given query by mapping visual information into the query space. They also built a summarization network (SummNet), trained with deep reinforcement learning, that integrates diversity, representativeness, and relevance to produce personalized video summaries. Jiang and Han [] proposed a scoring mechanism that operates on a hierarchical structure with a scene layer and a shot layer. Each shot is scored by this mechanism, and the highest-rated shots are selected as key shots.

- Event-based selection: The personalized summarization process detects events in a video based on the user's preferences. In LTC-SUM [], a two-dimensional convolutional neural network (2D CNN) model was implemented to identify events from thumbnails in a domain-independent way.

- Learning shot-level features: This involves learning high-level semantic information from a video segment. Xiao et al. [] proposed the Convolutional Hierarchical Attention Network (CHAN). After the video is divided into segments, visual features are extracted with a pre-trained network and sent to a feature encoding network for shot-level feature learning. A local self-attention module learns high-level semantic information within each video segment, and a query-aware global attention module handles the semantic relationship between the given query and all segments. A fully convolutional block reduces the length of the shot sequence and the dimension of the visual features.

- Transfer learning: Transfer learning adapts knowledge gained in one area (the source domain) to address challenges in a separate but related area (the target domain). It is rooted in the idea that, when tackling a problem, we generally rely on the knowledge and experience gained from similar problems in the past []. Ul et al. [] proposed a framework that uses transfer learning for facial expression recognition (FER). Their learning process has two steps: first, a CNN model is trained for face recognition; second, the same model is adapted to FER through transfer learning.

- Adversarial learning: In its general sense, adversarial machine learning introduces deceptive inputs to trick or confuse a model, which can be used to attack a machine learning system or cause it to malfunction. In summarization, the term usually refers to training competing networks against each other. Zhang et al. [] proposed a three-player adversarial network. The generator learns the video content together with a representation of the user query. The discriminator receives three query-based summary pairs so that it can distinguish the real summary from a random one and a generated one. A three-player loss is used to train the discriminator and the generator. This training prevents the generator from producing trivial random summaries, so it learns better summaries.

- Vision-language: A vision-language model integrates natural language processing and computer vision to understand images and produce textual descriptions of them, connecting visual information with natural language explanations. Plummer et al. [] used a two-branch network to learn a joint vision-language embedding: one branch receives text features, and the other receives visual features. The network is trained with a margin-based triplet loss that combines two-way ranking terms with a neighborhood-preserving term.

- Hierarchical self-attentive network: A hierarchical self-attentive network (HSAN) captures the coherent relationship between video content and its associated semantic data at both the frame and segment levels, which enables it to generate a comprehensive video summary []. Xiao et al. [] presented such a network. First, the original video is divided into segments, and a pre-trained deep convolutional network extracts a visual feature from each frame. A global and a local self-attention module are proposed to capture semantic relationships at the segment level and at the context level. To learn the relationship between visual content and captions, the self-attention results are fed to an enhanced caption generator. Finally, an importance score is generated for each frame or segment to produce the video summary.

- Weight learning: Dong et al. [] proposed a weight learning approach that uses maximum-margin learning to automatically learn the weights of different objects. Weights can be learned for different processing styles or product types, because these videos contain annotations that are highly relevant to the domain expert's decisions. Different processing styles or product categories may therefore have different weightings of audio annotations, tied directly to domain-specific processing decisions. These weights can be stored as defaults so that users can explore the design space efficiently.

- Gaussian mixture model: A Gaussian mixture model (GMM) is a probabilistic clustering method that estimates the likelihood that a given data point belongs to each cluster. In the user preference learning algorithm proposed by Niu et al. [], the most representative of the extracted frames are first selected as temporary keyframes and displayed to the user to indicate scene changes. If the user is not satisfied with the temporary keyframes, they can interact with the system by selecting keyframes manually. User preferences are modeled with a GMM whose parameters are updated automatically from the user's manual keyframe selections. The personalized summary is produced in real time as personalized keyframes replace the temporary ones; these personalized keyframes represent the user's preferences and taste.
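The threshold-based keyframe selection described for Baghel et al. can be sketched as follows. The sketch assumes that each frame and the query image have already been mapped to feature vectors by some object-recognition network (not reproduced here) and uses cosine similarity as the selection score; the similarity measure, the threshold value, and `select_keyframes` are illustrative assumptions.

```python
import numpy as np


def select_keyframes(frame_feats: np.ndarray, query_feat: np.ndarray, threshold: float):
    """Score every frame against the query image and keep frames above the threshold.

    Returns (selection_table, keyframe_indices); the table holds one score per frame.
    """
    f = frame_feats / np.linalg.norm(frame_feats, axis=1, keepdims=True)
    q = query_feat / np.linalg.norm(query_feat)
    selection_table = f @ q                      # cosine similarity per frame
    keyframes = [i for i, s in enumerate(selection_table) if s > threshold]
    return selection_table, keyframes


# Hypothetical 2-D frame features and query feature
frame_feats = np.array([[1.0, 0.0], [0.0, 1.0], [3.0, 4.0], [1.0, 1.0]])
query_feat = np.array([1.0, 0.0])
table, keyframes = select_keyframes(frame_feats, query_feat, 0.65)
print(keyframes)  # → [0, 3]
```

Frames scoring at or below the threshold (here frame 1 at 0.0 and frame 2 at 0.6) are discarded, and the surviving keyframes form the output video in their original order.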

4.6.2. Unsupervised

In unsupervised techniques, frames are first clustered based on their content, and the video summary is then created by concatenating the keyframes of each cluster in chronological order. Lei et al. [] introduced an unsupervised method called FrameRank. They constructed a graph whose vertices are video frames and whose edges measure frame similarity, and they applied a graph-ranking technique to measure the relative importance of each segment and each video frame. Depending on the type of summary, the personalized summarization process can be modeled in many ways, which are described below.
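The graph-ranking step can be illustrated with a PageRank-style power iteration over a frame-similarity graph. The sketch assumes per-frame feature vectors are given and uses clipped cosine similarity as edge weight and a damping factor of 0.85; these are common defaults, not necessarily FrameRank's exact formulation, and `frame_rank` is a hypothetical name.

```python
import numpy as np


def frame_rank(features: np.ndarray, damping: float = 0.85, iters: int = 100) -> np.ndarray:
    """PageRank-style importance scores for video frames on a similarity graph."""
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    W = np.clip(f @ f.T, 0.0, None)          # non-negative cosine similarities as edge weights
    np.fill_diagonal(W, 0.0)                 # no self-loops
    col_sums = W.sum(axis=0, keepdims=True)
    col_sums[col_sums == 0] = 1.0            # avoid division by zero for isolated frames
    P = W / col_sums                         # column-normalized transition matrix
    n = len(features)
    r = np.full(n, 1.0 / n)
    for _ in range(iters):
        r = (1 - damping) / n + damping * P @ r
    return r


# Hypothetical features: three similar frames and one outlier
features = np.array([[1.0, 0.0], [0.9, 0.1], [0.95, 0.05], [0.0, 1.0]])
r = frame_rank(features)
print(int(np.argmin(r)))  # → 3 (the outlier is least central)
```

Frames that are similar to many others receive high scores, so keeping the top-ranked frames (or aggregating scores per segment) yields a representative summary without any labels.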

- Contrastive learning: Contrastive learning is a self-supervised approach in which a model is pretrained on a pretext task. The model learns to pull together representations that should be close (positive pairs) and to push apart representations that should be far apart (negative pairs), so that it can discriminate between different objects [].

- Reinforcement learning: Nagar et al. [] proposed an unsupervised personalized video summarization framework that supports integrating various pre-existing preferences, along with dynamic user interaction for selecting or omitting specific types of content. First, spatio-temporal features are captured from the egocentric video with 3D convolutional neural networks (3D CNNs). Then, the video is split into non-overlapping subshots, and their features are extracted. These features are fed to a reinforcement learning agent that employs a bidirectional long short-term memory network (BiLSTM), which uses forward and backward passes to encapsulate past and future information for each subshot.

- Event-based keyframe selection (EKP): Ji et al. [] developed EKP to present keyframes in groups, where the groups are separated according to query-relevant events. The Multigraph Fusion (MGF) method automatically finds events relevant to the query, and keyframes are then assigned to the different event categories based on the correspondence between videos and keyframes. The summary is represented as a two-level structure: event descriptions form the first layer, and keyframes form the second.

- Fuzzy rule based: A fuzzy system is a method for representing human knowledge that involves fuzziness and uncertainty, and it is a representative and important application of fuzzy set theory []. Park and Cho [] used a system based on fuzzy TSK (Takagi–Sugeno–Kang) rules to evaluate video event shots. In this rule-based system, the consequent of each rule is a function of the input variables rather than a linguistic variable, so the time-consuming defuzzification process can be avoided. The summaries in [,,,,] also use unsupervised techniques.
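A first-order TSK inference step can be sketched in a few lines, which also shows why no defuzzification is needed: each rule's consequent is already a crisp function of the inputs, and the output is their firing-strength-weighted average. The two rules, the Gaussian membership functions, the inputs (normalized motion and audio energy of a shot), and the function names are hypothetical; they are not Park and Cho's rule base.

```python
import math
from typing import Callable, List, Sequence, Tuple


def gauss(center: float, sigma: float) -> Callable[[float], float]:
    """Gaussian membership function."""
    return lambda x: math.exp(-0.5 * ((x - center) / sigma) ** 2)


# Each rule: (membership function per input, consequent coefficients [c0, c1, ..., cn])
Rule = Tuple[List[Callable[[float], float]], List[float]]


def tsk_infer(rules: List[Rule], x: Sequence[float]) -> float:
    """First-order TSK inference: weighted average of linear rule consequents."""
    num, den = 0.0, 0.0
    for memberships, coeffs in rules:
        w = 1.0
        for mu, xi in zip(memberships, x):
            w *= mu(xi)                                              # product t-norm (AND)
        y = coeffs[0] + sum(c * xi for c, xi in zip(coeffs[1:], x))  # crisp consequent
        num += w * y
        den += w
    return num / den if den > 0 else 0.0


low, high = gauss(0.0, 0.3), gauss(1.0, 0.3)
rules = [
    ([low, low], [0.1, 0.0, 0.0]),       # IF motion LOW AND audio LOW THEN score = 0.1
    ([high, high], [0.5, 0.25, 0.25]),   # IF motion HIGH AND audio HIGH THEN score = 0.5 + 0.25m + 0.25a
]
print(round(tsk_infer(rules, (1.0, 1.0)), 3))  # → 1.0
```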

4.6.3. Supervised and Unsupervised

Narasimhan et al. [] presented a model that can be trained either with or without supervision, so it belongs to both the supervised and unsupervised categories. In the supervised setting, the loss combines reconstruction, diversity, and classification terms, whereas in the unsupervised setting it combines only reconstruction and diversity terms.
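The difference between the two settings can be sketched as a sum of loss terms in which the classification term is simply dropped when no labels are available. The specific formulations below are assumptions for illustration and may differ from those of Narasimhan et al. []: reconstruction is the mean squared error between input and reconstructed features, diversity is the mean pairwise cosine similarity of the selected frames, classification is binary cross-entropy against ground-truth frame labels, and all terms are weighted equally.

```python
"""Sketch of supervised vs. unsupervised loss composition.

Assumptions (hypothetical): frame features are float lists, the model
outputs per-frame scores in (0, 1), and all loss weights are 1.
"""
import math


def reconstruction_loss(feats, recon) -> float:
    """Mean squared error between original and reconstructed features."""
    total, count = 0.0, 0
    for f, r in zip(feats, recon):
        for a, b in zip(f, r):
            total += (a - b) ** 2
            count += 1
    return total / count


def cosine(u, v) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def diversity_loss(selected_feats) -> float:
    """Mean pairwise cosine similarity of selected frames (lower = more diverse)."""
    n = len(selected_feats)
    if n < 2:
        return 0.0
    sims = [cosine(selected_feats[i], selected_feats[j])
            for i in range(n) for j in range(i + 1, n)]
    return sum(sims) / len(sims)


def classification_loss(scores, labels) -> float:
    """Binary cross-entropy between predicted scores and ground-truth labels."""
    eps = 1e-7
    return -sum(y * math.log(max(s, eps)) + (1 - y) * math.log(max(1 - s, eps))
                for s, y in zip(scores, labels)) / len(scores)


def total_loss(feats, recon, scores, selected_idx, labels=None) -> float:
    """Supervised if labels are given, unsupervised otherwise."""
    loss = reconstruction_loss(feats, recon)
    loss += diversity_loss([feats[i] for i in selected_idx])
    if labels is not None:
        loss += classification_loss(scores, labels)
    return loss
```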

4.6.4. Weakly Supervised

Weakly supervised video summarization methods use less expensive labels instead of ground-truth data. These labels are imperfect compared with complete human annotations, but they can still lead to effective training of summarization models []. Compared with the supervised approach, a weakly supervised approach needs a smaller training set to produce the video summary. Cizmeciler et al. [] proposed a personalized video summarization approach with weak supervision, which takes the form of semantic confidence maps. These maps are obtained from the predictions of pre-trained action/attribute classifiers.
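One hypothetical way to turn classifier predictions into weak supervision is sketched below: each frame's relevance to a query is the highest confidence among the query's classes, and the top-ranked frames become pseudo-positive labels. The data layout, the max-pooling rule, and the default 15% keep ratio are assumptions for illustration, not the procedure of Cizmeciler et al. [].

```python
"""Sketch: turning pre-trained classifier confidences into weak frame labels.

Assumptions (hypothetical): confidences[t][c] is the confidence of a
pre-trained action/attribute classifier for class c on frame t, and the
query is a set of class names.
"""
from typing import Dict, List, Set


def semantic_map(confidences: List[Dict[str, float]], query: Set[str]) -> List[float]:
    """Per-frame relevance: max confidence over the query's classes."""
    return [max((conf.get(c, 0.0) for c in query), default=0.0)
            for conf in confidences]


def weak_labels(relevance: List[float], keep_ratio: float = 0.15) -> List[int]:
    """Mark the top keep_ratio fraction of frames as pseudo-positive (1)."""
    k = max(1, round(keep_ratio * len(relevance)))
    ranked = sorted(range(len(relevance)), key=lambda t: relevance[t], reverse=True)
    chosen = set(ranked[:k])
    return [1 if t in chosen else 0 for t in range(len(relevance))]
```

Frames whose classes are unrelated to the query (for example, a confident "car" detection for a "run"/"dog" query) contribute nothing to the relevance score.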

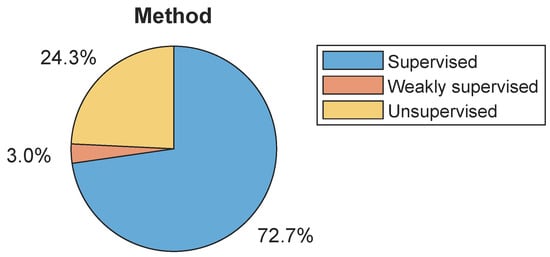

The distribution of the papers reported in Table 1, according to the type of personalized summarization method, is shown in Figure 9. Most papers (around 73%) propose a supervised method for producing a personalized video summary, followed by unsupervised methods (around 24%), while weakly supervised methods account for around 3%.

Figure 9.

Distribution of the papers reported in Table 1 among types of personalized summary method.

5. Types of Methodology

According to the type of methodology used by personalized video summary techniques for the summary production process, the techniques are classified into five broad categories.

5.1. Feature-Based Video Summarization

Feature-based summarization techniques can be categorized according to the features they rely on, such as speech transcription, audiovisual content, objects, dynamic content, gestures, color, and motion []. Gunawardena et al. [] proposed the Video Shot Sampling Module (VSSM), which first divides the video into shots of similar visual content so that each shot can be processed separately in a divide-and-conquer manner. The method combines hierarchical clustering with changes in color histograms to detect shot boundaries. In the framework developed by Lie and Hsu [], the color and intensity of flames are analyzed in each frame to detect explosion frames.
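The color-histogram part of shot boundary detection can be sketched as follows: consecutive frames whose normalized RGB histograms differ by more than a threshold are treated as a cut. This is a minimal illustration under assumed parameters (frames given as pixel lists, 4 levels per channel, threshold 0.5); it does not reproduce the hierarchical clustering in VSSM or any implementation detail of Gunawardena et al. [].

```python
"""Sketch of color-histogram shot boundary detection.

Assumptions (hypothetical): each frame is a list of (R, G, B) pixels with
values 0-255, histograms use 4 levels per channel, and the cut threshold
of 0.5 is illustrative.
"""
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def color_histogram(frame: List[Pixel], levels: int = 4) -> List[float]:
    """Normalized joint RGB histogram with `levels` bins per channel."""
    hist = [0.0] * (levels ** 3)
    step = 256 // levels
    for r, g, b in frame:
        idx = (r // step) * levels * levels + (g // step) * levels + (b // step)
        hist[idx] += 1
    n = len(frame)
    return [h / n for h in hist]


def shot_boundaries(frames: List[List[Pixel]], threshold: float = 0.5) -> List[int]:
    """Indices t where a new shot starts (large histogram change from t-1)."""
    hists = [color_histogram(f) for f in frames]
    cuts = []
    for t in range(1, len(hists)):
        # Half the L1 distance lies in [0, 1] for normalized histograms.
        diff = 0.5 * sum(abs(a - b) for a, b in zip(hists[t - 1], hists[t]))
        if diff > threshold:
            cuts.append(t)
    return cuts
```

For two all-red frames followed by two all-blue frames, the only reported boundary is at index 2.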

5.2. Keyframe Selection

Early video summarization systems mainly used frame classification methods trained on labeled data. Features extracted from the video frames are fed to an algorithm that classifies each frame as being of interest or not, and only frames of interest are included in the summary. Describing and detecting the criteria that make a frame important is a challenge, since these criteria drive the selection of appropriate keyframes. The keyframe selection techniques are presented below.

- Object-based keyframe selection: Object-based summaries concentrate on specific objects within a video. These objects are analyzed and used as reference points to identify the video keyframes. The techniques in [,,,,,,] use object-based keyframe selection.

- Event-based keyframe selection: Event-oriented summaries pay special attention to particular events in a video, which are examined and used as reference points for the summarization process. The keyframes of the video are identified based on the selected event. The personalized video summarization technique in [] uses event-based keyframe selection.

- Perception-based keyframe selection: Here, user perception is the key criterion for keyframe selection. In [,], keyframes are detected based on the user’s perception.
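The frame classification step that underlies these techniques (frame features in, "of interest" or "not of interest" out) can be sketched with a logistic-regression classifier whose weights are assumed to have been learned from labeled frames already. The feature layout, the weights, and the 0.5 threshold are hypothetical and are not taken from any of the cited works.

```python
"""Sketch of classifier-based keyframe selection.

Assumptions (hypothetical): each frame is described by a short feature
vector, and a logistic-regression classifier with the given weights has
already been trained on labeled frames.
"""
import math
from typing import List, Sequence


def frame_of_interest_prob(features: Sequence[float],
                           weights: Sequence[float], bias: float) -> float:
    """Probability that a frame is of interest (logistic function)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def select_keyframes(frames: Sequence[Sequence[float]], weights: Sequence[float],
                     bias: float, threshold: float = 0.5) -> List[int]:
    """Keep the indices of frames classified as being of interest."""
    return [t for t, f in enumerate(frames)
            if frame_of_interest_prob(f, weights, bias) >= threshold]
```

In object-, event-, or perception-based variants, what changes is mainly which features and labels define "of interest".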

5.3. Keyshot Selection

A video shot is a segment of video captured continuously by a single camera. After the video has been split into shots, keyframes are selected, and a further challenge arises when a shot has to be selected on the basis of these keyframes []. Identifying and defining the crucial selection criteria is a hurdle in this method, since these criteria determine which keyshots are chosen. The keyshot selection methods are introduced below.

- Activity-based shot selection: Activity-based summaries focus on specific activities in a video, which are analyzed and used as criteria for detecting the keyshots of the video. In [,], keyshot selection is based on activity.

- Object-based shot selection: Object-based summaries place special emphasis on distinct objects within a video, which are examined and used as reference points for the summarization process. The keyshots of the video are detected based on the selected object. In [,,,,,,,], keyshot selection is based on object detection.

- Event-based shot selection: Video keyshots are identified based on a particular event that the user finds interesting. For the personalized summaries in [,], keyshot selection is based on events.

- Area-based shot selection: Area-based summaries focus on specific regions within a video, which are analyzed and used as reference points for the summarization procedure. The video keyshots are identified according to the chosen region. For the personalized summaries presented in [,], keyshot selection is based on the area.
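Whatever criterion produces the per-shot importance scores, the keyshots still have to fit a length budget. A common way to do this in the video summarization literature is to solve a 0/1 knapsack problem over the shots; the sketch below illustrates that general technique and is not the selection procedure of any specific work cited above. Integer shot lengths in frames and the budget value are assumptions.

```python
"""0/1 knapsack keyshot selection under a duration budget.

Assumptions (hypothetical): each shot has an importance score and an integer
length in frames; the budget (e.g. 15% of the video length) is illustrative.
"""
from typing import List


def select_keyshots(scores: List[float], lengths: List[int], budget: int) -> List[int]:
    """Return indices of shots maximizing total score with total length <= budget."""
    n = len(scores)
    # best[i][c]: best total score using the first i shots within capacity c.
    best = [[0.0] * (budget + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score, length = scores[i - 1], lengths[i - 1]
        for c in range(budget + 1):
            best[i][c] = best[i - 1][c]
            if length <= c and best[i - 1][c - length] + score > best[i][c]:
                best[i][c] = best[i - 1][c - length] + score
    # Backtrack: a shot was taken wherever the table value changed.
    chosen, c = [], budget
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= lengths[i - 1]
    return sorted(chosen)
```

For example, for a 300-frame video summarized at 15% of its length, `budget` would be 45.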

5.4. Video Summarization Using Trajectory Analysis

Chen et al. [] introduced a method that identifies 2D ball candidates in each camera view and then computes 3D ball positions by triangulating and verifying these 2D candidates. Once viable 3D ball candidates have been identified, examining their trajectories helps distinguish true positives from false positives, because the ball is expected to follow a ballistic trajectory, unlike most false detections (which are typically body parts). In another work, Chen et al. [] extracted trajectories from surveillance video to help identify group interactions.
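The ballistic check used to separate true from false ball candidates can be expressed with a short derivation and a line fit. Under free flight without air drag, z(t) = z0 + vz·t - g·t²/2, so z(t) + g·t²/2 is linear in t, as are x(t) and y(t). The sketch below fits a line to each of these quantities and accepts a candidate track only if all residuals are small. The units (meters and seconds, z pointing up), the omission of drag, and the 0.05 m tolerance are assumptions; the 2D detection and triangulation steps of the first Chen et al. [] method are not covered.

```python
"""Sketch: checking whether 3D candidates follow a ballistic trajectory.

Assumptions (hypothetical): positions are in meters with z pointing up,
timestamps are in seconds, air drag is ignored, and the 0.05 m RMS
tolerance is illustrative. At least two distinct timestamps are required.
"""
import math
from typing import List

G = 9.81  # gravitational acceleration, m/s^2


def _fit_line_rms(ts: List[float], ys: List[float]) -> float:
    """Least-squares fit y = a + b*t; return the RMS residual."""
    n = len(ts)
    mt, my = sum(ts) / n, sum(ys) / n
    stt = sum((t - mt) ** 2 for t in ts)
    b = sum((t - mt) * (y - my) for t, y in zip(ts, ys)) / stt
    a = my - b * mt
    return math.sqrt(sum((y - (a + b * t)) ** 2 for t, y in zip(ts, ys)) / n)


def is_ballistic(ts, points, tol=0.05) -> bool:
    """x and y are linear in t; z + g*t^2/2 is linear in t under free flight."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    zs = [p[2] + 0.5 * G * t * t for t, p in zip(ts, points)]
    return all(_fit_line_rms(ts, v) <= tol for v in (xs, ys, zs))
```

A candidate that moves horizontally but stays at constant height (as a tracked body part might) leaves a quadratic residual in the z term and is rejected.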

Dense Trajectories

To describe the actions of soccer players in space and time, Sukhwani and Kothari used dense trajectory feature descriptors []. Trajectories are extracted at several spatial scales: feature points are sampled on a grid within each player region and tracked separately at each scale. Camera motion correction is applied by default when the features are computed. Feature points that do not belong to player regions are pruned from the event representations to avoid inconsistencies, because these non-player points do not represent actual player actions and only introduce noise. To capture the player’s movements, a histogram of optical flow (HOF) is computed along the entire length of each dense trajectory. Each flow vector is assigned to an orientation bin according to the angle it subtends with the horizontal axis and is weighted by its magnitude. The full orientation range is quantized into eight HOF bins.
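The HOF computation described above can be sketched as follows: each flow vector along a trajectory is assigned to one of eight orientation bins covering the full 0 to 360 degree range, according to the angle it makes with the horizontal axis, and votes with its magnitude. The input format (a list of flow vectors already sampled along one trajectory) and the final L1 normalization are assumptions; trajectory tracking and the pruning of non-player points are omitted.

```python
"""Sketch: 8-bin, magnitude-weighted histogram of optical flow (HOF).

Assumptions (hypothetical): the flow vectors (dx, dy) sampled along one
trajectory are already available; bins cover the full 0-360 degree range.
"""
import math
from typing import List, Tuple


def hof(flow: List[Tuple[float, float]], n_bins: int = 8) -> List[float]:
    hist = [0.0] * n_bins
    bin_width = 2 * math.pi / n_bins
    for dx, dy in flow:
        magnitude = math.hypot(dx, dy)
        if magnitude == 0.0:
            continue  # a zero vector has no defined orientation
        # Angle with the horizontal axis, mapped to [0, 2*pi) (full orientation).
        angle = math.atan2(dy, dx) % (2 * math.pi)
        idx = min(int(angle // bin_width), n_bins - 1)
        hist[idx] += magnitude  # each vector votes with its magnitude
    total = sum(hist)
    # L1-normalize so trajectories of different lengths are comparable.
    return [h / total for h in hist] if total > 0 else hist
```

For the vectors (1, 0), (0, 2), and (-3, 0), the votes land in bins 0, 2, and 4 with weights 1, 2, and 3, giving normalized values 1/6, 2/6, and 3/6.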

5.5. Personalized Video Summarization Using Clustering

The text-based method proposed by Otani et al. [] first extracts the nouns from the input text. The videos are then segmented, and the segments are clustered into groups by event. The priority of each segment is calculated from the resulting clusters, and high-priority segments are more likely to be included in the video summary. Finally, the video summary is generated by selecting the optimal subset of segments according to the computed priorities.

Based on the clustering approach used, personalized video summarization methods can be divided into the following categories: hierarchical clustering, agglomerative hierarchical clustering, hierarchical context clustering, k-means clustering, dense-neighbor-based clustering, concept clustering, and affinity propagation.

- Hierarchical clustering: Hierarchical clustering, or hierarchical cluster analysis, groups similar items into collections known as clusters. The result is a set of clusters that are distinct from one another, while the elements within each cluster share a high degree of similarity. Yin et al. [] proposed building a hierarchy that serves as a dictionary for encoding visual features more effectively. Leaf nodes are first clustered hierarchically based on their pairwise similarities, and the same process is then applied recursively to cluster the images into subgroups. The result is a semantic tree that extends from the root to the leaves.

- K-means clustering: Liu et al. [] proposed a Non-negative Matrix Factorization (NMF) method for supervised personalized video summarization. The video is segmented into small clips, and each clip is described by a word from a bag-of-words model. NMF is used for action segmentation and clustering, and the dictionary is learned with the k-means algorithm. The unsupervised method proposed by Ji et al. [] uses k-means to cluster words with similar meanings. In the method proposed by Cizmeciler et al. [], visual features are first extracted from the frames with a pre-trained CNN, and each shot is represented by the average of its frame features. The shots are then clustered with k-means, where the cluster centers are initialized by randomly sampling from the data and the Euclidean distance is used as the metric. The summary is created by selecting the shots closest to each cluster center. Darabi and Ghinea [] used k-means in their supervised framework to cluster photos based on their RGB color histograms.

- Dense-neighbor-based clustering: Lei et al. [] proposed an unsupervised framework for producing a personalized video summary, in which the video is divided into separate segments by a clustering method based on dense neighbors. Using a cluster-center-based clustering algorithm, they grouped the video frames into discrete segments of semantically consistent frames.

- Concept clustering: Sets of video frames that are different but semantically close to each other are grouped together, and these groups form concept clusters. The clusters are intended to represent macro-optical concepts, which are built from similar types of micro-optical concepts of different video units. The sets of frames are clustered with a cumulative clustering technique, which works top–down and is based on a pairwise similarity function (SimFS) that operates on two sets of frames [].

- Affinity propagation: Affinity propagation (AP) is a clustering approach reported to achieve a higher fitness value than other approaches. AP is based on factor graphs and has been used to cluster video frames and to create storyboard summaries [].
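The k-means clustering and selection steps described for Cizmeciler et al. [] can be sketched as follows: shot features are clustered with k-means (centers initialized from randomly sampled shots, Euclidean distance), and the shot nearest to each final center is placed in the summary. The short feature vectors stand in for averaged CNN features and, like `k` and the random seed, are hypothetical; this is an illustration of the technique, not the authors' implementation.

```python
"""Sketch: k-means over shot features, then pick the shot nearest each center.

Assumptions (hypothetical): each shot feature is the mean of its frames'
CNN features (given here as short float lists); k and the seed are illustrative.
"""
import math
import random
from typing import List

Vec = List[float]


def mean_vec(vectors: List[Vec]) -> Vec:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def kmeans(points: List[Vec], k: int, iters: int = 50, seed: int = 0) -> List[Vec]:
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]  # random init from the data
    for _ in range(iters):
        clusters: List[List[Vec]] = [[] for _ in range(k)]
        for p in points:
            # Assign each shot to its nearest center (Euclidean distance).
            j = min(range(k), key=lambda c: math.dist(p, centers[c]))
            clusters[j].append(p)
        # Keep the old center if a cluster becomes empty.
        new_centers = [mean_vec(c) if c else centers[i] for i, c in enumerate(clusters)]
        if new_centers == centers:
            break
        centers = new_centers
    return centers


def summarize(shot_feats: List[Vec], k: int) -> List[int]:
    """Indices of the shots closest to each cluster center, in temporal order."""
    centers = kmeans(shot_feats, k)
    picked = {min(range(len(shot_feats)), key=lambda i: math.dist(shot_feats[i], c))
              for c in centers}
    return sorted(picked)
```

Returning the picked indices in sorted order keeps the summary shots in their original temporal order.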

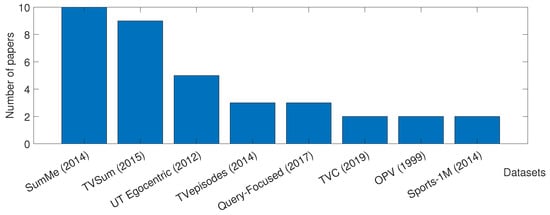

6. Personalized Video Datasets

Datasets are needed to test, train, and benchmark various personalized video summarization techniques. Figure 10 depicts personalized video datasets (x-axis) and the number of corresponding articles (y-axis) that report results on each of them. The following datasets have been created to evaluate the summarization of personalized videos.

Figure 10.

Personalized video datasets (x-axis) and the number of corresponding papers (y-axis) that report results on each of them (SumMe [], TVSum [], UT Egocentric [], TV episodes [], Query-Focused [], TVC [], OVP [], Sports-1M []).

1. Virtual Surveillance Dataset for Video Summary (VSSum): In 2022, Zhang et al. [] created this dataset using a permutation-and-combination method. It contains 1000 virtual surveillance videos simulated in virtual scenarios. Each video has a resolution of  at 30 fps and a duration of 5 min.

2. The Open Video Project (OVP): Created in 1999, this dataset includes 50 videos in MPEG-1 format, recorded at a resolution of 352 × 240 pixels at 30 fps and lasting from 1 to 4 min. The content types are educational, lecture, historical, ephemeral, and documentary []. The repository contains more than 40 h of video, mostly in MPEG-1 format, drawn from American agencies such as NASA and the National Archives. Its content reflects a wide range of video types and characteristics, such as home, entertainment, and news videos [].

3. TVC: In 2019, Dong et al. [] created the TVC dataset, which consists of 618 TV commercial videos.

4. Art City: In 2017, Varini et al. [] published the largest unconstrained dataset of cultural heritage visits, consisting of egocentric videos annotated with narrative landmark information, behavior classes, and geolocation information. The videos record tourists’ cultural visits to six art cities.

5. Self-compiled: In 2017, Sharghi et al. [] compiled a new dataset based on the UT Egocentric (UTE) dataset [] by collecting dense concept annotations on each video shot. The dataset is designed for query-focused summarization, in which different summaries are produced depending on the query, and it provides automatic and efficient evaluation metrics.

6. Common Film Action: This repository includes 475 video clips from 32 Hollywood films, annotated with eight action categories [].

7. Soccer video: This dataset contains 50 manually annotated soccer video clips collected from the UEFA Champions League and the 2010 FIFA World Cup [].

- 8.