Development of Sensory Virtual Reality Interface Using EMG Signal-Based Grip Strength Reflection System

Abstract

1. Introduction

- To develop a novel interface that reflects hand strength, rather than hand movement, in hand tracking.

- To propose an electromyography signal-processing method that can measure grip strength.

- To develop breakable objects that generate particles in a VR environment.

- To develop an interface that can destroy objects with different intensities in a VR environment.

- To develop a VR interface that reflects grip strength and, in future work, to apply it to VR content for rehabilitation, muscle strengthening, exercise, games, and education.

- Does the proposed EMG signal-processing method measure the user’s muscle activity?

- Is the user’s grip strength reflected in the destruction of objects of different strengths in the VR content?

- Are users satisfied with the proposed grip strength reflection interface?

2. Related Research

2.1. Flow in Virtual Environments

2.2. System Using EMG

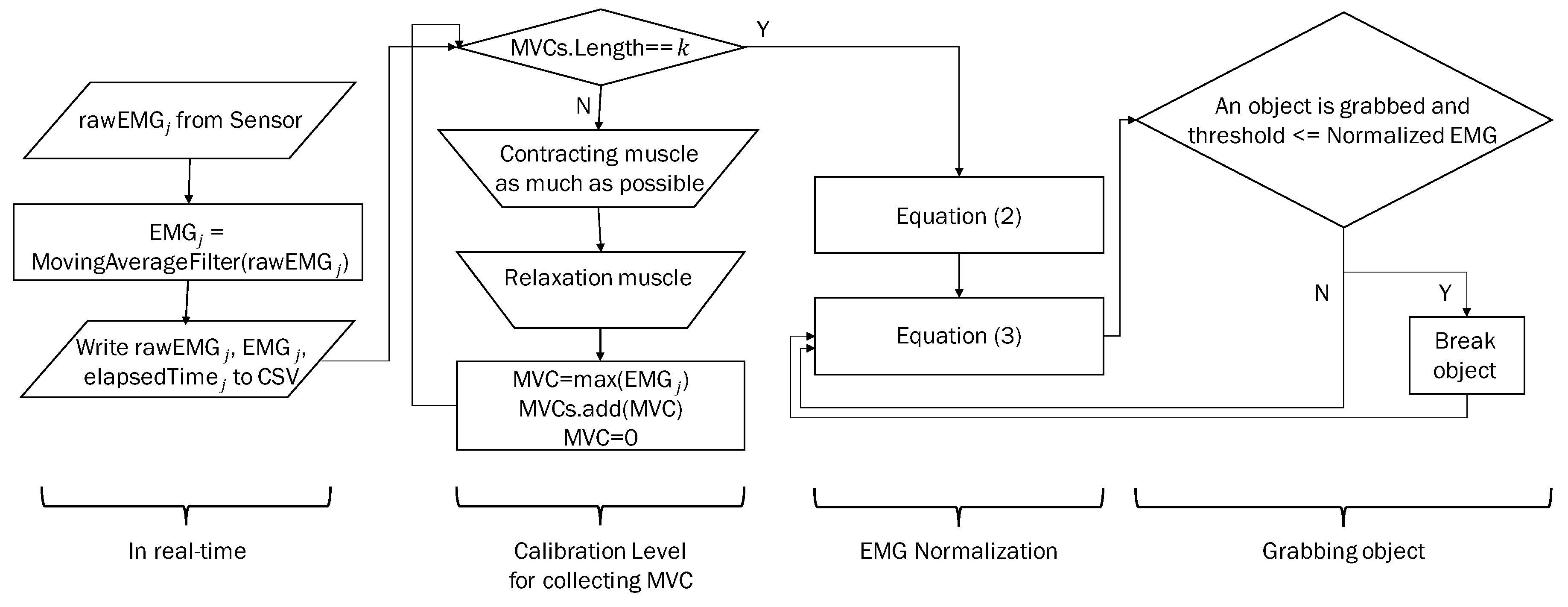

3. Proposed Method

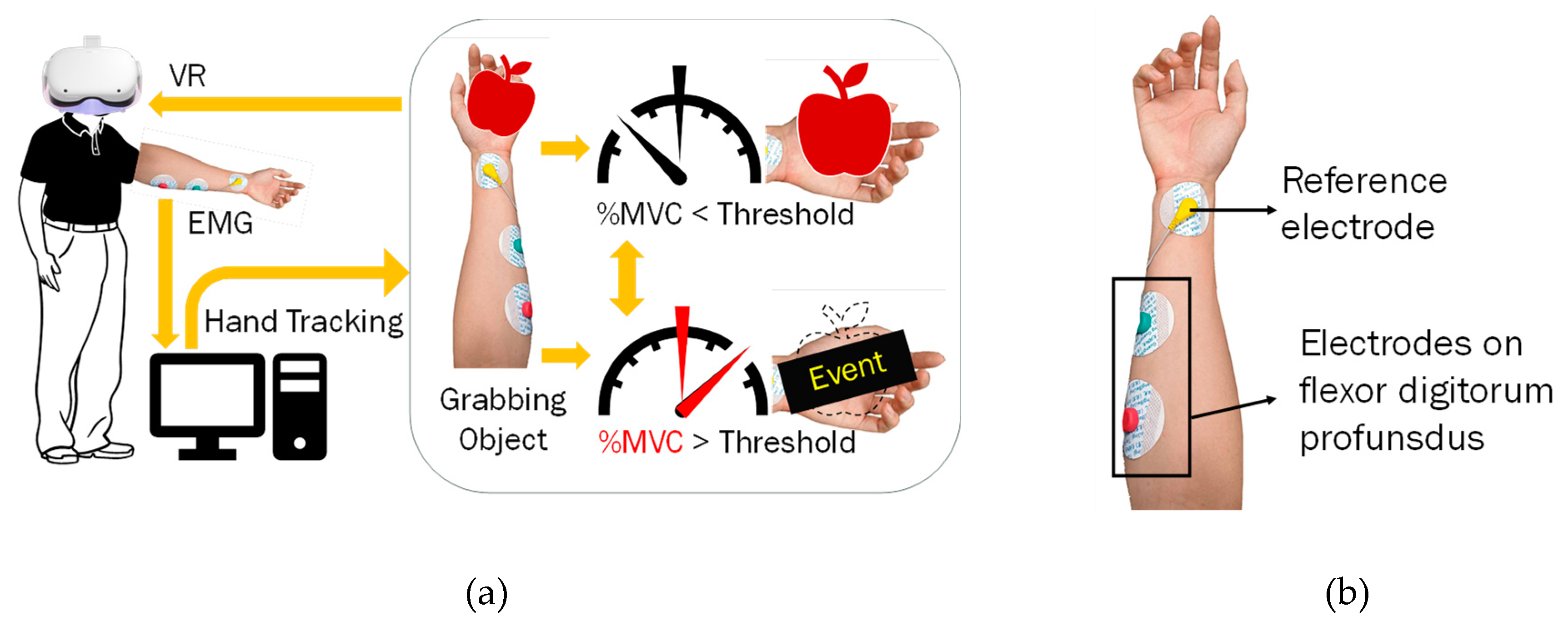

3.1. Surface Electromyography

3.1.1. Acquisition of FDP EMG

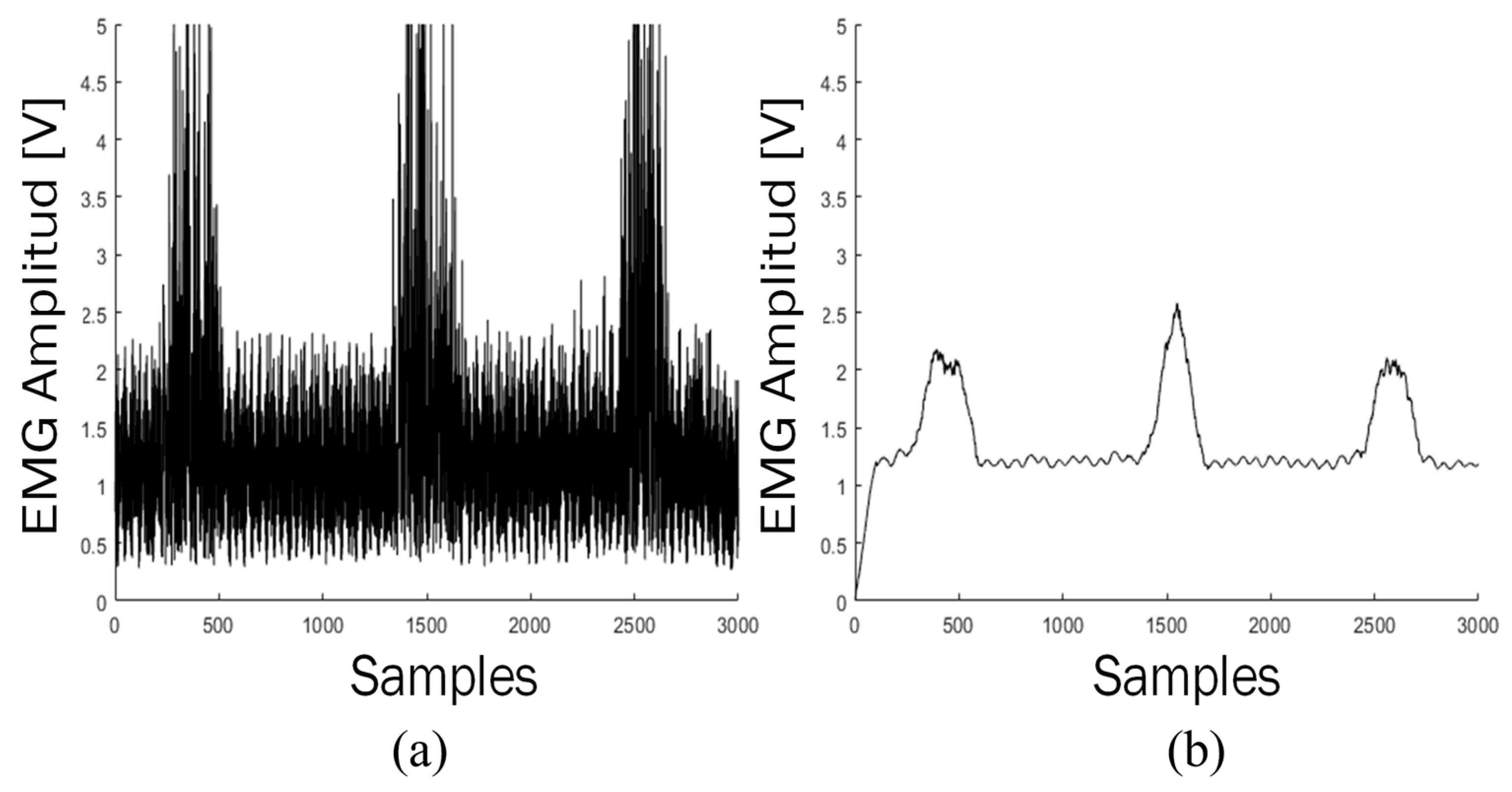

3.1.2. EMG Signal Processing

Algorithm 1. Pseudocode for the moving average filter.

| Step | Operation |
|---|---|
| 1 | EMG = ParseLong(stream.ReadLine()) |
| 2 | total -= readings[readIndex] |
| 3 | readings[readIndex] = EMG |
| 4 | total += readings[readIndex] |
| 5 | readIndex = readIndex + 1 |
| 6 | if readIndex >= numReadings then |
| 7 | readIndex = 0 |
| 8 | end if |
| 9 | Smoothed = total / numReadings |

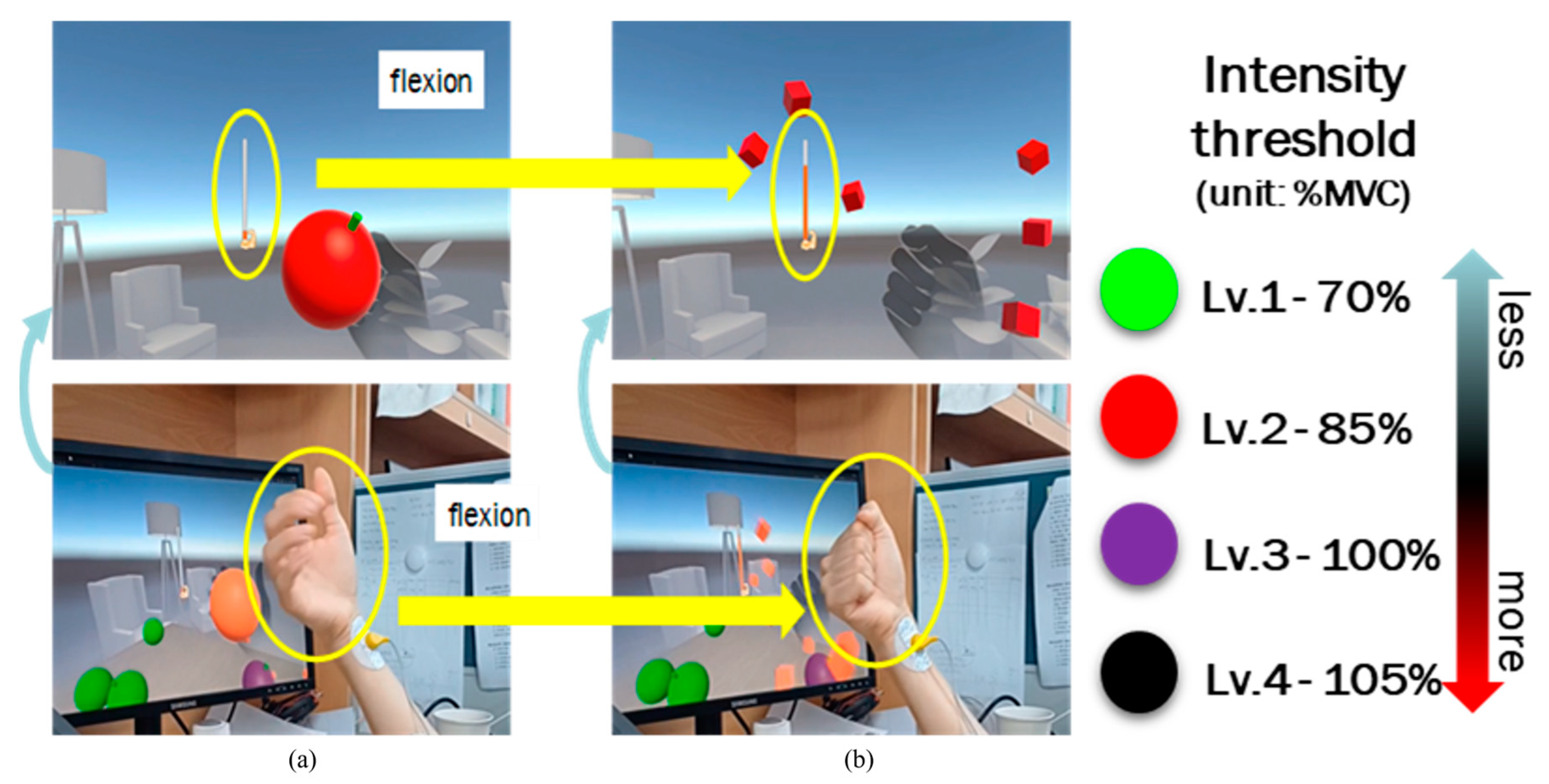
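The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: it assumes the serial stream delivers one integer EMG sample per line, and the names `MovingAverageFilter`, `parse_emg_line`, and `smooth` are hypothetical. Step 5 is written as an explicit `+= 1`; in C# or Java, `readIndex = readIndex++` would assign the old value back and leave the index unchanged, so the buffer would never advance.

```python
from collections.abc import Iterable


class MovingAverageFilter:
    """Running-sum moving average over the last `num_readings` samples,
    following Algorithm 1 (circular buffer plus running total)."""

    def __init__(self, num_readings: int) -> None:
        if num_readings <= 0:
            raise ValueError("num_readings must be positive")
        self.num_readings = num_readings
        self.readings = [0] * num_readings  # circular buffer, zero-initialised
        self.read_index = 0
        self.total = 0

    def update(self, emg: int) -> float:
        # Steps 2-4: remove the oldest sample from the total, store the new one, add it.
        self.total -= self.readings[self.read_index]
        self.readings[self.read_index] = emg
        self.total += self.readings[self.read_index]
        # Steps 5-8: advance the index and wrap around at the end of the buffer.
        self.read_index += 1
        if self.read_index >= self.num_readings:
            self.read_index = 0
        # Step 9: average over the full window (zeros count until the buffer fills).
        return self.total / self.num_readings


def parse_emg_line(line: str) -> int:
    # Hypothetical stand-in for ParseLong(stream.ReadLine()): one integer per line.
    return int(line.strip())


def smooth(lines: Iterable[str], num_readings: int) -> list[float]:
    # Feed each parsed sample through the filter and collect the smoothed values.
    f = MovingAverageFilter(num_readings)
    return [f.update(parse_emg_line(line)) for line in lines]
```

For example, `smooth(["10", "20", "30", "40"], 2)` returns `[5.0, 15.0, 25.0, 35.0]`. Because the running total is updated incrementally, each sample costs constant time regardless of the window length. As in Algorithm 1, the buffer starts at zero, so the first numReadings - 1 outputs are biased low until the window fills.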

3.2. Scene Creation

3.2.1. Construction of VR Environment

3.2.2. Explanation of the Grip Reflection Scene

4. Results and Discussion

4.1. Experimental Environment

4.2. Satisfaction Evaluation Results

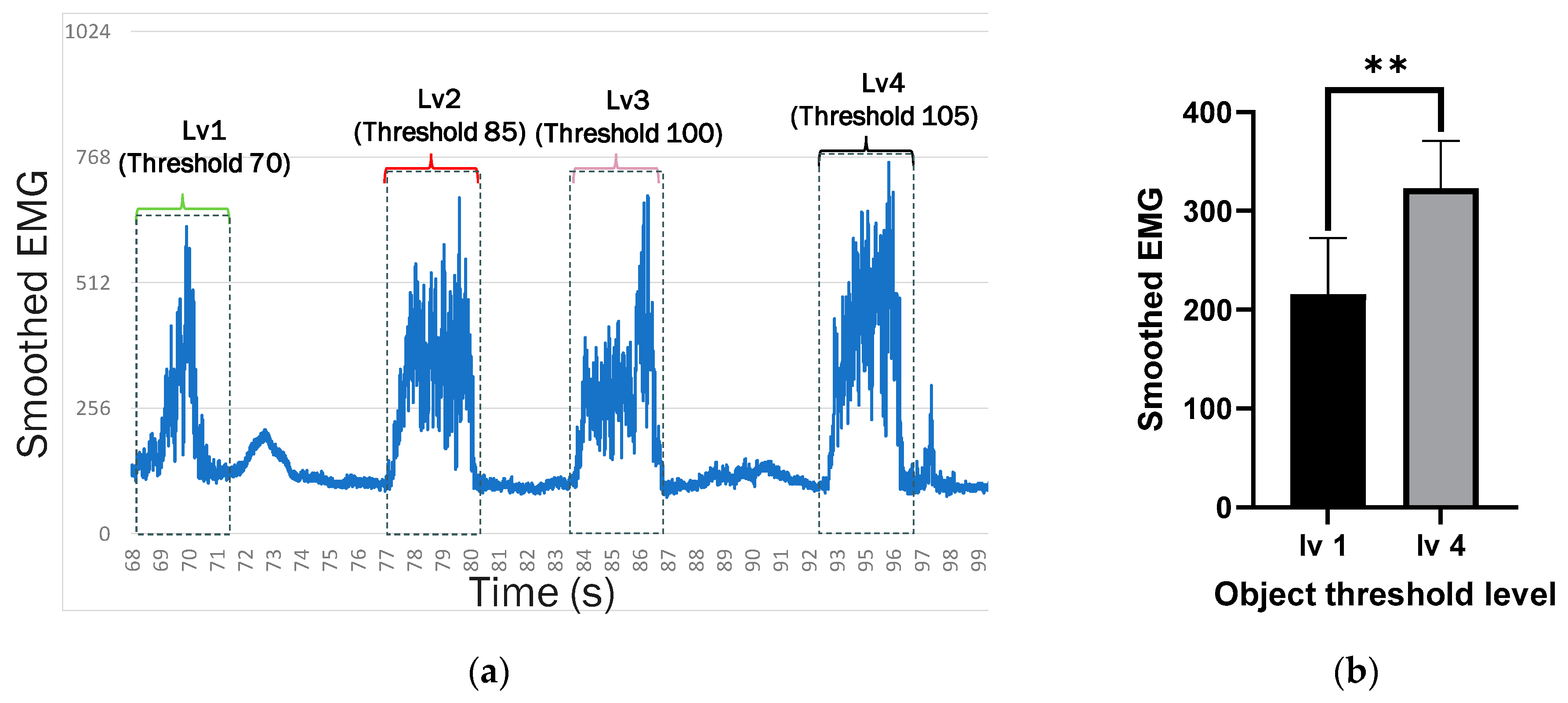

4.3. Results of EMG by Object Intensity

4.4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Q1 | Q2 | Q3 | Q4 |
|---|---|---|---|---|
| Subject 1 | 5 | 5 | 5 | 4 |
| Subject 2 | 5 | 4 | 5 | 5 |
| Subject 3 | 5 | 5 | 5 | 5 |
| Subject 4 | 5 | 5 | 4 | 5 |
| Subject 5 | 5 | 4 | 5 | 5 |
| Subject 6 | 5 | 5 | 5 | 5 |
| Mean (SD) | 5.00 (0.00) | 4.67 (0.52) | 4.83 (0.41) | 4.83 (0.41) |
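The summary values in the last row of the satisfaction table can be reproduced from the six subjects' scores, assuming the value in parentheses is the sample standard deviation (n - 1 denominator). Under that assumption, the sketch below recovers the reported 4.67 (0.52) and 4.83 (0.41), and gives 5.00 (0.00) for Q1. The `summarize` helper is hypothetical.

```python
from statistics import mean, stdev

# Satisfaction scores per question (Subjects 1-6), transcribed from the table.
scores = {
    "Q1": [5, 5, 5, 5, 5, 5],
    "Q2": [5, 4, 5, 5, 4, 5],
    "Q3": [5, 5, 5, 4, 5, 5],
    "Q4": [4, 5, 5, 5, 5, 5],
}


def summarize(values: list[int]) -> str:
    # Mean and sample standard deviation, each rounded to two decimals.
    return f"{mean(values):.2f} ({stdev(values):.2f})"


summary = {q: summarize(v) for q, v in scores.items()}
for q, s in summary.items():
    print(q, s)
```

Using the population standard deviation instead would give smaller values (for example, about 0.47 for Q2), which would not match the reported figures.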


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shin, Y.; Lee, M. Development of Sensory Virtual Reality Interface Using EMG Signal-Based Grip Strength Reflection System. Appl. Sci. 2024, 14, 4415. https://doi.org/10.3390/app14114415
