Pyramid Feature Attention Network for Speech Resampling Detection

Abstract

1. Introduction

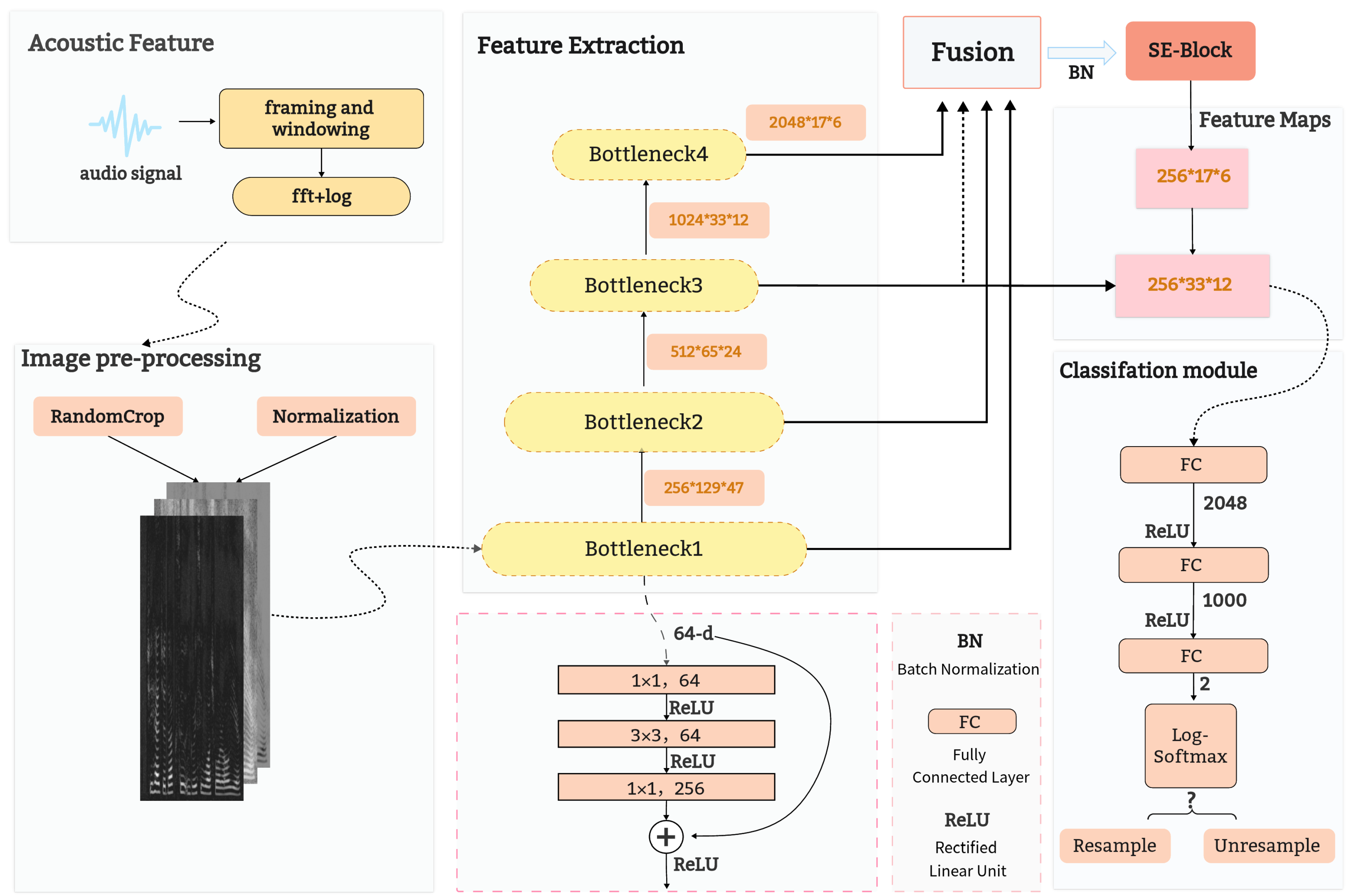

- We propose a deep learning method that extracts resampling features from spectrograms, which carry time–frequency information, and we apply several classical image-classification models to speech resampling detection;

- We apply the SE attention mechanism to the feature pyramid fusion network so that it focuses on the channels that are most informative for the resampling classification task, which helps the network make resampling decisions;

- Thorough experimental evaluations show that the proposed method can detect speech tampered with multiple resampling factors and that detection performance improves significantly, especially for tampering that leaves the signal close to the original speech. We also evaluate the effect of MP3 compression on speech resampling detection, and the results show that the proposed model is robust to MP3 compression.

2. Proposed Method

2.1. Speech Preprocessing

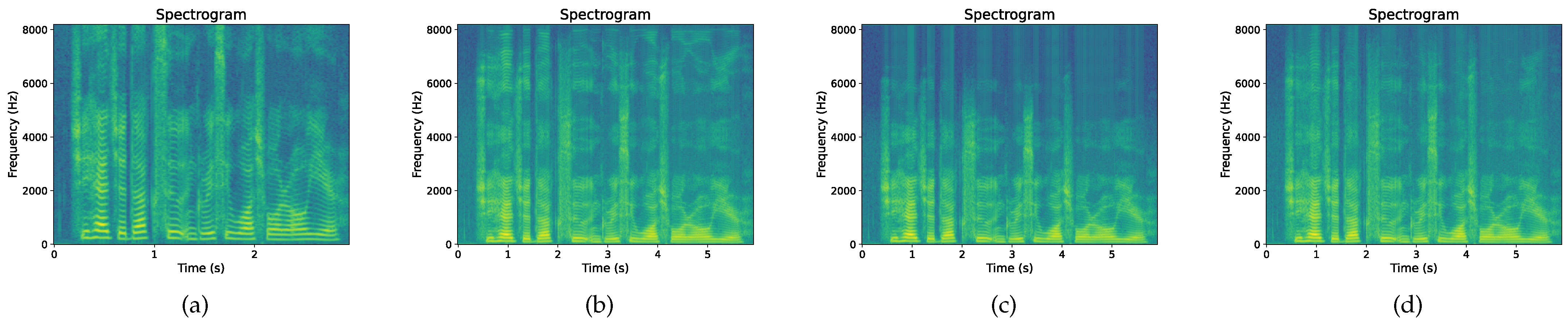

2.1.1. Audio Signal to Spectrogram

2.1.2. The Performance of Resampling on the Spectrogram

- Up-sampling: $p-1$ zeros are inserted between every two adjacent sample points of the signal $x[n]$, giving the up-sampled signal $x_u[n]$, so that the signal is expanded by a factor of $p$ in the time domain. When $n = kp$ for an integer $k$, $x_u[n] = x[n/p]$; otherwise, $x_u[n] = 0$.

- Interpolation: The signal $x_u[n]$ is convolved with a low-pass linear interpolation filter $h[n]$ to obtain the interpolated signal $x_i[n] = x_u[n] * h[n]$. Depending on the filter used [35], the interpolation can be classified as linear interpolation, spline interpolation, or cubic interpolation.

- Down-sampling: One point is extracted every $q$ sample points, so that the signal is reduced by a factor of $q$ in the time domain. The changed signal is $x_d[n] = x_i[qn]$. Taken together, the three steps resample the signal by the factor $p/q$, and the resampled signal is $x_d[n]$.
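The three steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the interpolation filter is assumed to be a triangular (linear-interpolation) kernel, the factor is approximated by a fraction $p/q$ with a small denominator, and no additional anti-aliasing filter is applied before down-sampling.

```python
from fractions import Fraction


def upsample(x, p):
    """Insert p - 1 zeros after every sample: x_u[n] = x[n / p] if n % p == 0, else 0."""
    out = [0.0] * (len(x) * p)
    for k, value in enumerate(x):
        out[k * p] = float(value)
    return out


def linear_kernel(p):
    """Triangular (linear-interpolation) filter of length 2p - 1 with peak value 1."""
    return [1.0 - abs(k) / p for k in range(-(p - 1), p)]


def convolve_same(x, h):
    """Convolve x with an odd-length, centred kernel h and keep the input length."""
    half = len(h) // 2
    out = []
    for n in range(len(x)):
        acc = 0.0
        for k, hk in enumerate(h):
            idx = n + half - k
            if 0 <= idx < len(x):
                acc += hk * x[idx]
        out.append(acc)
    return out


def resample(x, factor):
    """Resample x by the rational factor p/q: up-sample, interpolate, down-sample."""
    ratio = Fraction(factor).limit_denominator(100)  # assumption: small p and q suffice
    p, q = ratio.numerator, ratio.denominator
    x_u = upsample(x, p)
    x_i = convolve_same(x_u, linear_kernel(p))
    return x_i[::q]  # x_d[n] = x_i[q * n]
```

For example, `resample([1.0, 3.0], 2)` returns `[1.0, 2.0, 3.0, 1.5]`: the value 2.0 is interpolated between the two original samples, and the last value decays because the signal is treated as zero beyond its end. A factor of 0.8 is handled as $p = 4$, $q = 5$.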

2.2. Image Preprocessing

2.3. Architecture

2.3.1. Feature Extraction

- (a) Bottleneck: A residual building block of ResNet [38]. Unlike the other residual block, BasicBlock, the Bottleneck is divided into three steps: first, a $1 \times 1$ convolution reduces the channel dimension of the data; then a conventional $3 \times 3$ convolution kernel is applied; and finally, a $1 \times 1$ convolution restores the channel dimension. Its structure resembles an hourglass, so it is called a bottleneck structure. For example, if the number of input channels is 256, the first $1 \times 1$ convolutional layer reduces the number of channels to 64, and the final $1 \times 1$ convolutional layer increases the number of channels back to 256.

- (b) FPN: The feature pyramid is a pyramid-shaped set of features at different scales extracted by a deep convolutional neural network [39]. In this paper, four bottleneck modules serve as the backbone network that extracts features at different scales. High-level features, which have low resolution but rich semantic information, and low-level features, which have high resolution but little semantic information, are combined by up-sampling and lateral connections, so that the features at all scales have rich semantic information. Finally, the feature maps of different scales are used for the classification task.
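To make the 256 → 64 → 256 example for the Bottleneck concrete, the sketch below counts convolution weights. It assumes the standard ResNet layout of $1 \times 1$, $3 \times 3$, and $1 \times 1$ kernels and ignores biases, batch normalization, and any shortcut projection; the function names are hypothetical and the numbers are illustrative, not taken from the paper.

```python
def conv_weights(in_channels, out_channels, kernel_size):
    """Weight count of a bias-free 2-D convolution with a square kernel."""
    return in_channels * out_channels * kernel_size * kernel_size


def bottleneck_weights(channels=256, reduced=64):
    """1x1 reduce -> 3x3 -> 1x1 restore, as in the 256 -> 64 -> 256 example."""
    return (
        conv_weights(channels, reduced, 1)
        + conv_weights(reduced, reduced, 3)
        + conv_weights(reduced, channels, 1)
    )


def basic_block_weights(channels=256):
    """Two 3x3 convolutions at full width, the BasicBlock-style alternative."""
    return 2 * conv_weights(channels, channels, 3)
```

Under these assumptions the bottleneck needs 16,384 + 36,864 + 16,384 = 69,632 weights, whereas two full-width $3 \times 3$ convolutions on 256 channels need 1,179,648, roughly 17 times as many. This is the usual motivation for narrowing the channels before the $3 \times 3$ convolution.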

2.3.2. Feature Fusion

- (a)

- Concat: Unifies the scale of the feature map through the maximum pooling operation. Utilizes the convolution kernel to adjust the 4-layer features to the same number of channels, and feature fusion is completed by using the Concat function.

- (b)

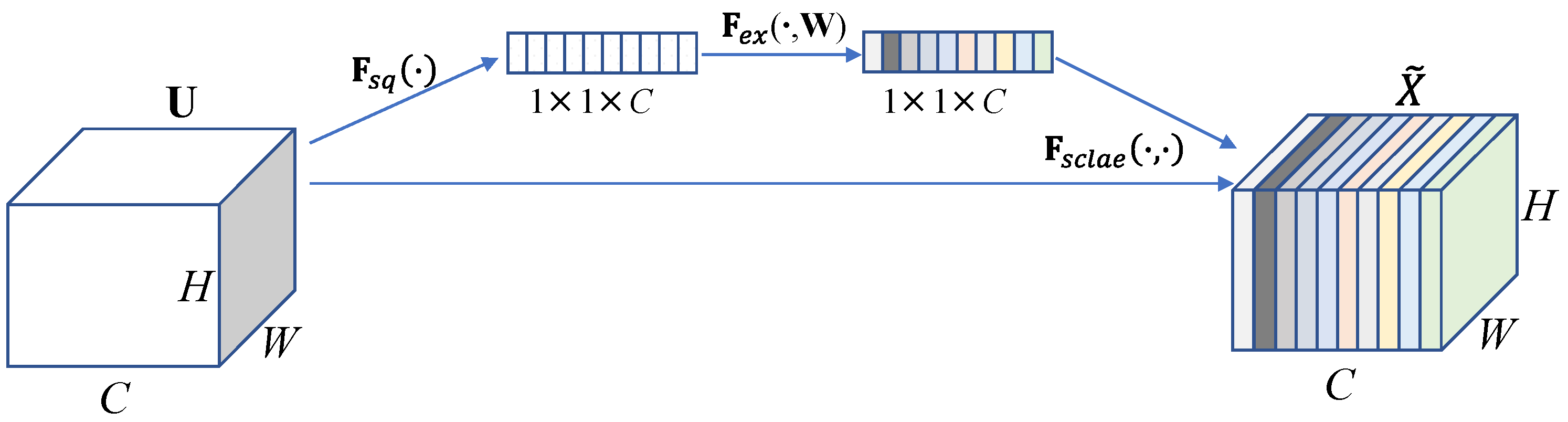

- SENet: Adjusts the channel attention of the fused feature map through the SE attention module to enhance the expression ability of the feature map [40]. SENet, shown in Figure 4, is a plug-and-play channel attention module that is often applied in visual models. It can enhance the channel features of the input feature map, and the output of the SE module does not change the size of the input feature map. SENet mainly includes two operations: squeeze and excitation.

- : Squeeze refers to compressing the spatial information in the input feature map. The input feature map ’s dimensions are . In the spatial dimension, global average pooling is realized to obtain a feature map. The following equation is used:

- : Excitation combines the learned channel attention information with the input feature map . The vector obtained in the previous step is processed through two fully connected layers and , and the channel weight value is obtained. The formula is as follows:The activation function of the first fully connected layer is ReLU, and the activation function of the second fully connected layer is sigmoid.

- : The generated weight vector is weight-assigned to the feature map . To obtain the feature map with channel attention, the formula is as follows:The size of is the same as the feature map , which realizes the weighting of the feature map . The SE attention module can enhance the resampling time–frequency characteristics expressed in the feature map by focusing on channels that are effective for the resampling classification task and suppressing channels that are ineffective.

- (c) Lateral connection: The top-down up-sampling path of the network is accompanied by lateral connections. The up-sampled result is fused with the bottom-up feature map of the same scale, that is, the two are added pixel by pixel [40]. In the experiment, bilinear interpolation is selected for up-sampling. The feature map produced by the SE attention module is reduced to 256 channels by convolution, giving $P$, and its up-sampled version $P^{\uparrow}$ is obtained through Equation (6):

$$P^{\uparrow}(x, y) = \sum_{i=1}^{4} w_i \, P(x_i, y_i) \qquad (6)$$

where $(x_i, y_i)$ are the four pixels closest to the target pixel $(x, y)$ and $w_i$ represents their weight coefficients, which can be obtained from the distance between the target pixel and these four pixels. The feature map extracted from Bottleneck3 is likewise processed through convolution, reducing the number of channels to 256, to obtain $C$. The final feature map $F$ is then calculated by Equation (7):

$$F = P^{\uparrow} + C \qquad (7)$$
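The squeeze, excitation, and scale steps, followed by bilinear up-sampling and the pixel-wise lateral addition, can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' code: feature maps are nested lists indexed as channel, row, column; the fully connected layers are bias-free; the up-sampling factor is assumed to be 2 with half-pixel-centred sampling; the channel-reducing convolutions are omitted; and all function names are hypothetical.

```python
import math


def squeeze(u):
    """Global average pooling: one scalar per channel of a C x H x W map."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in u]


def dense(weights, vec):
    """Matrix-vector product of a bias-free fully connected layer."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def excitation(z, w1, w2):
    """s = sigmoid(W2 . relu(W1 . z)): one weight in (0, 1) per channel."""
    hidden = [max(0.0, h) for h in dense(w1, z)]
    return [1.0 / (1.0 + math.exp(-o)) for o in dense(w2, hidden)]


def se_block(u, w1, w2):
    """Rescale every channel of u by its attention weight; the shape is unchanged."""
    s = excitation(squeeze(u), w1, w2)
    return [[[s_c * px for px in row] for row in ch] for s_c, ch in zip(s, u)]


def _neighbours(i, scale, size):
    """Two nearest source indices and the blend weight for output index i."""
    pos = min(max((i + 0.5) / scale - 0.5, 0.0), size - 1.0)
    lo = int(pos)
    hi = min(lo + 1, size - 1)
    return lo, hi, pos - lo


def bilinear_upsample(ch, scale=2):
    """Up-sample one H x W channel; each output pixel mixes its 4 nearest inputs."""
    h, w = len(ch), len(ch[0])
    out = []
    for i in range(h * scale):
        y0, y1, dy = _neighbours(i, scale, h)
        row = []
        for j in range(w * scale):
            x0, x1, dx = _neighbours(j, scale, w)
            row.append(
                (1 - dy) * (1 - dx) * ch[y0][x0]
                + (1 - dy) * dx * ch[y0][x1]
                + dy * (1 - dx) * ch[y1][x0]
                + dy * dx * ch[y1][x1]
            )
        out.append(row)
    return out


def lateral_add(top, bottom):
    """Up-sample the coarse map and add the same-scale map pixel by pixel."""
    fused = []
    for coarse, fine in zip(top, bottom):
        up = bilinear_upsample(coarse)
        fused.append([[a + b for a, b in zip(r_up, r_fine)]
                      for r_up, r_fine in zip(up, fine)])
    return fused
```

With all-zero weights in both fully connected layers, every channel weight equals sigmoid(0) = 0.5, so `se_block` simply halves the input; trained weights would instead emphasise some channels and suppress others. The four products inside `bilinear_upsample` are the weight coefficients of the four nearest pixels, and they always sum to 1.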

2.3.3. Classification and Evaluation Metrics

2.4. Changes in Spectrogram Features in Different Network Layers

3. Experiments and Analysis

3.1. Experimental Setup

3.1.1. Dataset

3.1.2. Implementation Details

3.2. Results and Analysis

3.2.1. Different Interpolation Methods

- The interpolation method has some impact on the detection model: the detection accuracy differs by 0.17% to 2.16% across interpolation methods. Overall, the detection accuracy of the model is only slightly affected by the interpolation method.

- For every resampling factor and every interpolation method tested, the accuracy of the method proposed in this paper is higher than that of the methods of Hou [16], Wang [18], and Zhang [19]. The results show that extracting spectrogram features with a deep learning method performs significantly better for resampling detection than these existing approaches.

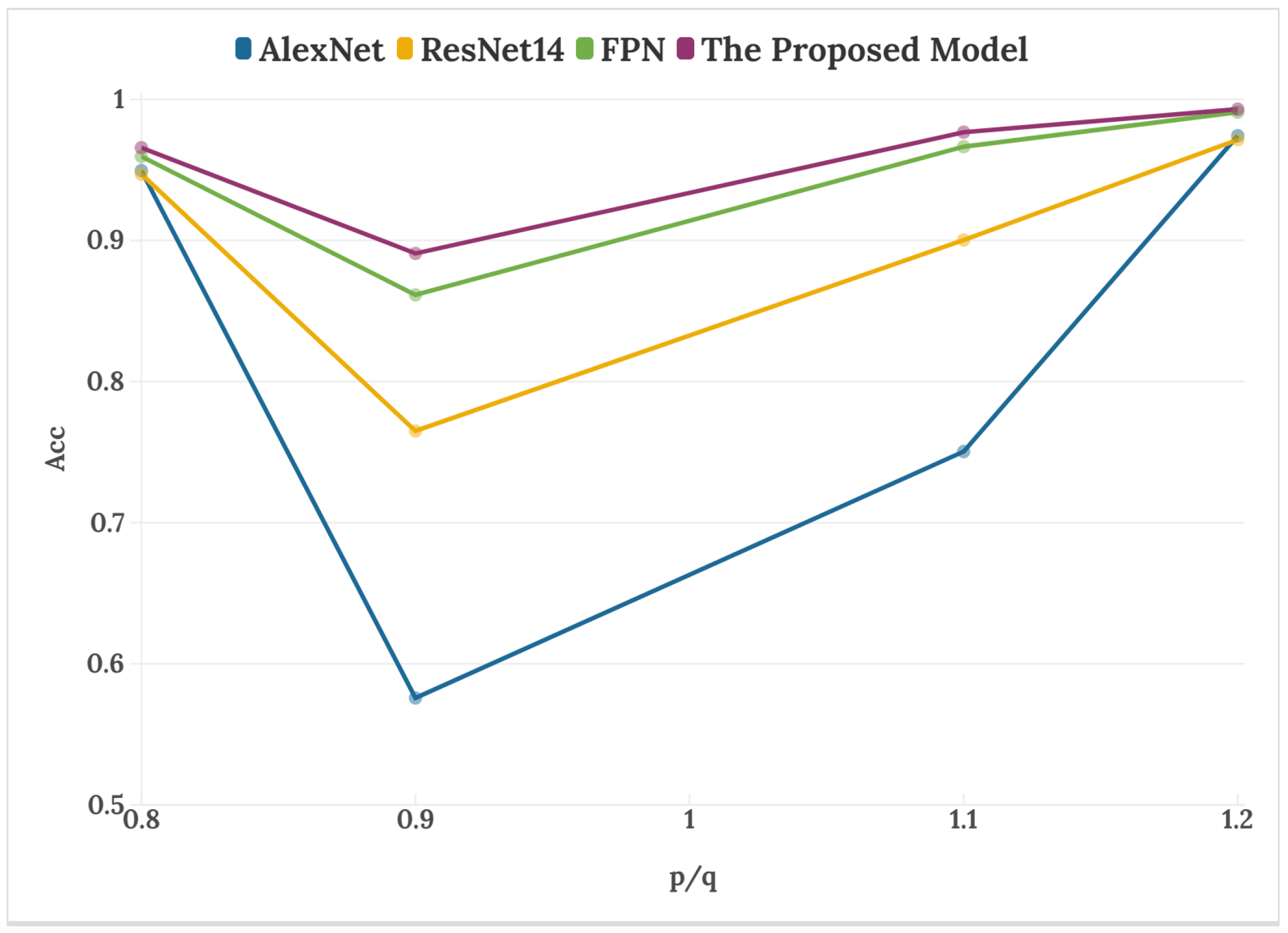

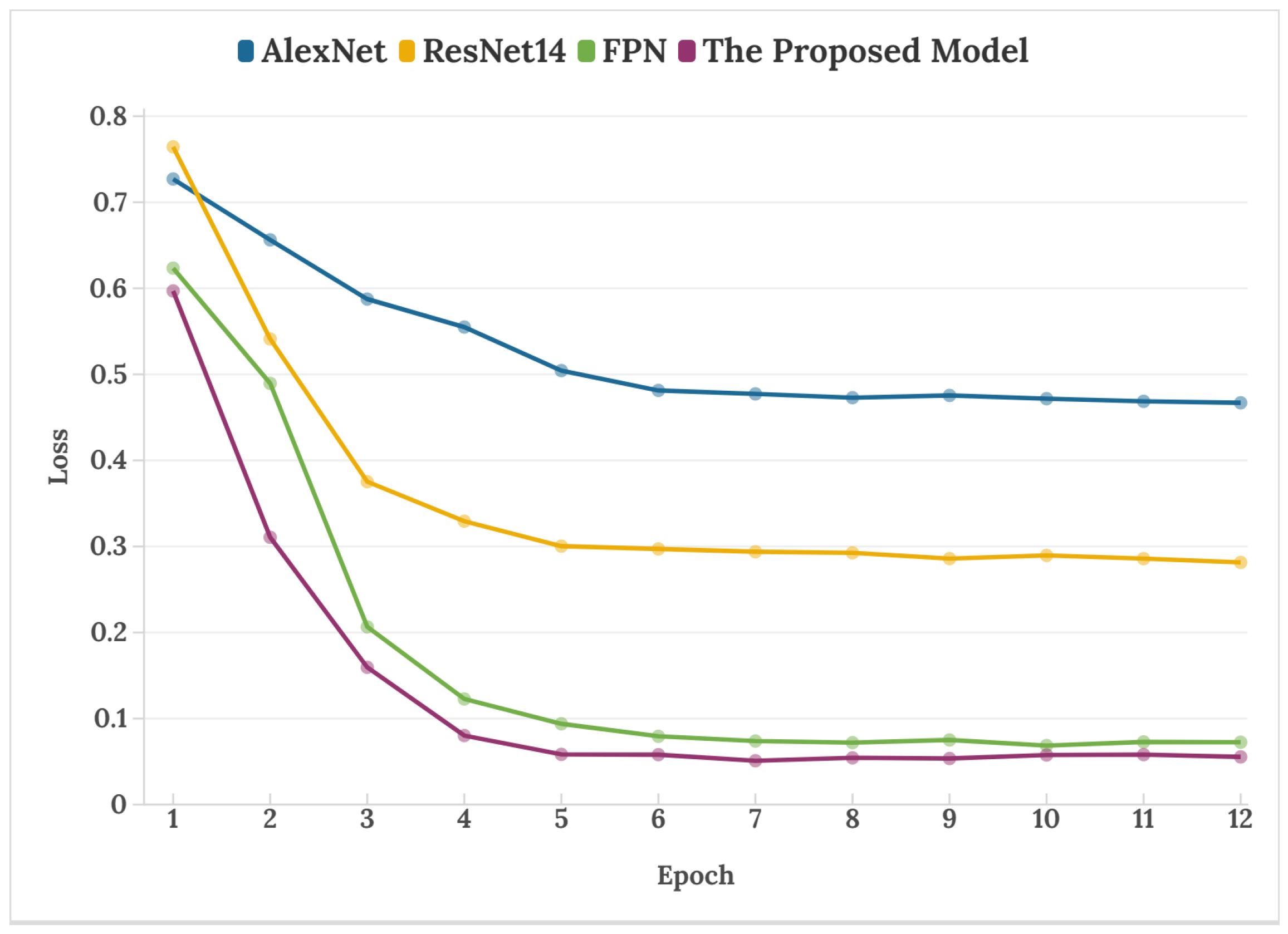

3.2.2. Architecture Comparison

3.2.3. Different Compression Sampling Factors

3.2.4. The Impact of MP3 Compression

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Audacity: Free Audio Editor and Recorder. Available online: http://www.audacityteam.org/ (accessed on 17 December 2023).

2. Cool Edit Pro Is Now Adobe Audition. Available online: http://www.adobe.com/products/audition.html (accessed on 6 August 2023).

3. Gold Wave-Audio Editor, Recorder, Converter, Restoration, and Analysis Software. Available online: http://www.goldwave.ca/ (accessed on 3 February 2024).

4. Yan, Q.; Yang, R.; Huang, J. Detection of speech smoothing on very short clips. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2441–2453.

5. Bevinamarad, P.R.; Shirldonkar, M. Audio forgery detection techniques: Present and past review. In Proceedings of the 2020 4th International Conference on Trends in Electronics and Informatics (ICOEI)(48184), Tirunelveli, India, 15–17 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 613–618.

6. Mubeen, Z.; Afzal, M.; Ali, Z.; Khan, S.; Imran, M. Detection of impostor and tampered segments in audio by using an intelligent system. Comput. Electr. Eng. 2021, 91, 107122.

7. Saleem, S.; Dilawari, A.; Khan, U.G. Spoofed voice detection using dense features of stft and mdct spectrograms. In Proceedings of the 2021 International Conference on Artificial Intelligence (ICAI), Islamabad, Pakistan, 5–7 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 56–61.

8. Capoferri, D.; Borrelli, C.; Bestagini, P.; Antonacci, F.; Sarti, A.; Tubaro, S. Speech audio splicing detection and localization exploiting reverberation cues. In Proceedings of the 2020 IEEE International Workshop on Information Forensics and Security (WIFS), New York, NY, USA, 6–11 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

9. Huang, X.; Liu, Z.; Lu, W.; Liu, H.; Xiang, S. Fast and effective copy-move detection of digital audio based on auto segment. In Digital Forensics and Forensic Investigations: Breakthroughs in Research and Practice; IGI Global: Hershey, PA, USA, 2020; pp. 127–142.

10. Zhao, J.; Lu, B.; Huang, L.; Huang, M.; Huang, J. Digital audio tampering detection using ENF feature and LSTM-Inception net. In Proceedings of the AIIPCC 2022; The Third International Conference on Artificial Intelligence, Information Processing and Cloud Computing, Online, 21–22 June 2022; VDE: Berlin, Germany, 2022; pp. 1–4.

11. Gallagher, A.C. Detection of linear and cubic interpolation in JPEG compressed images. In Proceedings of the 2nd Canadian Conference on Computer and Robot Vision (CRV’05), Victoria, BC, Canada, 9–11 May 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 65–72.

12. Mahdian, B.; Saic, S. Blind authentication using periodic properties of interpolation. IEEE Trans. Inf. Forensics Secur. 2008, 3, 529–538.

13. Yao, Q.; Chai, P.; Xuan, G.; Yang, Z.; Shi, Y. Audio re-sampling detection in audio forensics based on EM algorithm. J. Comput. Appl. 2006, 26, 2598–2601.

14. Chen, Y.X.; Xi, W.U. A method of detecting re-sampling based on expectation maximization applied in audio blind forensics. J. Circuits Syst. 2012, 17, 118–123.

15. Shi, Q.; Ma, X. Detection of audio interpolation based on singular value decomposition. In Proceedings of the 2011 3rd International Conference on Awareness Science and Technology (iCAST), Dalian, China, 27–30 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 287–290.

16. Hou, L.; Wu, W.; Zhang, X. Audio re-sampling detection in audio forensics based on second-order derivative. J. Shanghai Univ. 2014, 20, 304–312.

17. Popescu, A.C.; Farid, H. Exposing digital forgeries by detecting traces of resampling. IEEE Trans. Signal Process. 2005, 53, 758–767.

18. Wang, Z.; Yan, D.; Wang, R.; Xiang, L.; Wu, T. Speech resampling detection based on inconsistency of band energy. Comput. Mater. Contin. 2018, 56, 247–259.

19. Zhang, Y.; Dai, S.; Song, W.; Zhang, L.; Li, D. Exposing speech resampling manipulation by local texture analysis on spectrogram images. Electronics 2019, 9, 23.

20. Xu, Y.; Irfan, M.; Fang, A.; Zheng, J. Multiscale Attention Network for Detection and Localization of Image Splicing Forgery. IEEE Trans. Instrum. Meas. 2023, 72, 1–15.

21. Lang, X.; Han, F. MFL Image Recognition Method of Pipeline Corrosion Defects Based on Multilayer Feature Fusion Multiscale GhostNet. IEEE Trans. Instrum. Meas. 2022, 71, 1–8.

22. Wani, T.M.; Gunawan, T.S.; Qadri, S.A.A.; Mansor, H.; Arifin, F.; Ahmad, Y.A. Stride Based Convolutional Neural Network for Speech Emotion Recognition. In Proceedings of the 2021 IEEE 7th International Conference on Smart Instrumentation, Measurement and Applications (ICSIMA), Bandung, Indonesia, 23–25 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 41–46.

23. Banerjee, S.; Singh, G.K. A Robust Bio-Signal Steganography With Lost-Data Recovery Architecture Using Deep Learning. IEEE Trans. Instrum. Meas. 2022, 71, 1–10.

24. Huang, J.; Luo, T.; Li, L.; Yang, G.; Xu, H.; Chang, C.C. ARWGAN: Attention-Guided Robust Image Watermarking Model Based on GAN. IEEE Trans. Instrum. Meas. 2023, 72, 1–17.

25. Küçükuğurlu, B.; Ustubioglu, B.; Ulutas, G. Duplicated Audio Segment Detection with Local Binary Pattern. In Proceedings of the 2020 43rd International Conference on Telecommunications and Signal Processing (TSP), Milan, Italy, 7–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 350–353.

26. Yan, D.; Dong, M.; Gao, J. Exposing speech transsplicing forgery with noise level inconsistency. Secur. Commun. Networks 2021, 2021, 6659371.

27. Ulutas, G.; Tahaoglu, G.; Ustubioglu, B. Forge Audio Detection Using Keypoint Features on Mel Spectrograms. In Proceedings of the 2022 45th International Conference on Telecommunications and Signal Processing (TSP), Virtual, 13–15 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 413–416.

28. Jadhav, S.; Patole, R.; Rege, P. Audio splicing detection using convolutional neural network. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

29. Ustubioglu, A.; Ustubioglu, B.; Ulutas, G. Mel spectrogram-based audio forgery detection using CNN. Signal Image Video Process. 2023, 17, 2211–2219.

30. Chuchra, A.; Kaur, M.; Gupta, S. A Deep Learning Approach for Splicing Detection in Digital Audios. In Proceedings of the Congress on Intelligent Systems: Proceedings of CIS 2021; Springer: Berlin/Heidelberg, Germany, 2022; Volume 1, pp. 543–558.

31. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

32. Özseven, T. Investigation of the effect of spectrogram images and different texture analysis methods on speech emotion recognition. Appl. Acoust. 2018, 142, 70–77.

33. Zeng, Y.; Mao, H.; Peng, D. Spectrogram based multi-task audio classification. Multimed. Tools Appl. 2019, 78, 3705–3722.

34. Pyrovolakis, K.; Tzouveli, P.; Stamou, G. Multi-modal song mood detection with deep learning. Sensors 2022, 22, 1065.

35. Savić, N.; Milivojević, Z.; Prlinčević, B.; Kostić, D. Septic-convolution Kernel-Comparative Analysis of the Interpolation Error. In Proceedings of the 2022 International Conference on Development and Application Systems (DAS), Suceava, Romania, 26–28 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 36–41.

36. Pereira, E.; Carneiro, G.; Cordeiro, F.R. A Study on the Impact of Data Augmentation for Training Convolutional Neural Networks in the Presence of Noisy Labels. In Proceedings of the 2022 35th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Natal, Brazil, 24–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; Volume 1, pp. 25–30.

37. Yue, G.; Li, S.; Cong, R.; Zhou, T.; Lei, B.; Wang, T. Attention-Guided Pyramid Context Network for Polyp Segmentation in Colonoscopy Images. IEEE Trans. Instrum. Meas. 2023, 72, 1–13.

38. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

39. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

40. Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An efficient pyramid squeeze attention block on convolutional neural network. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1161–1177.

41. Xiang, Z.; Bestagini, P.; Tubaro, S.; Delp, E.J. Forensic Analysis and Localization of Multiply Compressed MP3 Audio Using Transformers. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2929–2933.

42. Hailu, N.; Siegert, I.; Nürnberger, A. Improving automatic speech recognition utilizing audio-codecs for data augmentation. In Proceedings of the 2020 IEEE 22nd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 21–24 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5.

43. Guo, T.; Wen, C.; Jiang, D. Didispeech: A large scale mandarin speech corpus. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 6968–6972.

44. Fu, Y.; Cheng, L.; Lv, S. Aishell-4: An open source dataset for speech enhancement, separation, recognition and speaker diarization in conference scenario. arXiv 2021, arXiv:2104.03603.

| Dataset | Original Speech (Un-Interpolated) | Resampled Speech, 0.6∼1.6 (Linear, Spline, Cubic) | Resampled Speech, 0.6∼1.6 (Mixed) |
|---|---|---|---|
| Uncompressed | 3000 samples | 3000 × 3 × 10 = 90,000 samples | 3000 × 10 = 30,000 samples |
| Compressed (32 kbps, 64 kbps, 128 kbps) | 3000 × 3 = 9000 samples | 3000 × 3 × 10 × 3 = 270,000 samples | 3000 × 3 × 10 = 90,000 samples |
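The sample counts in the dataset table follow from simple multiplication, which the short sketch below reproduces. It assumes that the ten resampling factors are the ones listed in the result tables (0.6 to 1.6 without 1.0) and that each compressed set contains one copy per bit rate; the constant and function names are hypothetical.

```python
CLIPS = 3000
# Assumed factor list: the ten values between 0.6 and 1.6 used in the result tables.
FACTORS = [0.6, 0.7, 0.8, 0.9, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6]
INTERPOLATIONS = ["linear", "spline", "cubic"]
BIT_RATES_KBPS = [32, 64, 128]


def dataset_sizes(compressed):
    """Return (original, per-method resampled, mixed resampled) sample counts."""
    copies = len(BIT_RATES_KBPS) if compressed else 1
    original = CLIPS * copies
    resampled = CLIPS * len(INTERPOLATIONS) * len(FACTORS) * copies
    mixed = CLIPS * len(FACTORS) * copies
    return original, resampled, mixed
```

Calling `dataset_sizes(False)` gives `(3000, 90000, 30000)` and `dataset_sizes(True)` gives `(9000, 270000, 90000)`, matching the two rows of the table.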

| Resampling Factor | Method | | | | |
|---|---|---|---|---|---|
| 0.8 | Hou et al. [16] | 0.7061 | 0.7237 | 0.7061 | 0.6841 |
| | Wang et al. [18] | 0.4943 | 0.4820 | 0.5310 | 0.4950 |
| | Zhang et al. [19] | 0.8553 | 0.8360 | 0.8340 | 0.8270 |
| 0.9 | Hou et al. [16] | 0.6876 | 0.6771 | 0.6619 | 0.6673 |
| | Wang et al. [18] | 0.4490 | 0.4140 | 0.4840 | 0.4550 |
| | Zhang et al. [19] | 0.6960 | 0.7030 | 0.6600 | 0.6950 |
| 1.1 | Hou et al. [16] | 0.6902 | 0.6514 | 0.6806 | 0.6817 |
| | Wang et al. [18] | 0.6247 | 0.6300 | 0.6690 | 0.6700 |
| | Zhang et al. [19] | 0.7413 | 0.7530 | 0.7440 | 0.7430 |
| 1.2 | Hou et al. [16] | 0.6657 | 0.6745 | 0.6201 | 0.6675 |
| | Wang et al. [18] | 0.7020 | 0.6620 | 0.7490 | 0.7030 |
| | Zhang et al. [19] | 0.8127 | 0.8160 | 0.8460 | 0.8000 |

| Resampling Factor | | | |
|---|---|---|---|
| 0.8 | 0.9495 | 0.9470 | 0.9595 |
| 0.9 | 0.5758 | 0.7650 | 0.8614 |
| 1.1 | 0.7504 | 0.9003 | 0.9664 |
| 1.2 | 0.9742 | 0.9714 | 0.9909 |

| Resampling Factor | | |
|---|---|---|
| 0.8 | 0.9270 | 0.9533 |
| 0.9 | 0.8510 | 0.8867 |
| 1.1 | 0.9240 | 0.9397 |
| 1.2 | 0.9620 | 0.9826 |

| Resampling Factor | Hou et al. [16] | Wang et al. [18] | Zhang et al. [19] |
|---|---|---|---|
| 0.6 | 0.7120 | 0.6593 | 0.9813 |
| 0.7 | 0.6884 | 0.5573 | 0.9407 |
| 0.8 | 0.7061 | 0.4943 | 0.8553 |
| 0.9 | 0.6876 | 0.4490 | 0.6960 |
| 1.1 | 0.6902 | 0.6247 | 0.7413 |
| 1.2 | 0.6657 | 0.7027 | 0.8127 |
| 1.3 | 0.6603 | 0.7090 | 0.9093 |
| 1.4 | 0.6523 | 0.7617 | 0.9433 |
| 1.5 | 0.6346 | 0.7873 | 0.9649 |
| 1.6 | 0.6804 | 0.8373 | 0.9791 |

| Bit Rate | Method | 0.6 | 0.7 | 0.8 | 0.9 | 1.1 | 1.2 | 1.3 | 1.4 | 1.5 | 1.6 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 32 kbps | Hou et al. [16] | 0.6984 | 0.6977 | 0.6968 | 0.7222 | 0.7303 | 0.7485 | 0.6549 | 0.6596 | 0.6510 | 0.6393 |
| | Wang et al. [18] | 0.6593 | 0.5447 | 0.4977 | 0.4387 | 0.6683 | 0.7187 | 0.7433 | 0.7733 | 0.8110 | 0.8660 |
| | Zhang et al. [19] | 0.9980 | 0.9787 | 0.9627 | 0.9500 | 0.9540 | 0.9580 | 0.9633 | 0.9760 | 0.9813 | 0.9913 |
| 64 kbps | Hou et al. [16] | 0.7059 | 0.7219 | 0.7061 | 0.6702 | 0.6800 | 0.6752 | 0.6482 | 0.6596 | 0.6407 | 0.6739 |
| | Wang et al. [18] | 0.6330 | 0.5297 | 0.4983 | 0.4677 | 0.6367 | 0.6833 | 0.7097 | 0.7587 | 0.7943 | 0.8400 |
| | Zhang et al. [19] | 0.9860 | 0.9407 | 0.8473 | 0.7040 | 0.7380 | 0.8273 | 0.9047 | 0.9480 | 0.9733 | 0.9880 |
| 128 kbps | Hou et al. [16] | 0.7179 | 0.7346 | 0.6963 | 0.6795 | 0.6809 | 0.6884 | 0.6473 | 0.6464 | 0.6299 | 0.6543 |
| | Wang et al. [18] | 0.6370 | 0.5273 | 0.4993 | 0.4587 | 0.6397 | 0.6840 | 0.7097 | 0.7513 | 0.7973 | 0.8403 |
| | Zhang et al. [19] | 0.9860 | 0.9373 | 0.8440 | 0.6847 | 0.7140 | 0.8187 | 0.8980 | 0.9407 | 0.9727 | 0.9840 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, X.; Zhang, Y.; Wang, Y.; Tian, J.; Xu, S. Pyramid Feature Attention Network for Speech Resampling Detection. Appl. Sci. 2024, 14, 4803. https://doi.org/10.3390/app14114803
