An Efficient Approach for Automatic Fault Classification Based on Data Balance and One-Dimensional Deep Learning

Abstract

1. Introduction

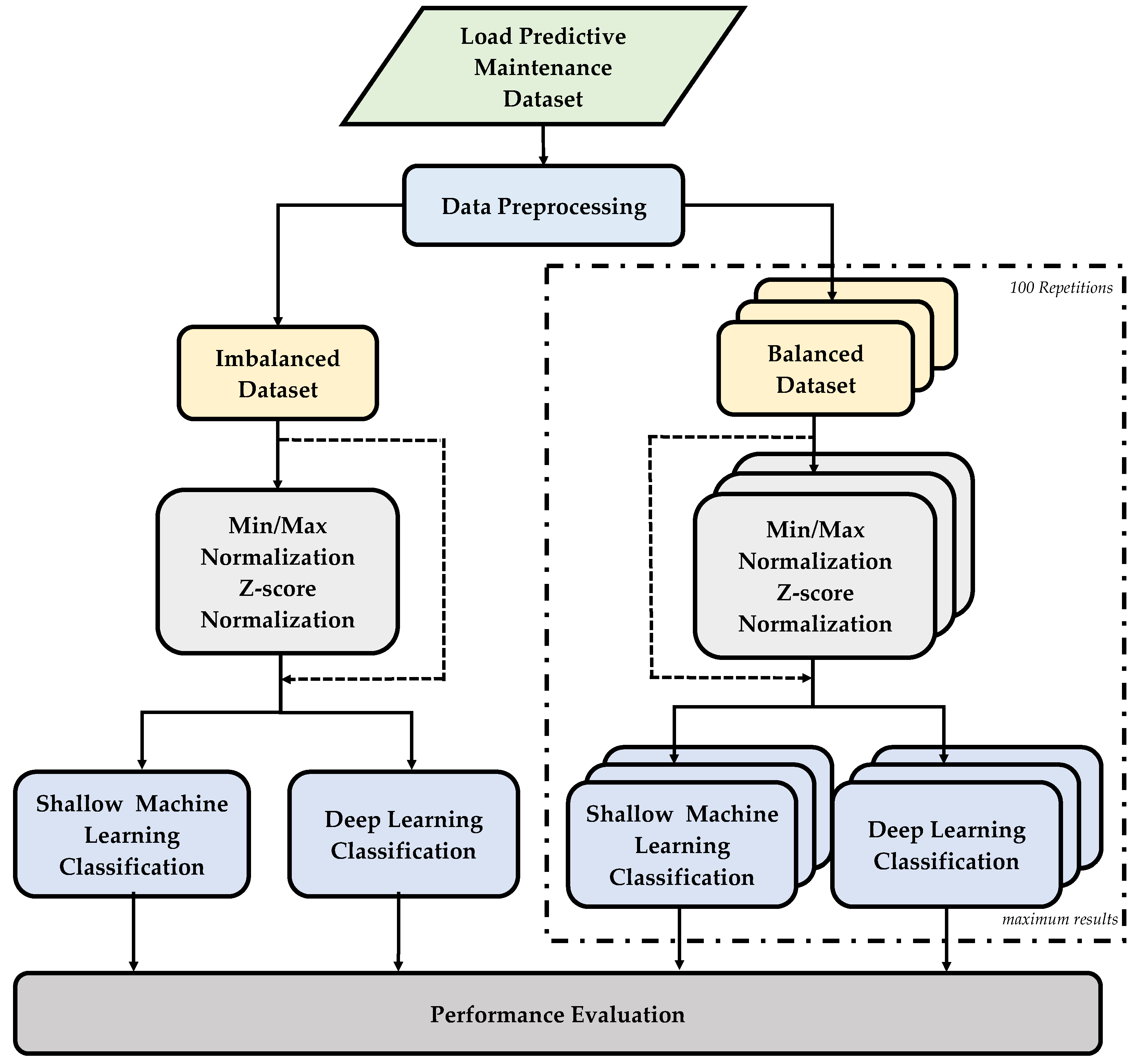

- In addition to shallow machine learning algorithms, one-dimensional deep learning architectures (1D-LeNet, 1D-AlexNet, and 1D-VGG16) were used for fault classification in predictive maintenance (PdM).

- Unlike previous studies using the Matzka PdM dataset, the original dataset was preprocessed before fault classification to remove outliers, as described in the “Dataset Description and Data Preprocessing” section.

- Feature normalization techniques such as min-max and z-score scaling, which had not been investigated in previous studies on the Matzka PdM dataset, were applied so that all features share a similar scale and range of values.

- To demonstrate how imbalanced and balanced distributions of labeled data affect classifier performance, new dataset groups were generated for all fault types. Unlike previous analyses of the Matzka PdM dataset, this generation was repeated 100 times, and the maximum performance scores are reported in the tables.

- A table compares this study with other studies in the literature that used the same original PdM dataset in terms of methodology, dataset type, fault type, and performance metrics.
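The repeated generation of balanced dataset groups described in the contributions can be pictured as random undersampling of the non-failure class, repeated with different seeds while the best score is kept. This is a minimal sketch under stated assumptions: the balancing method is assumed to be random undersampling, `evaluate` is a hypothetical stand-in for training and scoring a classifier on one subset, and the default of 402 non-failure samples only mirrors the balanced MF counts reported in this study.

```python
import random
from typing import Callable, List, Sequence, Tuple


def balanced_subset(failures: Sequence, non_failures: Sequence,
                    n_majority: int, seed: int) -> List:
    """Keep every failure sample and draw n_majority non-failure samples
    without replacement (random undersampling, assumed here)."""
    rng = random.Random(seed)
    sampled = rng.sample(list(non_failures), n_majority)
    return list(failures) + sampled


def best_of_repeats(failures: Sequence, non_failures: Sequence,
                    evaluate: Callable[[List], float],
                    n_majority: int = 402,
                    repeats: int = 100) -> Tuple[float, int]:
    """Build `repeats` balanced subsets (one per seed) and return the
    highest score and the seed that produced it."""
    best_score, best_seed = float("-inf"), -1
    for seed in range(repeats):
        subset = balanced_subset(failures, non_failures, n_majority, seed)
        score = evaluate(subset)  # hypothetical: train + score a classifier
        if score > best_score:
            best_score, best_seed = score, seed
    return best_score, best_seed


# Usage with placeholder records: 330 failures and 9643 non-failures (MF counts).
failures = [("fail", i) for i in range(330)]
non_failures = [("ok", i) for i in range(9643)]
subset = balanced_subset(failures, non_failures, 402, seed=0)
print(len(subset))
```

Keeping only the maximum over 100 repetitions yields a best-case figure; the mean and spread of the same scores would show how sensitive each classifier is to which non-failure samples were drawn.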

2. Materials and Methods

2.1. Dataset Description and Data Preprocessing
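The preprocessing step removes outliers from the original dataset, but the removal criterion is not restated under this heading. Purely as an assumption for illustration, the sketch below applies Tukey's 1.5 × IQR fences to each listed feature and drops any row that falls outside them; the rule actually used in the article may differ, and the column name `torque` in the usage is hypothetical.

```python
import statistics
from typing import Dict, List, Tuple


def iqr_bounds(values: List[float], k: float = 1.5) -> Tuple[float, float]:
    """Tukey fences: (Q1 - k*IQR, Q3 + k*IQR)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # three quartile cut points
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def remove_outliers(rows: List[Dict[str, float]], columns: List[str],
                    k: float = 1.5) -> List[Dict[str, float]]:
    """Drop every row whose value in any listed column lies outside
    that column's fences (fences computed once on the full input)."""
    bounds = {c: iqr_bounds([r[c] for r in rows], k) for c in columns}
    return [r for r in rows
            if all(bounds[c][0] <= r[c] <= bounds[c][1] for c in columns)]


# Usage: one extreme torque value among otherwise similar readings.
rows = [{"torque": v} for v in [40, 41, 42, 43, 44, 45, 46, 200]]
kept = remove_outliers(rows, ["torque"])
print(len(kept), max(r["torque"] for r in kept))
```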

2.2. Feature Normalization
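The two normalization techniques named in the contributions follow standard formulas: min-max scaling maps a feature to [0, 1] with x' = (x - min) / (max - min), and z-score standardization uses z = (x - μ) / σ. The sketch below applies both to a single feature column; the handling of constant features and the choice of the population standard deviation are assumptions, since the article's exact settings are not given here.

```python
import statistics
from typing import List


def min_max(values: List[float]) -> List[float]:
    """Rescale to [0, 1]: x' = (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]  # constant feature: map everything to 0
    return [(v - lo) / span for v in values]


def z_score(values: List[float]) -> List[float]:
    """Standardize: z = (x - mean) / std, using the population std."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


print(min_max([2, 4, 6]))
print(z_score([2, 4, 6]))
```

In a train/test setting, the minimum, maximum, mean, and standard deviation would normally be computed on the training portion only and then reused to transform the test portion.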

2.3. Shallow Classification Algorithms

2.3.1. Decision Trees (DTs)
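A decision tree grows by repeatedly choosing the feature threshold that best separates the classes. The split criterion used by the DT implementation in this study is not stated here; C4.5 [28] uses the gain ratio, whereas this minimal sketch shows plain entropy-based information gain for a single numeric feature, with candidate thresholds at midpoints between consecutive distinct values.

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def entropy(labels: Sequence[int]) -> float:
    """Shannon entropy (bits) of a label list."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_threshold(x: Sequence[float], y: Sequence[int]) -> Tuple[float, float]:
    """Return (threshold, information gain) of the best split x <= t."""
    parent = entropy(y)
    n = len(y)
    best = (float("nan"), 0.0)
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2  # midpoint between consecutive distinct values
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        child = len(left) / n * entropy(left) + len(right) / n * entropy(right)
        gain = parent - child
        if gain > best[1]:
            best = (t, gain)
    return best


# Toy feature where values above the gap belong to the failure class (1).
print(best_threshold([1, 2, 3, 10, 11, 12], [0, 0, 0, 1, 1, 1]))
```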

2.3.2. Support Vector Machines (SVMs)
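An SVM seeks the hyperplane w · x + b = 0 with the largest margin between the classes, trading margin width against hinge-loss violations. The kernel and hyperparameters used in this study are not given in this section, so the sketch below is only an illustration: a linear soft-margin SVM trained by full-batch sub-gradient descent on the primal objective, with labels +1 (failure) and -1 (no failure) on hypothetical toy points. Library solvers typically use different optimization methods.

```python
from typing import List, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, lr: float = 0.1,
                     epochs: int = 500) -> Tuple[List[float], float]:
    """Minimize lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * (w . x_i + b))
    by full-batch sub-gradient descent; y_i in {-1, +1}; b is not regularized."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # hinge term active for this sample
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if dot(w, x) + b >= 0 else -1


# Hypothetical, linearly separable toy data: +1 = failure, -1 = no failure.
X = [(0, 0), (0, 1), (1, 0), (3, 3), (3, 4), (4, 3)]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
print([predict(w, b, x) for x in X])
```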

2.3.3. k-Nearest Neighbors (k-NNs)
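k-NN assigns a sample the majority label among its k closest training samples. The value of k and the distance metric used in this study are not given here, so this minimal sketch assumes Euclidean distance and k = 3, with hypothetical two-feature points.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[Hashable],
                query: Sequence[float], k: int = 3) -> Hashable:
    """Majority vote among the k training samples nearest to `query`.
    Vote ties go to the label whose first occurrence is nearest, because
    Counter.most_common keeps insertion order for equal counts."""
    dists = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Hypothetical normalized features; label 1 = failure, 0 = no failure.
train_X = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.15), (0.9, 0.8), (0.8, 0.9)]
train_y = [0, 0, 0, 1, 1]
print(knn_predict(train_X, train_y, (0.85, 0.85)))
print(knn_predict(train_X, train_y, (0.1, 0.1)))
```

Because the vote depends on raw Euclidean distances, a feature on a large numeric scale (for example, rotational speed in rpm against torque in Nm) can dominate the neighbor search unless the features are normalized, which is one reason normalization can change k-NN results.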

2.4. Deep Learning/CNN Architectures for Classification
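All three architectures are built from the same one-dimensional building blocks: convolution, a ReLU nonlinearity, and pooling. The sketch below shows these operations for a single input channel and a single filter; real layers apply many filters over many channels, and the kernel values here are hypothetical rather than learned.

```python
from typing import List, Sequence


def conv1d(x: Sequence[float], kernel: Sequence[float], bias: float = 0.0,
           stride: int = 1) -> List[float]:
    """'Valid' 1D convolution as used in CNNs (cross-correlation, no kernel flip)."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(0, len(x) - k + 1, stride)]


def relu(x: Sequence[float]) -> List[float]:
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in x]


def max_pool1d(x: Sequence[float], size: int = 2, stride: int = 2) -> List[float]:
    """Maximum over each window of `size` samples, moving by `stride`."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, stride)]


signal = [0, 1, 3, 2, 5, 4]
feature_map = conv1d(signal, [-1, 1])  # a difference filter
print(feature_map, relu(feature_map), max_pool1d(relu(feature_map)))
```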

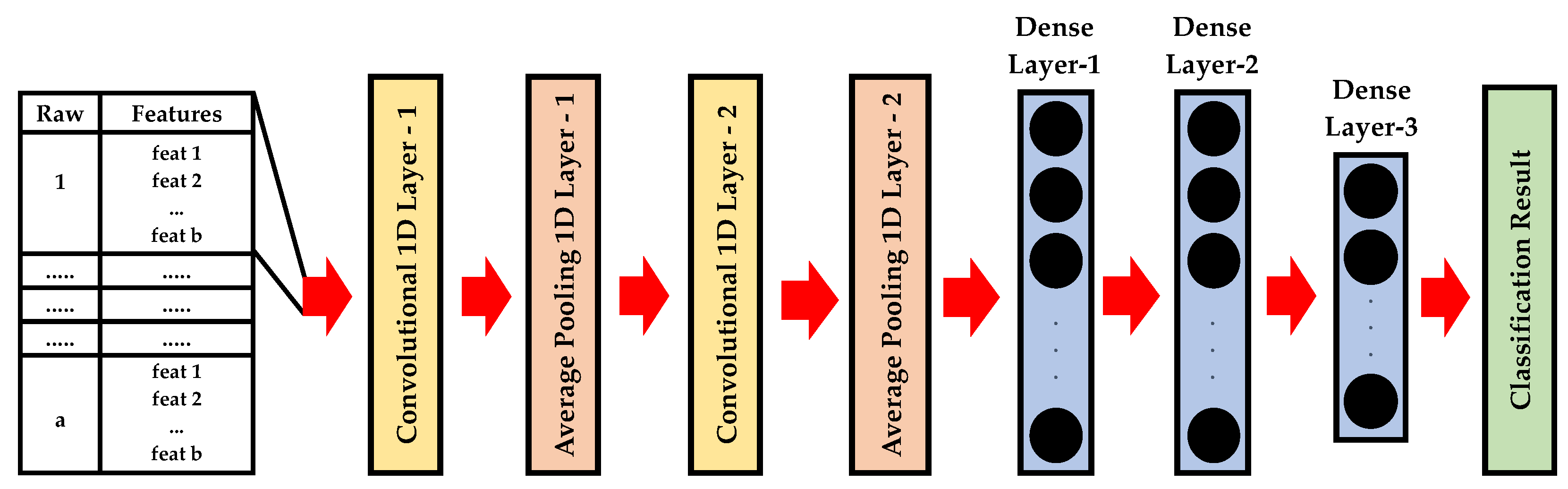

2.4.1. 1D-LeNet
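A LeNet-5-style network alternates two convolution and pooling stages (6 and 16 filters, kernel 5) and ends with fully connected layers of 120 and 84 units. The layer settings of the 1D-LeNet used in this study are not listed here, so the sketch below is hypothetical: it assumes an input length of 32 (mirroring LeNet-5's 32 × 32 input along one axis), max pooling in place of LeNet-5's original subsampling layers, and two output classes, and it tabulates output sizes and trainable parameters.

```python
from typing import List, Tuple


def out_len(length: int, k: int, stride: int = 1) -> int:
    """Output length of a 'valid' convolution or pooling layer."""
    return (length - k) // stride + 1


def lenet1d_summary(input_len: int = 32,
                    n_classes: int = 2) -> List[Tuple[str, int, int]]:
    """(layer, output length or units, trainable parameters) for a
    hypothetical 1D LeNet-5-style stack with one input channel."""
    rows, c_in, length = [], 1, input_len
    for c_out in (6, 16):  # conv(k=5) followed by pool(2), twice
        length = out_len(length, 5)
        rows.append((f"conv k=5, {c_out} filters", length, 5 * c_in * c_out + c_out))
        length = out_len(length, 2, stride=2)
        rows.append(("max pool 2", length, 0))
        c_in = c_out
    n_in = c_in * length  # flatten: channels x length
    for n_out in (120, 84, n_classes):  # fully connected layers
        rows.append((f"dense {n_out}", n_out, n_in * n_out + n_out))
        n_in = n_out
    return rows


for row in lenet1d_summary():
    print(row)
print("total parameters:", sum(r[2] for r in lenet1d_summary()))
```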

2.4.2. 1D-AlexNet
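AlexNet's feature extractor starts with a wide, strided convolution (kernel 11, stride 4), uses overlapping max pooling (window 3, stride 2), and follows with 5- and 3-wide convolutions. Each layer's output length is floor((L + 2p - k) / s) + 1. The sketch traces these lengths along one axis using the original AlexNet settings; the input length of 227 is a hypothetical choice that reproduces the original 55, 27, 13, 6 feature-map sizes, and the 1D-AlexNet in this study may use different values.

```python
from typing import List, Tuple


def out_len(length: int, k: int, stride: int = 1, pad: int = 0) -> int:
    """Output length of a convolution or pooling layer along one axis."""
    return (length + 2 * pad - k) // stride + 1


# (name, kernel, stride, padding) of the original AlexNet feature extractor,
# applied along a single axis; filter counts appear in the names.
ALEXNET_1D: List[Tuple[str, int, int, int]] = [
    ("conv1, 96 filters", 11, 4, 0),
    ("pool1", 3, 2, 0),  # overlapping pooling: window 3, stride 2
    ("conv2, 256 filters", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3, 384 filters", 3, 1, 1),
    ("conv4, 384 filters", 3, 1, 1),
    ("conv5, 256 filters", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def trace(length: int, layers=ALEXNET_1D) -> List[int]:
    """Return the output length after each layer; fail if it drops below 1."""
    lengths = []
    for name, k, s, p in layers:
        length = out_len(length, k, s, p)
        if length < 1:
            raise ValueError(f"input too short: nothing left after {name}")
        lengths.append(length)
    return lengths


print(trace(227))  # [55, 27, 27, 13, 13, 13, 13, 6]
```

The same trace shows why these settings cannot be applied unchanged to short inputs: with only a handful of tabular features per sample, the first strided convolution already leaves nothing, so a 1D adaptation has to shrink kernels and strides or pad the input.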

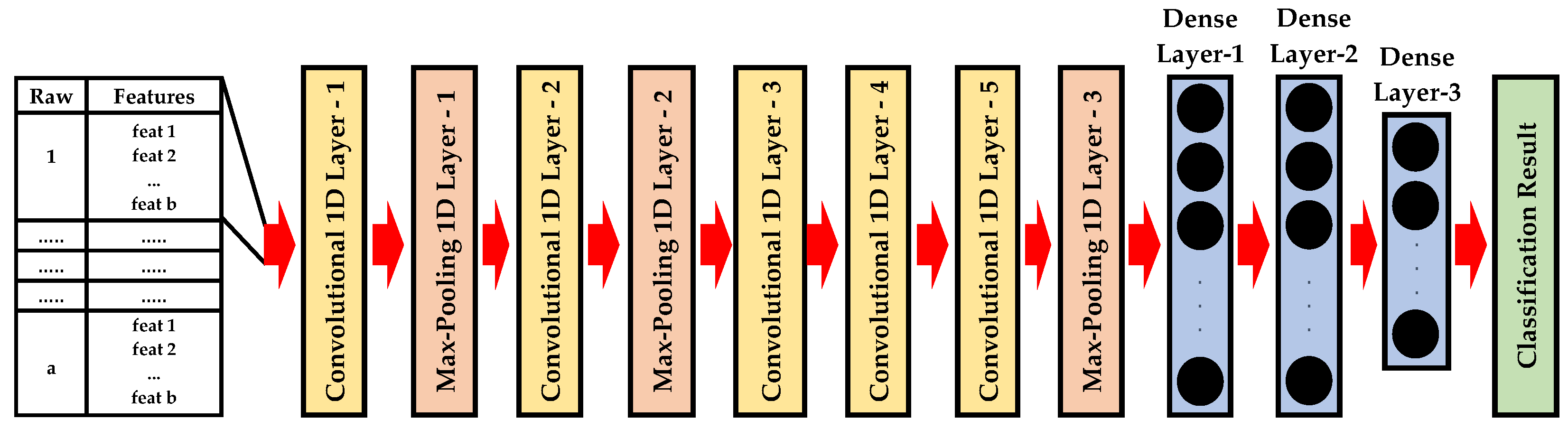

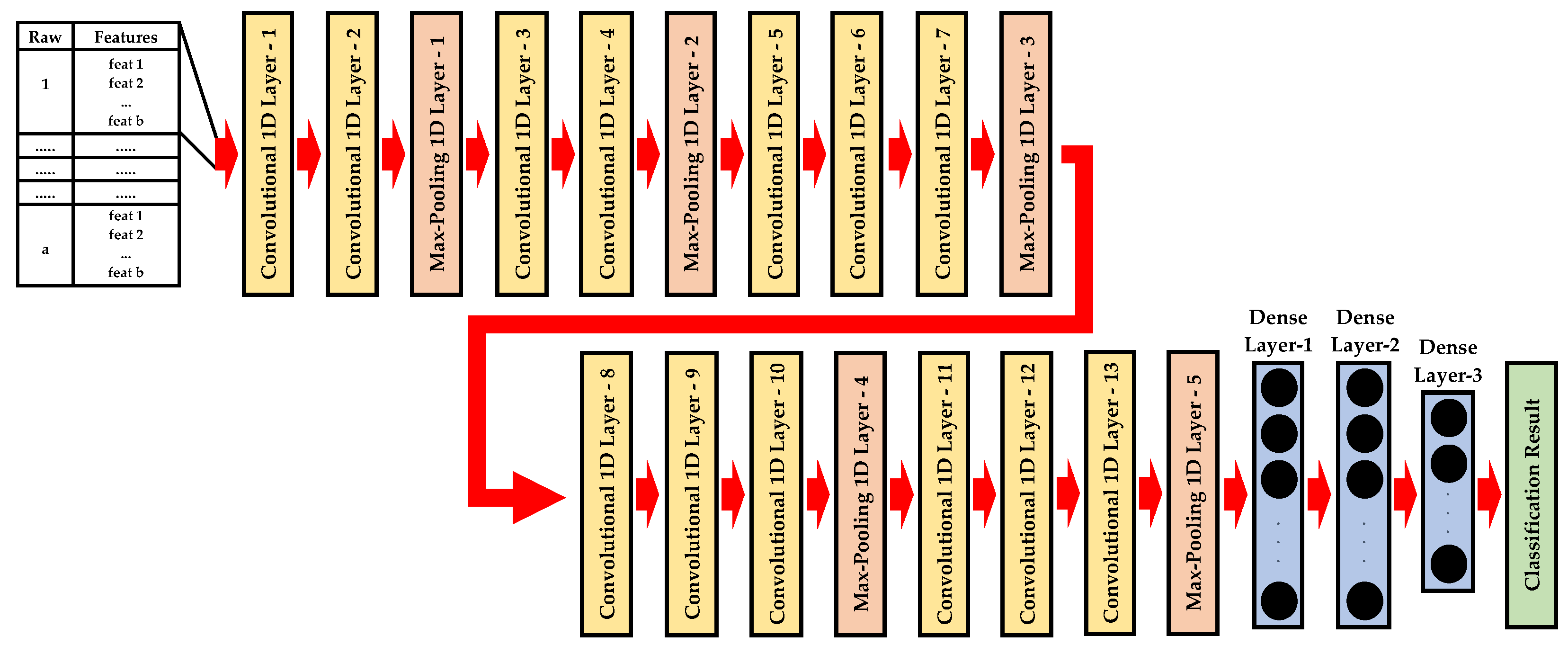

2.4.3. 1D-VGG16
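VGG16 builds depth from small kernels: 13 convolutions of width 3 in five blocks (2, 2, 3, 3, 3 layers), each block followed by a stride-2 max pool, plus three fully connected layers. Stacking 3-wide kernels widens the receptive field step by step (two give 5, three give 7) while adding a nonlinearity after every layer. The sketch computes the receptive field of this convolutional stack along one axis, assuming the original VGG16 kernel and pooling sizes; the fully connected layers do not affect it and are omitted, and the settings of the 1D-VGG16 in this study are not listed here.

```python
from typing import List, Tuple

# VGG16 convolutional part: five blocks of 3-wide convolutions,
# each block followed by a 2-wide, stride-2 max pool.
VGG16_BLOCKS = [2, 2, 3, 3, 3]


def receptive_field(layers: List[Tuple[int, int]]) -> int:
    """layers: (kernel, stride) pairs. Returns how many input samples
    influence one output position after the whole stack."""
    rf, jump = 1, 1
    for k, s in layers:
        rf += (k - 1) * jump  # widen by (k - 1) steps of the current input spacing
        jump *= s             # spacing, in input samples, between adjacent outputs
    return rf


def vgg16_layers() -> List[Tuple[int, int]]:
    layers: List[Tuple[int, int]] = []
    for n_conv in VGG16_BLOCKS:
        layers += [(3, 1)] * n_conv  # 3-wide convolutions, stride 1
        layers.append((2, 2))        # max pool, window 2, stride 2
    return layers


print(receptive_field([(3, 1)] * 2), receptive_field([(3, 1)] * 3))
print(receptive_field(vgg16_layers()))
```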

2.5. Performance Metrics

3. Experimental Results

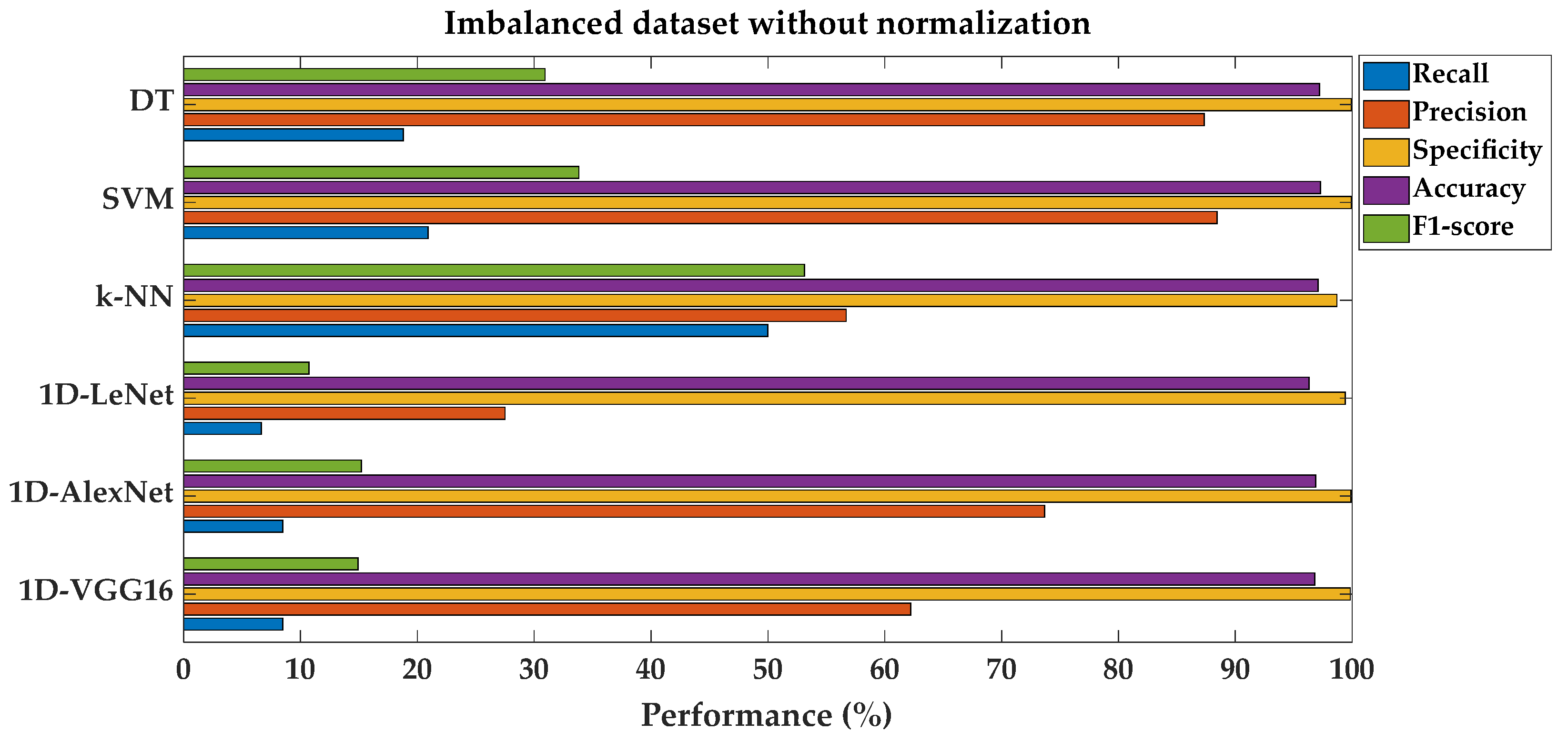

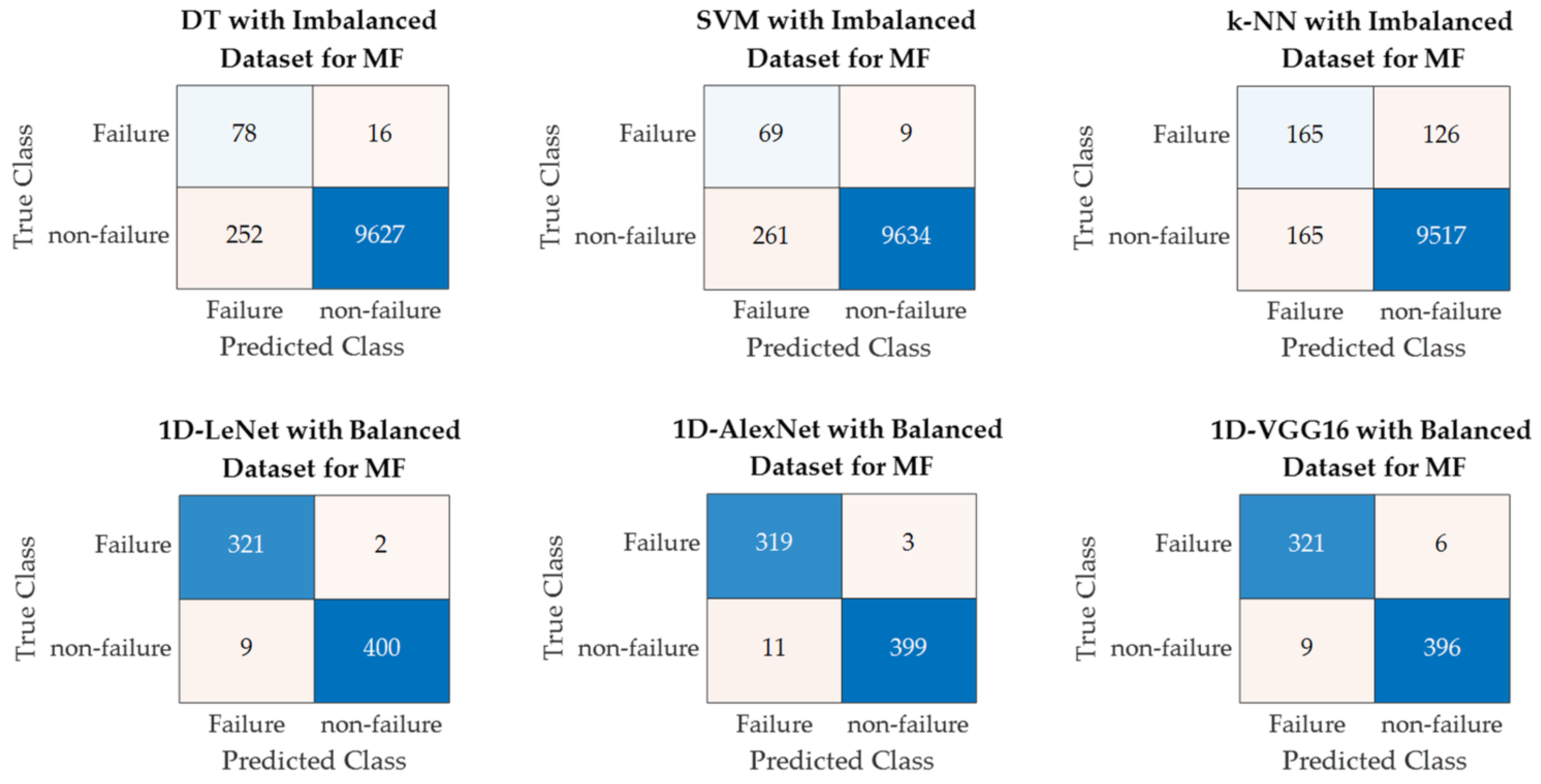

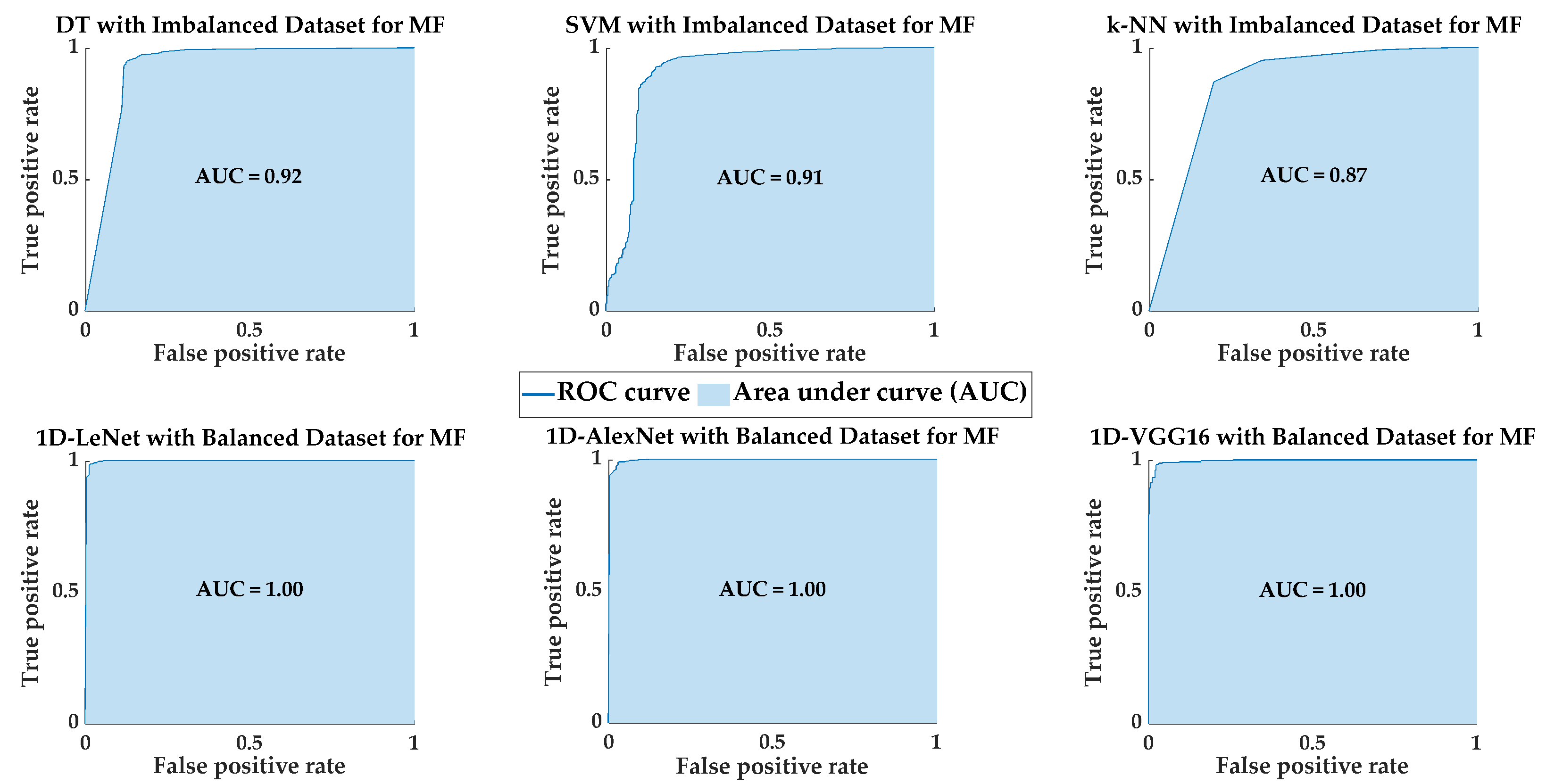

3.1. Performance Metrics for MF Class Using Imbalanced Dataset without Feature Normalization

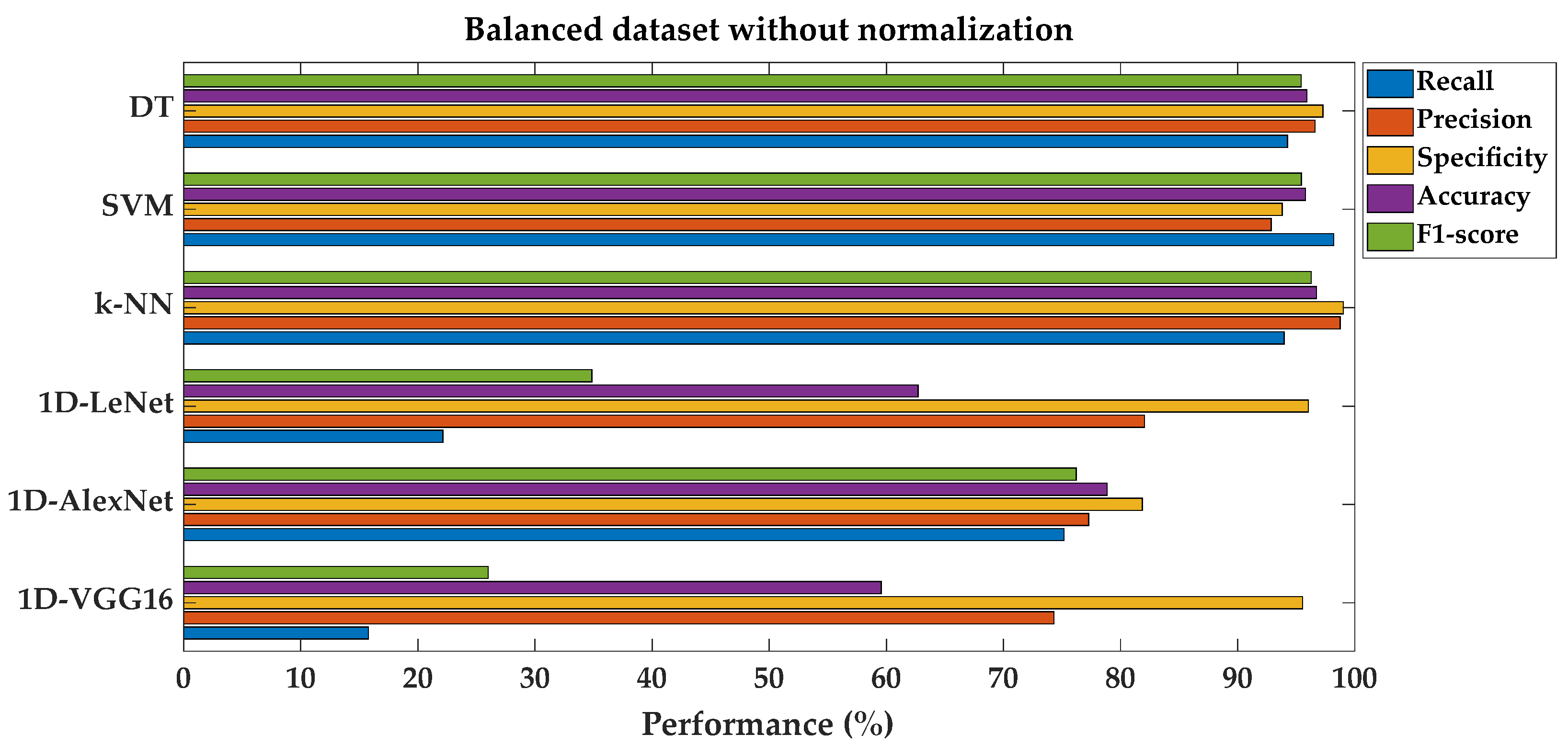

3.2. Performance Metrics for MF Class Using Balanced Dataset without Feature Normalization

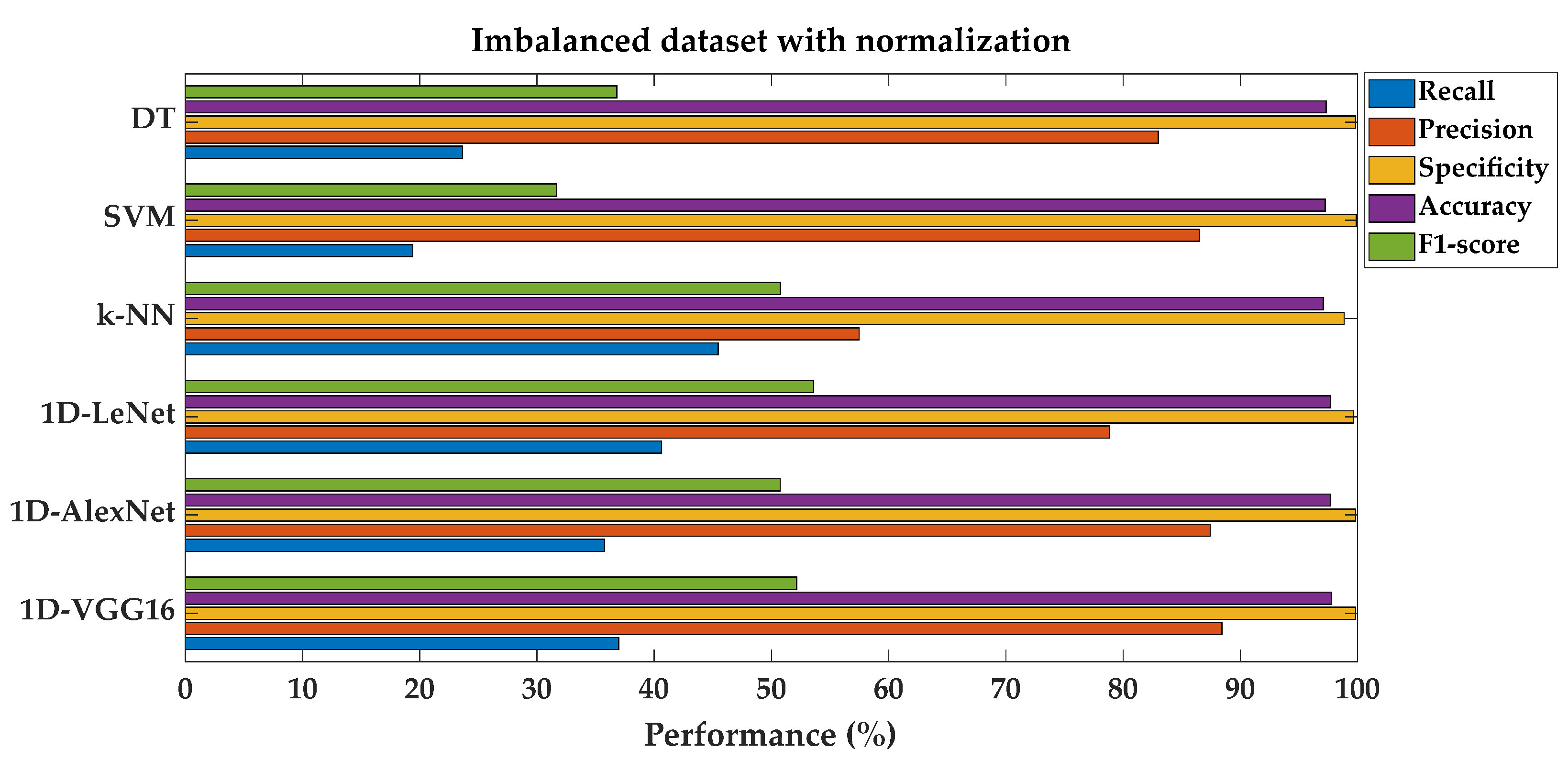

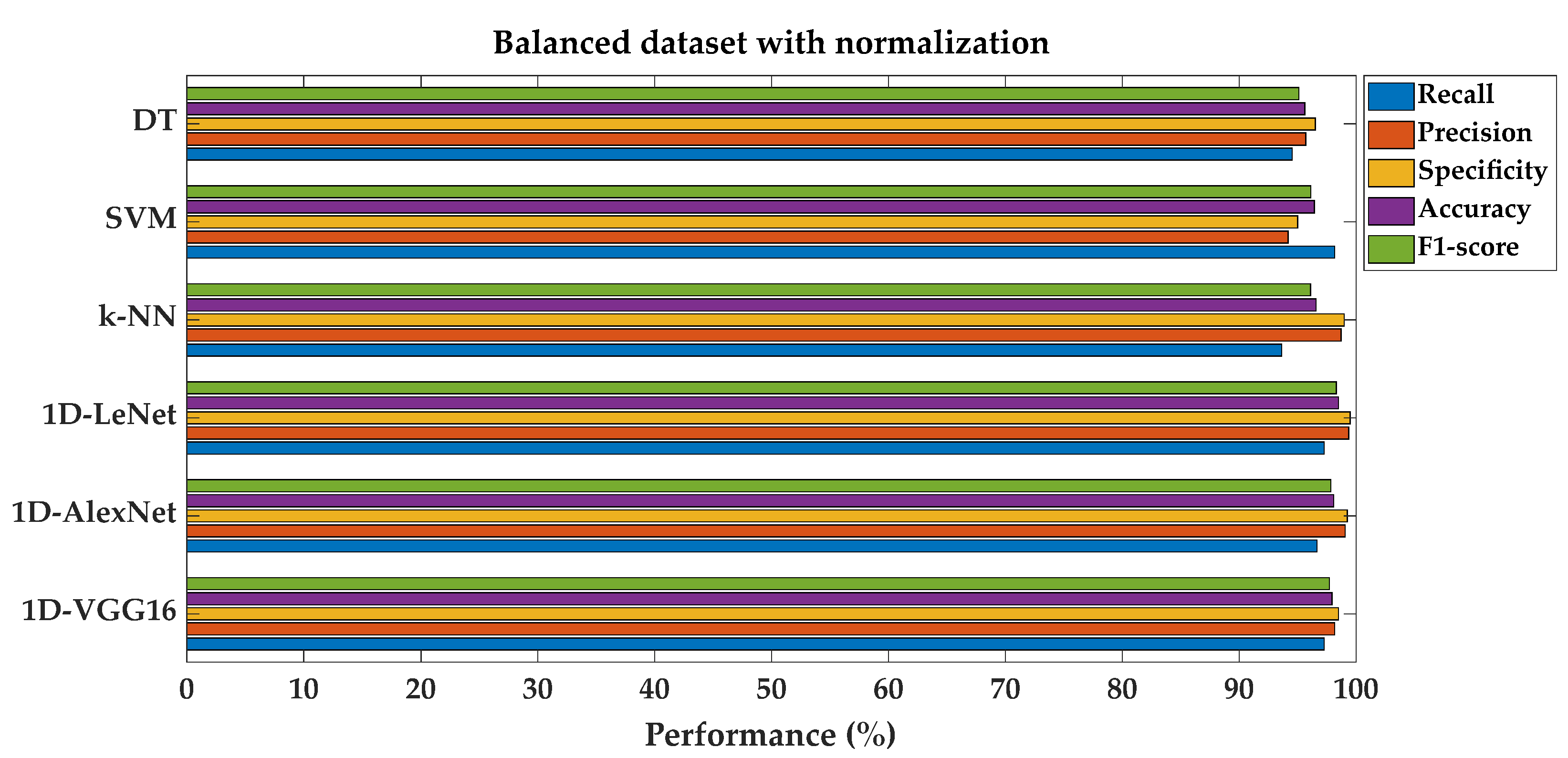

3.3. Performance Metrics for MF Class Using Imbalanced and Balanced Datasets with Feature Normalization

3.4. Performance Metrics for Fault Type Classification Using Balanced Dataset without and with Feature Normalization

4. Discussion

| Literature | Classification Algorithm | Dataset Type | Fault Class | Acc (%) | F1 (%) |
|---|---|---|---|---|---|
| Matzka [23] | Bagged Trees | Imbalanced | MF | 98.34 | 77.98 |
| Pastorino and Biswas [48] | Data-Blind Machine Learning | Imbalanced | MF | 97.30 | - |
| Torcianti and Matzka [49] | Random Undersampling Boosting Trees | Imbalanced | MF | 92.74 | 45.90 |
| Diao et al. [57] | Density-Based Spatial Clustering of Applications with Noise; Clustering by Fast Search and Find of Density Peaks | Imbalanced | MF | - | 63.00 |
| Mota et al. [50] | Gradient Boosting | Imbalanced | MF | 94.55 | 49.00 |
| Vuttipittayamongkol and Arreeras [53] | Decision Tree | Imbalanced | MF | - | 77.66 |
| de Campos Souza and Lughofer [58] | Evolving Fuzzy Neural Classifier with Expert Rules | Imbalanced | MF | 97.30 | - |
| Sharma et al. [55] | Random Forest | Imbalanced | MF | 98.40 | - |
| Iantovics and Enăchescu [56] | Binary Logistic Regression | Imbalanced | MF | 97.10 | 44.07 |
| Vandereycken and Voorhaar [51] | Extreme Gradient Boosting | Imbalanced | MF | 95.74 | - |
| Chen et al. [52] | Combination of Synthetic Minority Oversampling Technique for Nominal and Continuous (SMOTE-NC), Conditional Tabular Generative Adversarial Network (CTGAN), and Categorical Boosting (CatBoost) | Imbalanced | MF | 88.83 | - |
| Harichandran et al. [54] | Hybrid Unsupervised and Supervised Machine Learning | Imbalanced | MF | 98.46 | 78.80 |
| Kong et al. [59] | Data Filling Approach Based on Probability Analysis in Incomplete Soft Sets | Imbalanced | MF | 83.74 | - |
| In this study | Decision Trees | Imbalanced | MF | 97.31 | 36.79 |
| In this study | Support Vector Machines | Imbalanced | MF | 97.29 | 33.82 |
| In this study | k-Nearest Neighbors | Imbalanced | MF | 97.08 | 53.14 |
| In this study | 1D-LeNet | Balanced | MF | 98.50 | 98.32 |
| In this study | 1D-AlexNet | Balanced | MF | 98.09 | 97.85 |
| In this study | 1D-VGG16 | Balanced | MF | 97.95 | 97.72 |

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Vermesan, O.; Friess, P. (Eds.) Internet of Things: Converging Technologies for Smart Environments and Integrated Ecosystems; River Publishers: Aalborg, Denmark, 2013.
2. Guzmán, V.E.; Muschard, B.; Gerolamo, M.; Kohl, H.; Rozenfeld, H. Characteristics and Skills of Leadership in the Context of Industry 4.0. Procedia Manuf. 2020, 43, 543–550.
3. Cao, Q.; Zanni-Merk, C.; Samet, A.; Reich, C.; De Beuvron, F.D.B.; Beckmann, A.; Giannetti, C. KSPMI: A knowledge-based system for predictive maintenance in industry 4.0. Robot. Comput. Integr. Manuf. 2022, 74, 102281.
4. Li, Z. Deep Learning Driven Approaches for Predictive Maintenance: A Framework of Intelligent Fault Diagnosis and Prognosis in the Industry 4.0 Era. Ph.D. Thesis, Norwegian University of Science and Technology, Trondheim, Norway, 2018; p. 132.
5. Levitt, J. Complete Guide to Preventive and Predictive Maintenance; Industrial Press Inc.: South Norwalk, CT, USA, 2003.
6. Achouch, M.; Dimitrova, M.; Dhouib, R.; Ibrahim, H.; Adda, M.; Sattarpanah Karganroudi, S.; Ziane, K.; Aminzadeh, A. Predictive Maintenance and Fault Monitoring Enabled by Machine Learning: Experimental Analysis of a TA-48 Multistage Centrifugal Plant Compressor. Appl. Sci. 2023, 13, 1790.
7. Velmurugan, R.S.; Dhingra, T. Maintenance strategy selection and its impact in maintenance function: A conceptual framework. Int. J. Oper. Prod. Manag. 2015, 35, 1622–1661.
8. Kiangala, K.S.; Wang, Z. Initiating predictive maintenance for a conveyor motor in a bottling plant using industry 4.0 concepts. Int. J. Adv. Manuf. Technol. 2018, 97, 3251–3271.
9. Lee, J. Machine performance monitoring and proactive maintenance in computer-integrated manufacturing: Review and perspective. Int. J. Comput. Integr. Manuf. 1995, 8, 370–380.
10. Li, D.; Landström, A.; Fast-Berglund, Å.; Almström, P. Human-centred dissemination of data, information and knowledge in industry 4.0. Procedia CIRP 2019, 84, 380–386.
11. Baccarini, L.M.R.; e Silva, V.V.R.; de Menezes, B.R.; Caminhas, W.M. SVM practical industrial application for mechanical faults diagnostic. Expert Syst. Appl. 2011, 38, 6980–6984.
12. Zhang, Z.; Wang, Y.; Wang, K. Fault diagnosis and prognosis using wavelet packet decomposition, Fourier transform and artificial neural network. J. Intell. Manuf. 2013, 24, 1213–1227.
13. Xiong, G.; Shi, D.; Chen, J.; Zhu, L.; Duan, X. Divisional fault diagnosis of large-scale power systems based on radial basis function neural network and fuzzy integral. Electr. Power Syst. Res. 2013, 105, 9–19.
14. Muralidharan, V.; Sugumaran, V. Feature extraction using wavelets and classification through decision tree algorithm for fault diagnosis of mono-block centrifugal pump. Measurement 2013, 46, 353–359.
15. Phillips, J.; Cripps, E.; Lau, J.W.; Hodkiewicz, M.R. Classifying machinery condition using oil samples and binary logistic regression. Mech. Syst. Signal Process. 2015, 60, 316–325.
16. Konar, P.; Chattopadhyay, P. Bearing fault detection of induction motor using wavelet and Support Vector Machines (SVMs). Appl. Soft Comput. 2011, 11, 4203–4211.
17. Rai, A.; Upadhyay, S.H. Intelligent bearing performance degradation assessment and remaining useful life prediction based on self-organising map and support vector regression. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2018, 232, 1118–1132.
18. Orrù, P.F.; Zoccheddu, A.; Sassu, L.; Mattia, C.; Cozza, R.; Arena, S. Machine Learning Approach Using MLP and SVM Algorithms for the Fault Prediction of a Centrifugal Pump in the Oil and Gas Industry. Sustainability 2020, 12, 4776.
19. Pinedo-Sanchez, L.A.; Mercado-Ravell, D.A.; Carballo-Monsivais, C.A. Vibration analysis in bearings for failure prevention using CNN. J. Braz. Soc. Mech. Sci. Eng. 2020, 42, 628.
20. Deng, H.; Zhang, W.X.; Liang, Z.F. Application of BP Neural Network and Convolutional Neural Network (CNN) in Bearing Fault Diagnosis. Mater. Sci. Eng. 2021, 1043, 42–46.
21. Gao, J.; Han, H.; Ren, Z.; Fan, Y. Fault diagnosis for building chillers based on data self-production and deep convolutional neural network. J. Build. Eng. 2021, 34, 102043.
22. Zheng, J.; Liu, Y.; Ge, Z. Dynamic Ensemble Selection Based Improved Random Forests for Fault Classification in Industrial Processes. IFAC J. Syst. Control 2022, 20, 100189.
23. Matzka, S. Explainable artificial intelligence for predictive maintenance applications. In Proceedings of the Third International Conference on Artificial Intelligence for Industries, Irvine, CA, USA, 21–23 September 2020; pp. 69–74.
24. Matzka, S. AI4I 2020 Predictive Maintenance Dataset. UCI Machine Learning Repository. 2020. Available online: www.explorate.ai/dataset/predictiveMaintenanceDataset.csv (accessed on 22 December 2021).
25. Azeri, N.; Hioual, O.; Hioual, O. Towards an Approach for Modeling and Architecting of Self-Adaptive Cyber-Physical Systems. In Proceedings of the 2022 4th International Conference on Pattern Analysis and Intelligent Systems (PAIS), Oum El Bouaghi, Algeria, 12–13 October 2022; pp. 1–7.
26. Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, 3rd ed.; Morgan Kaufmann Publisher: Waltham, MA, USA, 2011.
27. Bhanja, S.; Das, A. Impact of Data Normalization on Deep Neural Network for Time Series Forecasting. arXiv 2018, arXiv:1812.05519.
28. Quinlan, J.R. C4.5: Programs for Machine Learning; Elsevier: Amsterdam, The Netherlands, 2014.
29. Chien, C.F.; Chen, L.F. Data mining to improve personnel selection and enhance human capital: A case study in high-technology industry. Expert Syst. Appl. 2008, 34, 280–290.
30. Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.
31. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.
32. Kim, S.H.; Choi, H.L. Convolutional Neural Network for Monocular Vision-based Multi-target Tracking. Int. J. Control Autom. Syst. 2019, 17, 2284–2296.
33. Lee, S.J.; Choi, H.; Hwang, S.S. Real-time Depth Estimation Using Recurrent CNN with Sparse Depth Cues for SLAM System. Int. J. Control Autom. Syst. 2020, 18, 206–216.
34. Deng, L. Deep Learning: Methods and Applications. Found. Trends Signal Process. 2013, 7, 197–387.
35. Song, H.A.; Lee, S.Y. Hierarchical Representation Using NMF. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2013.
36. Abdeljaber, O.; Sassi, S.; Avci, O.; Kiranyaz, S.; Ibrahim, A.A.; Gabbouj, M. Fault detection and severity identification of ball bearings by online condition monitoring. IEEE Trans. Ind. Electron. 2018, 66, 8136–8147.
37. Le Cun, Y.; Jackel, L.D.; Boser, B.; Denker, J.S.; Graf, H.P.; Guyon, I.; Henderson, D.; Howard, R.E.; Hubbard, W. Handwritten digit recognition: Applications of neural network chips and automatic learning. IEEE Commun. Mag. 1989, 27, 41–46.
38. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.
39. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
40. Wu, Y.; Yang, F.; Liu, Y.; Zha, X.; Yuan, S. A comparison of 1-D and 2-D deep convolutional neural networks in ECG classification. arXiv 2018, arXiv:1810.07088.
41. Xie, S.; Ren, G.; Zhu, J. Application of a new one-dimensional deep convolutional neural network for intelligent fault diagnosis of rolling bearings. Sci. Prog. 2020, 103, 36850420951394.
42. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
43. Eren, L. Bearing fault detection by one-dimensional convolutional neural networks. Math. Probl. Eng. 2017, 2017, 8617315.
44. Pietikäinen, M. Local Binary Patterns. Scholarpedia 2010, 5, 9775.
45. Rodriguez, J.D.; Perez, A.; Lozano, J.A. Sensitivity Analysis of k-Fold Cross Validation in Prediction Error Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 569–575.
46. Batista, G.E.; Prati, R.C.; Monard, M.C. A study of the behavior of several methods for balancing machine learning training data. ACM SIGKDD Explor. Newsl. 2004, 6, 20–29.
47. Hossin, M.; Sulaiman, M. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1.
48. Pastorino, J.; Biswas, A.K. Data-Blind ML: Building privacy-aware machine learning models without direct data access. In Proceedings of the IEEE Fourth International Conference on Artificial Intelligence and Knowledge Engineering, Laguna Hills, CA, USA, 1–3 December 2021; pp. 95–98.
49. Torcianti, A.; Matzka, S. Explainable Artificial Intelligence for Predictive Maintenance Applications using a Local Surrogate Model. In Proceedings of the 4th International Conference on Artificial Intelligence for Industries, Laguna Hills, CA, USA, 20–22 September 2021; pp. 86–88.
50. Mota, B.; Faria, P.; Ramos, C. Predictive Maintenance for Maintenance-Effective Manufacturing Using Machine Learning Approaches. In Proceedings of the 17th International Conference on Soft Computing Models in Industrial and Environmental Applications, Salamanca, Spain, 5–7 September 2022; Lecture Notes in Networks and Systems. Volume 531, pp. 13–22.
51. Vandereycken, B.; Voorhaar, R. TTML: Tensor trains for general supervised machine learning. arXiv 2022, arXiv:2203.04352.
52. Chen, C.-H.; Tsung, C.-K.; Yu, S.-S. Designing a Hybrid Equipment-Failure Diagnosis Mechanism under Mixed-Type Data with Limited Failure Samples. Appl. Sci. 2022, 12, 9286.
53. Vuttipittayamongkol, P.; Arreeras, T. Data-driven Industrial Machine Failure Detection in Imbalanced Environments. In Proceedings of the IEEE International Conference on Industrial Engineering and Engineering Management, Kuala Lumpur, Malaysia, 7–10 December 2022; pp. 1224–1227.
54. Harichandran, A.; Raphael, B.; Mukherjee, A. Equipment Activity Recognition and Early Fault Detection in Automated Construction through a Hybrid Machine Learning Framework. Comput. Aided Civ. Infrastruct. Eng. 2022, 38, 253–268.
55. Sharma, N.; Sidana, T.; Singhal, S.; Jindal, S. Predictive Maintenance: Comparative Study of Machine Learning Algorithms for Fault Diagnosis. In Proceedings of the International Conference on Innovative Computing & Communication (ICICC), Delhi, India, 19–20 February 2022.
56. Iantovics, L.B.; Enăchescu, C. Method for Data Quality Assessment of Synthetic Industrial Data. Sensors 2022, 22, 1608.
57. Diao, L.; Deng, M.; Gao, J. Clustering by Constructing Hyper-Planes. IEEE Access 2021, 9, 70167–70181.
58. Souza, P.V.C.; Lughofer, E. EFNC-Exp: An evolving fuzzy neural classifier integrating expert rules and uncertainty. Fuzzy Sets Syst. 2023, 466, 108438.
59. Kong, Z.; Lu, Q.; Wang, L.; Guo, G. A Simplified Approach for Data Filling in Incomplete Soft Sets. Expert Syst. Appl. 2023, 213, 119248.

| Features | Description |

|---|---|

| Product ID | Product Quality Variant (L, M, or H) |

| Air Temperature [K] | Atmospheric/Ambient Temperature |

| Process Temperature [K] | Operational Temperature |

| Rotational Speed [rpm] | Speed of the machine |

| Torque [Nm] | Torque of the machine |

| Tool wear [min] | The quality variables of tool wear for the used tool in the process |

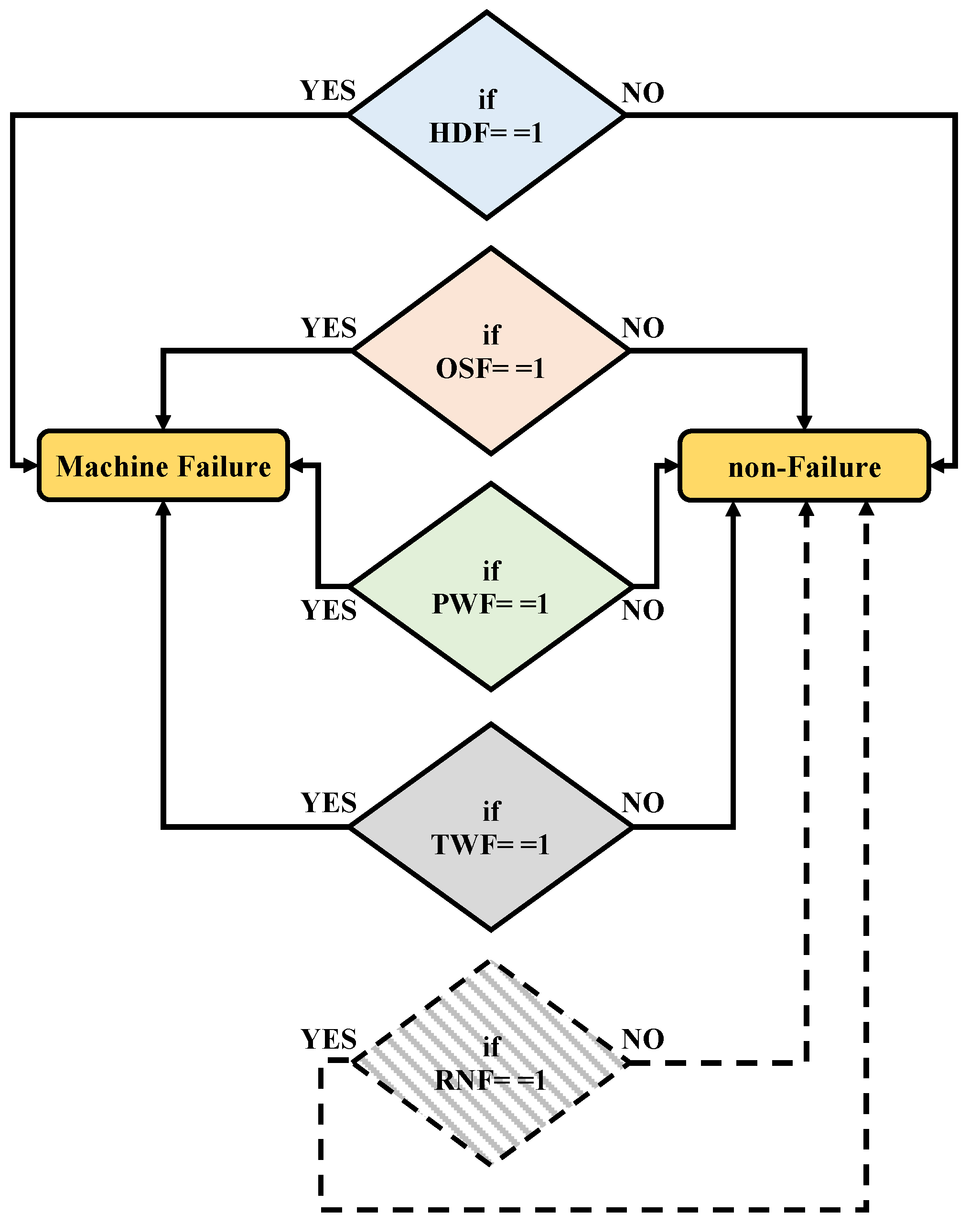

| Machine Failure | Machine failure:1, non-Failure:0 |

| Fault Types | Heat dissipation failure (HDF) |

| Overstrain failure (OSF) | |

| Power failure (PWF) | |

| Tool wear failure (TWF) | |

| Random failures (RNF) |
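The features above can be turned into model inputs by reading the CSV, encoding the product quality variant numerically, and taking the machine-failure flag as the label. This is a sketch under assumptions: the column names are those believed to appear in the public AI4I 2020 CSV and should be checked against the downloaded file, the variant is assumed to be the first letter of the Product ID, the ordinal L/M/H code is one hypothetical encoding choice, and the two sample rows are illustrative rather than quoted from the dataset.

```python
import csv
import io
from typing import List, Tuple

# Assumed column names of the public AI4I 2020 CSV; verify against the file.
FEATURES = ["Air temperature [K]", "Process temperature [K]",
            "Rotational speed [rpm]", "Torque [Nm]", "Tool wear [min]"]
VARIANT_CODE = {"L": 0, "M": 1, "H": 2}  # hypothetical ordinal encoding


def load_rows(text: str) -> Tuple[List[List[float]], List[int]]:
    """Return feature vectors [variant code, five sensor features] and MF labels."""
    X, y = [], []
    for row in csv.DictReader(io.StringIO(text)):
        variant = row["Product ID"][0]  # assumed format, e.g. "M14860" -> "M"
        X.append([float(VARIANT_CODE[variant])] + [float(row[c]) for c in FEATURES])
        y.append(int(row["Machine failure"]))
    return X, y


sample = (
    "UDI,Product ID,Type,Air temperature [K],Process temperature [K],"
    "Rotational speed [rpm],Torque [Nm],Tool wear [min],Machine failure,"
    "TWF,HDF,PWF,OSF,RNF\n"
    "1,M14860,M,298.1,308.6,1551,42.8,0,0,0,0,0,0,0\n"
    "2,L47181,L,298.2,308.7,1408,46.3,3,1,0,1,0,0,0\n"
)
X, y = load_rows(sample)
print(X, y)
```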

| Actual Class | Predicted Positive | Predicted Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |
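The performance metrics reported in the result tables follow from the four confusion-matrix counts: recall = TP/(TP + FN), precision = TP/(TP + FP), specificity = TN/(TN + FP), accuracy = (TP + TN)/(TP + FP + FN + TN), and F1 = 2 · precision · recall/(precision + recall). The sketch computes them as percentages. The example counts (321 of 330 failures and 400 of 402 non-failures correctly classified) are back-calculated from the reported 1D-LeNet scores on the normalized, balanced MF set; they are not reported directly.

```python
from typing import Dict


def metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Recall, precision, specificity, accuracy, and F1 as percentages (2 d.p.)."""
    rec = tp / (tp + fn)                      # recall / sensitivity
    pre = tp / (tp + fp) if tp + fp else 0.0  # precision
    spe = tn / (tn + fp)                      # specificity
    acc = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    raw = {"Rec": rec, "Pre": pre, "Spe": spe, "Acc": acc, "F1": f1}
    return {name: round(100 * value, 2) for name, value in raw.items()}


# Back-calculated counts for 1D-LeNet (normalized, balanced MF set).
print(metrics(tp=321, fp=2, fn=9, tn=400))
```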

| Fault Type | Dataset Type | Number of Failures | Number of Non-Failures |
|---|---|---|---|
| MF | Imbalanced | 330 | 9643 |
| MF | Balanced | 330 | 402 |
| HDF | Balanced | 115 | 120 |
| OSF | Balanced | 98 | 100 |
| PWF | Balanced | 95 | 100 |
| TWF | Balanced | 46 | 50 |
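The class counts above set a useful reference point for reading accuracy: a trivial rule that always predicts "no failure" is already highly accurate on the imbalanced MF set, yet detects no failures at all. The short calculation below uses only the counts in the table; the function name is illustrative.

```python
from typing import Tuple


def majority_baseline(n_fail: int, n_ok: int) -> Tuple[float, float]:
    """Accuracy (%) and F1 (%) of a classifier that always predicts 'no failure'."""
    acc = 100 * n_ok / (n_fail + n_ok)
    f1 = 0.0  # no true positives, so recall for the failure class is 0
    return round(acc, 2), f1


print(majority_baseline(330, 9643))  # imbalanced MF set -> (96.69, 0.0)
print(majority_baseline(330, 402))   # balanced MF set   -> (54.92, 0.0)
```

This baseline helps explain why accuracies of about 97% on the imbalanced MF set can coexist with low F1 scores, and why F1 is the more informative metric there.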

| Model | Feature Normalization | Data Balance | Recall (%) | Precision (%) | Specificity (%) | Accuracy (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| DT | No | Imbalanced | 18.79 | 87.32 | 99.91 | 97.22 | 30.92 |
| SVM | No | Imbalanced | 20.91 | 88.46 | 99.91 | 97.29 | 33.82 |
| k-NN | No | Imbalanced | 50.00 | 56.70 | 98.69 | 97.08 | 53.14 |
| 1D-LeNet | No | Imbalanced | 6.67 | 27.50 | 99.40 | 96.33 | 10.73 |
| 1D-AlexNet | No | Imbalanced | 8.48 | 73.68 | 99.90 | 96.87 | 15.22 |
| 1D-VGG16 | No | Imbalanced | 8.48 | 62.22 | 99.82 | 96.80 | 14.93 |
| DT | No | Balanced | 94.24 | 96.58 | 97.26 | 95.90 | 95.40 |
| SVM | No | Balanced | 98.18 | 92.84 | 93.78 | 95.77 | 95.43 |
| k-NN | No | Balanced | 93.94 | 98.73 | 99.00 | 96.72 | 96.27 |
| 1D-LeNet | No | Balanced | 22.12 | 82.02 | 96.02 | 62.70 | 34.84 |
| 1D-AlexNet | No | Balanced | 75.15 | 77.26 | 81.84 | 78.83 | 76.19 |
| 1D-VGG16 | No | Balanced | 15.76 | 74.29 | 95.52 | 59.56 | 26.00 |
| DT | Yes | Imbalanced | 23.64 | 82.98 | 99.83 | 97.31 | 36.79 |
| SVM | Yes | Imbalanced | 19.39 | 86.49 | 99.90 | 97.23 | 31.68 |
| k-NN | Yes | Imbalanced | 45.45 | 57.47 | 98.85 | 97.08 | 50.76 |
| 1D-LeNet | Yes | Imbalanced | 40.61 | 78.82 | 99.63 | 97.67 | 53.60 |
| 1D-AlexNet | Yes | Imbalanced | 35.76 | 87.41 | 99.82 | 97.70 | 50.75 |
| 1D-VGG16 | Yes | Imbalanced | 36.97 | 88.41 | 99.83 | 97.75 | 52.14 |
| DT | Yes | Balanced | 94.55 | 95.71 | 96.52 | 95.63 | 95.12 |
| SVM | Yes | Balanced | 98.18 | 94.19 | 95.02 | 96.45 | 96.14 |
| k-NN | Yes | Balanced | 93.64 | 98.72 | 99.00 | 96.58 | 96.11 |
| 1D-LeNet | Yes | Balanced | 97.27 | 99.38 | 99.50 | 98.50 | 98.32 |
| 1D-AlexNet | Yes | Balanced | 96.67 | 99.07 | 99.25 | 98.09 | 97.85 |
| 1D-VGG16 | Yes | Balanced | 97.27 | 98.17 | 98.51 | 97.95 | 97.72 |
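Because F1 is the harmonic mean of precision and recall, it is pulled toward the smaller of the two: a model with high precision but low recall on the imbalanced MF set still receives a low F1. The sketch recomputes F1 from the precision and recall values of three rows in the MF results table. Since those inputs are already rounded to two decimals, the recomputed values agree with the reported F1 only to within about 0.01.

```python
from typing import Dict, Tuple


def f1_from(pre: float, rec: float) -> float:
    """Harmonic mean of precision and recall (both in %)."""
    return 2 * pre * rec / (pre + rec)


rows: Dict[str, Tuple[float, float, float]] = {  # (Pre, Rec, reported F1)
    "DT, no normalization, imbalanced": (87.32, 18.79, 30.92),
    "k-NN, no normalization, imbalanced": (56.70, 50.00, 53.14),
    "1D-LeNet, normalization, balanced": (99.38, 97.27, 98.32),
}
for name, (pre, rec, reported) in rows.items():
    print(f"{name}: F1 = {f1_from(pre, rec):.2f} (reported {reported})")
```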

| Model | Fault Type | Feature Normalization | Recall (%) | Precision (%) | Specificity (%) | Accuracy (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| DT | HDF | No | 98.26 | 96.58 | 96.67 | 97.45 | 97.41 |
| SVM | HDF | No | 74.78 | 96.63 | 97.50 | 86.38 | 84.31 |
| k-NN | HDF | No | 98.26 | 88.28 | 87.50 | 92.77 | 93.00 |
| 1D-LeNet | HDF | No | 100 | 77.70 | 72.50 | 85.96 | 87.45 |
| 1D-AlexNet | HDF | No | 100 | 86.47 | 85.00 | 92.34 | 92.74 |
| 1D-VGG16 | HDF | No | 37.39 | 45.74 | 57.50 | 47.66 | 41.15 |
| DT | HDF | Yes | 98.26 | 97.41 | 97.50 | 97.87 | 97.84 |
| SVM | HDF | Yes | 80.87 | 74.40 | 73.33 | 77.02 | 77.50 |
| k-NN | HDF | Yes | 87.83 | 82.11 | 81.67 | 84.68 | 84.87 |
| 1D-LeNet | HDF | Yes | 100 | 95.83 | 95.83 | 97.87 | 97.87 |
| 1D-AlexNet | HDF | Yes | 99.13 | 95.80 | 95.83 | 97.45 | 97.44 |
| 1D-VGG16 | HDF | Yes | 100 | 95.04 | 95.00 | 97.45 | 97.46 |
| DT | OSF | No | 97.96 | 94.12 | 94.00 | 95.96 | 96.00 |
| SVM | OSF | No | 90.82 | 97.80 | 98.00 | 94.44 | 94.18 |
| k-NN | OSF | No | 97.96 | 97.96 | 98.00 | 97.98 | 97.96 |
| 1D-LeNet | OSF | No | 100 | 95.15 | 95.00 | 97.47 | 97.51 |
| 1D-AlexNet | OSF | No | 81.63 | 81.63 | 82.00 | 81.82 | 81.63 |
| 1D-VGG16 | OSF | No | 35.71 | 44.30 | 56.00 | 45.96 | 39.55 |
| DT | OSF | Yes | 97.96 | 96.00 | 96.00 | 96.97 | 96.97 |
| SVM | OSF | Yes | 93.88 | 95.83 | 96.00 | 94.95 | 94.85 |
| k-NN | OSF | Yes | 97.96 | 97.96 | 98.00 | 97.98 | 97.96 |
| 1D-LeNet | OSF | Yes | 98.98 | 97.98 | 98.00 | 98.48 | 98.48 |
| 1D-AlexNet | OSF | Yes | 95.92 | 96.91 | 97.00 | 96.46 | 96.41 |
| 1D-VGG16 | OSF | Yes | 97.96 | 97.96 | 98.00 | 97.98 | 97.96 |
| DT | PWF | No | 96.84 | 94.85 | 95.00 | 95.90 | 95.83 |
| SVM | PWF | No | 87.37 | 86.46 | 87.00 | 87.18 | 86.91 |
| k-NN | PWF | No | 91.58 | 91.58 | 92.00 | 91.79 | 91.58 |
| 1D-LeNet | PWF | No | 73.68 | 87.50 | 90.00 | 82.05 | 80.00 |
| 1D-AlexNet | PWF | No | 90.53 | 93.48 | 94.00 | 92.31 | 91.98 |
| 1D-VGG16 | PWF | No | 16.84 | 41.03 | 77.00 | 47.69 | 23.88 |
| DT | PWF | Yes | 98.95 | 94.95 | 95.00 | 96.92 | 96.91 |
| SVM | PWF | Yes | 96.84 | 90.20 | 90.00 | 93.33 | 93.40 |
| k-NN | PWF | Yes | 89.47 | 91.40 | 92.00 | 90.77 | 90.43 |
| 1D-LeNet | PWF | Yes | 100 | 95.00 | 95.00 | 97.44 | 97.44 |
| 1D-AlexNet | PWF | Yes | 97.89 | 94.90 | 95.00 | 96.41 | 96.37 |
| 1D-VGG16 | PWF | Yes | 97.89 | 94.90 | 95.00 | 96.41 | 96.37 |
| DT | TWF | No | 93.48 | 95.56 | 96.00 | 94.79 | 94.51 |
| SVM | TWF | No | 47.83 | 88.00 | 94.00 | 71.88 | 61.97 |
| k-NN | TWF | No | 80.43 | 77.08 | 78.00 | 79.17 | 78.72 |
| 1D-LeNet | TWF | No | 54.35 | 73.53 | 82.00 | 68.75 | 62.50 |
| 1D-AlexNet | TWF | No | 93.48 | 84.31 | 84.00 | 88.54 | 88.66 |
| 1D-VGG16 | TWF | No | 17.39 | 40.00 | 76.00 | 47.92 | 24.24 |
| DT | TWF | Yes | 97.83 | 95.74 | 96.00 | 96.88 | 96.77 |
| SVM | TWF | Yes | 56.52 | 89.66 | 94.00 | 76.04 | 69.33 |
| k-NN | TWF | Yes | 82.61 | 82.61 | 84.00 | 83.33 | 82.61 |
| 1D-LeNet | TWF | Yes | 97.83 | 95.74 | 96.00 | 96.88 | 96.77 |
| 1D-AlexNet | TWF | Yes | 100 | 90.20 | 90.00 | 94.79 | 94.85 |
| 1D-VGG16 | TWF | Yes | 97.83 | 93.75 | 94.00 | 95.83 | 95.74 |
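Given the class sizes of each balanced set, the reported recall and specificity can be turned back into approximate confusion-matrix counts, which offers a quick consistency check on a table row. The sketch assumes that every sample of the balanced set contributes to the reported scores (as in a cross-validation where each sample is tested once); this is an inference, not a statement from the article. For DT on the HDF set without normalization (115 failures, 120 non-failures), the recovered counts reproduce the reported accuracy of 97.45%.

```python
from typing import Tuple


def counts_from_rates(rec_pct: float, spe_pct: float,
                      n_pos: int, n_neg: int) -> Tuple[int, int, int, int]:
    """Approximate (TP, FN, FP, TN) from recall/specificity (%) and class sizes."""
    tp = round(rec_pct / 100 * n_pos)
    tn = round(spe_pct / 100 * n_neg)
    return tp, n_pos - tp, n_neg - tn, tn


def accuracy_pct(tp: int, fn: int, fp: int, tn: int) -> float:
    return 100 * (tp + tn) / (tp + fn + fp + tn)


# DT on the balanced HDF set, no normalization.
tp, fn, fp, tn = counts_from_rates(98.26, 96.67, 115, 120)
print(tp, fn, fp, tn, round(accuracy_pct(tp, fn, fp, tn), 2))  # 113 2 4 116 97.45
```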

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ileri, U.; Altun, Y.; Narin, A. An Efficient Approach for Automatic Fault Classification Based on Data Balance and One-Dimensional Deep Learning. Appl. Sci. 2024, 14, 4899. https://doi.org/10.3390/app14114899

Ileri U, Altun Y, Narin A. An Efficient Approach for Automatic Fault Classification Based on Data Balance and One-Dimensional Deep Learning. Applied Sciences. 2024; 14(11):4899. https://doi.org/10.3390/app14114899

Chicago/Turabian Style: Ileri, Ugur, Yusuf Altun, and Ali Narin. 2024. "An Efficient Approach for Automatic Fault Classification Based on Data Balance and One-Dimensional Deep Learning" Applied Sciences 14, no. 11: 4899. https://doi.org/10.3390/app14114899

APA Style: Ileri, U., Altun, Y., & Narin, A. (2024). An Efficient Approach for Automatic Fault Classification Based on Data Balance and One-Dimensional Deep Learning. Applied Sciences, 14(11), 4899. https://doi.org/10.3390/app14114899