Enhancement of Three-Dimensional Computational Integral Imaging via Post-Processing with Visibility Coefficient Estimation

Abstract

1. Introduction

2. Related Works

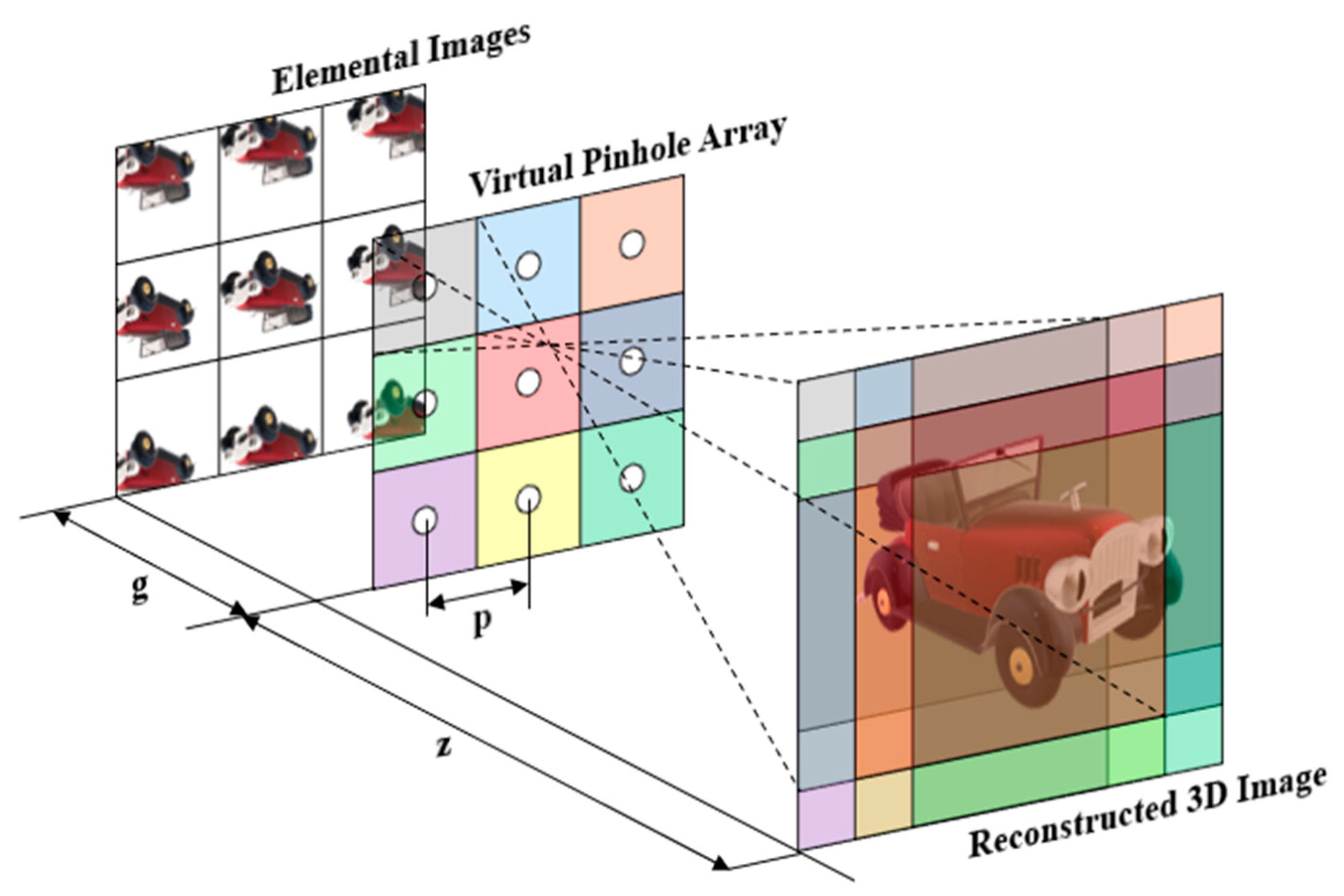

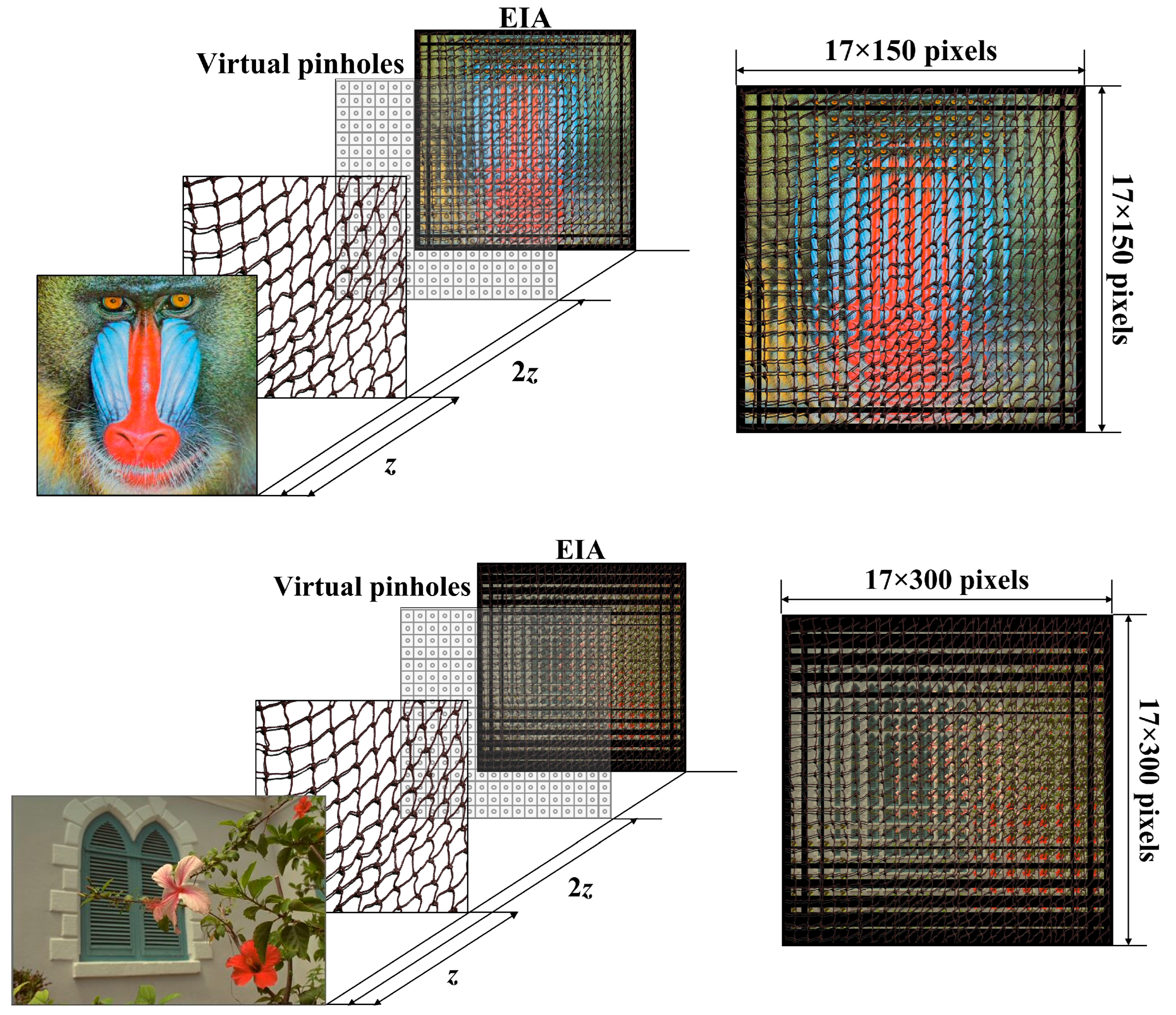

2.1. Computational Integral Imaging Reconstruction

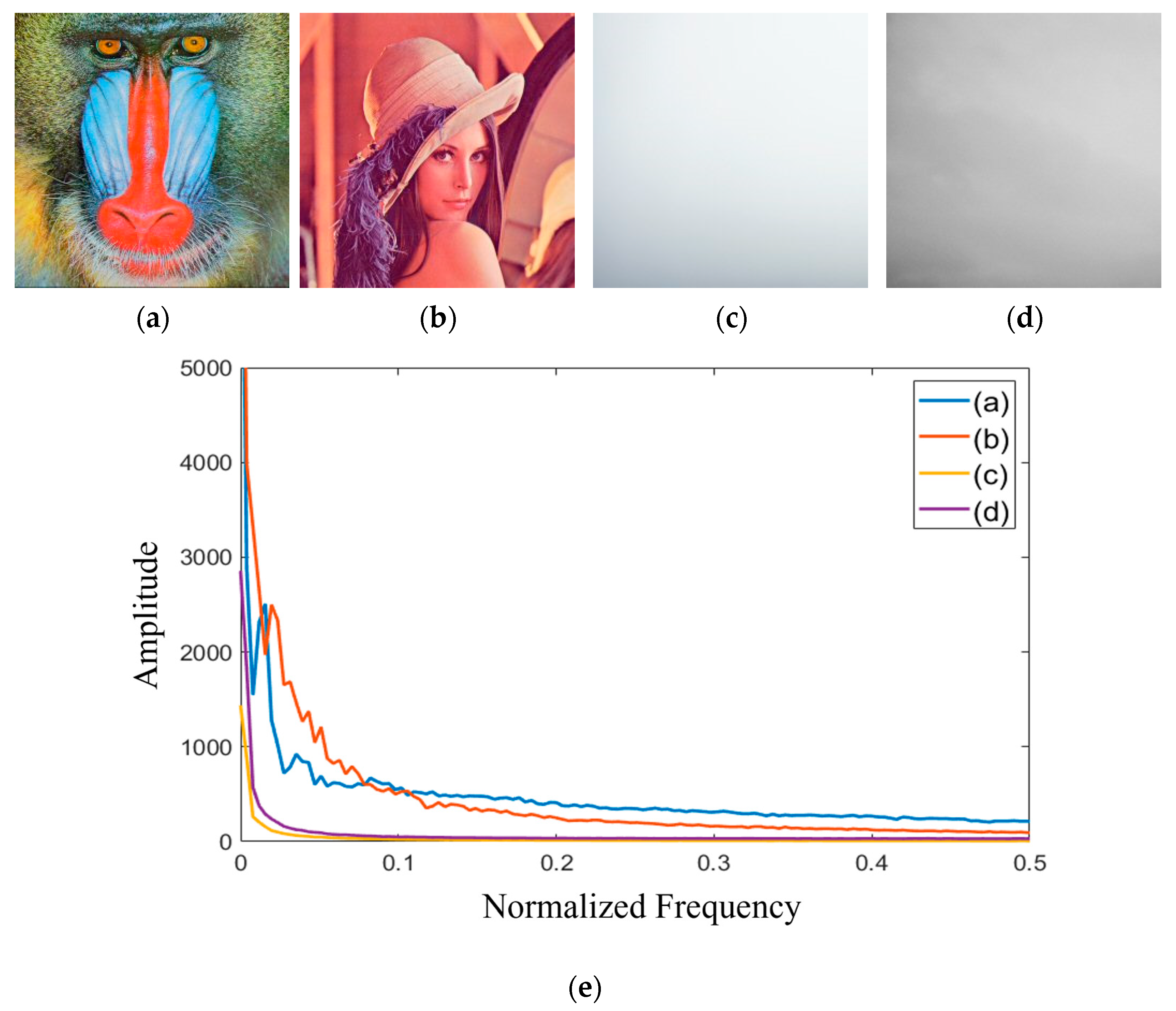

2.2. Existing Model for Scattering Medium with Spectral Analysis and Integral Imaging

3. Proposed Method

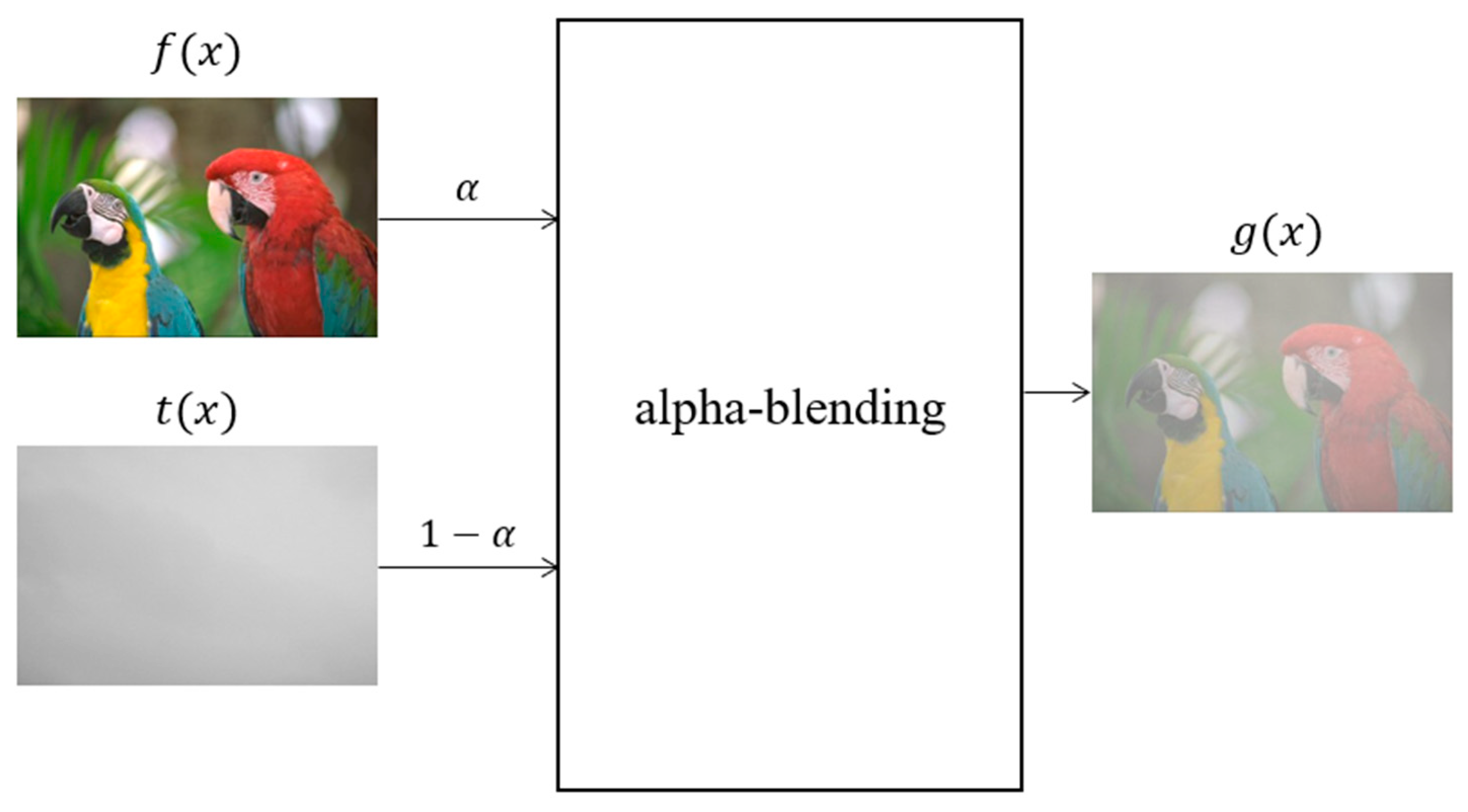

3.1. Formulations of Proposed Model

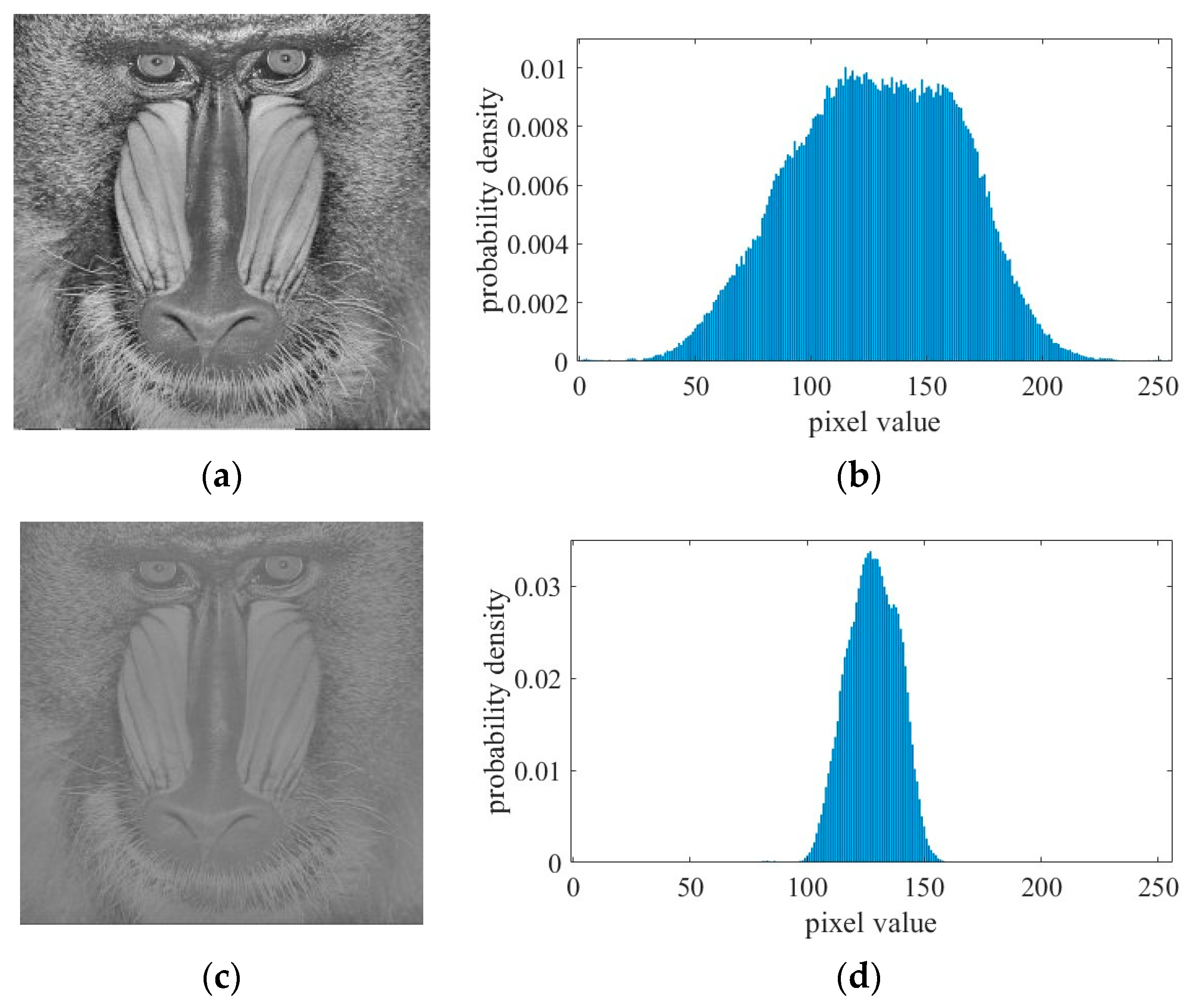

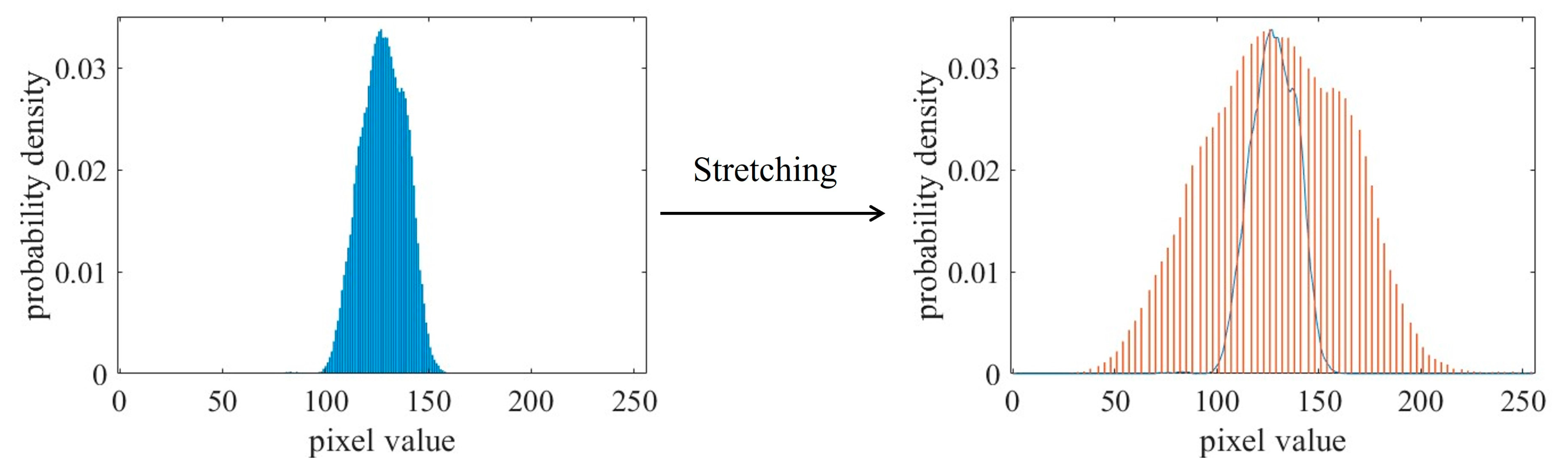

3.2. Estimation of Visibility Coefficient

4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Visibility Coefficient | 0.3 (Inaccurate Alpha) | 0.3 (Proposed Method) | 0.5 (Inaccurate Alpha) | 0.5 (Proposed Method) | 0.7 (Inaccurate Alpha) | 0.7 (Proposed Method) |
|---|---|---|---|---|---|---|
| Baboon_hazed image1 | 0.18 | 0.32 | 0.29 | 0.53 | 0.40 | 0.74 |
| Baboon_hazed image2 | 0.19 | 0.33 | 0.30 | 0.53 | 0.40 | 0.74 |
| Lenna_hazed image1 | 0.15 | 0.34 | 0.25 | 0.54 | 0.35 | 0.76 |
| Lenna_hazed image2 | 0.16 | 0.34 | 0.24 | 0.55 | 0.34 | 0.76 |
| Kodim22_hazed image3 | 0.20 | 0.27 | 0.33 | 0.42 | 0.46 | 0.59 |
| Kodim22_hazed image4 | 0.20 | 0.27 | 0.33 | 0.44 | 0.46 | 0.59 |
| Kodim23_hazed image3 | 0.19 | 0.34 | 0.30 | 0.56 | 0.42 | 0.79 |
| Kodim23_hazed image4 | 0.19 | 0.34 | 0.31 | 0.56 | 0.42 | 0.79 |
| AVE | 0.18 | 0.32 | 0.29 | 0.52 | 0.41 | 0.72 |

| Visibility Coefficient | 0.3 (Inaccurate Alpha) | 0.3 (Proposed Method) | 0.5 (Inaccurate Alpha) | 0.5 (Proposed Method) | 0.7 (Inaccurate Alpha) | 0.7 (Proposed Method) |
|---|---|---|---|---|---|---|
| Baboon_hazed image1 | 17.82 | 21.06 | 17.96 | 23.10 | 17.86 | 26.10 |
| Baboon_hazed image2 | 18.33 | 21.72 | 18.14 | 23.66 | 17.95 | 26.50 |
| Lenna_hazed image1 | 16.96 | 21.66 | 16.64 | 24.44 | 16.59 | 27.64 |
| Lenna_hazed image2 | 16.75 | 22.43 | 15.96 | 24.98 | 16.21 | 28.20 |
| Kodim22_hazed image3 | 16.73 | 17.15 | 17.56 | 18.20 | 18.17 | 19.26 |
| Kodim22_hazed image4 | 16.60 | 17.20 | 17.24 | 18.04 | 17.89 | 18.96 |
| Kodim23_hazed image3 | 15.69 | 16.01 | 16.08 | 16.89 | 16.45 | 17.92 |
| Kodim23_hazed image4 | 15.79 | 16.30 | 16.02 | 17.09 | 16.35 | 18.04 |
| AVE | 16.83 | 19.19 | 16.95 | 20.80 | 17.18 | 22.83 |

| Visibility Coefficient | 0.3 (Inaccurate Alpha) | 0.3 (Proposed Method) | 0.5 (Inaccurate Alpha) | 0.5 (Proposed Method) | 0.7 (Inaccurate Alpha) | 0.7 (Proposed Method) |
|---|---|---|---|---|---|---|
| Baboon_hazed image1 | 0.84 | 0.93 | 0.86 | 0.97 | 0.86 | 0.99 |
| Baboon_hazed image2 | 0.88 | 0.96 | 0.87 | 0.98 | 0.86 | 0.99 |
| Lenna_hazed image1 | 0.85 | 0.93 | 0.84 | 0.98 | 0.83 | 0.99 |
| Lenna_hazed image2 | 0.84 | 0.97 | 0.81 | 0.99 | 0.82 | 0.99 |
| Kodim22_hazed image3 | 0.69 | 0.71 | 0.85 | 0.89 | 0.88 | 0.94 |
| Kodim22_hazed image4 | 0.76 | 0.83 | 0.85 | 0.91 | 0.87 | 0.94 |
| Kodim23_hazed image3 | 0.68 | 0.69 | 0.81 | 0.86 | 0.83 | 0.92 |
| Kodim23_hazed image4 | 0.77 | 0.86 | 0.81 | 0.91 | 0.82 | 0.93 |
| AVE | 0.79 | 0.86 | 0.84 | 0.94 | 0.85 | 0.96 |

| | CIIR | Inaccurate Alpha | Proposed Method |
|---|---|---|---|
| Baboon | 18.89 | 17.64 | 23.66 |
| Lenna | 19.07 | 16.10 | 24.58 |
| peppers | 19.74 | 16.03 | 19.18 |
| fruits | 17.10 | 15.75 | 17.82 |
| Kodim07 | 21.99 | 16.66 | 18.80 |
| AVE | 19.36 | 16.44 | 20.81 |
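
The AVE row of the table above appears to be the column-wise arithmetic mean of the five per-image rows, rounded to two decimals. The following is a minimal sketch of that check, assuming plain averaging; the dictionary `rows` and the helper `column_means` are illustrative names, not part of the original work.

```python
# Per-image values copied from the table above
# (columns: CIIR, Inaccurate Alpha, Proposed Method).
rows = {
    "Baboon": (18.89, 17.64, 23.66),
    "Lenna": (19.07, 16.10, 24.58),
    "peppers": (19.74, 16.03, 19.18),
    "fruits": (17.10, 15.75, 17.82),
    "Kodim07": (21.99, 16.66, 18.80),
}


def column_means(table):
    """Return the mean of each column, rounded to two decimals.

    Assumption: the AVE row is an unweighted mean over the images.
    """
    columns = zip(*table.values())  # transpose rows into columns
    return tuple(round(sum(col) / len(col), 2) for col in columns)


ave = column_means(rows)
print(ave)  # (19.36, 16.44, 20.81), matching the AVE row
```

Under this assumption the recomputed means agree with the reported AVE row for all three methods.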

| | CIIR | Inaccurate Alpha | Proposed Method |
|---|---|---|---|
| Baboon | 0.90 | 0.85 | 0.95 |
| Lenna | 0.93 | 0.81 | 0.97 |
| peppers | 0.92 | 0.85 | 0.89 |
| fruits | 0.87 | 0.79 | 0.89 |
| Kodim07 | 0.92 | 0.77 | 0.90 |
| AVE | 0.91 | 0.81 | 0.92 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cho, H.; Yoo, H. Enhancement of Three-Dimensional Computational Integral Imaging via Post-Processing with Visibility Coefficient Estimation. Appl. Sci. 2024, 14, 5384. https://doi.org/10.3390/app14135384
