Impact of Dual-Depth Head-Up Displays on Vehicle Driver Performance

Abstract

1. Introduction

2. Materials and Methods

- A

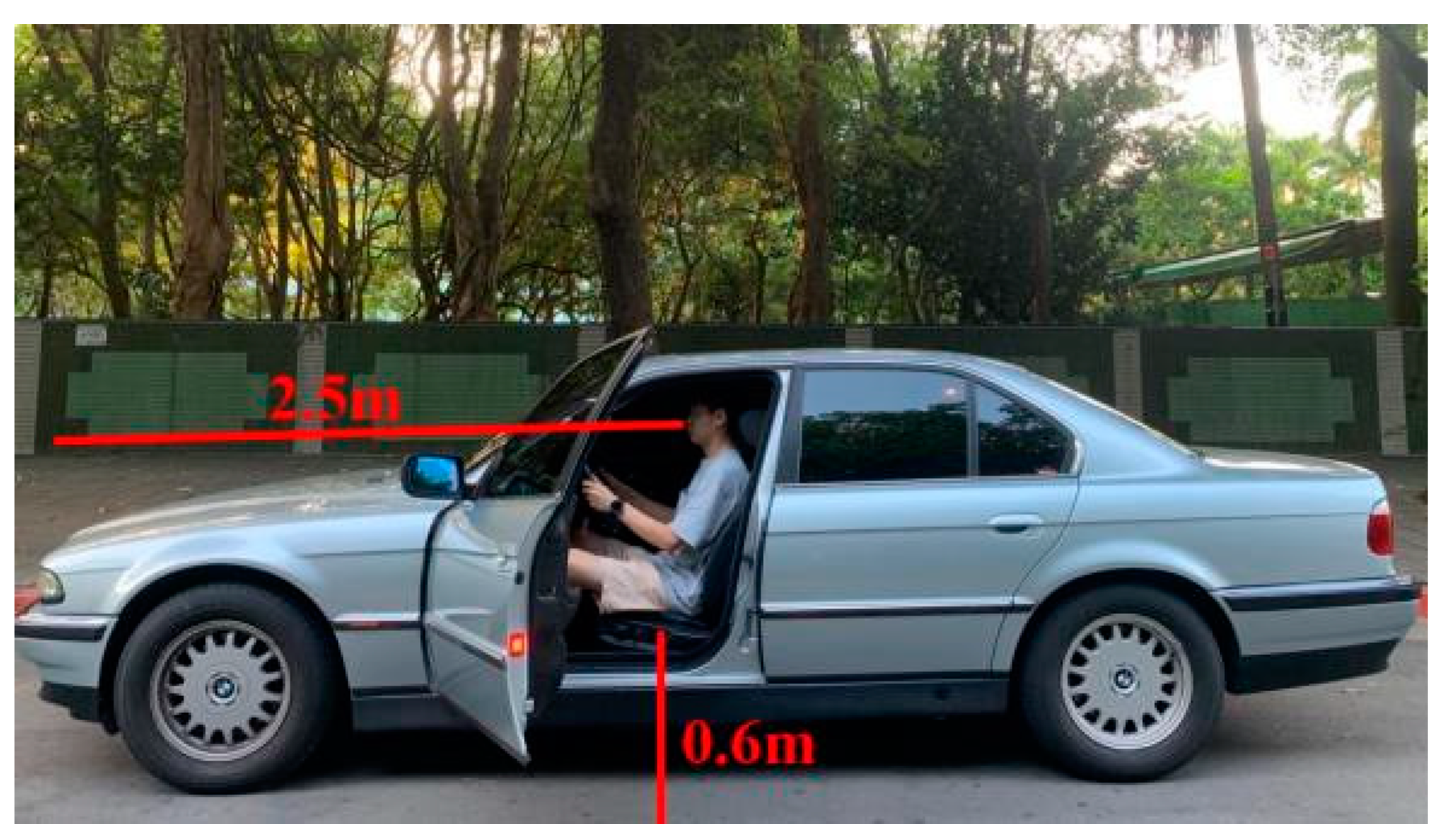

- Design Specifications

- B

- Participants

- C

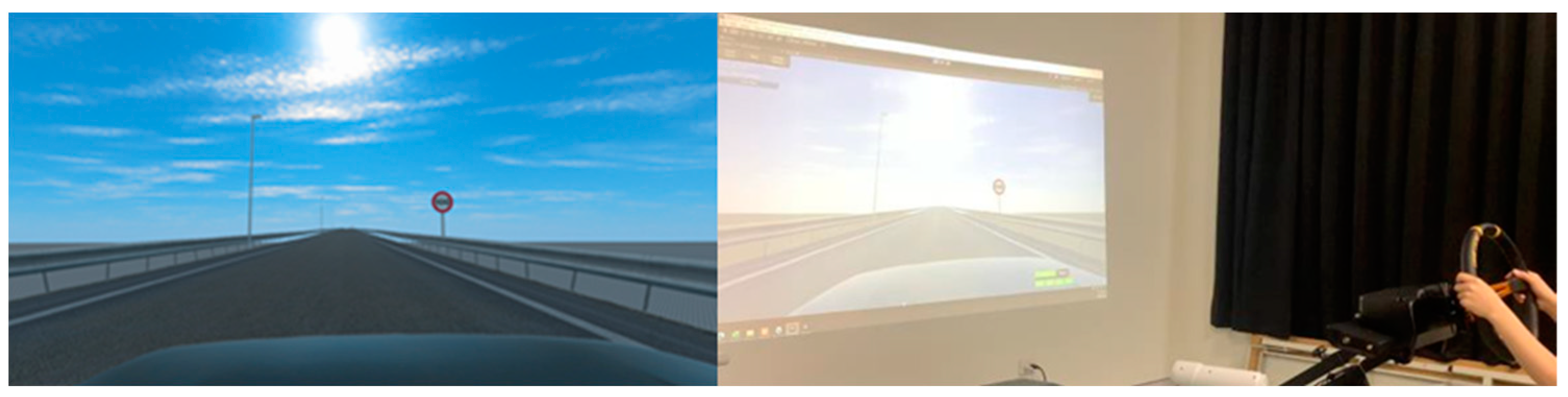

- Apparatus

- (a)

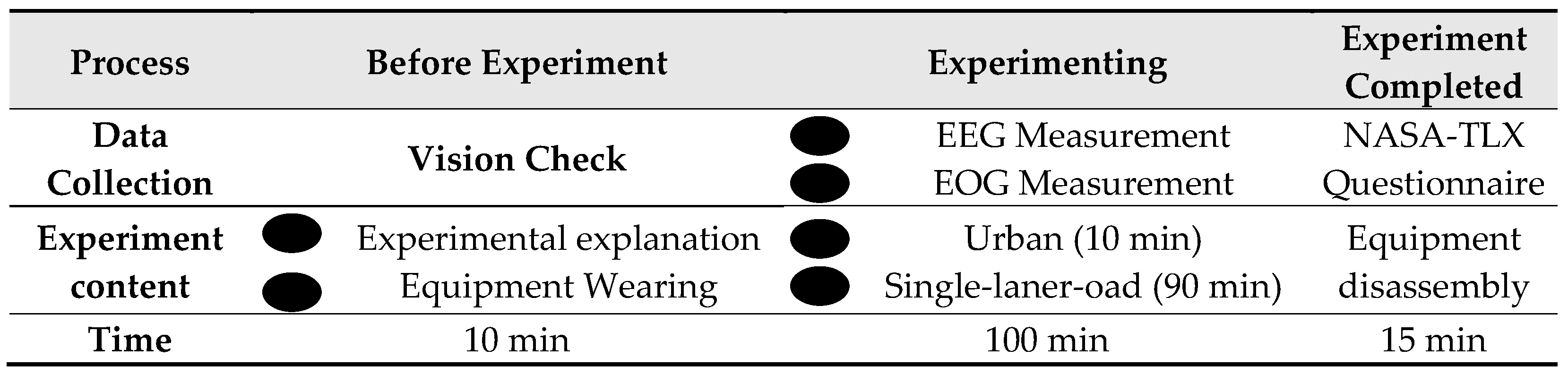

- Procedure

3. Results

- (a)

- Statistical Methods

- (b)

- EEG Analysis Results (Driving Fatigue)

- (c)

- EEG Discussion

- (d)

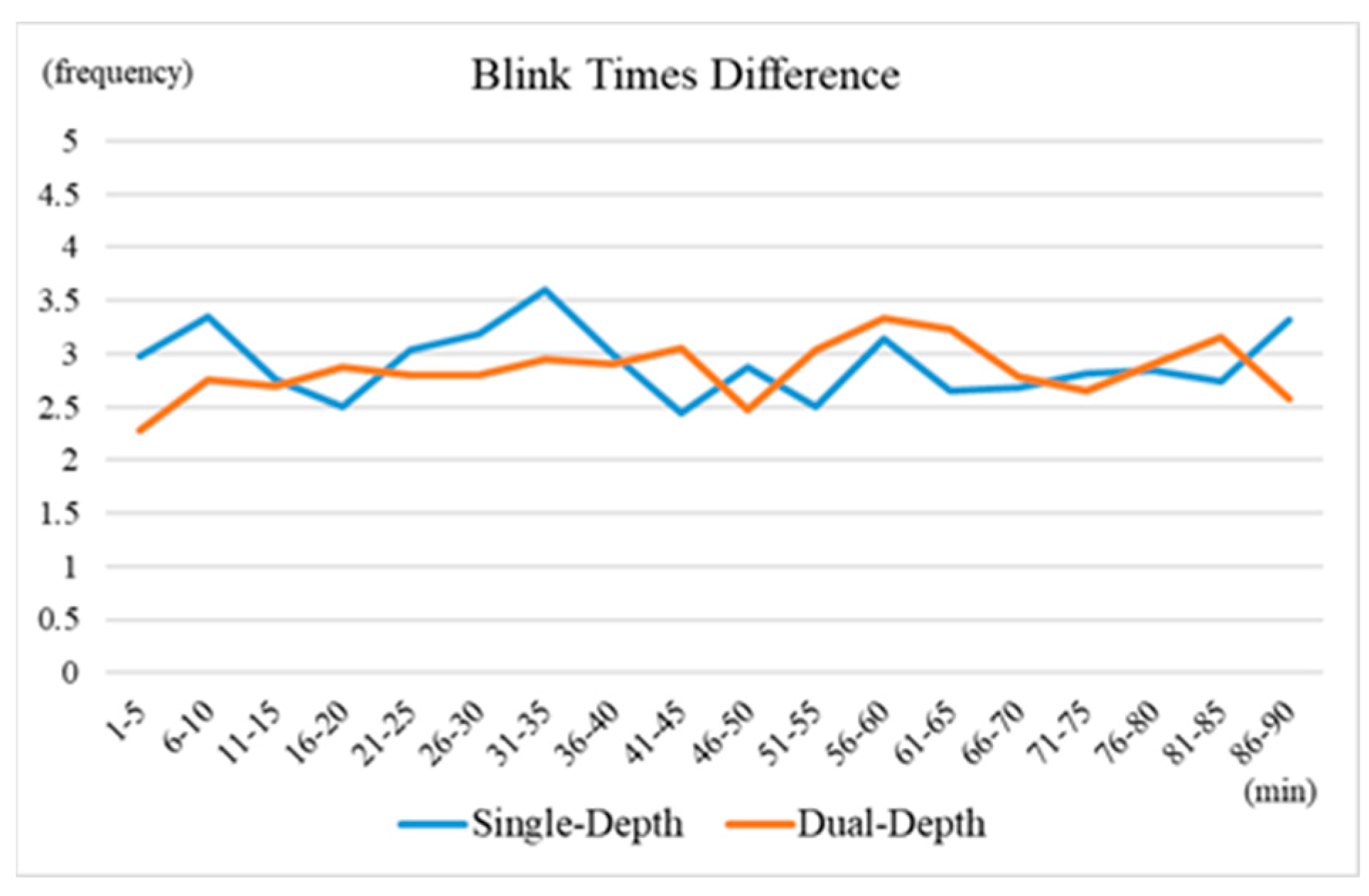

- EOG Analysis Results (Number of Blinks)

- (e)

- EOG Discussion:

- (f)

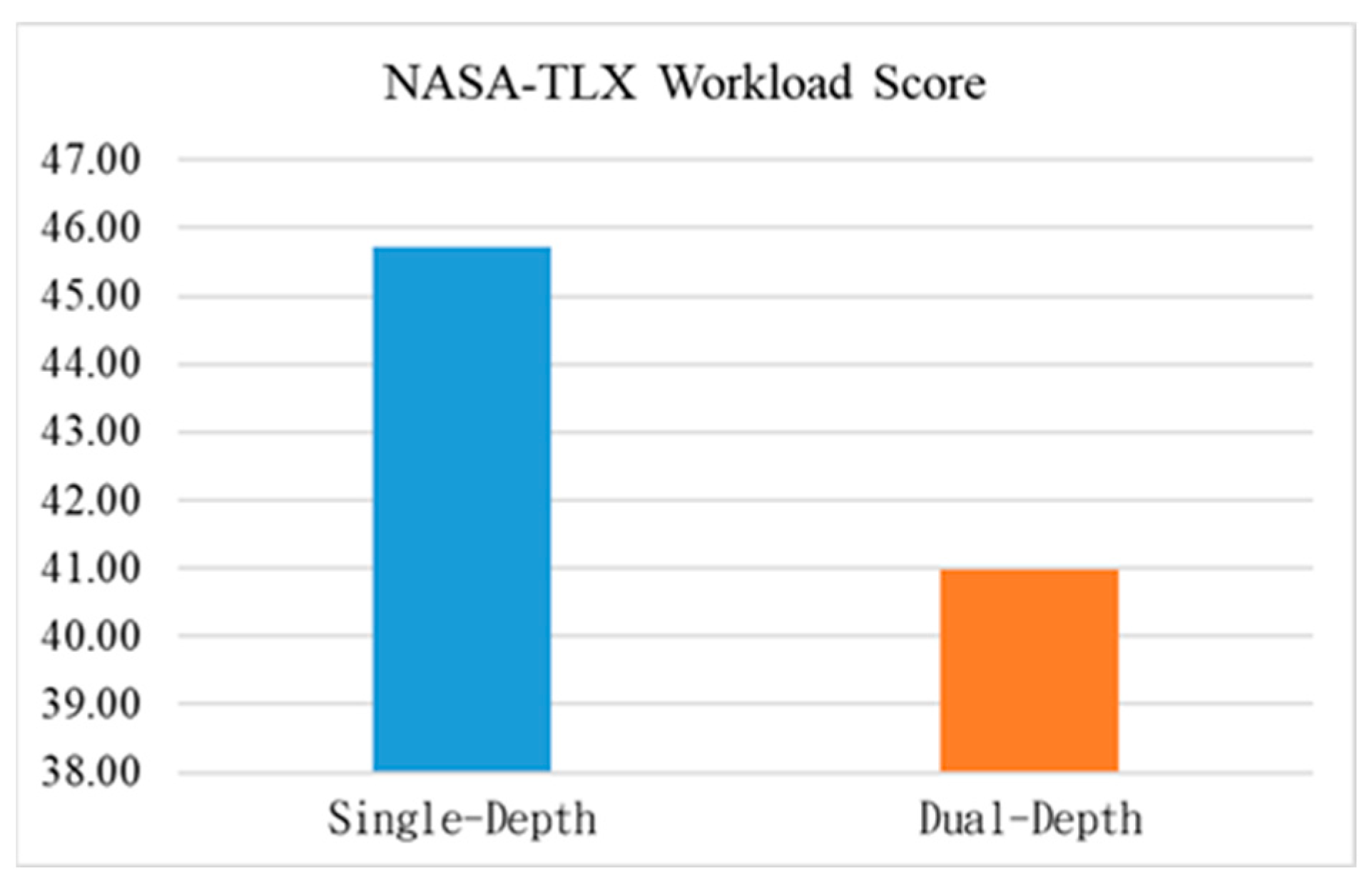

- NASA-TLX Analysis Result

- (g)

- NASA-TLX Discussion:

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| EEG Channel | Single-Depth (Mean ± SD) | Dual-Depth (Mean ± SD) | p |
|---|---|---|---|
| F3 | 2.212 ± 0.517 | 2.209 ± 0.511 | 0.982 |
| F4 | 1.968 ± 0.390 | 1.949 ± 0.407 | 0.208 |
| P3 | 2.108 ± 0.543 | 2.030 ± 0.580 | 0.004 ** |
| P4 | 5.499 ± 2.180 | 5.621 ± 2.241 | 0.253 |
| O1 | 1.988 ± 0.372 | 1.982 ± 0.438 | 0.511 |
| O2 | 2.084 ± 0.483 | 2.160 ± 0.474 | 0.000 *** |
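The per-channel comparison in the table above can be read off programmatically. The following is a minimal sketch, not the authors' analysis: it transcribes the reported means and p-values, and picks out the channels whose p-value falls below a threshold. The threshold `ALPHA = 0.05` and the names `ROWS` and `significant_channels` are assumptions introduced for illustration; the table itself only marks significance with asterisks.

```python
# Values transcribed from the table above:
# (channel, single-depth mean, dual-depth mean, reported p-value).
# The O2 p-value is printed as 0.000 in the table (presumably rounded).
ROWS = [
    ("F3", 2.212, 2.209, 0.982),
    ("F4", 1.968, 1.949, 0.208),
    ("P3", 2.108, 2.030, 0.004),
    ("P4", 5.499, 5.621, 0.253),
    ("O1", 1.988, 1.982, 0.511),
    ("O2", 2.084, 2.160, 0.000),
]

ALPHA = 0.05  # assumed significance threshold; not stated in the table


def significant_channels(rows, alpha=ALPHA):
    """Return (channel, dual-depth mean minus single-depth mean) where p < alpha."""
    return [
        (channel, round(dual - single, 3))
        for channel, single, dual, p in rows
        if p < alpha
    ]


if __name__ == "__main__":
    for channel, diff in significant_channels(ROWS):
        direction = "higher" if diff > 0 else "lower"
        print(f"{channel}: dual-depth mean is {direction} by {abs(diff):.3f}")
```

Under this assumed threshold only the two rows marked with asterisks, P3 and O2, are selected, and their mean differences point in opposite directions.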

| Single-Depth (Mean ± SD) | Dual-Depth (Mean ± SD) |
|---|---|
| 45.73 ± 12.19 | 40.97 ± 17.06 |

| Time Period | Single-Depth (Mean ± SD) | Dual-Depth (Mean ± SD) | p |
|---|---|---|---|
| 1–45 min | 2.981 ± 1.595 | 2.789 ± 1.236 | 0.432 |
| 46–90 min | 2.842 ± 1.250 | 2.904 ± 0.783 | 0.783 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, C.-Y.; Chou, T.-A.; Chuang, C.-H.; Hsu, C.-C.; Chen, Y.-S.; Huang, S.-H. Impact of Dual-Depth Head-Up Displays on Vehicle Driver Performance. Appl. Sci. 2024, 14, 6441. https://doi.org/10.3390/app14156441
