Integration of Relation Filtering and Multi-Task Learning in GlobalPointer for Entity and Relation Extraction

Abstract

1. Introduction

- This work introduces a global pointer decoding approach to handle overlapping triples and nested entities. Entity and relation information is encoded in a dual-head matrix, so that both kinds of overlap can be represented and decoded jointly.

- The global pointer-based model is extended with two enhancements. First, relation filtering is applied to suppress redundant relations, which notably reduces the number of redundant relations that must be evaluated. Second, a dynamic loss balancing strategy is applied to the multi-task learning problem, improving the interaction among the individual tasks.
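
The contribution list does not state which dynamic loss balancing scheme is used. As one illustrative possibility only, the sketch below combines task losses with homoscedastic-uncertainty weighting in the style of Kendall et al. (listed in the references), where each task loss $L_i$ is scaled by a log-variance term $s_i$. The function name, the three task losses, and all numeric values are assumptions made for illustration, not the authors' implementation.

```python
import math


def uncertainty_weighted_loss(task_losses, log_vars):
    """Combine task losses as sum_i 0.5 * exp(-s_i) * L_i + 0.5 * s_i.

    task_losses: per-task loss values L_i.
    log_vars:    per-task log-variances s_i (trainable in a real model;
                 plain floats here to keep the sketch self-contained).
    """
    if len(task_losses) != len(log_vars):
        raise ValueError("one log-variance is required per task loss")
    total = 0.0
    for loss, s in zip(task_losses, log_vars):
        # exp(-s) down-weights a task with high uncertainty; the 0.5 * s
        # term penalizes inflating the uncertainty without bound.
        total += 0.5 * math.exp(-s) * loss + 0.5 * s
    return total


# Hypothetical losses for three sub-tasks (e.g. entity, head, tail matrices).
losses = [0.8, 1.2, 0.4]
equal = uncertainty_weighted_loss(losses, [0.0, 0.0, 0.0])
downweighted = uncertainty_weighted_loss(losses, [0.0, 1.0, 0.0])
```

With all log-variances at zero the result is simply half the sum of the task losses; raising one log-variance shrinks that task's contribution while adding a small regularization term.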

2. Related Work

3. Materials and Methods

3.1. Coding Module

3.2. GlobalPointer Decoding Module

3.3. Relation Filtering Decoding Module

3.4. Loss Function

4. Experiments

4.1. Data and Evaluation Metrics

4.2. Setup

4.3. Comparative Models

- NovelTagging: This model transforms the entity relation extraction problem into a sequence labeling problem, enabling the simultaneous recognition of entities and relations. While it does not address the issue of overlapping triples, it provides a foundational reference for our research.

- CasRel: Based on sequence labeling, this model introduces a cascaded binary tagging framework to tackle the problem of overlapping triples, which has become a critical milestone in the field.

- TPLinker_plus: This model proposes a novel tagging scheme that resolves overlapping triples and also handles nested entities.

- PRGC: Based on a table-filling approach, this model introduces a new end-to-end framework that can resolve overlapping triple problems by separately predicting entities and relations. The model reduces redundant information generation and offers us a different perspective.

- GPLinker: This model uses a global pointer with matrix labeling to predict entities and relations from a global perspective. It handles both overlapping triples and nested entities, and it serves as the main reference model for our work.
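
To make the matrix-labeling idea behind GPLinker concrete, the following is a minimal decoding sketch. It assumes the commonly described GPLinker layout: candidate subject and object spans, plus one head-alignment and one tail-alignment score matrix per relation, with a triple emitted when both scores exceed a threshold. The function name, the toy scores, and the span sets are hypothetical and are not taken from the paper.

```python
def decode_triples(subject_spans, object_spans, head_scores, tail_scores,
                   threshold=0.0):
    """Decode (subject_span, relation_id, object_span) triples.

    subject_spans / object_spans: sets of (start, end) token index pairs.
    head_scores[p][i][j]: score that a subject starting at token i and an
        object starting at token j are linked by relation p.
    tail_scores[p][i][j]: the same for the end tokens of the two spans.
    """
    triples = set()
    for p in range(len(head_scores)):
        for (sh, st) in subject_spans:
            for (oh, ot) in object_spans:
                # Both the start pair and the end pair must be linked.
                if (head_scores[p][sh][oh] > threshold
                        and tail_scores[p][st][ot] > threshold):
                    triples.add(((sh, st), p, (oh, ot)))
    return triples


def negative_matrix(n):
    """Return an n x n matrix filled with a below-threshold score."""
    return [[-1.0] * n for _ in range(n)]


# Toy sentence of 4 tokens and 2 relation types.
head = [negative_matrix(4), negative_matrix(4)]
tail = [negative_matrix(4), negative_matrix(4)]
head[1][0][3], tail[1][1][3] = 2.0, 1.5   # subject (0,1) -rel 1-> object (3,3)
head[0][0][2], tail[0][1][2] = 0.7, 0.3   # same subject, rel 0 -> object (2,2)
head[0][0][3] = 1.0                       # head link only: must not be emitted

decoded = decode_triples({(0, 1)}, {(2, 2), (3, 3)}, head, tail)
```

Because every relation has its own pair of matrices, the same subject span can take part in several triples, which is how overlapping triples are represented in this scheme.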

5. Results and Discussion

5.1. Model Comparative Experiment

5.2. Ablation Experiment

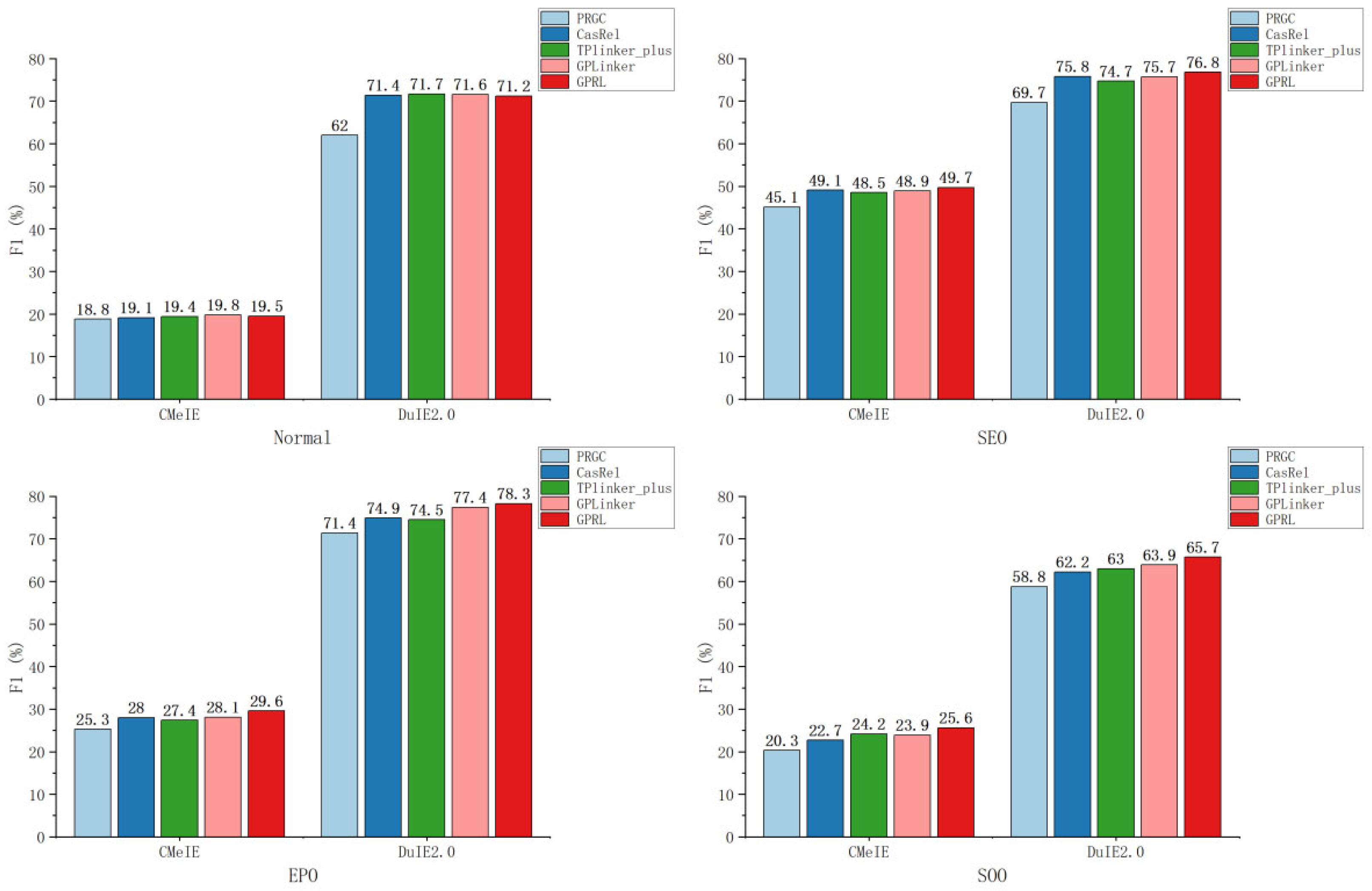

5.3. Overlapping Triples and Nested Entities Experiment

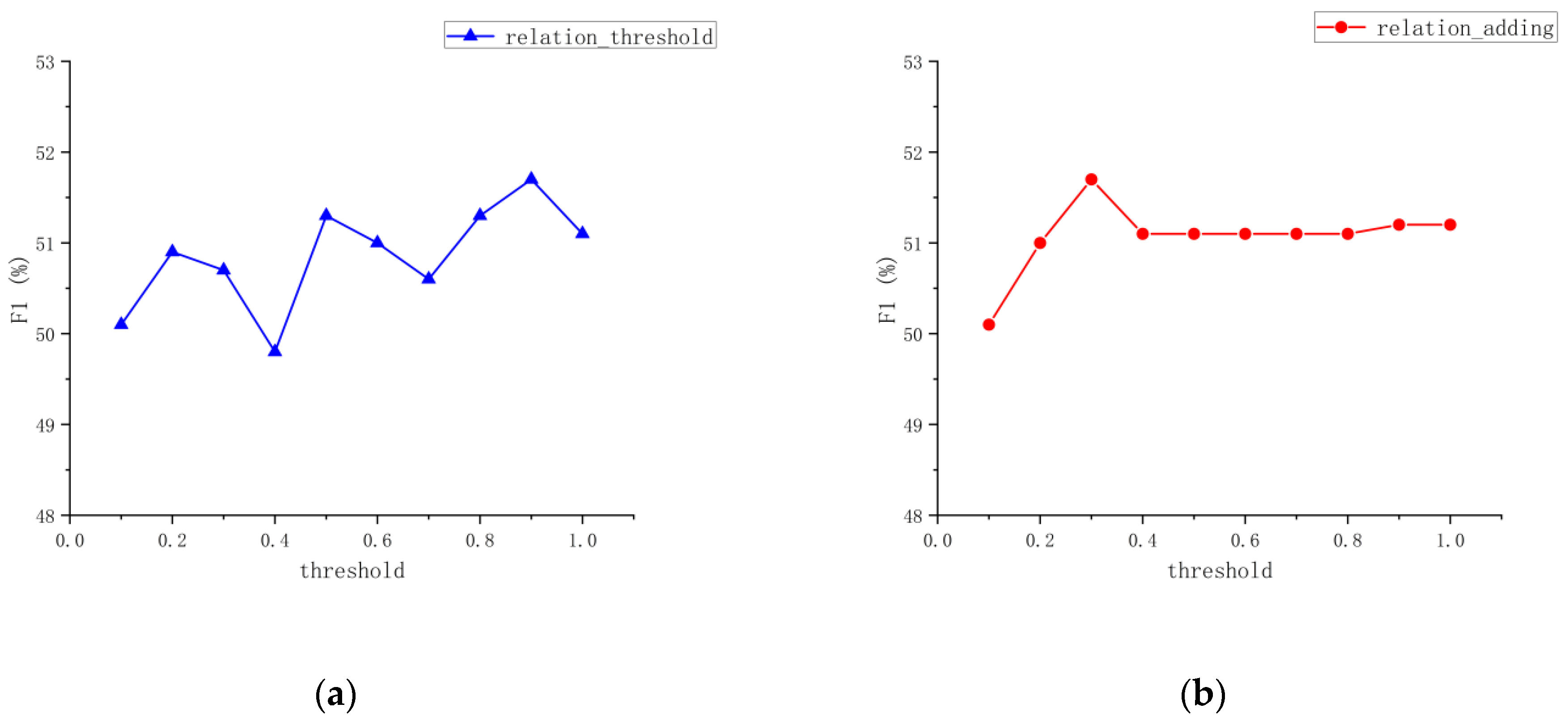

5.4. Parameter Experiment

5.5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ji, S.; Pan, S.; Cambria, E.; Marttinen, P.; Yu, P.S. A survey on knowledge graphs: Representation, acquisition, and applications. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 494–514.

- Tan, L.; Haihong, E.; Kuang, Z.; Song, M.; Liu, Y.; Chen, Z.; Xie, X.; Li, J.; Fan, J.; Wang, Q.; et al. Key technologies and research progress of medical knowledge graph construction. Big Data Res. 2021, 7, 80–104.

- Cheng, D.; Yang, F.; Wang, X.; Zhang, Y.; Zhang, L. Knowledge graph-based event embedding framework for financial quantitative investments. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 2221–2230.

- Wang, Q.; Mao, Z.; Wang, B.; Guo, L. Knowledge graph embedding: A survey of approaches and applications. IEEE Trans. Knowl. Data Eng. 2017, 29, 2724–2743.

- Liu, H.; Jiang, Q.; Gui, Q.; Zhang, Q.; Wang, Z.; Wang, L.; Wang, L. A Review of Research Progress in Entity Relationship Extraction Techniques. Comput. Appl. Res. 2020, 37, 1–5.

- Chowdhary, K.R. Natural language processing. In Fundamentals of Artificial Intelligence; Springer: New Delhi, India, 2020; pp. 603–649.

- Li, D.; Zhang, Y.; Li, D.; Lin, D. A survey of entity relation extraction methods. J. Comput. Res. Dev. 2020, 57, 1424–1448.

- Feng, J.; Zhang, T.; Hang, T. An Overview of Overlapping Entity Relationship Extraction. J. Comput. Eng. Appl. 2022, 58, 1–11.

- Hong, E.; Zhang, W.; Xiao, S.; Chen, R.; Hu, Y.; Zhou, X.; Niu, P. Survey of entity relationship extraction based on deep learning. J. Softw. 2019, 30, 1793–1818.

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation classification via convolutional deep neural network. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; pp. 2335–2344.

- Socher, R.; Huval, B.; Manning, C.D.; Ng, A.Y. Semantic compositionality through recursive matrix-vector spaces. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Republic of Korea, 12–14 July 2012; pp. 1201–1211.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Zhou, P.; Shi, W.; Tian, J.; Qi, Z.; Li, B.; Hao, H.; Xu, B. Attention-based bidirectional long short-term memory networks for relation classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Short papers. Volume 2, pp. 207–212.

- Xu, Y.; Mou, L.; Li, G.; Chen, Y.; Peng, H.; Jin, Z. Classifying relations via long short term memory networks along shortest dependency paths. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1785–1794.

- Zhang, S.; Zheng, D.; Hu, X.; Yang, M. Bidirectional long short-term memory networks for relation classification. In Proceedings of the 29th Pacific Asia Conference on Language, Information and Computation, Shanghai, China, 30 October–1 November 2015; pp. 73–78.

- Luo, L.; Yang, Z.; Yang, P.; Zhang, Y.; Wang, L.; Lin, H.; Wang, J. An attention-based BiLSTM-CRF approach to document-level chemical named entity recognition. Bioinformatics 2018, 34, 1381–1388.

- Miwa, M.; Bansal, M. End-to-end relation extraction using LSTMs on sequences and tree structures. arXiv 2016, arXiv:1601.00770.

- Zheng, S.; Wang, F.; Bao, H.; Hao, Y.; Zhou, P.; Xu, B. Joint extraction of entities and relations based on a novel tagging scheme. arXiv 2017, arXiv:1706.05075.

- Yu, B.; Zhang, Z.; Shu, X.; Wang, Y.; Liu, T.; Wang, B.; Li, S. Joint extraction of entities and relations based on a novel decomposition strategy. arXiv 2019, arXiv:1909.04273.

- Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A novel cascade binary tagging framework for relational triple extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1–13.

- Wang, L.; Xiong, C.; Deng, N. A research on overlapping relationship extraction based on multi-objective dependency. In Proceedings of the 15th International Conference on Computer Science & Education (ICCSE), Delft, The Netherlands, 18–22 August 2020; pp. 618–622.

- Xiao, L.; Zang, Z.; Song, S. Research on relational Extraction Cascade labeling framework incorporating Self-attention. Comput. Eng. Appl. 2023, 59, 77.

- Wang, Y.; Yu, B.; Zhang, Y.; Liu, T.; Zhu, H.; Sun, L. TPLinker: Single-stage joint extraction of entities and relations through token pair linking. In Proceedings of the 28th International Conference on Computational Linguistics, COLING 2020, Barcelona, Spain, 8–13 December 2020; pp. 1572–1582.

- Zheng, H.; Wen, R.; Chen, X.; Yang, Y.; Zhang, Y.; Zhang, Z.; Zhang, N.; Qin, B.; Xu, M.; Zheng, Y. PRGC: Potential relation and global correspondence based joint relational triple extraction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 1–6 August 2021; pp. 6225–6235.

- Su, J.; Murtadha, A.; Pan, S.; Hou, J.; Sun, J.; Huang, W.; Wen, B.; Liu, Y. Global pointer: Novel efficient span-based approach for named entity recognition. arXiv 2022, arXiv:2208.03054.

- Su, J. GPLinker: Entity Relationship Joint Extraction Based on GlobalPointer. Available online: https://spaces.ac.cn/archives/8888 (accessed on 30 January 2022).

- Zhang, Y.; Liu, S.; Liu, Y.; Ren, L.; Xin, Y. A review of deep learning-based joint extraction of entity relationships. Electron. Lett. 2023, 51, 1093.

- Crawshaw, M. Multi-task learning with deep neural networks: A survey. arXiv 2020, arXiv:2009.09796.

- Zhao, F.; Jiang, Z.; Kang, Y.; Sun, C.; Liu, X. Adjacency List Oriented Relational Fact Extraction via Adaptive Multi-task Learning. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021.

- Chen, Z.; Badrinarayanan, V.; Lee, C.Y.; Rabinovich, A. GradNorm: Gradient normalization for adaptive loss balancing in deep multitask networks. In Proceedings of the International Conference on Machine Learning (PMLR), Stockholm, Sweden, 10–15 July 2018; pp. 794–803.

- Liu, S.; Johns, E.; Davison, A.J. End-to-end multi-task learning with attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1871–1880.

- Guo, M.; Haque, A.; Huang, D.A.; Yeung, S.; Fei-Fei, L. Dynamic task prioritization for multitask learning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 270–287.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7482–7491.

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z. Pre-training with whole word masking for Chinese BERT. IEEE ACM Trans. Audio Speech Lang. Process. 2021, 29, 3504–3514.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2019, arXiv:1810.04805.

- Buckman, J.; Roy, A.; Raffel, C.; Goodfellow, I. Thermometer encoding: One hot way to resist adversarial examples. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Church, K.W. Word2Vec. J. Nat. Lang Eng. 2017, 23, 155–162.

- Su, J.; Ahmed, M.; Lu, Y.; Pan, S.; Bo, W.; Liu, Y. RoFormer: Enhanced transformer with rotary position embedding. Neurocomputing 2024, 568, 127063.

- Su, J.; Zhu, M.; Murtadha, A.; Pan, S.; Wen, B.; Liu, Y. ZLPR: A novel loss for multi-label classification. arXiv 2022, arXiv:2208.02955.

- Guan, T.; Zan, H.; Zhou, X.; Xu, H.; Zhang, K. CMeIE: Construction and evaluation of Chinese medical information extraction dataset. In Natural Language Processing and Chinese Computing, Proceedings of the 9th CCF International Conference, NLPCC 2020, Zhengzhou, China, 14–18 October 2020; Proceedings, Part I 9; Springer International Publishing: Cham, Switzerland, 2020; pp. 270–282.

- Li, S.; He, W.; Shi, Y.; Jiang, W.; Liang, H.; Jiang, Y.; Zhang, Y.; Lyu, Y.; Zhu, Y. DuIE: A large-scale Chinese dataset for information extraction. In Natural Language Processing and Chinese Computing, Proceedings of the 8th CCF International Conference, NLPCC 2019, Dunhuang, China, 9–14 October 2019; Proceedings, Part II 8; Springer International Publishing: Cham, Switzerland, 2019; pp. 791–800.

- Mao, D.; Li, X.; Liu, J.; Zhang, D.; Yan, W. Chinese entity and relation extraction model based on parallel heterogeneous graph and sequential attention mechanism. Comput. Appl. 2024, 44, 2018–2025.

- Lu, X.; Tong, J.; Xia, S. Entity relationship extraction from Chinese electronic medical records based on feature augmentation and cascade binary tagging framework. Math. Biosci. Eng. 2024, 21, 1342–1355.

- Xiao, Y.; Chen, G.; Du, C.; Li, L.; Yuan, Y.; Zou, J.; Liu, J. A Study on Double-Headed Entities and Relations Prediction Framework for Joint Triple Extraction. Mathematics 2023, 11, 4583.

- Kong, L.; Liu, S. REACT: Relation Extraction Method Based on Entity Attention Network and Cascade Binary Tagging Framework. Appl. Sci. 2024, 14, 2981.

- Tang, H.; Zhu, D.; Tang, W.; Wang, S.; Wang, Y.; Wang, L. Research on joint model relation extraction method based on entity mapping. PLoS ONE 2024, 19, e0298974.

| Dataset | Train (Ratio%) | Test (Ratio%) | Validation (Ratio%) | Total | Relations |
|---|---|---|---|---|---|
| DuIE2.0 | 137,258 (68%) | 34,044 (17%) | 30,485 (15%) | 201,787 | 48 |
| CMeIE | 11,651 (65%) | 3584 (20%) | 2689 (15%) | 17,924 | 53 |

| Category | CMeIE Train (Ratio%) | CMeIE Test (Ratio%) | CMeIE Validation (Ratio%) | DuIE2.0 Train (Ratio%) | DuIE2.0 Test (Ratio%) | DuIE2.0 Validation (Ratio%) |
|---|---|---|---|---|---|---|
| Normal | 4375 (38%) | 1329 (37%) | 993 (37%) | 87,591 (64%) | 33,080 (97%) | 27,043 (89%) |
| SEO | 9678 (83%) | 2979 (83%) | 2257 (84%) | 56,912 (41%) | 704 (2%) | 3168 (10%) |
| EPO | 51 (0.4%) | 12 (0.3%) | 14 (0.5%) | 6891 (5%) | 126 (0.3%) | 478 (1.5%) |
| SOO | 1163 (9%) | 375 (10%) | 281 (10%) | 2064 (2%) | 273 (0.8%) | 301 (0.9%) |

| Type | Example | Triples |
|---|---|---|
| Normal | “Hepatic hydrothorax may cause breathing difficulties.” | (Hepatic hydrothorax, clinical manifestations, dyspnea) |
| SEO | “Septic arthritis often involves the hips, knees, and ankles, and symptoms can last for several days.” | (Septic arthritis, hip) (Septic arthritis, knee) (Septic arthritis, ankle) |
| EPO | “Obstructive shock is due to decreased cardiac output, not myocardial hypofunction. Causes include pneumothorax, cardiac tamponade, pulmonary embolism, and aortic constriction, which result in obstruction of extracardiac blood flow pathways.” | (Obstructive shock, pathophysiology, pulmonary embolism) (Obstructive shock, related (causes), pulmonary embolism) |
| SOO | “In gallbladder inflammation, an abdominal mass may be palpable in the distended gallbladder in 30 to 40 percent of cases.” | (Gallbladder inflammation, site of onset, gallbladder) |
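
The four categories in the table above can be told apart mechanically. The sketch below is one simplified way to do so, assuming triples are given as text tuples: EPO when two triples share the same entity pair, SEO when they share exactly one entity, and SOO when one entity's text contains the other's (a string-level stand-in for span overlap, which a real implementation would check on token offsets). The function name and the `"REL"` placeholder, used because the SEO row lists no relation label, are hypothetical.

```python
def overlap_types(triples):
    """Return the set of overlap labels for one sentence's triples.

    triples: list of (subject, relation, object) strings.
    """
    norm = [(s.lower(), r, o.lower()) for s, r, o in triples]
    labels = set()
    # SOO: subject and object overlap inside a single triple.
    for s, _, o in norm:
        if s in o or o in s:
            labels.add("SOO")
    # EPO / SEO: compare every pair of triples once.
    for i, (s1, _, o1) in enumerate(norm):
        for s2, _, o2 in norm[i + 1:]:
            if {s1, o1} == {s2, o2}:
                labels.add("EPO")      # same entity pair, several relations
            elif {s1, o1} & {s2, o2}:
                labels.add("SEO")      # exactly one entity shared
    return labels or {"Normal"}


normal = overlap_types(
    [("Hepatic hydrothorax", "clinical manifestations", "dyspnea")])
seo = overlap_types(
    [("Septic arthritis", "REL", "hip"), ("Septic arthritis", "REL", "knee")])
epo = overlap_types(
    [("Obstructive shock", "pathophysiology", "pulmonary embolism"),
     ("Obstructive shock", "related (causes)", "pulmonary embolism")])
soo = overlap_types(
    [("Gallbladder inflammation", "site of onset", "gallbladder")])
```

A sentence can receive more than one label under this scheme, since the checks are independent of one another.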

| Parameter | Value | Meaning |
|---|---|---|
| maxlen | 300 | Truncation length of the text |
| batch_size | 16 | Batch size of training data |
| epochs | 100 | Number of rounds of training iterations |
| learning rate | 2 × 10⁻⁵ | Initial learning rate |
| pool_kernel_size | 2 | Pooling layer size |
| gp_threshold | 0 | Entity–relationship extraction threshold |
| relation_threshold | 0.9 | Relationship filtering threshold |
| relation_adding | 0.3 | Relationship filtering threshold |
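
One plausible reading of how `relation_threshold` and `gp_threshold` interact is sketched below: relations whose sentence-level probability falls below `relation_threshold` are discarded first, and pointer scores are then thresholded at `gp_threshold` only for the surviving relations. This ordering, the function names, and the toy scores are assumptions for illustration; the role of `relation_adding` is not described in the table and is not modeled here.

```python
def filter_relations(relation_probs, relation_threshold=0.9):
    """Return indices of relations whose probability passes the filter."""
    return [idx for idx, prob in enumerate(relation_probs)
            if prob >= relation_threshold]


def extract_pairs(score_matrix, gp_threshold=0.0):
    """Return (row, col) positions whose pointer score exceeds the threshold."""
    return [(i, j)
            for i, row in enumerate(score_matrix)
            for j, score in enumerate(row)
            if score > gp_threshold]


# Hypothetical sentence-level probabilities for four relation types.
relation_probs = [0.97, 0.12, 0.91, 0.45]
kept = filter_relations(relation_probs)

# Hypothetical per-relation pointer scores over a 3-token sentence.
scores = {
    0: [[-2.0, 1.3, -0.5], [-1.0, -3.0, -0.2], [-4.0, -1.1, -2.2]],
    1: [[0.4, -1.0, -1.0], [-1.0, -1.0, -1.0], [-1.0, -1.0, -1.0]],
    2: [[-1.0, -1.0, 0.8], [-1.0, -1.0, -1.0], [-1.0, -1.0, -1.0]],
    3: [[-1.0, -1.0, -1.0], [-1.0, -1.0, -1.0], [-1.0, -1.0, -1.0]],
}

# Only the kept relations are decoded; the positive score under relation 1
# is never examined because that relation was filtered out.
candidates = {r: extract_pairs(scores[r]) for r in kept}
```

In this toy case only two of the four relation matrices are decoded, which illustrates how filtering could cut down the number of redundant relations evaluated.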

| Parameter | NovelTagging | CasRel | TPLinker_plus | PRGC | GPLinker |
|---|---|---|---|---|---|
| maxlen | 300 | 300 | 300 | 300 | 300 |
| batch_size | 16 | 16 | 8 | 8 | 16 |
| epochs | 100 | 100 | 100 | 100 | 100 |
| learning rate | 2 × 10⁻⁵ | 2 × 10⁻⁵ | 2 × 10⁻⁵ | 2 × 10⁻⁵ | 2 × 10⁻⁵ |

| Model | CMeIE P (%) | CMeIE R (%) | CMeIE F1 (%) | DuIE2.0 P (%) | DuIE2.0 R (%) | DuIE2.0 F1 (%) |
|---|---|---|---|---|---|---|
| NovelTagging | 51.4 | 17.1 | 25.6 | 60.6 | 45.3 | 55.1 |
| PRGC | 40.8 | 46.4 | 43.4 | 48.8 | 81.4 | 61.3 |
| CasRel | 50.5 | 48.5 | 49.5 | 62.0 | 80.4 | 70.6 |
| TPLinker_plus | 50.7 | 49.8 | 50.2 | 64.3 | 74.4 | 69.0 |
| GPLinker | 51.2 | 47.5 | 49.6 | 61.7 | 81.0 | 70.4 |
| GPRL (our model) | 54.7 | 50.6 | 51.7 | 64.1 | 81.6 | 71.8 |
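
For readers unfamiliar with the P, R, and F1 columns, the sketch below shows the usual micro-averaged, triple-level computation, under the assumption that a predicted triple counts as correct only when subject, relation, and object all match a gold triple exactly. The text does not spell out the matching rule, so this is an assumption; the function name and the toy triples are hypothetical.

```python
def micro_prf(gold, pred):
    """Micro-averaged precision, recall, and F1 over sentences.

    gold, pred: lists of sets of (subject, relation, object) triples,
    aligned by sentence.
    """
    true_pos = sum(len(g & p) for g, p in zip(gold, pred))
    n_pred = sum(len(p) for p in pred)
    n_gold = sum(len(g) for g in gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Two toy sentences: one wrong prediction and one missed gold triple.
gold = [{("a", "r1", "b"), ("a", "r2", "c")}, {("d", "r1", "e")}]
pred = [{("a", "r1", "b"), ("a", "r1", "x")}, {("d", "r1", "e")}]
precision, recall, f1 = micro_prf(gold, pred)
```

Counts are pooled over all sentences before dividing, so long sentences with many triples weigh more than short ones.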

| Unpredicted Correct Triple Pattern | Example |
|---|---|
| (Diseases, Related (Symptoms), Diseases) | (submucous cleft palate, related (symptom), airway obstruction) |
| (Symptoms, Synonyms, Symptoms) | (gastritis (Chinese), Synonym, gastritis (English)) |

| Model | CMeIE P (%) | CMeIE R (%) | CMeIE F1 (%) | DuIE2.0 P (%) | DuIE2.0 R (%) | DuIE2.0 F1 (%) |
|---|---|---|---|---|---|---|
| HNNERJE [42] | 48.49 | 46.37 | 47.40 | / | / | / |
| FA-CBT [43] | 54.51 | 48.63 | 51.40 | / | / | / |
| DEPR [44] | 47.51 | 46.11 | 46.80 | 71.06 | 65.35 | 68.09 |
| REACT [45] | / | / | / | 68.5 | 66.0 | 67.2 |
| CasRelBLCF [46] | / | / | / | 74.0 | 68.6 | 71.2 |
| GPRL (our model) | 54.7 | 50.6 | 51.7 | 64.1 | 81.6 | 71.8 |

| Model | CMeIE P (%) | CMeIE R (%) | CMeIE F1 (%) | DuIE2.0 P (%) | DuIE2.0 R (%) | DuIE2.0 F1 (%) |
|---|---|---|---|---|---|---|
| GPLinker | 51.2 | 47.5 | 49.6 | 61.7 | 81.0 | 70.4 |
| GPLinker + Relation filter | 53.5 | 48.3 | 50.8 | 63.6 | 81.3 | 71.5 |
| GPLinker + Relation filter + Loss optimization | 54.7 | 50.6 | 51.7 | 64.1 | 81.6 | 71.8 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, B.; Tao, J.; Chen, W.; Zhang, Y.; Chen, M.; He, L.; Tang, D. Integration of Relation Filtering and Multi-Task Learning in GlobalPointer for Entity and Relation Extraction. Appl. Sci. 2024, 14, 6832. https://doi.org/10.3390/app14156832
