Cortical and Subjective Measures of Individual Noise Tolerance Predict Hearing Outcomes with Varying Noise Reduction Strength

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Task Design and Procedures

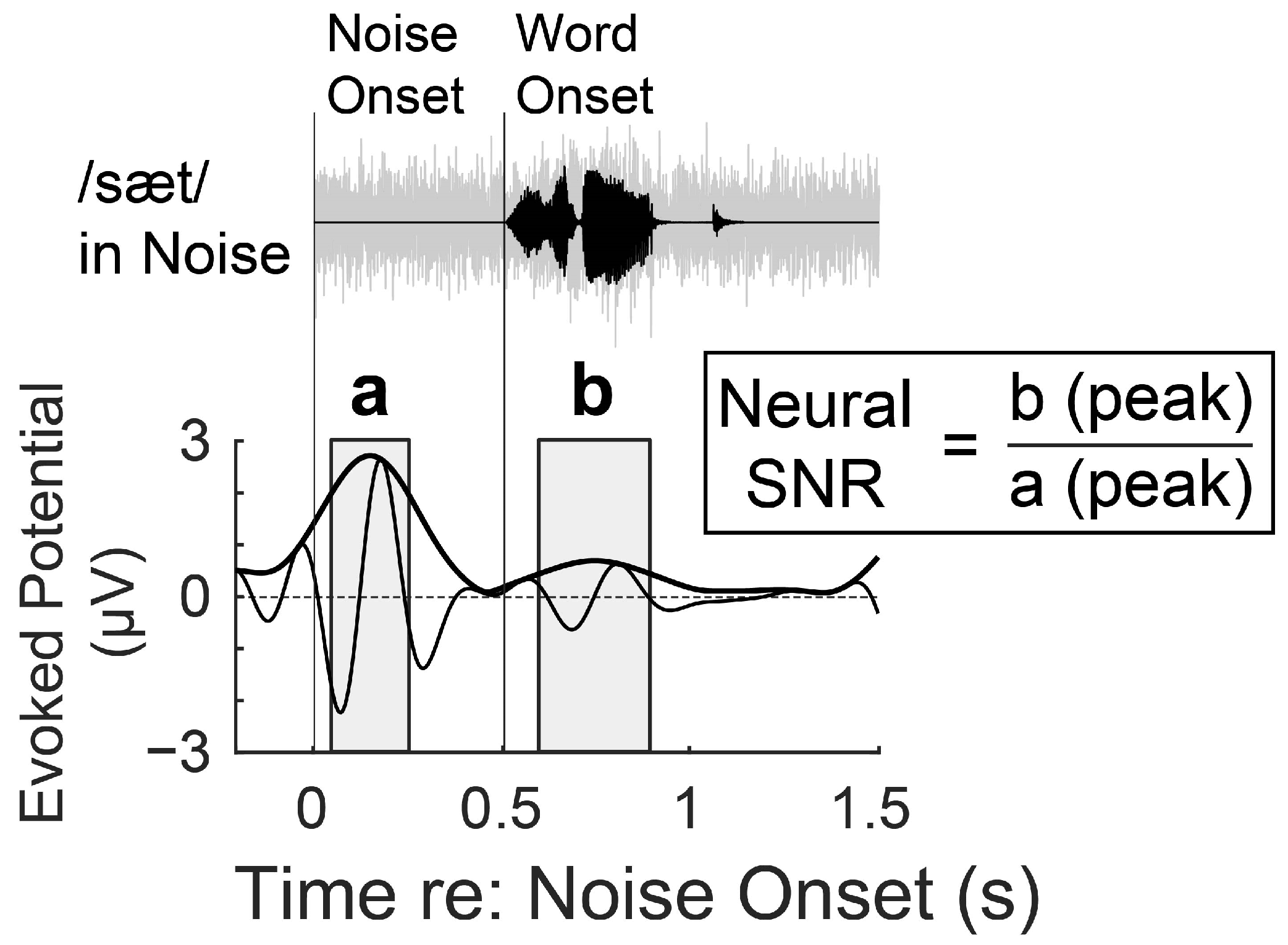

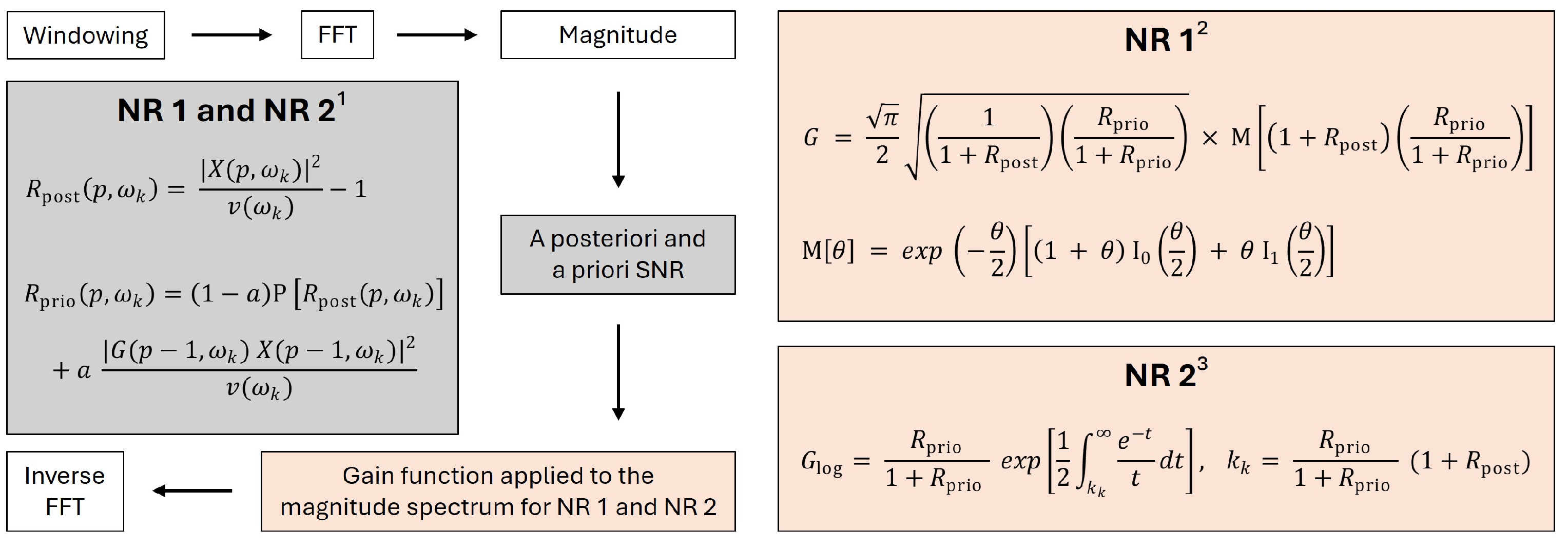

2.3. Quantification of NR Effects on Stimuli

2.4. Data Acquisition and Preprocessing

2.5. Statistical Analysis

3. Results

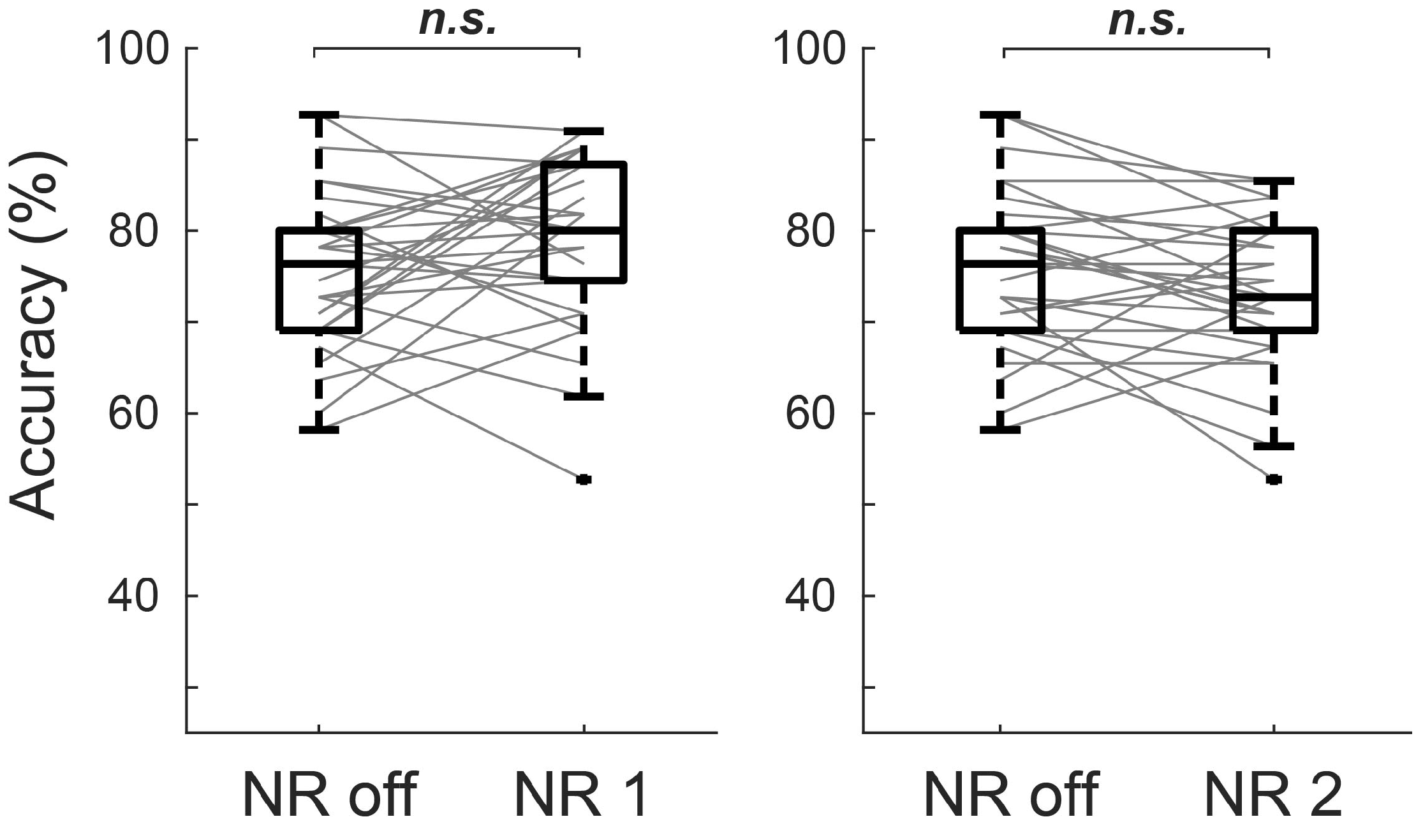

3.1. Behavioral Performance

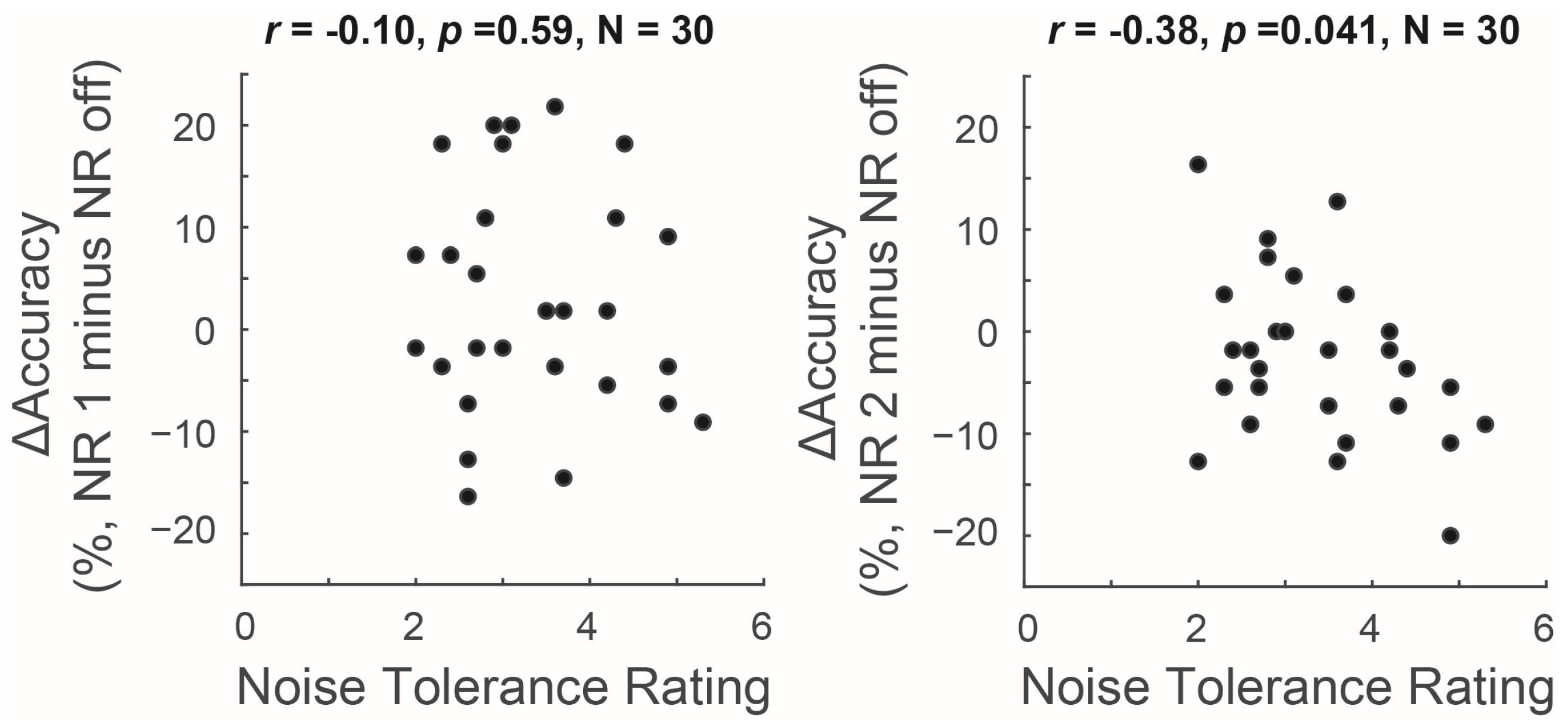

3.2. Relationship between Measures of Noise Tolerance and NR Outcomes

3.3. Stepwise Regression: Modeling the Influence of Cortical and Subjective Measures of Noise Tolerance on NR Outcomes

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Model Fit Measures

| Model | R | R² | Adjusted R² | F | df1 | df2 | p |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 0.522 | 0.273 | 0.247 | 10.51 | 1 | 28 | 0.003 |
| 2 | 0.576 | 0.331 | 0.282 | 6.69 | 2 | 27 | 0.004 |

Model Comparisons

| Model | Model | ΔR² | F | df1 | df2 | p |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | 2 | 0.059 | 2.37 | 1 | 27 | 0.136 |

Model Coefficients

| Model | Predictor | Unstandardized B | SE | Standardized Beta | t | p | Collinearity Tolerance | Collinearity VIF |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | (Constant) | −0.109 | 0.025 | | −4.32 | <0.001 | | |
| 1 | Neural SNR | −0.008 | 0.003 | −0.522 | −3.24 | 0.003 | 1 | 1 |
| 2 | (Constant) | −0.027 | 0.059 | | −0.45 | 0.656 | | |
| 2 | Neural SNR | −0.007 | 0.003 | −0.454 | −2.77 | 0.01 | 0.926 | 1.08 |
| 2 | Tolerance Rating | −0.021 | 0.014 | −0.252 | −1.54 | 0.136 | 0.926 | 1.08 |
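The fit statistics in the table above can be cross-checked from the reported R² values alone. The following is a minimal sketch, not the authors' analysis code: it assumes a sample size of n = 30 (inferred from df1 + df2 + 1 in the table), uses the rounded R² values as inputs, and applies the standard textbook formulas for adjusted R², the overall F statistic, the F statistic for the change in R², and the variance inflation factor. All function and variable names are illustrative.

```python
# Sketch: recompute regression fit statistics from the rounded table values.
# Assumption: n = 30 observations, inferred from the reported df (df1 + df2 + 1).


def adjusted_r2(r2, n, k):
    """Adjusted R^2 for a model with k predictors fitted to n observations."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2, n, k):
    """Overall F statistic with (k, n - k - 1) degrees of freedom."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


def f_change(r2_reduced, r2_full, n, k_full, k_added):
    """F statistic for the R^2 gained by adding k_added predictors."""
    gain = (r2_full - r2_reduced) / k_added
    residual = (1.0 - r2_full) / (n - k_full - 1)
    return gain / residual


def vif(tolerance):
    """Variance inflation factor is the reciprocal of tolerance."""
    return 1.0 / tolerance


n = 30                       # assumed sample size (see note above)
r2_m1, r2_m2 = 0.273, 0.331  # R^2 for Model 1 and Model 2, as tabulated

print("Adjusted R2, Model 1:", round(adjusted_r2(r2_m1, n, 1), 3))
print("Adjusted R2, Model 2:", round(adjusted_r2(r2_m2, n, 2), 3))
print("Overall F, Model 1:  ", round(overall_f(r2_m1, n, 1), 2))
print("Overall F, Model 2:  ", round(overall_f(r2_m2, n, 2), 2))
print("F change, 1 -> 2:    ", round(f_change(r2_m1, r2_m2, n, 2, 1), 2))
print("VIF at tol = 0.926:  ", round(vif(0.926), 2))
```

Because the inputs are already rounded to three decimals, the recomputed values should match the tabulated ones only to within rounding. The comparison row is the one that matters for interpretation: adding Tolerance Rating to Neural SNR raises R² by about 0.06, and that increment is not statistically significant in this sample (p = 0.136).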

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, S.; Arzac, S.; Dokic, N.; Donnelly, J.; Genser, N.; Nortwich, K.; Rooney, A. Cortical and Subjective Measures of Individual Noise Tolerance Predict Hearing Outcomes with Varying Noise Reduction Strength. Appl. Sci. 2024, 14, 6892. https://doi.org/10.3390/app14166892
