A Systematic Literature Review of Modalities, Trends, and Limitations in Emotion Recognition, Affective Computing, and Sentiment Analysis

Abstract

1. Introduction

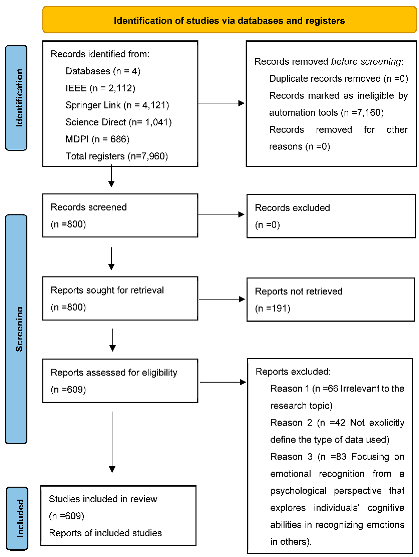

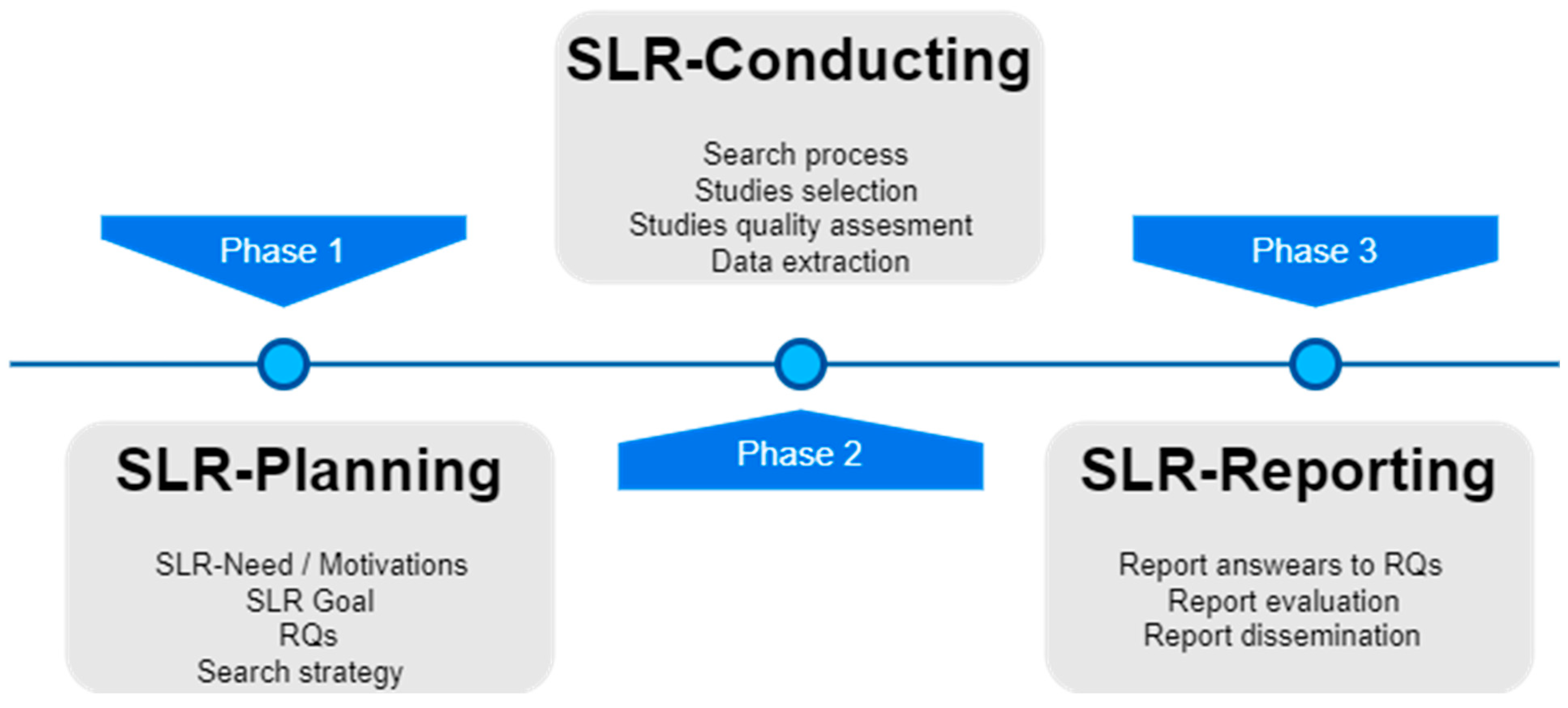

2. Methodology

2.1. Research Questions

2.2. Search Process

2.2.1. Search Terms

2.2.2. Inclusion and Exclusion Criteria

2.2.3. Quality Assessment

2.2.4. Data Extraction

3. Results

3.1. Overview

3.2. Unimodal Data Approaches

3.2.1. Unimodal Physical Approaches

3.2.2. Unimodal Speech Data Approaches

- Several articles mention the use of transfer learning for speech emotion recognition. This technique involves training models on one dataset and applying them to another. This can improve the efficiency of emotion recognition across different datasets.

- Some articles discuss multitask learning models, which are designed to simultaneously learn multiple related tasks. In the context of speech emotion recognition, this approach may help capture commonalities and differences across different datasets or emotions.

- Data augmentation techniques, which generate additional training data from existing data, are mentioned in multiple articles as a way to improve model performance and generalization.

- Attention mechanisms are a common trend for improving emotion recognition. They allow a model to focus on the features or segments of the input data that are most relevant for recognizing emotions, as in multi-level attention-based approaches.

- Many articles discuss the use of deep learning models, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and variants such as “Two-Stage Fuzzy Fusion Based-Convolution Neural Network”, “Deep Convolutional LSTM”, and “Attention-Oriented Parallel CNN Encoders”.

- While deep learning is prevalent, some articles explore novel feature engineering methods, such as modulation spectral features and wavelet packet information gain entropy, to enhance emotion recognition.

- From the list of articles on unimodal emotion recognition through speech, 7.14% address the challenge of recognizing emotions across different datasets or corpora. This is an important trend for making emotion recognition models more versatile.

- A few articles focus on making emotion recognition models more interpretable and explainable, which is crucial for real-world applications and understanding how the model makes its predictions.

- Ensemble methods, which combine multiple models to make predictions, are mentioned in several articles as a way to improve the performance of emotion recognition systems.

- Some articles discuss emotion recognition in specific contexts, such as call/contact centers, school violence detection, depression detection, analysis of podcast recordings, noisy environment analysis, in-the-wild sentiment analysis, and speech emotion segmentation of vowel-like and non-vowel-like regions. This indicates a trend toward applying emotion recognition in diverse applications.
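
As a rough illustration of the data augmentation trend noted in this list, the sketch below derives two label-preserving variants of a waveform: one with additive Gaussian noise at a target signal-to-noise ratio and one with a circular time shift. The function names (`add_noise`, `time_shift`, `augment`), the 20 dB default, and the synthetic sine "utterance" are assumptions made for this example and are not taken from any reviewed article.

```python
import math
import random


def add_noise(signal, snr_db, rng):
    """Return a copy of `signal` with Gaussian noise at roughly `snr_db` dB SNR."""
    power = sum(x * x for x in signal) / len(signal)
    noise_power = power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]


def time_shift(signal, shift):
    """Circularly shift `signal` by `shift` samples (positive values delay it)."""
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift] if shift else list(signal)


def augment(signal, label, rng, snr_db=20.0, max_shift=100):
    """Yield the original example plus two variants that keep the same label."""
    yield signal, label
    yield add_noise(signal, snr_db, rng), label
    yield time_shift(signal, rng.randint(1, max_shift)), label


# Hypothetical stand-in for a speech waveform: a short sine tone.
rng = random.Random(0)
tone = [math.sin(2 * math.pi * 5 * t / 400) for t in range(400)]
augmented = list(augment(tone, "happy", rng))
print(len(augmented), "training examples from one recording")
```

Both transformations leave the emotion label unchanged, which is the property that lets augmented copies be added to the training set. Whether a given transformation is label-preserving for a particular corpus is an empirical question.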

3.2.3. Unimodal Text Data Approaches

3.2.4. Unimodal Physiological Data Approaches

- EEG signals continue to dominate the field because they provide direct information about brain activity and fluctuations in brain waves, which can reflect emotional states with high accuracy. EEG is particularly suitable for detecting subtle changes in emotional state and identifying specific brain patterns related to emotions. However, it requires placing electrodes on the scalp, which can be uncomfortable and limit mobility, and it may be more susceptible to artifacts and noise than other physiological signals. During 2022 and 2023, the number of studies on emotion recognition with these signals grew considerably. The identified trends include using EEG signals to enhance human–computer interaction, especially in applications that require an intuitive understanding of human emotions; recognizing emotions in patients with disorders of consciousness, during movie viewing, in virtual environments, and in driving scenarios; and using EEG to aid in the detection and monitoring of mental health issues such as depression. There is also a trend toward personalization methods that adapt emotion recognition to individual user characteristics, suggesting a direction towards more individualized and user-specific systems. In terms of analysis models, most works recognize emotions from EEG signals using a variety of deep learning approaches and signal processing techniques. CNNs, RNNs, and combinations of them are widely used, but there are other trends, such as the following:

- Attention and self-attention mechanisms: These suggest that researchers are paying attention to the relevance of different parts of EEG signals for emotion recognition.

- Generative adversarial networks (GANs): Used for generating synthetic EEG data in order to improve the robustness and generalization of the models.

- Semi-supervised learning and domain transfer: Allow emotion recognition with limited datasets or datasets that are applicable to different domains, suggesting a concern for scalability and generalization of models.

- Interpretability and explainability: There is a growing interest in models that are interpretable and explainable, suggesting a concern for understanding how models make decisions and facilitating user trust in them.

- Utilization of transformers and capsule networks: Newer neural network architectures such as transformers and capsule networks are being explored for emotion recognition, indicating an interest in enhancing the modeling and representation capabilities of EEG signals.

- Studies using a unimodal physiological approach with signals other than EEG, such as ECG, EDA, HR, and PPG, are still scarce, although these signals can provide information about the cardiovascular system and the body’s autonomic response to emotions. Their limitations are that they may not be as specific or sensitive in detecting subtle or changing emotions; that noise and artifacts, such as motion, can degrade signal quality in practical situations; and that the signals can be influenced by non-emotional factors, such as physical exercise and fatigue. Various studies explore ECG and PPG signals for emotion recognition and stress classification. Techniques such as CNNs, LSTMs, attention mechanisms, self-supervised learning, and data augmentation are employed to analyze these signals and extract meaningful features for emotion recognition tasks. Bayesian deep learning frameworks are utilized for probabilistic modeling and uncertainty estimation in emotion prediction from HB data. These approaches aim to enhance human–computer interaction, improve mental health monitoring, and develop personalized systems for emotion recognition based on individual user characteristics.
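
To make the attention trend in this list concrete, the following minimal sketch pools a sequence of per-segment feature vectors into one vector, weighting each segment by a softmax over scaled dot-product scores. The segment features and the `query` vector are hypothetical values chosen for illustration; in a trained model the query (or a full self-attention layer) would be learned, and no reviewed study is claimed to use exactly this form.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(segments, query):
    """Return (pooled_vector, weights) using scaled dot-product attention."""
    dim = len(query)
    scores = [
        sum(f * q for f, q in zip(seg, query)) / math.sqrt(dim)
        for seg in segments
    ]
    weights = softmax(scores)
    pooled = [
        sum(w * seg[i] for w, seg in zip(weights, segments))
        for i in range(dim)
    ]
    return pooled, weights


# Hypothetical features for three consecutive signal segments (2 features each).
segments = [[0.1, 0.0], [2.0, 1.0], [0.2, 0.1]]
query = [1.0, 1.0]  # assumed fixed here; learned in practice
pooled, weights = attention_pool(segments, query)
print("segment weights:", [round(w, 3) for w in weights])
```

The weights sum to one, so the pooled vector is a convex combination of the segments, and inspecting the weights gives a simple view of which segments the model relied on.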

3.3. Multi-Physical Data Approaches

- Most studies employ CNNs and RNNs, while others utilize variations of general neural networks, such as spiking neural networks (SNN) and tree-based neural networks. SNNs represent and transmit information through discrete bursts of neuronal activity, known as “spikes” or “pulses”, unlike conventional neural networks, which process information in continuous values. Additionally, several studies leverage advanced analysis models such as the stacked ensemble model and multimodal fusion models, which focus on integrating diverse sources of information to enhance decision-making. Transfer learning models and hybrid attention networks aim to capitalize on knowledge from related tasks or domains to improve performance in a target task. Attention-based neural networks prioritize capturing relevant information and patterns within the data. Semi-supervised and contrastive learning models offer alternative learning paradigms by incorporating both labeled and unlabeled data.

- The studies address diverse applications, including sarcasm, sentiment, and emotion recognition in conversations, financial distress prediction, performance evaluation in job interviews, emotion-based location recommendation systems, user experience (UX) analysis, emotion detection in video games, and in educational settings. This suggests that emotion recognition through multi-physical data analysis has a wide spectrum of applications in everyday life.

- Various audio and video signal processing techniques are employed, including pitch analysis, facial feature detection, cross-attention, and representational learning.
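
The contrast drawn in this list between spiking and conventional networks can be sketched with a single leaky integrate-and-fire neuron, one common simplified spiking model. The neuron accumulates input into a membrane potential and emits a discrete spike only when a threshold is crossed. The `threshold` and `leak` values and the constant input are assumptions for illustration, not parameters reported by the reviewed studies.

```python
def lif_spikes(currents, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron: map input currents to a 0/1 spike train."""
    potential = 0.0
    spikes = []
    for current in currents:
        # The potential decays by `leak` each step, then integrates the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


# A constant input of 0.3 per step makes the neuron fire on every fourth step.
print(lif_spikes([0.3] * 10))  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The output is a sequence of discrete events, not a continuous activation, so information is carried by when and how often the neuron fires.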

3.4. Multi-Physiological Data Approaches

- The fusion of physiological signals, such as EEG, ECG, PPG, GSR, EMG, BVP, EOG, respiration, temperature, and movement signals, is a predominant trend in these studies. The combination of multiple physiological signals allows for a richer representation of emotions.

- Most studies apply deep learning models, such as CNNs, RNNs, and autoencoder neural networks (AE), for the processing and analysis of these signals. Supervised and unsupervised learning approaches are also used.

- These studies focus on a variety of applications, such as emotion recognition in healthcare environments, brain–computer interfaces for music, emotion detection in interactive virtual environments, and stress assessment in mobility environments for visually impaired people. This indicates that emotion recognition based on physiological signals has applications in healthcare, technology, and beyond.

- Some studies focus on personalized emotion recognition, suggesting tailoring of models for each individual. This may be relevant for personalized health and wellness applications. Others focus on interactive applications and virtual environments useful for entertainment and virtual therapy.

- It is important to mention that the studies within this classification are quite limited in comparison to the previously described modalities. Although it appears that they are using similar physiological signals, the databases differ in terms of their approaches and generation methods. Therefore, there is an opportunity to establish a protocol for generating these databases, allowing for meaningful comparisons among studies.
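
One simple way to combine several physiological signals, as described in this list, is feature-level (early) fusion: each modality's features are standardized and then concatenated per sample before classification. The sketch below assumes features have already been extracted; the modality names and numeric values are hypothetical, and the reviewed studies use a range of fusion strategies beyond this one.

```python
from statistics import mean, pstdev


def standardize(rows):
    """Z-score each feature column across samples; constant columns become 0."""
    columns = list(zip(*rows))
    stats = [(mean(col), pstdev(col)) for col in columns]
    return [
        [(x - m) / s if s > 0 else 0.0 for x, (m, s) in zip(row, stats)]
        for row in rows
    ]


def early_fusion(modalities):
    """Concatenate standardized per-modality features, sample by sample."""
    normed = [standardize(rows) for rows in modalities.values()]
    n_samples = len(normed[0])
    if any(len(rows) != n_samples for rows in normed):
        raise ValueError("all modalities need the same number of samples")
    return [[x for rows in normed for x in rows[i]] for i in range(n_samples)]


# Hypothetical pre-extracted features for three samples.
features = {
    "eeg": [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]],  # two features per sample
    "gsr": [[0.5], [0.7], [0.9]],                    # one feature per sample
}
fused = early_fusion(features)
print(len(fused), "samples x", len(fused[0]), "fused features")
```

Standardizing before concatenation keeps a modality with large raw values from dominating the fused vector, which matters when signals are recorded in different units.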

3.5. Multi-Physical–Physiological Data Approaches

- Studies tend to combine multiple types of signals, such as EEG, facial expressions, voice signals, GSR, and other physiological data. Combining signals aims to take advantage of the complementarity of different modalities to improve accuracy in emotion detection.

- Machine learning models, in particular CNNs, are widely used in signal fusion for emotion recognition. CNN models can effectively process data from multiple modalities.

- Applications are also being explored in the health and wellness domain, such as emotion detection for emotional health analysis of people in smart environments.

- The use of standardized and widely accepted databases is important for comparing results between different studies; however, these are still limited.

- The trend towards non-intrusive sensors and wireless technology enables data collection in more natural and less intrusive environments, which facilitates the practical application of these systems in everyday environments.

4. Discussion

- Facial expression analysis approaches are currently being applied across various domains, including naturalistic settings (“in the wild”), on-road driver monitoring, virtual reality environments, smart homes, IoT and edge devices, and assistive robots. There is also a focus on mental health assessment, including autism, depression, and schizophrenia, and distinguishing between genuine and unfelt facial expressions of emotion. Efforts are being made to improve performance in processing faces acquired at a distance despite the challenges posed by low-quality images. Furthermore, there is an emerging interest in utilizing facial expression analysis in human–computer interaction (HCI), learning environments, and multicultural contexts.

- The recognition of emotions through speech and text has experienced tremendous growth, largely due to the abundance of information facilitated by advancements in technology and social media. This has enabled individuals to express their opinions and sentiments through various media, including podcast recordings, live videos, and readily available data sources such as social media platforms like Twitter, Facebook, Instagram, and blogs. Additionally, researchers have utilized unconventional sources like stock market data and tourism-related reviews. The variety and richness of these data sources indicate a wide range of segments where such emotion recognition analyses can be applied effectively.

- EEG signals continue to be a prominent modality for emotion recognition due to their highly accurate insights into emotional states. Between 2022 and 2023, studies in this field experienced exponential growth. The identified trends include utilizing EEG for enhancing human–computer interaction, recognizing emotions in various contexts such as patients with consciousness disorders, movie viewing, virtual environments, and driving scenarios. EEG is also being used for detecting and monitoring mental health issues. There is also a growing focus on personalization, leading towards more individualized and user-specific emotion recognition systems. Other physiological signals, such as ECG, EDA, and HR, are also gaining attention, albeit at a slower pace.

- In the realm of multi-physical, multi-physiological, and multi-physical–physiological approaches, it is the first that appears to be laying the groundwork, as evidenced by the abundance of studies in this area. The latter two approaches, incorporating fusions with physiological signals, are still relatively scarce but seem to be paving the way for future researchers to contribute to their growth. Multimodal approaches, which integrate both physical and physiological signals, are finding diverse applications in emotion recognition. These range from healthcare systems, individual and group mood research, personality recognition, pain intensity recognition, anxiety detection, work stress detection, stress classification and security monitoring in public spaces, to vehicle security monitoring, movie audience emotion recognition, applications for autism spectrum disorder detection, music interfacing, and virtual environments.

- Bidirectional encoder representations from transformers (BERT): Used in sentiment analysis and emotion recognition from text, BERT models can understand the context of words in sentences by pre-training on a large text corpus and then fine-tuning for specific tasks like sentiment analysis.

- CNNs: These are commonly applied in facial emotion recognition, emotion recognition from physiological signals, and even in speech emotion recognition by analyzing spectrograms.

- RNNs and variants (LSTM, GRU): These models are suited for sequential data like speech and text. LSTMs and GRUs are particularly effective in speech emotion recognition and sentiment analysis of time-series data.

- Graph convolutional networks (GCNs): Applied in emotion recognition from EEG signals and conversation-based emotion recognition, these can model relational data and capture the complex dependencies in graph-structured data, like brain connectivity patterns or conversational contexts.

- Attention mechanisms and transformers: Enhancing the ability of models to focus on relevant parts of the data, attention mechanisms are integral to models like transformers for tasks that require understanding the context, such as sentiment analysis in long documents or emotion recognition in conversations.

- Ensemble models: Combining predictions from multiple models to improve accuracy, ensemble methods are used in multimodal emotion recognition, where inputs from different modalities (e.g., audio, text, and video) are integrated to make more accurate predictions.

- Autoencoders and generative adversarial networks (GANs): For tasks like data augmentation in emotion recognition from EEG or for generating synthetic data to improve model robustness, these unsupervised learning models can learn compact representations of data or generate new data samples, respectively.

- Multimodal fusion models: In applications requiring the integration of multiple data types (e.g., speech, text, and video for emotion recognition), fusion models combine features from different modalities to capture more comprehensive information for prediction tasks.

- Transfer learning: Utilizing pre-trained models on large datasets and fine-tuning them for specific affective computing tasks, transfer learning is particularly useful in scenarios with limited labeled data, such as sentiment analysis in niche domains.

- Spatio-temporal models: For tasks that involve data with both spatial and temporal dimensions (like video-based emotion recognition or physiological signal analysis), models that capture spatio-temporal dynamics are employed, combining approaches like CNNs for spatial features and RNNs/LSTMs for temporal features.
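
The ensemble and decision-level fusion ideas in this list can be sketched as soft voting: each model (for example, one per modality) outputs class probabilities, and the ensemble predicts the class with the highest weighted average. The class labels, probabilities, and weights below are invented for illustration and do not come from any reviewed system.

```python
def soft_vote(prob_sets, weights=None):
    """Weighted average of per-model class probabilities.

    Returns (fused_probabilities, index_of_most_likely_class).
    """
    if weights is None:
        weights = [1.0] * len(prob_sets)
    total = sum(weights)
    n_classes = len(prob_sets[0])
    fused = [
        sum(w * probs[c] for w, probs in zip(weights, prob_sets)) / total
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=fused.__getitem__)
    return fused, best


labels = ["happy", "neutral", "sad"]  # hypothetical label set
audio = [0.6, 0.3, 0.1]               # hypothetical per-modality model outputs
text = [0.2, 0.5, 0.3]
video = [0.3, 0.4, 0.3]

fused, best = soft_vote([audio, text, video])
print(labels[best])  # neutral

# Trusting the audio model more changes the decision.
_, best_weighted = soft_vote([audio, text, video], weights=[3.0, 1.0, 1.0])
print(labels[best_weighted])  # happy
```

The second call shows the main design choice in such schemes: the weights encode how much each modality is trusted, and they would normally be tuned on validation data.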

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Zhou, T.H.; Liang, W.; Liu, H.; Wang, L.; Ryu, K.H.; Nam, K.W. EEG Emotion Recognition Applied to the Effect Analysis of Music on Emotion Changes in Psychological Healthcare. Int. J. Environ. Res. Public Health 2022, 20, 378.

- Hajek, P.; Munk, M. Speech Emotion Recognition and Text Sentiment Analysis for Financial Distress Prediction. Neural Comput. Appl. 2023, 35, 21463–21477.

- Naim, I.; Tanveer, M.d.I.; Gildea, D.; Hoque, M.E. Automated Analysis and Prediction of Job Interview Performance. IEEE Trans. Affect. Comput. 2018, 9, 191–204.

- Ayata, D.; Yaslan, Y.; Kamasak, M.E. Emotion Recognition from Multimodal Physiological Signals for Emotion Aware Healthcare Systems. J. Med. Biol. Eng. 2020, 40, 149–157.

- Maithri, M.; Raghavendra, U.; Gudigar, A.; Samanth, J.; Barua, D.P.; Murugappan, M.; Chakole, Y.; Acharya, U.R. Automated Emotion Recognition: Current Trends and Future Perspectives. Comput. Methods Programs Biomed. 2022, 215, 106646.

- Du, Z.; Wu, S.; Huang, D.; Li, W.; Wang, Y. Spatio-Temporal Encoder-Decoder Fully Convolutional Network for Video-Based Dimensional Emotion Recognition. IEEE Trans. Affect. Comput. 2021, 12, 565–578.

- Montero Quispe, K.G.; Utyiama, D.M.S.; dos Santos, E.M.; Oliveira, H.A.B.F.; Souto, E.J.P. Applying Self-Supervised Representation Learning for Emotion Recognition Using Physiological Signals. Sensors 2022, 22, 9102.

- Zhang, Y.; Wang, J.; Liu, Y.; Rong, L.; Zheng, Q.; Song, D.; Tiwari, P.; Qin, J. A Multitask Learning Model for Multimodal Sarcasm, Sentiment and Emotion Recognition in Conversations. Inf. Fusion 2023, 93, 282–301.

- Leong, S.C.; Tang, Y.M.; Lai, C.H.; Lee, C.K.M. Facial Expression and Body Gesture Emotion Recognition: A Systematic Review on the Use of Visual Data in Affective Computing. Comput. Sci. Rev. 2023, 48, 100545.

- Aranha, R.V.; Correa, C.G.; Nunes, F.L.S. Adapting Software with Affective Computing: A Systematic Review. IEEE Trans. Affect. Comput. 2021, 12, 883–899.

- Kratzwald, B.; Ilić, S.; Kraus, M.; Feuerriegel, S.; Prendinger, H. Deep Learning for Affective Computing: Text-Based Emotion Recognition in Decision Support. Decis. Support. Syst. 2018, 115, 24–35.

- Ab. Aziz, N.A.; K., T.; Ismail, S.N.M.S.; Hasnul, M.A.; Ab. Aziz, K.; Ibrahim, S.Z.; Abd. Aziz, A.; Raja, J.E. Asian Affective and Emotional State (A2ES) Dataset of ECG and PPG for Affective Computing Research. Algorithms 2023, 16, 130.

- Jung, T.-P.; Sejnowski, T.J. Utilizing Deep Learning Towards Multi-Modal Bio-Sensing and Vision-Based Affective Computing. IEEE Trans. Affect. Comput. 2022, 13, 96–107.

- Shah, S.; Ghomeshi, H.; Vakaj, E.; Cooper, E.; Mohammad, R. An Ensemble-Learning-Based Technique for Bimodal Sentiment Analysis. Big Data Cogn. Comput. 2023, 7, 85.

- Tang, J.; Hou, M.; Jin, X.; Zhang, J.; Zhao, Q.; Kong, W. Tree-Based Mix-Order Polynomial Fusion Network for Multimodal Sentiment Analysis. Systems 2023, 11, 44.

- Khamphakdee, N.; Seresangtakul, P. An Efficient Deep Learning for Thai Sentiment Analysis. Data 2023, 8, 90.

- Jo, A.-H.; Kwak, K.-C. Speech Emotion Recognition Based on Two-Stream Deep Learning Model Using Korean Audio Information. Appl. Sci. 2023, 13, 2167.

- Abdulrahman, A.; Baykara, M.; Alakus, T.B. A Novel Approach for Emotion Recognition Based on EEG Signal Using Deep Learning. Appl. Sci. 2022, 12, 10028.

- Middya, A.I.; Nag, B.; Roy, S. Deep Learning Based Multimodal Emotion Recognition Using Model-Level Fusion of Audio–Visual Modalities. Knowl. Based Syst. 2022, 244, 108580.

- Ali, M.; Mosa, A.H.; Al Machot, F.; Kyamakya, K. EEG-Based Emotion Recognition Approach for e-Healthcare Applications. In Proceedings of the 2016 Eighth International Conference on Ubiquitous and Future Networks (ICUFN), Vienna, Austria, 5–8 July 2016; pp. 946–950.

- Zepf, S.; Hernandez, J.; Schmitt, A.; Minker, W.; Picard, R.W. Driver Emotion Recognition for Intelligent Vehicles. ACM Comput. Surv. (CSUR) 2020, 53, 1–30.

- Zaman, K.; Zhaoyun, S.; Shah, B.; Hussain, T.; Shah, S.M.; Ali, F.; Khan, U.S. A Novel Driver Emotion Recognition System Based on Deep Ensemble Classification. Complex. Intell. Syst. 2023, 9, 6927–6952.

- Du, Y.; Crespo, R.G.; Martínez, O.S. Human Emotion Recognition for Enhanced Performance Evaluation in E-Learning. Prog. Artif. Intell. 2022, 12, 199–211.

- Alaei, A.; Wang, Y.; Bui, V.; Stantic, B. Target-Oriented Data Annotation for Emotion and Sentiment Analysis in Tourism Related Social Media Data. Future Internet 2023, 15, 150.

- Caratù, M.; Brescia, V.; Pigliautile, I.; Biancone, P. Assessing Energy Communities’ Awareness on Social Media with a Content and Sentiment Analysis. Sustainability 2023, 15, 6976.

- Bota, P.J.; Wang, C.; Fred, A.L.N.; Placido Da Silva, H. A Review, Current Challenges, and Future Possibilities on Emotion Recognition Using Machine Learning and Physiological Signals. IEEE Access 2019, 7, 140990–141020.

- Egger, M.; Ley, M.; Hanke, S. Emotion Recognition from Physiological Signal Analysis: A Review. Electron. Notes Theor. Comput. Sci. 2019, 343, 35–55.

- Shu, L.; Xie, J.; Yang, M.; Li, Z.; Li, Z.; Liao, D.; Xu, X.; Yang, X. A Review of Emotion Recognition Using Physiological Signals. Sensors 2018, 18, 2074.

- Canal, F.Z.; Müller, T.R.; Matias, J.C.; Scotton, G.G.; de Sa Junior, A.R.; Pozzebon, E.; Sobieranski, A.C. A Survey on Facial Emotion Recognition Techniques: A State-of-the-Art Literature Review. Inf. Sci. 2022, 582, 593–617.

- Assabumrungrat, R.; Sangnark, S.; Charoenpattarawut, T.; Polpakdee, W.; Sudhawiyangkul, T.; Boonchieng, E.; Wilaiprasitporn, T. Ubiquitous Affective Computing: A Review. IEEE Sens. J. 2022, 22, 1867–1881.

- Schmidt, P.; Reiss, A.; Dürichen, R.; Van Laerhoven, K. Wearable-Based Affect Recognition—A Review. Sensors 2019, 19, 4079.

- Rouast, P.V.; Adam, M.T.P.; Chiong, R. Deep Learning for Human Affect Recognition: Insights and New Developments. IEEE Trans. Affect. Comput. 2021, 12, 524–543.

- Ahmed, N.; Aghbari, Z.A.; Girija, S. A Systematic Survey on Multimodal Emotion Recognition Using Learning Algorithms. Intell. Syst. Appl. 2023, 17, 200171.

- Kitchenham, B. Procedures for Performing Systematic Reviews; Keele University: Keele, UK, 2004; Volume 33, pp. 1–26.

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild. IEEE Trans. Affect. Comput. 2019, 10, 18–31.

- Al Jazaery, M.; Guo, G. Video-Based Depression Level Analysis by Encoding Deep Spatiotemporal Features. IEEE Trans. Affect. Comput. 2021, 12, 262–268.

- Kollias, D.; Zafeiriou, S. Exploiting Multi-CNN Features in CNN-RNN Based Dimensional Emotion Recognition on the OMG in-the-Wild Dataset. IEEE Trans. Affect. Comput. 2021, 12, 595–606.

- Li, S.; Deng, W. A Deeper Look at Facial Expression Dataset Bias. IEEE Trans. Affect. Comput. 2022, 13, 881–893.

- Kulkarni, K.; Corneanu, C.A.; Ofodile, I.; Escalera, S.; Baro, X.; Hyniewska, S.; Allik, J.; Anbarjafari, G. Automatic Recognition of Facial Displays of Unfelt Emotions. IEEE Trans. Affect. Comput. 2021, 12, 377–390.

- Punuri, S.B.; Kuanar, S.K.; Kolhar, M.; Mishra, T.K.; Alameen, A.; Mohapatra, H.; Mishra, S.R. Efficient Net-XGBoost: An Implementation for Facial Emotion Recognition Using Transfer Learning. Mathematics 2023, 11, 776.

- Mukhiddinov, M.; Djuraev, O.; Akhmedov, F.; Mukhamadiyev, A.; Cho, J. Masked Face Emotion Recognition Based on Facial Landmarks and Deep Learning Approaches for Visually Impaired People. Sensors 2023, 23, 1080.

- Babu, E.K.; Mistry, K.; Anwar, M.N.; Zhang, L. Facial Feature Extraction Using a Symmetric Inline Matrix-LBP Variant for Emotion Recognition. Sensors 2022, 22, 8635.

- Mustafa Hilal, A.; Elkamchouchi, D.H.; Alotaibi, S.S.; Maray, M.; Othman, M.; Abdelmageed, A.A.; Zamani, A.S.; Eldesouki, M.I. Manta Ray Foraging Optimization with Transfer Learning Driven Facial Emotion Recognition. Sustainability 2022, 14, 14308.

- Bisogni, C.; Cimmino, L.; De Marsico, M.; Hao, F.; Narducci, F. Emotion Recognition at a Distance: The Robustness of Machine Learning Based on Hand-Crafted Facial Features vs Deep Learning Models. Image Vis. Comput. 2023, 136, 104724.

- Sun, Q.; Liang, L.; Dang, X.; Chen, Y. Deep Learning-Based Dimensional Emotion Recognition Combining the Attention Mechanism and Global Second-Order Feature Representations. Comput. Electr. Eng. 2022, 104, 108469.

- Sudha, S.S.; Suganya, S.S. On-Road Driver Facial Expression Emotion Recognition with Parallel Multi-Verse Optimizer (PMVO) and Optical Flow Reconstruction for Partial Occlusion in Internet of Things (IoT). Meas. Sens. 2023, 26, 100711.

- Barra, P.; De Maio, L.; Barra, S. Emotion Recognition by Web-Shaped Model. Multimed. Tools Appl. 2023, 82, 11321–11336.

- Bhattacharya, A.; Choudhury, D.; Dey, D. Edge-Enhanced Bi-Dimensional Empirical Mode Decomposition-Based Emotion Recognition Using Fusion of Feature Set. Soft Comput. 2018, 22, 889–903.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The Extended Cohn-Kanade Dataset (CK+): A Complete Dataset for Action Unit and Emotion-Specified Expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Zhao, G.; Huang, X.; Taini, M.; Li, S.Z.; Pietikäinen, M. Facial Expression Recognition from Near-Infrared Videos. Image Vis. Comput. 2011, 29, 607–619.

- Barros, P.; Churamani, N.; Lakomkin, E.; Siqueira, H.; Sutherland, A.; Wermter, S. The OMG-Emotion Behavior Dataset. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.

- Ullah, Z.; Qi, L.; Hasan, A.; Asim, M. Improved Deep CNN-Based Two Stream Super Resolution and Hybrid Deep Model-Based Facial Emotion Recognition. Eng. Appl. Artif. Intell. 2022, 116, 105486.

- Zheng, W.; Zong, Y.; Zhou, X.; Xin, M. Cross-Domain Color Facial Expression Recognition Using Transductive Transfer Subspace Learning. IEEE Trans. Affect. Comput. 2018, 9, 21–37.

- Tan, K.L.; Lee, C.P.; Lim, K.M. RoBERTa-GRU: A Hybrid Deep Learning Model for Enhanced Sentiment Analysis. Appl. Sci. 2023, 13, 3915.

- Ren, M.; Huang, X.; Li, W.; Liu, J. Multi-Loop Graph Convolutional Network for Multimodal Conversational Emotion Recognition. J. Vis. Commun. Image Represent. 2023, 94, 103846.

- Mai, S.; Hu, H.; Xu, J.; Xing, S. Multi-Fusion Residual Memory Network for Multimodal Human Sentiment Comprehension. IEEE Trans. Affect. Comput. 2022, 13, 320–334.

- Yang, L.; Jiang, D.; Sahli, H. Integrating Deep and Shallow Models for Multi-Modal Depression Analysis—Hybrid Architectures. IEEE Trans. Affect. Comput. 2021, 12, 239–253.

- Mocanu, B.; Tapu, R.; Zaharia, T. Multimodal Emotion Recognition Using Cross Modal Audio-Video Fusion with Attention and Deep Metric Learning. Image Vis. Comput. 2023, 133, 104676.

- Noroozi, F.; Marjanovic, M.; Njegus, A.; Escalera, S.; Anbarjafari, G. Audio-Visual Emotion Recognition in Video Clips. IEEE Trans. Affect. Comput. 2019, 10, 60–75.

- Davison, A.K.; Lansley, C.; Costen, N.; Tan, K.; Yap, M.H. SAMM: A Spontaneous Micro-Facial Movement Dataset. IEEE Trans. Affect. Comput. 2018, 9, 116–129.

- Happy, S.L.; Routray, A. Fuzzy Histogram of Optical Flow Orientations for Micro-Expression Recognition. IEEE Trans. Affect. Comput. 2019, 10, 394–406.

- Schmidt, P.; Reiss, A.; Duerichen, R.; Marberger, C.; Van Laerhoven, K. Introducing WESAD, a Multimodal Dataset for Wearable Stress and Affect Detection. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, New York, NY, USA, 2 October 2018; ACM: New York, NY, USA, 2018; pp. 400–408.

- Miranda-Correa, J.A.; Abadi, M.K.; Sebe, N.; Patras, I. AMIGOS: A Dataset for Affect, Personality and Mood Research on Individuals and Groups. IEEE Trans. Affect. Comput. 2021, 12, 479–493.

- Subramanian, R.; Wache, J.; Abadi, M.K.; Vieriu, R.L.; Winkler, S.; Sebe, N. ASCERTAIN: Emotion and Personality Recognition Using Commercial Sensors. IEEE Trans. Affect. Comput. 2018, 9, 147–160.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Zhang, Y.; Cheng, C.; Wang, S.; Xia, T. Emotion Recognition Using Heterogeneous Convolutional Neural Networks Combined with Multimodal Factorized Bilinear Pooling. Biomed. Signal Process Control 2022, 77, 103877.

- The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Available online: https://www.prisma-statement.org/prisma-2020-statement (accessed on 12 August 2024).

| Database | Studies Returned by Key Terms | After Year Filter | After Article-Type Filter | After Relevance Ordering |
|---|---|---|---|---|
| IEEE | 2112 | 1152 | 536 | 200 |
| Springer | 4121 | 1808 | 1694 | 200 |
| Science Direct | 1041 | 582 | 480 | 200 |
| MDPI | 686 | 643 | 635 | 200 |

| Database | Quantity |
|---|---|
| IEEE | 148 |
| Springer | 112 |
| Science Direct | 166 |
| MDPI | 183 |

| Modality | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | Total |
|---|---|---|---|---|---|---|---|
| Multi-physical | 8 | 6 | 8 | 22 | 27 | 71 | |
| Multi-physical–physiological | 2 | | | 3 | 6 | 7 | 18 |
| Multi-physiological | 2 | 6 | 3 | 6 | 4 | 21 | |
| Unimodal | 37 | 26 | 29 | 37 | 176 | 194 | 499 |
| Total | 49 | 32 | 35 | 51 | 210 | 232 | 609 |

| Article Title | Databases Used | Ref. |
|---|---|---|
| AffectNet: A Database for Facial Expression, Valence, and Arousal Computing in the Wild. | AffectNet | [35] |
| Video-Based Depression Level Analysis by Encoding Deep Spatiotemporal Features. | AVEC2013, AVEC2014 | [36] |
| Exploiting Multi-CNN Features in CNN-RNN Based Dimensional Emotion Recognition on the OMG in-the-Wild Dataset. | Aff-Wild, Aff-Wild2, OMG | [37] |
| A Deeper Look at Facial Expression Dataset Bias. | CK+, JAFFE, MMI, Oulu-CASIA, AffectNet, FER2013, RAF-DB 2.0, SFEW 2.0 | [38] |
| Automatic Recognition of Facial Displays of Unfelt Emotions. | CK+, OULU-CASIA, BP4D | [39] |
| Spatio-Temporal Encoder-Decoder Fully Convolutional Network for Video-Based Dimensional Emotion Recognition. | OMG, RECOLA, SEWA | [6] |
| Efficient Net-XGBoost: An Implementation for Facial Emotion Recognition Using Transfer Learning. | CK+, FER2013, JAFFE, KDEF | [40] |
| Masked Face Emotion Recognition Based on Facial Landmarks and Deep Learning Approaches for Visually Impaired People. | AffectNet | [41] |
| Facial Feature Extraction Using a Symmetric Inline Matrix-LBP Variant for Emotion Recognition. | JAFFE | [42] |
| Manta Ray Foraging Optimization with Transfer Learning Driven Facial Emotion Recognition. | CK+, FER-2013 | [43] |
| Emotion recognition at a distance: The robustness of machine learning based on hand-crafted facial features vs deep learning models. | CK+ | [44] |
| Deep learning-based dimensional emotion recognition combining the attention mechanism and global second-order feature representations. | AffectNet | [45] |
| On-road driver facial expression emotion recognition with parallel multi-verse optimizer (PMVO) and optical flow reconstruction for partial occlusion in internet of things (IoT). | CK+, KMU-FED | [46] |
| Emotion recognition by web-shaped model. | CK+, KDEF | [47] |
| Edge-enhanced bi-dimensional empirical mode decomposition-based emotion recognition using fusion of feature set | eNTERFACE, CK, JAFFE | [48] |
| A novel driver emotion recognition system based on deep ensemble classification | AffectNet, CK+, DFER, FER-2013, JAFFE, and a custom dataset | [22] |

| 1. Facial emotion recognition for mental health assessment (depression, schizophrenia) | 14. Emotion recognition performance assessment from faces acquired at a distance |
| 2. Emotion analysis in human-computer interaction | 15. Facial emotion recognition for IoT and edge devices |
| 3. Emotion recognition in the context of autism | 16. Idiosyncratic bias in emotion recognition |
| 4. Driver emotion recognition for intelligent vehicles | 17. Emotion recognition in socially assistive robots |
| 5. Assessment of emotional engagement in learning environments | 18. In the wild facial emotion recognition |
| 6. Facial emotion recognition for apparent personality trait analysis | 19. Video-based emotion recognition |
| 7. Facial emotion recognition for gender, age, and ethnicity estimation | 20. Spatio-temporal emotion recognition in videos |
| 8. Emotion recognition in virtual reality and smart homes | 21. Spontaneous emotion recognition |
| 9. Emotion recognition in healthcare and clinical settings | 22. Emotion recognition using facial components |
| 10. Emotion recognition in real-world and COVID-19 masked scenarios | 23. Comparing emotion recognition from genuine and unfelt facial expressions |
| 11. Personalized and group-based emotion recognition | |
| 12. Music-enhanced emotion recognition | |
| 13. Cross-dataset emotion recognition | |

| Database Name | Description | Advantages | Limitation |
|---|---|---|---|
| MELD (Multimodal Emotion Lines Dataset) [14] | Focuses on emotion recognition in movie dialogues. It contains transcriptions of dialogues and their corresponding audio and video tracks. Emotions are labeled at the sentence and speaker levels. | Large amount of data, multimodal (text, audio, video). | Emotions induced by movies. Manually labeled. |
| IEMOCAP (Interactive Emotional Dyadic Motion Capture), 2005 [55] | Focuses on emotional interactions between two individuals during acting sessions. It contains video and audio recordings of actors performing emotional scenes. | Realistic data, emotional interactions, a wide range of emotions. | Not real induced emotions (acting). |
| CMU-MOSI (Multimodal Corpus of Sentiment Intensity), 2014, 2017 [56] | Focuses on sentiment intensity in speeches and interviews. It includes transcriptions of audio and video, along with sentiment annotations. Updated in 2017 as CMU-MOSEI. | Emotions are derived from real speeches and interviews. | Relatively small size. |
| AVEC (Affective Behavior in the Context of E-Learning with Social Signals), 2007–2016 [57] | AVEC is a series of competitions focused on the detection of emotions and behaviors in the context of online learning. It includes video and audio data of students participating in e-learning activities. | Emotions are naturally induced during online learning activities. | Context-specific data, enables emotion assessment in e-learning settings. |
| RAVDESS (The Ryerson Audio-Visual Database of Emotional Speech and Song), 2016 [58] | Audio and video database that focuses on emotion recognition in speech and song. It includes performances by actors expressing various emotions. | Diverse data in terms of emotions, modalities, and contexts. | Does not contain natural dialogues. |
| SAVEE (Surrey Audio–Visual Expressed Emotion), 2010 [59] | Focuses on emotion recognition in speech. It contains recordings of speakers expressing emotions through phrases and words. | Clean audio data. | |
| SAMM (Spontaneous Micro-expression Dataset) [60] | Focuses on spontaneous micro-expressions that last only a fraction of a second. It contains videos of people expressing emotions in real emotional situations. | Real spontaneous micro-expressions. | |
| CASME (Chinese Academy of Sciences Micro-Expression) [61] | Focuses on the detection of micro-expressions in response to emotional stimuli. It contains videos of micro-expressions. | Induced by emotional stimuli. | Not multicultural. |

| Database Name | Description | Advantages | Limitation |
|---|---|---|---|
| WESAD (Wearable Stress and Affect Detection) [62] | It focuses on stress and affect recognition from physiological signals like ECG, EMG, and EDA, as well as motion signals from accelerometers. Data were collected while participants performed tasks and experienced emotions in a controlled laboratory setting, wearing wearable sensors. | Facilitates the development of wearable emotion recognition systems. | The dataset is relatively small, and participant diversity may be limited. |
| AMIGOS [63] | It is a multimodal dataset for personality traits and mood. Emotions are induced by emotional videos in two social contexts: one with individual viewers and one with groups of viewers. Participants’ EEG, ECG, and GSR signals were recorded using wearable sensors. Frontal HD videos and full-body videos in RGB and depth were also recorded. | Participants’ emotions were scored by self-assessment of valence, arousal, control, familiarity, liking, and basic emotions felt during the videos, as well as external assessments of valence and arousal. | Reduced number of participants. |
| DREAMER [13] | Records physiological ECG, EMG, and EDA signals and self-reported emotional responses. Collected during the presentation of emotional video clips. | Enables the study of emotional responses in a controlled environment and their comparison with self-reported emotions. | Emotions may be biased towards those induced by video clips, and the dataset size is limited. |
| ASCERTAIN [64] | Focuses on linking personality traits and emotional states through physiological responses like EEG, ECG, GSR, and facial activity data while participants watched emotionally charged movie clips. | Suitable for studying emotions in stressful situations and their impact on human activity. | The variety of emotions induced is limited. |
| DEAP (Database for Emotion Analysis using Physiological Signals) [65,66] | Includes physiological signals like EEG, ECG, EMG, and EDA, as well as audiovisual data. Data were collected by exposing participants to audiovisual stimuli designed to elicit various emotions. | Provides a diverse range of emotions and physiological data for emotion analysis. | The size of the database is small. |
| MAHNOB-HCI (Multimodal Human Computer Interaction Database for Affect Analysis and Recognition) [13,66] | Includes multimodal data, such as audio, video, physiological, ECG, EDA, and kinematic data. Data were collected while participants engaged in various human–computer interaction scenarios. | Offers a rich dataset for studying emotional responses during interactions with technology. | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

García-Hernández, R.A.; Luna-García, H.; Celaya-Padilla, J.M.; García-Hernández, A.; Reveles-Gómez, L.C.; Flores-Chaires, L.A.; Delgado-Contreras, J.R.; Rondon, D.; Villalba-Condori, K.O. A Systematic Literature Review of Modalities, Trends, and Limitations in Emotion Recognition, Affective Computing, and Sentiment Analysis. Appl. Sci. 2024, 14, 7165. https://doi.org/10.3390/app14167165
