Abstract

Besides video content, a significant part of entertainment is represented by computer games and animations such as cartoons. Creating such entertainment is based on two fundamental steps: asset generation and character animation. Both steps are repetitive and demand considerable concentration and skill. The latest advances in deep learning and generative techniques have provided a set of powerful tools which can alleviate these problems by facilitating the tasks of artists and engineers and providing a better workflow. In this work we explore practical solutions for facilitating and hastening both steps of the creative process: character animation and asset generation. In character animation, the task is either to move the joints of a subject manually or to correct the noisy data coming out of motion capture. For the animation case, we propose two decoder-only transformer-based solutions, inspired by the current success of GPT. The first, AnimGPT, targets the original animation workflow by predicting the next pose of an animation based on a set of previous poses, while the second, DenoiseAnimGPT, tackles the motion capture case by predicting the clean current pose based on all previous poses and the current noisy pose. Both models obtained good performance on the CMU motion dataset, with the errors in the generated results being imperceptible to the untrained human eye. Quantitative evaluation was performed using the mean absolute error between the ground truth motion vectors and the predicted motion vectors. For AnimGPT and DenoiseAnimGPT, the errors were 0.345 and 0.2513, respectively (for 50 frames), which indicates better performance compared with other solutions. For asset generation, diffusion models were used. Using image generation and outpainting, we created a method that generates good backgrounds. By combining the ideas of text-conditioned generation and text-conditioned image editing, we obtained a time-coherent algorithm that creates animated effects for characters.

1. Introduction

Deep learning has proven to be a tool of immeasurable value over the last several years. In image processing, we are able to extract objects, patterns and descriptions, or to alter images altogether to obtain new ones. In natural language processing, we have obtained powerful translators, programs that can sense the intent of our messages and even tools that write articles by themselves. In reinforcement learning, models have managed to beat even the best human players in different games. In all of these areas, deep learning has obtained results that few people could have imagined a decade ago.

Computer games combine computer graphics, algorithms, mathematics, physics and software engineering in the pursuit of creating alternate realities, which are further enhanced for extra appeal to the public with humanities such as philosophy, psychology, storytelling and art. In the case of 3D games, the central elements are the 3D characters. These characters must move, jump, crouch, or, simply put, interact with the digital world in a way similar to how we interact with the real world. Each character is accompanied by a skeleton, so only the joints must be moved to create movement of the whole character (similar to how our own body moves). Traditionally, the behaviour of the characters has been scripted by creating a specific animation for each possible action. By essentially creating a state machine and limiting the number of available actions, game developers were able to create simple frameworks for managing motion and obtained results that were good enough for creating games. Animations can be further combined with the help of animation layers [1].

Motion capture represents an important improvement in the animation of characters. By equipping actors with special suits whose main points can be easily tracked and translated into a virtual world, motion capture made it possible to obtain the most realistic animations possible. This approach provided high quality animations, but it came with a cost: all the data had to be processed by hand in the cases in which there was no exact mapping between the skeleton points of the avatar and the skeleton points of the motion capture actor. This process is tedious and time-consuming, resulting in high production costs for video games, both in money and in time.

In both scenarios, the amount of manual work is immense because the same repetitive work must be done for each frame. Humans are prone to fatigue, so the animation process requires great amounts of time and effort. For animation generation, each new frame’s key-points, usually starting from the last frame positions, must be manually moved to create the animation and refined time and time again until the desired result is reached.

The problem of creating motion artificially, without human involvement, has attracted the attention of machine learning researchers because of its data-driven nature, and several approaches have been tried. Prolific research on this subject has been done by Taku Komura’s group from the University of Edinburgh. They have been working on this subject ever since machine learning started to become popular, so one could argue that they are indeed pioneers of machine learning in this area.

For this task we propose AnimGPT, which generates the next frame based on all existing frames, which transforms the task of fully moving all the key-points into a refinement one. For motion capture, the resulting noisy raw data must be manually corrected, frame by frame. For this task, we propose DenoiseAnimGPT, which helps with this de-noising, cutting a part of the artist’s work.

Creating games also requires a vast and diverse set of assets, ranging from backgrounds and decor to buildings, plants and characters. Usually, these are either bought from a platform which sells such assets or created by a team of artists. This is a costly and time-consuming process, which can be optimised. The generative power of diffusion can be put to good use in this case. By combining image generation and outpainting we generate good backgrounds. ControlNet is coupled with background removal in order to create a tool that generates good assets from nothing more than simple sketches.

The aim of this project is to create a deep learning framework that manages to reduce the complexity of the game creation and the character animation processes by leveraging the current state of the art methods in deep learning in order to take full advantage of the available data.

The main results of the paper are the following:

- 3D character animation for games by predicting the next pose based on all the previous ones, so that the number and scale of the joint movements left to the artist is minimal. We created two GPT-inspired decoder-only Transformer models, AnimGPT and DenoiseAnimGPT, that remove a part of the tedious skeleton-based animation process. AnimGPT helps in the manual animation process by generating a better next pose than simply copying the current one, taking into account all the previous poses. Although the main objective is to generate the next pose while keeping the human in the loop, it can also be used auto-regressively to generate multiple poses. However, uncorrected errors build up over time, resulting in poor performance for longer sequences. DenoiseAnimGPT is similar to AnimGPT, but targets motion capture data. It removes the noise from these sources by taking into account both the corrected past frames and the current noisy frame and generating the current denoised frame. Both models ease the workload of experts, leading to increased productivity.

- asset generation by using diffusion models for fast moodboard creation for artists, which speeds up game creation. By using image generation and outpainting, we created an algorithm that generates good backgrounds. A notable improvement came with ControlNet, which, coupled with background removal algorithms, enabled the creation of a tool that generates good assets from nothing more than simple sketches. By combining the ideas of text-conditioned generation and text-conditioned image editing, we created a time-coherent algorithm that creates animated effects for characters.

2. Related Work

The deep learning framework for motion synthesis [2] is the first promising data-driven deep learning approach for 3D character animation. Given a trajectory, the model predicts every pose of the motion, effectively constructing the whole animation. The solution obtains accurate animation for all kinds of scenarios, from walking to punching or kicking. There are some problems. The first and biggest one is that this solution is not suitable for on-line motion, as it does not work in real time. The needed trajectory makes this approach good only for scripted scenarios, which is arguably quite a large chunk of the animation domain, but it is nevertheless a limitation that must be brought to attention. A good part of it is that artists can refine the results of the inference, which can speed up the animation process. A huge downside is the fact that one network is needed for every type of motion. Walking, swimming, punching, all need separate networks to achieve some results. This is highly undesirable, because every new motion that is needed for a project requires another network and more time and computation power. The users must provide the velocity and the trajectory of different parts of the body, which is in itself a rather heavy task. These problems make this solution impractical.

The ERD method [3] addresses the problem of on-line animation by adopting an LSTM that can generate motion sequences. While this works, its performance is worse for short-term motion generation, and even though it generates motion for longer periods of time, it still suffers from the classical Recurrent Neural Network problems of error accumulation over time and of motion dying out. Even though this solution can be improved, the problems are only delayed, and since gaming sessions are sometimes several hours long, collapses are bound to happen. Moreover, the observation of the authors that the ERD does nothing more than interpolate between samples seen at train time is not promising. Another significant problem is that no straightforward way of incorporating user input is presented. The approach of the authors is useful for offline animation generation, but the limitations of RNNs make it unappealing. These problems make the ERD an interesting, but unusable solution for creating a true animation generation framework.

The Phase-Functioned Neural Networks (PFNN) [4] represent a ray of light in the pursuit of real-time character control with their lightweight approach (which translates into fast processing) and good results. This approach is the first one that actually manages to create a data-driven model that can control the character with respect to the user input. Testing the model shows that the solution is robust and that it can be used in a practical scenario. However, it has some problems. First of all, the animation looks somewhat unnatural and of low quality. Considering that the task it aims to solve is nothing more than cyclical locomotion, which is easy to animate, its usefulness is debatable. A big problem in this regard is that the animation cannot be fixed by artists, because it is generated on the fly. A downside of using a cyclical phase function is that the model cannot rest. When giving no input through the game-pad or keyboard, the user expects the character to either stay still or perform some small movements. PFNN, however, makes the character move restlessly in place in an unnatural manner, even rotating the character in place. This is most likely because there was no standing-still motion data in the dataset. This brings about another problem of PFNN, the limited range of motion. For example, the model does not adapt to the terrain that well (a staircase or a smooth ramp is treated like rough terrain, so the character hops while going up or down) and there is no animation for walking backwards or turning around (it relies on an awkward form of sidestepping for both). Moreover, even though the idea is splendid, the network itself is extremely simplistic, so its performance is bounded if one considers adding more types of motion. The PFNN is a wonderful idea, but it certainly needs a series of improvements to make it work in a practical scenario.

Transformers [5] are a special class of deep learning models whose mechanism revolves around attention, a weighting mechanism of the importance of past states when computing the context for deciding on the current one. Transformers appeared and are canonically linked to natural language processing, but their core functionality is not bound to processing words or language. They are fundamentally variable length sequence processing models and exactly what we need for our task. The original transformer was an encoder-decoder architecture focused on translation. The encoder would encode the input into a deep representation and would pass it to the decoder, which generates the output piece by piece. Encoder only and decoder only flavours of the model have appeared, with different aims in mind: the former, BERT [6] models, for obtaining embeddings, the latter, GPT [7] models, for generating text. These models also rely on tokens, discrete integers linked to a word/part of a word, that are easily translated into embeddings.

For asset generation diffusion models [8] are the state of the art when it comes to image generation and editing. Not only do they provide better looking results [9,10] than GANs [11], but coupling them with text conditioning has led to unprecedented flexibility and user friendliness [12].

Diffusion models nevertheless come with drawbacks. First and foremost, the computation requirements for training diffusion models are enormous. They routinely need several A100 GPUs for even the most basic training runs. These GPUs are extremely expensive, making training diffusion models unfeasible and out of reach for the usual consumer. Because of this, research in the area is limited to those that have the monetary means, which are usually the big firms in the field, like OpenAI. Moreover, a considerable quantity of data is needed for good results, and handling such data on a regular workstation is unfeasible. These facts make it prohibitive for regular researchers to train good models. Pre-trained models do exist. However, because of such high compute costs, most of them are not publicly available or are hidden behind paywalls. For example, GLIDE is made publicly available, but only as a small, less powerful version, while DALL-E 2 is available only through an API. The only notable exception is Stable Diffusion [13], which aims to deliver top-notch, open source diffusion models to everyone.

Second, the performance of these models is not as great as one might believe. As with many other generative models, there are instances where these models fail. One notorious case is their difficulty in generating hands. However, these cases are not isolated. The performance of a model is bound to the diversity of the dataset it was trained on. In Stable Diffusion’s case, the dataset is LAION [14], a publicly available web crawl of several billion images. While it is a good and diverse dataset, it doesn’t cover all the situations one might want. Diffusion models have showcased a remarkable ability to combine concepts, but they still have limitations. The model might combine usual concepts, like animals and hats, which are plentiful on the internet, but as soon as the request leaves the training distribution, the results are less than ideal. This problem is even more severe in the more constrained cases, such as image inpainting. In our tests, replacing items in an image proved difficult, and even when it worked, the results were less than ideal.

The authors of [15] propose a simple, yet efficient way of improving the practicality of the diffusion process with a new model called ControlNet. ControlNet is a surrogate for fine-tuning the diffusion process which adds extra visual conditioning as input in order to offer better control over the result, without discarding the costly pre-trained diffusion models. The key idea is that while text-guided generation leads to good results, more fine-grained requirements may be needed. Instead of generating the image and gradually editing it with the likes of inpainting, ControlNet aims to generate the desired composition from the start.

The authors observe that task specific datasets are several orders of magnitude lower in size compared to the datasets large text to image diffusion models are trained on, so extra care should be taken to prevent overfitting to the smaller dataset. To circumvent this and to transfer the generative power of the original model, they propose creating two instances of the model, one frozen and one trainable. The trainable model processes the extra conditioning and is attached to the frozen model through one by one convolutions initialised with zeros. The idea is to start from not influencing the diffusion model at all and to gradually increase the influence of the trainable model, and implicitly of the extra conditioning, until convergence. By doing this, the original training is not destroyed through fine-tuning. The resulting model can be trained in an end-to-end fashion on smaller datasets.
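The zero-initialised connection can be illustrated with a minimal NumPy sketch. The two block functions below are hypothetical stand-ins for a frozen layer and its trainable copy, not the actual ControlNet layers; the only point shown is that a 1×1 convolution with zero weights leaves the frozen model's output untouched at the start of training.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, weight, bias):
    """1x1 convolution on a (C, H, W) map: a per-pixel linear map over channels."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

C, H, W = 4, 8, 8
block_w = rng.normal(size=(C, C))  # hypothetical weights of one pre-trained block

def frozen_block(x):
    # Stand-in for a layer of the original diffusion model; never updated.
    return np.tanh(conv1x1(x, block_w, np.zeros(C)))

trainable_w = block_w.copy()       # the trainable copy starts from the same weights

def trainable_block(x, cond):
    # Stand-in for the trainable copy, which also sees the extra conditioning.
    return np.tanh(conv1x1(x + cond, trainable_w, np.zeros(C)))

# Zero-initialised 1x1 convolution attaching the trainable copy to the frozen model.
zero_w = np.zeros((C, C))
zero_b = np.zeros(C)

def controlled_block(x, cond):
    return frozen_block(x) + conv1x1(trainable_block(x, cond), zero_w, zero_b)

x = rng.normal(size=(C, H, W))
cond = rng.normal(size=(C, H, W))  # e.g. an encoded scribble or contour map
out = controlled_block(x, cond)
unchanged = np.allclose(out, frozen_block(x))  # the conditioning has no effect yet
```

As training moves `zero_w` and `zero_b` away from zero, the conditioning gradually gains influence over the output.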

3. Proposed Method

This section presents our solutions for 3D character animation and asset generation. For 3D character animation we describe how we approach both the generation of the next pose and motion capture denoising. For asset generation we present how assets can be obtained with Stable Diffusion by conditioning the model on contours and scribbles.

3.1. 3D Characters Animation

Generation of the next pose of the character: Animating 3D rigid bodies by hand implies moving the parts of the skeleton incrementally to generate the illusion of movement. This technique is used heavily in stop motion animation. Although it can be done with dolls and figurines, our case implies virtual rigid bodies. This is done by taking into account the full motion up until that point, duplicating your subject’s last pose and modifying incrementally the position of the joints to advance the motion. Instead of moving all the necessary keypoints to the next pose by hand, we propose a machine learning model that predicts the next pose considering all the former poses.

To obtain a good model for next pose prediction, we created a decoder only autoregressive transformer that processes directly motion information. This basically means that we created a GPT-like model that generates motion instead of text, so we call our model AnimGPT.

AnimGPT generates the next frame based on all existing frames, which turns the task of fully moving all the key-points into one of refinement.

For motion capture, the resulting noisy raw data must be manually corrected, frame by frame. For this task, we propose DenoiseAnimGPT, which helps with this de-noising, cutting a part of the artist’s work.

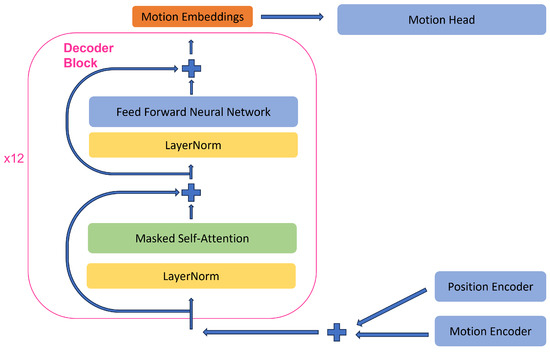

The architecture of AnimGPT is given in Figure 1. The core part of the architecture is the same as in GPT, with a set of embeddings that get processed by the decoder-only blocks, obtaining a final embedding which gets transformed into the result. However, instead of working with tokens, we work directly with motion data, represented by a vector of continuous joint rotations, which is a rather different type of data than the discrete tokens that the regular GPT processes. We could consider this motion data as a data point which can be given as input directly to the network. Instead, we added an extra motion encoder which translates it into the usual sized embedding that gets processed by GPT. We keep the position encoder in place. Unlike the regular GPT, we do not transform the resulting motion embedding into a probability distribution over the possible tokens, because we do not have such tokens. Instead we use a decoder, the motion head, to translate the embedding back into the motion space.

Figure 1.

The architecture of AnimGPT, with only one decoder block represented.
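The data flow of Figure 1 can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the trained model: the weights are random, the decoder block is reduced to one single-head masked self-attention layer with a residual connection, and all parameter names are hypothetical. The sizes (132 channels per frame, 768-dimensional embeddings) are the ones reported in Section 4.1.

```python
import numpy as np

rng = np.random.default_rng(0)
N_CHANNELS, D_MODEL, MAX_LEN = 132, 768, 256

# Hypothetical, randomly initialised parameters (a trained model would learn these).
W_enc = rng.normal(scale=0.02, size=(N_CHANNELS, D_MODEL))   # motion encoder
pos_emb = rng.normal(scale=0.02, size=(MAX_LEN, D_MODEL))    # position embeddings
W_q, W_k, W_v = (rng.normal(scale=0.02, size=(D_MODEL, D_MODEL)) for _ in range(3))
W_head = rng.normal(scale=0.02, size=(D_MODEL, N_CHANNELS))  # motion head

def causal_self_attention(h):
    """Single-head masked self-attention: frame t only attends to frames <= t."""
    T = h.shape[0]
    q, k, v = h @ W_q, h @ W_k, h @ W_v
    scores = q @ k.T / np.sqrt(D_MODEL)
    scores[np.triu(np.ones((T, T), dtype=bool), k=1)] = -np.inf  # hide the future
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

def anim_gpt_forward(frames):
    """frames: (T, 132) joint rotations -> (T, 132), row t predicting frame t + 1."""
    T = frames.shape[0]
    h = frames @ W_enc + pos_emb[:T]   # motion embedding + position embedding
    h = h + causal_self_attention(h)   # one simplified decoder block
    return h @ W_head                  # back to motion space, no softmax over tokens

frames = rng.normal(size=(10, N_CHANNELS))
pred = anim_gpt_forward(frames)        # shape (10, 132)
```

Because of the causal mask, changing a later frame never alters the predictions made at earlier positions, which is what allows the same network to be used step by step while an artist corrects each pose.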

Motion Capture Denoising is still dealing with time sequences, so Transformer models are still the answer. Contrary to the former case, however, we have the full data sequence available, so we could also use BERT like models. These are usually trained by masking randomly parts of the sequence and having the model predict them, considering that the rest of the sequence is correct. However, in this case, many of the motion capture points could be noisy, so we cannot trust data blindly. Because of this, we opt to have the human in the loop and rely on their input. This means that the user should provide a limited set of denoised frames at the start and that our model will eliminate the noise from the rest.

We solve this problem with a similar approach to the next pose prediction model, because we implicitly ignore the noisy data with the masked self-attention mechanism. However, instead of predicting the next pose blindly, we also make use of the noisy current data to guide the model to a better result. We do this by adding another encoder, the noisy motion data encoder, to the mix and combining the resulting embedding with the raw motion embedding and the position embedding (DenoiseAnimGPT).

The main idea of these two models consists of using Transformer models for their attention mechanism. Our solutions can be considered akin to ERD [3], because the motion data is encoded into embeddings, which are processed by the model, then translated back to the motion domain by the decoder. However, instead of using an LSTM, we use a Transformer. This choice is preferable because instead of relying on the information stored in the RNN state and its memory, we rely on the model to choose the important motion states from the past when computing the current one. This architecture basically creates a self-learned embedding space in which it is easier to solve the problem at hand. Because we target only next pose generation or de-noising, we employ a decoder-only transformer.

3.2. Asset Generation with Diffusion

Many of the elements of simple games are immobile assets, so a big part of the artist’s time is spent on drawing many such assets, ranging from trees and rocks to furniture and decorative elements. Backgrounds are a crucial type of asset in game creation because they set the mood of the game. In most games, the background is a picture larger than the level that scrolls more slowly than the rest of the level. In others it represents the actual scene. Either way, generating a background image implies creating an image I with . This task can be easily improved through the use of diffusion models [16]. Diffusion models are the state of the art when it comes to image generation and editing. Not only do they provide better looking results than GANs, but coupling them with text conditioning has led to unprecedented flexibility and user friendliness.

Due to their training method, which noises and de-noises the input image step by step, diffusion models have surpassed other generative methods such as VAEs and GANs in image quality. Moreover, one of the main focuses of diffusion models has been to make it easy to condition the generation through text. These two attributes make diffusion models particularly suitable for game asset generation. We resort to Stable Diffusion because it is powerful, pre-trained and publicly available. Because so far diffusion has mostly targeted the generation of one image at a time, we omit its use in animation generation. New work in video diffusion shows impressive capabilities for video generation, which could be adapted to character animation, but this is beyond the scope of this work.

The model we focus on is Stable Diffusion. It has the desirable property of generating images at high resolution [17], without the need for extra up-sampling, which makes working with it faster and more convenient. Also, since it is an open source model, the community takes interest in it, bringing constant improvements, like ControlNet [15], and providing tips and tricks, which are invaluable with diffusion models, where prompting [18] is crucial. Last, but not least, because the model is open source, it can be easily used and fine-tuned.

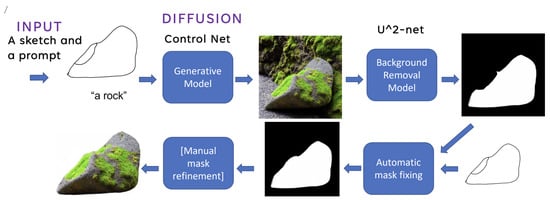

While the standard Stable Diffusion models can generate assets from textual descriptions, they do not offer the control an artist needs. Thus, we rely on conditioning the model on contours and scribbles. The idea is straightforward and presented in Figure 2: the artist quickly creates a doodle of the desired element and provides it to the model with an appropriate prompt. The model generates an image containing the asset, which is post-processed by a background removal tool to obtain a mask. This mask is automatically adjusted based on the idea that the input represents an object without holes. Optionally, the mask is corrected before it is applied to extract the element from the generated image.

Figure 2.

Asset generation algorithm model.
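The automatic mask adjustment relies on the assumption that the asset has no holes. One common way to enforce this, shown here as a plain-Python sketch and not necessarily the exact procedure used in our tool, is to flood-fill the background from the image border and mark every background pixel that was not reached as part of the object. The function name and the list-of-rows mask format are illustrative choices.

```python
from collections import deque

def fill_holes(mask):
    """Return a copy of a binary mask (list of rows of 0/1) with enclosed holes filled."""
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    # Seed the flood fill with every background pixel on the image border.
    for y in range(h):
        for x in range(w):
            on_border = y in (0, h - 1) or x in (0, w - 1)
            if on_border and mask[y][x] == 0:
                outside[y][x] = True
                queue.append((y, x))
    # Spread through 4-connected background pixels reachable from the border.
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] == 0 and not outside[ny][nx]:
                outside[ny][nx] = True
                queue.append((ny, nx))
    # Background that is not connected to the border is a hole inside the object.
    return [[0 if outside[y][x] else 1 for x in range(w)] for y in range(h)]

mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],  # the centre pixel was wrongly removed with the background
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
filled = fill_holes(mask)  # the centre pixel is restored, the outer background is kept
```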

Another use-case is generating effects. Effects can be considered animations of non-object assets, for example the flames of a torch or the lightning engulfing a spell. These effects are usually easier to generate because they have different requirements than usual animations. First, they do not represent an actual object. This means that their frames must share the same style and colours, but do not have to keep the same shape, because the underlying phenomenon they are based on happens really fast. This also means that many frames must be drawn to obtain convincing effects. Second, they benefit from not being repetitive, because variation improves their dynamism. Third, imperfections in them are more easily ignored by the viewer.

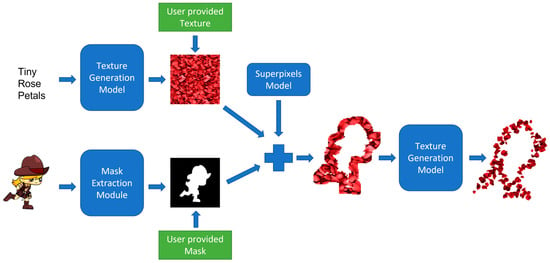

We propose an algorithm that leverages the generative power of diffusion models to create such effects. The algorithm is shown in Figure 3. Starting from the effect description, we generate a texture image with a text-conditioned diffusion model. Optionally, a texture image can be uploaded by the user. The purpose of the texture image is to provide a common starting point for all instances of the effect. We then extract the superpixels of the given texture image. The aim of the superpixels is to provide a noisier, more textured outline for the effect. At the same time, a mask of the area of the effect is automatically extracted if it was not provided by the user. Only the outline of the mask is of interest, so we extract it and combine it with the superpixels, keeping only their intersection. This intermediary result represents the starting point of an image2image diffusion process which transforms it, according to the initial prompt, into the desired effect. By using the outline of the character, we concentrate the effect around the desired part, eliminating undesired empty regions.

Figure 3.

Effect generation algorithm model.
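The construction of the intermediary image can be sketched as follows. This is one possible reading of the intersection step, written in plain Python on small grids: the superpixels are assumed to be given as an integer label map (for example from a SLIC-style segmentation, which is not implemented here), their boundaries are intersected with the outline of the effect mask, and the surviving pixels form the starting point for the image2image process. All function names are illustrative.

```python
NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))

def outline(mask):
    """Mask pixels that touch the background or the image border (4-connectivity)."""
    h, w = len(mask), len(mask[0])

    def is_background(y, x):
        return not (0 <= y < h and 0 <= x < w) or mask[y][x] == 0

    return [
        [1 if mask[y][x] and any(is_background(y + dy, x + dx) for dy, dx in NEIGHBOURS) else 0
         for x in range(w)]
        for y in range(h)
    ]

def superpixel_edges(labels):
    """Pixels whose right or bottom neighbour belongs to a different superpixel."""
    h, w = len(labels), len(labels[0])
    return [
        [1 if (x + 1 < w and labels[y][x] != labels[y][x + 1])
              or (y + 1 < h and labels[y][x] != labels[y + 1][x]) else 0
         for x in range(w)]
        for y in range(h)
    ]

def intersect(a, b):
    """Keep only the pixels set in both binary maps."""
    return [[pa & pb for pa, pb in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

# A 3x3 character mask centred in a 5x5 image.
mask = [[1 if 1 <= y <= 3 and 1 <= x <= 3 else 0 for x in range(5)] for y in range(5)]
# Hypothetical superpixel labels: two regions split between columns 2 and 3.
labels = [[0 if x < 3 else 1 for x in range(5)] for y in range(5)]

start = intersect(outline(mask), superpixel_edges(labels))
```

In this toy case only the two outline pixels crossed by the superpixel boundary survive, at positions (1, 2) and (3, 2).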

This process ensures that no matter the pose of the sprite, the starting points for the effect generation are similar, which prompts the image2image diffusion model to output coherent results that can be combined to form the animated effect. Moreover, the different poses ensure that the intermediary image is not the same for two different poses, resulting in dynamism. The automatically extracted mask can be used jointly with the user-provided mask to create effects on a specific part of the body. This is useful in games for situations such as putting emphasis on attacks. The pose-based variation does not apply when animating stationary objects, like torches. In that case, we propose a random position jitter in the mask, which leads to the desired coherent, yet dynamic effect.
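The random position jitter for stationary objects can be sketched as a small random translation of the mask before each frame is generated. The maximum shift `max_shift` is a hypothetical parameter, and pixels shifted outside the image are simply dropped in this sketch.

```python
import random

def jitter_mask(mask, max_shift, rng):
    """Shift a binary mask by a random offset in [-max_shift, max_shift] on both axes."""
    h, w = len(mask), len(mask[0])
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)
    shifted = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            # Pixels that would leave the image are discarded.
            if mask[y][x] and 0 <= ny < h and 0 <= nx < w:
                shifted[ny][nx] = 1
    return shifted, (dy, dx)

rng = random.Random(0)
torch_mask = [[0] * 7 for _ in range(7)]
torch_mask[3][3] = 1  # a one-pixel "torch" in the centre of a 7x7 image

# One jittered mask per animation frame gives slightly different starting images.
frame_masks = [jitter_mask(torch_mask, 2, rng) for _ in range(4)]
```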

4. Evaluation Results

Each part proposed in this paper, 3D character animation and asset generation, was evaluated based on qualitative and/or quantitative results.

4.1. 3D Character Animation

For training the model that generates the next pose of the 3D character, we used the CMU motion dataset [19], which contains enough diversity for our use-case. This dataset is composed of 144 motion capture scenarios, with different subjects. Each scenario is composed of a set of activities from a certain domain, like dancing, running, walking, basketball, riding vehicles and so on. Each activity is represented by an individual motion capture file, consisting of several hundred frames. In our case, each such file represents a “document” for the GPT, with a total of 2553 “documents”. All the activities have been retargeted to a single skeleton, so there is no need for extra motion retargeting. The skeleton is defined by a hierarchy of joints. Each joint is defined by the joint type (“ROOT” for the root node, “JOINT” for the joints and “End site” for end nodes).

An item from the dataset is represented by the data of a motion capture file, meaning a vector of size . However, in many cases the motion sequence is longer than the capacity of AnimGPT. Moreover, the animations needed for a game are not particularly long, so we have decided to limit the number of frames to either 256 or 512. Simply cutting the sequences into pieces is a bad idea, because the parts are logically linked. Instead, we have opted for a random sampling strategy from the motion data. Sampling all the motion data files with equal probability is not correct, since this would lead to oversampling of shorter animations. To solve this, we have weighted the sampling by linking more indices of the dataset to each sequence, proportionally to its length. More specifically, we have divided the length of the sequence by the fixed motion sequence length and taken the ceiling of the result. This results in a series of index intervals, one for each motion file.
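The length-weighted sampling can be sketched as follows. Each motion file receives ceil(length / sequence length) dataset indices, and every index is resolved to a window at a random offset inside its file. The function names and the handling of files shorter than the window (the whole file is returned and would need padding) are assumptions of this sketch.

```python
import math
import random

def build_index(lengths, seq_len):
    """Link ceil(length / seq_len) dataset indices to each motion file."""
    index = []
    for file_id, n_frames in enumerate(lengths):
        index.extend([file_id] * math.ceil(n_frames / seq_len))
    return index

def sample_window(index, lengths, seq_len, item, rng):
    """Map a dataset index to a (file, start, end) window at a random offset."""
    file_id = index[item]
    n_frames = lengths[file_id]
    start = rng.randint(0, max(0, n_frames - seq_len))
    return file_id, start, min(start + seq_len, n_frames)

lengths = [100, 256, 700]          # frames per motion capture file (toy values)
index = build_index(lengths, 256)  # -> [0, 1, 2, 2, 2]

rng = random.Random(0)
windows = [sample_window(index, lengths, 256, i, rng) for i in range(len(index))]
```

With these toy lengths, the 700-frame file is visited three times per epoch while the two shorter files are visited once each, so long animations are no longer under-represented.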

Another choice regarding the data was to eliminate the information regarding movement on the X and Z axes. Games are interactive, so the trajectory and speed of the motion are determined at run-time. This conflicts with the information from the X and Z axes, which represents horizontal movement in the environment. Because of this we have chosen to simplify the problem and eliminate the need to predict this data. We eliminate it by zeroing it over the full dataset instead of lowering the dimensionality of the result, for a practical reason: it keeps the generated output in the original motion format.
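Zeroing the horizontal movement while keeping the frame size unchanged can be sketched as below. The channel positions are an assumption of this sketch: the root translation is taken to occupy the first three channels in X, Y, Z order, which depends on how the motion files are laid out.

```python
# Assumed positions of the root X and Z translation inside a frame vector.
X_CHANNEL, Z_CHANNEL = 0, 2

def zero_horizontal_motion(frames):
    """Return a copy of the frames with the root X and Z translation set to zero."""
    cleaned = []
    for frame in frames:
        frame = list(frame)       # copy, so the original data is left untouched
        frame[X_CHANNEL] = 0.0
        frame[Z_CHANNEL] = 0.0
        cleaned.append(frame)
    return cleaned

frames = [
    [1.5, 0.9, -2.0, 10.0, 20.0],  # toy 5-channel frames instead of 132 channels
    [1.7, 1.0, -1.8, 11.0, 19.0],
]
cleaned = zero_horizontal_motion(frames)
```

The vertical translation and all rotations are preserved, and every frame keeps its original length, so the output can be written back in the same motion format.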

The data for the denoising experiment is obtained in the same way, but we also add noise to the data separately. We created additive noise drawn from a normal distribution centred at 0. Because most of the values of a normal distribution lie close to the mean, we always apply the noise instead of applying it to components with a set probability. We compute the standard deviation separately for each channel, over the whole dataset, to create a more believable noisy motion.
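A minimal sketch of this noising scheme is given below. The per-channel standard deviation is computed over all frames, and zero-mean Gaussian noise is added to every component. The `scale` factor, which sets the noise strength relative to the channel's standard deviation, is a hypothetical parameter that is not specified in the text.

```python
import random
import statistics

def channel_stds(frames):
    """Standard deviation of every channel, computed over all frames."""
    n_channels = len(frames[0])
    return [statistics.pstdev([f[c] for f in frames]) for c in range(n_channels)]

def add_noise(frames, stds, scale, rng):
    """Add zero-mean Gaussian noise to every component of every frame."""
    return [
        [value + rng.gauss(0.0, scale * std) for value, std in zip(frame, stds)]
        for frame in frames
    ]

frames = [[0.0, 5.0], [2.0, 5.0], [4.0, 5.0]]  # toy data: channel 1 never moves
stds = channel_stds(frames)
noisy = add_noise(frames, stds, scale=0.1, rng=random.Random(0))
```

Scaling by the channel's own deviation means that joints which barely move receive barely any noise (here the constant channel stays exactly at 5.0), while very mobile joints are perturbed more.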

For generation of the next pose, we started from the architecture of the base GPT2, because we found its size of 110 million parameters to be fitting for the rather low amount of data we had available (compared to the millions of documents the language model has been trained on).

The changes that turn GPT2 into AnimGPT are rather straightforward. The original GPT2 uses the PyTorch Embedding class for translating from tokens to embeddings. Such embeddings are nothing more than lookup tables with learnable parameters for each entry, which require the maximum number of entries to be known in advance. This approach works for natural language processing, because the words form a discrete space, but since we are dealing with motion, which forms a continuous space, we cannot use the same module. Each motion frame is a vector of 132 channels, which can be considered a point in the motion space. Instead of trying to discretize this space, we employ a linear projection to bring the point into a higher dimensional space, more exactly the 768-dimensional space the regular Transformer usually processes. This operation is the equivalent of translating a token index into its embedding. Employing something more than a linear projection is superfluous, since we are using a deep neural network to process the data anyway.

A similar operation must be done at the end, by replacing the model head. Instead of having a linear projection that translates the obtained deep embedding into a vector of logits for each token of the vocabulary, we use a linear projection that translates the embedding into a 132 dimensional point from the motion space, the next frame.
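The two replaced layers amount to a pair of affine maps around the transformer blocks. The sketch below uses plain NumPy matrices to show the shapes involved; in a PyTorch model these would typically be linear layers with learned weights. The initialisation values and function names are assumptions made for illustration.

```python
import numpy as np

MOTION_DIM = 132   # channels per motion frame (from the text)
EMBED_DIM = 768    # hidden size of the base GPT2 (from the text)

rng = np.random.default_rng(0)

# Input projection: takes the place of the token embedding lookup.
w_in = rng.normal(scale=0.02, size=(MOTION_DIM, EMBED_DIM))
b_in = np.zeros(EMBED_DIM)

# Output head: takes the place of the projection onto vocabulary logits.
w_out = rng.normal(scale=0.02, size=(EMBED_DIM, MOTION_DIM))
b_out = np.zeros(MOTION_DIM)

def embed_frames(frames):
    """Map (frames, 132) motion vectors to (frames, 768) embeddings."""
    return frames @ w_in + b_in

def predict_frames(hidden):
    """Map (frames, 768) hidden states back to (frames, 132) motion vectors."""
    return hidden @ w_out + b_out

frames = rng.normal(size=(256, MOTION_DIM))
hidden = embed_frames(frames)         # the transformer blocks would run here
next_frames = predict_frames(hidden)
```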

We train two models for 160 epochs, one processing sequences of 256 frames and one processing sequences of 512 frames. Both models work equally well. For the former we use a batch size of 32, while for the latter we can only afford a batch size of 20. We train both models from scratch, because the weights of the original GPT2 are optimised for another problem. We start with a learning rate of and decay it progressively during training.

A more important change concerns the generation step itself. The usual GPT2 model inherits from the GenerationMixin class, which contains the common code for all generative models, with different generation techniques. We have built a GPT, but our results are deterministic, unlike in GPT's case, so we do not use such methods. The solution was to override the generate function to use our own generation techniques. Two methods were implemented. The first one is for the generation of the next pose, where at each step we expect the correct sequence of frames and generate the next one. The second one is free autoregressive generation, where we give the model a start sequence and let it generate the rest.
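The difference between the two generation methods can be illustrated with a toy predictor. In this sketch, `predict_next` is a hypothetical callable standing in for the trained network, and the linear extrapolation used as a model is purely an assumption chosen so the behaviour is easy to follow. Context window limits are ignored for brevity.

```python
import numpy as np

def generate_free(predict_next, start, n_steps):
    """Free autoregressive generation: each prediction is fed back as input."""
    frames = list(start)
    for _ in range(n_steps):
        frames.append(predict_next(np.stack(frames)))
    return np.stack(frames[len(start):])

def generate_1b1(predict_next, ground_truth, n_context):
    """Next pose prediction: the model always sees the correct history."""
    preds = []
    for t in range(n_context, len(ground_truth)):
        preds.append(predict_next(ground_truth[:t]))
    return np.stack(preds)

def toy_model(history):
    """Stand-in for the network: linear extrapolation from the last two frames."""
    return 2 * history[-1] - history[-2]

# Quadratic toy motion with 3 channels, so the linear model is slightly wrong.
gt = (np.arange(10, dtype=float) ** 2)[:, None] * np.ones((1, 3))

free = generate_free(toy_model, gt[:2], n_steps=8)
one_by_one = generate_1b1(toy_model, gt, n_context=2)

err_free = np.abs(free - gt[2:]).mean()
err_1b1 = np.abs(one_by_one - gt[2:]).mean()
```

With this toy setup the step-by-step error stays constant, while the free generation error grows with the sequence length because each mistake is fed back into the next prediction.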

For motion capture denoising, we trained the model on the same CMU motion capture dataset as in the next pose generation case, but added a noising scheme to include artificial noise. We added Gaussian noise, which is more appropriate for this task. DenoiseAnimGPT uses mostly the same changes as AnimGPT. We opted for adding the noisy embedding to the input as an extra embedding besides the motion embeddings and the position embeddings. For this, we have added another linear projection at the input. To process it correctly, we roll the noisy sequence to the left, so that the masked self-attention attends to the full noisy sequence up to and including the current point.
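One way to read the roll to the left is as an alignment step: at position t the model holds the clean frame t and must output the clean frame t + 1, so the noisy frame t + 1 has to sit at position t. The sketch below shows this alignment under that assumption; the one-position shift and the handling of the last row are our interpretation, not details confirmed by the text.

```python
import numpy as np

def build_denoising_inputs(clean, noisy):
    """Align a noisy sequence with the clean history it conditions.

    clean, noisy: (frames, channels) arrays of the same clip.
    Returns (history, shifted_noisy, targets), each with frames - 1 rows, so
    that row t pairs clean frame t with noisy frame t + 1 and targets clean
    frame t + 1.
    """
    shifted = np.roll(noisy, shift=-1, axis=0)   # frame t + 1 moves to row t
    # After the roll the last row holds the wrapped-around first frame: drop it.
    return clean[:-1], shifted[:-1], clean[1:]

clean = np.arange(5, dtype=float)[:, None] * np.ones((1, 2))
noisy = clean + 0.5                               # toy, constant "noise"
history, shifted_noisy, targets = build_denoising_inputs(clean, noisy)
```

With a causal attention mask, position t then has access to the noisy frames up to the one it is asked to clean, which matches the behaviour described above.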

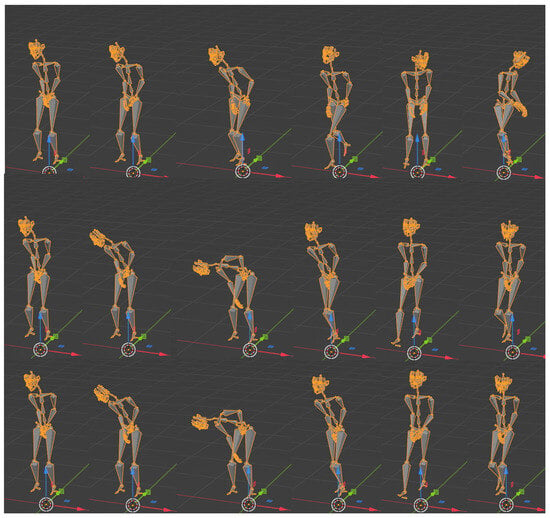

Qualitatively, AnimGPT obtained good results. Not only does it generate good quality animations overall, but it also does a good job on the next pose prediction problem. Some qualitative results can be observed in Figure 4. The top row is obtained by starting from the first several frames and then generating the movement freely. The middle row is obtained by a simulated animation process, which we call 1b1, in which the artist guides the generation step by step and the model only predicts the next pose. We used the ground truth as input and predicted the next pose at each step. The results are composed of the predicted next steps. The free generation follows the input motion initially, but starts drifting after a while. The next pose prediction, however, provides results that are identical to the ground truth, at least to the naked eye.

Figure 4.

AnimGPT results with the same input: top row, free generation, middle row, next step prediction, bottom row, ground truth.

For quantitative results, we use as a metric of comparison the mean absolute error between the ground truth motion vectors and the predicted motion vectors on the test set, a held out set of 569 motion data sequences. We also add the free generation to the comparison. We compute the results for the first 50, 100, 200, 350 and all the steps and report the results in Table 1. The free generation leads to increasing errors the more we run it, because it doesn’t necessarily generate the ground truth motion. However, this is to be expected and is in line with what is observed in [3]. We tried comparing our solution to theirs, but they target a different dataset, so direct comparisons are not possible. We also target a vastly different time-frame for the evaluation. The free generation model manages to generate good motion most of the time, but not the ground truth one. For our intended next pose prediction case, though, the model displays the desired behaviour with a comparable, low error for all time steps.

Table 1.

AnimGPT quantitative results.
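The metric can be computed per sequence as sketched below. The function name and the way errors are averaged (over all frames and channels of a single sequence) are assumptions; the text does not state how the 569 test sequences are aggregated.

```python
import numpy as np

def mae_at_horizons(pred, target, horizons=(50, 100, 200, 350, None)):
    """Mean absolute error over the first k frames; None means all frames."""
    abs_err = np.abs(pred - target)
    return {("all" if k is None else k): float(abs_err[:k].mean())
            for k in horizons}

# Toy sequence: the prediction drifts away from the target linearly in time.
target = np.zeros((400, 132))
pred = target + 0.01 * np.arange(400)[:, None]
scores = mae_at_horizons(pred, target)
```

In this toy case the error grows with the horizon, which is the pattern the text reports for free generation.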

We do not provide qualitative results for the DenoiseAnimGPT model here because they are not visible in pictures, only in video. However, we have observed that the model does a great job at denoising the input data. We measure this with the mean absolute error (see Table 2). There is no other publicly available model to compare against for this dataset. Two ways of using the model are presented, together with the error induced by the noisy data. The first one, denoised auto, starts from a sequence of manually denoised frames and lets the model autoregressively denoise the rest, without further intervention. In denoised 1b1, after a frame is denoised automatically, we consider that the user eliminates the remaining noise before going to the next frame, just as in the AnimGPT 1b1 case. The 1b1 version performs better than the autoregressive one, which is to be expected, since extra, clean information is available at inference. Comparing these results to AnimGPT shows that the denoising process leads to better animations for both the simple generation and the 1b1 generation [20]. The denoising model suffers far less from degradation over longer sequences in the autoregressive case than AnimGPT, which suffers greatly. This is because we feed the noisy information, which keeps the generation on the right path and lets the model focus solely on the denoising. We can observe a slight degradation for longer sequences, but it is not significant for the overall quality of the results.

Table 2.

DenoiseAnimGPT quantitative results.

Ablation Study

In order to compare our results with some other existing ones, we started with the methods presented in Section 2 for 3D character animation. These methods are summarized in Table 3.

Table 3.

Summary of the related methods.

Since our method is trained on a different dataset (the CMU motion dataset) it is not feasible to make a direct comparison between our results and other existing ones (also, methods [2,4] used their own datasets).

Also, none of the models from the Related Work section does exactly what AnimGPT does. In our case we generate the next pose based on all previous ones (correct and cleaned), so that it is easier to animate the character. The main competitor is the ERD method from [3], but its code is not public. This method extends an LSTM network by augmenting the model with encoder and decoder networks. Thus, to be able to check the performance of our method against other existing results, we replaced the transformer in both AnimGPT and DenoiseAnimGPT with an LSTM network and retrained them on the same dataset, the CMU motion dataset. In this way we created an architecture similar to the one used in the ERD method.

Results for AnimGPT compared with the LSTM-like model are given in Table 4, using as the metric the mean absolute error between the ground truth motion vectors and the predicted motion vectors on the test set.

Table 4.

AnimGPT compared quantitative results.

Results for DenoiseAnimGPT compared with the LSTM-like model are given in Table 5.

Table 5.

DenoiseAnimGPT compared quantitative results.

From Table 4 and Table 5 we can observe that AnimGPT performs better than the architecture similar to that of [3]. Even in the 1b1 version, the error of the LSTM network keeps accumulating over time.

For denoising, the LSTM-based model seems to introduce more noise than was initially present. With the 1b1 version the error is reduced, but its performance is still far below that of our solution.

4.2. Asset Generation

For asset generation, Stable Diffusion was used. The basic text2image [24] generation is handled by StableDiffusionPipeline, which receives only the text prompt as input. Inpainting is done with StableDiffusionInpaintPipeline, the most complex of all, with inputs represented by a text prompt, an image and the inpainting mask. Dreambooth [25] is a standard text2image model, so StableDiffusionPipeline is used for it as well [26].

For the background generation approach we used two diffusion models, the StableDiffusion-2-1-base (https://huggingface.co/stabilityai/stable-diffusion-2-1-base) (accessed on 10 July 2024) with a discrete Euler scheduler for the background seed generation and the StableDiffusion-2-inpainting (https://huggingface.co/stabilityai/stable-diffusion-2-inpainting) (accessed on 10 July 2024) for image outpainting. Since the two models must be used jointly, we run them using fp16. To remove unwanted artifacts, we used the negative prompts “logo, repetitions, writing, text, watermark”. To ensure a smoother transition, we blurred the margin of the outpainting mask with a Gaussian kernel, so that a part of the border of the last frame is slightly changed. The prompt used for both the seed image generation and the image completion is enriched with attributes to ensure better generation quality. The consistency of the image is ensured by outpainting, so we do not force the seeds to match.
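The mask softening step can be illustrated with a small NumPy-only blur. This is a sketch under assumptions: the kernel radius, the `sigma` value and the canvas layout (known image on the left, new region on the right) are chosen for illustration and are not taken from the paper.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1D Gaussian kernel truncated at three standard deviations."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def blur_mask(mask, sigma=4.0):
    """Separable Gaussian blur of a float mask, with edge padding."""
    k = gaussian_kernel(sigma)
    pad = len(k) // 2
    padded = np.pad(mask, pad, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

height, width, known = 64, 128, 64
mask = np.zeros((height, width))
mask[:, known:] = 1.0            # 1 = region to outpaint, 0 = region to keep
soft_mask = blur_mask(mask, sigma=4.0)
```

After blurring, pixels just inside the known region get a mask value between 0 and 1, so a thin band of the previous frame is allowed to change and the seam becomes less visible.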

For the asset generation use-case we employ a version of ControlNet trained on user doodles. This provides a powerful model that is capable of turning even the simplest sketches into detailed assets. Moreover, it offers artists the fine-grained control they need for obtaining exactly the assets they want. Since the model was trained on natural images scraped from the internet, its outputs include a background, which we remove with a salient object detection model bundled in the rembg (https://github.com/danielgatis/rembg) (accessed on 10 July 2024) package. More specifically, we used U2-Net [27], a clever pyramid of U-Nets [28], where different levels compute the salient regions at different scales. This is run using onnx-runtime. However, the results are not always ideal, with portions of the object missing in some cases. Since the doodles usually represent objects without holes, we used the initial sketch to build an outline mask. We fixed the automatically detected object mask by intersecting it with the filled outline mask. Since this approach is neither fail-proof nor perfect, we added an optional manual mask refinement step to correct any remaining mistakes, which can be integrated seamlessly in an application.

The visual effects pipeline requires two diffusion models, one for generating the initial texture image and one for editing the final effect. We use the same version of the diffusion model, StableDiffusion-v1-5 (https://huggingface.co/runwayml/stable-diffusion-v1-5) (accessed on 10 July 2024), for both, but with different pipelines. For texture generation we used the usual text2image pipeline, while for the editing we use an image2image approach. Effects cannot be generated without either of the two parts of the pipeline. Generating the starting image is crucial for consistency, otherwise the results would be unacceptably different. The image2image process is crucial for result quality, otherwise the effect would be just a cutout from an image, which is not desirable. It must also be noted that our approach is based on the StableDiffusion property that white regions are kept white as well as possible, so instead of generating a full picture through image2image, the model stylises only the content part. We use the same seed for the image2image part for consistency.

We computed the superpixels using the SLIC superpixels algorithm [29], pre-implemented in the scikit-image segmentation package. We use a compactness of 0.01 to focus more on colour than on spatial proximity and a sigma of 1. The number of segments is, however, the pivotal parameter. Choosing small values for it results in bigger superpixels, which leads to bigger elements being generated for the effect. Bigger values will result in an image closer to the given mask. We obtain the outline mask of the character by dilating the mask and applying an XOR operation with the original. To have some overlap between the character and the mask, we apply a second dilation operation. The final mask is obtained by intersecting the outline mask with the superpixels, such that if a superpixel intersects the mask, it is kept. This approach leads to a bigger mask, which is then used for cutting the texture image. After the image2image diffusion, we combine the asset and the effect with the asset mask, after we erode it to ensure there are no artefacts. This result is passed again through the initial mask detection algorithm to obtain an asset with transparent background.
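The mask construction can be sketched with NumPy alone, assuming a superpixel label map is already available. In practice the labels would come from the SLIC implementation in scikit-image with the parameters given above; here a regular grid of blocks stands in for them. The dilation widths, the 3x3 structuring element and the function names are assumptions made for illustration.

```python
import numpy as np

def dilate(mask, iterations=1):
    """Binary dilation with a 3x3 square element, written with array shifts."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant", constant_values=False)
        grown = np.zeros_like(out)
        for dy in range(3):
            for dx in range(3):
                grown |= p[dy:dy + h, dx:dx + w]
        out = grown
    return out

def effect_mask(char_mask, labels, outline_width=3, overlap=1):
    """Keep every superpixel that touches the outline of the character."""
    char_mask = char_mask.astype(bool)
    outline = dilate(char_mask, outline_width) ^ char_mask  # ring around the character
    outline = dilate(outline, overlap)                      # overlap with the character
    touched = np.unique(labels[outline])                    # superpixels hit by the ring
    return np.isin(labels, touched)

# Toy data: a square "character" and a grid of 4x4 blocks as fake superpixels.
char_mask = np.zeros((32, 32), dtype=bool)
char_mask[12:20, 12:20] = True
rows, cols = np.indices(char_mask.shape)
labels = (rows // 4) * 8 + (cols // 4)

final_mask = effect_mask(char_mask, labels)
```

Because whole superpixels are kept, the resulting mask is larger and more irregular than the plain outline, which is what gives the cut texture its organic shape when real superpixels are used.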

Generating effects for non-moving assets, or for cases when there is not enough movement to produce enough diversity, can easily be done by translating the mask randomly. We compute a random translation matrix and apply it to the mask with numpy, then we follow the algorithm exactly as in the former case. Once the image is generated, we apply the inverse transform to bring the effect back to its origin.
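The jitter and its inverse can be sketched as follows. For simplicity this example uses whole-pixel shifts with wrap-around (`np.roll`) instead of an explicit translation matrix, which is an assumption made so that the inverse is exact; `max_shift` is a hypothetical parameter.

```python
import numpy as np

def random_shift(rng, max_shift=8):
    """Draw a random integer offset (dy, dx) within +/- max_shift pixels."""
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    return int(dy), int(dx)

def translate(image, dy, dx):
    """Shift an image by whole pixels; content wraps around the border."""
    return np.roll(image, shift=(dy, dx), axis=(0, 1))

rng = np.random.default_rng(0)
mask = np.zeros((64, 64), dtype=bool)
mask[24:40, 24:40] = True

dy, dx = random_shift(rng)
jittered_mask = translate(mask, dy, dx)

# The effect would be generated from the jittered mask here; the mask itself
# is used as a stand-in for the generated image.
effect = jittered_mask
restored = translate(effect, -dy, -dx)
```

Drawing a new offset for every frame makes each generated image slightly different, while the inverse shift keeps the effect anchored to the asset.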

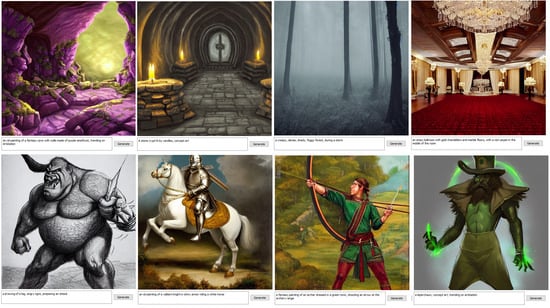

Obtained effects are good, but careful prompt editing should be done to obtain the best results. In general, the model does a great job with good descriptions of the outcome. The generation style is also important. The best use-cases we have identified for plain text guided image generation are the generation of backgrounds and settings for the action and the generation of starting points for the asset creation. Some results can be observed in Figure 5. The top row shows examples for the former. We can see the settings are believable and, if not ready to use, can be easily adapted for different projects. The bottom row targets the latter. While not perfect, the generated assets represent good starting points for the creative process. Either way, the results show great potential for creating moodboards.

Figure 5.

Stable Diffusion, text guided image generation results.

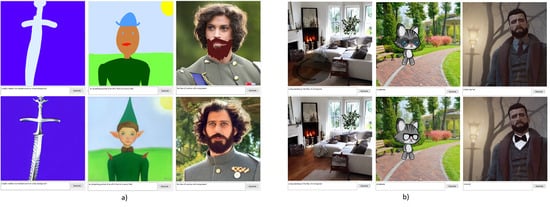

Image to image generation represents a great use-case for asset generation, because it allows the artist to guide the model better, with minimal effort. Stable Diffusion obtains great results from simple doodles, as can be observed in Figure 6a. Artists get inspiration and a better starting point for assets and characters, and can also edit the appearance of the characters. However, the appearance change approach is limited, because it changes the whole picture. In our example, the identity of the person is lost. A good property of this solution is that multiple, cascading edits can be made. For example, we can generate the character in the middle, then keep adding details to the photo until we are happy with the results.

Figure 6.

Stable Diffusion, (a) image2image generation results (b) inpainting results.

Unlike image2image generation, text guided inpainting leaves the unmasked regions of the photo unchanged. Theoretically, we could mask the portion of the image we want to edit and use the prompt to replace that zone with what we want. In practice, this solution does not work as well as expected. In Figure 6b, we can see that the model fails to place a dog on the floor of the living room, as instructed by the prompt, and instead eliminates the carpet. The solution is able to edit the other two photos, with somewhat limited performance (the glasses look unrealistic and the shirt is too white). However, the model is limited. For example, in the case of the rightmost game character, trying to add a top hat results in failure every time.

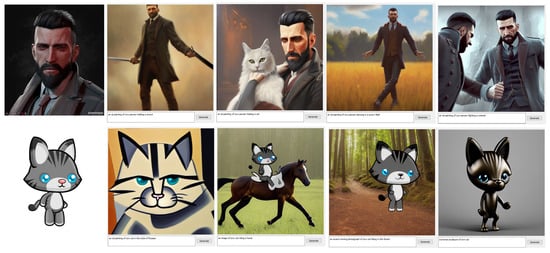

As it can be seen, the model learns the concept presented in the pictures and is able to transpose that concept in multiple settings. For example, the model is able to transfer the features of a 2D drawing in a 3D object (Figure 7—the cat sculpture). However, the usefulness of these results to our use-case is limited to providing inspiration, in game art or cutscenes.

Figure 7.

Stable Diffusion, Dreambooth results. The leftmost column shows sample images of the original concept.

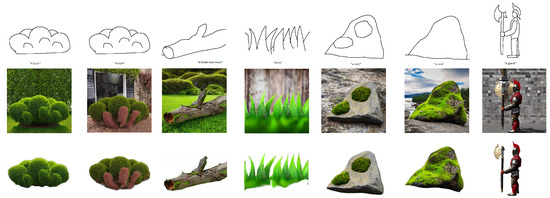

By coupling ControlNet with background removal, we allow artists to create assets according to their needs. Some results can be observed in Figure 8. As it can be seen, an inexperienced user like ourselves can generate high quality assets starting from nothing more than a vague description and a vague scribble of it. The generated results are realistic and highly detailed and their resolution is high enough to be used in practice, thanks to latent diffusion. ControlNet makes it easy for users to generate a variety of assets, including characters like the guard.

Figure 8.

Asset generation results using our pipeline, ranging from decorative elements to characters.

To demonstrate the usefulness of asset generation, we’ve created an extremely simplistic demo game with Pygame. Most assets are created with generative techniques, with the exception of the cat sprite, which we have animated with our CharacterGAN. The assets are generated with our asset generation pipeline, while the background is generated with our background generation pipeline, presented in the previous section. One setting from the game can be observed in Figure 9. While there are problems with blending the assets, especially with the lighting, it looks more than adequate. This result proves that generative techniques are a great tool for artists, because we managed to obtain a good looking result with limited artistic skills and experience in the field. An experienced artist could effortlessly blend the assets in the image using tools like Photoshop to obtain better looking scenes.

Figure 9.

Screenshot from a demo game created with the proposed generative techniques.

A set of results of our effect generation approach can be observed in Figure 10. Our pipeline enables users to generate various effects, limited only by imagination and common sense (it would be hard to make an effect with couches). The results are of good quality and diverse. The leftmost three effects are generated with a full body, automatically generated mask, while the rightmost three are generated with a mask limited to the head region. By providing custom masks, users can generate effects in whatever region they please. All results use the effects as background effects, but they can also be coupled with background removal and placed in the front. This enables creation of effects such as spells.

Figure 10.

Effect generation results and their corresponding prompts. The first character uses a full body mask, while the second uses one just for the head.

The effects do not have to be bound to a character. By combining a prompt with a sensible mask of their choice, users can create an animated effect, as can be observed in Figure 11. We limit ourselves to providing just several frames of the animation. The position jitter ensures that the images are not identical, while the texture prior preserves the temporal coherence, resulting in the desired animated effect.

Figure 11.

Generating an animated effect with the prompt “purple lightning” and the mask given on the left, with the black background.

5. Conclusions and Future Work

The paper proposed two methods for character animation, both transformer based solutions inspired by GPT: AnimGPT and DenoiseAnimGPT. The former solves the problem of next pose prediction based on a set of previous poses. Thus, instead of making artists move all the keypoints of the skeleton for the next frame, the model predicts the next pose automatically, which reduces the amount of work. The latter model targets the problem of predicting the clean current pose based on all previous poses and the current noisy pose. Both models obtained good results when trained on the CMU motion dataset. Diffusion models were also used for asset generation. We created a method that generates backgrounds by combining the idea of text conditioned generation and text conditioned image editing.

As future work, the two models proposed for 3D character animation will be trained on other datasets. This implies that bigger models can be trained, and since going large scale has led to better results in natural language processing, motion sequences should follow suit. Even without bigger models, more data should lead to better generalisation and better quality animations, especially in the free generation case. For asset generation we will integrate more animated effects. We will also extend the method to generate assets for the 3D world.

Author Contributions

Conceptualization, I.G.M. and V.-C.L.-S.; methodology, I.G.M.; software, V.-C.L.-S.; validation, V.-C.L.-S.; formal analysis, I.G.M.; investigation, V.-C.L.-S.; resources, I.G.M.; data curation, V.-C.L.-S.; writing—original draft preparation, V.-C.L.-S.; writing—review and editing, I.G.M.; visualization, V.-C.L.-S.; supervision, I.G.M.; project administration, I.G.M.; funding acquisition, I.G.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant of the Ministry of Research, Innovation and Digitization, CCCDI-UEFISCDI, project number 97PTE 21/06/2022, PN-III-P2-2.1-PTE-2021-0255, within PNCDI III.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: http://mocap.cs.cmu.edu/.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hinz, T.; Fisher, M.; Wang, O.; Shechtman, E.; Wermter, S. Charactergan: Few-shot keypoint character animation and reposing. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022.

- Holden, D.; Saito, J.; Komura, T. A Deep Learning Framework for Character Motion Synthesis and Editing. ACM Trans. Graph. 2016, 35, 1–11.

- Fragkiadaki, K.; Levine, S.; Malik, J. Recurrent Network Models for Kinematic Tracking. arXiv 2015, arXiv:1508.00271.

- Holden, D.; Komura, T.; Saito, J. Phase-Functioned Neural Networks for Character Control. ACM Trans. Graph. (TOG) 2017, 36, 1–13.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9.

- Po, R.; Wang, Y.; Golyanik, V.; Aberman, K.; Barron, J.T.; Bermano, A.; Chan, E.; Dekel, T.; Holynski, A.; Kanazawa, A.; et al. State of the Art on Diffusion Models for Visual Computing. Comput. Graph. Forum 2024, 43, e15063.

- Dhariwal, P.; Nichol, A. Diffusion Models Beat GANs on Image Synthesis. arXiv 2021, arXiv:2105.05233.

- Kuznedelev, D.; Startsev, V.; Shlenskii, D.; Kastryulin, S. Does Diffusion Beat GAN in Image Super Resolution? arXiv 2024, arXiv:2405.17261.

- Adorni, G.; Boelter, F.; Lambertenghi, S.C. Investigating GANsformer: A Replication Study of a State-of-the-Art Image Generation Model. arXiv 2023, arXiv:2303.08577.

- Huang, Y.; Huang, J.; Liu, Y.; Yan, M.; Lv, J.; Liu, J.; Xiong, W.; Zhang, H.; Chen, S.; Cao, L. Diffusion Model-Based Image Editing: A Survey. arXiv 2024, arXiv:2402.17525. Available online: https://dblp.org/rec/journals/corr/abs-2402-17525.bib (accessed on 10 July 2024).

- Kurtis Pykes. How to Run Stable Diffusion Locally to Generate Images. Available online: https://www.datacamp.com/tutorial/how-to-run-stable-diffusion (accessed on 10 July 2024).

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An open large-scale dataset for training next generation image-text models. arXiv 2022, arXiv:2210.08402.

- Zhang, L.; Agrawala, M. Adding Conditional Control to Text-to-Image Diffusion Models. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3813–3824.

- Ho, J.; Salimans, T. Classifier-Free Diffusion Guidance. arXiv 2022, arXiv:2207.12598.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Liu, X.; Zheng, Y.; Du, Z.; Ding, M.; Qian, Y.; Yang, Z.; Tang, J. GPT Understands, Too. AI Open 2023.

- Carnegie Mellon University Motion Capture Database. Available online: http://mocap.cs.cmu.edu/ (accessed on 10 July 2024).

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Oflis, F.; Chaudhry, R.; Kurillo, G.; Vidal, R.; Bajcsy, R. Berkeley mhad: A comprehensive multimodal human action database. In Proceedings of the 2013 IEEE Workshop on Applications of Computer Vision (WACV), Clearwater Beach, FL, USA, 15–17 January 2013; pp. 53–60.

- Xia, S.; Wang, C.; Chai, J.; Hodgins, J. Realtime style transfer for unlabeled heterogeneous human motion. ACM Trans. Graph. 2015, 34, 119:1–119:10.

- Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6m: Large scale datasets and predictive methods for 3d human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1325–1339.

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical text-conditional image generation with CLIP latents. arXiv 2022, arXiv:2204.06125.

- Ruiz, N.; Li, Y.; Jampani, V.; Pritch, Y.; Rubinstein, M.; Aberman, K. DreamBooth: Fine Tuning Text-to-image Diffusion Models for Subject-Driven Generation. arXiv 2022, arXiv:2208.12242.

- von Platen, P.; Patil, S.; Lozhkov, A.; Cuenca, P.; Lambert, N.; Rasul, K.; Davaadorj, M.; Wolf, T. Diffusers: State-of-the-Art Diffusion Models. 2022. Available online: https://github.com/huggingface/diffusers (accessed on 10 July 2024).

- Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going Deeper with Nested U-Structure for Salient Object Detection. Pattern Recognit. 2020, 106, 107404.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).