Abstract

Anxiety in older individuals is understudied despite its prevalence. Investigating its occurrence can be challenging, yet understanding the factors influencing its recurrence is important. Gaining insights into these factors through an explainable, probabilistic approach can enhance improved management. A Bayesian network (BN) is well-suited for this purpose. This study aimed to model the recurrence of anxiety symptomatology in an older population within a five-month timeframe. Data included baseline socio-demographic and general health information for older adults aged 60 years or older with at least mild depressive symptoms. A BN model explored the relationship between baseline data and recurrent anxiety symptomatology. Model evaluation employed the Area Under the Receiver Operating Characteristic Curve (AUC). The BN model was also compared to four machine learning models. The model achieved an AUC of 0.821 on the test data, using a threshold of 0.367. The model demonstrated generalisation abilities while being less complex and more explainable than other machine learning models. Key factors associated with recurrence of anxiety symptomatology were: “Not being able to stop or control worrying”; “Becoming easily annoyed or irritable”; “Trouble relaxing”; and “depressive symptomatology severity”. These findings indicate a prioritised sequence of predictors to identify individuals most likely to experience recurrent anxiety symptomatology.

1. Introduction

The concepts of anxiety recurrence and remission are crucial for understanding the nature of anxiety disorder. Anxiety recurrence is defined as the re-emergence of symptoms after a period of remission [1]. Remission is achieved when the patient returns to their usual self, possibly presenting mild or no residual symptoms of the anxiety disorder [2].

A study by Scholten et al. [3] indicated that 23.5% of individuals with remitted anxiety will experience a recurrence of anxiety within two years. This study highlights that recurrent anxiety presents an obstacle to individual well-being and societal growth. Despite advancements in understanding its relationship with predictors, recurrent anxiety continues to pose significant challenges for those who suffer from it.

Minimising recurrent anxiety is crucial not only for individuals but also for society, as anxiety symptomatology affects well-being, social relationships, and work productivity [4], resulting in economic burden including increased treatment costs [5]. To prevent recurrent anxiety, it is essential to identify those at highest risk and understand the most important predictors. This understanding is crucial so that relapse prevention efforts can be targeted towards those patients most in need [1].

Several studies have examined the relationships between anxiety recurrence and its predictors. For instance, Mavissakalian and Guo [6] identified general measures of anxiety, fearfulness, work, and home disability as key predictors of the recurrence of anxiety symptomatology. In this study, logistic regression was employed to analyse a set of seven predictors, generating a combination of nine different models. The model that achieved the best performance highlighted the key predictors associated with recurrent anxiety symptomatology. Another study demonstrated that the discontinuation of antidepressant medication serves as a predictor for anxiety symptomatology recurrence [7]. This approach utilised odds ratios [8] to assess the impact of medication discontinuation on recurrence rates.

Further research has reinforced the importance of other predictors. Comorbid generalised anxiety and depression [2], worsening psychosocial impairment [9], and fear of anxiety-related sensations along with neuroticism [10] were all identified as important predictors of anxiety symptomatology recurrence. In each of these instances, proportional hazards regression was applied to evaluate the influence of these predictors [11].

While these methodologies are valuable for identifying predictors of anxiety recurrence, there are some limitations. In logistic regression, multicollinearity between predictors can cause difficulties in estimating the regression parameters accurately [12]. This issue is particularly challenging when analysing mental health disorders, where many predictors may have overlapping symptoms. Additionally, the interpretation of results from proportional hazards regression and odds ratios can be complex, particularly when dealing with multiple covariates and their interdependencies.

It is noteworthy that none of these studies have specifically focused on the older population, despite increasing concerns about anxiety symptomatology within this group. Anxiety in older adults is particularly troubling due to its high recurrence rates and its potential impact on the development and prognosis of other age-related health issues, such as disability and depression [13].

A 9-year prospective study involving older adults aged 55 to 85 at baseline demonstrated that the presence of more anxiety symptoms at baseline predicted the onset of anxiety symptomatology, emphasising the importance of targeting individuals displaying early symptoms but not meeting the specific diagnostic criteria for anxiety symptomatology [14].

In this context, the application of Bayesian networks (BNs) offers significant advantages, addressing some of the limitations present in current methods for understanding factors associated with recurrent anxiety symptomatology. BNs represent a graph-based approach that captures the interactions among variables, thereby enhancing the explainability [15] of their mutual influences [16]. By accounting for the interdependencies between variables, BNs provide a robust framework for feature selection [17], understanding relationships [18,19], extracting insights [20], and making predictions [21].

In the realm of mental health, BNs enable healthcare providers to simulate complex connections and gain a comprehensive view of the primary symptoms associated with a mental disorder [22]. This simultaneous modeling of all variables allows for a more flexible evaluation of the effects of interventions. Healthcare providers can test hypothetical interventions by adding or removing variables within the network, thereby assessing the impact of various strategies [23].

Moreover, the probabilistic nature of BNs [24] provides quantitative information regarding the uncertainty associated with predictions [25]. This feature is particularly valuable as it captures complex relationships more effectively compared to conventional machine learning techniques [26]. Thus, Bayesian networks offer a compelling alternative for enhancing our understanding of recurrent anxiety symptomatology.

The objective of this study is to use BNs to model the recurrence of anxiety symptomatology within a five-month timeframe in a population aged 60 years and older with mild to severe depressive symptoms and to investigate the key factors that have an impact on its occurrence.

2. Materials and Methods

2.1. Data

We utilised a dataset from randomised controlled trials assessing the effectiveness of mobile messaging psychosocial interventions for depression and subthreshold depression among older adults in resource-limited settings in Brazil [27]. Specifically, we focused on the groups that did not receive the psychosocial interventions, which comprised two subgroups: one with 305 individuals identified with depressive symptomatology (9-item Patient Health Questionnaire (PHQ-9) scores ≥ 10) and another with 231 individuals experiencing subthreshold depressive symptomatology (9-item Patient Health Questionnaire (PHQ-9) scores between 5 and 9, inclusive).

The data included baseline information on socio-demographics (gender, age, marital status, race), self-reported general health (hypertension, diabetes, depression, balance), depressive symptomatology (PHQ-9), anxiety symptomatology (GAD-7), loneliness (3-item UCLA), health-related quality of life (EQ-5D-5L), and capability well-being (ICECAP-O).

Additionally, the dataset provided information on two follow-up periods: the first at three months after receiving a single message (follow-up 1) and the second at five months after baseline (follow-up 2). For these follow-ups, the only data included were related to depression (PHQ-9) and anxiety (GAD-7).

2.2. Outcome

In this study, an individual was considered to have recurrent anxiety symptomatology if they had a total score of 10 or higher on the GAD-7 questionnaire in any of the following scenarios: (a) at baseline and follow-up 1; (b) at baseline and follow-up 2; (c) at baseline, follow-up 1, and follow-up 2.

Table A1 in the Appendix A summarises the socio-demographic and health-related characteristics of this cohort.

2.3. Methodology Overview

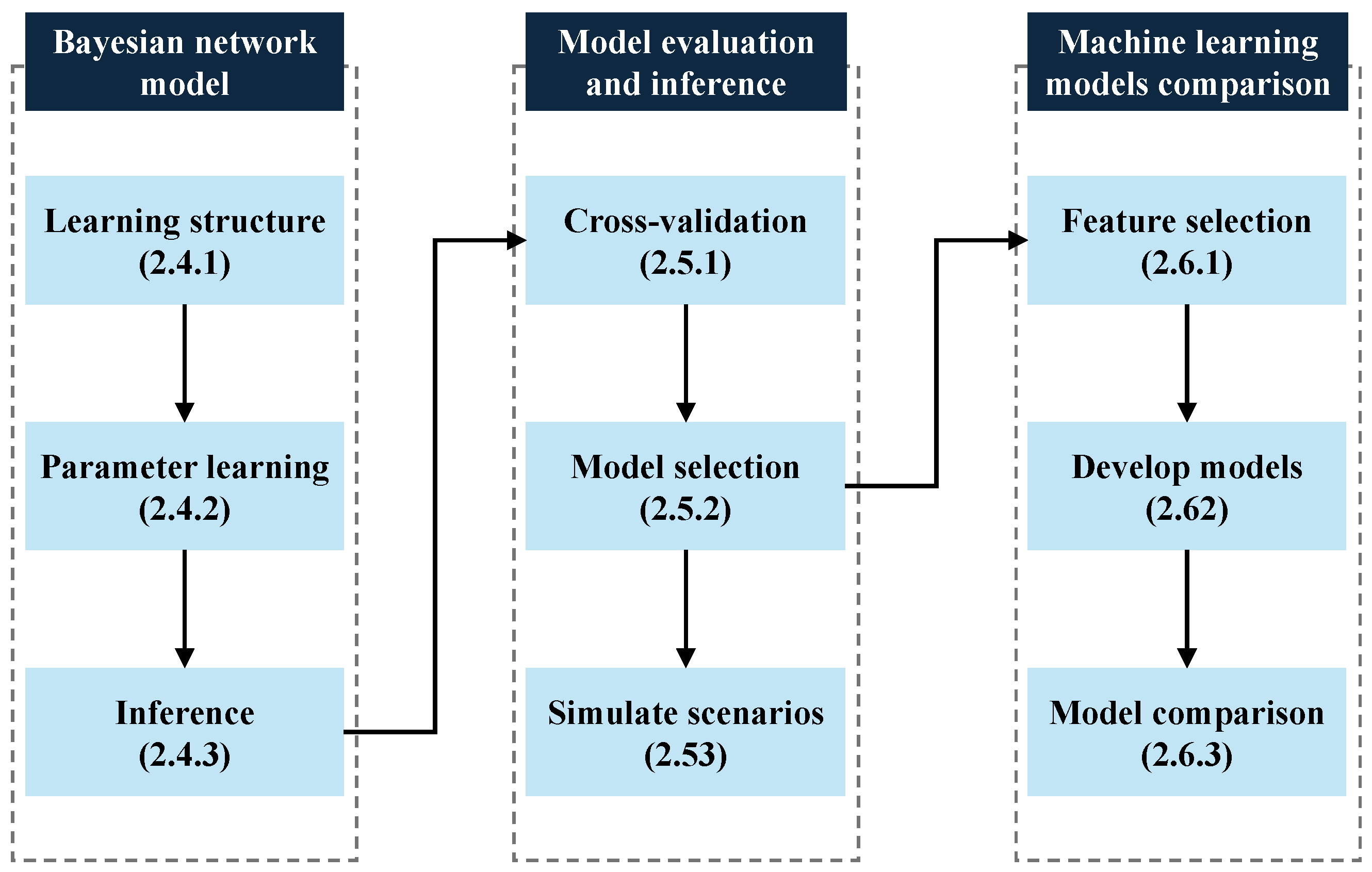

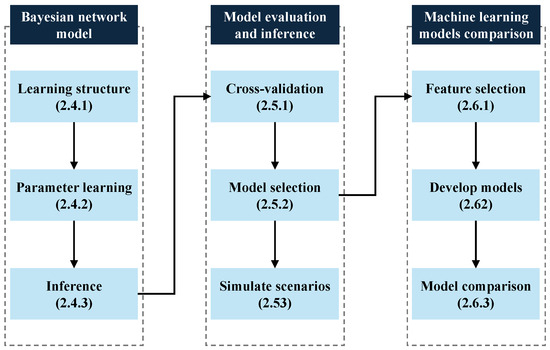

Figure 1 illustrates the nine-step methodology, organised into three main sections.

Figure 1.

Flowchart of the methodology.

The first section focuses on the development of the Bayesian network model. The second section details the evaluation of the Bayesian model and the simulation of scenarios to identify key predictors of recurrent anxiety symptomatology. The third section outlines the development of other machine learning models and comparison of their performance. Each section is further elaborated in the following subsections, corresponding to the numbered boxes in the figure.

2.4. Bayesian Network Model

To comprehend the relationships between the baseline data and our outcome, we developed a Bayesian network model. A Bayesian Network is a directed acyclic graph comprising nodes and edges, utilised to construct an approximation of the joint probability distribution over all variables and the outcome of interest. This joint probability distribution provides all necessary information for making probabilistic inferences on one variable given knowledge of the other variables in the distribution. Nodes represent variables, while directed edges, depicted by arrows, elucidate relationships among these variables [28].

2.4.1. Learning Structure

In our approach, we learned the BN from data [29,30]. We utilised a bootstrap approach [31] to generate 1000 samples of the BN using a constraint-based algorithm, named Incremental Association Markov Blanket (IAMB) [32]. The IAMB algorithm effectively constructs Bayesian networks by incrementally including variables based on mutual information [33], the latter of which quantifies the statistical relationship between variables. This approach helps to identify relevant variables and construct a network that precisely represents the relationships found in the data.

A bootstrap approach ensures that the results are robust and helps minimise false patterns. This method outputs a summary table, illustrated in Table 1, that shows pairs of variables (called “features”) and their relationships. The first two columns, “From” and “To”, show the features associated with the outcome. The last two columns, “Strength” and “Direction”, indicate the likelihood of a connection between the pairs and the direction of this connection. This process helps us understand which variables are related to the outcome, how strong their relationships are, and the direction of these relationships [34].

Table 1.

Bootstrap output example.

We only selected feature pairs where our outcome was present in either the “From” column or the “To” column. This selection was made because we are specifically interested in understanding the relationship between the outcome and the features at baseline. Additionally, we filtered only those directions with a probability greater than 50% and then ordered the summary table in descending order based on strength.

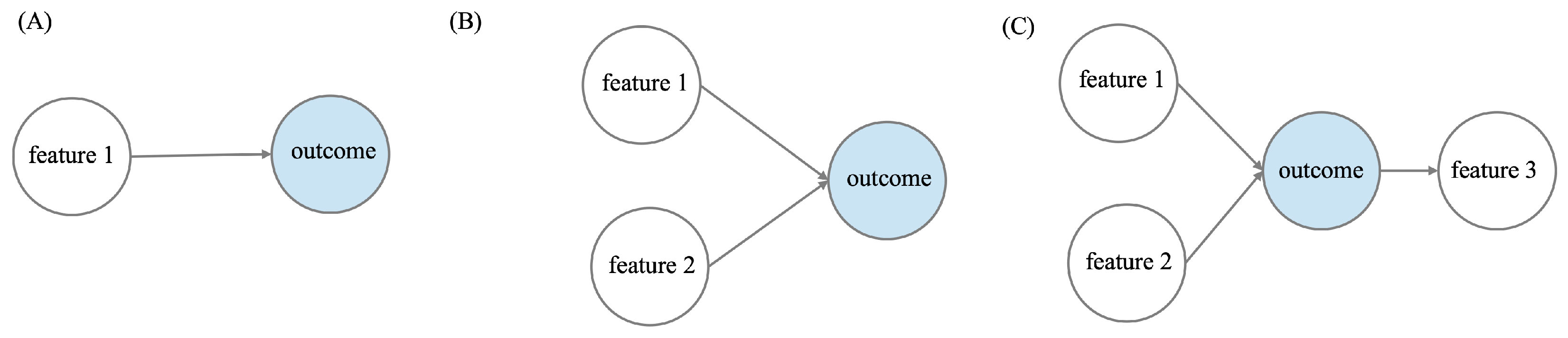

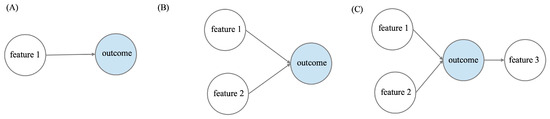

Next, we began constructing the first BN using only the first row of the table. As depicted in Table 1, this initial BN would consist of two nodes, with the “feature 1” node having an arrow pointing towards the “outcome” node (Figure 2A). We then proceeded to build another BN using the first two rows. In this case, our outcome node receives arrows from both the “feature 1” and “feature 2” nodes, as illustrated in Figure 2B. We continued this process until all the connections from the bootstrap output had been used.

Figure 2.

Bayesian networks constructed by incrementing the connections from the bootstrap output table. (A) First BN utilising the first row of the bootstrap output table. (B) Second BN utilising the first two rows of the bootstrap output table. (C) Third BN utilising the first three rows of the bootstrap output table.

2.4.2. Parameter Learning

In a Bayesian network, parameter learning involves estimating the Conditional Probability Distributions (CPD) for each node, based on observed data and assuming the network structure is known. These CPDs are derived from all possible combinations of the parent nodes’ values, with the resulting values referred to as parameters.

For each Bayesian network, defined by the incremental addition of nodes ordered by their strength, we computed the CPD associated with each node. This computation was carried out using the Bayesian method [35].

2.4.3. Inference

Predictions for the outcome were made using exact inference [28], where the posterior probability is calculated based on a set of events. These events consist of all possible values of the nodes connected to our outcome.

Specifically, for cases (A), (B), and (C) in Figure 2, the joint probability distributions can be described by Equations (1)–(3), respectively:

where , , and are the probability distributions of feature 1, 2, and 3. represents the conditional probability distribution of the outcome given feature 1. denotes the conditional probability distribution of the outcome given feature 1 and 2, and describes the conditional probability distribution of the feature 3 given the outcome.

We define features 1 and 2 as “parent” node of the “child” node outcome, while feature 3 is the “child” of its “parent” node outcome. Therefore, we can generalise the joint probability distribution for all other Bayesian network structures as shown in Equation (4):

where denotes the parent of node . The conditional probability for a node without parents is simply its prior probability .

To develop the Bayesian Network model, we utilised the bnlearn package in R [36].

2.5. Model Evaluation and Inference

To rigorously evaluate our data-driven approach statistically, for each BN constructed as explained in the previous section, we employed repeated cross-validation.

2.5.1. Cross-Validation

The repeated cross-validation was employed with four folds, repeated 25 times using different random samples from the training data. In each of the 25 iterations, the dataset was divided into subsets, allowing the models to be trained on three folds and validated on the remaining fold. This process was conducted solely with the training data, which represents 70% of our total dataset to avoid any bias when evaluating the performance of the model on the unseen test data.

For each fold, using the predictions described in the previous section, we recorded the Area Under the receiver operating Characteristic curve (AUC), F1-score, and the threshold that maximised both sensitivity and specificity simultaneously, as determined by Youden’s index, in both the training folds (three folds) and the validation fold (one fold).

2.5.2. Model Selection

The Bayesian network model was selected based on the one that achieved the highest AUC value during the validation phase, and this model was then tested on the remaining 30% of the data that had not been used for training. Using the BN that maximises the AUC, we learned the parameters on the entire training dataset, enabling us to generate new predictions for the test data using exact inference and the optimal threshold identified in the repeated cross-validation. Additionally, we calculated AUC and F1-score values on the test data for comparison with the cross-validation results.

2.5.3. Simulate Scenarios

Utilising the chosen BN and the learned parameters, we proceeded with an inference analysis by calculating the conditional probability tables of our outcome given the features individually. Moreover, we investigated the combinations of the features, assessing how their different values would collectively impact the outcome. We then analysed the individual contributions of features and also their interactions, elucidating potential synergistic or antagonistic effects on the outcome variable. This analysis was conducted by calculating probabilities, marginalising the selected features in each scenario.

2.6. Machine Learning Models Comparison

While BNs offer advantages such as simultaneously capturing the interdependencies of variables, uncovering complex hidden patterns, and allowing for the simulation of intervention effects, we also evaluated how the BN model performs compared to other machine learning models.

2.6.1. Feature Selection

We used Recursive Feature Elimination with Cross Validation (RFECV) to select the features for developing the machine learning models. RFECV [37] is a feature selection method that iteratively eliminates the least important features from the dataset, while evaluating the model’s performance through cross-validation. This process uses a supervised learning estimator that provides information regarding feature importance. In this study, we used Logistic Regression as the estimator. After selecting the features, we used the same selected features for all machine learning models.

2.6.2. Develop Models

It is common practice to fine-tune the parameters when developing a model, a process known as hyperparameter optimisation. This typically enhances the model’s performance. To fine-tune the hyperparameters and evaluate the model’s performance in a robust and unbiased manner, we applied nested cross-validation [38]. Nested cross-validation involves two main loops: an outer loop and an inner loop. In the outer loop, the dataset is divided into multiple folds. In each iteration of the outer loop, one fold is reserved as the test set, and the remaining folds are used as the training set. The outer loop is dedicated to evaluating the model.

In the inner loop, nested within the outer loop, hyperparameter tuning is performed. Here, the training set is further divided into folds, with one fold held out as a validation set, while the rest are used for training. Multiple models are trained and evaluated within this loop to find the best-performing set of hyperparameters.

After the inner loop completes, the set of hyperparameters that provided the best performance on the training folds is used on the test dataset reserved in the outer loop. The best performance is usually measured by maximising a defined metric, such as AUC or F1-score. The performance metrics obtained from each iteration of the outer loop are summarised to provide an overall assessment of the model’s performance.

In this study, we used nested cross-validation with four folds for both the inner and outer loops and 25 repetitions, resulting in 100 evaluations, similar to the repeated cross-validation used in the Bayesian network model. To fine-tune the hyperparameters, we employed Bayesian hyperparameter optimisation maximising the AUC metric [39].

Furthermore, we recorded the AUC metric, including the minimum, mean, maximum, and standard deviation from the outer loop iterations. Next, we selected the hyperparameters that resulted in the highest AUC value across the 100 evaluations and fitted the models with these hyperparameter settings on the test data.

2.6.3. Model Comparison

The Bayesian network model applied in this study was compared with four other models: Logistic Regression, the SGDClassifier [40], XGBoost [41], and the Relevance support machine (RVM) [42]. To ensure a fair comparison, we maintained identical splits of training and testing data across all four models.

We compared the AUC metrics of the four models with the AUC metric of the Bayesian network model.

The models were implemented in Python 3.7.7 using packages SGDClassifier, LogisticRegression, XGBClassifier, and EMRVM. Python is freely available open source software (Python Software Foundation).

3. Results

3.1. Data Processing

First, the data were filtered to ensure complete information on the GAD-7. We deleted 71 cases where information for the GAD-7 questionnaire was missing. This was because the study was designed to initially screen the GAD1 and GAD2 questions. If their sum was greater than or equal to 1, the remaining five questions were assessed; otherwise, these values were missing.

After the initial filtering, the descriptive analysis identified six features with more than 25% of the cases missing: “hypertensioncontrolled”, “diabetescontrolled”, “hypertensiontreatment”, “diabetestreatment”, “depressiontreatment”, and “falls”. However, when we correlated these six variables with other features, it was possible to infer the missing values.

For “hypertensioncontrolled” and “diabetescontrolled”, the missing cases were 99% correlated with not having hypertension and diabetes, respectively. Therefore, we filled the missing values for both features with “yes”, based on the assumption that if a participant does not have hypertension or diabetes, their condition is controlled.

For “hypertensiontreatment”, “diabetestreatment”, “depressiontreatment”, and “falls”, the missing cases were 100% correlated with not having hypertension, diabetes, depression, and balance problems, respectively. Thus, we filled these missing values with “no”, assuming that if a participant does not have the condition, they do not require treatment for it.

After filling in these missing values, there were still 5% of values missing randomly, affecting 14 individuals, who were consequently dropped from the analysis.

3.2. Bayesian Network Construction

The results of the bootstrap are illustrated in Table 2, showing nine connections between features and the outcome. They include “gad6” (Becoming easily annoyed or irritable), “gad2” (Not being able to stop or control worrying), “Depression group” (four PHQ-9 score groups: 5–9, 10–14, 15–19, 20 or above), “gad4” (Trouble relaxing), “ucla3” (How often one feels isolated from others), “gad1” (Feeling nervous, anxious, or on edge), “ucla1” (Lack of company), “phq5” (Poor appetite or overeating), and “depression” (Have you been diagnosed with depression).

Table 2.

Results of the 1000 samples bootstrap.

These nine connections are all directly connected to the outcome and appeared at least once in the 1000 bootstrap samples used while building the Bayesian Network, as discussed in Section 2.4.

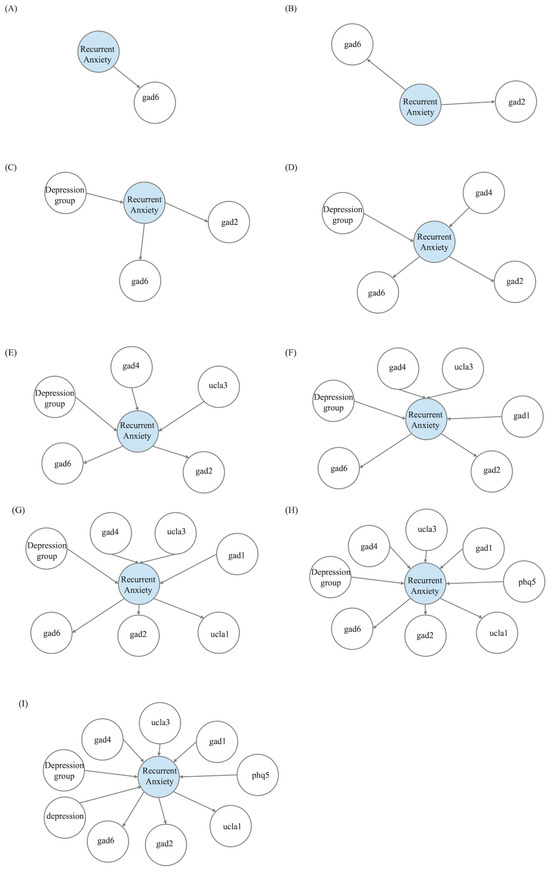

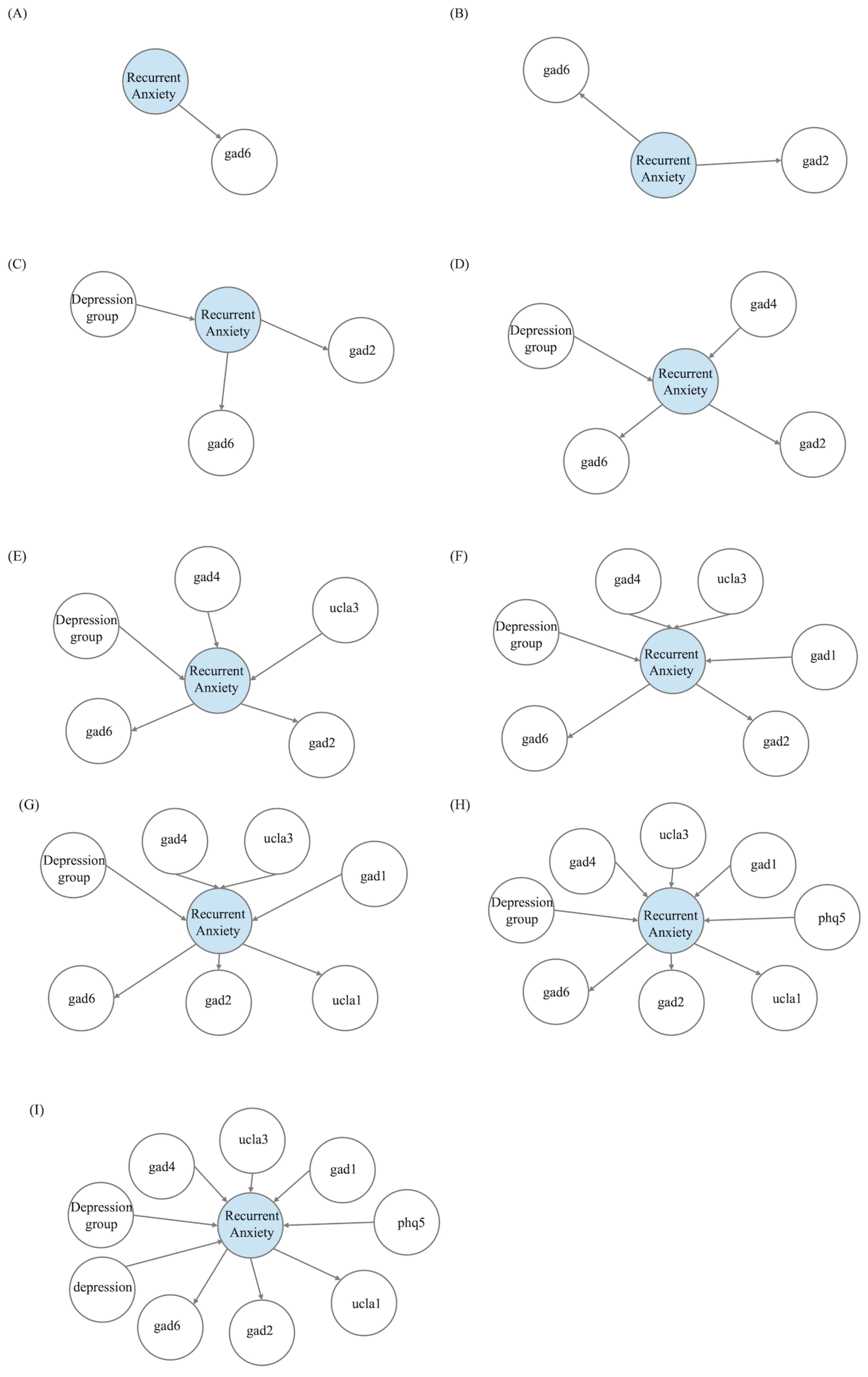

As explained in Section 2.4, we constructed nine different Bayesian Networks. We began with the first BN, which had two nodes, with a directed arrow from our outcome “Recurrent anxiety” to “gad6”. Next, we added the node “gad2” to the “Recurrent anxiety” node, maintaining the connection used in the first BN. Then, we incremented from BN number 3 to number 9 by adding nodes according to Table 2. All nine constructed BNs can be seen in Appendix A Figure A1.

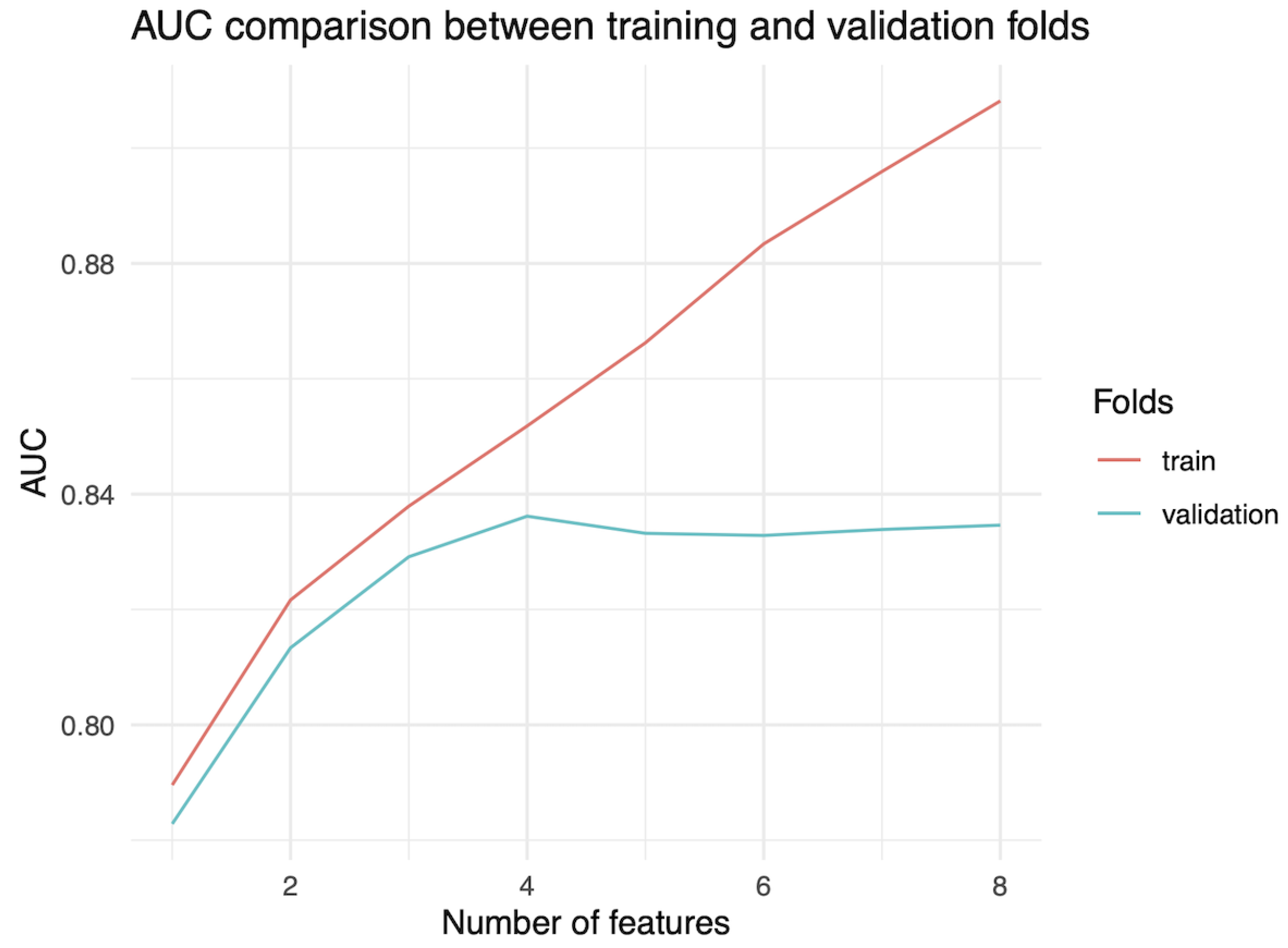

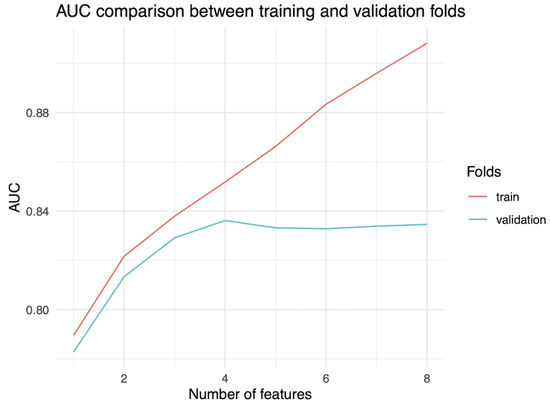

Figure 3 displays the average AUC values from 100 evaluations on repeated cross-validation versus the nine different BNs constructed from the bootstrap. Figure 3 shows that the AUC consistently increases for the training folds. However, for the validation folds, there is a peak when the number of features is four, indicating that the BN with four features performs best. This result suggests it has the highest potential for strong performance and effective generalisation among all nine possible BNs.

Figure 3.

Comparison of AUC metrics in training and validation folds.

3.3. Evaluation of the Model

Table 3 and Table 4 provide additional details regarding the AUC and F-1 score, respectively. We included the mean of the 100 evaluations from the training folds in the repeated cross-validation, as well as the minimum, mean, maximum, and standard deviation of the validation folds across all 100 evaluations. With a mean AUC of 0.836 and an F1-score of 0.765, the BN with four features was chosen for evaluation on the test data. This selection was based on the highest mean AUC achieved on the validation folds.

Table 3.

AUC metric summary of repeated cross-validation.

Table 4.

F1-score metric summary of repeated cross-validation.

Due to the small size of the dataset (308 observations on training data), a wide range of AUC values is observed for the chosen model (see Table 3) from 0.648 to 0.959, with a standard deviation of 0.056. Regarding the F1-score, we obtained minimum and maximum values of 0.538 and 0.918, respectively, and a standard deviation of 0.053 (Table 4) for the chosen model with four features.

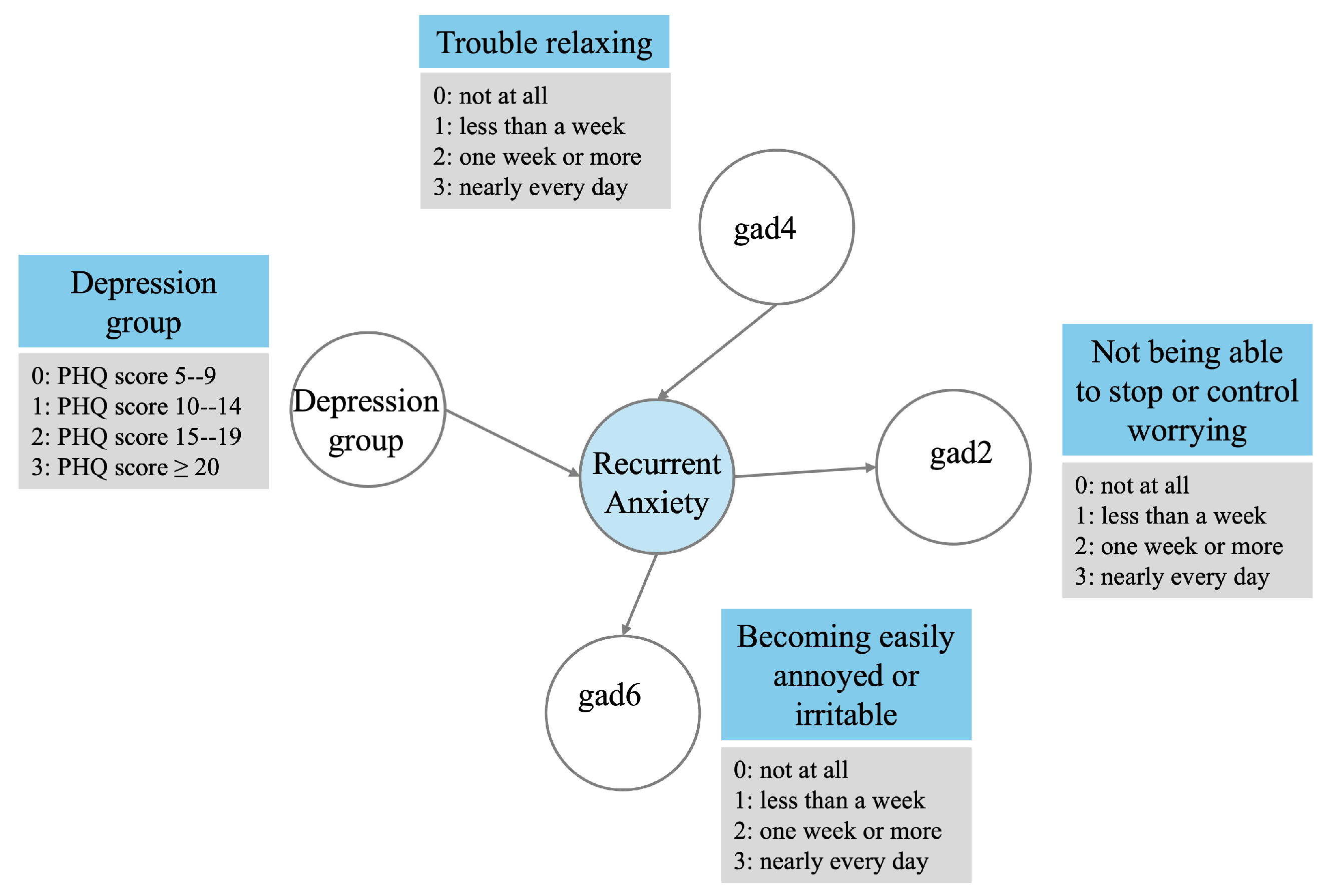

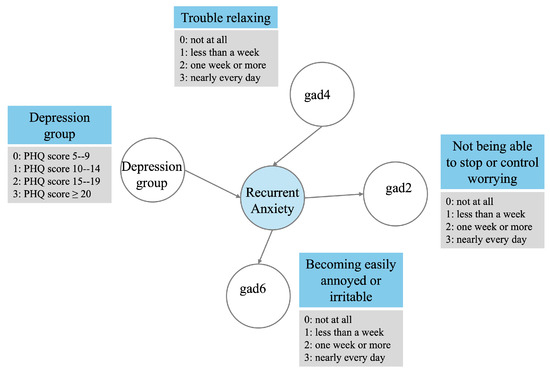

The selected BN is depicted in Figure 4, where “Depression group” (Four PHQ-9 score groups: 5–9, 10–14, 15–19, 20 or above) and “gad4” (Trouble relaxing) have a direct arrow pointing to the outcome. Additionally, “gad6” (Becoming easily annoyed or irritable) and “gad2” (Not being able to stop or control worrying) receive an arrow from the outcome.

Figure 4.

Optimal Bayesian network.

3.4. Performance of the Model on Unseen Data

Table 5 displays the metric values for training, repeated cross-validation, and test data. It is important to note that the training values here differ from those in the training values in Table 3 and Table 4. This is because in Table 5, the whole training dataset is utilised, whereas in Table 3 and Table 4, it is the average of the 100 evaluations of the training folds. The threshold that resulted in the maximum Youden’s index was 0.367.

Table 5.

Comparison of AUC and F1-score on training, validation, and test data.

3.5. Inference

The conditional probability tables for each feature and the outcome are illustrated in Table A2, Table A3, Table A4 and Table A5 of the Appendix A. These tables show that the features with more discriminatory power are “Not being able to stop or control worrying” (gad2) and “Becoming easily annoyed or irritable” (gad6). For instance, if an individual reports not being able to stop or control worrying nearly every day at baseline, the probability of reporting to have recurrent anxiety symptomatology is 0.752 (Table A2 of the Appendix A). Furthermore, from Table A3 of the Appendix A, if an individual reports to become easily annoyed nearly every day at baseline, the probability of reporting to have recurrent anxiety symptomatology is 0.745.

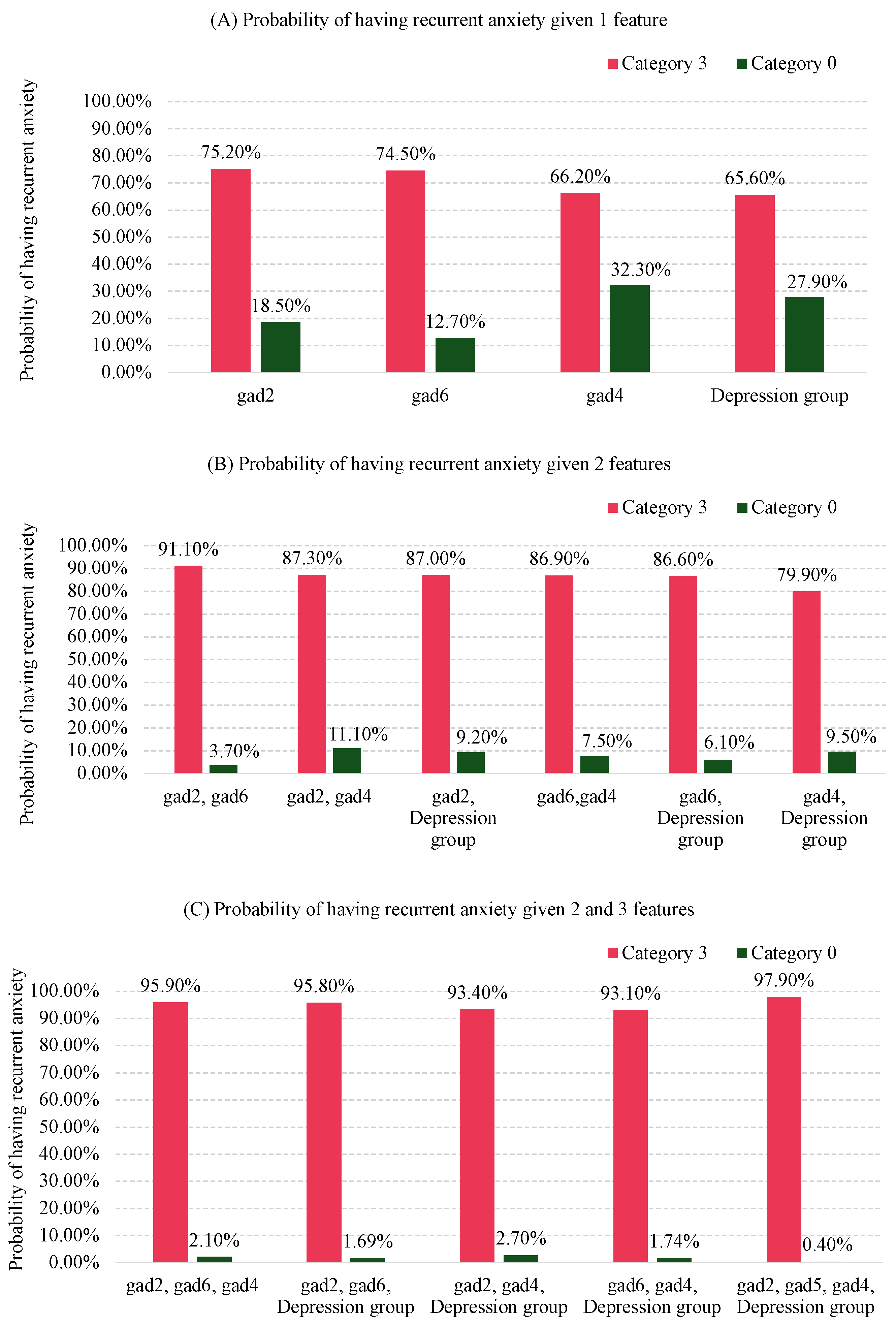

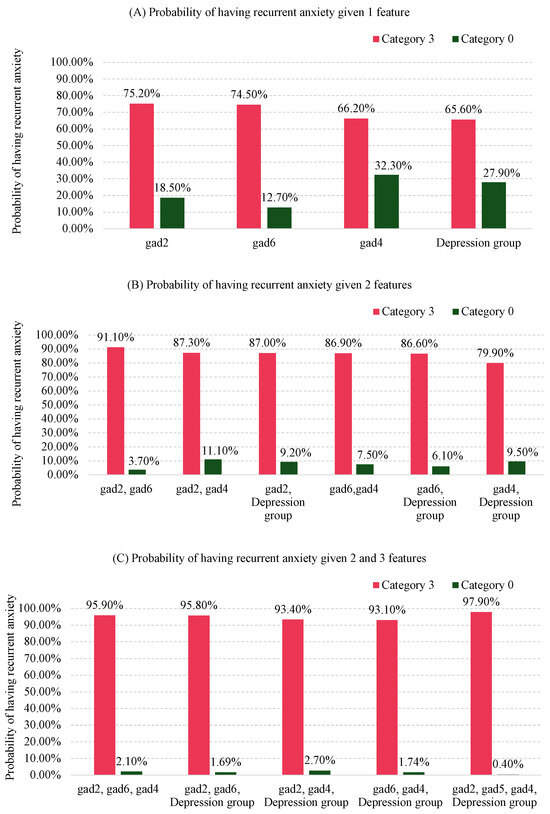

Although “Depression group” and “Trouble relaxing” (gad4) in isolation do not exhibit strong discriminatory performance similar to gad2 and gad6, Figure 5 demonstrates the significant contribution of these combined factors to the probability of recurrent anxiety symptomatology. For instance, the probability of having recurrent anxiety symptomatology is 0.752 if an individual reports “not being able to stop or control worrying” nearly every day (category “3” in Figure 4), compared with 0.185 if they report not worrying at all (category “0” in Figure 4), as shown in Figure 5A. When “not being able to stop or control worrying” (gad2) and “becoming easily annoyed or irritable” (gad6) are combined, the probability of recurrent anxiety symptomatology increases to 0.911 if both are reported nearly every day (category “3”). Conversely, this probability decreases to 0.037 if both are reported not at all (category “0”), as shown in Figure 5B.

Figure 5.

Posterior probabilities scenarios of having recurrent anxiety symptomatology when combining the features of the BN model for 1 feature (A), 2 features (B), and 3 or 4 features (C). Category 3: Nearly every day for gad2, gad4, gad6, and a PHQ-9 score of 20 or higher for the depression group. Category 0: Not at all for gad2, gad4, gad6, and a PHQ-9 score between 5 and 9 for the depression group.

As more features are considered, the probabilities continue to change. For example, in Figure 5C, the probability of recurrent anxiety symptomatology reaches 0.979 if an individual reports “not being able to stop or control worrying” (gad2), “becoming easily annoyed or irritable” (gad6), and “trouble relaxing” (gad4) nearly every day (category “3”), and has a PHQ-9 score 20 or over (category “3” in Figure 4).

Conversely, the probability of having recurrent anxiety symptomatology is just 0.004 if an individual reports “not at all” (category “0”) for gad2, gad6, and gad4, and falls into the category “0” for the depression group (PHQ-9 score between 5 and 9), as shown in Figure 5C.

3.6. Comparison of the Models

Using RFECV, we selected ten features to develop the Logistic Regression, SGDClassifier, XGBoost, and RVM models. The only feature in common with the Bayesian Network model was gad2. The remaining nine features are different and are described in Table A6 in the Appendix A.

Table 6 presents the AUC metric of the three models as well as the proposed BN model. The first four columns (Min, Mean, Max, SD) correspond to the 100 evaluations using nested cross-validation for the three models and the 100 evaluations of the repeated cross-validation in our approach. The last column indicates the AUC on the test data, which utilised the same data split for all five models, as discussed in Section 2.6.

Table 6.

Model performance comparison based on AUC metric.

From Table 6, it is observable that all four models outperformed the Bayesian network in terms of Mean AUC, with values of 0.897, 0.883, 0.884, and 0.894 for Logistic Regression, SGDClassifier, XGBoost, and RVM, respectively, compared with 0.836 for the Bayesian network. Additionally, the standard deviations of the three models are lower than that of the Bayesian network, with values of 0.037, 0.050, 0.039, and 0.035 for Logistic Regression, SGDClassifier, XGBoost, and RVM, respectively, compared with 0.056 for the Bayesian network.

However, upon evaluating the performance of the AUC metric on the test data, the Bayesian network exhibited an AUC of 0.821, while Logistic Regression, SGDClassifier, XGBoost, and RVM exhibited AUC values of 0.814, 0.842, 0.799, and 0.831, respectively. Given the cross-validation results on the training data, these test results should not be interpreted as meaning that the BN is superior, but they do show that the performance of the latter is not substantially inferior.

4. Discussion

The decision to use the BN was influenced by the observation that, after incorporating more than four features, the AUC on the validation set began to decline (Figure 3), while the AUC on the training set continued to increase. This suggests overfitting of the model. Overfitting occurs when a model performs well on the training data but poorly on the test data, indicating it may not generalise effectively to new, unseen data.

When we conducted predictions on the test data, we achieved an AUC of 0.821 and an F1-score of 0.743 (Table 5). These results closely align with those obtained through repeated cross-validation, falling within one standard deviation. This suggests that the model generalises well. It is expected that the training data yields higher values, as it is used to learn the parameters for making predictions. However, these values remain less than two standard deviations for F1-score. This further validates the robustness of the generalisation error estimated using repeated cross-validation with 100 evaluations.

One practical application provided by the analysis in Figure 5 is its usefulness in identifying individuals based on the three symptoms of anxiety and the level of depressive symptomatology severity—the four key factors relevant to recurrent anxiety symptomatology shown in Figure 4. This can help in developing specific interventions for these individuals.

If data and time are limited, clinicians should prioritise gathering information about gad2, as it has the highest probability of indicating recurrent anxiety symptomatology when the symptom occurs “nearly every day” (category 3 in Figure 5A. If constraints are less stringent, clinicians should prioritise information about both gad2 and gad6, as these combined have the highest probability of indicating recurrent anxiety symptomatology when both symptoms are in category 3 (Figure 5B).

Next, information about gad4 should be prioritised, as combining this with gad2 and gad6 has the highest probability of indicating recurrent anxiety symptomatology when the symptom is in category 3 (Figure 5C).

Finally, combining all three previous symptoms in category 3 with “Depression group” also in category 3 (PHQ score ≥ 20) increases the probability of having recurrent anxiety symptomatology to 97.9%. Conversely, having category 0 (“not at all”) for gad2, gad6, and gad4, and a PHQ score between 5 and 9 for the depression group, results in a probability of 0.4% for recurrent anxiety symptomatology. This analysis suggests a prioritised order of importance for the features to focus on.

To gain a different perspective on the model, we analysed the test data for all individuals who had baseline values of 0 for gad2, gad6, and the Depression group. This indicates an absence of symptoms related to “Not being able to stop or control worrying”, “Becoming easily annoyed or irritable”, and a PHQ-9 score between 5 and 9. This analysis aimed to explore the impact of other features not selected by the BN model on the outcome.

We found five individuals out of 106 (5%) who met these criteria, and none of them presented recurrent anxiety symptomatology. For instance, one case experienced “Feeling nervous, anxious, or on edge” (gad1) for more than half the days and “Worrying too much about different things” (gad3) nearly every day at baseline, yet did not present recurrent disorder. Another participant experienced gad1 for more than half the days and “Feeling afraid, as if something awful might happen” (gad7) nearly every day at baseline, but also did not present recurrent anxiety symptomatology. Additionally, a third individual experienced gad1, gad3, and gad7 for more than half the days each, and similarly did not present recurrent anxiety symptomatology. This further suggests that the focus should be on the features identified by the BN model.

Although this study primarily focused on older individuals, the Depression group identified by our BN model is also recognised as a predictor of recurrent anxiety symptomatology, as demonstrated in another study [2], albeit in the general population.

The Bayesian network model underperformed compared to Logistic Regression, SGDClassifier, XGBoost, and RVM. However, it is noteworthy that feature selection was applied to the machine learning models, resulting in 10 selected variables, while the BN model, due to its nature of selecting features while learning the network structure, utilised only 4. Consequently, with fewer features, one might expect higher variance, but this also results in a less complex model with a reduced risk of overfitting, leading to improved generalisation on new, unseen data. Table 5 illustrates that although the standard deviation of the machine learning models was smaller, the difference between the performance on new unseen test data and the mean of the nested cross-validation remained higher than that observed in the BN model.

Our approach offers notable advantages. While Logistic Regression can output probabilities for the outcome, it provides only a single point estimate. In contrast, the Bayesian network gives a probability distribution for the outcome, making it more robust for analysing uncertainties. Although the RVM uses Bayes’ theorem and is a probabilistic model, it does not provide clear explainability. XGBoost offers explainability, but does not provide probabilistic measures, while the SGDClassifier provides neither explainability nor probabilistic measures. Another significant advantage of the BN model is its potential to capture complex dependencies between variables, which is not typically achieved by the other four models.

Additionally, the BN model suggests a specific order of importance for the features, guiding the prioritisation of key factors to better identify individuals at risk of recurrent anxiety symptomatology. The network structure captures the joint probability distribution over all variables, enabling an analysis of how various combinations of factors influence the likelihood of recurrent anxiety. Furthermore, the model allows for the examination of predictors in isolation by marginalising the joint probability distribution. This approach enables the assessment of the isolated effect of specific variables without needing to remove others and rerun the model. This capability was demonstrated in the scenario simulations presented in Section 3.5.

In summary, the BN model enhances explainability by highlighting key factors and providing insights through probabilistic measures. These measures explicitly show the impact of increasing or decreasing the values of specific features, offering a clearer understanding of the dynamics at play.

5. Contributions

This work contributes by addressing the gap in identifying the most important factors that impact the recurrence of anxiety disorder by providing probabilistic measures, through the development of an explainable model giving insights to clinicians to prioritise actions to prevent the relapse. Although this methodology is applied in the context of anxiety recurrence, it can equally be applied to other mental health disorders.

Our approach combines constraint-based methods with bootstrap techniques to determine the best Bayesian network structure from a given dataset. This involves learning parameters and predicting outcomes for each network structure using repeated cross-validation.

Additionally, we identified key predictors that significantly impact recurrent anxiety in this population and proposed a specific order for addressing these predictors. By implementing interventions targeted at reducing these predictors (i.e., addressing the symptoms associated with them) in the recommended sequence, the likelihood of recurrence could potentially be reduced more effectively.

6. Conclusions

Using a Bayesian network, we aimed to understand the relationships of baseline data with the recurrence of anxiety symptomatology within five months. The model achieved an AUC of 0.821 and an F1-score of 0.743 on the test data.

We identified that “Not being able to stop or control worrying”, “Becoming easily annoyed or irritable”, and “Trouble relaxing”, along with “depressive symptomatology severity” were the most important predictors for understanding recurrent anxiety symptomatology in this population.

Our analysis indicates a prioritised sequence of predictors to analyse for identifying individuals most likely to experience recurrent anxiety symptomatology. This sequence is as follows: “Not being able to stop or control worrying”, “Becoming easily annoyed or irritable”, “Trouble relaxing”, and “depressive symptomatology severity”.

The prioritised order of the predictors was ensured by testing all possible combinations on the optimal BN structure, extracting probabilities through marginalisation of each combination. Marginalisation is a useful approach for checking these combinations because it allows us to zoom out and focus on the overall scenarios, while also enabling us to examine more detailed aspects if needed.

7. Limitations

The features selected in the model demonstrated relevance and achieved good performance for this specific dataset, which comprises older individuals aged 60 and over with a PHQ-9 total score of at least 5. Nevertheless, it is noteworthy to acknowledge that in different populations, different factors may be seen as indicators of recurring anxiety.

Introducing more granular data could improve the model’s performance. Furthermore, our contention that the BN model provides clinicians with information to prioritise the treatment of specific symptoms would need to be tested using a suitable clinical trial.

Despite utilising a bootstrap approach during construction to enhance the chances of finding the optimal solution, we cannot guarantee optimality of the found network, especially given the small dataset size, which introduces considerable variability. There may be other network layouts that could potentially outperform this approach. For instance, including additional variables in this study may lead to better performance. However, this is not a limitation of the proposed methodology, but rather of the data available during the research.

8. Future Work

Building on the current study’s findings, future research could explore the development of more sophisticated models by integrating separate Bayesian networks (BNs) designed for different mental health issues into a unified framework, specifically an object-oriented Bayesian network (OOBN). This approach holds significant potential for enhancing the predictive power and interpretability of mental health assessments by allowing for the modular representation of distinct yet related psychological conditions within a single cohesive model. This modular approach would facilitate the analysis of comorbidities, providing a more comprehensive understanding of how these conditions co-occur and influence each other.

Author Contributions

E.M., M.M.d.S.M., C.A.N., P.V.d.V. and M.S. conceived and designed the study. M.M.d.S.M. conducted the literature review regarding the psychology view. E.M. conducted the literature review, performed the machine learning analysis, and subsequently wrote the manuscript, which was reviewed by C.A.N., R.A., T.J.P., P.V.d.V. and M.S. All authors contributed to and approved the final manuscript.

Funding

This publication has emanated from research supported in part by a grant from Science Foundation Ireland under Grant number 18/CRT/6049 (Eduardo Maekawa). Additionally, this study was funded by the Sao Paulo Research Foundation (process number 2017/50094 2) and the Joint Global Health Trials initiative, jointly funded by the Department of Health and Social Care (DHSC), the Foreign, Commonwealth & Development Office (FCDO), the Medical Research Council (MRC), and Wellcome (process number MR/R006229/1). FAPESP supported CAN (2018/19343-9) and (2022/05107-7), MM (2021/03849-3). M.S. is supported by CNPq-Brazil (307579/2019-0).

Institutional Review Board Statement

Ethical approval was obtained from the Ethics Committee of the Hospital das Clí nicas da Faculdade de Medicina da Universidade de São Paulo (HCFMUSP) (CAPPesq, ref: 4.097.596 and CAPPesq, ref: 4.144.603). Additionally, the study was authorised by the Secretaria da Saúde do Municí pio de Guarulhos. Verbal informed consent to participate in the study was requested from all participants.

Informed Consent Statement

Participants were informed that non-identifiable data would be used for publications prior to providing consent in both assessments. Informed consent materials are available from the corresponding author upon request.

Data Availability Statement

Study documentation and data will be made available upon request in accordance with data sharing conditions.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AUC | Area Under the Receiver Operating Characteristic Curve |

| ML | Machine Learning |

| BN | Bayesian network |

| IAMB | Incremental Association Markov Blanket |

| CPD | Conditional Probability Distributions |

| RFECV | Recursive Feature Elimination with Cross Validation |

Appendix A

Appendix A.1

Figure A1.

Incremental construction of the Bayesian networks. (A) BN with one feature and the outcome. (B) BN with two features and the outcome. (C) BN with three features and the outcome. (D) BN with four features and the outcome. (E) BN with five features and the outcome. (F) BN with six features and the outcome. (G) BN with seven features and the outcome. (H) BN with eight features and the outcome. (I) BN with nine features and the outcome.

Figure A1.

Incremental construction of the Bayesian networks. (A) BN with one feature and the outcome. (B) BN with two features and the outcome. (C) BN with three features and the outcome. (D) BN with four features and the outcome. (E) BN with five features and the outcome. (F) BN with six features and the outcome. (G) BN with seven features and the outcome. (H) BN with eight features and the outcome. (I) BN with nine features and the outcome.

Table A1.

Table of characteristics.

Table A1.

Table of characteristics.

| Feature | Range | N | % Recurrency of Anxiety |

|---|---|---|---|

| Gender | Female | 263 | 0.494 |

| Male | 112 | 0.402 | |

| Age group | 70 year or over | 112 | 0.518 |

| Between 60 and 69 | 243 | 0.469 | |

| Marital status | Divorced | 68 | 0.397 |

| Married | 212 | 0.491 | |

| Single | 29 | 0.552 | |

| Widowed | 66 | 0.424 | |

| Race | Asian | 8 | 0.375 |

| Black | 36 | 0.611 | |

| Brown | 159 | 0.421 | |

| Native Brazilian | 3 | 0.333 | |

| White | 169 | 0.485 | |

| Depression group | PHQ-9 score: 10–14 | 87 | 0.494 |

| PHQ-9 score: 15–19 | 79 | 0.620 | |

| PHQ-9 score: 20 or above | 66 | 0.803 | |

| PHQ-9 score: 5–9 | 143 | 0.210 | |

| Hypertension | No | 106 | 0.396 |

| Yes | 269 | 0.494 | |

| Diabetes | No | 239 | 0.477 |

| Yes | 136 | 0.449 |

Table A2.

Conditional probability distribution—gad2: “Not being able to stop or control worrying”.

Table A2.

Conditional probability distribution—gad2: “Not being able to stop or control worrying”.

| Not at All | Less Than a Week | More Than Half the Days | Nearly Every Day | |

|---|---|---|---|---|

| No Anxiety | 0.8152756 | 0.8121899 | 0.6355257 | 0.2476558 |

| Anxiety | 0.1847244 | 0.1878101 | 0.3644743 | 0.7523442 |

Table A3.

Conditional probability distribution—gad6: “Becoming easily annoyed or irritable”.

Table A3.

Conditional probability distribution—gad6: “Becoming easily annoyed or irritable”.

| Not at All | Less Than a Week | More Than Half the Days | Nearly Every Day | |

|---|---|---|---|---|

| No Anxiety | 0.8726873 | 0.7803838 | 0.3191136 | 0.254687 |

| Anxiety | 0.1273127 | 0.2196162 | 0.6808864 | 0.745313 |

Table A4.

Conditional probability distribution—gad4: “Trouble relaxing”.

Table A4.

Conditional probability distribution—gad4: “Trouble relaxing”.

| Not at All | Less Than a Week | More Than Half the Days | Nearly Every Day | |

|---|---|---|---|---|

| No Anxiety | 0.6774251 | 0.7721824 | 0.5187359 | 0.3384495 |

| Anxiety | 0.3225749 | 0.2278176 | 0.4812641 | 0.6615505 |

Table A5.

Conditional probability distribution—Depression group.

Table A5.

Conditional probability distribution—Depression group.

| PHQ-9: 5–9 | PHQ-9: 10–14 | PHQ-9: 15–19 | PHQ-9: | |

|---|---|---|---|---|

| No Anxiety | 0.7211609 | 0.5201635 | 0.3372896 | 0.3441699 |

| Anxiety | 0.2788391 | 0.4798365 | 0.6627104 | 0.6558301 |

Table A6.

Features selected by the Recursive Feature Elimination with Cross-Validation.

Table A6.

Features selected by the Recursive Feature Elimination with Cross-Validation.

| Features | Description | Range of the Values |

|---|---|---|

| tobacco | Do you currently smoke? | 0: No |

| 1: Yes | ||

| phq5 | Over the last 2 weeks, | 0: Not at all |

| how often have you been | 1: Several days | |

| bothered by Poor appetite | 2: More than half the days | |

| or overeating | 3: Nearly every day | |

| gad2 | Over the last 2 weeks, | 0: Not at all |

| how often have you been | 1: Several days | |

| bothered by Not being able | 2: More than half the days | |

| to stop or control worrying | 3: Nearly every day | |

| gad5 | Over the last 2 weeks, | 0: Not at all |

| how often have you been | 1: Several days | |

| bothered by Being so restless | 2: More than half the days | |

| that it is hard to sit still | 3: Nearly every day | |

| gad3 | Over the last 2 weeks, | 0: Not at all |

| how often have you been | 1: Several days | |

| bothered by Worrying so | 2: More than half the days | |

| much about different things | 3: Nearly every day | |

| ucla1 | How often do you | 1: Hardly ever |

| feel that you lack | 2: Some of the time | |

| companionship | 3: Often | |

| depression | Are you currently receiving | 0: No |

| treatment | any treatment for depression | 1: Yes |

| hypertension | Have you been diagnosed | 0: No |

| with hypertesion? | 1: Yes | |

| icecap4 | Enjoyment and pleasure | 1: I cannot have any enjoyment and pleasure |

| 2: I can have a little enjoyment and pleasure | ||

| 3: I can have a lot of enjoyment and pleasure | ||

| 4: I can have all the enjoyment and pleasure | ||

| balance | Do you have balance | 0: No |

| disorder? | 1: Yes |

References

- Taylor, J.H.; Jakubovski, E.; Bloch, M.H. Predictors of anxiety recurrence in the Coordinated Anxiety Learning and Management (CALM) trial. J. Psychiatr. Res. 2015, 65, 154–165. [Google Scholar] [CrossRef]

- Bruce, S.E.; Yonkers, K.A.; Otto, M.W.; Eisen, J.L.; Weisberg, R.B.; Pagano, M.; Shea, M.T.; Keller, M.B. Influence of psychiatric comorbidity on recovery and recurrence in generalized anxiety disorder, social phobia, and panic disorder: A 12-year prospective study. Am. J. Psychiatry 2005, 162, 1179–1187. [Google Scholar] [CrossRef]

- Scholten, W.D.; Batelaan, N.M.; van Balkom, A.J.L.M.; WJH. Penninx, B.; Smit, J.H.; van Oppen, P. Recurrence of anxiety disorders and its predictors. J. Affect. Disord. 2013, 147, 180–185. [Google Scholar] [CrossRef] [PubMed]

- Alonso, J.; Angermeyer, M.C.; Bernert, S.; Bruffaerts, R.; Brugha, T.S.; Bryson, H.; de Girolamo, G.; Graaf, R.; Demyttenaere, K.; Gasquet, I.; et al. Disability and quality of life impact of mental disorders in Europe: Results from the European Study of the Epidemiology of Mental Disorders (ESEMeD) project. Acta Psychiatr. Scand. Suppl. 2004, 109, 38–46. [Google Scholar] [CrossRef]

- Smit, F.; Cuijpers, P.; Oostenbrink, J.; Batelaan, N.; de Graaf, R.; Beekman, A. Costs of nine common mental disorders: Implications for curative and preventive psychiatry. J. Ment. Health Policy Econ. 2006, 9, 193–200. [Google Scholar]

- Mavissakalian, M.R.; Guo, S. Early detection of relapse in panic disorder. Acta Psychiatr. Scand. 2004, 110, 393–399. [Google Scholar] [CrossRef] [PubMed]

- Donovan, M.R.; Glue, P.; Kolluri, S.; Emir, B. Comparative efficacy of antidepressants in preventing relapse in anxiety disorders—A meta-analysis. J. Affect. Disord. 2010, 123, 9–16. [Google Scholar] [CrossRef]

- Agresti, A. Categorical Data Analysis; John Wiley & Sons: New York, NY, USA, 2012; Volume 792. [Google Scholar]

- Rodriguez, B.F.; Bruce, S.E.; Pagano, M.E.; Keller, M.B. Relationships among psychosocial functioning, diagnostic comorbidity, and the recurrence of generalized anxiety disorder, panic disorder, and major depression. J. Anxiety Disord. 2005, 19, 752–766. [Google Scholar] [CrossRef]

- Calkins, A.W.; Otto, M.W.; Cohen, L.S.; Soares, C.N.; Vitonis, A.F.; Hearon, B.A.; Harlow, B.L. Psychosocial predictors of the onset of anxiety disorders in women: Results from a prospective 3-year longitudinal study. J. Anxiety Disord. 2009, 23, 1165–1169. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Harrell, F.E. Cox Proportional Hazards Regression Model. In Regression Modeling Strategies: With Applications to Linear Models, Logistic and Ordinal Regression, and Survival Analysis; Springer International Publishing: Cham, Switzerland, 2015; pp. 475–519. [Google Scholar] [CrossRef]

- Bayman, E.O.; Dexter, F. Multicollinearity in Logistic Regression Models. Anesth. Analg. 2021, 133, 362–365. [Google Scholar] [CrossRef] [PubMed]

- Wolitzky-Taylor, K.B.; Castriotta, N.; Lenze, E.J.; Stanley, M.A.; Craske, M.G. Anxiety disorders in older adults: A comprehensive review. Depress. Anxiety 2010, 27, 190–211. [Google Scholar] [CrossRef] [PubMed]

- Vink, D.; Aartsen, M.J.; Comijs, H.C.; Heymans, M.W.; Penninx, B.W.; Stek, M.L.; Deeg, D.J.; Beekman, A.T. Onset of anxiety and depression in the aging population: Comparison of risk factors in a 9-year prospective study. Am. J. Geriatr. Psychiatry 2009, 17, 642–652. [Google Scholar] [CrossRef]

- Kiser, A.C.; Schliep, K.C.; Hernandez, E.J.; Peterson, C.M.; Yandell, M.; Eilbeck, K. An artificial intelligence approach for investigating multifactorial pain-related features of endometriosis. PLoS ONE 2024, 19, e297998. [Google Scholar] [CrossRef]

- Pearl, J. From Bayesian networks to causal networks. In Mathematical Models for Handling Partial Knowledge in Artificial Intelligence; Springer Science+Business Media: New York, NY, USA, 1995; pp. 157–182. [Google Scholar]

- Maekawa, E.; Grua, E.M.; Nakamura, C.A.; Scazufca, M.; Araya, R.; Peters, T.; van de Ven, P. Bayesian Networks for Prescreening in Depression: Algorithm Development and Validation. JMIR Ment. Health 2024, 11, e52045. [Google Scholar] [CrossRef]

- Jinhai, Y.; Qassem, A.S.A.M.; Qi, J.; Chao, X.; Anan, W.; Qi, X.; Hongfei, L.; Dequan, D. Bayesian network analysis of drug treatment strategies for thyroid associated ophthalmopathy. Int. Ophthalmol. 2024, 44, 339. [Google Scholar] [CrossRef]

- Cui, F.F.; Sheng, P.J.; Ma, J.X.; Shi, T.; Wang, Y.W. Bayesian network prediction study on the impact of occupational health psychological factors on insomnia among thermal power generation workers. Chin. J. Ind. Hyg. Occup. Dis. 2024, 42, 447–452. [Google Scholar] [CrossRef]

- Hinojosa-Gonzalez, D.E.; Saffati, G.; Salgado-Garza, G.; Patel, S.; Kronstedt, S.; Jones, J.A.; Taylor, J.M.; Yen, A.E.; Slawin, J.R. Novel therapeutic regimens in previously untreated metastatic urothelial carcinoma: A systematic review and bayesian network meta-analysis. Urol. Oncol. Semin. Orig. Investig. 2024; in press. [Google Scholar] [CrossRef]

- Yazdanirad, S.; Haghighat, M.; Jahadinaeini, M.; Khoshakhlagh, A.; Mousavi, S. A Bayesian approach to reveal the role of psychological factors on turnover intention among nurses during the COVID-19 pandemic. BMC Health Serv. Res. 2024, 24, 877. [Google Scholar] [CrossRef]

- Tan, M.; Zhou, X.; Shen, L.; Li, Y.; Chen, X. Music’s Dual Role in Emotion Regulation: Network Analysis of Music Use, Emotion Regulation Self-Efficacy, Alexithymia, Anxiety, and Depression. Depress. Anxiety 2024, 2024, 1790168. [Google Scholar] [CrossRef]

- Chu, A.M.Y.; Chan, L.S.H.; Chang, S.S.Y.; Tiwari, A.; Yuk, H.; So, M.K.P. Applications of Bayesian networks in assessing the effects of family resilience on caregiver behavioral problems, depressive symptoms, and burdens. J. Comput. Soc. Sci. 2024. [Google Scholar] [CrossRef]

- Senkamalavalli, R.; Sankar, S.; Parivazhagan, A.; Raja, R.; Selvaraj, Y.; Srinivas, P.; Varadarajan, M.N. Enhancing clinical decision-making with cloud-enabled integration of image-driven insights. Indones. J. Electr. Eng. Comput. Sci. 2024, 36, 338–346. [Google Scholar] [CrossRef]

- Rohmer, J. Uncertainties in conditional probability tables of discrete Bayesian Belief Networks: A comprehensive review. Eng. Appl. Artif. Intell. 2020, 88, 103384. [Google Scholar] [CrossRef]

- TANG, M.; LI, X. Hyper-local Black Carbon Prediction by Integrating Land Use Variables with Explainable Machine Learning Model. Atmos. Environ. 2024, 336, 120733. [Google Scholar] [CrossRef]

- Scazufca, M.; Nakamura, C.A.; Seward, N.; Didone, T.V.N.; Moretti, F.A.; Oliveira da Costa, M.; Queiroz de Souza, C.H.; Macias de Oliveira, G.; Souza dos Santos, M.; Pereira, L.A.; et al. Self-help mobile messaging intervention for depression among older adults in resource-limited settings: A randomized controlled trial. Nat. Med. 2024, 30, 1127–1133. [Google Scholar] [CrossRef] [PubMed]

- Scutari, M.; Denis, J.B. Bayesian Networks: With Examples in R; Chapman and Hall/CRC: New York, NY, USA, 2021. [Google Scholar]

- Beretta, S.; Castelli, M.; Gonçalves, I.; Henriques, R.; Ramazzotti, D. Learning the structure of bayesian networks: A quantitative assessment of the effect of different algorithmic schemes. Complexity 2018, 2018, 1591878. [Google Scholar] [CrossRef]

- Kitson, N.K.; Constantinou, A.C. Learning Bayesian networks from demographic and health survey data. J. Biomed. Inform. 2021, 113, 103588. [Google Scholar] [CrossRef] [PubMed]

- Friedman, N.; Goldszmidt, M.; Wyner, A. Data analysis with bayesian networks: A bootstrap approach. In Proceedings of the Fifteenth Conference on Uncertainty in Artificial Intelligence, UAI’99, San Francisco, CA, USA, 30 July–1 August 1999; pp. 196–205. [Google Scholar]

- Tsamardinos, I.; Aliferis, C.F.; Statnikov, A.R.; Statnikov, E. Algorithms for large scale Markov blanket discovery. In Proceedings of the FLAIRS Conference, St. Augustine, FL, USA, 11–15 May 2003; Volume 2, pp. 376–380. [Google Scholar]

- Nicholson, A.; Jitnah, N. Using mutual information to determine relevance in Bayesian networks. In PRICAI’98: Topics in Artificial Intelligence, Proceedings of the 5th Pacific Rim International Conference on Artificial Intelligence, Singapore, 22–27 November 1998; Proceedings 5; Springer: Berlin/Heidelberg, Germany, 1998; pp. 399–410. [Google Scholar]

- Imoto, S.; Kim, S.Y.; Shimodaira, H.; Aburatani, S.; Tashiro, K.; Kuhara, S.; Miyano, S. Bootstrap analysis of gene networks based on Bayesian networks and nonparametric regression. Genome Inform. 2002, 13, 369–370. [Google Scholar]

- Ji, Z.; Xia, Q.; Meng, G. A review of parameter learning methods in Bayesian network. In Advanced Intelligent Computing Theories and Applications, Proceedings of the 11th International Conference, ICIC 2015, Fuzhou, China, 20–23 August 2015; Part III 11; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 3–12. [Google Scholar]

- Scutari, M. Learning Bayesian Networks with the bnlearn R Package. J. Stat. Softw. 2010, 35, 1–22. [Google Scholar] [CrossRef]

- Shahana, T.; Lavanya, V.; Bhat, A.R. Ensemble classifiers for bankruptcy prediction using SMOTE and RFECV. Int. J. Enterp. Netw. Manag. 2024, 15, 109–132. [Google Scholar] [CrossRef]

- Abdulaal, M.J.; Casson, A.J.; Gaydecki, P. Performance of Nested vs. Non-Nested SVM Cross-Validation Methods in Visual BCI: Validation Study. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Roma, Italy, 3–7 September 2018; pp. 1680–1684. [Google Scholar] [CrossRef]

- Victoria, A.H.; Maragatham, G. Automatic tuning of hyperparameters using Bayesian optimization. Evol. Syst. 2021, 12, 217–223. [Google Scholar] [CrossRef]

- Bottou, L. Large-Scale Machine Learning with Stochastic Gradient Descent. In Proceedings of the COMPSTAT’2010; Lechevallier, Y., Saporta, G., Eds.; Physica-Verlag HD: Heidelberg, Germany, 2010; pp. 177–186. [Google Scholar]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM Sigkdd International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016. [Google Scholar] [CrossRef]

- Tipping, M. The Relevance Vector Machine. In Advances in Neural Information Processing Systems; Solla, S., Leen, T., Müller, K., Eds.; MIT Press: Cambridge, MA, USA, 1999; Volume 12. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).