D*-KDDPG: An Improved DDPG Path-Planning Algorithm Integrating Kinematic Analysis and the D* Algorithm

Abstract

1. Introduction

2. Related Work

3. Methods

3.1. DDPG Introduction

3.2. KDDPG: Local Path Planning Method Based on Kinematic Analysis

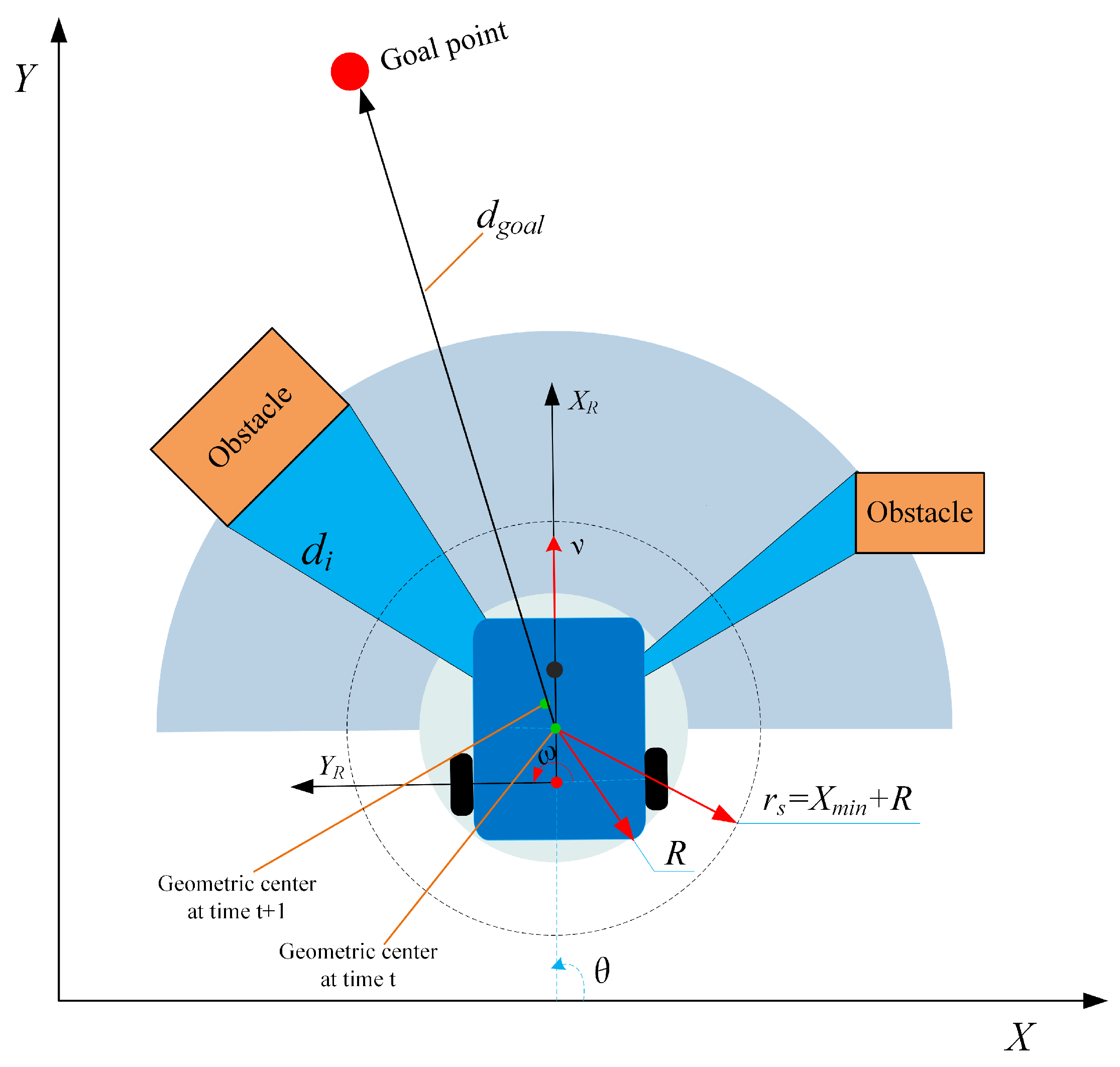

3.2.1. Kinematic Model and Environmental Perception Model

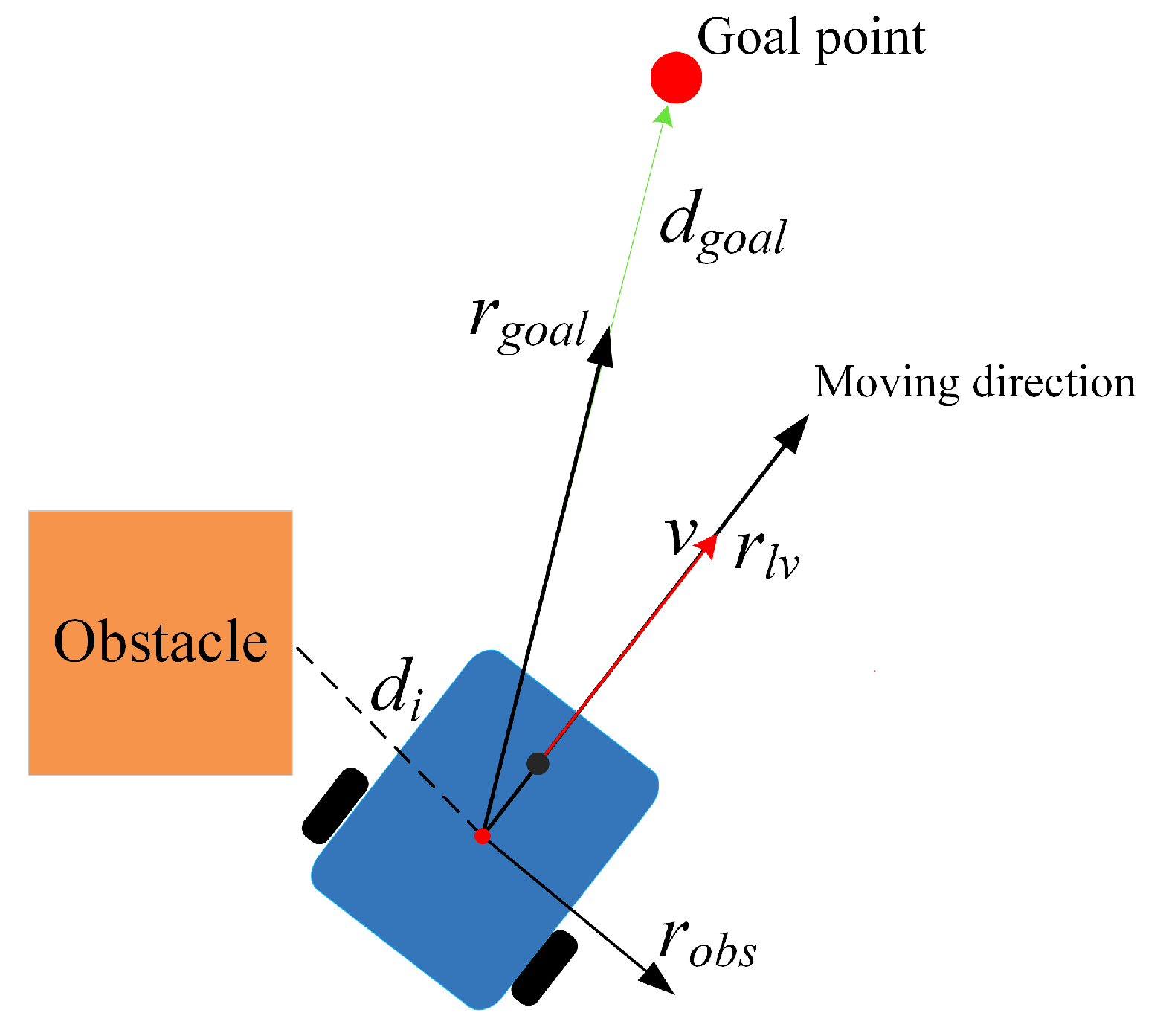

3.2.2. Reward Function Setup

3.3. Integration of Global Algorithm D* with KDDPG

| Algorithm 1 D*-KDDPG path-planning algorithm |
|---|
| Input: Map: 2D grid map; S: start position; G: goal position; S’(x, y): current robot coordinates; dgoal: distance from the robot to the goal; ε: reference-goal threshold; δ: goal-reach threshold; KDDPG_Move: pre-trained KDDPG model; MAXTIME: timeout threshold. |
| Output: Path to the goal position |
| 1: function D*-KDDPG(Map, S, G, S’(x, y), dgoal, ε, δ, KDDPG_Move, MAXTIME) |
| 2: Global Path ← D_star(Map, S, G) |
| 3: StartTime ← GetCurrentTime() |
| 4: for G’ in Global Path do |
| 5: while dgoal > δ do |
| 6: with ε |
| 7: ReferenceGoal ← Select the point closest to threshold ε |
| 8: KDDPG_Move(S’, ReferenceGoal) |
| 9: TimeElapsed ← CurrentTime − StartTime |
| 10: if TimeElapsed > MAXTIME or Collision then |
| 11: return Fail to reach Goal |
| 12: end if |
| 13: end while |
| 14: if dgoal <= δ then |
| 15: return Success to reach Goal |
| 16: end if |
| 17: end for |
| 18: end function |
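The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the global path is passed in as an already computed list of waypoints rather than produced by D*, the nested for/while loops are collapsed into a single loop, and the reference goal is restricted to waypoints at or ahead of the robot so that it always makes forward progress. The names `select_reference_goal`, `d_star_kddpg`, `straight_line_move`, and the `(pose, reference_goal) -> (new_pose, collided)` interface are hypothetical; `straight_line_move` merely stands in for the pre-trained KDDPG model.

```python
import math
import time
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float]
# Hypothetical local-planner interface: (pose, reference_goal) -> (new_pose, collided)
MoveFn = Callable[[Point, Point], Tuple[Point, bool]]


def select_reference_goal(pose: Point, path: Sequence[Point], eps: float) -> Point:
    """Return the waypoint, at or ahead of the robot, whose distance is closest to eps."""
    nearest = min(range(len(path)), key=lambda i: math.dist(pose, path[i]))
    return min(path[nearest:], key=lambda p: abs(math.dist(pose, p) - eps))


def d_star_kddpg(
    path: Sequence[Point],          # assumed output of a global planner such as D*
    start: Point,
    eps: float,                     # reference-goal threshold
    delta: float,                   # goal-reach threshold
    kddpg_move: MoveFn,             # stand-in for the pre-trained local policy
    max_time: float,                # timeout threshold, in clock units
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Follow the global path with a local planner; True means the goal was reached."""
    goal = path[-1]
    pose = start
    start_time = clock()
    while math.dist(pose, goal) > delta:
        reference_goal = select_reference_goal(pose, path, eps)
        pose, collided = kddpg_move(pose, reference_goal)
        if collided or clock() - start_time > max_time:
            return False  # timeout or collision: fail to reach the goal
    return True


def straight_line_move(pose: Point, ref: Point, step: float = 0.1) -> Tuple[Point, bool]:
    """Illustrative stand-in policy: step straight toward ref and never collide."""
    d = math.dist(pose, ref)
    if d <= step:
        return ref, False
    ux, uy = (ref[0] - pose[0]) / d, (ref[1] - pose[1]) / d
    return (pose[0] + step * ux, pose[1] + step * uy), False
```

For example, with waypoints spaced 0.5 m apart along a straight line, `d_star_kddpg(path, path[0], 1.0, 0.2, straight_line_move, 60.0)` repeatedly targets a waypoint about 1 m ahead until the robot is within 0.2 m of the final waypoint. If waypoint spacing exceeds 2ε, this selection rule can pick the robot's own waypoint, in which case only the timeout ends the loop.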

4. Experiments and Results

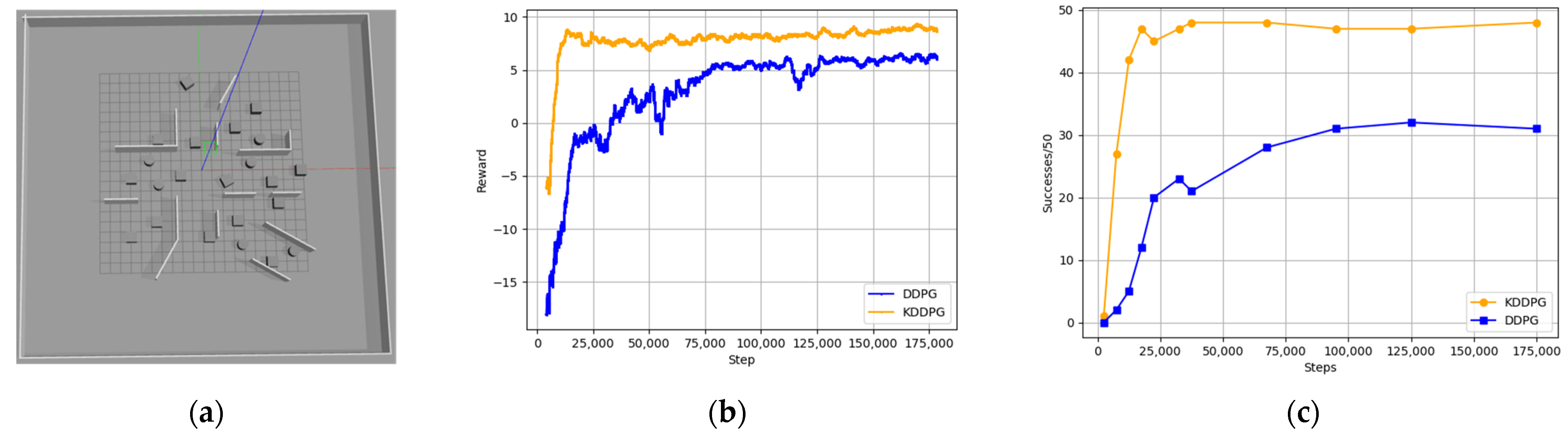

4.1. Training Experiment Setup

4.2. Model Training

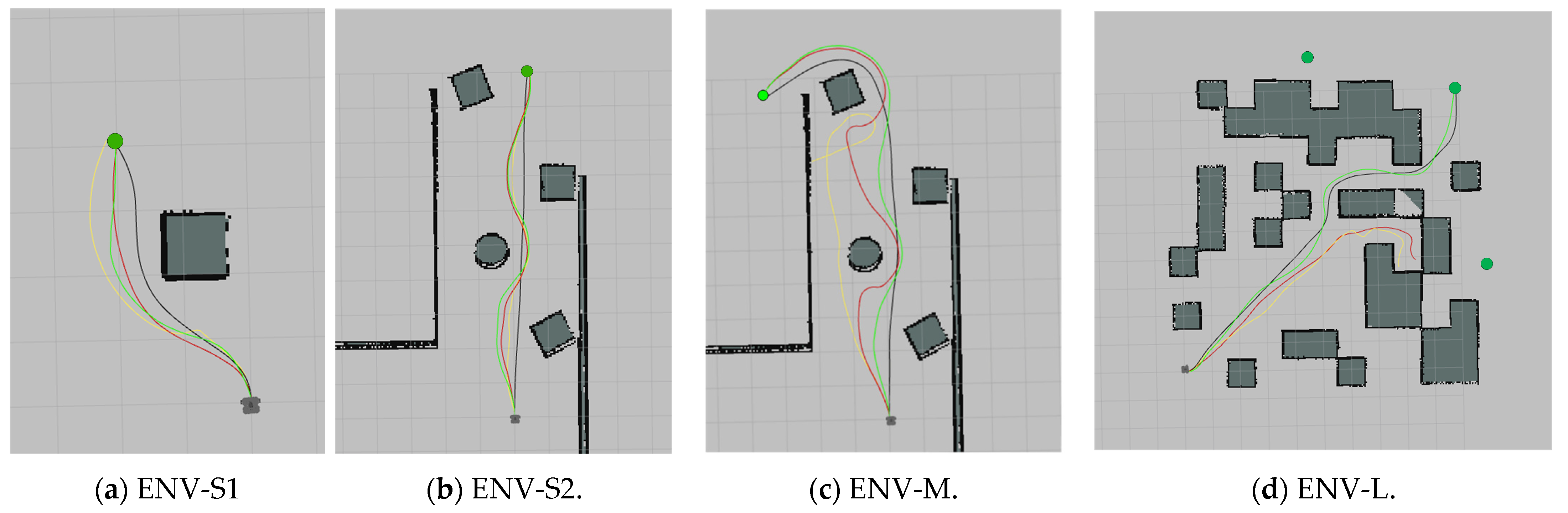

4.3. Experiments

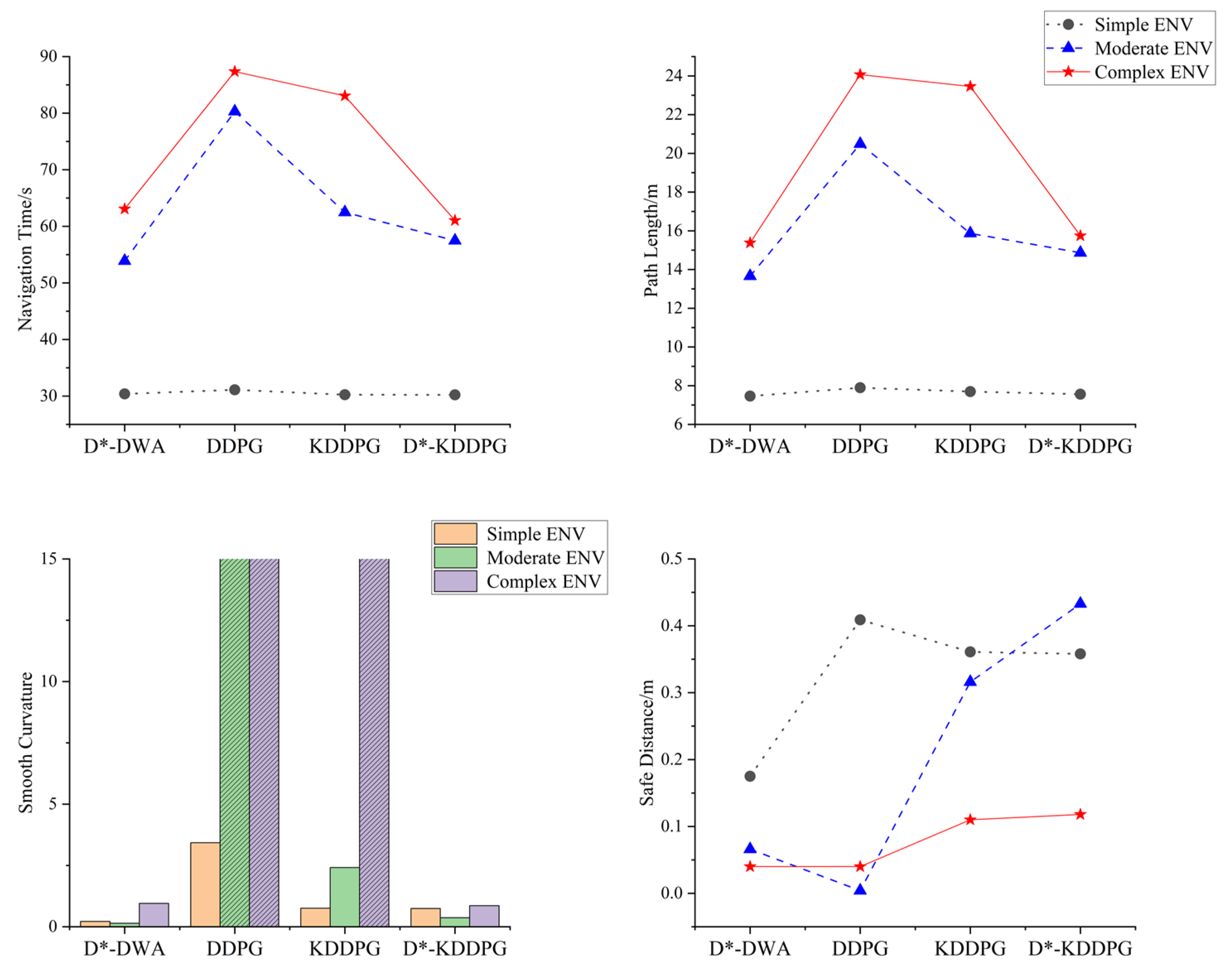

- Navigation Time (NT): The time required to complete the navigation.

- Path Length (PL): The total length of the generated path.

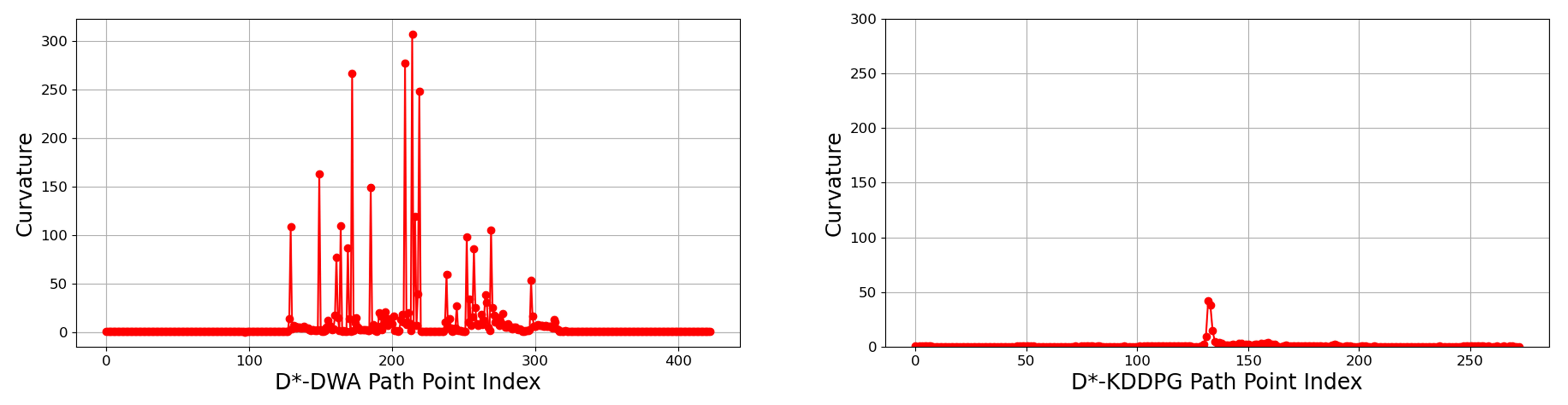

- Curvature Smoothness (CS): The integral of the square of the curvature, which is used to measure the smoothness of the path [18]; lower values indicate smoother paths.

- Safety Distance (SD): A distance used for collision detection, which is calculated by subtracting 0.141 m (a reference value based on the robot’s dimensions) from the radar’s detection distance. A collision is assumed to happen when SD reaches zero.
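The path-based metrics above can be approximated from a recorded trajectory. The following sketch assumes the path is available as a list of (x, y) points in meters and that the "detection distance" in the SD definition is the smallest range returned by a scan; both are assumptions, as are all function names. Curvature is estimated with the three-point (Menger) formula and the integral of its square is approximated by a discrete sum, which is one common choice and not necessarily the discretization used in the experiments.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
ROBOT_OFFSET_M = 0.141  # reference value from the SD definition


def path_length(points: Sequence[Point]) -> float:
    """PL: sum of the segment lengths between consecutive points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def menger_curvature(a: Point, b: Point, c: Point) -> float:
    """Curvature of the circle through three points (0 if collinear or coincident)."""
    ab, bc, ca = math.dist(a, b), math.dist(b, c), math.dist(c, a)
    if ab == 0.0 or bc == 0.0 or ca == 0.0:
        return 0.0
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return 2.0 * abs(cross) / (ab * bc * ca)


def curvature_smoothness(points: Sequence[Point]) -> float:
    """CS: discrete approximation of the integral of squared curvature along the path."""
    total = 0.0
    for a, b, c in zip(points, points[1:], points[2:]):
        ds = 0.5 * (math.dist(a, b) + math.dist(b, c))  # arc length attributed to b
        total += menger_curvature(a, b, c) ** 2 * ds
    return total


def safety_distance(ranges_m: Sequence[float]) -> float:
    """SD: smallest scan range minus the robot offset (assumed interpretation)."""
    return min(ranges_m) - ROBOT_OFFSET_M
```

A straight path gives a curvature smoothness of zero, and points sampled from a circle of radius R give a per-point curvature of 1/R, which is a quick sanity check for the estimator.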

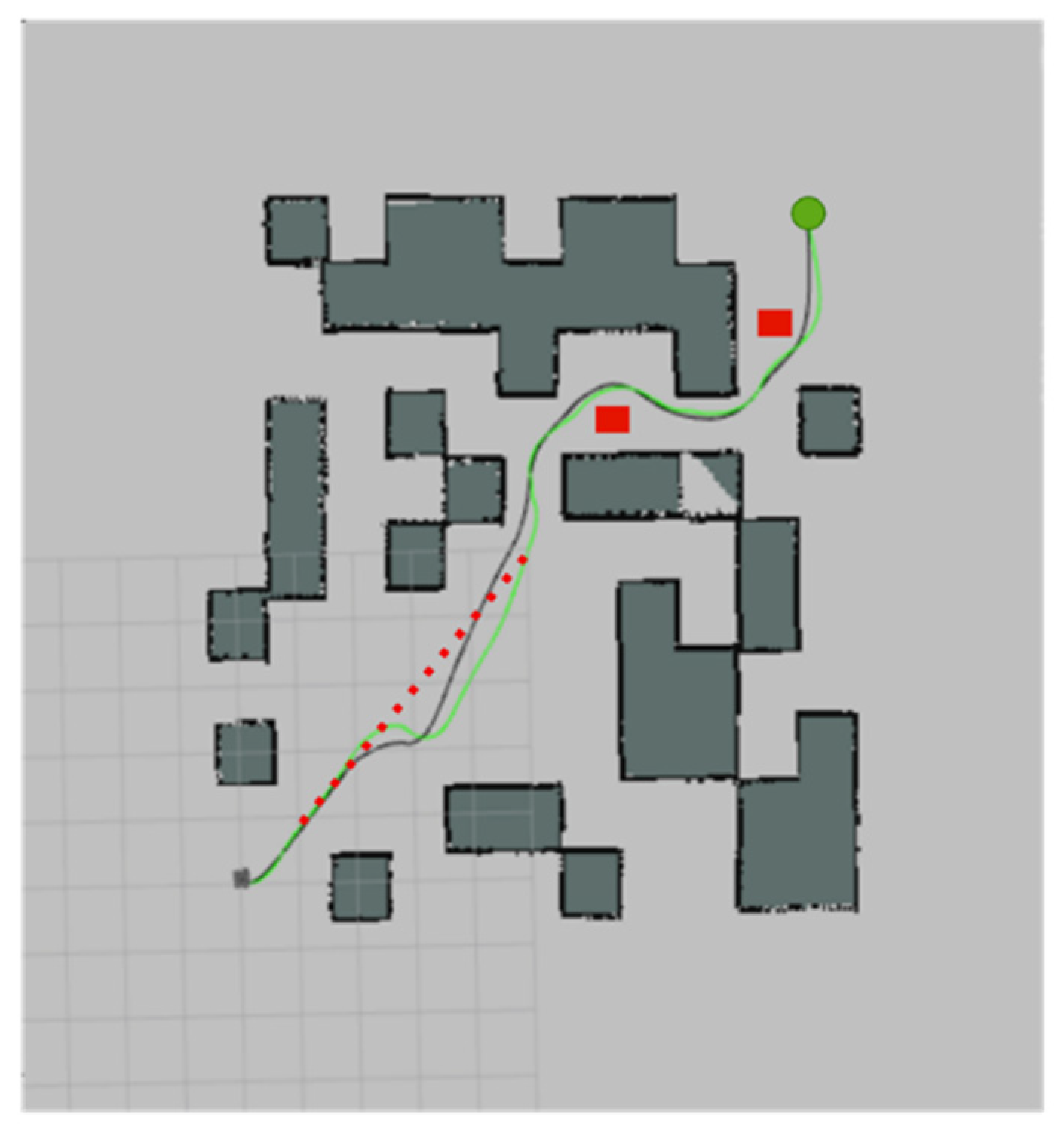

4.3.1. Static Environment Experiments

4.3.2. Dynamic Environment Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Aradi, S. Survey of Deep Reinforcement Learning for Motion Planning of Autonomous Vehicles. IEEE Trans. Intell. Transport. Syst. 2022, 23, 740–759.

2. Yan, C.; Chen, G.; Li, Y.; Sun, F.; Wu, Y. Immune Deep Reinforcement Learning-Based Path Planning for Mobile Robot in Unknown Environment. Appl. Soft Comput. 2023, 145, 110601.

3. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous Control with Deep Reinforcement Learning. arXiv 2015, arXiv:1509.02971.

4. Zhang, H.; Lin, W.; Chen, A. Path Planning for the Mobile Robot: A Review. Symmetry 2018, 10, 450.

5. Wang, X.; Zhang, H.; Liu, S.; Wang, J.; Wang, Y.; Shangguan, D. Path Planning of Scenic Spots Based on Improved A* Algorithm. Sci. Rep. 2022, 12, 1320.

6. Jiang, C.; Zhu, H.; Xie, Y. Dynamic Obstacle Avoidance Research for Mobile Robots Incorporating Improved A-Star Algorithm and DWA Algorithm. In Proceedings of the 2023 3rd International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology (CEI), Wuhan, China, 15–17 December 2023; pp. 896–900.

7. Guan, C.; Wang, S. Robot Dynamic Path Planning Based on Improved A* and DWA Algorithms. In Proceedings of the 2022 4th International Conference on Control and Robotics (ICCR), Guangzhou, China, 2 December 2022; pp. 1–6.

8. Wang, H.; Ma, X.; Dai, W.; Jin, W. Research on Obstacle Avoidance of Mobile Robot Based on Improved DWA Algorithm. Comput. Eng. Appl. 2023, 59, 326–332.

9. Watkins, C.J.C.H. Learning from Delayed Rewards. 1989. Available online: https://www.academia.edu/download/50360235/Learning_from_delayed_rewards_20161116-28282-v2pwvq.pdf (accessed on 25 July 2024).

10. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-Level Control through Deep Reinforcement Learning. Nature 2015, 518, 529–533.

11. Van Hasselt, H.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

12. Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling Network Architectures for Deep Reinforcement Learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 1995–2003.

13. Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic Policy Gradient Algorithms. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 387–395.

14. Deng, X.-H.; Hou, J.; Tan, G.-H.; Wan, B.-Y.; Cao, T.-T. Multi-Objective Vehicle Following Decision Algorithm Based on Reinforcement Learning. Kongzhi Yu Juece/Control Decis. 2021, 36, 2497–2503.

15. Zhu, M.; Wang, Y.; Pu, Z.; Hu, J.; Wang, X.; Ke, R. Safe, Efficient, and Comfortable Velocity Control Based on Reinforcement Learning for Autonomous Driving. Transp. Res. Part C Emerg. Technol. 2020, 117, 102662.

16. Li, H.; Luo, B.; Song, W.; Yang, C. Predictive Hierarchical Reinforcement Learning for Path-Efficient Mapless Navigation with Moving Target. Neural Netw. 2023, 165, 677–688.

17. Chen, Y.; Liang, L. SLP-Improved DDPG Path-Planning Algorithm for Mobile Robot in Large-Scale Dynamic Environment. Sensors 2023, 23, 3521.

18. Horn, B.K. The Curve of Least Energy. ACM Trans. Math. Softw. (TOMS) 1983, 9, 441–460.

| Parameter | Value |
|---|---|
| Robot size (mm) | 306 × 282.57 × 142.35 |
| Max angular speed (rad/s) | 1.82 |
| Max speed (m/s) | 0.26 |
| Max payload (kg) | 30 |

| Algorithm | a | b | c | d | e | f |
|---|---|---|---|---|---|---|
| D*-DWA | 4819 | 6546 | 17,661 | 1.71 | 190 | 2792 |
| DDPG | collision | collision | collision | 74 | 1.35 | collision |
| KDDPG | 20 | 2.79 | collision | 1.28 | 1.30 | 419 |
| D*-KDDPG | 2.61 | 1.03 | 137 | 0.666 | 0.519 | 11.9 |

| Algorithm | NT | PL | CS | SD |
|---|---|---|---|---|
| D*-DWA | 64.622 | 16.145 | 9.512 | 0.042 |
| D*-KDDPG | 63.075 | 16.554 | 2.915 | 0.108 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, C.; Liu, W.; Zhang, D.; Sui, X.; Huang, Y.; Ma, X.; Yang, X.; Wang, X. D*-KDDPG: An Improved DDPG Path-Planning Algorithm Integrating Kinematic Analysis and the D* Algorithm. Appl. Sci. 2024, 14, 7555. https://doi.org/10.3390/app14177555