The Potential of AI-Powered Face Enhancement Technologies in Face-Driven Orthodontic Treatment Planning

Abstract

Featured Application


1. Introduction

- A human ear and nose should be equally long;

- The mouth width should be equal to the chin-to-lips distance;

- The distance between the chin and the hairline should be 1/10 of the body height, and the head should be 1/8 of the body height;

- Chin, nostrils, eyebrows, and hairline should enclose three equal facial thirds.
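The facial-thirds rule in the last item can be checked numerically. The Python sketch below is illustrative only. It assumes that the vertical positions of the hairline, eyebrows, nostrils (subnasale level) and chin have already been measured on a frontal photograph. The 5% tolerance and all names (`FrontalLandmarks`, `facial_thirds`, `thirds_are_equal`) are hypothetical choices and are not part of the study.

```python
from dataclasses import dataclass


@dataclass
class FrontalLandmarks:
    """Hypothetical vertical landmark positions on a frontal photo (y grows downward)."""
    hairline: float
    eyebrows: float
    nostrils: float  # subnasale level
    chin: float


def facial_thirds(lm: FrontalLandmarks) -> tuple:
    """Return the upper, middle and lower thirds as fractions of total face height."""
    total = lm.chin - lm.hairline
    upper = lm.eyebrows - lm.hairline
    middle = lm.nostrils - lm.eyebrows
    lower = lm.chin - lm.nostrils
    return upper / total, middle / total, lower / total


def thirds_are_equal(lm: FrontalLandmarks, tolerance: float = 0.05) -> bool:
    """True if every third lies within `tolerance` of 1/3 (the tolerance is an assumed value)."""
    return all(abs(share - 1 / 3) <= tolerance for share in facial_thirds(lm))


# Usage with made-up pixel coordinates:
balanced = FrontalLandmarks(hairline=0, eyebrows=60, nostrils=120, chin=180)
long_lower_face = FrontalLandmarks(hairline=0, eyebrows=50, nostrils=100, chin=200)
print(thirds_are_equal(balanced))         # True
print(thirds_are_equal(long_lower_face))  # False (lower third = 0.5)
```

The same pattern extends to the other proportion rules above: each rule becomes a ratio of two measured distances compared with its ideal value within a tolerance.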

- Face detection: faces are detected and located by dedicated face-detection algorithms that search for and identify facial landmarks and boundaries.

- Facial feature analysis: facial features (eyes, mouth, skin, nose, ears, etc.) are analyzed, which requires an understanding of facial geometry.

- Image processing: selected aspects of the face are enhanced using techniques such as brightness, contrast, and color hue adjustment.

- Deep learning models: models trained on large datasets of facial images (in particular, convolutional neural networks) are used to learn representations and patterns of facial features.

- Feature enhancement: based on the learned patterns, specific facial features are modified (e.g., nose size, wrinkle reduction, and lip enlargement).

- Generative adversarial networks: in some cases, a generator network produces images while a discriminator network evaluates their authenticity; the interplay between the two yields even more realistic results.

- Customization and user preferences: depending on the application, both the type and the extent of the desired enhancement can be adjusted.

- Real-time processing: when video is processed, several of these steps run simultaneously.

- Quality assessment: finally, the application evaluates its own output to ensure that the modifications produce a visually pleasing, natural-looking result.
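To illustrate the image-processing step, the sketch below applies a linear brightness and contrast adjustment to a grayscale image. It assumes 8-bit pixel values stored as nested Python lists. The function name and parameter values are hypothetical, and commercial face-enhancement applications do not necessarily implement this step this way.

```python
def adjust_brightness_contrast(image, contrast=1.0, brightness=0.0):
    """Apply out = contrast * in + brightness to each pixel, clipped to the 8-bit range.

    `image` is a list of rows of integer gray levels (0-255).
    contrast > 1 stretches differences between pixels; brightness shifts all values.
    """
    return [
        [min(255, max(0, round(contrast * px + brightness))) for px in row]
        for row in image
    ]


patch = [[0, 100, 200], [50, 150, 220]]
print(adjust_brightness_contrast(patch, contrast=1.2, brightness=10))
# [[10, 130, 250], [70, 190, 255]]
```

The clipping step matters. Without it, bright pixels such as the 220 above would overflow the 8-bit range instead of saturating at 255.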

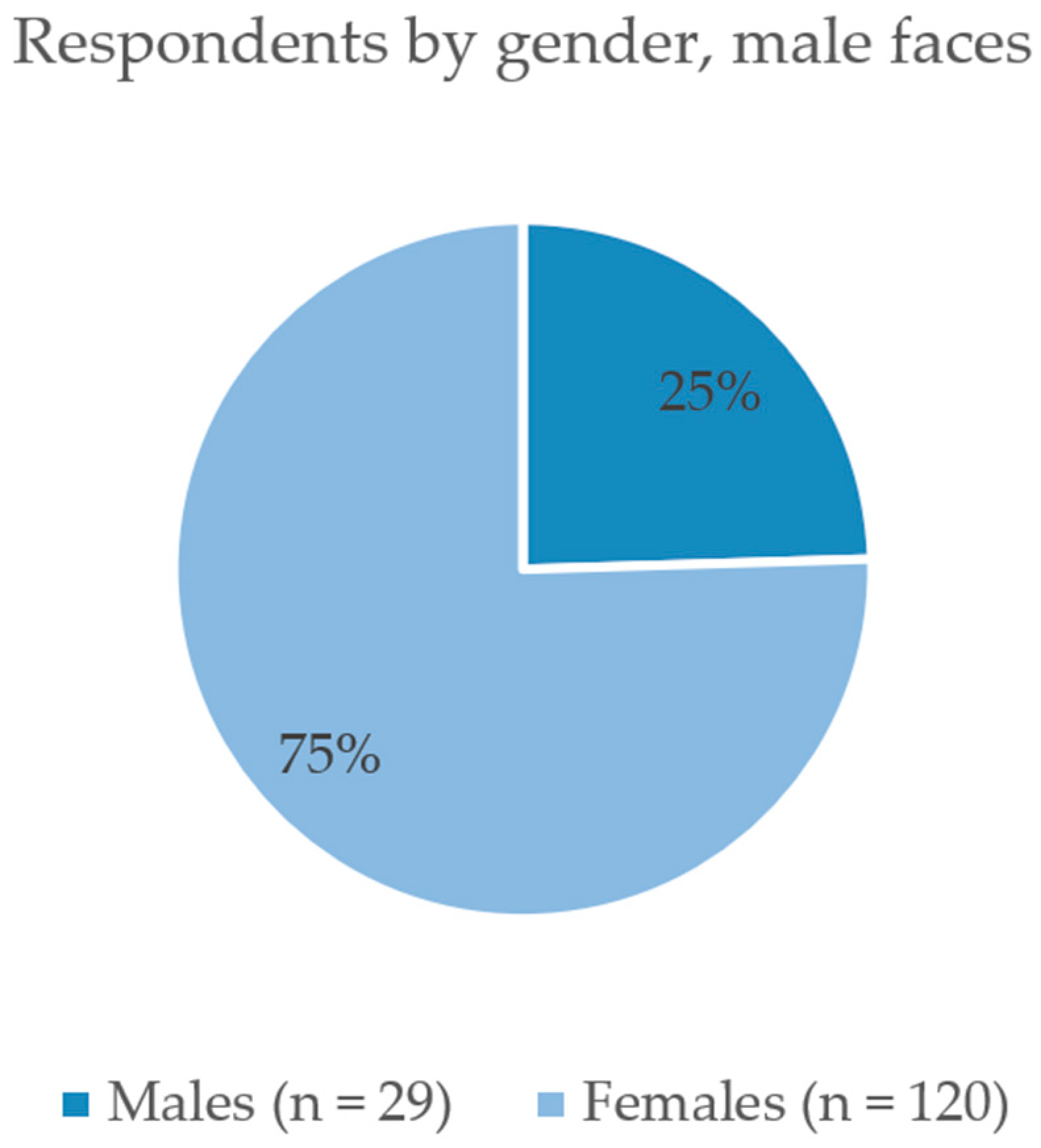

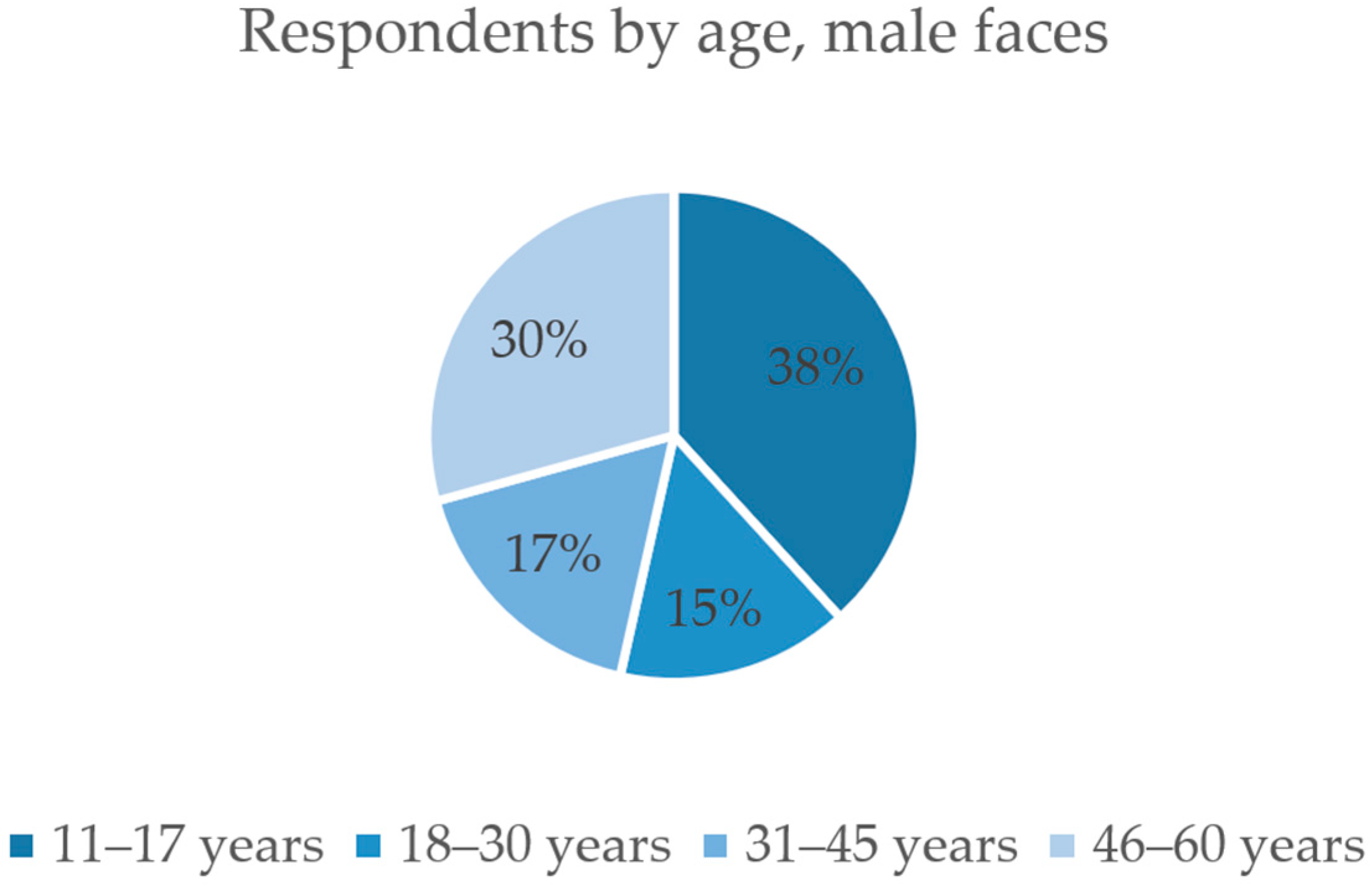
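The generator-discriminator interplay described among the processing steps of the Introduction can be expressed through the standard GAN training objectives. The sketch below computes binary cross-entropy losses from discriminator outputs, where each output is the probability that an image is real. It uses the common non-saturating generator loss. This is a generic textbook formulation, not the undisclosed training procedure of any particular face-enhancement app, and the function names are illustrative.

```python
import math

EPS = 1e-12  # guards against log(0)


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy of a predicted probability against a 0/1 target."""
    p = min(max(prob, EPS), 1 - EPS)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake) -> float:
    """The discriminator should output 1 for real photos and 0 for generated ones."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake) -> float:
    """Non-saturating generator loss: lower when D(fake) approaches 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere:
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2*ln(2), about 1.386
print(generator_loss([0.9]) < generator_loss([0.1]))  # True: convincing fakes lower the loss
```

During training, the two losses are minimized alternately. This pushes the generator toward images that the discriminator cannot distinguish from real photographs.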

2. Materials and Methods

- Age: young adult;

- Pose: front-facing;

- Ethnicity: Caucasian;

- Pose: natural.

- Which of the two faces (AI-enhanced or original) was considered more appealing?

- What was the difference in the numeric attractiveness scores they achieved?

- What facial modifications were responsible for the biggest score differences?

- Were the observed changes located in the lower facial third?

- Could orthodontic treatment influence the studied changes?

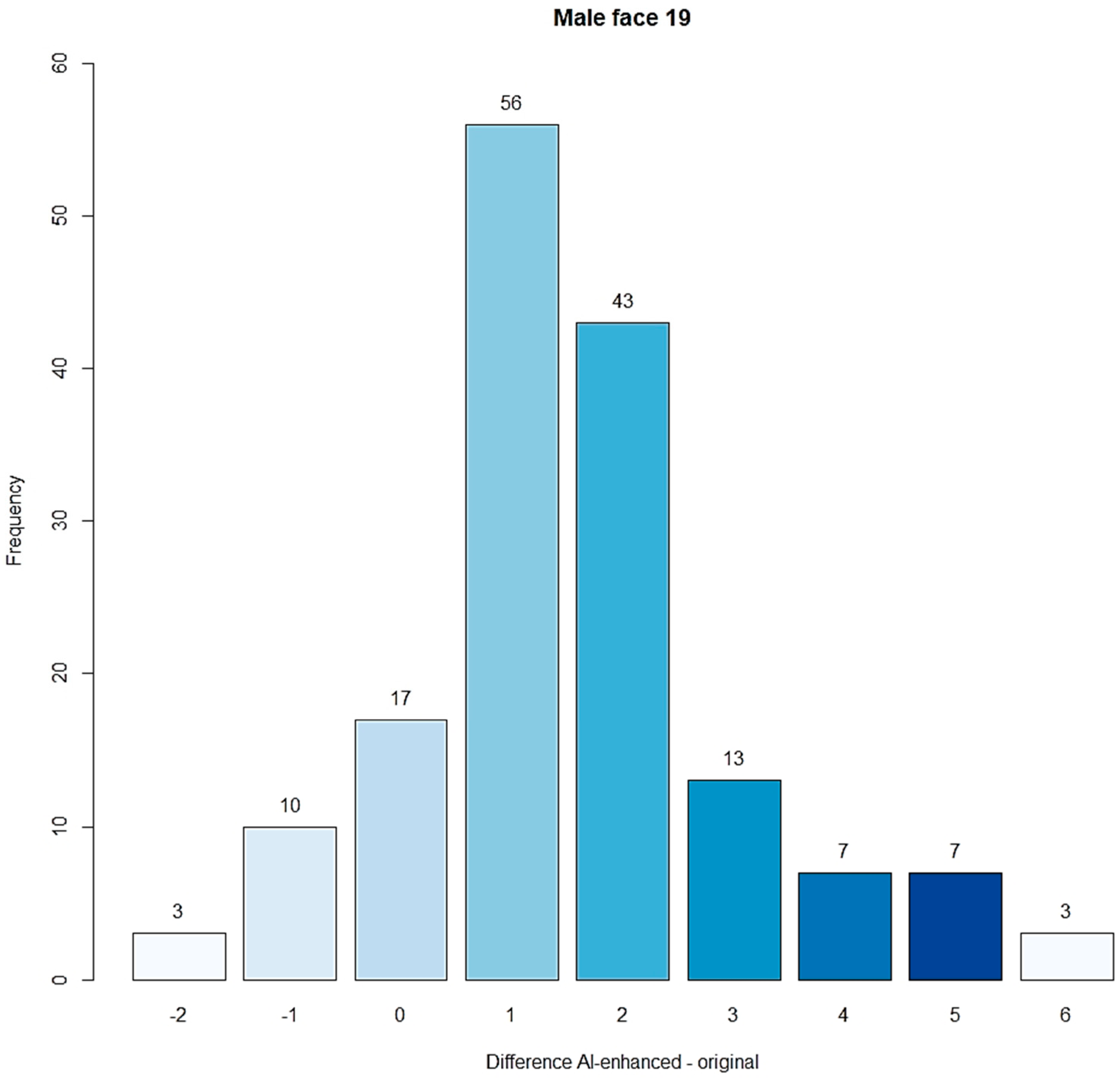

3. Results

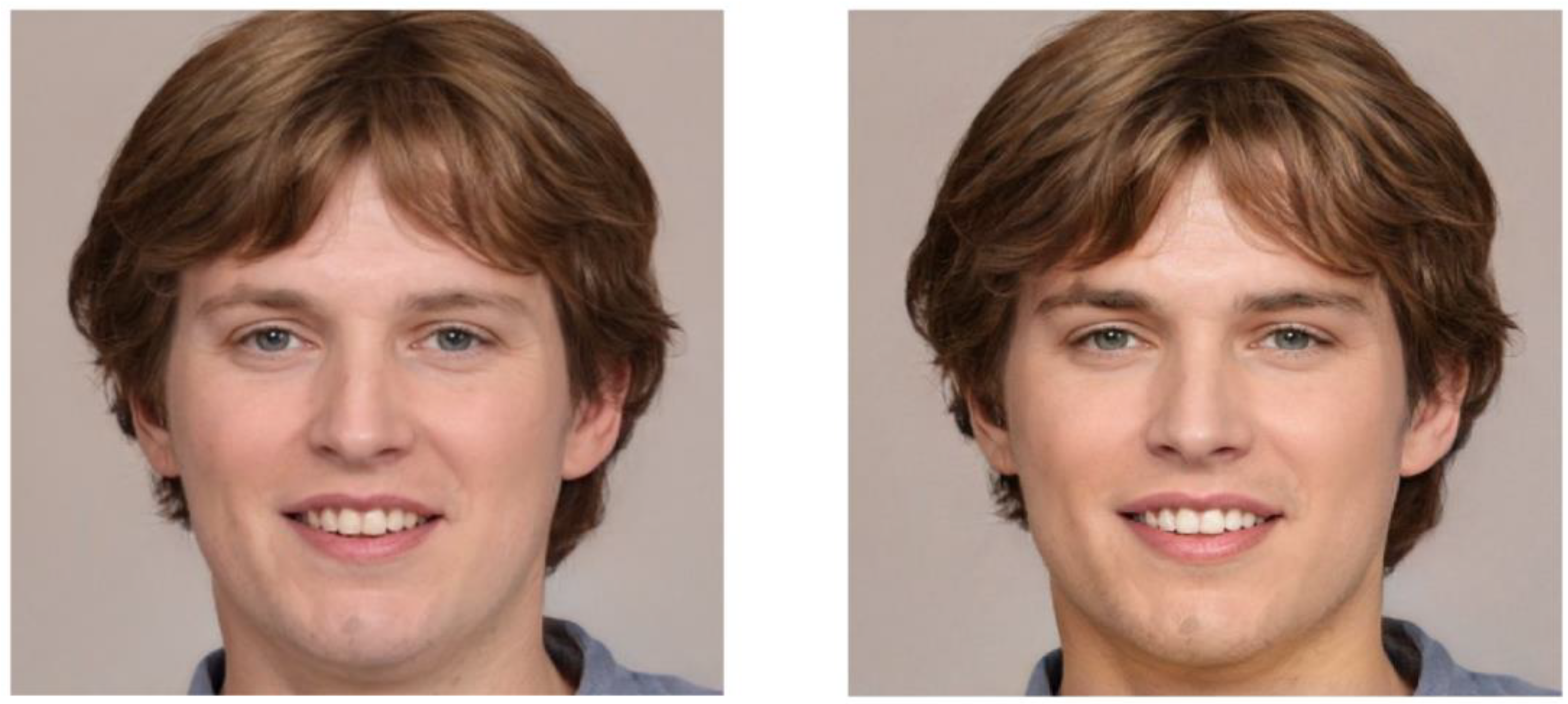

- Hair: the first picture shows fuller, slightly wavy hair surrounding the face, as opposed to smoother hair reaching behind the ears in the second picture.

- Chin: the chin in the first picture is wider and more rounded, as opposed to a more prominent and narrower chin in the second picture.

- Smile: the person in the first picture has a wider smile, showing more teeth.

- Nose: in the first picture, the nose is wider in the area of the alae nasi.

- Eyes: in the first picture, the eyes are more open and seem to be bigger than in the second picture.

- Face: the face in the first picture has a more rounded, smoother shape, whereas the face in the second picture has rougher, sharper contours.

- Cheeks: the first face has fuller cheeks compared to the second face.

4. Discussion

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


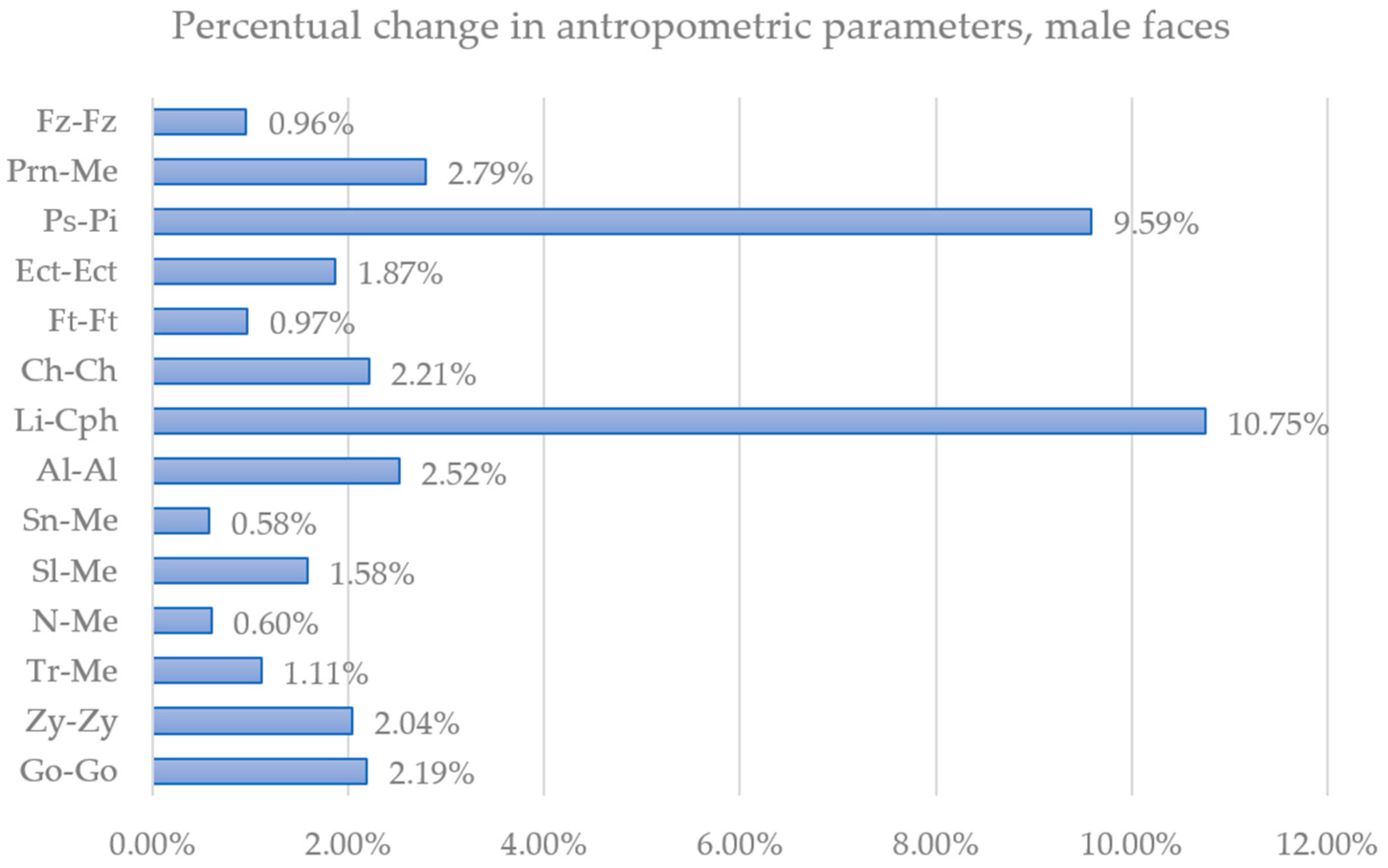

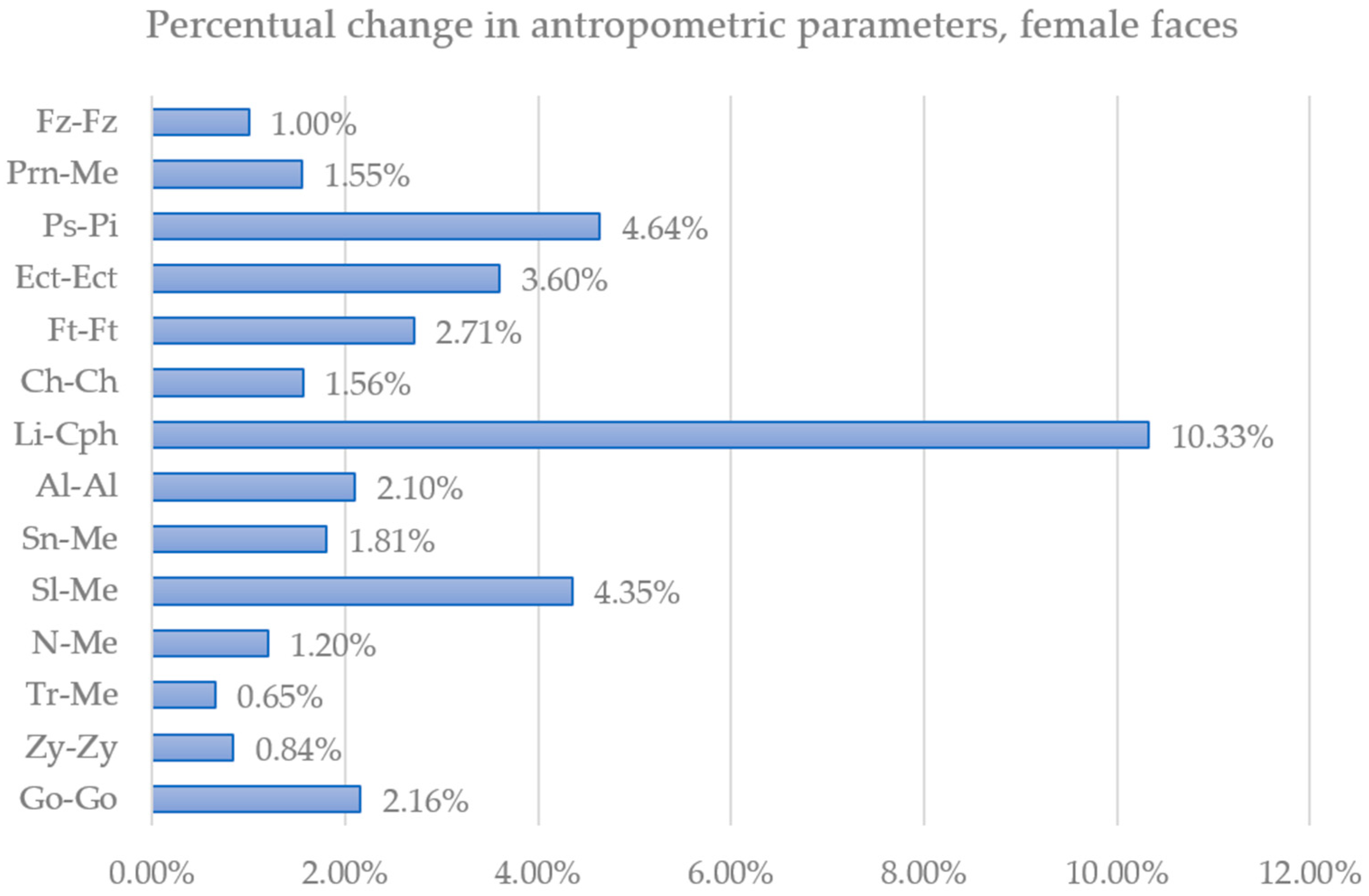

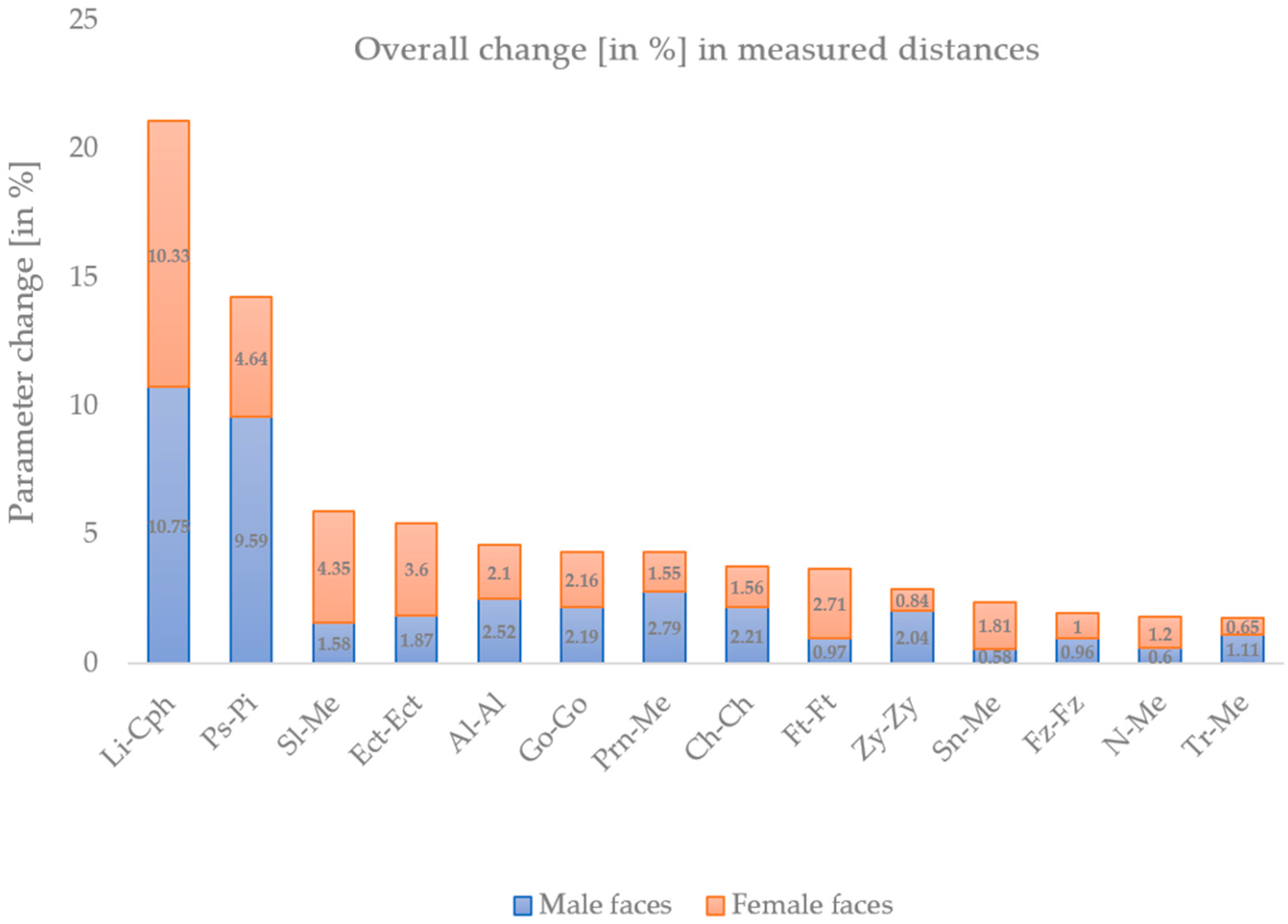

| Abbreviation | Craniometric Points | Significance |
|---|---|---|
| Ch-Ch | Ch: cheilion | Mouth width |
| Go-Go | Go: gonion | Mandible width |
| Zy-Zy | Zy: zygion | Middle face width |
| Tri-Me | Tri: trichion; Me: menton | Face height |
| Sl-Me | Sl: sublabiale; Me: menton | Chin height |
| Sn-Me | Sn: subnasale; Me: menton | Distance from the nose to the chin |
| N-Me | N: nasion; Me: menton | Anterior face height |
| Al-Al | Al: alare | Nose width |
| Ft-Ft | Ft: frontotemporale | Distance between the outermost points of the superciliary arches on the frontozygomatic suture |
| Fz-Fz | Fz: frontozygomaticus | Distance between the outermost points of the superciliary arches |
| Ps-Pi | Ps/Pi: palpebrale superius/inferius | Eye height |
| Ect-Ect | Ect: exocanthion | Eye width |
| Li-Cph | Li: labrale inferius; Cph: crista philtri | Distance between the upper and lower lip lines |
| Prn-Me | Prn: pronasale; Me: menton | Distance between the nose tip and the chin |
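Once the landmarks in the table have been located on both the original and the AI-enhanced photograph, each measurement reduces to a Euclidean distance. The Python sketch below makes several assumptions: 2D pixel coordinates, hypothetical landmark keys (suffixes `_r`/`_l` for bilateral points), and normalization by N-Me so that images of different sizes can be compared. It covers only four of the listed measurements and is not the study's own measurement protocol.

```python
import math

# Hypothetical landmark keys; bilateral points carry _r / _l suffixes.
MEASUREMENTS = {
    "Ch-Ch": ("Ch_r", "Ch_l"),  # mouth width
    "Al-Al": ("Al_r", "Al_l"),  # nose width
    "Sn-Me": ("Sn", "Me"),      # nose-to-chin distance
    "N-Me": ("N", "Me"),        # anterior face height
}


def measure(landmarks: dict) -> dict:
    """Distances between landmark pairs, expressed as fractions of N-Me."""
    raw = {name: math.dist(landmarks[a], landmarks[b])
           for name, (a, b) in MEASUREMENTS.items()}
    face_height = raw["N-Me"]
    return {name: value / face_height for name, value in raw.items()}


def relative_change(original: dict, enhanced: dict) -> dict:
    """Change of each normalized measurement from the original to the enhanced image."""
    before, after = measure(original), measure(enhanced)
    return {name: after[name] - before[name] for name in before}


# Made-up coordinates (pixels) for an original face and an "enhanced" one with a wider mouth
original = {"N": (100, 50), "Sn": (100, 130), "Me": (100, 210),
            "Ch_r": (70, 160), "Ch_l": (130, 160),
            "Al_r": (85, 125), "Al_l": (115, 125)}
enhanced = dict(original, Ch_r=(65, 160), Ch_l=(135, 160))

print(relative_change(original, enhanced))
# {'Ch-Ch': 0.0625, 'Al-Al': 0.0, 'Sn-Me': 0.0, 'N-Me': 0.0}
```

Normalizing by N-Me trades absolute size information for comparability. If both images were calibrated to a common scale, the raw distances could be compared directly instead.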

| Original | FaceApp | p-Value | Original | FaceApp | p-Value | ||

|---|---|---|---|---|---|---|---|

| Male face 1 | 30.82 | 69.18 | 0.000013 | Female face 1 | 13.48 | 86.52 | <0.000001 |

| Male face 2 | 36.48 | 63.52 | 0.000649 | Female face 2 | 51.06 | 48.94 | 0.7209 |

| Male face 3 | 24.53 | 75.47 | <0.000001 | Female face 3 | 26.60 | 73.40 | <0.000001 |

| Male face 4 | 15.72 | 84.28 | <0.000001 | Female face 4 | 12.06 | 87.94 | <0.000001 |

| Male face 5 | 28.30 | 71.70 | <0.000001 | Female face 5 | 36.17 | 63.83 | 0.000003 |

| Male face 6 | 15.72 | 84.28 | <0.000001 | Female face 6 | 14.89 | 85.11 | <0.000001 |

| Male face 7 | 13.21 | 86.79 | <0.000001 | Female face 7 | 26.95 | 73.05 | <0.000001 |

| Male face 8 | 15.72 | 84.28 | <0.000001 | Female face 8 | 34.04 | 65.96 | <0.000001 |

| Male face 9 | 19.50 | 80.50 | <0.000001 | Female face 9 | 9.93 | 90.07 | <0.000001 |

| Male face 10 | 15.72 | 84.28 | <0.000001 | Female face 10 | 17.38 | 82.62 | <0.000001 |

| Male face 11 | 22.64 | 77.36 | <0.000001 | Female face 11 | 24.11 | 75.89 | <0.000001 |

| Male face 12 | 23.27 | 76.73 | <0.000001 | Female face 12 | 26.60 | 73.40 | <0.000001 |

| Male face 13 | 39.62 | 60.38 | 0.008869 | Female face 13 | 20.21 | 79.79 | <0.000001 |

| Male face 14 | 18.87 | 81.13 | <0.000001 | Female face 14 | 20.21 | 79.79 | <0.000001 |

| Male face 15 | 22.64 | 77.36 | <0.000001 | Female face 15 | 19.50 | 80.50 | <0.000001 |

| Male face 16 | 17.61 | 82.39 | <0.000001 | Female face 16 | 22.70 | 77.30 | <0.000001 |

| Male face 17 | 29.56 | 70.44 | <0.000001 | Female face 17 | 43.26 | 56.74 | 0.02364 |

| Male face 18 | 35.85 | 64.15 | 0.000359 | Female face 18 | 12.77 | 87.23 | <0.000001 |

| Male face 19 | 9.43 | 90.57 | <0.000001 | Female face 19 | 23.76 | 76.24 | <0.000001 |

| Male face 20 | 11.95 | 88.05 | <0.000001 | Female face 20 | 30.85 | 69.15 | <0.000001 |

| Male face 21 | 11.95 | 88.05 | <0.000001 | Female face 21 | 19.86 | 80.14 | <0.000001 |

| Male face 22 | 16.35 | 83.65 | <0.000001 | Female face 22 | 39.72 | 60.28 | 0.000553 |

| Male face 23 | 26.42 | 73.58 | <0.000001 | Female face 23 | 22.34 | 77.66 | <0.000001 |

| Male face 24 | 10.69 | 89.31 | <0.000001 | Female face 24 | 9.93 | 90.07 | <0.000001 |

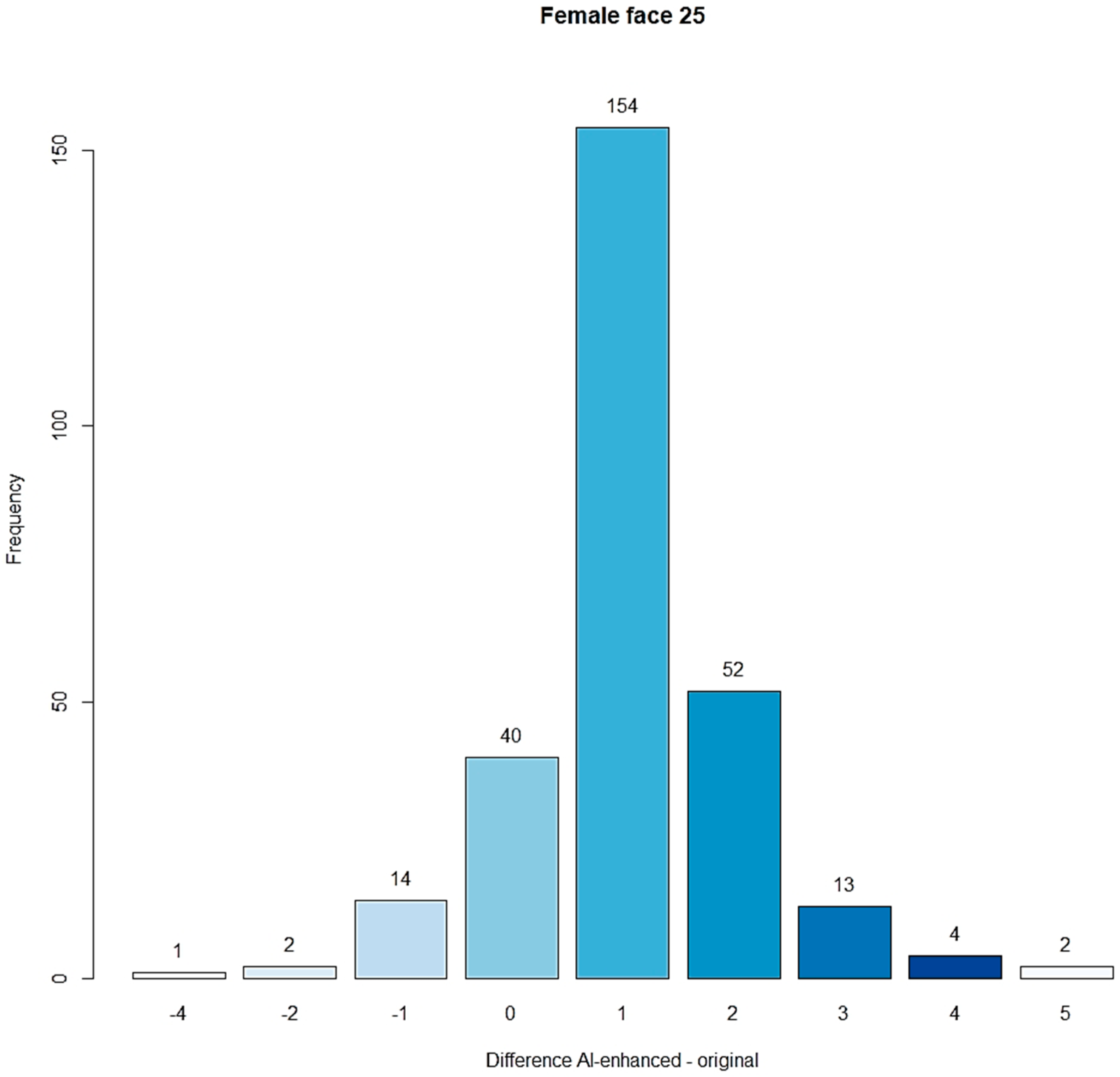

| Male face 25 | 33.96 | 66.04 | 0.000052 | Female face 25 | 7.09 | 92.91 | <0.000001 |
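The p-values in the preference table appear consistent with a Pearson chi-square goodness-of-fit test of the two preference shares against an even 50/50 split, although this section does not name the test. The Python sketch below implements that test for two vote counts. With one degree of freedom, the upper-tail probability equals erfc(sqrt(χ²/2)). The example counts are an assumption: 39.62% and 60.38% (male face 13) correspond to 63 and 96 votes if 159 raters are assumed, a figure inferred here from the percentages rather than reported in this section.

```python
import math


def chi_square_equal_preference(votes_original: int, votes_enhanced: int):
    """Pearson chi-square goodness-of-fit test of two counts against a 50/50 split.

    Returns (statistic, p_value). With 1 degree of freedom, the upper-tail
    probability of the chi-square distribution equals erfc(sqrt(statistic / 2)).
    """
    n = votes_original + votes_enhanced
    expected = n / 2  # equal preference under the null hypothesis
    statistic = ((votes_original - expected) ** 2
                 + (votes_enhanced - expected) ** 2) / expected
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts reconstructed from the percentages for male face 13 (159 raters assumed)
stat, p = chi_square_equal_preference(63, 96)
print(f"chi2 = {stat:.3f}, p = {p:.4f}")
```

With these assumed counts, the sketch gives χ² ≈ 6.85 and p ≈ 0.0089, in line with the tabulated 0.008869. Using `math.erfc` avoids a dependency on a statistics library for this one-degree-of-freedom case.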

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tomášik, J.; Zsoldos, M.; Majdáková, K.; Fleischmann, A.; Oravcová, Ľ.; Sónak Ballová, D.; Thurzo, A. The Potential of AI-Powered Face Enhancement Technologies in Face-Driven Orthodontic Treatment Planning. Appl. Sci. 2024, 14, 7837. https://doi.org/10.3390/app14177837
