Rolling Shutter-Based Underwater Optical Camera Communication (UWOCC) with Side Glow Optical Fiber (SGOF)

Abstract

1. Introduction

- Underwater VLC systems reported in the literature usually employ LD or LED transmitters (Txs). Here, we employ an SGOF Tx, which is lightweight, flexible, and water resistant. It also provides uniform 360° ("omni-directional") light emission around its circumference.

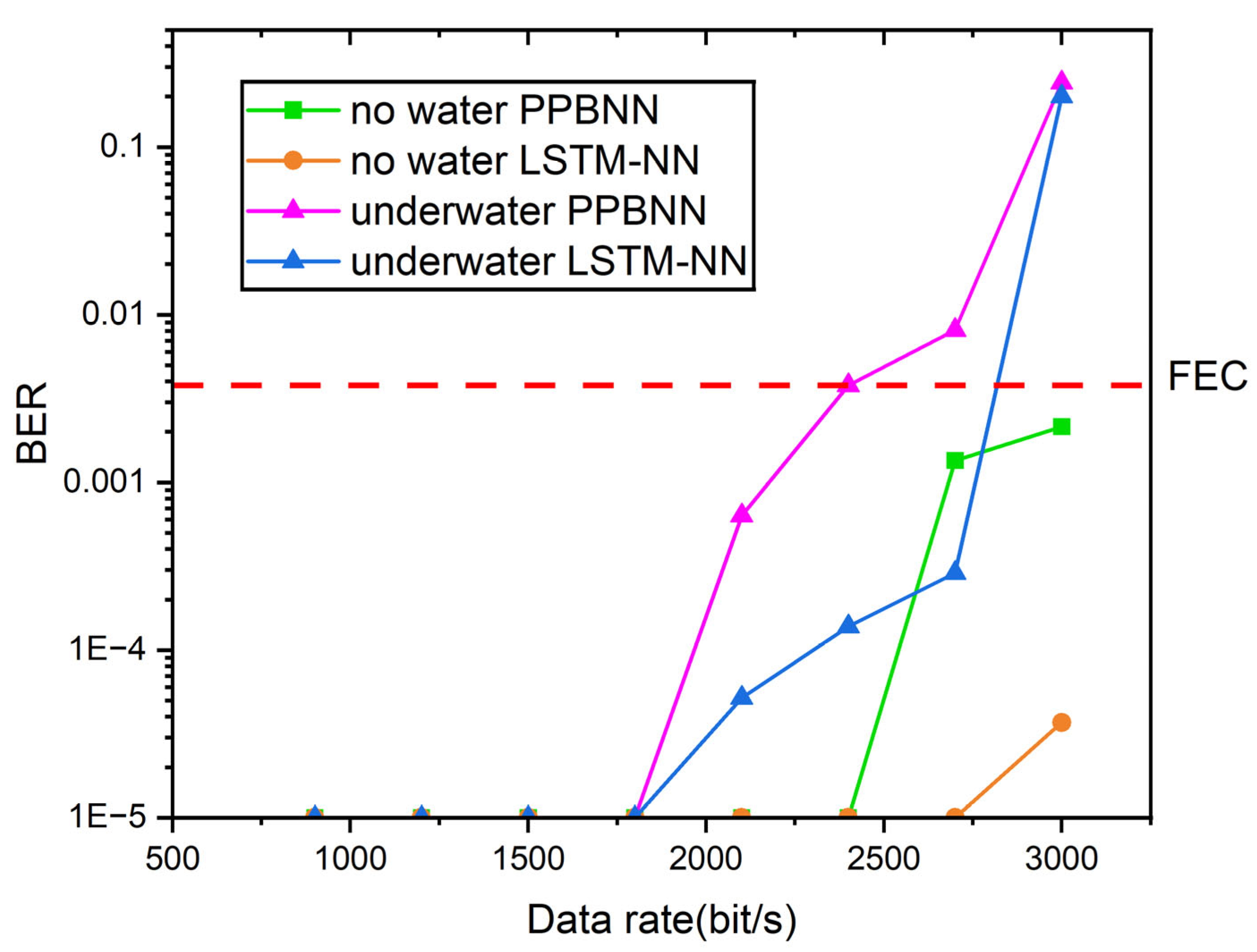

- Underwater VLC systems reported in the literature usually employ PD or APD receivers (Rxs). Here, we employ a CMOS image sensor-based OCC Rx, which allows reliable detection over a larger FOV.

- In this demonstration, both the SGOF Tx and the CMOS image sensor are immersed in water, so that the experimental results more accurately reflect underwater operation.

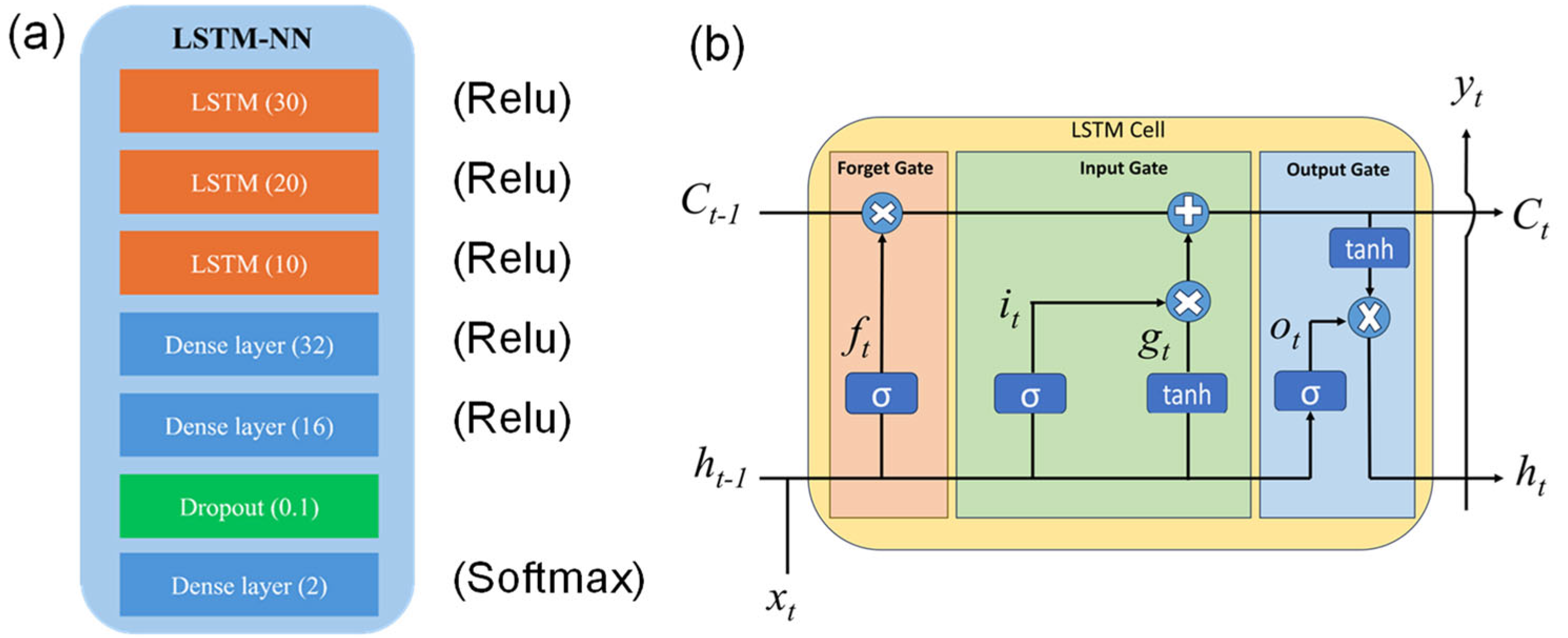

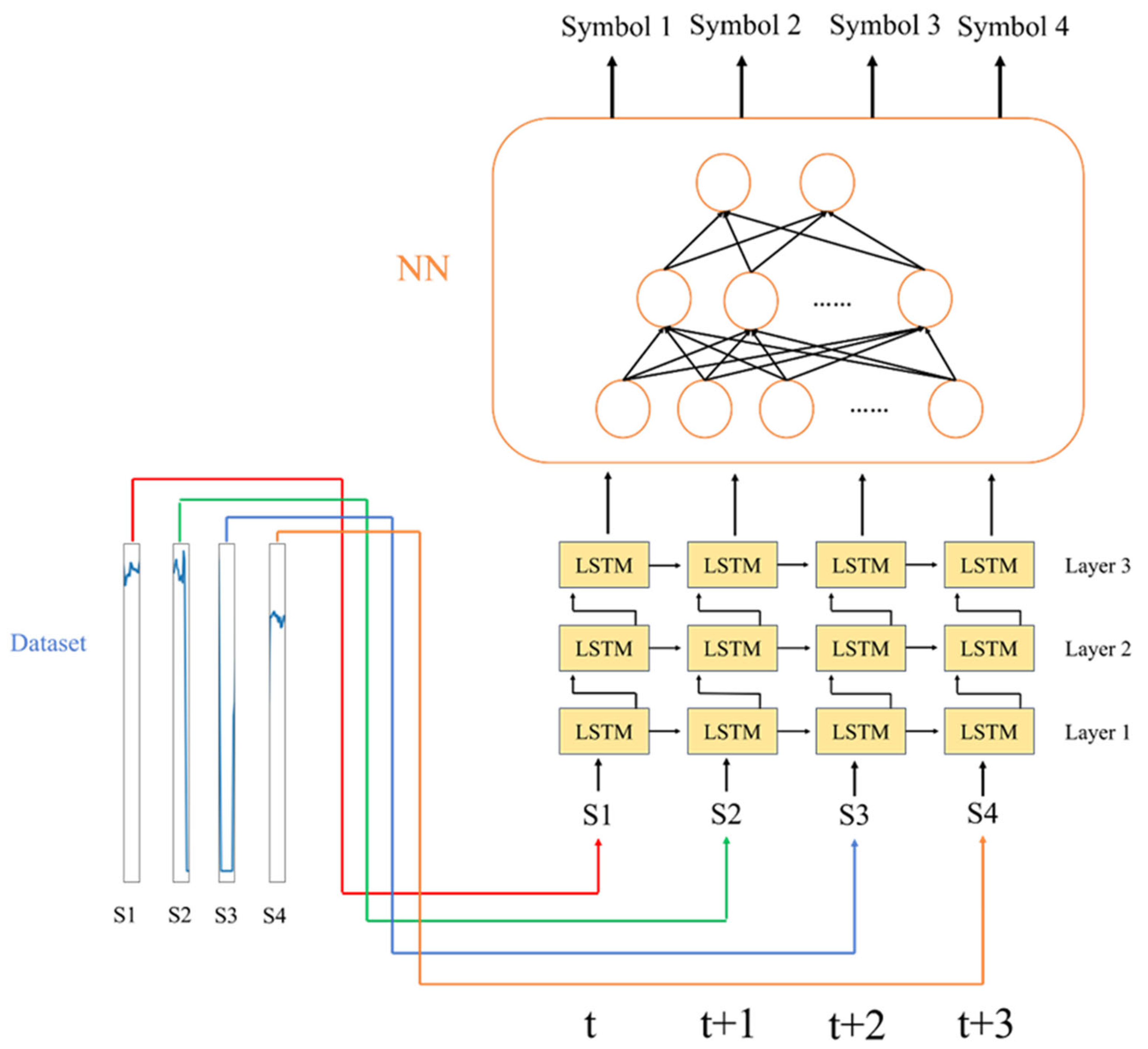

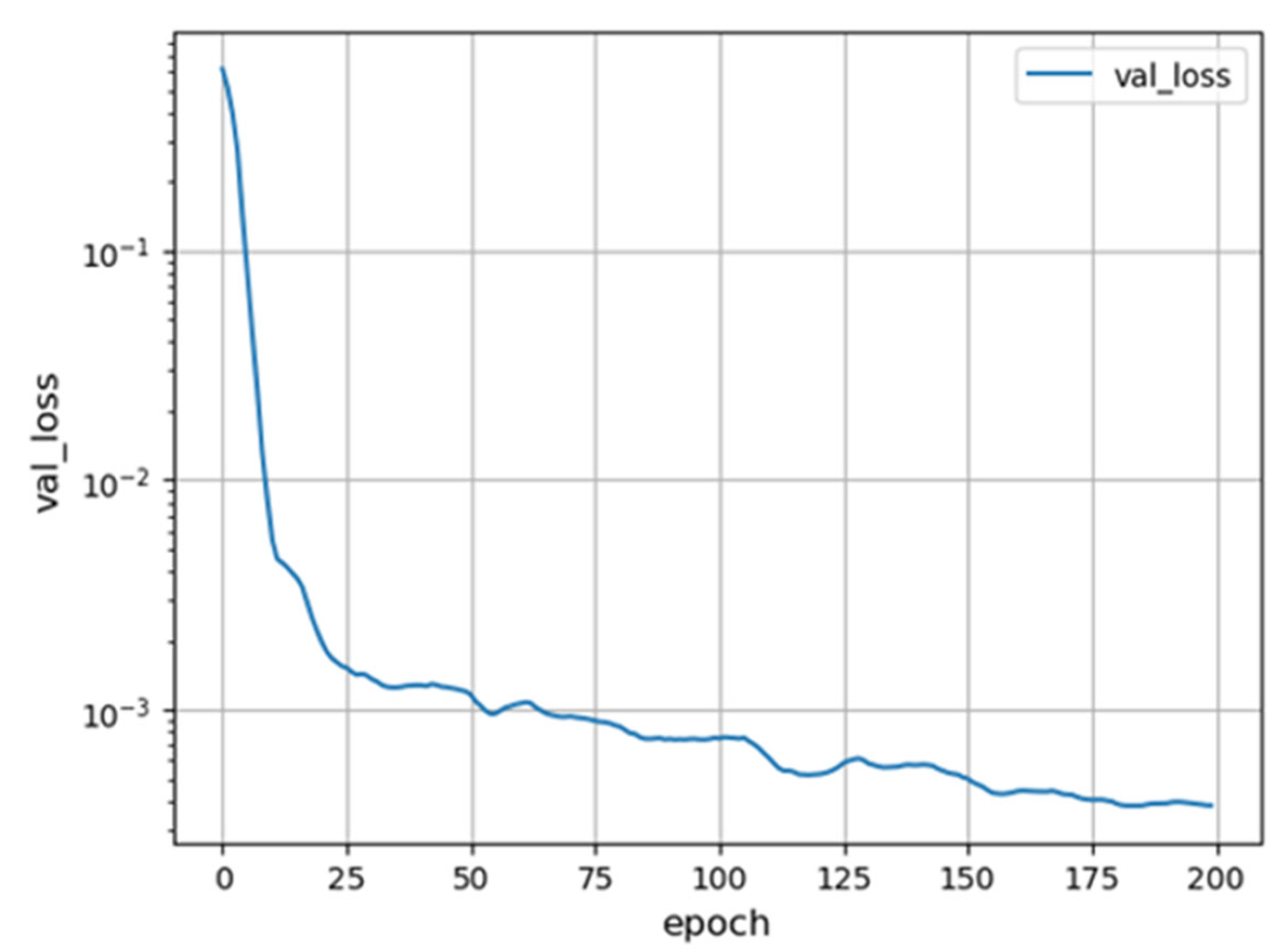

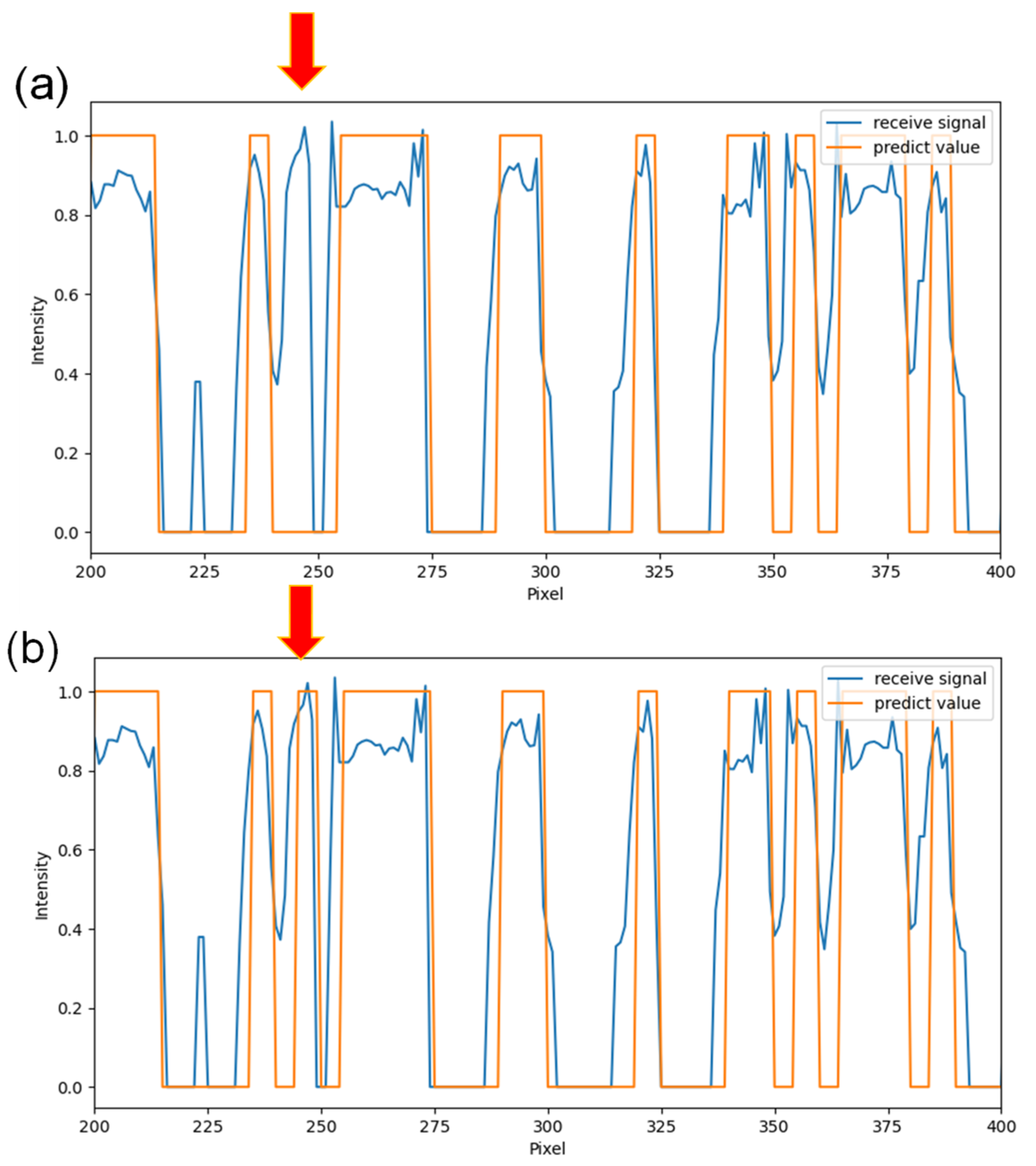

- Here, rolling shutter (RS) decoding is realized by the proposed LSTM-NN, whose time-memorizing characteristic enhances the decoding of the OCC signal.

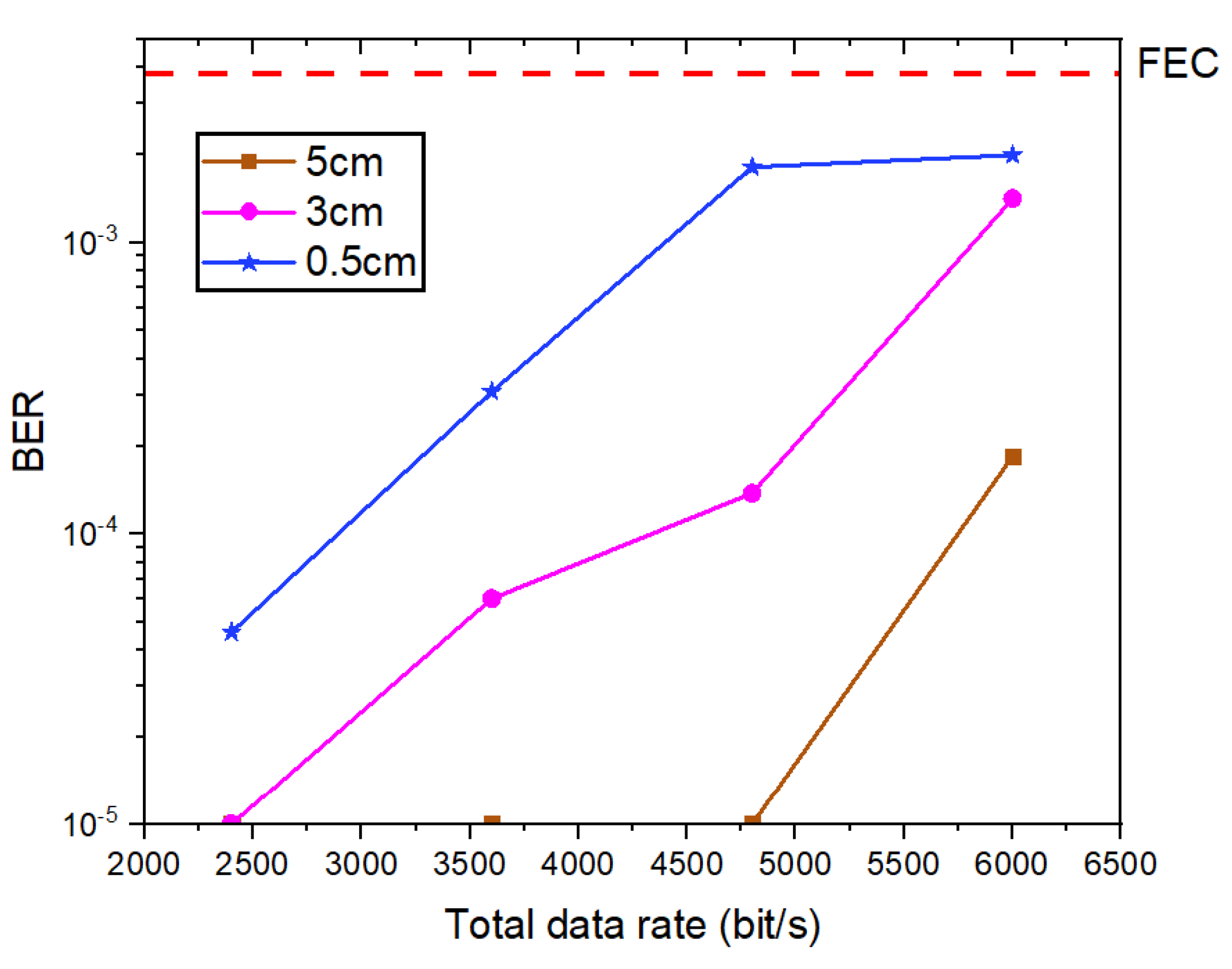

- Parallel SGOF Txs are also studied to achieve spatial multiplexing, which increases the total transmission capacity.

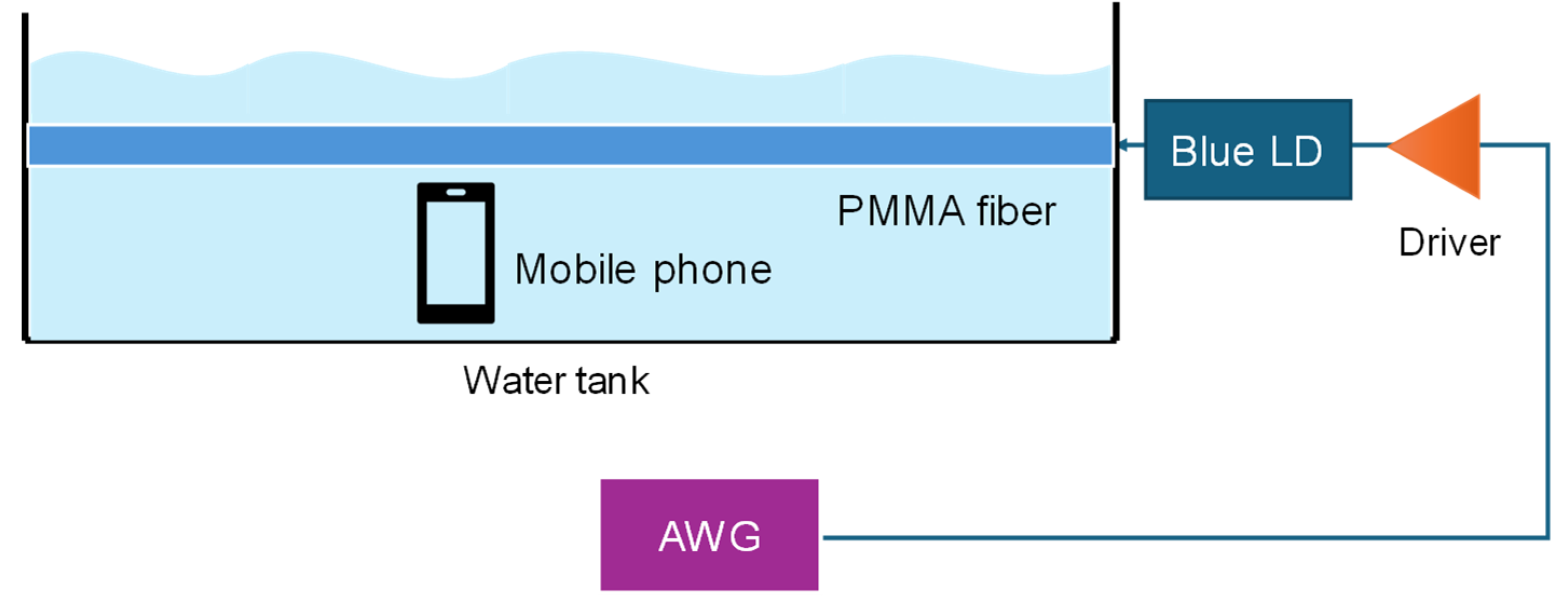

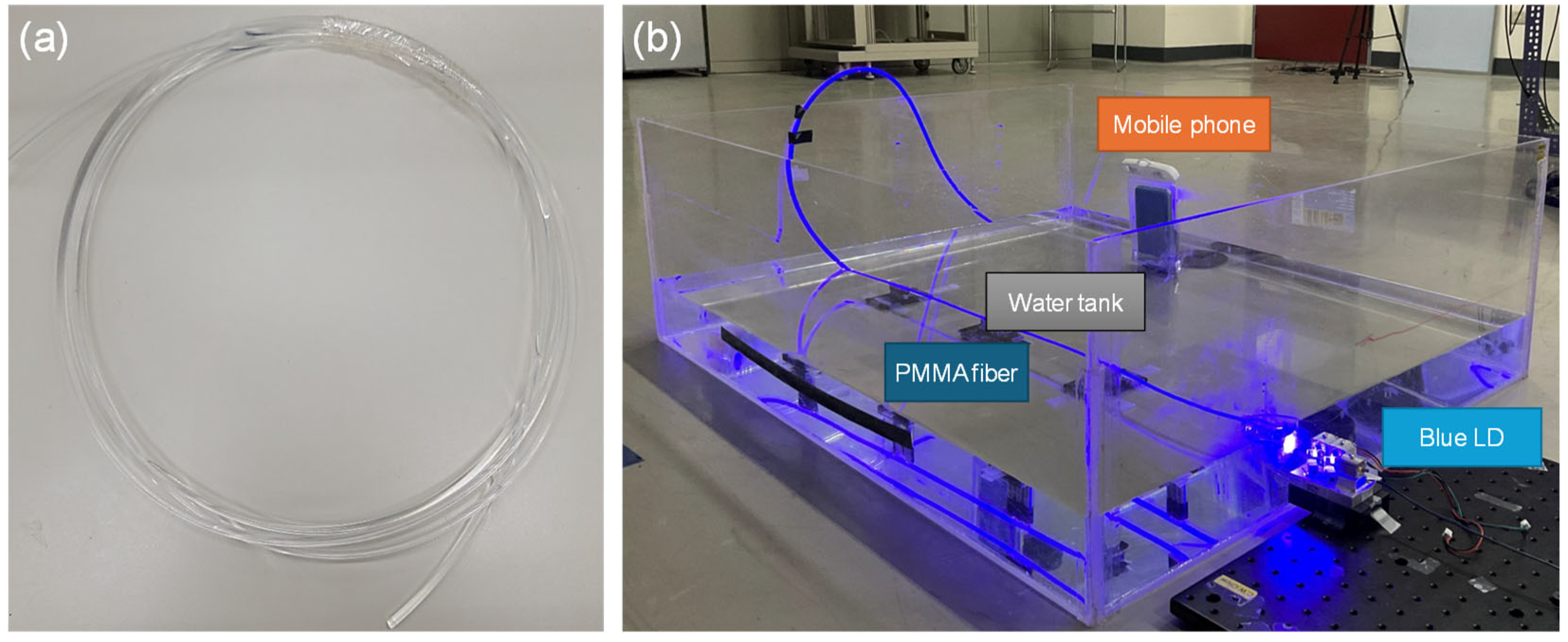

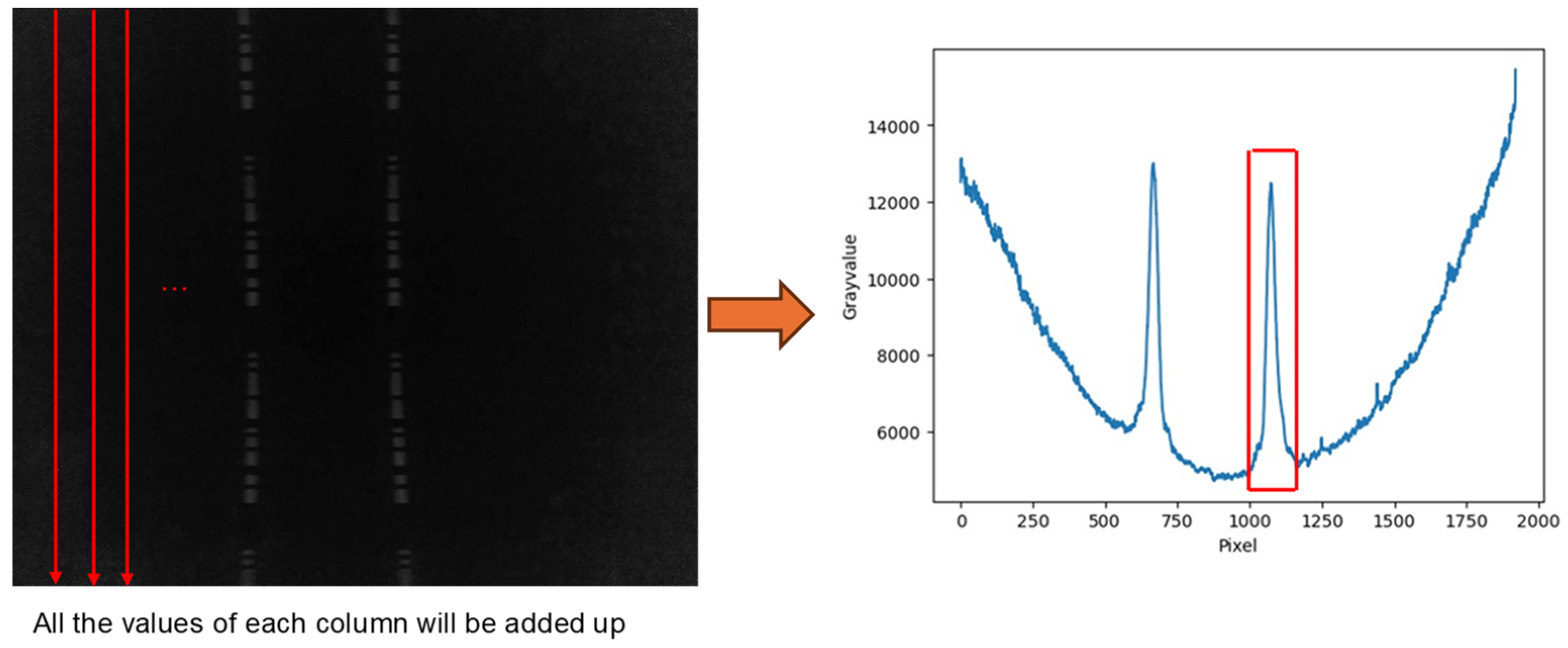

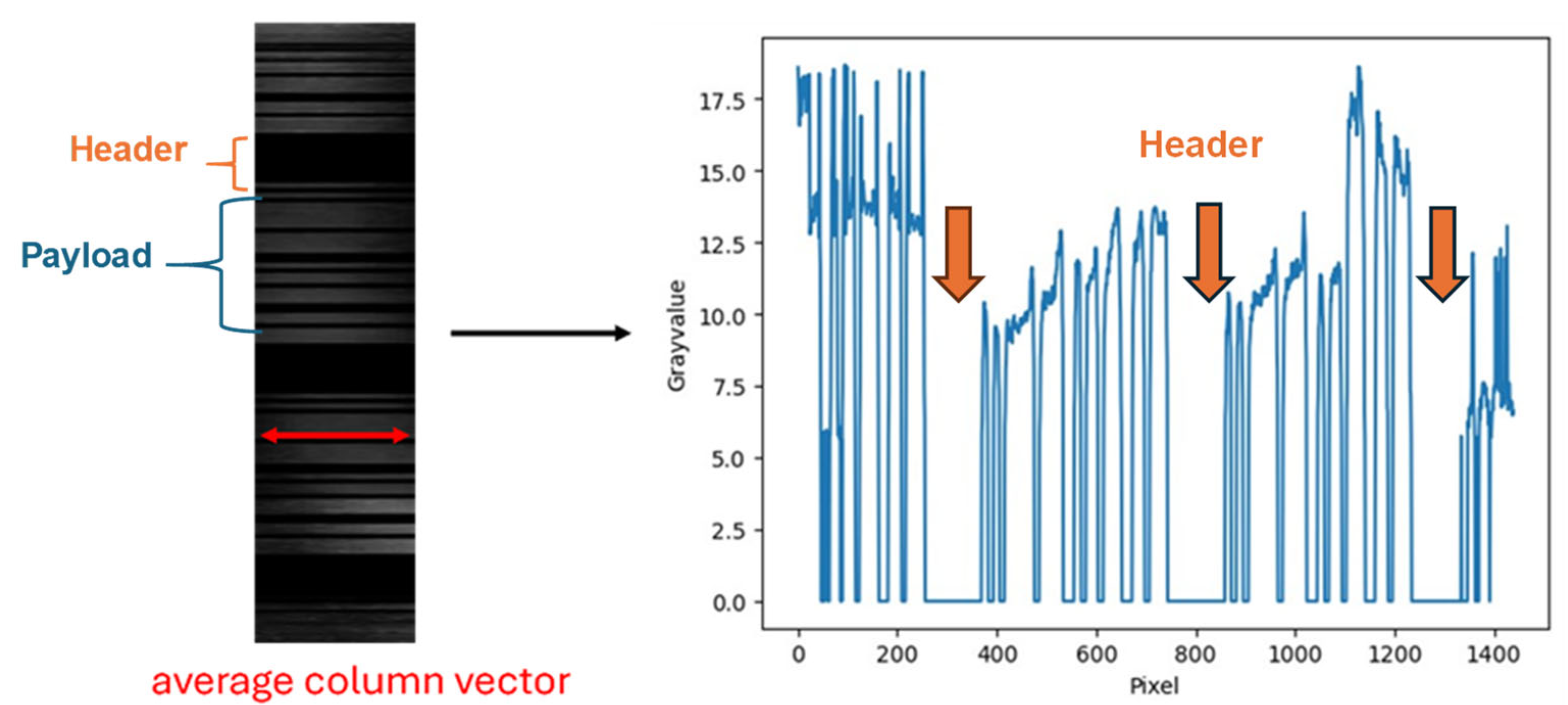
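To make the idea of RS decoding concrete, the following minimal sketch simulates the bright and dark stripes that an on-off-keyed light source would produce along the pixel rows of a rolling shutter image, and recovers the bits with a plain mid-level threshold. It is not the LSTM-NN decoder proposed here: the threshold decoder is only a simple stand-in, and the on-off keying, the function names, the gray levels (0.2 and 0.8), the noise level, the `rows_per_bit` value, and the perfect row synchronization are all illustrative assumptions.

```python
import random
from statistics import mean


def simulate_rs_profile(bits, rows_per_bit, noise_std=0.05, seed=0):
    """Return one grayscale value per pixel row for an OOK bit sequence.

    Assumption: each bit lights `rows_per_bit` consecutive rows, giving a
    dark stripe (~0.2) for 0 and a bright stripe (~0.8) for 1, plus noise.
    """
    rng = random.Random(seed)
    profile = []
    for bit in bits:
        level = 0.8 if bit else 0.2
        profile.extend(level + rng.gauss(0.0, noise_std)
                       for _ in range(rows_per_bit))
    return profile


def threshold_decode(profile, rows_per_bit):
    """Average the rows of each bit slot and compare with a mid-level threshold."""
    threshold = 0.5 * (max(profile) + min(profile))
    n_bits = len(profile) // rows_per_bit
    decoded = []
    for k in range(n_bits):
        slot = profile[k * rows_per_bit:(k + 1) * rows_per_bit]
        decoded.append(1 if mean(slot) > threshold else 0)
    return decoded


bits = [1, 0, 1, 1, 0, 0, 1, 0]
profile = simulate_rs_profile(bits, rows_per_bit=10)
decoded = threshold_decode(profile, rows_per_bit=10)
print("sent:   ", bits)
print("decoded:", decoded)
```

A single global threshold of this kind works only when stripe contrast is stable across the frame; when contrast varies, a sequence model such as an LSTM-NN may be the better fit.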

2. Experimental Setup

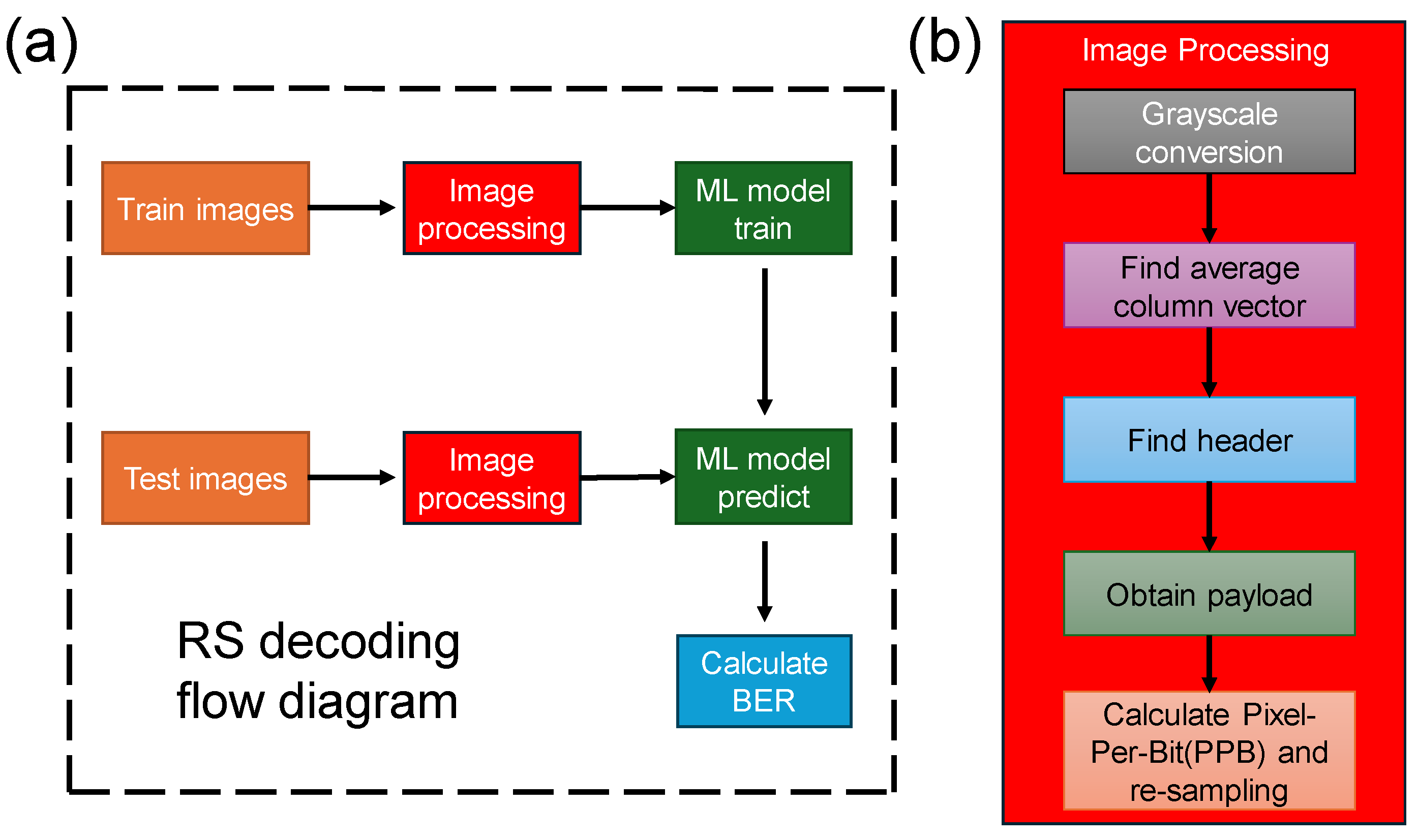

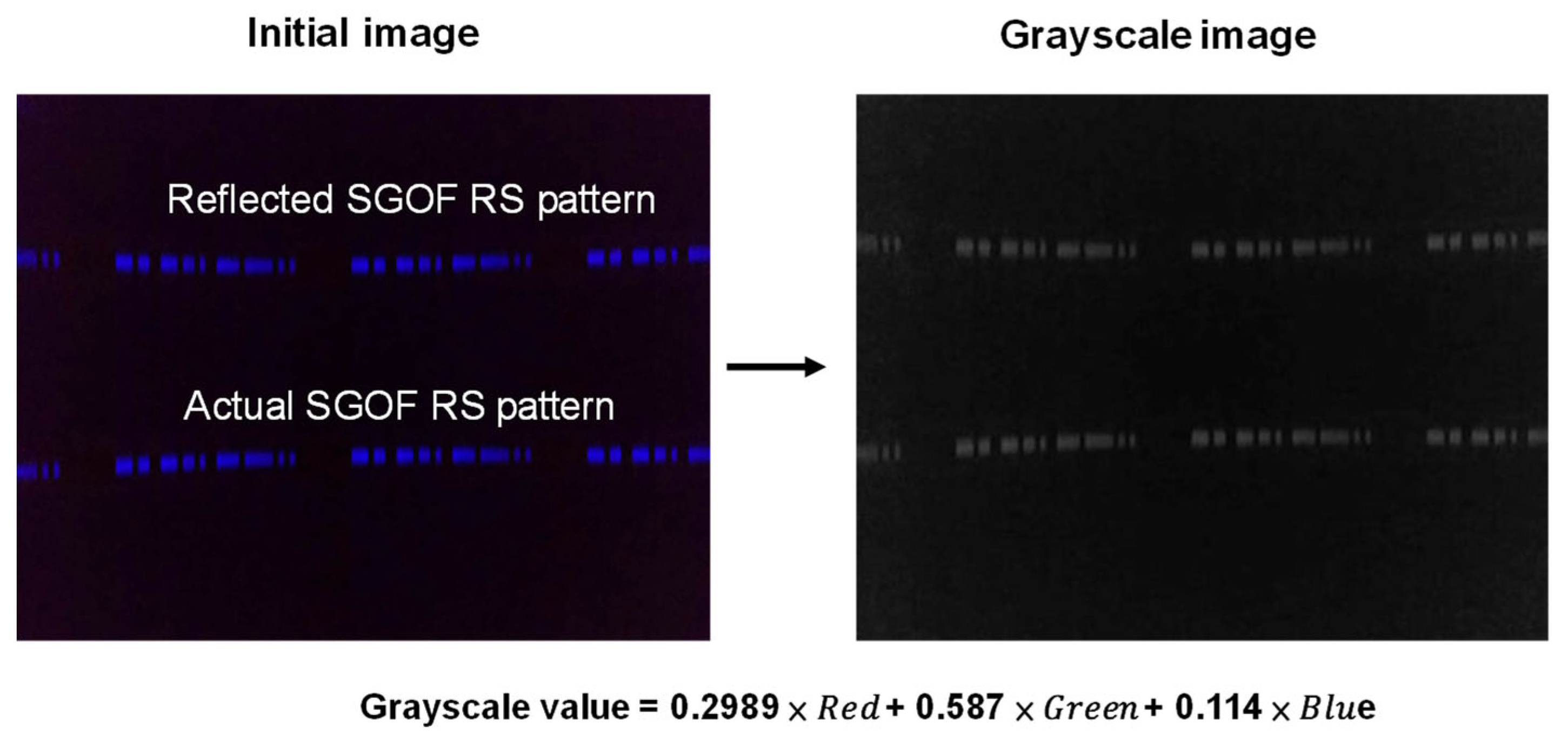

3. AI/ML Algorithm

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Diameter | 3 mm |
| Density | 1.15 g/cm³ |
| Length | 2 m |
| Refractive index | 1.39 |
| Melting point | 130–140 °C |

| Parameter | Value |
|---|---|
| Resolution | 1920 × 1440 |
| Shutter type | Rolling shutter |
| Optical format | 1/2.8″ |
| Pixel size | 1.12 μm × 1.12 μm |
| Frame rate | 30 fps |
| Shutter speed | 1/10,000 s |
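The frame rate and shutter speed in the table above give a rough sense of the timing involved. The short sketch below computes the frame period and, assuming the exposure acts as a boxcar (moving-average) filter on the incoming light, the frequency of the first null of that filter. The boxcar model and the variable names are assumptions made for illustration; the symbol rate actually used in the experiment is not stated in this excerpt and is not derived here.

```python
from fractions import Fraction

# Values taken from the image sensor table.
frame_rate_fps = 30
exposure_s = Fraction(1, 10_000)          # shutter speed of 1/10,000 s

# Time between the starts of two consecutive frames.
frame_period_s = Fraction(1, frame_rate_fps)

# Assumption: a boxcar average of duration T has its first spectral null
# at 1/T, so signal content near this frequency is strongly attenuated.
first_null_hz = 1 / exposure_s

# How many exposure-length intervals fit into one frame period.
exposures_per_frame = frame_period_s / exposure_s

print(f"frame period: {float(frame_period_s) * 1e3:.2f} ms")
print(f"exposure:     {float(exposure_s) * 1e6:.0f} us")
print(f"first null:   {float(first_null_hz):.0f} Hz")
print(f"exposure intervals per frame period: {float(exposures_per_frame):.1f}")
```

Under this model, stripes that encode symbols much shorter than the exposure time would be blurred across rows, which is why a short exposure is generally preferred for RS-based OCC.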

| Fiber Separation (cm) | Occupied Pixels |
|---|---|
| 0.5 | 20 |
| 3 | 113 |
| 5 | 184 |
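The three measurements in the table above suggest that the occupied pixel count grows roughly in proportion to the fiber separation. As a hedged illustration, the sketch below fits a straight line to these points by ordinary least squares. The linear model, the helper name `least_squares_line`, and the 2 cm query point are assumptions for illustration only; with just three data points the fit should be read as indicative rather than as a calibration.

```python
# Data copied from the fiber separation table.
separation_cm = [0.5, 3.0, 5.0]
occupied_px = [20, 113, 184]


def least_squares_line(x, y):
    """Return (slope, intercept) of the ordinary least-squares line y = a*x + b."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = least_squares_line(separation_cm, occupied_px)
print(f"slope:     {slope:.2f} pixels per cm")
print(f"intercept: {intercept:.2f} pixels")

# Hypothetical query: estimated pixel count at a 2 cm separation,
# valid only if the linear trend holds between the measured points.
estimate_2cm = slope * 2.0 + intercept
print(f"estimated occupied pixels at 2 cm: {estimate_2cm:.0f}")
```

The slope gives an approximate pixels-per-centimetre scale for this particular camera-to-fiber geometry, which is not specified in this excerpt.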


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.-F.; Chang, Y.-H.; Chen, Y.-J.; Chow, C.-W. Rolling Shutter-Based Underwater Optical Camera Communication (UWOCC) with Side Glow Optical Fiber (SGOF). Appl. Sci. 2024, 14, 7840. https://doi.org/10.3390/app14177840
