Numerical Evaluation of Hot Air Recirculation in Server Rack

Abstract

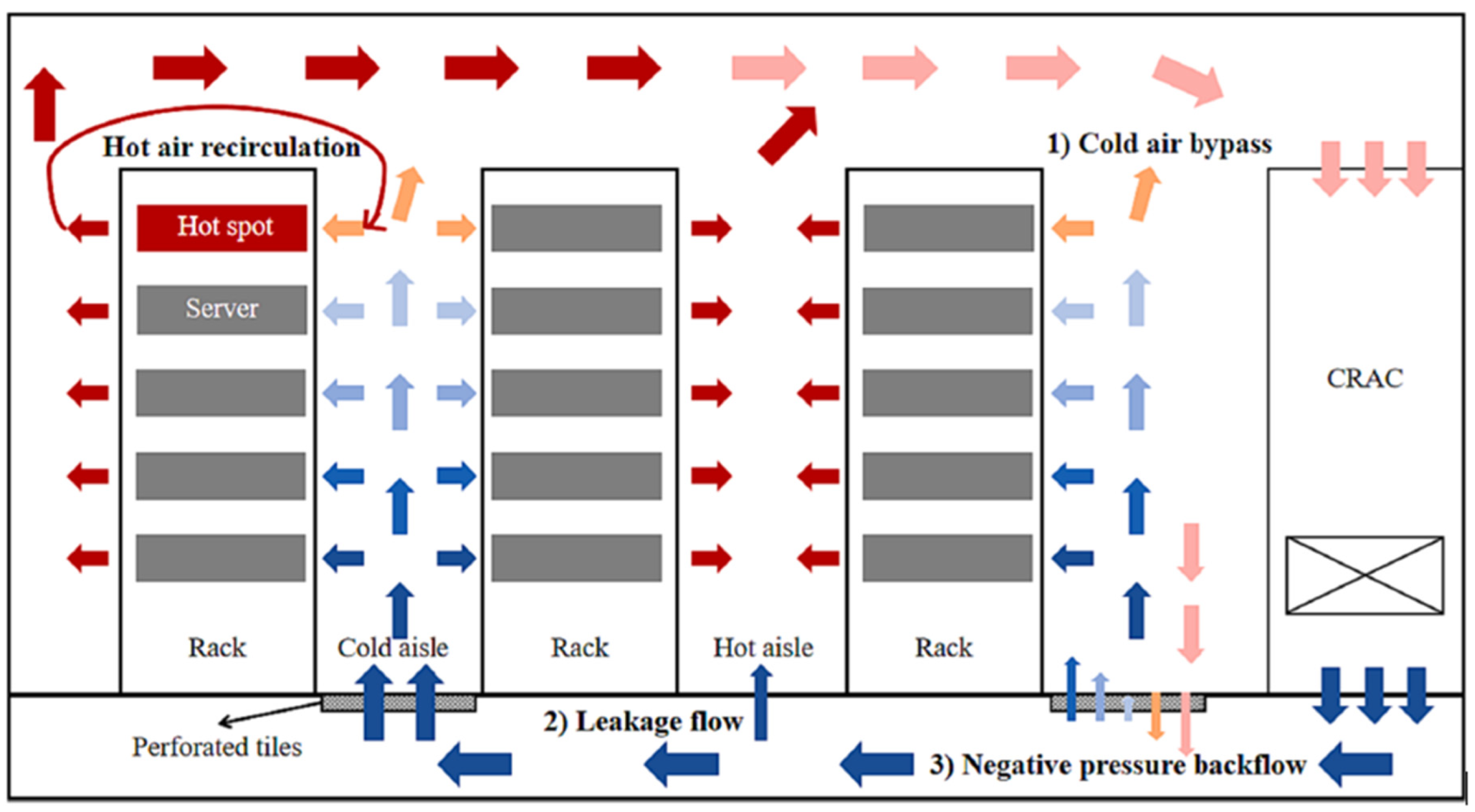

1. Introduction

2. Materials and Methods

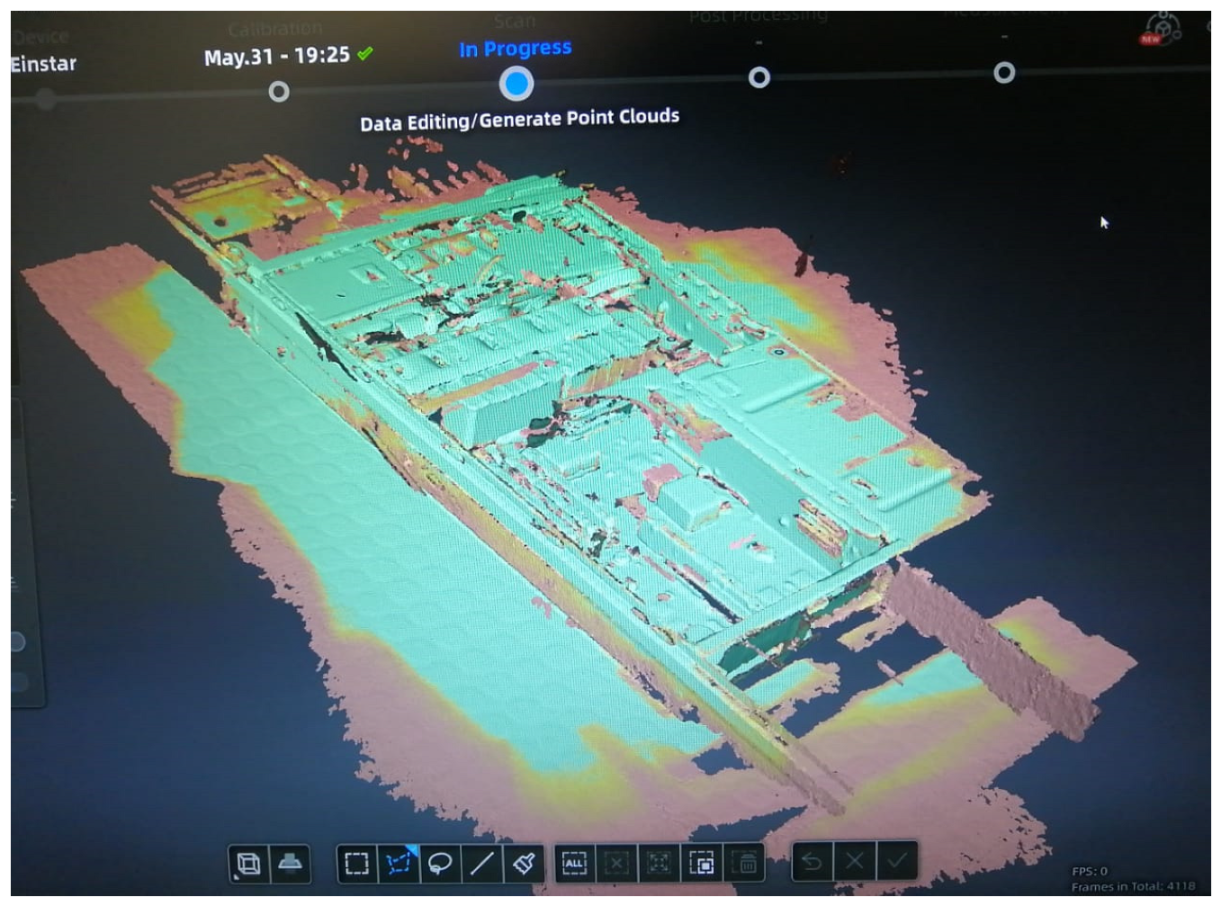

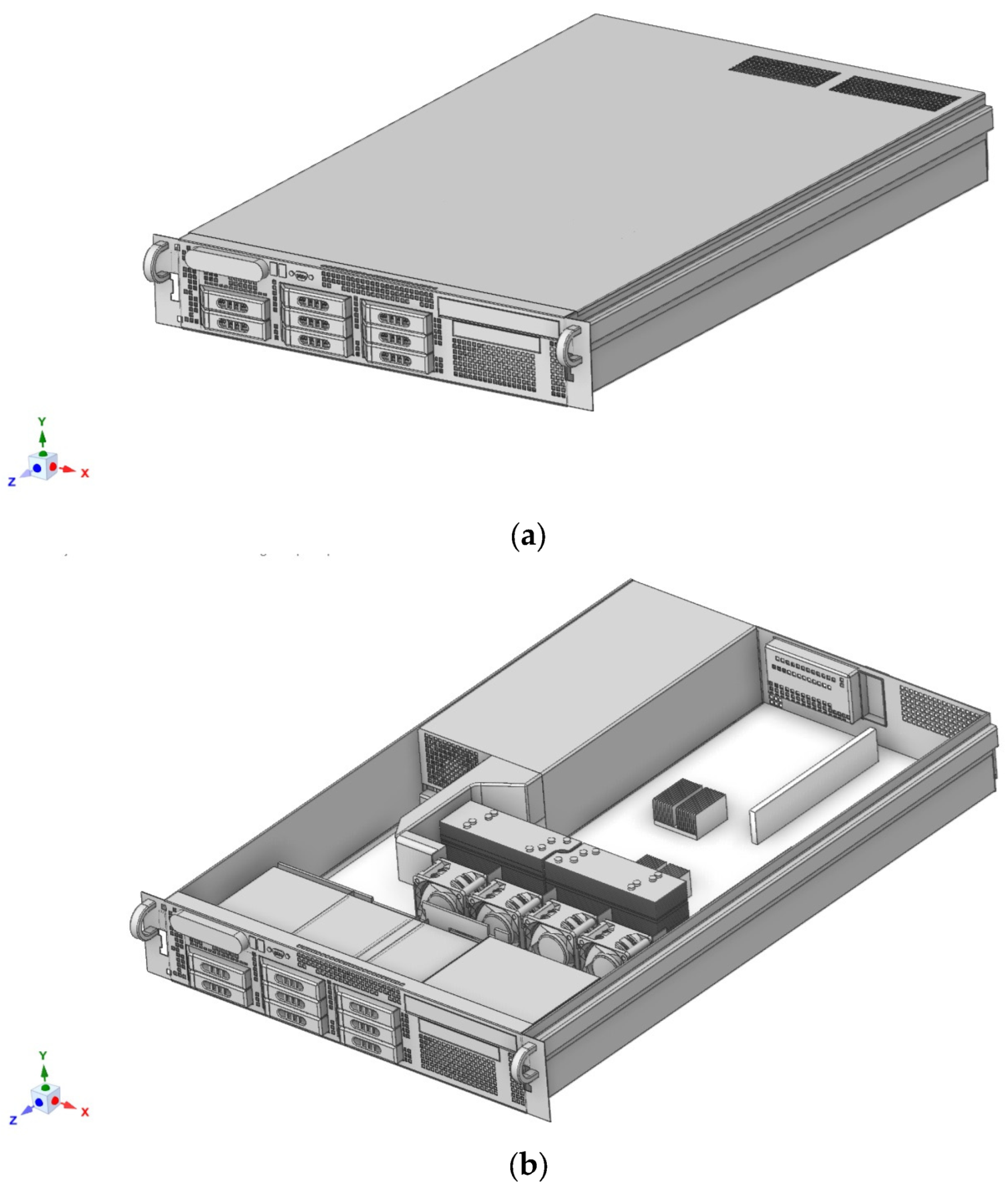

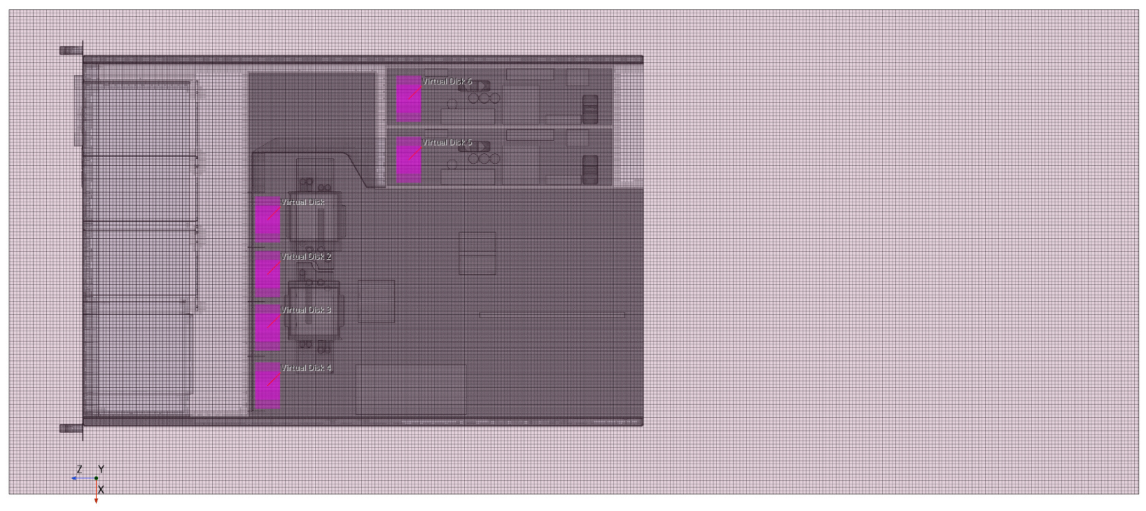

2.1. Geometrical Configuration

2.2. Fan Specification and Component Power

3. Numerical Analysis

3.1. Governing Equation and the Large-Eddy Simulation Turbulence Model

3.1.1. SGS Modeling

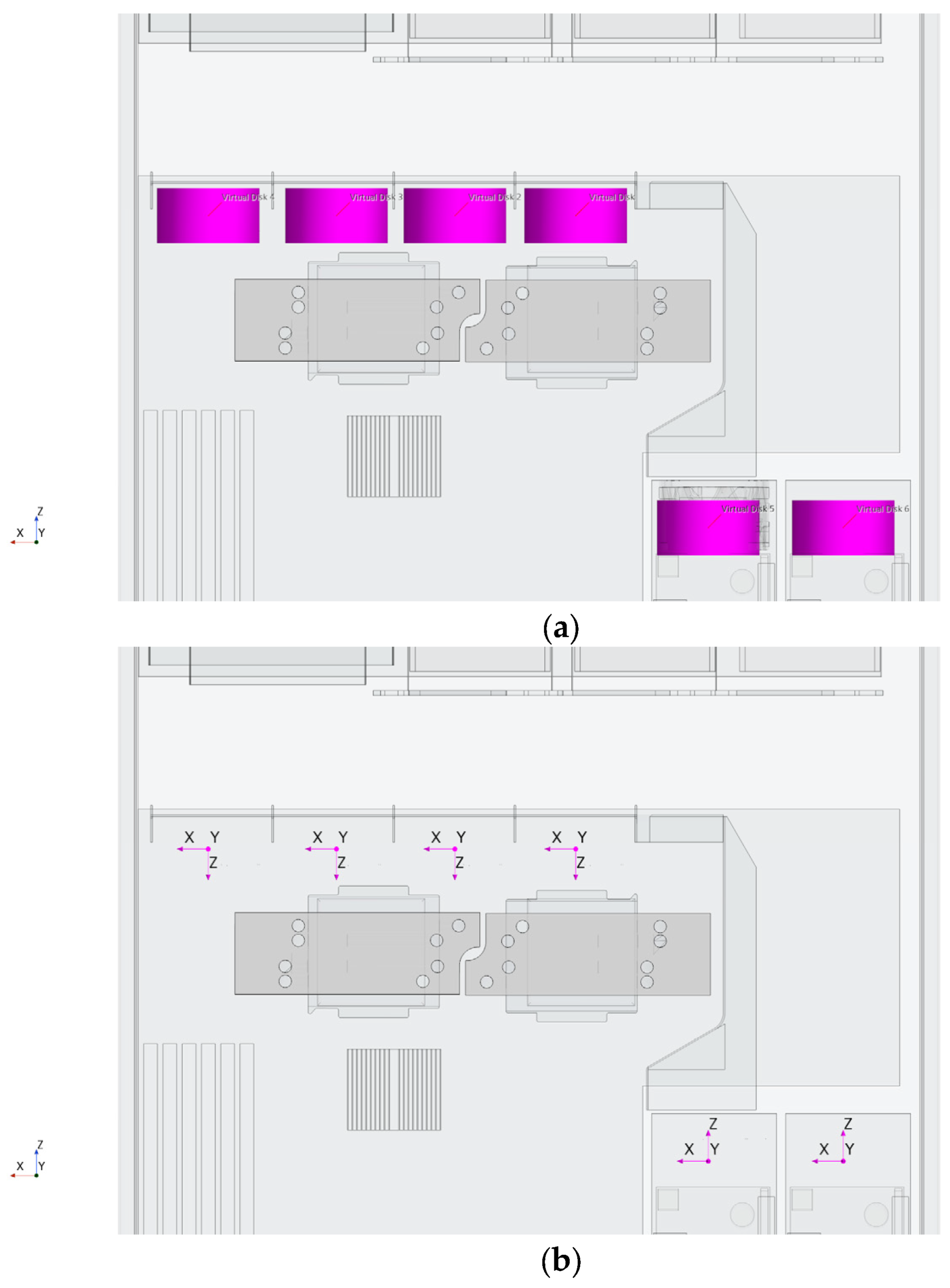

3.1.2. Virtual Disk Model: Actuator Disk Methods

3.2. Mesh Sensitivity Study

3.3. Model Validation

3.3.1. Boundary Conditions

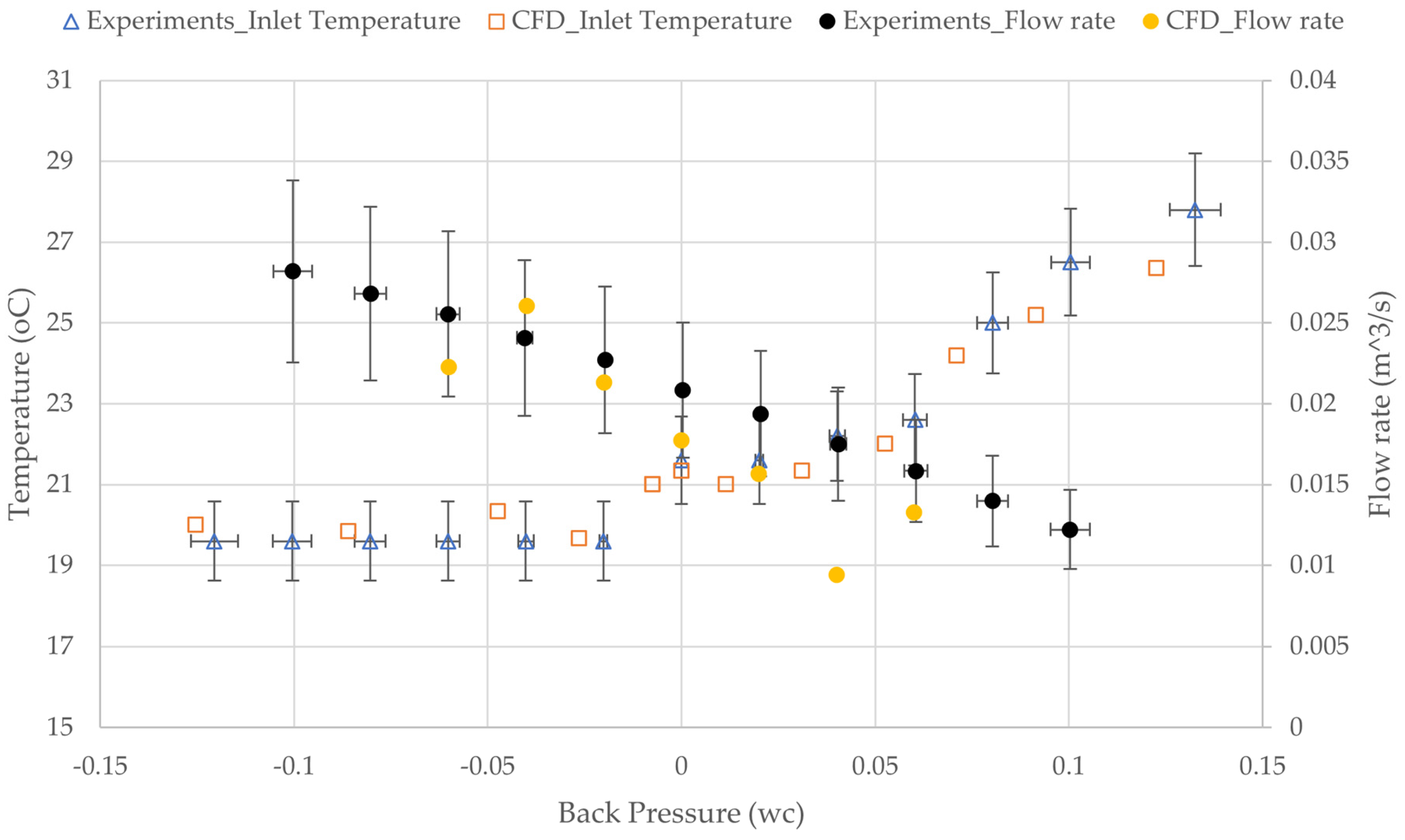

3.3.2. Model Validation Results

3.4. Solver and Numerical Parameters

4. Results and Discussion

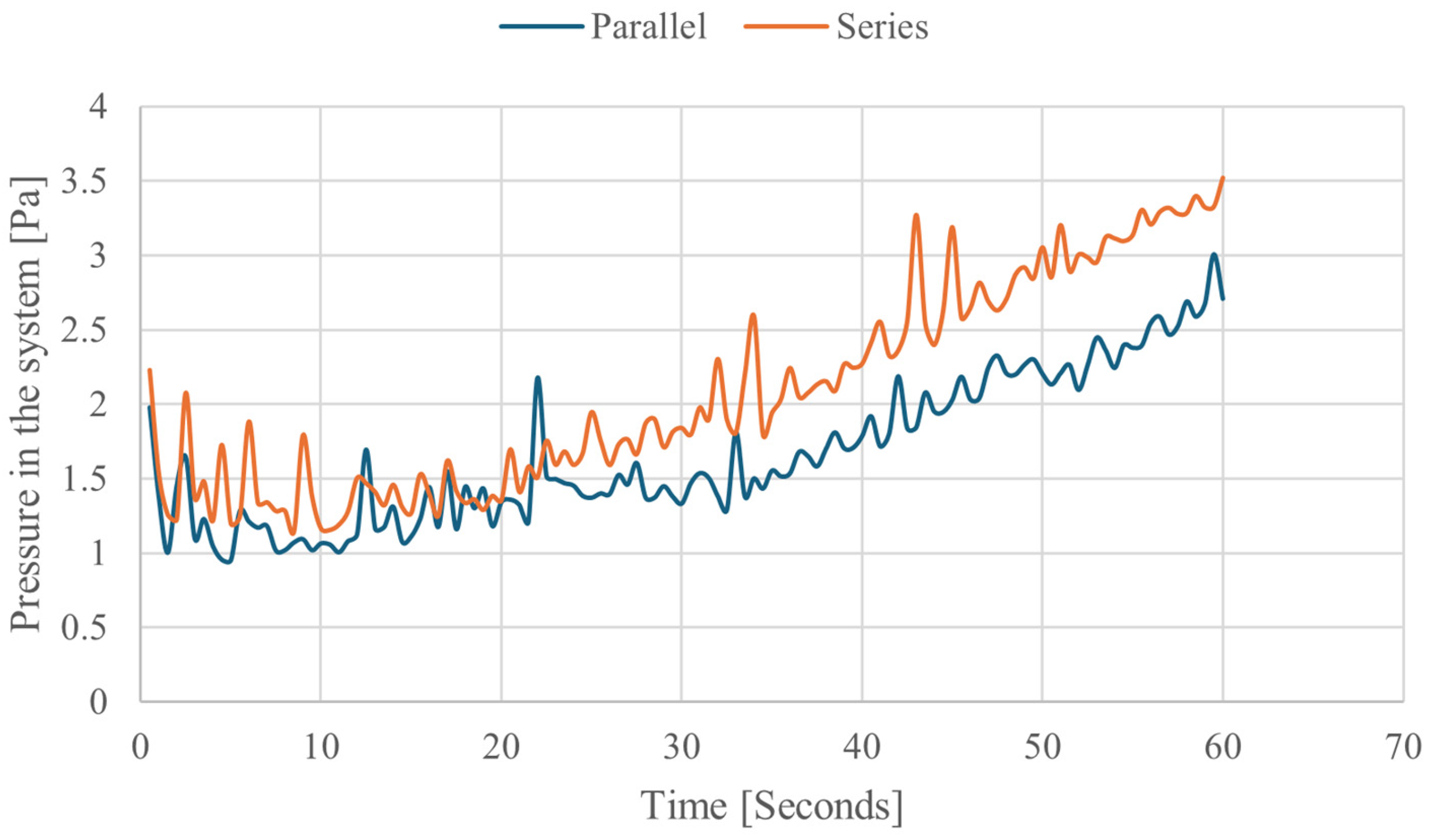

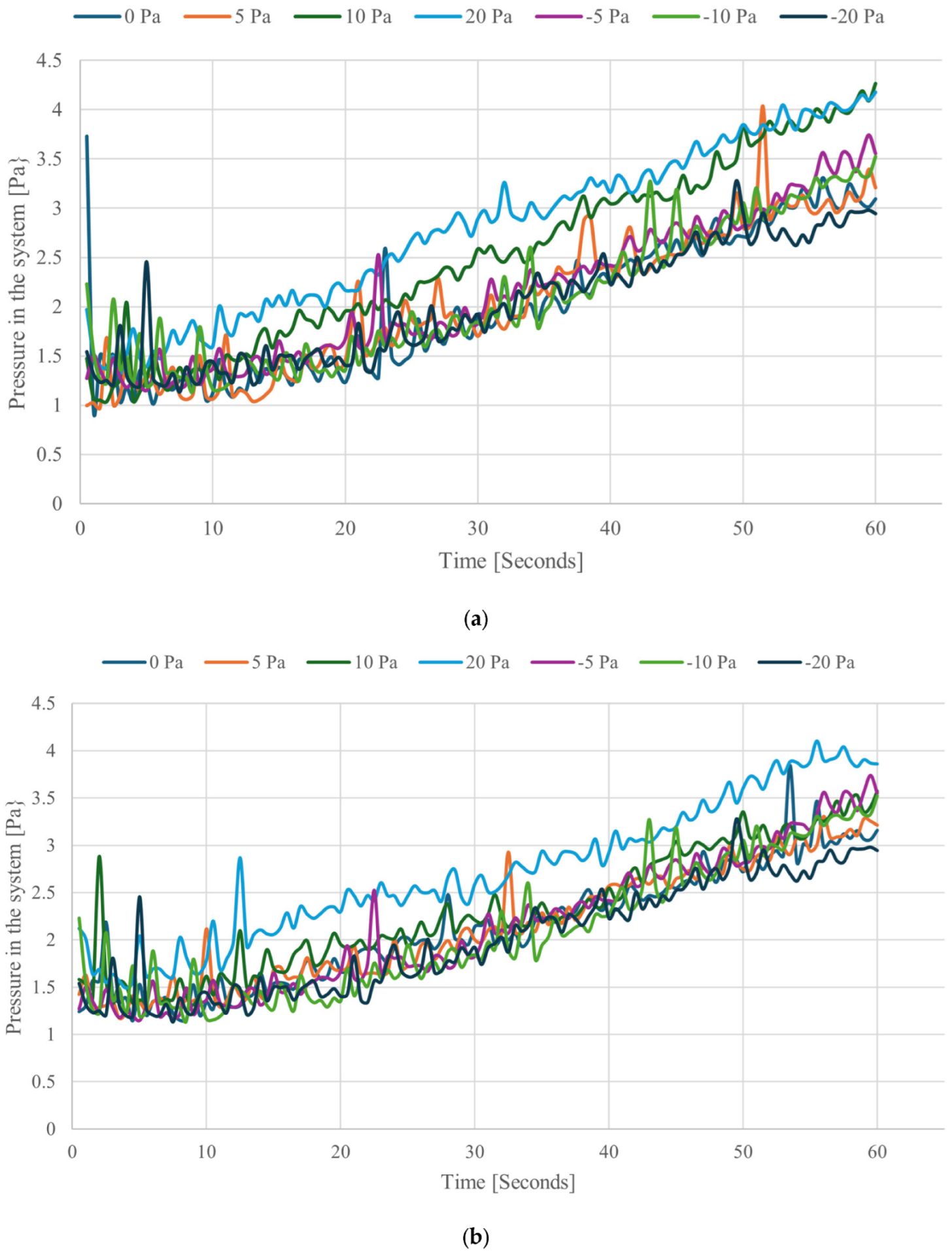

4.1. Pressure in the System

4.2. Inlet Air Temperature

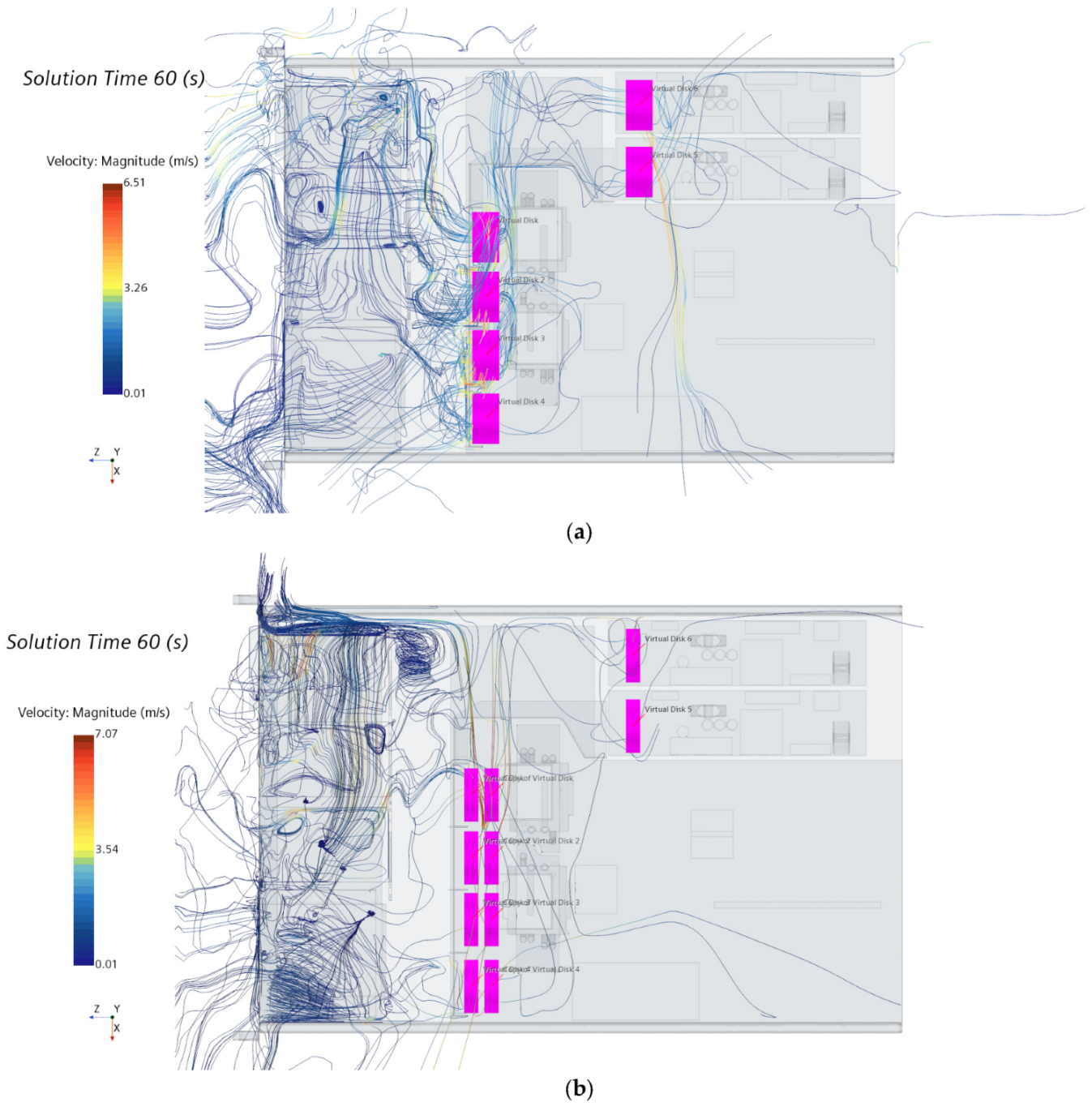

4.3. Airflow Distribution: Fan Configuration

5. Conclusions

- The CFD model was validated against experimental measurements of the server supply (inlet) air temperature. Under similar environmental and operational conditions, the CFD results differed from the experimental data by 3% on average. This study represents a significant step towards realistic modeling of complex server configurations.

- Representing the fans with a compact fan model (virtual disk model) did not compromise the accuracy of the simulation results: when validated against experimental data, the model agreed to within 3%. Using this feature also shortens the computation time.

- Increasing the server fan head does not resolve hot-air recirculation in the server; instead, it aggravates it. Relative to the parallel fan configuration, the server inlet air temperature with the series configuration was on average ~5% higher at back pressures from −20 Pa to −5 Pa, 19% higher at the free-delivery point (0 Pa), and on average 10% higher at back pressures of 5–20 Pa. The outlet air temperature decreased by 1%, signifying poor cooling effectiveness owing to high static pressure.

- This also highlights the importance of correctly sizing the server fans, which can significantly affect overall server thermal performance, even under low-load conditions. A positive net system pressure was found not to guarantee the elimination of hot-air recirculation. Server fan sizing and fan-set configuration should therefore be dictated by the required component temperatures, e.g., the CPU temperature.

- The current work serves as a basis for integrating liquid and air cooling into hybrid cooling systems for high-density racks in legacy data centers, where an in-row CDU (L2A) is used in conjunction with CRAH/CRAC units as the cooling source. Future work could include relocating the high-heat components towards the discharge section of the server and evaluating different types of cooling fluids for component cooling.

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation/Symbol | Definition |
|---|---|
| CPU | Central Processing Unit |
| CRAH | Computer Room Air Handler |
| CRAC | Computer Room Air Conditioning |
| CHPC | Center for High-Performance Computing |
| CDU | Coolant Distribution Unit |
| L2A | Liquid-to-Air |
| IT | Information Technology |
| ITE | Information Technology Equipment |
| CFD | Computational Fluid Dynamics |
| 3D | Three-Dimensional |
| Q | Flow Rate |
| RAM | Random-Access Memory |
| SAS | Serial Attached SCSI |
| STP | STEP file (Standard for the Exchange of Product model data) |
| CAD | Computer-Aided Design |
| TDP | Thermal Design Power |
| LES | Large-Eddy Simulation |
| | Density |
| | Filtered Velocity |
| μ | Molecular Viscosity |
| τ | Stress Tensor |
| | Filtered Pressure |
| | Eddy Viscosity |
| | Smagorinsky Constant |
| J | Advance Ratio |
| Kt | Thrust Coefficient |
| Kq | Torque Coefficient |
| GCI | Grid Convergence Index |
| ∆t | Time Step |
| ∆x | Grid Size |
| Rpm | Revolutions per Minute |
| Inch wc | Inches of Water Column |
| EADM | Extended Actuator Disk Model |
| REEADM | Reverse-Engineered Empirical Actuator Disk Model |
| IPMI IAT | Intelligent Platform Management Interface Inlet Air Temperature |


| Hardware | Minimum Specification |
|---|---|
| CPU | Intel Core i7-11800H and above |
| Graphics card | NVIDIA GTX 1060 and above |
| Graphics memory | 6 GB and above |
| RAM | 32 GB and above |
| USB | 2.0 and above |
| Operating system | Windows 10/Windows 11 (64-bit only) |

| Component | Quantity | TDP [W] |
|---|---|---|
| Intel Xeon E5345 processor | 2 | 80 |
| DIMM | 8 | 3 |
| Hard drives | 2 | 6 |
| Power supplies | 2 | 83 |
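The component table gives a rough upper bound on the server's internal heat load. The sketch below makes three assumptions. First, every component dissipates its full TDP. Second, a steady air-side energy balance applies, Q = ρ · V̇ · c_p · ΔT. Third, the air properties are assumed values. The airflow of 0.03 m³/s is hypothetical and is not a value reported in this study.

```python
# Component heat loads from the TDP table: name -> (quantity, TDP per unit in W).
COMPONENTS = {
    "E5345 processor": (2, 80.0),
    "DIMM": (8, 3.0),
    "Hard drive": (2, 6.0),
    "Power supply": (2, 83.0),
}

RHO_AIR = 1.18   # kg/m^3, assumed air density near room temperature
CP_AIR = 1006.0  # J/(kg K), assumed specific heat of air


def total_heat_load(components):
    """Sum quantity x TDP, treating each TDP as the heat actually dissipated (upper bound)."""
    return sum(qty * tdp for qty, tdp in components.values())


def air_temperature_rise(heat_w, flow_m3_s, rho=RHO_AIR, cp=CP_AIR):
    """Steady energy balance Q = rho * V_dot * cp * dT, solved for dT in K."""
    return heat_w / (rho * flow_m3_s * cp)


if __name__ == "__main__":
    q = total_heat_load(COMPONENTS)
    print(f"Total TDP: {q:.0f} W")
    # Hypothetical airflow of 0.03 m^3/s (about 64 CFM), chosen only for illustration.
    print(f"Air temperature rise: {air_temperature_rise(q, 0.03):.1f} K")
```

With these assumptions the table sums to 362 W. At the assumed airflow, this gives a bulk air temperature rise of roughly 10 K. A larger airflow lowers ΔT in inverse proportion.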

| J [-] | Kt [-] | Kq [-] | Eta [-] |
|---|---|---|---|
| 0.01 | 0.0614 | 0.1496 | 0.00019 |
| 0.3 | 0.0473 | 0.1314 | 0.013 |
| 0.625 | 0.0318 | 0.116 | 0.0048 |
| 1.55 | −0.0127 | −0.0521 | −0.017 |
| 2 | −0.0343 | −0.1503 | −0.03 |
| 2.6 | −0.063 | −0.2813 | −0.045 |
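In a virtual disk (body-force) fan model, a table of Kt and Kq against the advance ratio J is typically turned into thrust and torque through the standard propeller relations:

- J = V / (n D)
- T = Kt ρ n² D⁴
- Q = Kq ρ n² D⁵

Here n is the rotational speed in rev/s and D is the disk diameter. The sketch below interpolates the tabulated coefficients and applies those relations. It is an illustration under stated assumptions, not the solver's implementation:

- The interpolation is linear, and end values are held outside the tabulated J range. Both choices are assumptions.
- The fan diameter, speed, inflow velocity and air density are hypothetical.
- The Eta column is not used.

```python
from bisect import bisect_right

# Tabulated fan characteristic: advance ratio J, thrust coefficient Kt, torque coefficient Kq.
J_TABLE = [0.01, 0.3, 0.625, 1.55, 2.0, 2.6]
KT_TABLE = [0.0614, 0.0473, 0.0318, -0.0127, -0.0343, -0.063]
KQ_TABLE = [0.1496, 0.1314, 0.116, -0.0521, -0.1503, -0.2813]


def interp_clamped(x, xs, ys):
    """Piecewise-linear interpolation; returns the end values outside [xs[0], xs[-1]]."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)  # now xs[i-1] <= x < xs[i]
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def fan_loads(inflow_m_s, rpm, diameter_m, rho=1.18):
    """Return (J, thrust [N], torque [N m]) from the dimensionless coefficients."""
    n = rpm / 60.0                      # rotational speed in rev/s
    j = inflow_m_s / (n * diameter_m)   # advance ratio J = V / (n D)
    kt = interp_clamped(j, J_TABLE, KT_TABLE)
    kq = interp_clamped(j, J_TABLE, KQ_TABLE)
    thrust = kt * rho * n ** 2 * diameter_m ** 4
    torque = kq * rho * n ** 2 * diameter_m ** 5
    return j, thrust, torque


if __name__ == "__main__":
    # Hypothetical 60 mm fan at 6000 rpm with 2 m/s axial inflow (illustrative values only).
    j, thrust, torque = fan_loads(2.0, 6000.0, 0.06)
    print(f"J = {j:.3f}, thrust = {thrust:.2e} N, torque = {torque:.2e} N m")
```

The table's Kt and Kq both change sign between J = 0.625 and J = 1.55. At high inflow relative to tip speed, the disk therefore stops adding momentum and starts extracting it.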

| Mesh | Base Size [mm] | Cell Count [-] | Outlet Pressure [Pa] | Fan Thrust [N] | Change vs. Previous Mesh (Outlet Pressure) | Change vs. Previous Mesh (Fan Thrust) |
|---|---|---|---|---|---|---|
| 1 | 5.5 | 1.220578 × 10⁶ | 2.254 | 1.251 × 10⁻³ | 0.000% | 0.000% |
| 2 | 5 | 1.246704 × 10⁶ | 2.124 | 1.231 × 10⁻³ | 5.768% | 1.599% |
| 3 | 4.5 | 1.287264 × 10⁶ | 2.114 | 1.223 × 10⁻³ | 0.471% | 0.650% |
| 4 | 4 | 1.799871 × 10⁶ | 2.107 | 1.245 × 10⁻³ | 0.331% | −1.799% |
| 5 | 3.5 | 1.962441 × 10⁶ | 2.130 | 1.235 × 10⁻³ | −1.092% | 0.803% |
| 6 | 3 | 1.989244 × 10⁶ | 2.095 | 1.240 × 10⁻³ | 1.643% | −0.405% |
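The two percentage columns in the mesh-sensitivity table can be reproduced as the relative change of each quantity with respect to the preceding, coarser mesh: (previous − current) / previous × 100. The sketch below recomputes both columns from the tabulated outlet pressures and fan thrusts. It is a simple consistency check, not the Grid Convergence Index procedure listed in the nomenclature.

```python
# Mesh sensitivity data: (base size [mm], outlet pressure [Pa], fan thrust [N]).
MESHES = [
    (5.5, 2.254, 1.251e-3),
    (5.0, 2.124, 1.231e-3),
    (4.5, 2.114, 1.223e-3),
    (4.0, 2.107, 1.245e-3),
    (3.5, 2.130, 1.235e-3),
    (3.0, 2.095, 1.240e-3),
]


def relative_changes(values):
    """Percent change of each value relative to the preceding (coarser) mesh.

    The first mesh has no predecessor, so its change is reported as 0.0.
    """
    changes = [0.0]
    for prev, curr in zip(values, values[1:]):
        changes.append((prev - curr) / prev * 100.0)
    return changes


if __name__ == "__main__":
    pressures = [p for _, p, _ in MESHES]
    thrusts = [t for _, _, t in MESHES]
    for (size, _, _), dp, dt in zip(MESHES, relative_changes(pressures), relative_changes(thrusts)):
        print(f"{size:4.1f} mm  pressure {dp:7.3f}%  thrust {dt:7.3f}%")
```

Rounded to three decimals, the output matches the tabulated values. From the 4.5 mm mesh onwards, both quantities change by less than 2% between successive meshes.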

| Back Pressure [Pa] | Inlet Air Temp. (Parallel) [°C] | Outlet Air Temp. (Parallel) [°C] | Inlet Air Temp. (Series) [°C] | Outlet Air Temp. (Series) [°C] | IAT Difference, Series vs. Parallel |
|---|---|---|---|---|---|
| −20 | 20 | 30.97 | 20.7 | 30.97 | 3% |
| −10 | 19.8 | 30.68 | 20.4 | 30.68 | 3% |
| −5 | 20.3 | 31.15 | 21.85 | 31.14 | 7% |
| 0 | 19.7 | 31.53 | 24.19 | 31.52 | 19% |
| 5 | 21 | 31.1 | 22.08 | 31.3 | 5% |
| 10 | 21.4 | 30.67 | 25.18 | 30.67 | 15% |
| 20 | 24.2 | 30.71 | 26.98 | 33.06 | 10% |
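The inlet-air-temperature percentage column in the back-pressure table is reproduced, to the nearest percent, by normalizing the series-minus-parallel inlet temperature by the series value. That definition is inferred from the numbers rather than stated in the text, so treat it as an assumption. The sketch also averages the difference over a back-pressure range. The helper names are hypothetical.

```python
# Rows: back pressure [Pa], inlet air temperature parallel [°C], inlet air temperature series [°C].
ROWS = [
    (-20, 20.0, 20.7),
    (-10, 19.8, 20.4),
    (-5, 20.3, 21.85),
    (0, 19.7, 24.19),
    (5, 21.0, 22.08),
    (10, 21.4, 25.18),
    (20, 24.2, 26.98),
]


def iat_difference(parallel_c, series_c):
    """Percent difference normalized by the series value (definition inferred from the table)."""
    return (series_c - parallel_c) / series_c * 100.0


def mean_difference(rows, lo_pa, hi_pa):
    """Mean inlet-temperature difference over back pressures in [lo_pa, hi_pa]."""
    diffs = [iat_difference(par, ser) for bp, par, ser in rows if lo_pa <= bp <= hi_pa]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    for bp, par, ser in ROWS:
        print(f"{bp:4d} Pa: {iat_difference(par, ser):5.1f}%")
    print(f"mean, -20 to -5 Pa: {mean_difference(ROWS, -20, -5):.1f}%")
    print(f"mean,   5 to 20 Pa: {mean_difference(ROWS, 5, 20):.1f}%")
```

With this definition, the range means are about 4.5% for −20 to −5 Pa and about 10.1% for 5 to 20 Pa.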

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Madihlaba, N.B.; Kunene, T.J. Numerical Evaluation of Hot Air Recirculation in Server Rack. Appl. Sci. 2024, 14, 7904. https://doi.org/10.3390/app14177904
