Active Learning for Biomedical Article Classification with Bag of Words and FastText Embeddings

Abstract

1. Introduction

1.1. Motivation

1.2. Related Work

1.3. Contributions

- Several different active learning setups are examined, including not only different query selection strategies, but also different initialization methods and early stopping criteria.

- A collection of 15 datasets of varying size and class imbalance ratio, consisting of scientific article abstracts from biomedical systematic literature review (SLR) studies, is used for the experiments.

- The prediction quality is rigorously evaluated using k-fold cross-validation, with the area under the precision–recall curve (PR AUC) adopted as the quality measure. In addition, the potential workload reduction in an SLR process is estimated using the work saved over sampling (WSS) measure.
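The evaluation protocol named in the last contribution can be illustrated with a minimal sketch. It estimates the area under the precision–recall curve as average precision and builds class-stratified folds. Both choices, the function names, and the absence of shuffling and tie handling are assumptions made for illustration, not a description of the paper's implementation.

```python
def average_precision(labels, scores):
    """Estimate PR AUC as average precision.

    labels: 0/1 values (1 = relevant); scores: higher means more likely relevant.
    Ties between scores are broken by input order (an illustrative simplification).
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    n_pos = sum(label for _, label in ranked)
    if n_pos == 0:
        raise ValueError("at least one positive example is required")
    hits = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank  # precision at the rank of each positive
    return total / n_pos


def stratified_folds(labels, k):
    """Assign instance indices to k folds, round-robin within each class."""
    folds = [[] for _ in range(k)]
    for cls in (0, 1):
        members = [i for i, y in enumerate(labels) if y == cls]
        for j, i in enumerate(members):
            folds[j % k].append(i)
    return folds


ap = average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
folds = stratified_folds([1, 0, 0, 1, 0, 0], k=2)
```

In a cross-validation run, each fold would serve once as the test set, and the per-fold average precision values would be averaged.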

2. Materials and Methods

2.1. Text Representation Methods

2.1.1. Bag of Words

2.1.2. FastText

2.2. Classification Algorithms

2.2.1. Support Vector Machines

2.2.2. Random Forest

2.3. Active Learning

2.3.1. Scenario

Algorithm 1. Active learning scenario.
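A generic pool-based active learning loop of the kind that Algorithm 1 refers to can be sketched as follows. This is an illustration under stated assumptions, not a reconstruction of the paper's listing: the function `active_learning`, its parameters (`train`, `select`, `oracle`, `budget`, `should_stop`), and the one-dimensional toy classifier are all hypothetical.

```python
def active_learning(pool, oracle, train, select, initial,
                    batch_size=1, budget=None, should_stop=None):
    """Pool-based active learning: train, query, label, retrain.

    pool: list of instances; oracle(i): true label of pool[i];
    train(xs, ys): returns a model; select(model, xs, n): returns positions in xs;
    initial: indices of the initial training set.
    """
    labeled = {i: oracle(i) for i in initial}
    unlabeled = [i for i in range(len(pool)) if i not in labeled]

    def fit():
        idx = list(labeled)
        return train([pool[i] for i in idx], [labeled[i] for i in idx])

    model = fit()
    queries = 0
    while unlabeled and (budget is None or queries < budget):
        candidates = [pool[i] for i in unlabeled]
        if should_stop is not None and should_stop(model, candidates):
            break  # early stopping criterion satisfied
        picked = select(model, candidates, batch_size)
        for pos in sorted(picked, reverse=True):  # pop from the end first
            i = unlabeled.pop(pos)
            labeled[i] = oracle(i)
            queries += 1
        model = fit()
    return model, labeled


# Toy setup (hypothetical): 1-D points, model = midpoint between class means.
def train_threshold(xs, ys):
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    if not pos or not neg:
        return sum(xs) / len(xs)
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def select_closest(threshold, xs, n):
    """Query the points closest to the current decision threshold."""
    order = sorted(range(len(xs)), key=lambda j: abs(xs[j] - threshold))
    return order[:n]


pool = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
model, labeled = active_learning(
    pool, oracle=lambda i: int(pool[i] > 0.5),
    train=train_threshold, select=select_closest,
    initial=[0, 7], budget=2)
```

With a budget of two queries, the toy run labels the two points nearest the class boundary (0.4 and 0.6) in addition to the initial pair.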

2.3.2. Initial Training Set Selection

2.3.3. Query Selection

2.3.4. Early Stopping Criteria

2.3.5. Confidence-Based Stopping

2.3.6. Stability-Based Stopping

3. Results

3.1. Datasets

3.2. Algorithm Implementations and Setup

3.2.1. Text Representation Methods

Bag of Words (BOW)

FastText (FT)

3.2.2. Classification Algorithms

3.2.3. Active Learning

3.3. Predictive Performance Evaluation

3.3.1. Classification Quality

3.3.2. SLR Workload Reduction

3.4. Active Learning Predictive Performance: Aggregated

- The random forest algorithm with the bag of words representation achieves the best overall performance regardless of the initialization and query selection methods and the percentage of data used.

- The random forest and SVM algorithms with the FastText representation perform nearly identically to each other and just marginally worse than RF-BOW.

- For the SVM algorithm, the bag of words representation is clearly inferior to the FastText representation with respect to passive learning performance and, to an even greater extent, active learning effectiveness. This may reflect a negative effect of its higher dimensionality, which is particularly visible with small training sets.

- The initialization method has little impact on the predictive performance except for the very earliest stages of learning when cluster initialization sometimes gives a minor improvement.

- The uncertainty, minority, and threshold sampling strategies are similarly effective. For the most successful algorithm–representation configurations (RF-BOW, RF-FT, and SVM-FT), they make it possible to approach the passive learning level after using about 50% of the data, and even with 25–33% of the data they are not far behind.

- Random sampling is less effective than the three best query selection strategies except for the SVM algorithm with the bag of words representation, which performs worse than the other algorithm–representation combinations regardless of the query selection strategy.

- Diversity sampling performs worse than random sampling, yielding a lower level of prediction quality at nearly all stages of active learning.
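Three of the query selection strategies compared above can be sketched from predicted probabilities of the relevant class alone. The definitions used here are common textbook forms and are assumptions, not necessarily the exact variants evaluated in the paper: uncertainty sampling picks instances with probability closest to 0.5, minority sampling picks instances most likely to belong to the relevant (minority) class, and random sampling ignores the model. Threshold and diversity sampling are omitted because they depend on details not given in this section.

```python
import random


def uncertainty_sampling(probs, n):
    """Positions of the n instances whose probability is closest to 0.5."""
    order = sorted(range(len(probs)), key=lambda i: abs(probs[i] - 0.5))
    return order[:n]


def minority_sampling(probs, n):
    """Positions of the n instances most likely to be relevant."""
    order = sorted(range(len(probs)), key=lambda i: -probs[i])
    return order[:n]


def random_sampling(probs, n, seed=None):
    """Positions of n instances drawn uniformly without replacement."""
    rng = random.Random(seed)
    return rng.sample(range(len(probs)), min(n, len(probs)))


# Hypothetical predicted probabilities for five unlabeled articles.
probs = [0.05, 0.48, 0.9, 0.6, 0.2]
by_uncertainty = uncertainty_sampling(probs, 2)
by_minority = minority_sampling(probs, 2)
by_random = random_sampling(probs, 2, seed=0)
```

Each function returns positions within the unlabeled pool, so any of them could be plugged into an active learning loop as the query selection step.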

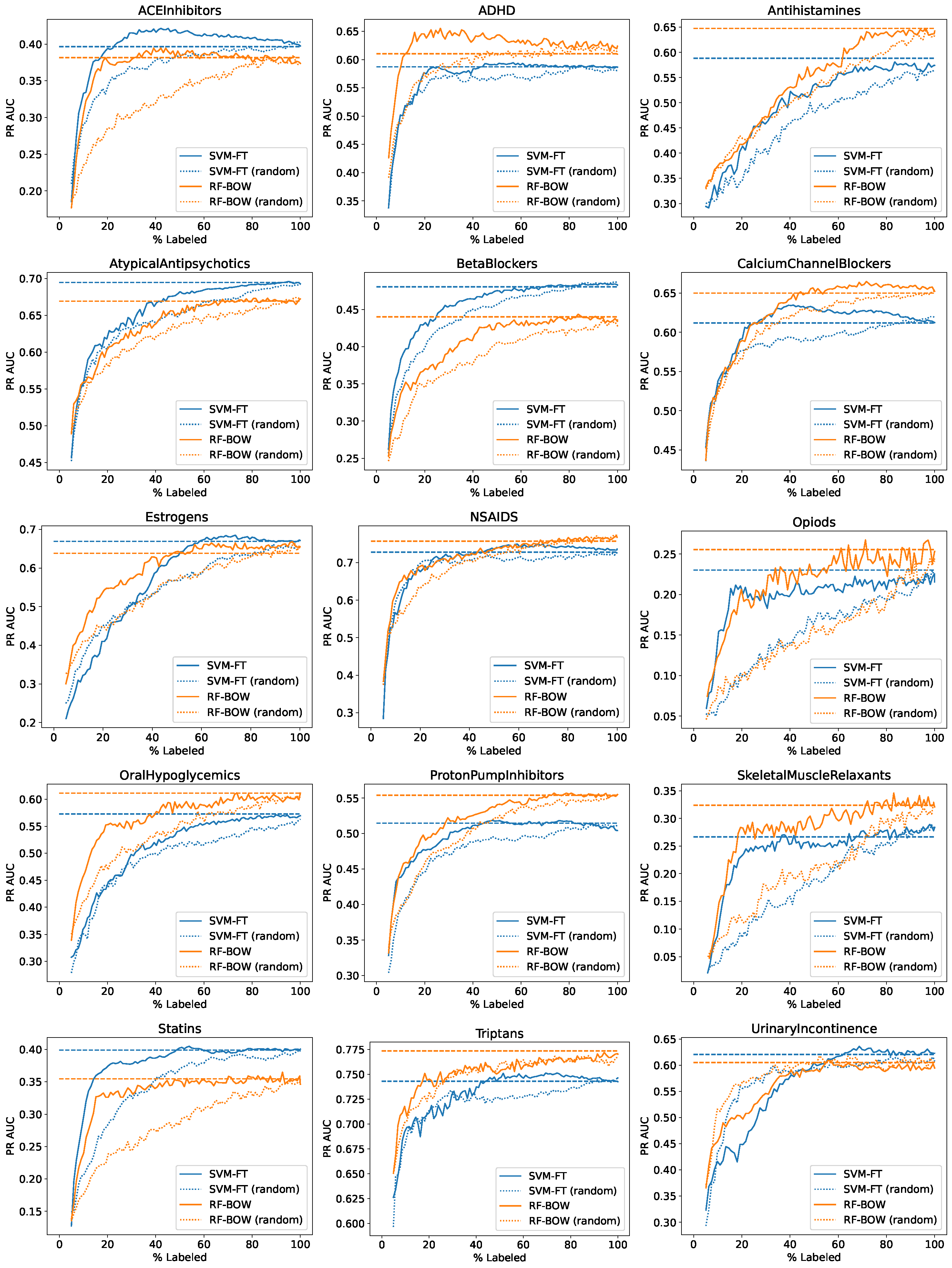

3.5. Active Learning Predictive Performance: Detailed

- The predictive performance varies heavily across datasets, with PR AUC values ranging from about to more than .

- For most datasets, the passive learning performance level is approached or exceeded after labeling no more than 50% of available data, with some exceptions observed mainly for RF-BOW, which tends to benefit from additional training data more often than SVM-FT.

- RF-BOW outperforms SVM-FT for datasets, SVM-FT outperforms RF-BOW for four datasets, and they perform similarly to each other for datasets.

- Random sampling is inferior to uncertainty sampling for nearly all datasets, sometimes quite substantially (e.g., for Opiods, SkeletalMuscleRelaxants, or Statins).

3.6. Early Stopping Criteria: Aggregated

- The performance of early stopping criteria heavily depends on the classification algorithm: the same stopping criteria tend to stop active learning with SVM earlier than with RF, with the biggest differences observed for stability-based criteria.

- Differences between the early stopping criteria performance for the same classification algorithm with different text representations are less pronounced, although stability-based criteria stop SVM with the FastText representation considerably earlier than with bag of words.

- The most universal early stopping criterion, providing roughly comparable performance for all algorithm–representation configurations, is the confidence criterion with a – threshold.

- Stability-based stopping may work well, but it definitely requires quite different thresholds for the SVM and random forest algorithms (above for the former, between and for the latter).
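The two families of stopping criteria can be illustrated with a minimal sketch. It assumes that confidence is the mean of max(p, 1 − p) over the unlabeled pool and that stability is the fraction of pool predictions that agree between two consecutive models. Both definitions are assumptions made for illustration; the paper may use different measures (for example, a minimum confidence or a kappa agreement statistic).

```python
def mean_confidence(probs):
    """Mean confidence of binary predictions, where confidence = max(p, 1 - p)."""
    return sum(max(p, 1.0 - p) for p in probs) / len(probs)


def confidence_stop(probs, threshold):
    """Stop when the model is confident enough about the remaining pool."""
    return mean_confidence(probs) >= threshold


def agreement(prev_labels, curr_labels):
    """Fraction of instances labeled identically by two consecutive models."""
    same = sum(a == b for a, b in zip(prev_labels, curr_labels))
    return same / len(curr_labels)


def stability_stop(prev_labels, curr_labels, threshold):
    """Stop when predictions have stabilized between iterations."""
    return agreement(prev_labels, curr_labels) >= threshold


# Hypothetical pool predictions from two consecutive iterations.
pool_probs = [0.9, 0.1, 0.8, 0.3]
conf = mean_confidence(pool_probs)
agree = agreement([1, 0, 0, 1], [1, 0, 1, 1])
```

Under this definition, confidence can never fall below 0.5, so a confidence threshold of 0.5 would stop learning at the first check.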

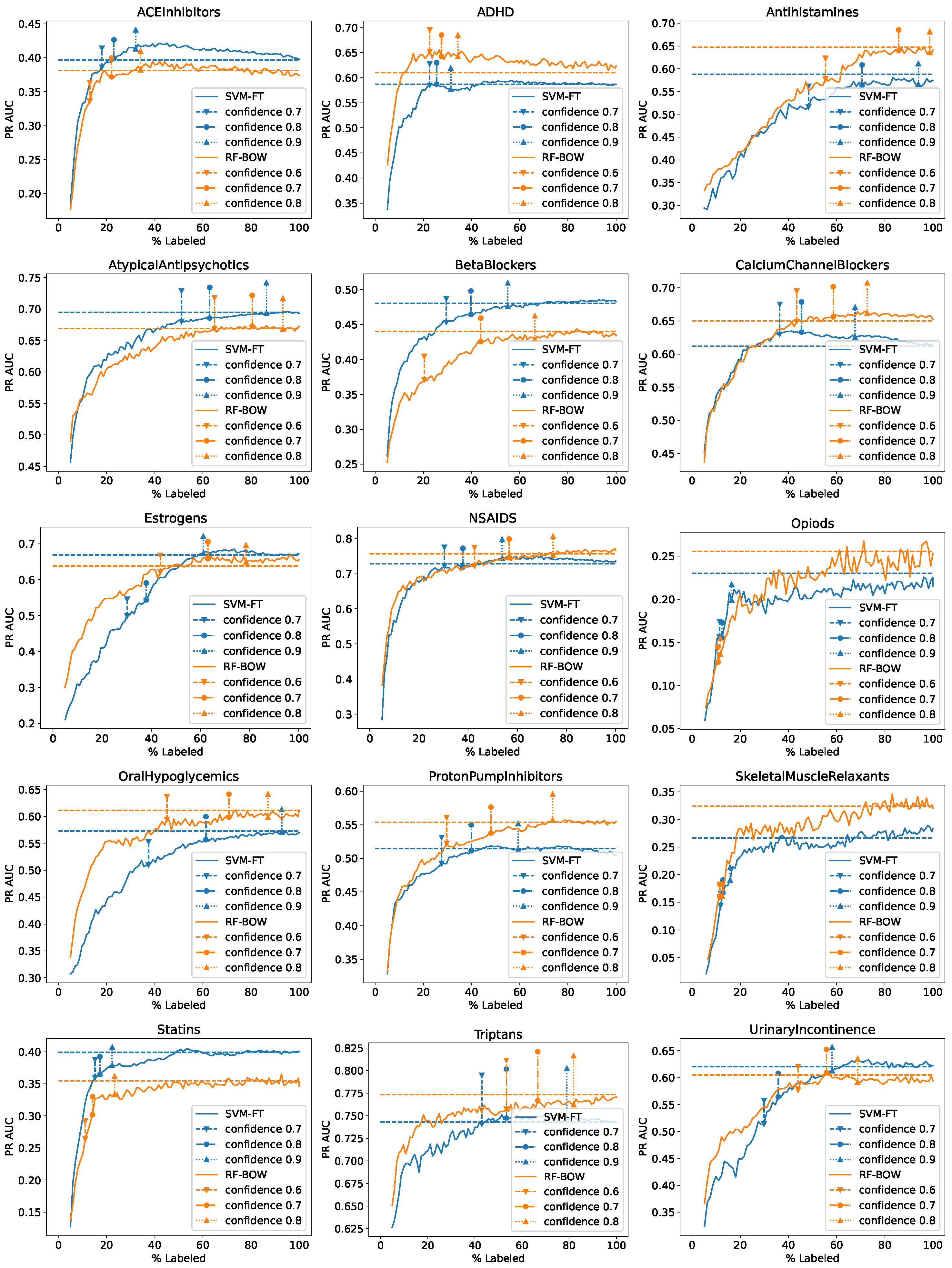

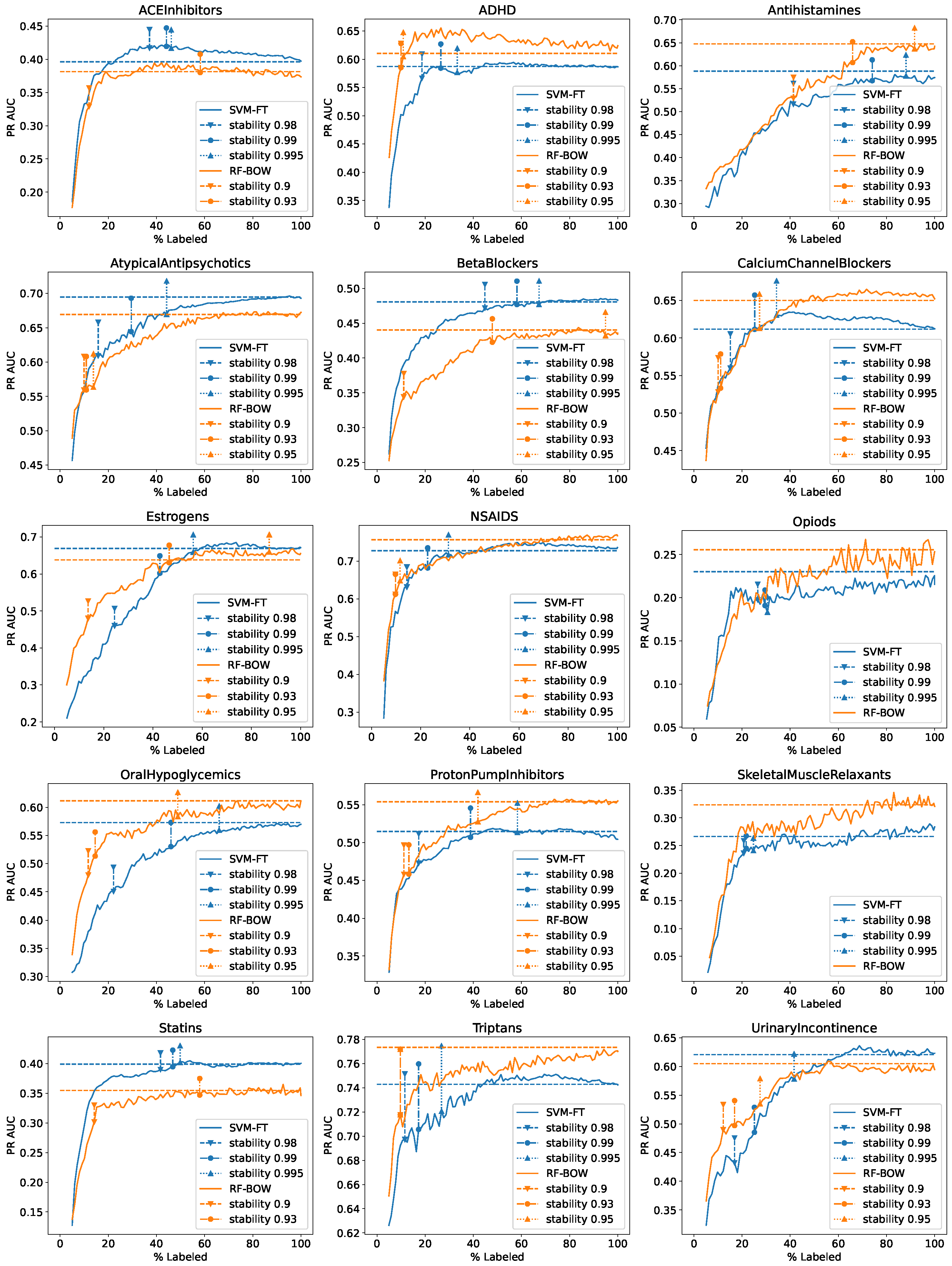

3.7. Early Stopping Criteria: Detailed

- No single stopping criterion and threshold parameter value works best for all datasets.

- The confidence-based criterion with a fixed threshold value of for SVM-FT or 0.7 for RF-BOW provides quite reliable stopping signals for most datasets, with Opiods and SkeletalMuscleRelaxants being notable exceptions.

- The stability-based criterion produces mixed results, and while for SVM-FT, it delivers useful stopping signals for most datasets with the threshold, it is hard to recommend a similarly reasonable threshold value for RF-BOW.

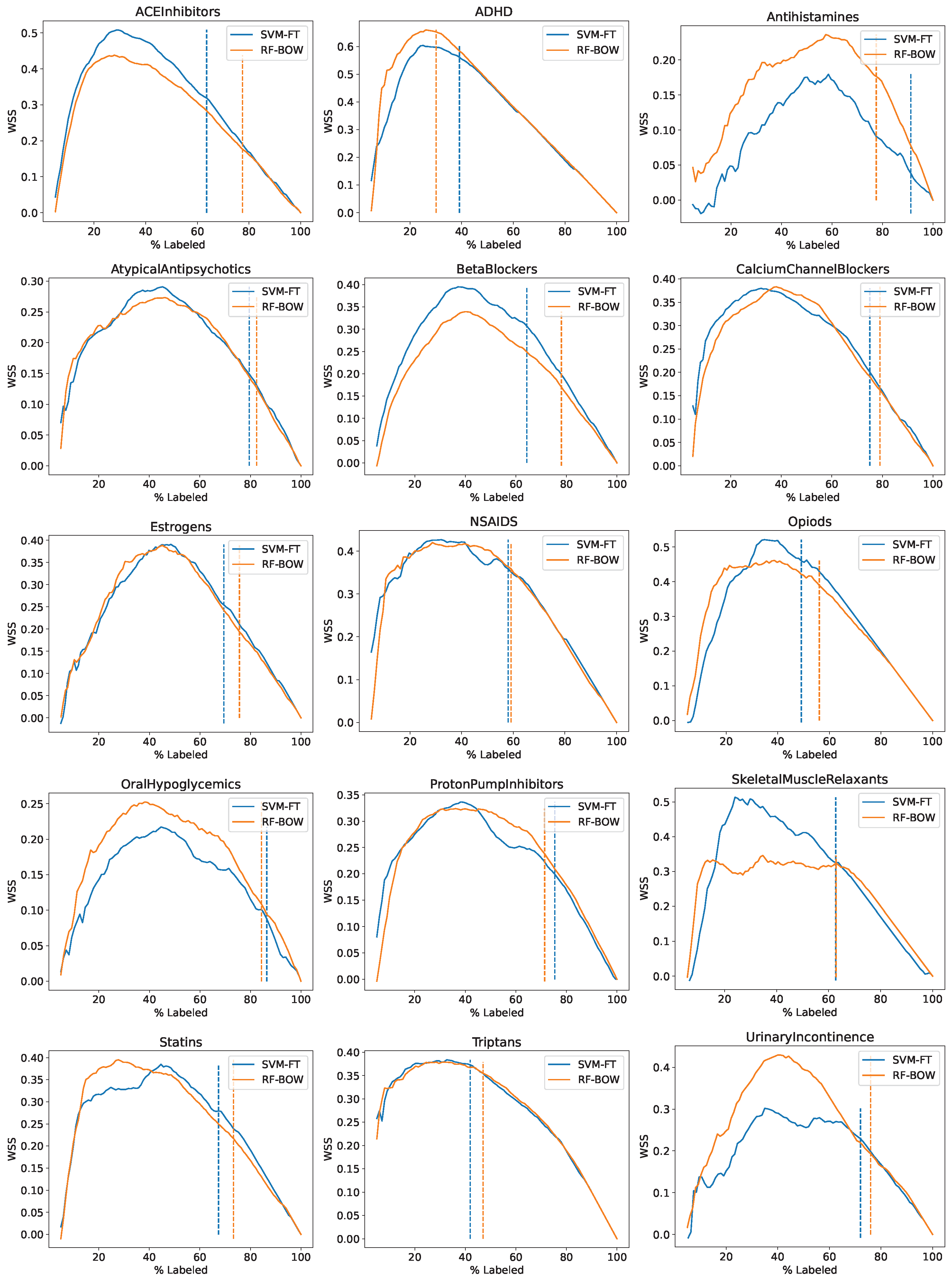

3.8. SLR Workload Reduction

- The highest workload reduction is typically achieved after labeling 20–40% of articles and ranges from about to about .

- The recall level of 95% is typically achieved after labeling 60–80% of articles, which corresponds to WSS@95% values between and for SVM-FT ( on average) or between and for RF-BOW ( on average).

- With respect to peak WSS values, RF-BOW outperforms SVM-FT for datasets, SVM-FT outperforms RF-BOW for datasets, and they perform similarly to each other for eight datasets.

- With respect to WSS@95%, SVM-FT outperforms RF-BOW for datasets, RF-BOW outperforms SVM-FT for datasets, and they perform similarly to each other (within a margin) for datasets.
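The WSS values discussed above follow from the usual definition of work saved over sampling: the fraction of articles that need not be screened, minus the fraction of relevant articles that is allowed to be missed. A minimal sketch is given below. The function names and the representation of a screening run as a list of 0/1 labels in the order articles were screened are assumptions for illustration.

```python
import math


def wss(n_screened, n_total, recall):
    """Work saved over sampling: unscreened fraction minus (1 - recall)."""
    return (n_total - n_screened) / n_total - (1.0 - recall)


def wss_at_recall(screening_order, target=0.95):
    """WSS at the first point where the target recall is reached.

    screening_order: 0/1 labels (1 = relevant) in the order of screening.
    """
    n_total = len(screening_order)
    n_relevant = sum(screening_order)
    if n_relevant == 0:
        raise ValueError("at least one relevant article is required")
    # Small epsilon guards against floating-point noise in target * n_relevant.
    needed = math.ceil(target * n_relevant - 1e-9)
    found = 0
    for n_screened, label in enumerate(screening_order, start=1):
        found += label
        if found >= needed:
            return wss(n_screened, n_total, target)
    return wss(n_total, n_total, target)  # not reached unless target > 1


# Hypothetical run: 10 articles, 4 relevant, screened in this order.
order = [1, 1, 0, 1, 0, 0, 0, 1, 0, 0]
wss_75 = wss_at_recall(order, target=0.75)
wss_100 = wss_at_recall(order, target=1.0)
```

In the toy run, 75% recall is reached after 4 of 10 articles, giving 0.6 − 0.25 = 0.35, whereas full recall requires 8 articles and yields 0.2.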

3.9. Discussion

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- McCallum, A.; Nigam, K. A Comparison of Event Models for Naive Bayes Text Classification. In Proceedings of the AAAI/ICML-98 Workshop on Learning for Text Categorization, Menlo Park, CA, USA, 26–27 July 1998. [Google Scholar]

- Joachims, T. Text Categorization with Support Vector Machines: Learning with Many Relevant Features. In Proceedings of the Tenth European Conference on Machine Learning (ECML-1998), Berlin, Germany, 21–23 April 1998. [Google Scholar]

- Radovanović, M.; Ivanović, M. Text Mining: Approaches and Applications. Novi Sad J. Math. 2008, 38, 227–234. [Google Scholar]

- Rousseau, F.; Kiagias, E.; Vazirgiannis, M. Text Categorization as a Graph Classification Problem. In Proceedings of the Fifty-Third Annual Meeting of the Association for Computational Linguistics and the Sixth International Joint Conference on Natural Language Processing (ACL-IJCNLP-2015), Beijing, China, 26–31 July 2015. [Google Scholar]

- Dařena, F.; Žižka, J. Ensembles of Classifiers for Parallel Categorization of Large Number of Text Documents Expressing Opinions. J. Appl. Econ. Sci. 2017, 12, 25–35. [Google Scholar]

- Zymkowski, T.; Szymański, J.; Sobecki, A.; Drozda, P.; Szałapak, K.; Komar-Komarowski, K.; Scherer, R. Short Texts Representations for Legal Domain Classification. In Proceedings of the Twenty-First International Conference on Artificial Intelligence and Soft Computing (ICAISC-2022); Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar]

- García Adeva, J.J.; Pikatza Atxaa, J.M.; Ubeda Carrillo, M.; Ansuategi Zengotitabengoa, E. Automatic Text Classification to Support Systematic Reviews in Medicine. Expert Syst. Appl. 2014, 41, 1498–1508. [Google Scholar] [CrossRef]

- van den Bulk, L.M.; Bouzembrak, Y.; Gavai, A.; Liu, N.; van den Heuvel, L.J.; Marvin, H.J.P. Automatic Classification of Literature in Systematic Reviews on Food Safety Using Machine Learning. Curr. Res. Food Sci. 2022, 5, 84–95. [Google Scholar] [CrossRef]

- Cohn, D.; Atlas, L.; Ladner, R. Improving Generalization with Active Learning. Mach. Learn. 1994, 15, 201–221. [Google Scholar] [CrossRef]

- Tharwat, A.; Schenck, W. A Survey on Active Learning: State-of-the-Art, Practical Challenges and Research Directions. Mathematics 2023, 11, 820. [Google Scholar] [CrossRef]

- Borisov, V.; Leemann, T.; Seßler, K.; Haug, J.; Pawelczyk, M.; Kasneci, G. Deep Neural Networks and Tabular Data: A Survey. arXiv 2022, arXiv:2110.01889. [Google Scholar] [CrossRef] [PubMed]

- Ren, P.; Xiao, Y.; Chang, X.; Huang, P.Y.; Li, Z.; Gupta, B.B.; Chen, X.; Wang, X. A Survey of Deep Active Learning. ACM Comput. Surv. 2020, 54, 180. [Google Scholar] [CrossRef]

- Cichosz, P. Bag of Words and Embedding Text Representation Methods for Medical Article Classification. Int. J. Appl. Math. Comput. Sci. 2023, 33, 603–621. [Google Scholar] [CrossRef]

- Tong, S.; Koller, D. Support Vector Machine Active Learning with Applications to Text Classification. J. Mach. Learn. Res. 2001, 2, 45–66. [Google Scholar]

- Zhu, J.; Wang, H.; Yao, T.; Tsou, B.K. Active Learning with Sampling by Uncertainty and Density for Word Sense Disambiguation and Text Classification. In Proceedings of the Twenty-Second International Conference on Computational Linguistics (COLING-2008), Stroudsburg, PA, USA, 18–22 August 2008. [Google Scholar]

- Miwa, M.; Thomas, J.; O’Mara-Eves, A.; Ananiadou, S. Reducing Systematic Review Workload through Certainty-Based Screening. J. Biomed. Inform. 2014, 51, 242–253. [Google Scholar] [CrossRef] [PubMed]

- Goudjil, M.; Koudil, M.; Bedda, M.; Ghoggali, N. A Novel Active Learning Method Using SVM for Text Classification. Int. J. Autom. Comput. 2018, 15, 290–298. [Google Scholar] [CrossRef]

- Flores, C.A.; Figueroa, R.L.; Pezoa, J.E. Active Learning for Biomedical Text Classification Based on Automatically Generated Regular Expressions. IEEE Access 2021, 9, 38767–38777. [Google Scholar] [CrossRef]

- van de Schoot, R.; de Bruin, J.; Schram, R.; Zahedi, P.; de Boer, J.; Weijdema, F.; Kramer, B.; Huijts, M.; Hoogerwerf, M.; Ferdinands, G.; et al. An Open Source Machine Learning Framework for Efficient and Transparent Systematic Reviews. Nat. Mach. Intell. 2021, 3, 125–133. [Google Scholar] [CrossRef]

- Miller, B.; Linder, F.; Mebane, W.R. Active Learning Approaches for Labeling Text: Review and Assessment of the Performance of Active Learning Approaches. Political Anal. 2020, 28, 532–551. [Google Scholar] [CrossRef]

- Jacobs, P.F.; Maillette de Buy Wenniger, G.; Wiering, M.; Schomaker, L. Active Learning for Reducing Labeling Effort in Text Classification Tasks. In Artificial Intelligence and Machine Learning: Proceedings of the Thirty-Third Benelux Conference on Artificial Intelligence (BNAIC/Benelearn-2021), Cham, Switzerland, 10–12 November 2021. [Google Scholar]

- Ferdinands, G.; Schram, R.; de Bruin, J.; Bagheri, A.; Oberski, D.L.; Tummers, L.; Teijema, J.J.; van de Schoot, R. Performance of Active Learning Models for Screening Prioritization in Systematic Reviews: A Simulation Study into the Average Time to Discover Relevant Record. Syst. Rev. 2023, 12, 100. [Google Scholar] [CrossRef]

- Teijema, J.J.; Hofstee, L.; Brouwer, M.; de Bruin, J.; Ferdinands, G.; de Boer, J.; Vizan, P.; van den Brand, S.; Bockting, C.; van de Schoot, R.; et al. Active Learning-Based Systematic Reviewing Using Switching Classification Models: The Case of the Onset, Maintenance, and Relapse of Depressive Disorders. Front. Res. Metrics Anal. 2023, 8, 1178181. [Google Scholar] [CrossRef]

- Cohen, A.M.; Hersh, W.R.; Peterson, K.; Yen, P.Y. Reducing Workload in Systematic Review Preparation Using Automated Citation Classification. J. Am. Med. Inform. Assoc. 2006, 13, 206–219. [Google Scholar] [CrossRef]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Cortes, C.; Vapnik, V.N. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297. [Google Scholar] [CrossRef]

- Aggarwal, C.C.; Zhai, C.X. (Eds.) Mining Text Data; Springer: New York, NY, USA, 2012. [Google Scholar]

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. arXiv 2016, arXiv:1607.04606. [Google Scholar] [CrossRef]

- Dumais, S.T.; Platt, J.C.; Heckerman, D.; Sahami, M. Inductive Learning Algorithms and Representations for Text Categorization. In Proceedings of the Seventh International Conference on Information and Knowledge Management (CIKM-98), Bethesda, MD, USA, 2–7 November 1998; pp. 148–155. [Google Scholar]

- Szymański, J. Comparative Analysis of Text Representation Methods Using Classification. Cybern. Syst. 2014, 45, 180–199. [Google Scholar] [CrossRef]

- Salton, G.; Buckley, C. Term Weighting Approaches in Automatic Text Retrieval. Inf. Process. Manag. 1988, 24, 513–523. [Google Scholar] [CrossRef]

- Mikolov, T.; Chen, K.; Corrado, G.S.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781. [Google Scholar]

- Platt, J.C. Fast Training of Support Vector Machines using Sequential Minimal Optimization. In Advances in Kernel Methods: Support Vector Learning; Schölkopf, B., Burges, C.J.C., Smola, A.J., Eds.; MIT Press: Cambridge, MA, USA, 1998. [Google Scholar]

- Hamel, L.H. Knowledge Discovery with Support Vector Machines; Wiley: Hoboken, NJ, USA, 2009. [Google Scholar]

- Platt, J.C. Probabilistic Outputs for Support Vector Machines and Comparison to Regularized Likelihood Methods. In Advances in Large Margin Classifiers; Smola, A.J., Bartlett, P., Schölkopf, B., Schuurmans, D., Eds.; MIT Press: Cambridge, MA, USA, 2000. [Google Scholar]

- Rios, G.; Zha, H. Exploring Support Vector Machines and Random Forests for Spam Detection. In Proceedings of the First International Conference on Email and Anti Spam (CEAS-2004), Mountain View, CA, USA, 30–31 July 2004. [Google Scholar]

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Koprinska, I.; Poon, J.; Clark, J.; Chan, J. Learning to Classify E-Mail. Inf. Sci. Int. J. 2007, 177, 2167–2187. [Google Scholar] [CrossRef]

- Xue, D.; Li, F. Research of Text Categorization Model Based on Random Forests. In Proceedings of the 2015 IEEE International Conference on Computational Intelligence and Communication Technology (CICT-2015), Los Alamitos, CA, USA, 13–14 February 2015. [Google Scholar]

- Cichosz, P. A Case Study in Text Mining of Discussion Forum Posts: Classification with Bag of Words and Global Vectors. Int. J. Appl. Math. Comput. Sci. 2018, 28, 787–801. [Google Scholar] [CrossRef]

- Yuan, W.; Han, Y.; Guan, D.; Lee, S.; Lee, Y.K. Initial Training Data Selection for Active Learning. In Proceedings of the Fifth International Conference on Ubiquitous Information Management and Communication (ICUIMC-2011), Seoul, Republic of Korea, 21–23 February 2011. [Google Scholar]

- Bezdek, J.C. Pattern Recognition with Fuzzy Objective Function Algorithms; Springer: New York, NY, USA, 1981. [Google Scholar]

- Jolliffe, I.T. Principal Component Analysis; Springer: Berlin/Heidelberg, Germany, 2002. [Google Scholar]

- Fu, Y.; Zhu, X.; Li, B. A Survey on Instance Selection for Active Learning. Knowl. Inf. Syst. 2013, 35, 249–283. [Google Scholar] [CrossRef]

- Nguyen, V.; Shaker, M.H.; Hüllermeier, E. How to Measure Uncertainty in Uncertainty Sampling for Active Learning. Mach. Learn. 2022, 111, 89–122. [Google Scholar] [CrossRef]

- Kurlandski, L.; Bloodgood, M. Impact of Stop Sets on Stopping Active Learning for Text Classification. In Proceedings of the Sixteenth International Conference on Semantic Computing (ICSC-2022), Los Alamitos, CA, USA, 26–28 January 2022. [Google Scholar]

- Zhu, J.; Wang, H.; Hovy, E.; Ma, M. Confidence-Based Stopping Criteria for Active Learning for Data Annotation. ACM Trans. Speech Lang. Process. 2010, 6, 3. [Google Scholar] [CrossRef]

- Bloodgood, M.; Vijay-Shanker, K. A Method for Stopping Active Learning Based on Stabilizing Predictions and the Need for User-Adjustable Stopping. In Proceedings of the Thirteenth Conference on Computational Natural Language Learning (CoNLL-2009). Association for Computational Linguistics, Boulder, CO, USA, 4–5 June 2009. [Google Scholar]

- Systematic Drug Class Review Gold Standard Data. Available online: https://dmice.ohsu.edu/cohenaa/systematic-drug-class-review-data.html (accessed on 1 July 2024).

- Cohen, A.M. Optimizing Feature Representation for Automated Systematic Review Work Prioritization. In Proceedings of the AMIA Annual Symposium Proceedings, AMIA, Washington, DC, USA, 8–12 November 2008; pp. 121–125. [Google Scholar]

- Matwin, S.; Kouznetsov, A.; Inkpen, D.; Frunza, O.; O’Blenis, P. A New Algorithm for Reducing the Workload of Experts in Performing Systematic Reviews. J. Am. Med. Inform. Assoc. 2010, 17, 446–453. [Google Scholar] [CrossRef] [PubMed]

- Jonnalagadda, S.; Petitti, D. A New Iterative Method to Reduce Workload in Systematic Review Process. Int. J. Comput. Biol. Drug Des. 2013, 6, 5–17. [Google Scholar] [CrossRef]

- Khabsa, M.; Elmagarmid, A.; Ilyas, I.; Hammady, H.; Ouzzani, M. Learning to Identify Relevant Studies for Systematic Reviews Using Random Forest and External Information. Mach. Learn. 2016, 102, 465–482. [Google Scholar] [CrossRef]

- Ji, X.; Ritter, A.; Yen, P.Y. Using Ontology-Based Semantic Similarity to Facilitate the Article Screening Process for Systematic Reviews. J. Biomed. Inform. 2017, 69, 33–42. [Google Scholar] [CrossRef]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830, Library version 1.1.3. [Google Scholar]

- Honnibal, M.; Montani, I.; Van Landeghem, S.; Boyd, A. spaCy: Industrial-Strength Natural Language Processing in Python. Library version 3.3.1. 2022. Available online: https://github.com/explosion/spaCy/blob/master/CITATION.cff (accessed on 1 July 2024).

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. fastText: Library for Efficient Text Classification and Representation Learning. Library version 0.9.2. 2020. Available online: https://fasttext.cc/ (accessed on 1 July 2024).

- Egan, J.P. Signal Detection Theory and ROC Analysis; Academic Press: New York, NY, USA, 1975. [Google Scholar]

- Fawcett, T. An Introduction to ROC Analysis. Pattern Recognit. Lett. 2006, 27, 861–874. [Google Scholar] [CrossRef]

- Arlot, S.; Celisse, A. A Survey of Cross-Validation Procedures for Model Selection. Stat. Surv. 2010, 4, 40–79. [Google Scholar] [CrossRef]

- Wallace, B.C.; Trikalinos, T.A.; Lau, J.; Brodley, C.; Schmid, C.H. Semi-Automated Screening of Biomedical Citations for Systematic Reviews. BMC Bioinform. 2010, 11, 55. [Google Scholar] [CrossRef]

- Cohen, A.M. Performance of Support-Vector-Machine-Based Classification on 15 Systematic Review Topics Evaluated with the WSS@95 Measure. J. Am. Med. Inform. Assoc. 2011, 18, 104. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Menardi, G.; Torelli, N. Training and Assessing Classification Rules with Imbalanced Data. Data Min. Knowl. Discov. 2014, 28, 92–122. [Google Scholar] [CrossRef]

- Zhu, X.; Goldberg, A. Introduction to Semi-Supervised Learning; Morgan & Claypool: San Rafael, CA, USA, 2009. [Google Scholar]

- Text Classification Data from 15 Drug Class Review SLR Studies. Available online: https://figshare.com/articles/dataset/Text_Classification_Data_from_15_Drug_Class_Review_SLR_Studies/23626656/1?file=41457282 (accessed on 1 July 2024).

| Ref. | Representations | Algorithms | AL Setups | Evaluation | Data |

|---|---|---|---|---|---|

| [14] | BOW (TF-IDF) | SVM | initialization: random; query: margin; stopping: none | error, precision–recall breakeven; train–test split | Reuters, subset of Newsgroups |

| [15] | BOW | maxent | initialization: cluster; query: uncertainty; stopping: none | accuracy; k-fold CV | subset of Newsgroups, WebKB |

| [16] | BOW, LDA | SVM | initialization: unknown; query: uncertainty, minority, committee; stopping: none | utility, coverage, ROC AUC; train–test split, train+pool evaluation | 3 medical and 4 social science SLR studies |

| [17] | BOW (TF-IDF) | SVM | initialization: random; query: uncertainty (custom); stopping: none | accuracy; train–test split | Reuters, Newsgroups, WebKB |

| [18] | BOW (1- and 2-gram) | regex classifier, NB, SVM, BERT | initialization: random; query: uncertainty (custom); stopping: query score variance | accuracy, precision, F1; k-fold CV | 3 biomedical datasets |

| [19] | BOW (TF-IDF) | NB | initialization: random; query: minority; stopping: none | WSS, RRF; train+pool evaluation | 2 biomedical SLR studies, 1 software fault prediction SLR study, 1 longitudinal SLR study |

| [20] | BOW | SVM, NB, LR | initialization: random; query: margin, committee; stopping: none | F1; train–test split | Twitter (refugee topic), Wikipedia talk pages (toxic comments), Breitbart news |

| [21] | — | BERT | initialization: random; query: uncertainty (custom); stopping: none | accuracy; train–test split | Stanford Sentiment Treebank, KvK-Frontpages |

| [22] | BOW (TF-IDF), doc2vec | SVM, NB, LR, RF | initialization: random; query: minority; stopping: none | WSS, RRF, TD; train+pool evaluation | 6 biomedical SLR studies |

| [23] | BOW (TF-IDF), sBERT | CNN, SVM, RF, LR, NB | initialization: random; query: minority; stopping: none | WSS, TD, recall; train+pool evaluation | 1 biomedical SLR study |

| Dataset | Size | Relevant % |

|---|---|---|

| ACEInhibitors | 2215 | 7.54 |

| ADHD | 797 | 10.41 |

| Antihistamines | 285 | 31.23 |

| AtypicalAntipsychotics | 1020 | 32.45 |

| BetaBlockers | 1849 | 14.49 |

| CalciumChannelBlockers | 1100 | 23.18 |

| Estrogens | 344 | 22.67 |

| NSAIDS | 355 | 23.38 |

| Opiods | 1760 | 2.44 |

| OralHypoglycemics | 466 | 28.11 |

| ProtonPumpInhibitors | 1193 | 18.69 |

| SkeletalMuscleRelaxants | 1341 | 2.24 |

| Statins | 2727 | 5.50 |

| Triptans | 585 | 34.36 |

| UrinaryIncontinence | 283 | 24.03 |

| (a) uncertainty sampling | | | | | (b) diversity sampling | | | | |

| % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT | % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| 5% | 0.19 | 0.29 | 0.32 | 0.32 | 5% | 0.19 | 0.29 | 0.32 | 0.32 |

| 10% | 0.27 | 0.40 | 0.42 | 0.40 | 10% | 0.24 | 0.34 | 0.35 | 0.36 |

| 25% | 0.36 | 0.48 | 0.50 | 0.48 | 25% | 0.30 | 0.40 | 0.40 | 0.41 |

| 33% | 0.39 | 0.50 | 0.51 | 0.50 | 33% | 0.34 | 0.43 | 0.43 | 0.43 |

| 50% | 0.44 | 0.53 | 0.54 | 0.52 | 50% | 0.40 | 0.48 | 0.47 | 0.47 |

| 66% | 0.47 | 0.54 | 0.54 | 0.53 | 66% | 0.43 | 0.50 | 0.50 | 0.50 |

| 75% | 0.48 | 0.54 | 0.55 | 0.53 | 75% | 0.45 | 0.52 | 0.51 | 0.51 |

| 100% | 0.49 | 0.54 | 0.55 | 0.53 | 100% | 0.49 | 0.54 | 0.55 | 0.53 |

| (c) minority sampling | | | | | (d) threshold sampling | | | | |

| % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT | % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| 5% | 0.19 | 0.29 | 0.32 | 0.32 | 5% | 0.19 | 0.29 | 0.31 | 0.33 |

| 10% | 0.27 | 0.39 | 0.41 | 0.39 | 10% | 0.26 | 0.38 | 0.42 | 0.41 |

| 25% | 0.35 | 0.46 | 0.49 | 0.48 | 25% | 0.36 | 0.48 | 0.49 | 0.48 |

| 33% | 0.39 | 0.49 | 0.51 | 0.50 | 33% | 0.39 | 0.49 | 0.51 | 0.50 |

| 50% | 0.44 | 0.53 | 0.53 | 0.53 | 50% | 0.43 | 0.52 | 0.53 | 0.52 |

| 66% | 0.46 | 0.54 | 0.55 | 0.53 | 66% | 0.46 | 0.53 | 0.54 | 0.53 |

| 75% | 0.47 | 0.54 | 0.55 | 0.53 | 75% | 0.47 | 0.53 | 0.55 | 0.53 |

| 100% | 0.49 | 0.54 | 0.55 | 0.53 | 100% | 0.49 | 0.54 | 0.55 | 0.53 |

| (e) random sampling | |||||||||

| % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT | |||||

| 5% | 0.19 | 0.29 | 0.32 | 0.33 | |||||

| 10% | 0.25 | 0.37 | 0.38 | 0.38 | |||||

| 25% | 0.37 | 0.46 | 0.45 | 0.45 | |||||

| 33% | 0.39 | 0.48 | 0.47 | 0.47 | |||||

| 50% | 0.43 | 0.50 | 0.50 | 0.49 | |||||

| 66% | 0.46 | 0.51 | 0.52 | 0.51 | |||||

| 75% | 0.47 | 0.52 | 0.53 | 0.52 | |||||

| 100% | 0.49 | 0.54 | 0.55 | 0.53 | |||||

| (a) uncertainty sampling | | | | | (b) diversity sampling | | | | |

| % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT | % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| 5% | 0.19 | 0.28 | 0.33 | 0.33 | 5% | 0.19 | 0.30 | 0.33 | 0.33 |

| 10% | 0.27 | 0.40 | 0.43 | 0.41 | 10% | 0.24 | 0.35 | 0.36 | 0.36 |

| 25% | 0.38 | 0.49 | 0.50 | 0.49 | 25% | 0.31 | 0.42 | 0.41 | 0.41 |

| 33% | 0.40 | 0.51 | 0.52 | 0.50 | 33% | 0.34 | 0.45 | 0.43 | 0.43 |

| 50% | 0.45 | 0.53 | 0.53 | 0.53 | 50% | 0.40 | 0.48 | 0.47 | 0.47 |

| 66% | 0.47 | 0.53 | 0.54 | 0.53 | 66% | 0.43 | 0.51 | 0.49 | 0.49 |

| 75% | 0.48 | 0.54 | 0.54 | 0.53 | 75% | 0.45 | 0.52 | 0.51 | 0.51 |

| 100% | 0.49 | 0.54 | 0.55 | 0.53 | 100% | 0.49 | 0.54 | 0.55 | 0.53 |

| (c) minority sampling | | | | | (d) threshold sampling | | | | |

| % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT | % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| 5% | 0.19 | 0.29 | 0.33 | 0.34 | 5% | 0.19 | 0.28 | 0.33 | 0.33 |

| 10% | 0.28 | 0.40 | 0.42 | 0.39 | 10% | 0.26 | 0.38 | 0.44 | 0.41 |

| 25% | 0.36 | 0.48 | 0.49 | 0.48 | 25% | 0.37 | 0.48 | 0.50 | 0.48 |

| 33% | 0.40 | 0.50 | 0.51 | 0.50 | 33% | 0.40 | 0.50 | 0.51 | 0.50 |

| 50% | 0.44 | 0.53 | 0.53 | 0.53 | 50% | 0.44 | 0.52 | 0.52 | 0.52 |

| 66% | 0.47 | 0.54 | 0.54 | 0.53 | 66% | 0.47 | 0.53 | 0.54 | 0.52 |

| 75% | 0.48 | 0.54 | 0.55 | 0.53 | 75% | 0.48 | 0.53 | 0.54 | 0.52 |

| 100% | 0.49 | 0.54 | 0.55 | 0.53 | 100% | 0.49 | 0.54 | 0.55 | 0.53 |

| (e) random sampling | |||||||||

| % of data | SVM-BOW | SVM-FT | RF-BOW | RF-FT | |||||

| 5% | 0.19 | 0.29 | 0.33 | 0.33 | |||||

| 10% | 0.25 | 0.37 | 0.38 | 0.39 | |||||

| 25% | 0.37 | 0.45 | 0.46 | 0.45 | |||||

| 33% | 0.40 | 0.47 | 0.47 | 0.47 | |||||

| 50% | 0.44 | 0.50 | 0.50 | 0.49 | |||||

| 66% | 0.47 | 0.52 | 0.52 | 0.51 | |||||

| 75% | 0.47 | 0.52 | 0.53 | 0.52 | |||||

| 100% | 0.49 | 0.54 | 0.55 | 0.53 | |||||

| (a) size | ||||

| Initialization–query selection | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| random–uncertainty | −0.47 | −0.59 | −0.60 | −0.58 |

| random–diversity | −0.47 | −0.59 | −0.60 | −0.65 |

| random–minority | −0.46 | −0.52 | −0.57 | −0.65 |

| random–threshold | −0.49 | −0.64 | −0.68 | −0.65 |

| random–random | −0.46 | −0.60 | −0.61 | −0.62 |

| cluster–uncertainty | −0.45 | −0.59 | −0.58 | −0.61 |

| cluster–diversity | −0.48 | −0.59 | −0.62 | −0.66 |

| cluster–minority | −0.49 | −0.52 | −0.58 | −0.60 |

| cluster–threshold | −0.40 | −0.63 | −0.66 | −0.60 |

| cluster–random | −0.50 | −0.60 | −0.59 | −0.64 |

| (b) relevant % | ||||

| Initialization–query selection | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| random–uncertainty | 0.74 | 0.78 | 0.71 | 0.78 |

| random–diversity | 0.77 | 0.82 | 0.75 | 0.83 |

| random–minority | 0.77 | 0.75 | 0.71 | 0.77 |

| random–threshold | 0.73 | 0.79 | 0.74 | 0.78 |

| random–random | 0.71 | 0.77 | 0.80 | 0.80 |

| cluster–uncertainty | 0.74 | 0.78 | 0.69 | 0.84 |

| cluster–diversity | 0.75 | 0.84 | 0.75 | 0.87 |

| cluster–minority | 0.77 | 0.75 | 0.69 | 0.80 |

| cluster–threshold | 0.60 | 0.76 | 0.75 | 0.77 |

| cluster–random | 0.74 | 0.77 | 0.76 | 0.84 |

| (a) smaller | ||||

| Query selection | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| uncertainty | 0.00 | 0.01 | 0.03 | 0.02 |

| diversity | −0.06 | −0.04 | −0.04 | −0.03 |

| minority | −0.01 | 0.00 | 0.02 | 0.02 |

| threshold | 0.00 | 0.01 | 0.02 | 0.02 |

| (b) bigger | ||||

| Query selection | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| uncertainty | 0.01 | 0.06 | 0.06 | 0.04 |

| diversity | −0.05 | −0.04 | −0.04 | −0.04 |

| minority | 0.01 | 0.05 | 0.06 | 0.05 |

| threshold | 0.00 | 0.04 | 0.06 | 0.04 |

| (c) less imbalanced | ||||

| Query selection | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| uncertainty | −0.01 | 0.02 | 0.01 | 0.02 |

| diversity | −0.05 | −0.02 | −0.04 | −0.03 |

| minority | −0.01 | 0.00 | 0.01 | 0.01 |

| threshold | −0.01 | 0.00 | 0.01 | 0.01 |

| (d) more imbalanced | ||||

| Query selection | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

| uncertainty | 0.01 | 0.05 | 0.07 | 0.05 |

| diversity | −0.05 | −0.05 | −0.04 | −0.04 |

| minority | 0.01 | 0.05 | 0.07 | 0.06 |

| threshold | 0.01 | 0.03 | 0.06 | 0.05 |

| Stopping criterion | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

|---|---|---|---|---|

| confidence 0.5 | 10% | 10% | 10% | 10% |

| confidence 0.6 | 19% | 23% | 34% | 29% |

| confidence 0.7 | 26% | 30% | 48% | 40% |

| confidence 0.8 | 36% | 38% | 61% | 54% |

| confidence 0.9 | 54% | 55% | 77% | 72% |

| confidence 0.95 | 73% | 71% | 89% | 84% |

| confidence 0.98 | 85% | 83% | 98% | 95% |

| stability 0.9 | 14% | 12% | 25% | 28% |

| stability 0.93 | 17% | 13% | 38% | 44% |

| stability 0.95 | 21% | 15% | 58% | 54% |

| stability 0.98 | 43% | 25% | 93% | 87% |

| stability 0.99 | 64% | 37% | 97% | 95% |

| stability 0.995 | 76% | 47% | 100% | 97% |

| stability 0.998 | 84% | 56% | 100% | 100% |

| Stopping criterion | SVM-BOW | SVM-FT | RF-BOW | RF-FT |

|---|---|---|---|---|

| confidence 0.5 | 0.27 | 0.40 | 0.42 | 0.40 |

| confidence 0.6 | 0.34 | 0.48 | 0.52 | 0.49 |

| confidence 0.7 | 0.37 | 0.50 | 0.54 | 0.51 |

| confidence 0.8 | 0.40 | 0.51 | 0.54 | 0.53 |

| confidence 0.9 | 0.45 | 0.53 | 0.55 | 0.53 |

| confidence 0.95 | 0.47 | 0.54 | 0.55 | 0.53 |

| confidence 0.98 | 0.48 | 0.54 | 0.55 | 0.53 |

| stability 0.9 | 0.30 | 0.42 | 0.50 | 0.49 |

| stability 0.93 | 0.32 | 0.43 | 0.52 | 0.52 |

| stability 0.95 | 0.35 | 0.44 | 0.54 | 0.53 |

| stability 0.98 | 0.42 | 0.48 | 0.55 | 0.53 |

| stability 0.99 | 0.46 | 0.51 | 0.55 | 0.53 |

| stability 0.995 | 0.48 | 0.53 | 0.55 | 0.53 |

| stability 0.998 | 0.48 | 0.53 | 0.55 | 0.53 |

| Dataset | SVM-FT | RF-BOW |

|---|---|---|

| ACEInhibitors | 0.32 | 0.18 |

| ADHD | 0.56 | 0.65 |

| Antihistamines | 0.04 | 0.18 |

| AtypicalAntipsychotics | 0.15 | 0.13 |

| BetaBlockers | 0.31 | 0.17 |

| CalciumChannelBlockers | 0.20 | 0.16 |

| Estrogens | 0.25 | 0.19 |

| NSAIDS | 0.36 | 0.36 |

| Opiods | 0.46 | 0.39 |

| OralHypoglycemics | 0.09 | 0.11 |

| ProtonPumpInhibitors | 0.20 | 0.24 |

| SkeletalMuscleRelaxants | 0.33 | 0.32 |

| Statins | 0.28 | 0.22 |

| Triptans | 0.37 | 0.35 |

| UrinaryIncontinence | 0.23 | 0.19 |

| AVERAGE | 0.28 | 0.26 |

| Configuration | Size | Relevant % |

|---|---|---|

| SVM-FT | 0.31 | −0.50 |

| RF-BOW | 0.04 | −0.40 |


© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cichosz, P. Active Learning for Biomedical Article Classification with Bag of Words and FastText Embeddings. Appl. Sci. 2024, 14, 7945. https://doi.org/10.3390/app14177945
