Expanding Ground Vehicle Autonomy into Unstructured, Off-Road Environments: Dataset Challenges

Abstract

1. Introduction

Related Datasets

2. Off-Road Dataset Challenges

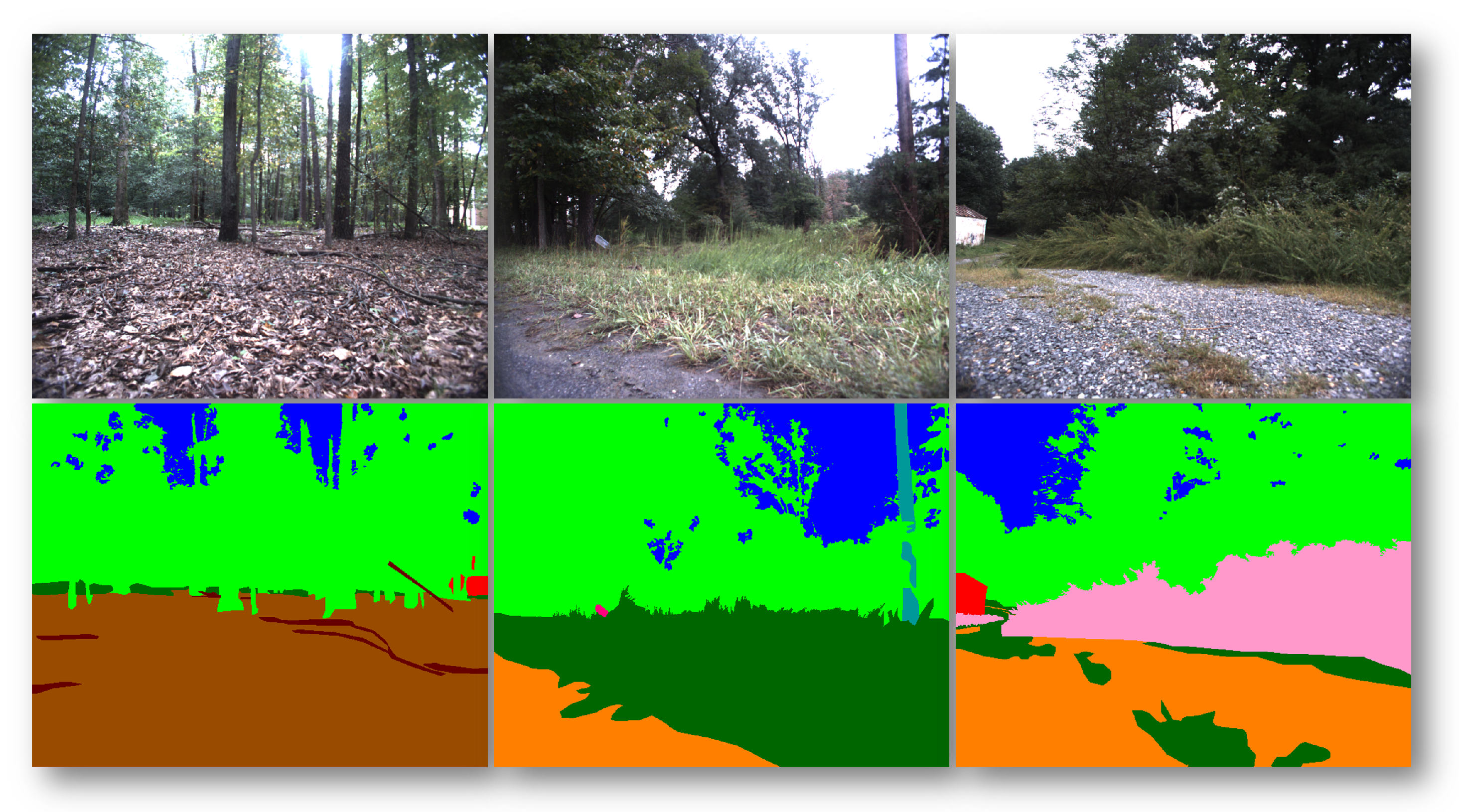

2.1. Annotating Off-Road Data: Importance and Challenges

2.2. Defining Boundaries in Off-Road Imagery

3. Off-Road Autonomy Dataset: A Preview

- 1.

- RGB Imagery;

- 2.

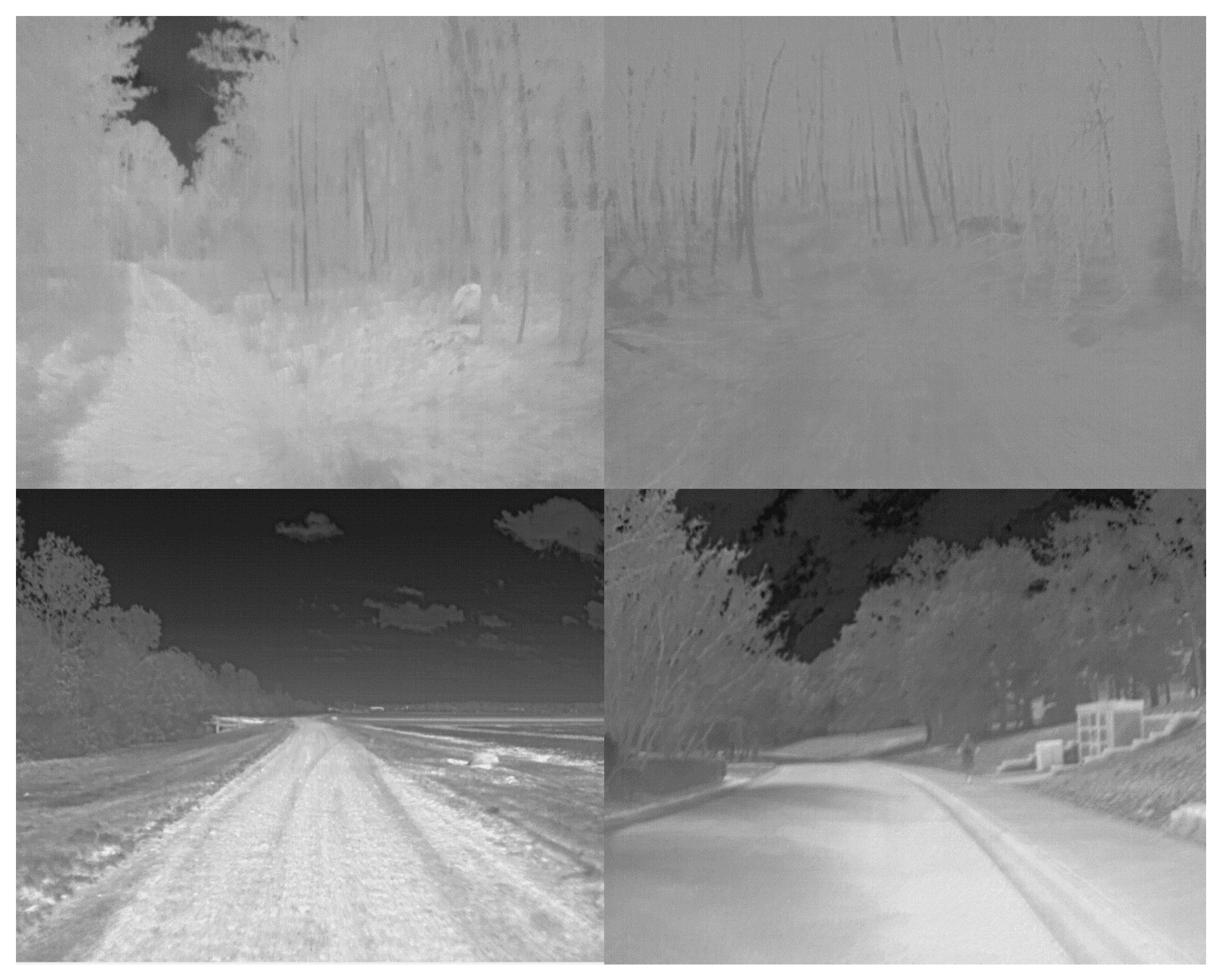

- Longwave Infrared Imagery;

- 3.

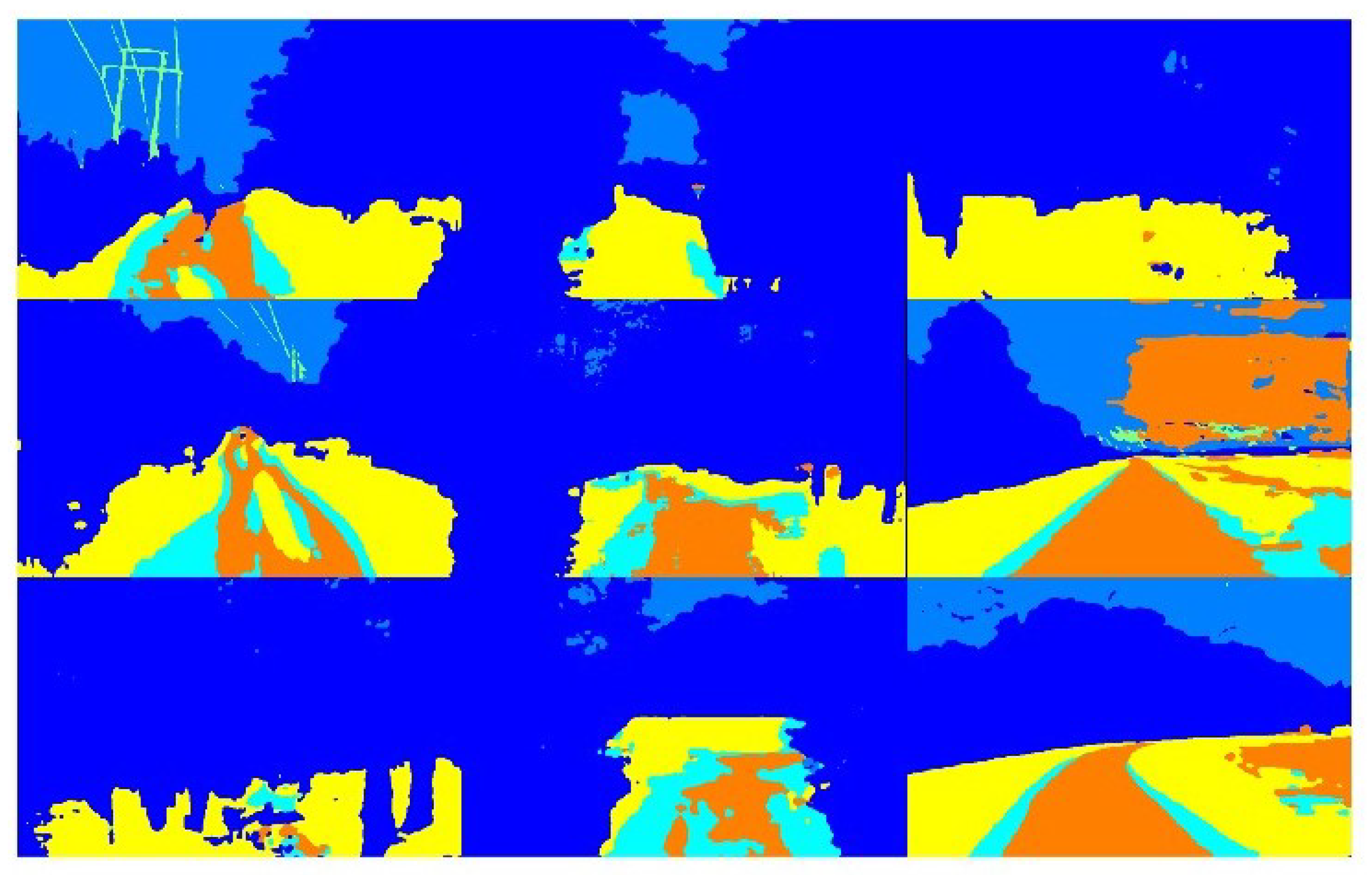

- LiDAR 3D Point Clouds.

3.1. Proposed Path Forward for Dataset Curation Supporting Off-Road Autonomy

3.1.1. High-Level, Categorical Class Labels

3.1.2. Embracing Imperfect Class Labels

3.2. Multi-Stage AI System of Systems and Imitation Learning Strategy

3.2.1. Multi-Stage AI System of Systems

3.2.2. Deep Reinforcement Learning-Based Imitation Learning

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Price, S.R.; Land, H.B.; Carley, S.S.; Price, S.R.; Price, S.J.; Fairley, J.R. Expanding Ground Vehicle Autonomy into Unstructured, Off-Road Environments: Dataset Challenges. Appl. Sci. 2024, 14, 8410. https://doi.org/10.3390/app14188410
