Abstract

Sitting posture recognition systems have gained significant attention due to their potential applications in domains including healthcare, ergonomics, and human-computer interaction. This paper presents a comprehensive literature review and analysis of existing sitting posture recognition systems. Through an extensive examination of relevant journal articles and conference papers, we identify and analyze the underlying technologies, methodologies, datasets, performance metrics, and applications associated with these systems. The review encompasses both traditional methods, such as vision-based and sensor-based techniques, and emerging technologies such as machine learning and deep learning algorithms. Additionally, we examine the challenges, constraints, and future trends in the field. Researchers, practitioners, and policymakers who want to understand the latest developments and trends in sitting posture recognition technology will find this study valuable.

1. Introduction

An average office worker today spends up to 75% of the working day sitting, with over half of that time occurring in extended periods of almost complete inactivity [1]. Rapid technological advances, particularly the spread of electronic devices, have changed how employees work: contemporary office work has transformed job roles from active to sedentary [2]. Sedentary behaviors are activities marked by low energy expenditure, often entailing prolonged periods in a resting position. The rise of musculoskeletal disorders among office workers is associated not only with sedentary behavior but also with excessive computer use [3], and the shift from paper-based to computer-based work is considered one of the contributing factors. Research indicates that an average employee with a desk job sits for nine and a half hours per day, and that most Americans spend 8.07 h a day sitting outside of work [4]. Sedentary lifestyles are becoming an increasingly serious problem: according to World Health Organization estimates, between 60% and 85% of people worldwide do not get enough exercise [5]. A European survey indicates that the share of teleworkers rose from about 10% in most European countries to over 50% due to the pandemic [6]. In the 2018 Australian National Health Survey, 44% of the adult population characterized their work hours as predominantly spent sitting [7]. Research in other high-income nations revealed that urban, desk-based working populations exhibited prolonged and excessive sedentary behavior, with an average of 77% of their waking hours spent sitting continuously in office settings [8].

Posture refers to the position of the spine and all of its surrounding structures. A person with good posture maintains correct alignment when sitting, standing, and lying down. The ideal sitting position consists of proper arm posture, a neutral spine, and feet flat on the floor with the knees and feet about hip-width apart. According to the ISO 11226:2000 international standard for the ergonomic evaluation of static working postures [9], the sitting position involves four major body parts: the trunk, head, upper extremity, and lower extremity. Sitting upright is regarded as the optimal position because it distributes force evenly over both hips and minimizes lumbar compensation [10]. Bad posture strains the spinal discs and back muscles, which can lead to pain and discomfort in the lower and upper back. Sitting with the head tilted forward for extended periods can cause neck pain, stiffness, and headaches. Poor sitting posture can also impede blood flow to the legs and feet, leading to swelling, numbness, and tingling [11]. Slouching or hunching forward can strain the shoulder muscles and cause pain and discomfort [12,13]. Table 1 summarizes the health issues that arise from bad posture.

As of 2021, the Health and Safety Executive reported that 470,000 workers in the UK suffered from work-related musculoskeletal disorders. Approximately 76,000 cases (16%) affected the lower limbs, 212,000 cases (45%) the upper limbs or neck, and 182,000 cases (39%) the back. Globally, more than 2.34 million people die every year from occupational injuries or diseases, with the highest share of work-related fatalities in Asia (65%), followed by Africa (11.8%) and Europe (11.7%) [14]. Musculoskeletal disorders afflict millions of computer workers and constitute the most prevalent source of occupational illness in the USA. In 2016, these disorders accounted for the largest portion of US healthcare spending, estimated at $380.9 billion [15].

Table 1.

Health issues related to bad posture.

| Sr. No. | Health Issue | Reason |
|---|---|---|
| 1 | Poor circulation | Sitting with poor posture for long periods [11] |
| 2 | Digestive issues | Slouching compresses the abdominal organs [16] |
| 3 | Lower back pain | Prolonged awkward postures [17] |
| 4 | Neck and shoulder pain | Slouched posture [12,13] |
| 5 | Cardiovascular disease | Prolonged sitting [18,19] |
| 6 | Teeth grinding and jaw pain | Leaning forward [20] |
| 7 | Diminished lung function | Leaning or hunching over |
| 8 | Fatigue | Body out of alignment |
| 9 | Reduced flexibility | Reduced flexibility in the hips, hamstrings, and other muscles, leading to stiffness and discomfort [6] |
| 10 | Depression | Slumped posture [21] |
| 11 | Spinal abnormalities | Poor sitting postures [22] |
| 12 | Trunk asymmetry | Prolonged static or cross-legged seated postures [23,24] |
| 13 | Musculoskeletal pain, low back pain, and spinal deformity | Asymmetrical sitting postures [25] |

Correct body posture is critical for overall health and well-being. Ideal upright posture is often considered a reflection of musculoskeletal health and serves as a major indicator of the health of the movement system [26]. When the body is properly aligned, with the spine in its natural curves and the muscles and joints balanced, strain on the muscles, ligaments, and joints is reduced. Fortunately, numerous remedies exist to address posture-related concerns. The best remedy is learning about good posture: when people are informed about correct posture, they are expected to adopt healthy posture practices in their daily lives [27]. Alerts or reminders can also help maintain a proper sitting posture by prompting individuals to take breaks, move around regularly, change positions, and stretch [28]. By integrating such reminders into their routines, individuals can reinforce better sitting habits and decrease the risk of long-term posture-related disease. Ergonomic adjustments at work and in daily activities, such as optimizing the workstation setup, using supportive chairs and pillows, and taking frequent breaks to stretch and change positions, can also contribute significantly to posture correction [29,30]. Recent advances in information and sensor technology have enabled real-time supervisory systems that monitor and improve sitting posture; some of these systems, for example, detect poor sitting habits using pressure sensors embedded in the seat and backrest and encourage a more upright posture. Robertson et al. [29] reported a reduction in musculoskeletal risk over 16 months after training individuals in ergonomic posture. Studies [30,31] have also shown that ergonomic modifications can reduce pain and symptoms related to musculoskeletal disorders. Taieb-Maimon et al. [32] found a decrease in posture-related risks within three weeks of using a camera to display the sagittal posture of seated individuals.

Several review studies have already addressed posture recognition systems. The review in [33] provides an overview of smart technologies and sensors integrated into office and other chairs for disease prevention; however, it covers only 15 articles published between 2010 and 2020. The study in [34] is the closest to ours, but it suggests only a few areas for future research and does not offer specific recommendations on methodologies or frameworks for addressing the identified gaps. The most recent review [27] included 223 research articles but lacked discussion of how recent advancements in deep learning and computer vision contribute to more effective sitting posture recognition systems. The present review addresses the need to synthesize this vast and fragmented body of research, providing a consolidated source of information that highlights the advancements, challenges, and future directions in the field.

This review covers both traditional methods and emerging technologies such as machine learning and deep learning algorithms, and it examines the challenges, constraints, and future trends in sitting posture recognition. Through an exploration of the available literature, we analyze prevalent practices, including the types of sensors and machine learning algorithms commonly employed, and identify areas where further research is needed. In doing so, the study provides a systematic and detailed review of existing sitting posture recognition systems.

2. Systematic Review Methodology

2.1. Formulation of Research Questions

The following research questions are designed to guide a comprehensive literature review and analysis, focusing on both the technological advancements and practical applications of sitting posture recognition systems:

RQ1: What are the current methodologies and technologies employed in sitting posture recognition systems, and how do they compare in terms of accuracy, cost, and ease of implementation?

RQ2: How effective are machine learning algorithms in enhancing the accuracy of sitting posture recognition systems compared to traditional methods?

RQ3: How do recent advancements in deep learning and computer vision contribute to the development of more effective sitting posture recognition systems?

RQ4: What are the major challenges and limitations faced by existing sitting posture recognition systems, and what are the potential solutions proposed in recent literature?

These questions aim to thoroughly explore the current state of sitting posture recognition systems, identifying areas for improvement and potential future research directions.

2.2. Search Approach

This systematic review covers publications from 2000 to 2022 retrieved from databases such as Scopus, IEEE Xplore, and PubMed. To accommodate variations in methodological terminology, a flexible search string is employed. By combining synonyms for the keywords “human”, “posture”, “estimation”, and “method”, the following search string is constructed: TITLE-ABS-KEY (human AND (posture OR pose) AND Sitting AND (estimation OR classification OR recognition)). The additional key terms “video”, “vision”, and “Kinect” are also incorporated. Irrelevant subject areas are excluded after random sampling, and results are limited to English-language publications.
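The search string follows a simple pattern: synonyms for one concept are joined with OR, and the concepts are joined with AND. The minimal Python sketch below shows that composition. The grouping of terms is taken from the string above, while the helper names are hypothetical; note that `TITLE-ABS-KEY` is Scopus field syntax, so IEEE Xplore and PubMed would need their own field tags.

```python
def or_group(terms: list[str]) -> str:
    """Join synonyms with OR; a single term needs no parentheses."""
    if len(terms) == 1:
        return terms[0]
    return "(" + " OR ".join(terms) + ")"


def build_query(concepts: list[list[str]], field: str = "TITLE-ABS-KEY") -> str:
    """AND together one OR-group per concept and wrap the result in a field code."""
    return f"{field} (" + " AND ".join(or_group(c) for c in concepts) + ")"


# Concept groups as they appear in the search string above.
concepts = [
    ["human"],
    ["posture", "pose"],
    ["Sitting"],
    ["estimation", "classification", "recognition"],
]
query = build_query(concepts)
print(query)
```

Adding further key terms such as “video” or “Kinect” would amount to appending another synonym group, although the text does not specify exactly how those terms were combined with the base string.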

2.3. Study Selection Model

After evaluating the search terms in isolation and iteratively, the authors agreed on the final set. These finalized search phrases were employed to retrieve articles from the specified databases. Subsequently, the titles and abstracts of the retrieved papers underwent meticulous scrutiny and analysis. Following that, papers were narrowed down using predetermined inclusion and exclusion criteria. This rigorous process ensured a comprehensive and selective approach to identifying relevant literature.

2.4. Exclusion Criteria

The following criteria were used to determine which papers were excluded:

- The article is not concerned with sitting postures.

- The method or the classification precision for each posture was not reported.

- If an author used the same methodology in a conference article and a journal article, the conference article was removed to prevent duplication.

- If the same authors described an essentially identical methodology in two papers with only minor changes, only the paper providing the greater degree of detail was retained.
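Taken together, these criteria amount to a filter followed by de-duplication. The Python sketch below illustrates that logic on toy bibliographic records. The `Record` fields, the venue ranking, and the use of page count as a stand-in for “greater degree of detail” are all assumptions for illustration, not the screening procedure the authors actually ran.

```python
from dataclasses import dataclass

# Preference order among duplicates (assumed): journal, then book chapter, then conference.
VENUE_RANK = {"journal": 2, "book": 1, "conference": 0}


@dataclass
class Record:
    key: str                 # hypothetical citation key
    authors: frozenset[str]  # author set, used to spot same-author duplicates
    method: str              # reviewer-assigned label for the methodology
    venue: str               # "journal", "book", or "conference"
    about_sitting: bool      # criterion 1: concerned with sitting postures
    reports_accuracy: bool   # criterion 2: method and per-posture performance reported
    pages: int               # crude proxy for level of detail (assumption)


def screen(records: list[Record]) -> list[Record]:
    """Apply the exclusion criteria, keeping one record per (authors, method) pair."""
    eligible = [r for r in records if r.about_sitting and r.reports_accuracy]
    kept: dict[tuple[frozenset[str], str], Record] = {}
    for r in eligible:
        group = (r.authors, r.method)
        best = kept.get(group)
        # Criteria 3 and 4: prefer the journal version, then the more detailed paper.
        if best is None or (VENUE_RANK[r.venue], r.pages) > (VENUE_RANK[best.venue], best.pages):
            kept[group] = r
    return sorted(kept.values(), key=lambda r: r.key)


records = [
    Record("P1", frozenset({"author-a"}), "fsr-grid", "conference", True, True, 4),
    Record("P2", frozenset({"author-a"}), "fsr-grid", "journal", True, True, 12),
    Record("P3", frozenset({"author-b"}), "imu-vest", "journal", False, True, 10),
    Record("P4", frozenset({"author-c"}), "cnn-map", "journal", True, False, 9),
    Record("P5", frozenset({"author-d"}), "load-cell", "journal", True, True, 8),
    Record("P6", frozenset({"author-d"}), "load-cell", "journal", True, True, 14),
]
print([r.key for r in screen(records)])
```

Grouping by an exact author set is a simplification; in practice, deciding that two papers share “an identical methodology” is a reviewer judgment rather than a string match.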

2.5. Journal and Conference Publications

After systematically applying the predefined inclusion and exclusion criteria to the available literature, a total of 120 articles were selected for further analysis. By publication type, the selected articles comprise:

- 70 Journal Articles: These are comprehensive pieces of scholarly writing published in academic journals, showcasing a depth of research and analysis within their respective fields.

- 49 Conference Proceedings: These represent papers presented at academic conferences, providing insights into the latest research findings and ideas exchanged within specific academic communities.

- 1 Book Chapter: This category encompasses a chapter from a larger book, contributing a focused exploration of a particular topic within the context of a broader subject.

2.6. Publication by Year

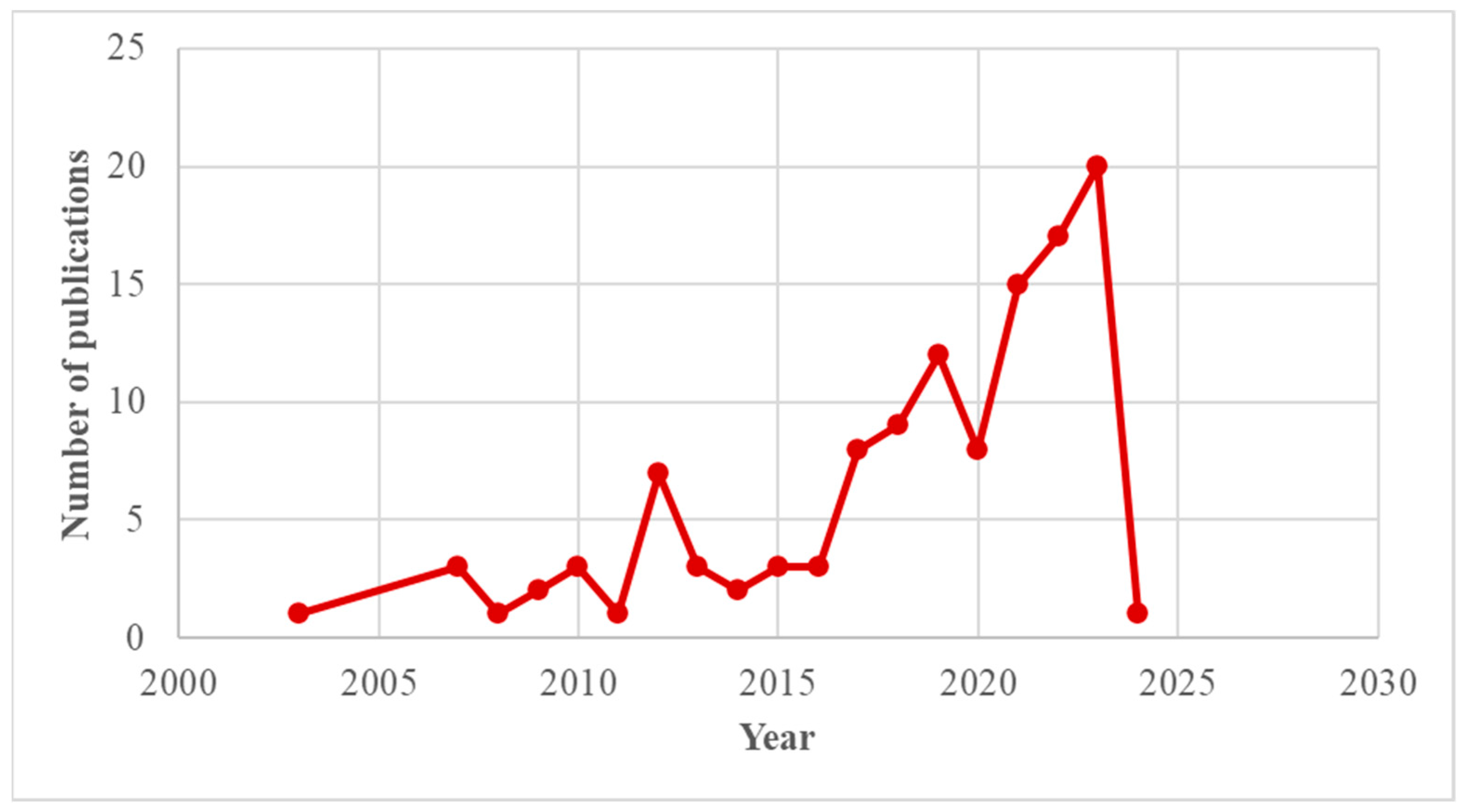

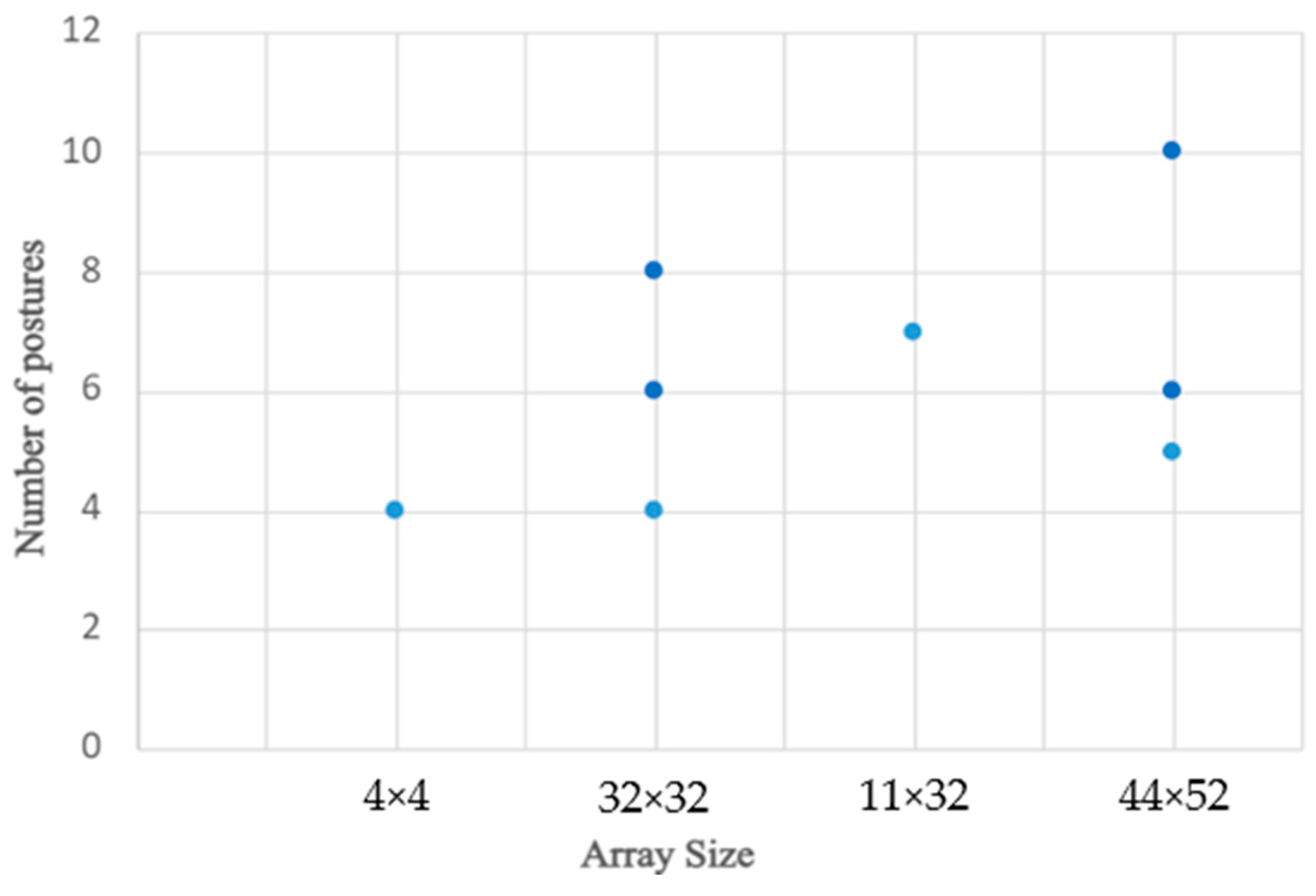

Figure 1 shows the publication trend of the selected articles from 2003 to 2023. Between 2003 and 2016, only 24.5% of the articles (26 in total) on the subject were published. Publication volume then increased consistently, rising to 13 articles (12.75% of the overall total) in 2019. This upward trajectory continued through 2021, with growth sustained over the preceding three years, so that 39.92% (40 articles) of the total articles had been published by that year.

Figure 1.

Breakdown of publication based on year.

2.7. Classification of Articles by Journal

Table 2 presents the distribution of articles across journals and conferences; only venues with more than one publication are listed. Most of these publications focus on sensors, sensor networks, the Internet of Things, computational intelligence, and biomedical engineering. Notably, Sensors emerged as the leading journal, contributing 16 articles (13.33% of the total), followed by IEEE Sensors Journal and Sensors and Actuators with 4 articles (3.33%) each. The International Journal of Distributed Sensor Networks, IEEE Internet of Things Journal, and IEEE Transactions on Biomedical Engineering each accounted for 2 articles (1.67%).

Table 2.

Article classification by journal.

3. Sensing Technologies

Researchers have proposed several solutions to the challenge of posture recognition, and sensing devices lie at the core of such systems. The existing literature classifies sitting posture recognition systems by the type of sensor used. To monitor sitting posture accurately and reliably, sensors must be placed around the user so that they capture the relevant features. Commonly used sensors include pressure sensors, flex sensors, accelerometers, gyroscopes, inertial measurement units, strain gauges, and ultrasonic sensors. Pressure sensors integrate seamlessly into office furniture such as chairs or desks, enabling non-intrusive monitoring of sitting posture without requiring users to wear any devices. This approach strikes a balance between accuracy and convenience: strategically positioned pressure sensors can continuously collect data on ergonomics and sitting posture without compromising user comfort or disrupting the user’s natural movements and activities. Table 3 provides the taxonomy of sensors used in the existing research.

Table 3.

Taxonomy of sensors used for sitting posture recognition.

Table 4 below presents the comparison of different sensing technologies used in posture recognition applications, briefly providing their functionality, strengths, and limitations. These sensors can be used individually or in combination to enhance the accuracy and reliability of sitting posture recognition systems. The choice of sensors depends on factors such as the desired accuracy, cost, and specific application requirements.

Table 4.

Comparison of different sensing technologies for posture recognition.

3.1. Pressure Sensor

Pressure sensors are critical components in applications ranging from industrial systems to medical devices. They measure applied pressure and convert it into an electrical signal that can be read by a computer or control system. Key specifications of pressure sensors include the pressure range (the minimum and maximum pressures the sensor can measure), accuracy, sensitivity, response time, operating temperature, durability, and material compatibility.

3.1.1. Pressure Sensor Types

The most widely used approach is a chair with integrated pressure sensors, owing to its non-intrusive and privacy-preserving nature, which may make users more receptive to it over time. The user is in direct contact with the sensors, creating a seamless interface. These sensors measure the distribution of pressure applied to a surface such as a chair; embedded in the seat and backrest, they detect the variations in pressure caused by different sitting postures. Studies [55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70] use pressure sensors to recognize different sitting postures.

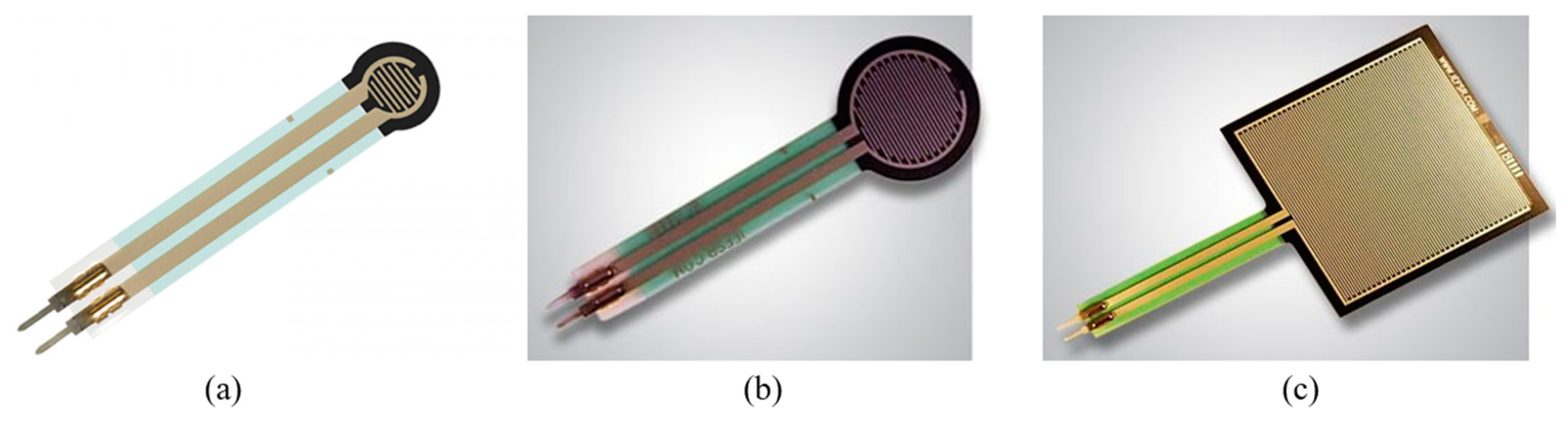

Force Sensing Resistors

Force-sensitive resistors (FSRs), also called force-resistive sensors, are electronic devices that detect and measure applied force or pressure through a change in their electrical resistance. They are constructed from materials whose electrical resistance varies when mechanical deformation is applied. The resistance of an FSR decreases as the applied force increases (approximately in inverse proportion), so the sensor provides a continuous, analog output signal that corresponds to the exerted force. This analog signal can be converted into digital values by analog-to-digital converters (ADCs) for further processing and analysis by microcontrollers or other electronic devices. FSRs are simple, low-cost, and flexible, but they are limited in accuracy and durability and are sensitive to environmental factors such as temperature and humidity. Figure 2 shows the two-wire FSR 400, FSR 402, and FSR 406 models from Interlink.
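To make the FSR read-out concrete, the sketch below assumes a common voltage-divider wiring (the FSR between the supply and the ADC pin, a fixed resistor between the ADC pin and ground) and a 10-bit ADC. The supply voltage, resistor value, and function names are assumptions, not values from any cited study. Because resistance falls as force rises, the sketch recovers the FSR resistance from the ADC count and also reports its conductance, which for many FSRs rises roughly in step with force and is therefore a convenient feature.

```python
VCC = 5.0         # supply voltage in volts (assumed)
R_FIXED = 10_000  # fixed divider resistor in ohms (assumed)
ADC_MAX = 1023    # full-scale count of a 10-bit ADC (assumed)


def adc_to_voltage(count: int) -> float:
    """Convert a raw ADC count to the divider output voltage."""
    return count * VCC / ADC_MAX


def fsr_resistance(count: int) -> float:
    """Invert Vout = VCC * R_FIXED / (R_FIXED + R_FSR) to recover R_FSR in ohms."""
    v_out = adc_to_voltage(count)
    if v_out <= 0.0:
        return float("inf")  # no measurable load: the FSR is effectively open
    return R_FIXED * (VCC - v_out) / v_out


def fsr_conductance(count: int) -> float:
    """Conductance 1/R_FSR in siemens; grows as the applied force grows."""
    r = fsr_resistance(count)
    if r == float("inf"):
        return 0.0
    if r == 0.0:
        return float("inf")  # ADC saturated: resistance too low to resolve
    return 1.0 / r


for count in (0, 200, 512, 900):
    print(f"ADC {count:4d}: R_FSR = {fsr_resistance(count):.0f} ohm")
```

The fixed resistor sets where the divider is most sensitive, so in practice it is usually chosen to match the force range of interest, such as the load of a seated person on a given seat location.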

Figure 2.

Interlink FSR models (a) FSR-400 (b) FSR-402 (c) FSR-406.

Some of the studies making use of FSR for posture recognition are shown in Table 5.

Table 5.

Studies using force-sensitive resistors for posture recognition.

Textile Pressure Sensor

A textile pressure sensor is embedded into cloth or other textile materials to detect and quantify the pressure or force applied to its surface. Such sensors commonly measure the change in an electrical property, such as resistance or capacitance, between conductive electrodes as applied pressure deforms the material between them. The support cloth on which the electrodes are constructed is made of insulating materials such as polyester, cotton, or polypropylene. Ma et al. [76] placed 12 such sensors on the seat and backrest of a chair and applied decision trees, SVM, multilayer perceptron, naïve Bayes, and k-NN algorithms to classify five sitting postures specific to wheelchairs. Decision trees achieved the highest accuracy, 99.51%. Nevertheless, the approach relied on a comparatively large number of sensors and focused solely on five wheelchair-specific postures. Meyer et al. [77] optimized a textile pressure sensor for low hysteresis and used it to classify sitting positions on chairs, achieving an average recognition rate of 82% with a naïve Bayes classifier on data from nine subjects. The 240-element textile sensor array was embedded in a cushion, as shown in Figure 3 (1: common electrode, 2: spacer, 3: sensing electrodes, and 4: electrodes switched to ground).
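Meyer et al. [77] used a naïve Bayes classifier. Purely as an illustration of that classifier family, the following is a minimal Gaussian naïve Bayes written in plain Python and trained on toy four-sensor readings. The feature layout, class labels, and numbers are hypothetical and do not reproduce the cited study’s features or data.

```python
import math
from collections import defaultdict


def fit(X, y):
    """Estimate a class prior and per-feature mean and variance for each label."""
    by_label = defaultdict(list)
    for features, label in zip(X, y):
        by_label[label].append(features)
    model = {}
    for label, rows in by_label.items():
        columns = list(zip(*rows))
        means = [sum(col) / len(col) for col in columns]
        # Variance floor keeps constant features from causing division by zero.
        variances = [
            max(sum((v - m) ** 2 for v in col) / len(col), 1e-6)
            for col, m in zip(columns, means)
        ]
        model[label] = (math.log(len(rows) / len(X)), means, variances)
    return model


def predict(model, x):
    """Return the label with the highest log posterior, assuming independent features."""
    def log_posterior(label):
        log_prior, means, variances = model[label]
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (xi - m) ** 2 / (2 * var)
            for xi, m, var in zip(x, means, variances)
        )
    return max(model, key=log_posterior)


# Toy readings from four seat sensors: front-left, front-right, back-left, back-right.
X = [
    [50, 50, 50, 50], [52, 48, 51, 49], [49, 51, 50, 50],  # upright
    [80, 20, 75, 25], [78, 22, 77, 23], [82, 18, 79, 21],  # leaning left
]
y = ["upright"] * 3 + ["lean_left"] * 3
model = fit(X, y)
print(predict(model, [51, 49, 50, 50]), predict(model, [79, 21, 76, 24]))
```

Working in log space avoids numerical underflow when the product of many per-sensor likelihoods is taken, which matters for large arrays such as the 240-element cushion.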

Figure 3.

Textile pressure sensor with 240 elements [77].

Load Cells

Load cells (LCs) are transducers that convert mechanical force into an electrical signal. They usually consist of a metal frame to which strain gauges are attached. When force is exerted, the load cell deforms slightly, stretching or compressing the strain gauges; this changes their electrical resistance and hence the output voltage in proportion to the applied force. Load cells are versatile and reliable and offer a wide measurement range and high accuracy, but they are expensive, require regular calibration, and are sensitive to environmental conditions. In posture recognition, load cells measure the distribution of body weight and the pressure exerted on different parts of a surface such as a seat plate. Roh et al. [78] used load cells (P0236-I42) on the seat plate to measure body weight distribution and classified postures with machine learning, achieving accuracies ranging from 96.31% to 97.94%. The researchers in [79] placed three LCs over a cushion and classified eight distinct postures with an overall accuracy of 98.50%.
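Two computations typically sit between raw load-cell readings and posture features: a linear calibration that maps raw counts to force, and a force-weighted average that maps per-cell forces to the body’s center of pressure on the seat plate. The sketch below assumes four load cells at the corners of a seat plate and a two-point calibration; the geometry, calibration values, and function names are illustrative and are not those of [78] or [79].

```python
from typing import Callable


def two_point_calibration(raw_zero: float, raw_ref: float, ref_force: float) -> Callable[[float], float]:
    """Build a linear raw-count-to-newton mapping from an unloaded reading
    and a reading taken under a known reference force."""
    scale = ref_force / (raw_ref - raw_zero)
    return lambda raw: (raw - raw_zero) * scale


def center_of_pressure(forces, positions):
    """Return the total load and the force-weighted mean position (x, y)."""
    total = sum(forces)
    if total <= 0:
        raise ValueError("no load detected")
    x = sum(f * px for f, (px, _) in zip(forces, positions)) / total
    y = sum(f * py for f, (_, py) in zip(forces, positions)) / total
    return total, (x, y)


# Hypothetical cell positions in metres: front-left, front-right, back-left, back-right.
CORNERS = [(-0.2, 0.2), (0.2, 0.2), (-0.2, -0.2), (0.2, -0.2)]

to_newtons = two_point_calibration(raw_zero=1000, raw_ref=3000, ref_force=100.0)
forces = [to_newtons(raw) for raw in (7000, 3000, 7000, 3000)]  # more load on the left cells
total, (x, y) = center_of_pressure(forces, CORNERS)
print(f"total = {total:.1f} N, CoP = ({x:.3f}, {y:.3f}) m")
```

A shift of the center of pressure toward one side, as in this toy example, is the kind of signal that distinguishes a lateral lean from upright sitting; repeating the calibration periodically addresses the drift noted above.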

3.1.2. Sensor Arrangements and Placement

Two main types of sensor arrangements are used to detect variations in pressure: symmetrical and asymmetrical. Sensor arrays are an example of the symmetrical arrangement, whereas the asymmetrical approach uses a discrete number of sensors placed where the designer chooses.

The discrete-sensor approach is the most popular for posture recognition owing to its simplicity, low cost, easy installation, and flexibility in placement. Studies have used varying numbers of sensors, from a minimal setup with a single sensor to more complex arrangements with as many as 13. Most researchers used 4 [58,60,71,78,79], 6 [62,75,80,81,82], or 9 [69,74,83,84] sensors. Other configurations include 1 [85], 3 [56], 5 [58], 7 [61], 8 [61,86], and 13 [73].

A sensor array is a collection of individual pressure sensors arranged in a grid or matrix that measures the distribution of pressure over a surface, providing detailed information about force distribution and contact patterns. Because it covers the seating surface, an array provides a holistic view of the sitting posture and captures detailed spatial data that offer insight into posture dynamics. The most popular array configurations are 44 × 52 [55,70,87] and 32 × 32; other configurations include 11 × 13 [65], 3 × 3 [74,83], and 4 × 4 [64]. These arrays provide detailed pressure maps that enable precise posture recognition and analysis. They are non-intrusive and can easily be embedded within seating surfaces without interfering with user comfort or normal sitting behavior. However, installing and maintaining a sensor array is complex, and deployment costs are high.
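Some of the listed resolutions are directly related: a 44 × 52 map averaged over 4 × 4 blocks yields an 11 × 13 map. Block averaging of this kind is one simple way to reduce input size or to compare recognition performance at different resolutions. The plain-Python sketch below illustrates it under the assumption that the map is stored as 44 rows by 52 columns; it is not a preprocessing step reported by the cited studies.

```python
def block_average(grid: list[list[float]], fy: int, fx: int) -> list[list[float]]:
    """Average non-overlapping fy x fx blocks of a 2D pressure map."""
    rows, cols = len(grid), len(grid[0])
    if rows % fy or cols % fx:
        raise ValueError("map size must be divisible by the block size")
    out = []
    for r in range(0, rows, fy):
        out_row = []
        for c in range(0, cols, fx):
            block = [grid[i][j] for i in range(r, r + fy) for j in range(c, c + fx)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# A uniform 44 x 52 map (hypothetical values) reduced with 4 x 4 blocks.
full = [[1.0] * 52 for _ in range(44)]
coarse = block_average(full, 4, 4)
print(len(coarse), "x", len(coarse[0]))
```

Averaging preserves the mean pressure in each region, so total load is kept while fine detail is discarded, which mirrors the trade-off between array resolution and the number of distinguishable postures discussed later in this section.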

Sensor placement is crucial in pressure-based posture recognition because it directly affects the accuracy and reliability of the system. Almost all approaches using pressure sensor arrays place the sensors on the seat; the exception is [68], which also placed an array of the same size on the backrest. Similarly, most researchers using discrete sensors placed them in the seat cushion, whereas [61,62,80] also placed sensors on the backrest. Ref. [56] placed an ultrasonic sensor on the backrest in addition to a pressure sensor array on the seat, and Refs. [61,80] also placed a sensor on the armrest to obtain more information. Ref. [88] used a micro-sized LiDAR sensor, consisting of an infrared laser and a CMOS image sensor, to obtain a 3D point cloud with x, y, and z coordinates in real time. It scans the person’s sitting posture and represents it as a point cloud, preserving privacy because no identifiable information is captured.

3.1.3. Number of Postures

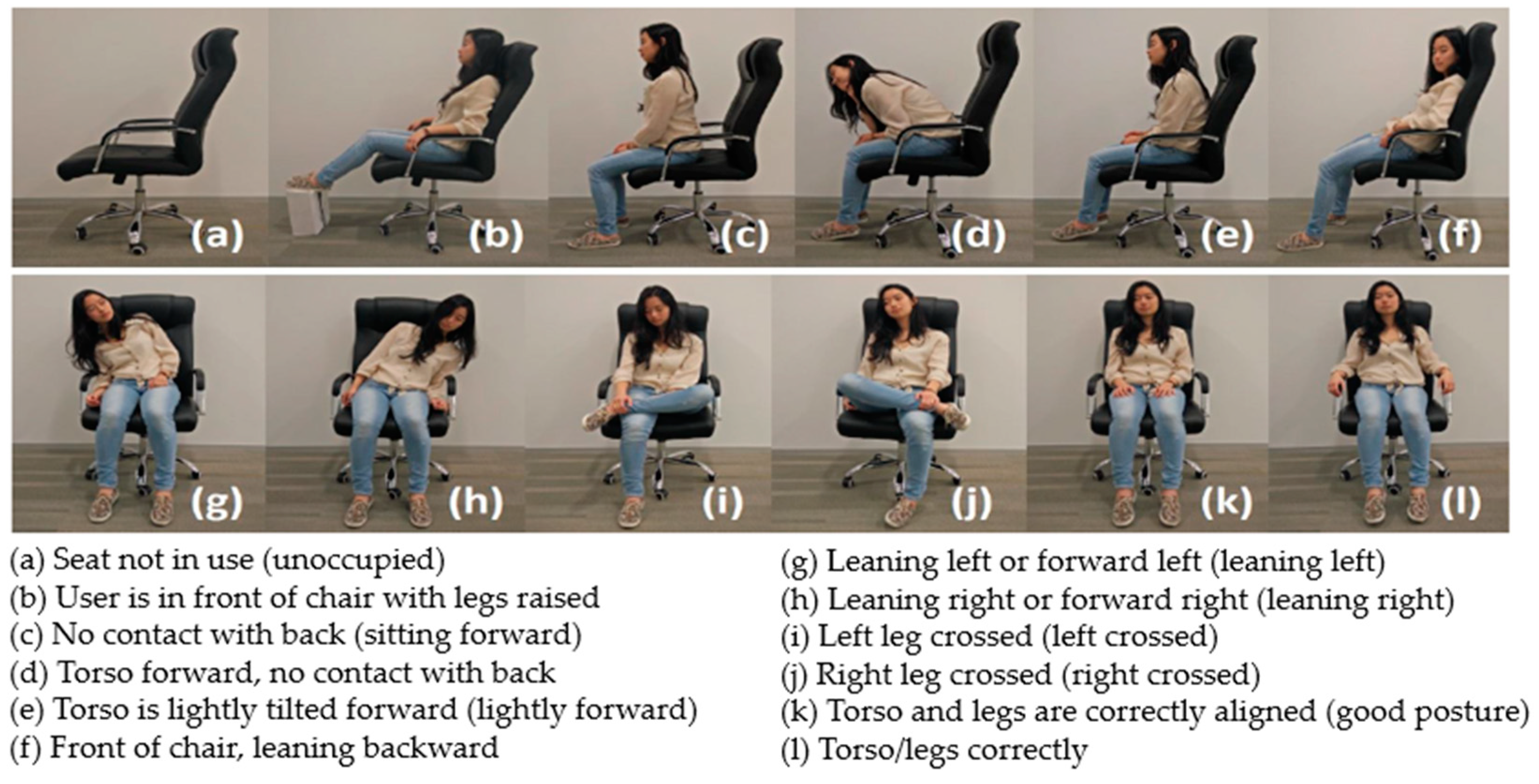

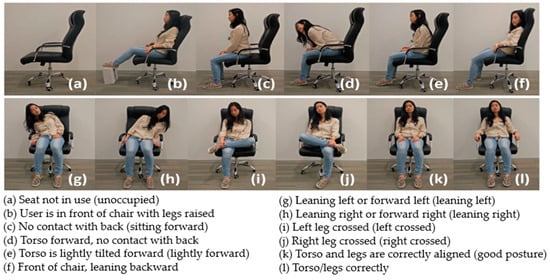

Sitting postures vary widely, particularly in the context of ergonomics, health, and activity. Some common sitting postures are depicted in Figure 4.

Figure 4.

Different sitting postures [61].

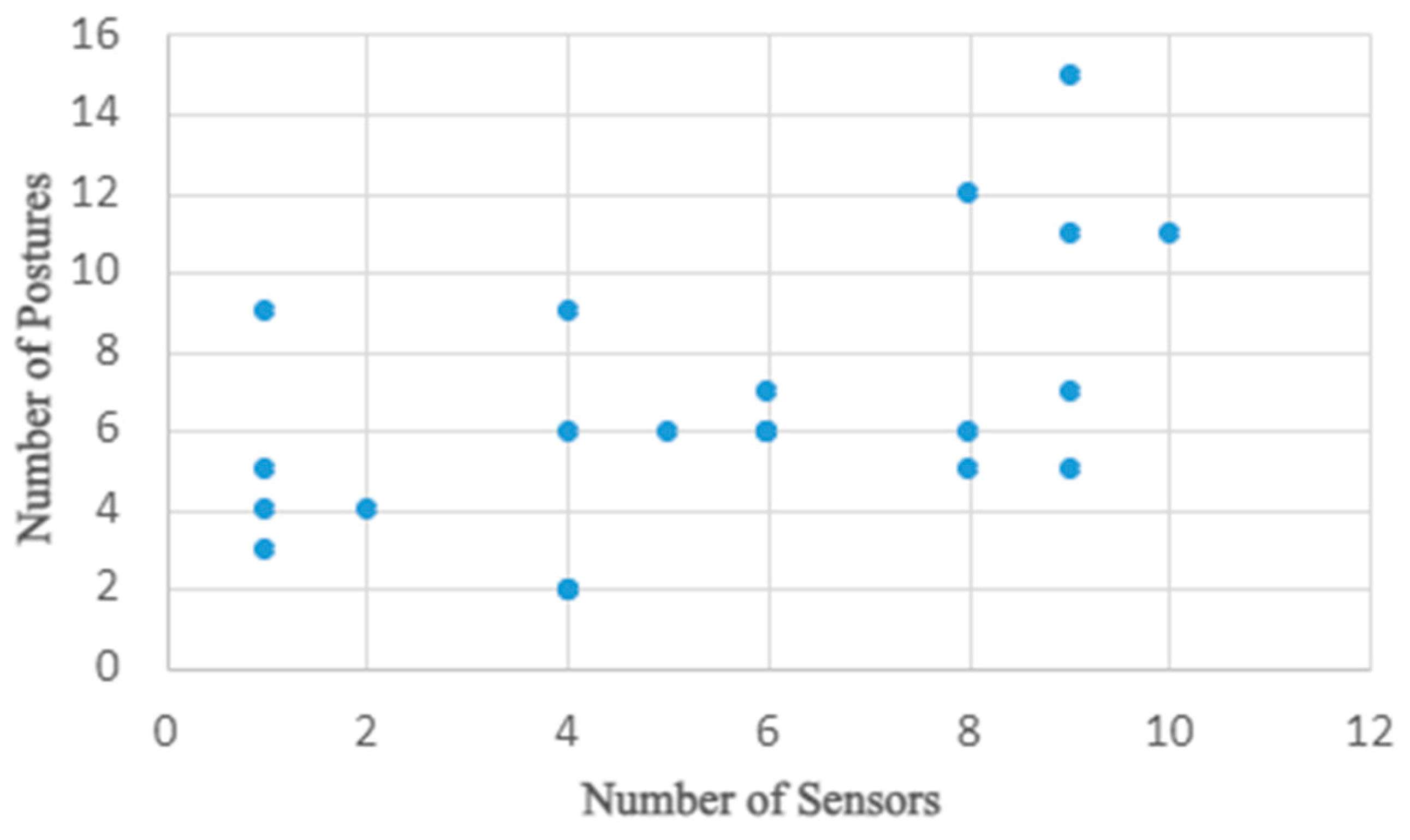

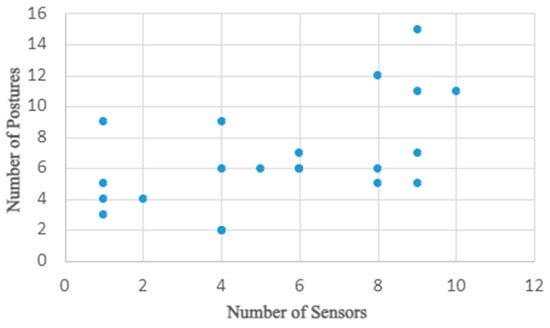

The number of postures recognized by sensor-based approaches varies from 4 to 15: 4 [56,64,67,71], 5 [68,70], 6 [58,62,80,87,89,90,91], 7 [65,75], 8 [66,72], 9 [88], 10 [87], 11 [83], 12 [61], and 15 [92], as depicted in Figure 5. The figure reveals no straightforward relationship in which more sensors consistently yield more recognized postures: some studies with fewer sensors successfully recognized many postures, while others with more sensors identified fewer. The effectiveness of posture recognition often depends more on factors such as the strategic positioning of sensors, the quality of the collected data, and the sophistication of the data processing and machine learning algorithms. For example, a well-placed set of a few high-quality sensors can sometimes outperform a larger array of less optimally placed sensors.

Figure 5.

Number of sensors used, and postures recognized.

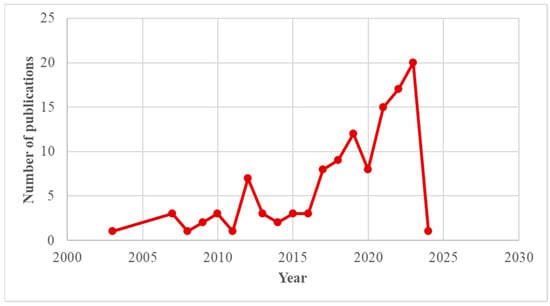

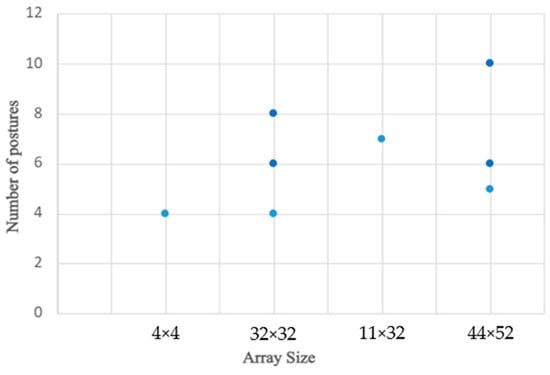

In the case of array configurations, as shown in Figure 6, higher resolution in pressure sensor arrays significantly enhances the ability to recognize a greater number of postures. Higher-resolution arrays consist of more sensors per unit area, providing finer granularity in the pressure data. This increased detail allows for more precise detection and differentiation of subtle changes in pressure distribution, which is crucial for accurately identifying various postures.

Figure 6.

Resolution of sensor array vs. postures recognized.

3.1.4. Hardware Platform Used

A typical sitting posture recognition system collects data through sensors and then applies different algorithms to extract features and recognize the posture. Most systems use microcontrollers to acquire data from the sensors. Popular choices of microcontrollers and boards for data collection include the Arduino Mega [60,64,71,81,93,94], STM32 [59,75,90], Atmel ATmega328 [61,62,83], ESP32 [74], and Odroid N2+ [95]. The machine learning algorithms are either executed on resource-constrained computing devices such as the Raspberry Pi 4 [65,88,96] or run on laptops to which the data are fed [80,91,97].
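A common split in such systems is that the microcontroller only samples the sensors and streams raw readings, while the host (a Raspberry Pi or laptop) parses and classifies them. The sketch below assumes a hypothetical line-oriented format with one comma-separated line of 10-bit ADC counts per sample, as an Arduino program might print over a serial link. Reading the serial port itself is omitted, and the function names are illustrative.

```python
from typing import Iterable, Iterator


def parse_frame(line: str, n_sensors: int, adc_max: int = 1023) -> list[int]:
    """Parse one comma-separated line of raw ADC counts, validating length and range."""
    parts = line.strip().split(",")
    if len(parts) != n_sensors:
        raise ValueError(f"expected {n_sensors} values, got {len(parts)}")
    values = [int(p) for p in parts]  # int() raises ValueError on non-numeric fields
    if any(v < 0 or v > adc_max for v in values):
        raise ValueError("reading outside ADC range")
    return values


def parse_stream(lines: Iterable[str], n_sensors: int) -> Iterator[list[int]]:
    """Yield valid frames, skipping lines truncated or corrupted in transmission."""
    for line in lines:
        try:
            yield parse_frame(line, n_sensors)
        except ValueError:
            continue


# Simulated serial output: one good frame, one truncated, one corrupted, one out of range, one good.
lines = ["512,0,1023,7\n", "512,0,10", "5x2,0,1,2", "1100,0,0,0", "1,2,3,4\n"]
print(list(parse_stream(lines, 4)))
```

Dropping malformed frames rather than repairing them keeps the host simple; at the sampling rates typical for posture monitoring, losing an occasional frame has little effect on classification.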

3.2. Strain Sensors

Strain sensors, also known as strain gauges, measure the amount of deformation (strain) in an object. When an external force deforms an object, a strain sensor detects this deformation and converts it into an electrical signal. Strain sensors typically consist of a thin, flexible grid, often made of metal foil or a semiconductor material, attached to a backing material. Embedded in seat cushions or backrests, they measure the distribution of pressure and the deformation of the seat as a person sits, and the collected data can be used to detect different sitting postures based on how pressure is distributed across the seat.

3.2.1. Flex Sensor

Flex sensors are an attractive choice for sitting posture recognition because they detect bending in the materials they are attached to. They are typically constructed by printing a conductive material onto a flexible substrate such as plastic, and this conductive layer forms a resistive element. When the sensor is bent, the conductive layer stretches and its resistance changes; the further the sensor is bent, the larger the change in resistance. Flex sensors are normally embedded in the seat or backrest to monitor how these parts bend as a person sits. Because they are designed to survive frequent bending, they are robust enough for long-term use in settings where posture must be monitored continuously, such as office chairs or ergonomic evaluations. They are also relatively inexpensive compared with sensors such as strain gauges or depth cameras, and they consume less power. However, these sensors have a limited measurement range and a nonlinear output, which complicates data processing; they also have limited precision and are susceptible to wear and tear. Hu [80] proposed a system with six flex sensors installed on a chair that classified seven postures using an ANN, achieving 97.78% accuracy with a floating-point implementation and 97.43% with a 9-bit fixed-point implementation.
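Hu [80] reports only a small accuracy drop when moving from a floating-point to a 9-bit fixed-point ANN. The sketch below shows what such quantization involves for a single neuron’s weighted sum. The split of the 9 bits into a sign bit, 2 integer bits, and 6 fractional bits is an assumption for illustration, as is the use of a wider integer accumulator; it is not Hu’s actual number format.

```python
TOTAL_BITS = 9  # signed two's-complement word length (assumed)
FRAC_BITS = 6   # fractional bits, leaving 2 integer bits plus the sign bit (assumed)
Q_MIN = -(1 << (TOTAL_BITS - 1))     # -256, i.e. -4.0
Q_MAX = (1 << (TOTAL_BITS - 1)) - 1  # 255, i.e. 3.984375


def quantize(x: float) -> int:
    """Round to the nearest multiple of 2**-FRAC_BITS and saturate to 9 bits."""
    q = round(x * (1 << FRAC_BITS))  # Python's round() sends exact ties to even
    return max(Q_MIN, min(Q_MAX, q))


def dequantize(q: int) -> float:
    """Map a fixed-point integer back to its real value."""
    return q / (1 << FRAC_BITS)


def fixed_dot(weights, inputs) -> float:
    """Weighted sum on integers; each product carries 2 * FRAC_BITS fractional bits."""
    acc = sum(quantize(w) * quantize(x) for w, x in zip(weights, inputs))
    return acc / (1 << (2 * FRAC_BITS))


w = [0.3, -0.75, 1.2]  # hypothetical neuron weights
x = [0.7, 0.4, 0.9]    # hypothetical normalized flex-sensor inputs
float_sum = sum(a * b for a, b in zip(w, x))
print(f"float: {float_sum:.5f}  fixed: {fixed_dot(w, x):.5f}")
```

The rounding error per value is at most half a step (2**-7 here), so small weighted sums stay close to their floating-point values, which is consistent with the small accuracy gap reported; values outside the representable range saturate instead of wrapping around.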

3.2.2. Strain Gauge

A strain gauge measures the strain (deformation) of an object. It typically consists of a thin, flexible substrate carrying a metallic foil arranged in a grid pattern that deforms along with the substrate. When the object deforms under external forces, the strain gauge converts this deformation into an electrical signal for measurement and analysis. Strain gauges are highly sensitive and can detect very subtle changes in posture, making them well suited to applications requiring detailed posture analysis; they also offer precise measurements of deformation that can be directly correlated with specific posture changes. However, strain gauges require more careful placement and calibration than FSRs, making them more complex to integrate into a chair or seating system. They are also more sensitive to environmental changes such as temperature, which may necessitate additional compensation, and they tend to be more expensive, especially when high precision and durability are required.
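The resistance change of a strain gauge is tiny, which is why gauges are normally read through a Wheatstone bridge. The relation is ΔR/R = GF · ε, where GF is the gauge factor and ε the strain. With one active gauge in a quarter bridge and three fixed resistors of the same nominal value, the output is V_out = V_ex · k / (4 + 2k) with k = GF · ε, which is approximately V_ex · GF · ε / 4 for small strain (the sign depends on which bridge arm holds the gauge). The sketch below evaluates this relation. The 350 Ω nominal resistance follows the BF350 designation, while the gauge factor of 2.0 and the 5 V excitation are typical values assumed here, not figures from [98].

```python
R_NOMINAL = 350.0   # ohms; the "350" in BF350 denotes the nominal resistance
GAUGE_FACTOR = 2.0  # typical for metal-foil gauges (assumed)
V_EXCITATION = 5.0  # bridge excitation voltage in volts (assumed)


def resistance_change(strain: float) -> float:
    """Delta R = R * GF * strain, in ohms."""
    return R_NOMINAL * GAUGE_FACTOR * strain


def quarter_bridge_output(strain: float) -> float:
    """Exact quarter-bridge output in volts with one active gauge and three
    fixed resistors equal to R_NOMINAL."""
    k = GAUGE_FACTOR * strain  # relative resistance change dR/R
    return V_EXCITATION * k / (4.0 + 2.0 * k)


for micro_strain in (100, 500, 1000):
    eps = micro_strain * 1e-6
    print(f"{micro_strain} microstrain: dR = {resistance_change(eps):.3f} ohm, "
          f"Vout = {quarter_bridge_output(eps) * 1e3:.3f} mV")
```

Even at 1000 microstrain the output is only a few millivolts, which is why the bridge signal is usually amplified before digitization and why temperature compensation matters.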

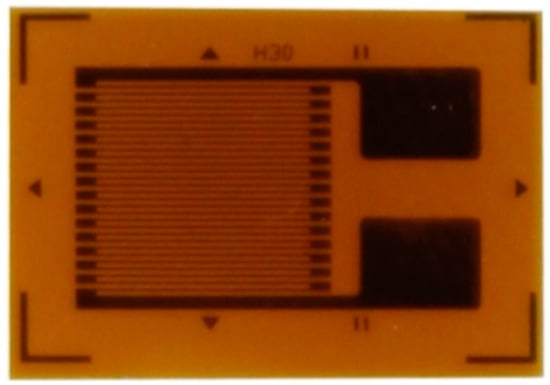

Liang et al. [98] distributed eight BF350 high-precision resistance strain gauges (shown in Figure 7) across the seat cushion to collect detailed pressure data from the buttocks, and identified eight distinct sitting postures with a convolutional neural network. Qian et al. [85] attached a strain gauge built from inverse piezoresistive nanocomposite sensors to the user’s back to collect sitting posture data; a three-layer backpropagation (BP) neural network then distinguished four sitting postures with an identification accuracy of 98.75%.

Figure 7.

BF350 high-precision resistance strain gauges.
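The conversion from a gauge's resistance change to strain follows the gauge-factor relation ΔR/R = GF·ε. The sketch below applies it directly and also shows the small-strain approximation for a quarter-bridge circuit, a common way to read a single gauge. It assumes a 350 Ω nominal resistance, as the BF350 designation suggests, and a gauge factor of 2.0; both, and the function names, are illustrative assumptions rather than details from the cited studies.

```python
def strain_from_resistance(r_measured: float, r_nominal: float = 350.0,
                           gauge_factor: float = 2.0) -> float:
    """Strain from the gauge relation dR / R = GF * strain."""
    return (r_measured - r_nominal) / r_nominal / gauge_factor


def strain_from_quarter_bridge(v_out: float, v_excitation: float,
                               gauge_factor: float = 2.0) -> float:
    """Strain from a quarter-bridge output using the small-strain approximation
    v_out / v_excitation ~= GF * strain / 4. The sign of v_out depends on which
    bridge arm holds the gauge; the approximation degrades for large strains."""
    return 4.0 * v_out / (v_excitation * gauge_factor)


# A 0.7-ohm increase on a 350-ohm gauge corresponds to roughly 1000 microstrain.
print(f"{strain_from_resistance(350.7) * 1e6:.0f} microstrain")
```

The quarter-bridge form matters in practice because the resistance changes are tiny (well under 1 Ω here), so they are measured as small bridge voltages rather than directly.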

3.3. Inertial Measurement Unit

Inertial Measurement Units (IMUs) are electronic devices that use a combination of accelerometers, gyroscopes, and sometimes magnetometers to measure and report a body’s specific force, angular rate, and, in some cases, the surrounding magnetic field. They are commonly used to track the orientation and movement of an object in space without relying on external references, which makes them a powerful tool for sitting posture recognition. The raw IMU data (acceleration, angular velocity, and sometimes magnetic orientation) are processed by algorithms to interpret the posture. IMUs can be embedded in wearables such as belts, vests, or posture-correcting braces, or integrated into chairs to monitor how the user sits over time. Because they are versatile, highly sensitive, compact, and portable, they are a good choice for posture recognition applications. However, IMUs suffer from drift and relatively high power consumption, and their raw data are complex and require significant processing to extract meaningful posture information.
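To illustrate how the drift mentioned above is commonly handled, the sketch below fuses accelerometer tilt with integrated gyroscope rate using a complementary filter, a standard lightweight alternative to Kalman filtering. It is not drawn from any cited study; the axis convention (pitch derived from the X axis), the 0.98 blending weight, and the 100 Hz sample rate are assumptions.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch angle (degrees) implied by the gravity vector; valid when the sensor is near-static."""
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> list:
    """Fuse accelerometer tilt with integrated gyroscope rate to limit drift.

    samples: iterable of (ax, ay, az, pitch_rate_deg_s) tuples.
    The gyroscope term follows fast movements; the small (1 - alpha) accelerometer
    term continuously pulls the estimate back, so gyroscope bias cannot accumulate.
    """
    estimates = []
    pitch = None
    for ax, ay, az, rate in samples:
        accel_pitch = accel_pitch_deg(ax, ay, az)
        if pitch is None:
            pitch = accel_pitch  # initialise from gravity
        else:
            pitch = alpha * (pitch + rate * dt) + (1.0 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


# Sensor held at a 30-degree lean while the gyroscope reports a spurious 1 deg/s bias.
lean = math.radians(30.0)
static = [(math.sin(lean), 0.0, math.cos(lean), 1.0)] * 2000
fused = complementary_filter(static, dt=0.01)
gyro_only = 30.0 + 1.0 * 0.01 * (len(static) - 1)  # pure integration keeps drifting
print(round(fused[-1], 2), round(gyro_only, 2))
```

With these settings the fused estimate settles about half a degree from the true 30°, whereas pure gyroscope integration drifts by almost 20° over the same 20 s.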

3.3.1. Accelerometers

Accelerometers measure acceleration and can be used to detect changes in orientation and movement. Placed strategically on a chair or worn on the body, accelerometers can identify different sitting positions. Otoda et al. [99] used eight accelerometers, six on the seat and two on the backrest, to measure variations in inclination angles along the X, Y, and Z axes. These measurements formed the feature vector for training a random forest (RF) model to classify nineteen postures. Based on data from 20 subjects, the mean accuracy of the model was only 80.1%, possibly because of the large number of postures assessed. Moreover, these sensors are only usable if the chair tilts or deforms when the user moves. Gupta et al. [100] placed three triaxial accelerometers on the user’s back and detected posture with unsupervised algorithms. The data from each sensor were converted into feature vectors and used to train several algorithms, including one-class SVM (OCSVM), Isolation Forest, and K-means, of which K-means achieved the highest accuracy of 99.3%. Ref. [95] used commercial MPU-6050 inertial sensors mounted at various body positions to collect accelerometer and gyroscope data and recognize four postures. Three machine learning classifiers were assessed, reaching an overall accuracy of around 98.7% for real-time posture classification, and the random forest model performed best in terms of precision, recall, accuracy, and F1-score.
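The sketch below shows how a static accelerometer reading yields inclination angles for the X, Y, and Z axes, and how the angles from several sensors (eight here, mirroring the count used by Otoda et al. [99]) could be concatenated into one feature vector for a classifier such as RF. The angle-to-gravity definition, the function names, and the sample readings are assumptions; the cited study's exact feature computation is not reproduced here.

```python
import math


def inclination_angles_deg(ax: float, ay: float, az: float) -> tuple:
    """Angles (degrees) between each sensor axis and the measured gravity vector."""
    g = math.sqrt(ax * ax + ay * ay + az * az)
    if g == 0.0:
        raise ValueError("zero acceleration vector")
    # Clamp to [-1, 1] so rounding noise cannot push acos out of its domain.
    return tuple(math.degrees(math.acos(max(-1.0, min(1.0, a / g)))) for a in (ax, ay, az))


def posture_feature_vector(readings) -> list:
    """Concatenate per-sensor X/Y/Z inclination angles into one feature vector."""
    features = []
    for ax, ay, az in readings:
        features.extend(inclination_angles_deg(ax, ay, az))
    return features


# Hypothetical static readings (in g) from eight chair-mounted accelerometers.
readings = [(0.0, 0.0, 1.0)] * 6 + [(0.0, 0.26, 0.97)] * 2
vector = posture_feature_vector(readings)
print(len(vector))
```

Because gravity is the only acceleration a motionless chair part experiences, these angles change only when the seat or backrest actually tilts, which is the feasibility constraint noted above.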

3.3.2. Inertial Sensor

Ref. [101] attached a smartphone to the upper rear trunk and used its inertial sensors (accelerometer, gyroscope, and magnetometer) to track body movement precisely and recognize postures. Inertial sensors provide precise measurements of motion, orientation, and magnetic fields, and this high-resolution data enables detailed tracking of body movements and postures, improving the accuracy of behavior recognition algorithms. Tang et al. [84] used wearable devices with integrated IMUs: four 9-axis IMUs were placed at uniform intervals along the thoracic and lumbar regions to capture kinematic data during static and dynamic movements, and an LSTM-RNN was used to classify seven static sitting postures. The study in [96] embedded two inertial sensors in a belt to gather posture data, addressing the limited portability of existing solutions. The sensors were placed in the middle of the back and at the top of the right shoulder to provide more accurate posture monitoring.
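Sequence models such as the LSTM-RNN used by Tang et al. [84] typically consume fixed-length windows cut from the continuous IMU stream. The sketch below shows this segmentation step for four 9-axis IMUs (36 channels per time step). The window length, stride, and function name are hypothetical choices, not parameters reported in the cited work.

```python
from typing import List, Sequence


def sliding_windows(stream: Sequence[Sequence[float]], window: int,
                    stride: int) -> List[List[List[float]]]:
    """Cut a (time x channels) IMU stream into overlapping fixed-length windows.

    Each window is a (window x channels) block, the per-sample shape an LSTM expects.
    Trailing samples that do not fill a complete window are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [
        [list(frame) for frame in stream[start:start + window]]
        for start in range(0, len(stream) - window + 1, stride)
    ]


# Four 9-axis IMUs -> 36 channels per time step; 10 time steps of dummy data.
stream = [[float(t)] * 36 for t in range(10)]
windows = sliding_windows(stream, window=4, stride=2)
print(len(windows), len(windows[0]), len(windows[0][0]))
```

A stride smaller than the window produces overlapping windows, which yields more training samples from the same recording at the cost of correlated examples.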

3.4. Non-Contact Technology

3.4.1. RFID

Radio Frequency Identification (RFID) technology offers a distinctive approach to posture monitoring, particularly in environments where non-intrusive and seamless integration is desired. An RFID system uses small, lightweight tags that can be attached to different parts of the body or embedded in clothing. These tags transmit data to RFID readers, which process this information to determine the position and movement of the tags. Although RFID is commonly used for tracking and identification, it can also be employed in innovative ways to monitor sitting posture: the system uses the movement, orientation, and presence of RFID tags attached to key body parts or objects (such as a chair) to infer posture. Li et al. [102] used commercial RFID technology to provide a more convenient and flexible posture monitoring solution. Unlike wearable sensor-based approaches, which require sensors to be attached to specific points on the body, they placed an RFID tag next to the user, as shown in Figure 8. Because the tag stays in a stable position beside the user, it is unaffected by factors such as breathing movements, which makes posture detection consistent and reliable. By analyzing the phase variations induced by different postures, the system achieves high-performance posture recognition. Feng et al. [103] identified seven common sitting postures by affixing three RFID tags to the user’s back. They exploited the relationship between tag phase changes and sitting postures, extracting features to identify postures with suitable machine learning algorithms. Yao et al. [104] used several passive RFID tags and analyzed the distribution of their Received Signal Strength Indicator (RSSI) values to recognize, in real time, the various postures individuals assumed.

Figure 8.

Posture recognition setup using RFID [102].
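Phase-based systems such as those of Li et al. [102] and Feng et al. [103] rely on the fact that a backscattered RFID signal travels to the tag and back, so its phase changes by 4π for every wavelength of radial motion. Readers report phase wrapped into [0, 2π), so consecutive readings must be unwrapped before they are compared. The sketch assumes a 920 MHz carrier (UHF RFID bands vary by region) and ignores constant reader and tag phase offsets, which cancel when differences are taken. It illustrates the underlying physics only and is not the feature pipeline of either cited work.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def unwrap_phase(phases):
    """Remove 2*pi jumps from a sequence of wrapped phase readings (radians)."""
    if not phases:
        return []
    unwrapped = [phases[0]]
    offset = 0.0
    for prev, curr in zip(phases, phases[1:]):
        delta = curr - prev
        if delta > math.pi:
            offset -= 2.0 * math.pi
        elif delta < -math.pi:
            offset += 2.0 * math.pi
        unwrapped.append(curr + offset)
    return unwrapped


def radial_displacement_m(delta_phase: float, carrier_hz: float = 920e6) -> float:
    """Tag displacement along the reader-tag line for a phase change.

    The signal covers the distance twice, so delta_d = wavelength * delta_phase / (4 * pi).
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return wavelength * delta_phase / (4.0 * math.pi)


readings = [5.9, 6.1, 0.1, 0.3]  # wrapped phases from a hypothetical tag on the back
smooth = unwrap_phase(readings)
print(round(radial_displacement_m(smooth[-1] - smooth[0]) * 1000, 1), "mm")
```

At this frequency, a full 2π phase cycle corresponds to only about 16 cm of radial motion, which is why phase is sensitive enough to distinguish small postural shifts.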

3.4.2. Ultrasonic Sensor

Ultrasonic sensors are used in sitting posture recognition systems to detect and measure distances by emitting ultrasonic waves and interpreting the reflections from nearby objects. These sensors are particularly valuable in applications where non-contact, real-time posture monitoring is desired. They can be placed strategically around a chair (as shown in Figure 9), for example, on the seat, backrest, or armrests. By continuously measuring the distance between the sensor and the user’s body (such as the back, shoulders, or head), the system can infer the user’s posture. They are relatively inexpensive, making them a cost-effective option for posture recognition systems, especially compared with more complex technologies such as cameras or IMUs. However, they are sensitive to environmental factors (such as temperature, air currents, and surface reflections), have limited precision, and require a line of sight.
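The distance measurement described above is a time-of-flight calculation: the pulse travels to the body and back, so the distance is half the echo time multiplied by the speed of sound. The speed of sound in air rises with temperature (about 331.3 + 0.606·T m/s, a common linear approximation), which is one concrete source of the temperature sensitivity mentioned above. The function names below are illustrative.

```python
def speed_of_sound_m_s(temp_c: float) -> float:
    """Approximate speed of sound in dry air (linear fit, valid near room temperature)."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Sensor-to-body distance from the round-trip echo time: d = c * t / 2."""
    return speed_of_sound_m_s(temp_c) * echo_time_s / 2.0


# The same 2 ms echo read at 10 C and at 30 C differs by about 12 mm at a ~34 cm range.
print(echo_distance_m(0.002), echo_distance_m(0.002, 10.0), echo_distance_m(0.002, 30.0))
```

Compensating with a cheap temperature sensor is a common way to remove this bias when millimetre-level differences matter.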

Niijima [105] used an ultrasonic sensor placed on a monitor and a wearable speaker to estimate sitting posture by measuring the distance between the computer screen and the user’s eyes, generating auditory feedback when poor posture was detected. Qu et al. [106] used ultrasonic sensors to detect various postures with an accuracy of 92.01%. They first extracted features and fed them into both static- and dynamic-oriented networks to classify five postures and four activities. Ma [107] used a circular microphone array together with a modified frequency-modulated continuous wave (FMCW) technique to interpret the acoustic signals and recognize different sitting postures. By comparison, LiDAR devices, another distance-sensing option, can be expensive, which may limit their widespread adoption in various environments. Traditional LiDAR devices are often bulky and not easily portable, which can restrict their use in dynamic or mobile settings. In addition, processing the large amounts of point cloud data generated by LiDAR devices is computationally intensive and requires efficient analysis algorithms.

Figure 9.

Example of an ultrasonic sensor-based posture recognition system [106].
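For reference, the conventional (unmodified) linear FMCW relation that acoustic ranging approaches such as Ma’s [107] build on is sketched below. A sawtooth chirp sweeping bandwidth B over duration T produces, for an echo from range R, a beat frequency f_b = (B/T)·(2R/c), so R = c·f_b·T/(2B). The modifications in [107] are not described here and are not reproduced; the 343 m/s speed of sound, the chirp parameters, and the function names are assumptions.

```python
def fmcw_range_m(beat_hz: float, bandwidth_hz: float, chirp_s: float,
                 wave_speed: float = 343.0) -> float:
    """Range for a linear sawtooth chirp: R = c * f_b * T / (2 * B)."""
    return wave_speed * beat_hz * chirp_s / (2.0 * bandwidth_hz)


def range_resolution_m(bandwidth_hz: float, wave_speed: float = 343.0) -> float:
    """Smallest separable range difference: c / (2 * B)."""
    return wave_speed / (2.0 * bandwidth_hz)


# Hypothetical acoustic chirp: 4 kHz sweep over 40 ms, 100 Hz beat tone.
print(fmcw_range_m(100.0, 4000.0, 0.04), range_resolution_m(4000.0))
```

The resolution formula shows why wider acoustic sweeps are attractive: with a 4 kHz sweep, two reflectors closer than about 4 cm in range cannot be separated.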

3.5. Sensor Fusion

Most researchers use a single type of sensor to detect posture, but a small number of studies combine several sensor types. This multi-sensor approach leverages the strengths of different sensors to improve the accuracy and reliability of posture recognition. For example, Zhang [55] combined pressure sensors with infrared sensors, and the two modalities complement each other, improving the accuracy of sitting posture recognition. The infrared array (IRA) sensor measures the spatial distribution of temperature to create a low-resolution infrared map, while the pressure array sensor simultaneously captures the pressure distribution associated with each sitting position. Similarly, Jeong and Park [108] placed 6 pressure sensors on the seat cushion and 6 IR sensors on the backrest and, using the K-nearest neighbors (KNN) algorithm, classified eleven postures with an accuracy of 92%. Ramakrishna et al. [56] combined pressure sensors with ultrasonic sensors to recognize the posture of a wheelchair user. The pressure sensors are placed on the seat and the ultrasonic sensor on the backrest: the former provide information about the pressure distribution, while the latter indicates how far the user is leaning. Cho et al. [54] placed 16 pressure sensors on the seat and 2 ultrasonic sensors at the neck support, achieving 96% accuracy in classifying fifteen sitting postures with LBCNet. Ramalingam [109] designed and developed a smartphone application that acquires data from gyroscope, pressure, and ultrasonic sensors to help people correct their posture.
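A simple way to combine modalities, in the spirit of the pressure-plus-infrared KNN setup of Jeong and Park [108], is feature-level fusion: the readings are concatenated into one vector, standardized so that features with different units weigh comparably, and classified by nearest neighbors. The sketch below uses two pressure and two IR values per sample for brevity (the cited work used six of each), and its data, labels, z-score scaling, and k = 3 are all illustrative assumptions.

```python
import math
from collections import Counter


def fuse(pressure, infrared):
    """Feature-level fusion: concatenate both sensor readings into one vector."""
    return list(pressure) + list(infrared)


def _zscore_params(rows):
    """Per-feature mean and standard deviation (a std of 0 is replaced by 1)."""
    columns = list(zip(*rows))
    means = [sum(col) / len(col) for col in columns]
    stds = [math.sqrt(sum((x - m) ** 2 for x in col) / len(col)) or 1.0
            for col, m in zip(columns, means)]
    return means, stds


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k nearest training vectors after z-score scaling,
    so that pressure and infrared features with different units weigh equally."""
    means, stds = _zscore_params(train_x)

    def scale(row):
        return [(x - m) / s for x, m, s in zip(row, means, stds)]

    q = scale(query)
    distances = sorted((math.dist(scale(x), q), y) for x, y in zip(train_x, train_y))
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


train_x = [
    fuse([10, 10], [30, 30]), fuse([11, 9], [31, 30]), fuse([10, 11], [30, 31]),  # upright
    fuse([18, 2], [26, 33]), fuse([17, 3], [27, 34]), fuse([19, 2], [26, 34]),    # leaning left
]
train_y = ["upright"] * 3 + ["lean_left"] * 3
print(knn_predict(train_x, train_y, fuse([10, 10], [30, 31])))
```

Without the scaling step, whichever sensor reports the largest raw numbers would dominate the distance and effectively silence the other modality.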

3.6. Image Sensors

Image sensors are devices that convert light into electrical signals to create images. They fall into two main categories: CCD (charge-coupled device) and CMOS (complementary metal-oxide-semiconductor) sensors. In CCD sensors, the electrical charges are transferred across the sensor and read out at one corner. Although CCD sensors are often more costly and power-intensive, they are renowned for their excellent image quality and low noise. CMOS sensors have an amplifier at each pixel and on-chip analog-to-digital conversion (often one ADC per column), which increases readout speed while reducing power consumption. CMOS sensors are widely used in consumer electronics because they are cost-effective and versatile. Image sensors can capture real-time images or video of a person’s sitting posture, which computer vision algorithms then analyze to detect and categorize sitting postures. Compared with other sensing approaches, image sensors offer several advantages for posture recognition:

- Non-intrusive monitoring.

- Detailed visual information allows a more comprehensive analysis of posture.

- Many frames are captured, which makes postures easier to identify.

- Video cameras enable real-time monitoring and recognition.

- Individuals do not need to wear any sensors or devices.

- They are more robust to varying environmental conditions.

3.6.1. Video Camera-Based Technologies

Both standard RGB cameras and depth cameras are frequently used in posture recognition. RGB cameras are more affordable and easier to use, whereas depth cameras measure the distance to objects in the scene, which can increase recognition accuracy. Other camera configurations used in sitting posture recognition include single-camera, multi-camera, static, dynamic, wearable, and infrared setups. Multi-camera and dynamic setups capture more frames, which increases the robustness of recognition but also the processing time. A single camera views the user from only one angle, which can make some postures difficult to determine.

Data are collected from video cameras, and various computer vision methods are applied to identify sitting positions. Infrared cameras are also used for posture recognition, especially in harsh conditions such as at night or in low-visibility weather. Computer vision methods are widely used in face recognition, object identification, and scene recognition, and their success also depends on the setting (indoor or outdoor), the processing mode (offline or real-time), and whether the camera is static or moving. A typical computer vision pipeline for posture identification consists of data collection, background subtraction, object detection, object tracking, feature extraction, and classification. Brulin et al. [110] proposed a computer vision-based home monitoring system for elderly people, in which change detection and object tracking are applied to extract the features of moving objects. Their fuzzy logic-based classifier achieved 74.27% accuracy on a four-class problem, and the approach is suited to emergency detection in home monitoring. Kulikajevas [111] proposed a hierarchical image composition method for eight-class posture identification from video. RGB-D frames are extracted from video sequences, a frame semantic method is applied to finalize the layers, and a hierarchical ANN takes the RGB-D frames as input and outputs a prediction tree. The method achieves 91.47% accuracy on video and remains reliable even when only 30% of the subject’s torso is visible to the camera.

Infrared and video camera solutions typically use the following procedure to identify sitting postures:

- Data are collected from video cameras, which may be static, moving, or multiple. Different sets of sitting postures should be collected and labeled as correct or incorrect.

- Frames are extracted, and image and data preprocessing techniques are applied to enhance the quality of the input data.

- Images can be used in their original color format or converted to RGB.

- A pose estimation model detects key points of the human body, such as joints and limbs.

- Object detection and tracking methods are applied to extract feature sets.

- A machine learning model, such as a deep learning, rule-based, or Bayesian network model, is trained to recognize postures from the extracted features.
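The feature-extraction and classification steps above can be sketched for 2D keypoints as produced by a pose estimation model. The first function computes the angle at any joint from three keypoints (for example, shoulder, elbow, wrist); the second measures how far the hip-to-shoulder segment leans from the image vertical. A single threshold rule stands in for the trained model of the last step. The keypoint format, function names, and the 15° threshold are hypothetical.

```python
import math


def joint_angle_deg(a, b, c) -> float:
    """Angle (degrees) at keypoint b formed by the 2D keypoints a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("coincident keypoints")
    cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))


def trunk_lean_deg(shoulder, hip) -> float:
    """Angle between the hip-to-shoulder segment and the image vertical.

    Image coordinates are assumed, so y grows downward.
    """
    dx = shoulder[0] - hip[0]
    dy = hip[1] - shoulder[1]  # positive when the shoulder is above the hip
    return math.degrees(math.atan2(abs(dx), dy))


def classify_trunk(shoulder, hip, threshold_deg: float = 15.0) -> str:
    """Hypothetical rule: a lean beyond threshold_deg counts as 'leaning'."""
    return "upright" if trunk_lean_deg(shoulder, hip) <= threshold_deg else "leaning"


print(classify_trunk((100, 100), (100, 300)), classify_trunk((200, 200), (100, 300)))
```

In a full system, several such angles would form the feature vector passed to a learned classifier rather than a fixed threshold.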

Techniques for real-time operation and feedback alerts can then be integrated into the model. Ref. [112] addressed exercise pose recognition from RGB video data, formulating the model on 2D joint coordinates and extracting 18 features from human body joints; it achieved 97.01% accuracy across several datasets. Camera-based sitting posture recognition is limited by camera position, weather conditions, and obstacles. Infrared cameras overcome some of these limitations, especially those related to weather. Park proposed human detection and posture estimation algorithms for infrared camera input: k-means clustering is applied to the IR frame, followed by morphological filtering to detect objects, and template matching and thresholding are then used for pose estimation. Kato used a video camera, an infrared camera, and a depth sensor to collect driver posture data. When an infrared camera was used, taking into account parameters such as wavelength, atmospheric attenuation, optical reflection, and optical transmission through glass increased identification accuracy. Driver pose identification has several limitations, and the proposed algorithm uses the following steps to determine the driver’s pose from infrared camera data:

- Extract the driver’s skin area using image-processing techniques.

- Extract the facial skin area using a labeling process.

- Locate candidate feature areas such as the nose, mouth, and ears, which have a lower temperature than other regions.

- Classify the driver’s face by its orientation, and extract features of the driver other than the face.
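The k-means step in Park’s infrared pipeline, described above, can be sketched as clustering pixel intensities into a cooler background group and a warmer, presumably human, group. The sketch below implements 1D k-means with k = 2 in plain Python; the frame values and function names are hypothetical, and the morphological filtering and template matching that follow in the original pipeline are omitted.

```python
def two_means_1d(values, iterations: int = 50):
    """1D k-means with k = 2; returns (cooler_centre, warmer_centre)."""
    c_cool, c_warm = float(min(values)), float(max(values))
    for _ in range(iterations):
        cool = [v for v in values if abs(v - c_cool) <= abs(v - c_warm)]
        warm = [v for v in values if abs(v - c_cool) > abs(v - c_warm)]
        new_cool = sum(cool) / len(cool) if cool else c_cool
        new_warm = sum(warm) / len(warm) if warm else c_warm
        if new_cool == c_cool and new_warm == c_warm:
            break  # assignments are stable
        c_cool, c_warm = new_cool, new_warm
    return c_cool, c_warm


def segment_warm_pixels(frame):
    """Binary mask (1 = warm pixel) for a 2D list of IR intensities."""
    flat = [p for row in frame for p in row]
    c_cool, c_warm = two_means_1d(flat)
    return [[1 if abs(p - c_warm) < abs(p - c_cool) else 0 for p in row] for row in frame]


ir_frame = [
    [20, 21, 20],
    [20, 35, 36],
    [21, 34, 20],
]
print(segment_warm_pixels(ir_frame))  # -> [[0, 0, 0], [0, 1, 1], [0, 1, 0]]
```

Initializing the two centres at the minimum and maximum intensities keeps the warmer cluster consistently labeled, which matters when the mask is passed to later stages.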

Studies such as [110,113,114,115,116,117,118,119,120,121,122] used image and video frames as input to recognize sitting posture; in these works, object detection, tracking, and feature extraction are used to build the input matrix for classification. Depth images are used alongside RGB images to enhance the understanding and analysis of visual data, and several sitting posture recognition works rely on depth images, such as [68,110,116,118,119,121,123,124,125,126,127,128], which collected data using smartphone cameras and static cameras. These experiments show that camera-based approaches provide a larger number of features, which increases recognition accuracy.

Infrared cameras have lower resolution and less color information than regular video cameras, which degrades object detection and feature quality during processing. In addition, infrared cameras are sensitive to environmental changes that affect their performance. They are also expensive, and high-resolution models have limited availability. Sitting posture estimation with infrared cameras becomes more complex when moving objects overlap. High-resolution and depth-sensing cameras are well suited to automated pose detection, and consistent, well-distributed lighting increases the robustness of the estimation.

3.6.2. Kinect Camera-Based Technologies

General video cameras are more versatile but may lack the specialized features needed for detailed body tracking and analysis. The Kinect is a depth-sensing camera system developed by Microsoft, primarily for gaming and interactive entertainment. Kinect cameras excel at depth sensing, body tracking, and real-time feedback, making them well suited to applications requiring accurate posture recognition and motion analysis. Depth sensing allows accurate 3D reconstruction of the scene, whereas general video cameras typically capture 2D images without depth information. Kinect cameras also have a wide field of view, allowing them to capture the complete body or multiple people within a scene. Kinect can be used in office environments to monitor employees’ sitting posture throughout the workday [129], as shown in Figure 10.

Figure 10.

Example of a Kinect sensor-based system for sitting posture recognition [129].

Yao [130] used joint information provided by the Kinect sensor to detect neck and torso angles. By tracking these angles from depth images over time, the author devised a method that determines whether a sitting posture is healthy with 100% accuracy. Shin proposed a Kinect-based sitting posture monitoring system that uses an IR depth camera to record the user’s sitting posture and alert the user to incorrect postures. Min et al. [131] used a Microsoft Kinect sensor to detect and monitor key skeletal points of the human body. Faster R-CNN is used for scene recognition to reliably identify objects and extract relevant information, and Gaussian-mixture behavioral clustering is then used for semantic analysis to understand the scene. By combining the relevant aspects of the scene with the features of the human skeleton, the system generates semantic features that distinguish between sitting postures. He [124] obtained the three-dimensional positions of joints using Kinect’s real-time skeleton tracking to monitor sitting posture and alert the user when bad posture is detected. Chin [132] used the Kinect sensor to detect sitting positions, which were then used to train posture recognition models such as a Support Vector Machine (SVM) and an artificial neural network (ANN) in MATLAB. The experimental results indicated that an SVM with a linear kernel outperformed the other models in terms of accuracy. Chen [133] proposed a deep learning-based sitting posture recognition system for students in classrooms that extracts sitting posture features.
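Angle-based checks such as Yao’s [130] can be sketched from 3D skeleton joints: the neck angle is the inclination of the shoulder-to-head segment from vertical, and the torso angle that of the spine-base-to-shoulder segment. The sketch assumes a camera coordinate system whose y axis points up; the joint dictionary keys and the 20° and 15° limits are hypothetical and are not the thresholds used in the cited work.

```python
import math


def inclination_from_vertical_deg(lower, upper, up=(0.0, 1.0, 0.0)) -> float:
    """Angle (degrees) between the lower->upper joint segment and the vertical axis.

    Assumes a camera coordinate system whose y axis points up and a unit-length `up`.
    """
    v = [u - l for u, l in zip(upper, lower)]
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("joints coincide")
    cos_t = sum(a * b for a, b in zip(v, up)) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))


def is_healthy(joints, neck_limit_deg=20.0, torso_limit_deg=15.0) -> bool:
    """Hypothetical rule: both neck and torso must stay within their limits."""
    neck = inclination_from_vertical_deg(joints["spine_shoulder"], joints["head"])
    torso = inclination_from_vertical_deg(joints["spine_base"], joints["spine_shoulder"])
    return neck <= neck_limit_deg and torso <= torso_limit_deg


upright = {"spine_base": (0.0, 0.0, 2.0), "spine_shoulder": (0.0, 0.5, 2.0), "head": (0.0, 0.8, 2.0)}
forward_head = dict(upright, head=(0.0, 0.8, 1.7))  # head pushed 0.3 m toward the camera
print(is_healthy(upright), is_healthy(forward_head))  # -> True False
```

Because the angles come from 3D positions, the check is independent of how far the user sits from the sensor, which a 2D image-based measure cannot guarantee.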

Yang [116] proposed a deep fusion neural network based on Visual Geometry Group (VGG), ResNet, and Vision Transformer networks. Images are captured with Kinect 2.0 and augmented through random flipping and another random transformation. The VGG network extracts shallow features, ResNet adds identity (shortcut) mappings to extract multi-dimensional features from the image, and the Vision Transformer uses the fused feature information to recognize six sitting postures with an accuracy of 98.3%. Sun [134] built a database of sitting postures by collecting body index maps with Kinect. They used the Structural Similarity Index (SSIM) to monitor posture changes and the Broad Learning System (BLS) to classify postures in real time, and they proposed a double-threshold cascade algorithm to detect exaggerated frames in videos. Experimental results showed that the algorithm identified eight sitting postures at 162 frames per second with an accuracy of 98.58%. Sun [126] captured real-time video of seated pupils with Kinect cameras and identified two sitting postures with a CNN, claiming that CNN-based feature learning offers strong anti-interference capability, good accuracy, and resistance to lighting and environmental influences. Some of the studies using the Kinect camera are listed in Table 6.

Table 6.

Kinect camera-based solutions.
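SSIM-based change monitoring, as used by Sun [134], compares consecutive frames and flags a change when their structural similarity drops. The sketch below computes SSIM over a single global window with the usual stabilizing constants C1 = (0.01L)² and C2 = (0.03L)²; standard SSIM instead averages over local windows, so this is a simplification. The 0.9 threshold and the function names are hypothetical, and the double-threshold cascade of [134] is not reproduced.

```python
def global_ssim(x, y, data_range: float = 255.0) -> float:
    """Structural similarity computed over a single global window.

    x and y are equal-length sequences of grayscale pixel values; L = data_range.
    """
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(x)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def posture_changed(prev_frame, curr_frame, threshold: float = 0.9) -> bool:
    """Flag a posture change when consecutive frames are structurally dissimilar."""
    return global_ssim(prev_frame, curr_frame) < threshold


frame_a = [10, 20, 30, 40] * 4   # flattened 4x4 body-index patch (hypothetical)
frame_b = [40, 30, 20, 10] * 4   # same intensities, rearranged
print(posture_changed(frame_a, frame_a), posture_changed(frame_a, frame_b))
```

Running the classifier only when such a check fires is one way to save computation between posture changes.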

4. Machine Learning in Posture Recognition

In posture identification, machine learning algorithms are used for classification, prediction, and clustering. ML algorithms are applied in many areas, from agriculture, finance, and engineering to space research. They can be broadly divided into two groups, probabilistic methods and rule-based methods, both of which are widely used in sitting posture recognition research. Deep learning-based sitting posture recognition algorithms have also been developed, achieving high accuracy and addressing privacy concerns. The algorithms reported in the literature are summarized in Table 7.

Min [131] used Faster R-CNN for feature extraction and then a Gaussian mixture behavioral clustering algorithm for scene understanding. This method is robust for multi-object detection and achieved an accuracy of 84.48%. Qian [85] trained a three-layer backpropagation neural network on sensor data to identify normal sitting, slight hunchback, and severe hunchback positions, and the method proved effective and robust, with an accuracy of 98.75%.

Sun [134] developed an identification method based on the Broad Learning System, which uses a flat network structure as an alternative to deep architectures. This makes the process simpler and faster than other neural networks, and the reported accuracy is 98.58% at 162 fps. Wang [135] proposed a spiking neural network method that combines logistic regression with a liquid state machine. Data collected from 19 participants were classified into 15 classes, such as upright, leaning right, leaning back, and sitting on the leading edge. Ye [89] applied a feedforward neural network to classify nine sitting postures from data collected in real time, obtaining 90.64% accuracy. Neural networks and deep learning classifiers are very successful in posture recognition, partly because they can exploit large datasets and partly because they adapt easily to different types of data during training.

Sinha [101] used inertial sensor data to classify five sitting positions on a chair: left, right, front, back, and straight movement. Naïve Bayes, Support Vector Machine, and k-nearest neighbors algorithms were trained, and SVM gave the highest accuracy of 99.9% after correlation-based feature selection. Vision-based posture recognition faces constraints such as limited visibility and occlusion between objects. Kulikajevas [111] proposed a deep recurrent hierarchical network to mitigate this common problem; the algorithm takes RGB-D frames as input, produces high-quality features from them, and achieves 91.47% accuracy at 10 fps.

Neural networks are frequently used for sitting posture recognition [67,87,116,119,125,128], both for feature extraction and for classification. Their main constraints are long training times and the risk of overfitting, but reported accuracies in most of these works exceed 90%. Studies such as [61,74,95,96,136,137,138] applied rule-based algorithms such as decision trees, random forests, and k-nearest neighbors. These algorithms offer low data dependency, short training and testing times, no black-box issues, and reliability; on the other hand, they struggle with complex or non-linear relationships in the data. Fuzzy logic-based position classification was applied by [110] and [62], since fuzzy logic can improve accuracy when the boundaries between positions are uncertain. The former reported a promising 99% accuracy, while the latter reported more than 80% accuracy, which is lower than most of the neural network and rule-based algorithms.
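To show how fuzzy logic handles uncertainty between positions, the sketch below assigns a trunk-lean angle graded memberships in three overlapping fuzzy sets and picks the strongest one. An angle of 15°, for instance, belongs partly to "upright" and partly to "slight lean" instead of being forced across a hard boundary. The sets, their breakpoints, and the function names are hypothetical and are not the rule bases of [110] or [62].

```python
def triangular(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership: 0 outside (a, c), rising to 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def lean_memberships(lean_deg: float) -> dict:
    """Degrees of membership of a trunk-lean angle in three overlapping fuzzy sets."""
    return {
        "upright": max(0.0, min(1.0, (20.0 - lean_deg) / 20.0)),      # 1 at 0 deg, 0 from 20 deg
        "slight_lean": triangular(lean_deg, 10.0, 22.5, 35.0),
        "strong_lean": max(0.0, min(1.0, (lean_deg - 25.0) / 15.0)),  # 0 up to 25 deg, 1 from 40 deg
    }


def classify_lean(lean_deg: float):
    """Pick the set with the highest membership and keep the graded memberships."""
    memberships = lean_memberships(lean_deg)
    return max(memberships, key=memberships.get), memberships


print(classify_lean(15.0))
```

Keeping the graded memberships, not just the winning label, lets later stages (such as feedback logic) react more gently to borderline postures.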

Table 7.

Machine learning algorithms in sitting posture recognition.

| Author | ML Algorithm | Accuracy |
|---|---|---|
| [116] | Very deep convolutional networks | 98.3% |
| [95] | Random forest | 98.7% |
| | Decision tree | 97.1% |
| | K-nearest neighbors | 94.3% |
| [139] | Graph convolutional network | 92.7% |
| [118] | Light Convolution Core (LCC) Convolutional Block Attention Module (CBAM) | 99.5% |
| [119] | Recurrent neural network | 88.0% |
| [120] | Small-scale convolutional neural network | 91.5% |
| [121] | µLBP + logistic regression | 62.4% |
| | µLBP + decision tree | 81.7% |
| | µLBP + naïve Bayes classifier | 61.6% |
| | µLBP + neural network | 87.3% |
| | µLBP + random forest | 92.6% |
| [87] | DCNN using 2 sensors | 73.4% |
| | One-branch TSPN using 2 sensors | 86.6% |
| | Four unimodal models using 2 sensors | 76.7% |
| | TSPN using 2 sensors | 90.6% |
| | TSPN using 2 sensors (no data augmentation) | |
| [96] | K-nearest neighbors | 97.91% |
| | Support vector machine | 98.56% |
| | Decision tree | 98.71% |
| | Random forest | 99.1% |
| | Naïve Bayes classifier | 89.53% |
| | Boosting | 99.01% |
| | K-means (unsupervised) | 57.79% |
| [136] | Random forest | 96.6% |
| | Logistic regression | 95.5% |
| | Decision tree | 94.3% |
| [61] | K-nearest neighbors | 95.0% |
| [74] | Support vector machine | 85.35% |
| | K-nearest neighbors | 90.3% |
| | Logistic regression | 79.61% |
| | Decision tree | 88.26% |
| | LightGBM | 95.41% |
| [137] | k-NN | 98.07% |
| | Random forest | 98.65% |
| | SVM (linear) | 95.96% |
| | Decision tree | 98.85% |
| | LightGBM | 99.03% |
| [62] | Rule-based fuzzy logic | >80% |
| [110] | Fuzzy logic | 99.0% |
| [90] | ISOM-based sitting posture recognition algorithm | 95.7% |
| [111] | Deep recurrent hierarchical network | 91.5% |
| [101] | Naïve Bayes | 91.98% |
| | SVM | 99.51% |
| | KNN | 99.7% |
| [122] | Linear SVM, Poly-SVM, RBF SVM | 98.6% |
| [131] | Faster R-CNN | 76.76% |
| [85] | Gradient descent | 96.63% |
| | Gradient descent with momentum | 97.67% |
| | Gradient descent with adaptive learning rate | 97.79% |
| | Resilient backpropagation | 98.29% |
| | Levenberg-Marquardt | 98.76% |
| [138] | FG1NN | 97.83% |
| | NN50 | 95.83% |
| | WG1NN | 98.0% |
| | WG20NN | 98.08% |
| | WG30NN | 98.25% |
| [67] | Spiking neural network | 88.52% |
| | Linear regression | >90% |

Table 8 summarizes the machine learning algorithms used in sensor-based posture recognition approaches. RF, DT, KNN, and SVM are the most widely used algorithms, although deep learning algorithms have increasingly replaced them in recent work.

Table 8.

ML algorithms used in sensor-based technology.

Several machine learning algorithms have been applied to video and image data for sitting posture classification. Table 9 shows the methodology, data volume, and accuracy of these methods. Brulin [110] proposed a fuzzy logic-based recognition method for high-level processing, achieving 99% accuracy on 21 videos. Sun [126] used a CNN with a 408-frame training set and achieved 82.86% accuracy, which is lower than the other proposed algorithms. Convolutional neural networks [84,120,126] and Support Vector Machines [72,122] are the most frequently applied methods for video data.

Table 9.

Algorithms used in video camera-based technologies.

Table 10 presents the algorithms used in Kinect camera-based technologies for analyzing sitting postures, listing the reference, the algorithm employed, the dataset size (in frames or videos), and the accuracy where available. The table illustrates the diversity of approaches to sitting posture recognition with Kinect cameras and highlights the importance of algorithm choice and dataset size in achieving high accuracy.

Table 10.

Algorithms in Kinect camera-based technologies.

5. Integration with IoT, Alerts, and Energy Consumption

5.1. Integration with IoT

The emergence of the Internet of Things (IoT) has enabled measurement systems designed specifically to prevent health issues and monitor conditions in smart homes and workplaces. Such IoT systems can help monitor individuals engaged in computer-based tasks and prevent the onset of common musculoskeletal disorders associated with prolonged incorrect sitting postures during working hours. Many studies have incorporated IoT connectivity into posture recognition systems. Muppavaram et al. [61] connected their system to the AWS cloud through an ESP8266 Wi-Fi module and used a RESTful API to exchange data between the controller and an Elastic Compute Cloud (EC2) instance. Data were stored in a MySQL database, from which reports were generated for end users and health practitioners. Mura et al. [71] sent the sensor data to a desktop computer running a Java-based posture identification algorithm, and each posture change was recorded in an online database for further analysis of seating habits. Hu et al. [82] sent pressure data gathered from sensors in an office to a mobile application over Bluetooth; the application analyzed sitting posture with a machine learning algorithm, transmitted the recorded posture data to a remote web server, and displayed visual alerts on the smartphone. Ishac et al. [83] connected their system to a mobile application over BLE, which in turn provided connectivity to the outside world via Wi-Fi or cellular data.
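As an illustration of the RESTful data exchange described above, the sketch below builds, but does not send, a JSON POST request carrying one posture event, using only the Python standard library. The endpoint URL, the payload fields, and the function name are hypothetical; none of the cited systems’ actual APIs are reproduced.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Optional


def build_posture_request(url: str, chair_id: str, posture: str, confidence: float,
                          timestamp: Optional[datetime] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request describing one posture event."""
    ts = timestamp or datetime.now(timezone.utc)
    payload = {
        "chair_id": chair_id,          # hypothetical device identifier
        "posture": posture,            # label produced by the on-device classifier
        "confidence": round(confidence, 3),
        "timestamp": ts.isoformat(),   # ISO 8601, UTC
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_posture_request("https://example.com/api/postures", "chair-01", "slouching", 0.87)
# Sending it would be: urllib.request.urlopen(req, timeout=5)
print(req.get_method(), req.full_url)
```

Sending compact, timestamped events rather than raw sensor streams keeps bandwidth low and lets the server build the long-term reports mentioned above.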

5.2. Feedback and Alerts

Sitting posture recognition systems often incorporate feedback mechanisms to help users maintain or correct their posture. The type of feedback can vary with the system’s design, intended application, and user preferences. Visual feedback provides information through cues the user can see, such as screens, indicators, or graphical interfaces. Auditory feedback uses sound cues, such as tones, spoken messages, or alarms, to notify the user of their posture status. Haptic feedback uses tactile sensations, typically vibrations or gentle pressure applied to the body, to alert the user. Delayed feedback provides an overview or summary of the user’s posture over a specific period rather than immediate alerts, and can be used for longer-term posture training or assessment. The choice of feedback type depends on the specific use case, user preferences, and desired outcomes, and combining multiple types can be particularly effective for posture correction and long-term habit formation. Examples of feedback used in the existing literature are given in Table 11.

Table 11. Types of feedback used in posture recognition systems.
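The feedback types described in Section 5.2 can be combined in a single controller. The following is a minimal sketch, assuming that an upstream classifier emits one posture label per time step; the label set, the class name `FeedbackController`, and the 30-second hold time are hypothetical. Immediate feedback is sent only after poor posture persists for `hold_s` seconds (so brief movements do not trigger alarms) and is sent once per episode on the configured visual, auditory, or haptic channels. The `summary` method provides delayed feedback as the share of samples spent in good posture.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

GOOD_POSTURES = {"upright"}  # hypothetical label set produced by a classifier


@dataclass
class FeedbackController:
    hold_s: float = 30.0                     # poor posture must persist this long
    channels: Tuple[str, ...] = ("visual",)  # e.g. "visual", "auditory", "haptic"
    bad_since: Optional[float] = None
    alerted: bool = False
    history: List[Tuple[float, str]] = field(default_factory=list)

    def update(self, t: float, posture: str) -> List[str]:
        """Return the feedback channels to trigger at time t (empty list if none)."""
        self.history.append((t, posture))
        if posture in GOOD_POSTURES:
            self.bad_since, self.alerted = None, False  # episode over, re-arm
            return []
        if self.bad_since is None:
            self.bad_since = t
        if not self.alerted and t - self.bad_since >= self.hold_s:
            self.alerted = True  # alert once per episode of poor posture
            return list(self.channels)
        return []

    def summary(self) -> float:
        """Delayed feedback: share of samples classified as good posture."""
        if not self.history:
            return 0.0
        good = sum(1 for _, p in self.history if p in GOOD_POSTURES)
        return good / len(self.history)


fc = FeedbackController(hold_s=2, channels=("visual", "haptic"))
for t, p in enumerate(["upright", "lean_forward", "lean_forward", "lean_forward", "upright"]):
    fired = fc.update(t, p)
    if fired:
        print(t, fired)      # → 3 ['visual', 'haptic']
print(round(fc.summary(), 2))  # → 0.4
```

The hold time is a design choice that trades responsiveness against annoyance; in practice it would be tuned with users.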

5.3. Energy Consumption

Energy consumption is an important aspect to consider when evaluating the overall feasibility and sustainability of posture recognition systems. Many factors contribute to energy consumption, including the type and number of sensors, the data acquisition system, and the computational complexity of the algorithm used. The type of sensors used in smart sensing chairs can significantly affect energy consumption. For instance, pressure sensors and textile pressure sensors may have different power requirements than more complex sensors such as image sensors or inertial measurement units (IMUs). IMUs can be power-intensive in wearable devices, which therefore require efficient power management strategies. Flex sensors typically consume very little power, making them suitable for battery-operated devices or systems where power efficiency is important, such as wearable posture monitors. Passive RFID tags consume virtually no power, as they rely on the reader for energy, whereas active RFID tags, which have a battery, typically consume between 10 and 100 mW. RGB cameras and depth cameras (such as the Kinect) are the most power-intensive. RGB cameras consume from 200 to 500 mW for low-resolution models up to several watts (W) for high-definition or high-frame-rate models, while depth cameras such as the Kinect typically consume between 2 and 10 W [129], depending on the resolution and frame rate. Choosing low-power sensors can help minimize energy usage while maintaining functionality. In addition, these systems are designed for real-time posture monitoring, which may require continuous operation of sensors and data processing units and therefore leads to higher energy consumption than in systems that operate intermittently. Implementing energy-efficient algorithms and putting sensors into sleep mode when not in use could help mitigate this issue.
Similarly, a sparse sensing configuration [37,48] consumes less energy than a sensor array, which typically contains a large number of sensors that require more energy to scan [64,65,74,83].
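The power figures quoted above translate into very different energy budgets over a working day. The sketch below converts the quoted ranges into watt-hours for an assumed 8-hour workday and compares the acquisition energy of a sensor array with that of a sparse configuration. The 32 × 32 array, the 8 discrete sensors, the 10 frames per second, and the fixed 1 µJ cost per read are all hypothetical values chosen for illustration. Under that simplified model, the acquisition energy scales linearly with the number of elements read per frame.

```python
# Power ranges quoted in the text, in milliwatts (min, max).
POWER_MW = {
    "active RFID tag": (10, 100),
    "low-resolution RGB camera": (200, 500),
    "depth camera (Kinect)": (2_000, 10_000),
}


def energy_wh(power_mw: float, hours: float) -> float:
    """Energy in watt-hours for a constant draw of power_mw over the given hours."""
    return power_mw / 1000.0 * hours


def scan_energy_j(n_elements: int, frames: int, energy_per_read_uj: float) -> float:
    """Acquisition energy in joules if every element is read once per frame.

    Assumes a fixed energy cost per read (hypothetical), which ignores
    multiplexer settling, idle current, and radio transmission.
    """
    return n_elements * frames * energy_per_read_uj * 1e-6


WORKDAY_H = 8
for name, (lo, hi) in POWER_MW.items():
    print(f"{name}: {energy_wh(lo, WORKDAY_H):.2f}-{energy_wh(hi, WORKDAY_H):.2f} Wh per workday")

frames = 10 * 3600 * WORKDAY_H               # 10 frames per second for a workday
array = scan_energy_j(32 * 32, frames, 1.0)  # hypothetical 32 x 32 pressure mat
sparse = scan_energy_j(8, frames, 1.0)       # hypothetical 8 discrete sensors
print(f"array/sparse acquisition energy ratio: {array / sparse:.0f}")
```

For example, a depth camera drawing 2 to 10 W for 8 hours uses 16 to 80 Wh, whereas a 10 to 100 mW active RFID tag uses 0.08 to 0.8 Wh. With these assumptions, the 1024-element array needs 128 times the acquisition energy of the 8-sensor layout.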

The choice of hardware also contributes to energy consumption, as most systems use microcontrollers or single-board computers to acquire data from the sensors and to detect and identify different sitting positions. Popular choices for data collection include the Arduino Mega [60,64,71,81,93,94], STM32 [59,75,90], Atmel ATmega328 [61,62,83], ESP32 [74], and Odroid N2+ [95]. These general-purpose platforms typically consume more energy because they are not customized and therefore contain peripherals that are never used but still draw power. Customized hardware may therefore help reduce energy consumption [61].

The energy required for data processing and analysis is another consideration [62]. Processing the data locally on the chair may consume more energy than sending it to a cloud server for processing. Balancing local processing with cloud computing can optimize energy use while still ensuring timely feedback for users. For smart sensing chairs that are portable or battery-powered, energy consumption directly affects battery life. Efficient energy management strategies are essential to ensure that the chairs can operate for extended periods without frequent recharging, which is crucial for user convenience and sustainability. This can be achieved through duty cycle management [28] and by introducing a sleep mode [62]. The design of the user feedback system can also influence energy consumption. For example, visual or auditory feedback may require additional power, while haptic feedback could be more energy efficient. The choice of feedback mechanism should therefore consider both user experience and energy efficiency.
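The effect of duty cycling and sleep modes on battery life can be estimated with a simple time-weighted average: if a node is active for a fraction D of the time, its mean current is I_avg = D · I_active + (1 − D) · I_sleep, and the idealized runtime is the battery capacity divided by I_avg. The sketch below implements this estimate; the 2000 mAh battery, 80 mA active current, and 0.05 mA sleep current are hypothetical values, not figures from the cited works, and real runtimes will be shorter because self-discharge and regulator losses are ignored.

```python
def average_current_ma(duty: float, active_ma: float, sleep_ma: float) -> float:
    """Time-weighted mean current of a node that is active for a fraction `duty` of the time."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")
    return duty * active_ma + (1.0 - duty) * sleep_ma


def battery_life_h(capacity_mah: float, duty: float, active_ma: float, sleep_ma: float) -> float:
    """Idealized runtime in hours; ignores self-discharge, regulator losses, and ageing."""
    return capacity_mah / average_current_ma(duty, active_ma, sleep_ma)


# Hypothetical numbers: 2000 mAh pack, 80 mA when sampling/transmitting, 0.05 mA asleep.
for duty in (1.0, 0.2, 0.05):
    print(f"duty {duty:.0%}: {battery_life_h(2000, duty, 80, 0.05):.0f} h")
```

With these assumptions, the runtime grows from 25 h with the node always active to roughly 494 h at a 5% duty cycle, which is why sampling less often and sleeping in between is an effective lever when posture changes slowly.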

Machine learning algorithms are executed either on resource-constrained computing devices such as the Raspberry Pi 4 [65,88,96] or on laptops to which the data is streamed [80,91,97]. The power consumption of sitting posture recognition algorithms varies widely depending on their complexity and the computational resources they require. Simple algorithms such as threshold-based methods and decision trees are highly energy-efficient, making them suitable for low-power devices such as wearables and battery-operated systems. More complex algorithms, such as SVMs, neural networks, and HMMs, offer greater accuracy and flexibility but require significantly more power, making them better suited for applications where power is not a limiting factor or where high computational resources are available. Although significant advances have been made in optimizing ML algorithms, accurately estimating their energy consumption remains an open problem [144].
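To illustrate why threshold-based methods suit low-power devices, the following sketch classifies posture from four seat pressure readings using only a few additions, two divisions, and at most three comparisons per sample, with no training step and constant memory. The sensor layout (front-left, front-right, rear-left, rear-right), the posture labels, and the 0.2 thresholds are hypothetical and would need calibration for a real chair and population; this is not the rule set of any cited system.

```python
def classify_posture(fl, fr, rl, rr, lateral_thr=0.2, front_thr=0.2):
    """Rule-based posture label from four seat pressure readings.

    fl, fr, rl, rr: front-left, front-right, rear-left, rear-right readings
    in any consistent unit. Thresholds are hypothetical placeholders.
    """
    total = fl + fr + rl + rr
    if total <= 0:
        return "empty"
    lateral = ((fr + rr) - (fl + rl)) / total     # > 0: weight shifted right
    front_back = ((fl + fr) - (rl + rr)) / total  # > 0: weight shifted forward
    if lateral > lateral_thr:
        return "lean_right"
    if lateral < -lateral_thr:
        return "lean_left"
    if front_back > front_thr:
        return "lean_forward"
    return "upright"


print(classify_posture(1, 3, 1, 3))  # → lean_right
```

Normalizing by the total load makes the rules less sensitive to body weight, although, as noted in Section 6, individual differences still limit how far fixed thresholds generalize.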

6. Challenges and Future Recommendations

Several challenges arise in the development and implementation of sitting posture monitoring systems based on machine learning algorithms and sensor technology. Common challenges include the following:

6.1. Sensor-Related Challenges

- Current sitting posture detection systems rely on costly high-resolution pressure sensor arrays or on invasive wearable or visual sensors, which prevents widespread deployment [87,145].

- Many sensor-based systems rely on specific sensor devices, which can hinder widespread adoption and limit flexibility in real-world environments [58].

- Properly placing inertial or pressure sensors on the body or chair to capture data relevant to posture detection can be challenging, and incorrect placement may lead to inaccurate readings and unreliable results. There are no scientifically validated guidelines for the optimal number and placement of sensors, and there is a tradeoff between area coverage and the number of sensors used. Discrete pressure sensors can be strategically placed at specific points on the seating surface, allowing customization based on the user’s anatomy and seating preferences. This flexibility enables targeted monitoring of key pressure points on the seat and backrest to provide detailed posture feedback. A pressure sensor array, by contrast, provides more comprehensive and detailed information about the pressure distribution across the seating surface owing to its high spatial resolution [74,101].

- Ensuring the monitoring system is portable, comfortable to wear, and does not restrict movement is important for user acceptance. Designing wearable devices that are discreet and practical for daily use presents a challenge.

- Textile-based sensors must balance accurate sensing with user comfort and wearability so that the textiles remain comfortable to wear for extended periods [136].

- Integrating multiple sensors, such as pressure and infrared sensor arrays, into a cohesive system can be challenging, as the sensors must work together seamlessly to capture relevant data for sitting posture recognition without interference or data loss [54,56,96].

- Combining data from different modalities, such as pressure and infrared sensors, to accurately recognize sitting postures requires sophisticated data fusion techniques, and developing and optimizing algorithms that fuse multimodal data effectively is difficult [87,96,108].

- Collecting and processing large amounts of sensor data in real time can be computationally intensive, so efficient data processing techniques are required to handle the volume of data generated by the sensors [137].

- Existing sensor-based methodologies, such as video-based and wearable sensor-based systems, raise privacy concerns due to the intrusive nature of the sensors [146].

- Although some systems achieve high accuracy in recognizing sitting postures, reported accuracies range from 80% to 99.1%, and usability remains limited [73,108,137].

- Limited sample sizes are a recurring challenge for researchers [25,90,97].
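The data fusion challenge listed above can be made concrete with the simplest form of fusion, feature-level (early) fusion: standardize each modality using statistics from the training data, then concatenate the features into one vector for a classifier. Standardizing first matters because pressure and infrared readings have different units and scales, and without it the larger-scale modality would dominate distance-based classifiers. The sketch below is a minimal illustration under the assumption that the two modalities are already time-aligned; the feature counts and values are hypothetical, and the cited works may use more sophisticated fusion schemes.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Stats = List[Tuple[float, float]]  # per-feature (mean, std)


def fit_stats(rows: Sequence[Sequence[float]]) -> Stats:
    """Per-feature mean and population standard deviation from training rows."""
    return [(mean(col), pstdev(col)) for col in zip(*rows)]


def standardize(row: Sequence[float], stats: Stats) -> List[float]:
    """z-score each feature; constant features (std == 0) map to 0."""
    return [(v - m) / s if s > 0 else 0.0 for v, (m, s) in zip(row, stats)]


def fuse(pressure_row, infrared_row, p_stats: Stats, ir_stats: Stats) -> List[float]:
    """Feature-level (early) fusion: standardize each modality, then concatenate."""
    return standardize(pressure_row, p_stats) + standardize(infrared_row, ir_stats)


# Hypothetical, time-aligned training samples: 2 pressure features, 1 infrared feature.
p_stats = fit_stats([[100, 200], [300, 400]])
ir_stats = fit_stats([[20.0], [30.0]])
print(fuse([300, 200], [30.0], p_stats, ir_stats))  # → [1.0, -1.0, 1.0]
```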

6.2. Video Camera Related Challenges

- Postures may be partially occluded by objects or other body parts [72,118].

- Real-time processing is more challenging than offline processing, especially in healthcare, sports, and military applications [60,126].

- Environmental conditions, such as lighting, distance, camera quality and angle, and outdoor weather, can affect recognition accuracy [90,116].

- Data collection and privacy are also critical considerations; concerns about the use of private data affect research in this area [87,124].

- The availability of training and testing data is another challenge. Machine learning algorithms usually require large datasets, which are time-consuming and expensive to collect [84,121,128].

- Wearable devices and cameras used for posture recognition may be difficult for users to accept [74,88].

- It is difficult to build a general model because of the wide variety of postures and clothing [116].

- Ambiguity is another challenge: some postures closely resemble each other, and models may be unable to distinguish between them [66,98].

6.3. Algorithm-Related Challenges

- Training ML models for posture identification requires a significant volume of labeled data and optimization of algorithms. Fine-tuning models to achieve high accuracy and generalizability can be a complex task.

- Ensuring the accuracy and precision of posture recognition algorithms is crucial for reliable monitoring. Variability in individual postures and movements can pose challenges in accurately classifying sitting positions.

- Optimizing the hardware prototype and deep learning model for efficient data collection, processing, and recognition of sitting postures presents technical challenges, as performance, power consumption, and cost-effectiveness must be balanced.

- Algorithms need to be optimized so that they can perform calculations efficiently within the stringent time constraints of real-time systems [137].

- There is a lack of suitable datasets [75].
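The generalizability and individual-variability challenges above also affect how accuracy should be measured. If samples are split randomly, the same participant appears in both the training and test sets, which can overstate how well a model handles new users. A common remedy is leave-one-subject-out evaluation: each participant is held out in turn, and the model is tested only on people it has never seen. The sketch below generates such splits from per-sample subject IDs; it is a generic illustration, not a claim about how the cited studies evaluated their models, and the IDs in the example are hypothetical.

```python
from collections import defaultdict


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    subject_ids[i] is the participant who produced sample i. All samples from
    the held-out participant go to the test set, so every evaluation is on a
    person absent from training.
    """
    by_subject = defaultdict(list)
    for i, s in enumerate(subject_ids):
        by_subject[s].append(i)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        yield held_out, train, test


for held_out, train, test in leave_one_subject_out(["p1", "p1", "p2", "p3", "p2"]):
    print(held_out, train, test)
```

With few participants, as is common in this field, the per-subject results also show how much accuracy varies between individuals, which a single pooled accuracy figure hides.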

6.4. Other Challenges

- Providing real-time feedback to users about their posture and encouraging corrective actions can be a challenge. Designing user-friendly interfaces and feedback mechanisms is essential for user engagement and behavior modification.

- Integrating sensor data with machine learning algorithms and feedback mechanisms in a seamless system requires coordination across different components. Ensuring compatibility and synchronization between hardware and software components is essential.

- Balancing the cost of implementing an intelligent posture-correction seat system against its effectiveness and benefits can be challenging, especially when ensuring affordability for widespread adoption.

- Achieving high accuracy in recognizing a wide range of sitting postures across different individuals and scenarios can be challenging, as the system must generalize well to various body types, postures, and environmental conditions [59].

- Encouraging user acceptance of, and compliance with, long-term monitoring of sitting postures in a workplace setting can be challenging; the system must be user-friendly and non-intrusive and must provide meaningful feedback to users.

- Addressing the diverse range of user heights, weights, and sitting preferences poses a challenge in creating a universal correction solution that caters to the needs of different individuals.

- The creation of useful sitting posture monitoring systems with pressure sensors is severely hampered by the intrinsic complexity of human behavior [58].

- Many existing solutions, such as video-based approaches, are impractical due to privacy concerns and reliance on specific sensor devices, highlighting the challenge of developing non-intrusive and privacy-preserving systems [102].

Addressing these challenges through robust system design, algorithm optimization, user-centered design principles, and continuous testing and refinement can lead to the development of effective sitting posture monitoring systems that promote better posture habits and overall well-being.

6.5. Future Directions

Numerous studies on sitting posture detection have been reported in the literature. Researchers in this area aim to improve accuracy, usability, and applicability, and suggest expanding hardware, data volume, and the number of posture classes, as well as enhancing classification algorithms, to achieve these aims. In addition, some researchers plan to work on real-time applications for healthcare, entertainment, and traffic. Using a small number of sensors and detecting multiple postures are other challenges in this area.

- Researchers plan to expand datasets to include more participants with diverse health conditions and ages. They also aim to realize complete posture detection systems based on ML algorithms, with a feedback system that warns users when bad posture is detected.

- By combining multiple sensors with neural network algorithms, sitting posture can be monitored more accurately, and ambient influences may be avoided. A multi-sensor approach offers multidimensional analysis and action recognition with enhanced range and accuracy.

- Optimizing sensor layout to improve resolution, exploring the system’s capability to recognize additional sitting postures related to health risks, and testing the system on individuals with various physical characteristics.

- Development of personalized correction solutions tailored to individual users based on their specific sitting habits, body characteristics, and ergonomic needs.

- Customizing sensor placement based on individual user characteristics, such as height and build, to ensure accurate pressure readings and symmetrical sensor positioning.

- Conducting clinical validation studies to evaluate the effectiveness of the correction seat system in preventing and reducing musculoskeletal disorders associated with prolonged sitting.

- Enhancing the user experience of the correction seat system through ergonomic design, intuitive interfaces, and customizable settings to promote user engagement and adherence.