A Class-Incremental Learning Method for Interactive Event Detection via Interaction, Contrast and Distillation

Abstract

1. Introduction

- We introduce an interactive event-detection mode: "machine recommends event triggers → manual review and correction → machine incremental learning". This mode better matches the workflow required by high-precision applications and provides a reference for subsequent research.

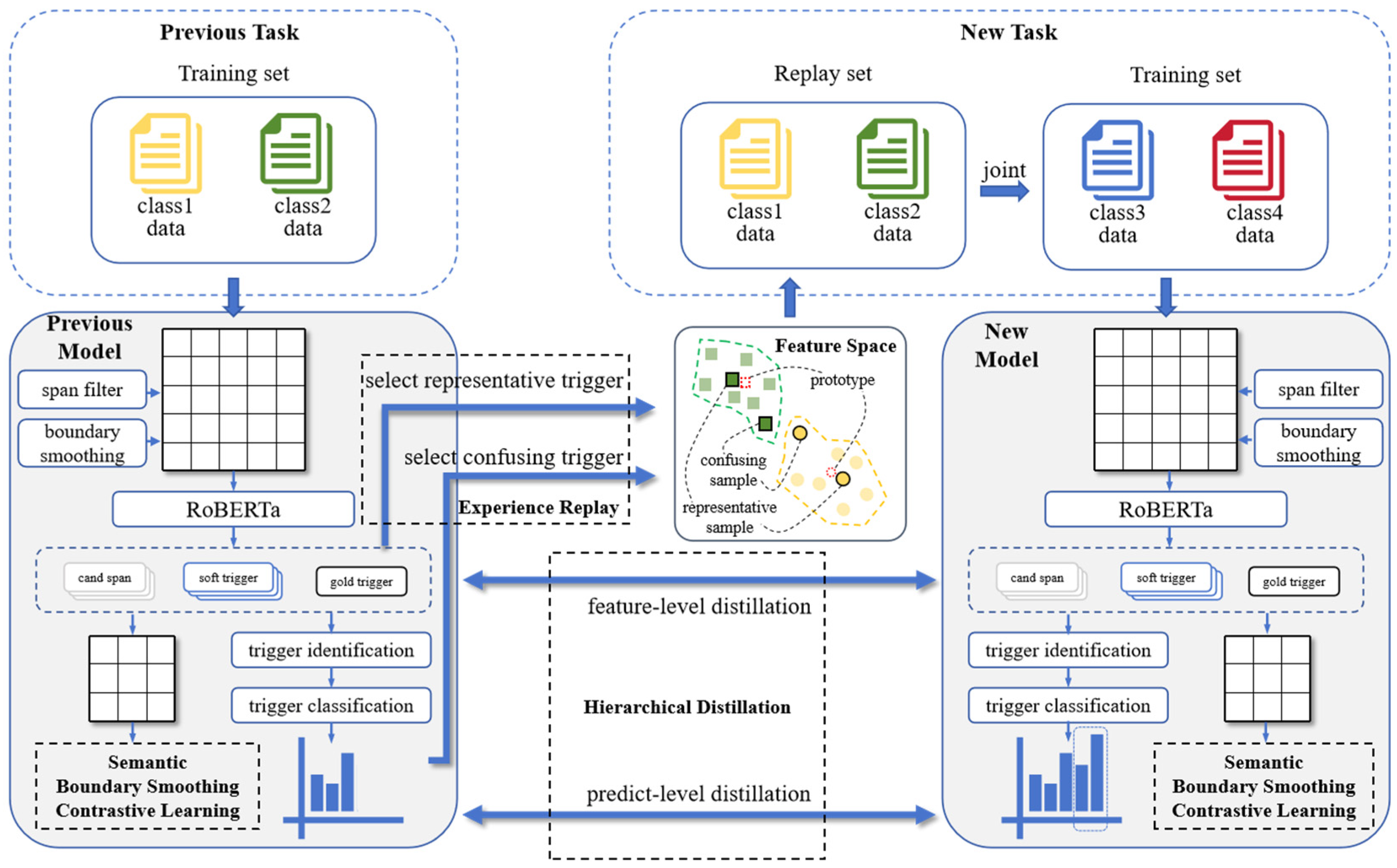

- We design the ICD method for class-incremental learning in interactive event-detection tasks. The method constructs a replay set from representative and confusable samples, uses semantic-boundary-smoothing contrastive learning to improve performance with few samples, and employs hierarchical distillation to preserve existing knowledge.

- Experimental results show that our method outperforms the baseline methods in class-incremental learning for interactive detection. Specifically, in the ACE 2-way 5-shot, ACE 2-way 10-shot, DuEE 2-way 5-shot, and DuEE 2-way 10-shot settings, our method improves the final recall over the best baseline by 6.86, 4.53, 3.90, and 3.00 percentage points, respectively.

2. Related Work

2.1. Event Detection

2.1.1. Span-Based Classification

2.1.2. Sequence Labeling

2.1.3. Pointer Networks

2.1.4. Others

2.2. Incremental Learning

2.3. Class-Incremental Learning in Event Detection

3. Problem Definition

4. Method

4.1. Event-Trigger Recommendation Backbone

| Algorithm 1 | Candidate recommendation algorithm considering multiple events |
|---|---|
| Input | Candidate trigger set; candidate trigger confidence tuple set |
| Output | Candidate triggers and event types for all potential events |
| 1. | Initialize the model output as empty |
| 2. | Arrange the candidate triggers in descending order of trigger confidence |
| 3. | Initialize the set of candidate triggers representing potential events (the representative set) as empty |
| 4. | for each candidate trigger in the sorted candidate trigger set do |
| 5. | if the candidate trigger does not overlap with any trigger in the representative set then |
| 6. | add the candidate trigger to the representative set |
| 7. | end if |
| 8. | end for |
| 9. | for each representative trigger in the representative set do |
| 10. | Initialize the candidate triggers and confidence tuples for this potential event as empty |
| 11. | for each tuple in the candidate trigger confidence tuple set do |
| 12. | if the tuple's candidate trigger overlaps with the representative trigger then |
| 13. | add the tuple to the tuples of this potential event |
| 14. | end if |
| 15. | end for |
| 16. | For this potential event, find the K largest joint confidences and output their tuples, of the form (candidate trigger, event type, joint confidence), as recommendations |
| 17. | end for |
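
The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: candidate triggers are encoded as `(start, end)` token spans with an exclusive end, trigger confidences are passed as a dictionary keyed by span, and two triggers count as overlapping when their spans share at least one token. The names `Span`, `ScoredTuple`, `overlaps`, and `recommend`, and the sample data, are hypothetical.

```python
from typing import Dict, List, Tuple

Span = Tuple[int, int]                 # assumed encoding: (start, end), end exclusive
ScoredTuple = Tuple[Span, str, float]  # (candidate trigger, event type, joint confidence)


def overlaps(a: Span, b: Span) -> bool:
    """Assumed overlap test: the two spans share at least one token."""
    return a[0] < b[1] and b[0] < a[1]


def recommend(trigger_conf: Dict[Span, float],
              tuples: List[ScoredTuple],
              k: int) -> List[List[ScoredTuple]]:
    # Steps 2-8: visit triggers by descending trigger confidence and keep
    # one non-overlapping representative per potential event.
    ordered = sorted(trigger_conf, key=lambda s: trigger_conf[s], reverse=True)
    representatives: List[Span] = []
    for span in ordered:
        if not any(overlaps(span, rep) for rep in representatives):
            representatives.append(span)

    # Steps 9-17: for each potential event, gather the tuples whose trigger
    # overlaps the representative and keep the K highest joint confidences.
    output: List[List[ScoredTuple]] = []
    for rep in representatives:
        event_tuples = [t for t in tuples if overlaps(t[0], rep)]
        event_tuples.sort(key=lambda t: t[2], reverse=True)
        output.append(event_tuples[:k])
    return output


# Hypothetical input: two potential events, one with competing candidates.
sample_conf = {(0, 2): 0.9, (1, 3): 0.6, (5, 6): 0.8}
sample_tuples = [
    ((0, 2), "Attack", 0.81),
    ((1, 3), "Attack", 0.50),
    ((0, 2), "Die", 0.30),
    ((5, 6), "Meet", 0.70),
]
recommendations = recommend(sample_conf, sample_tuples, k=2)
```

In this sample the span `(1, 3)` overlaps the higher-confidence span `(0, 2)`, so it does not start a potential event of its own but still competes as a candidate within that event.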

4.2. Experience Replay

4.2.1. Representative Sample

4.2.2. Confusable Sample

4.3. Semantic-Boundary-Smoothing Contrastive Learning

4.4. Hierarchical Distillation

4.4.1. Feature-Level Distillation

4.4.2. Predict-Level Distillation

4.5. Model Training

5. Experiments

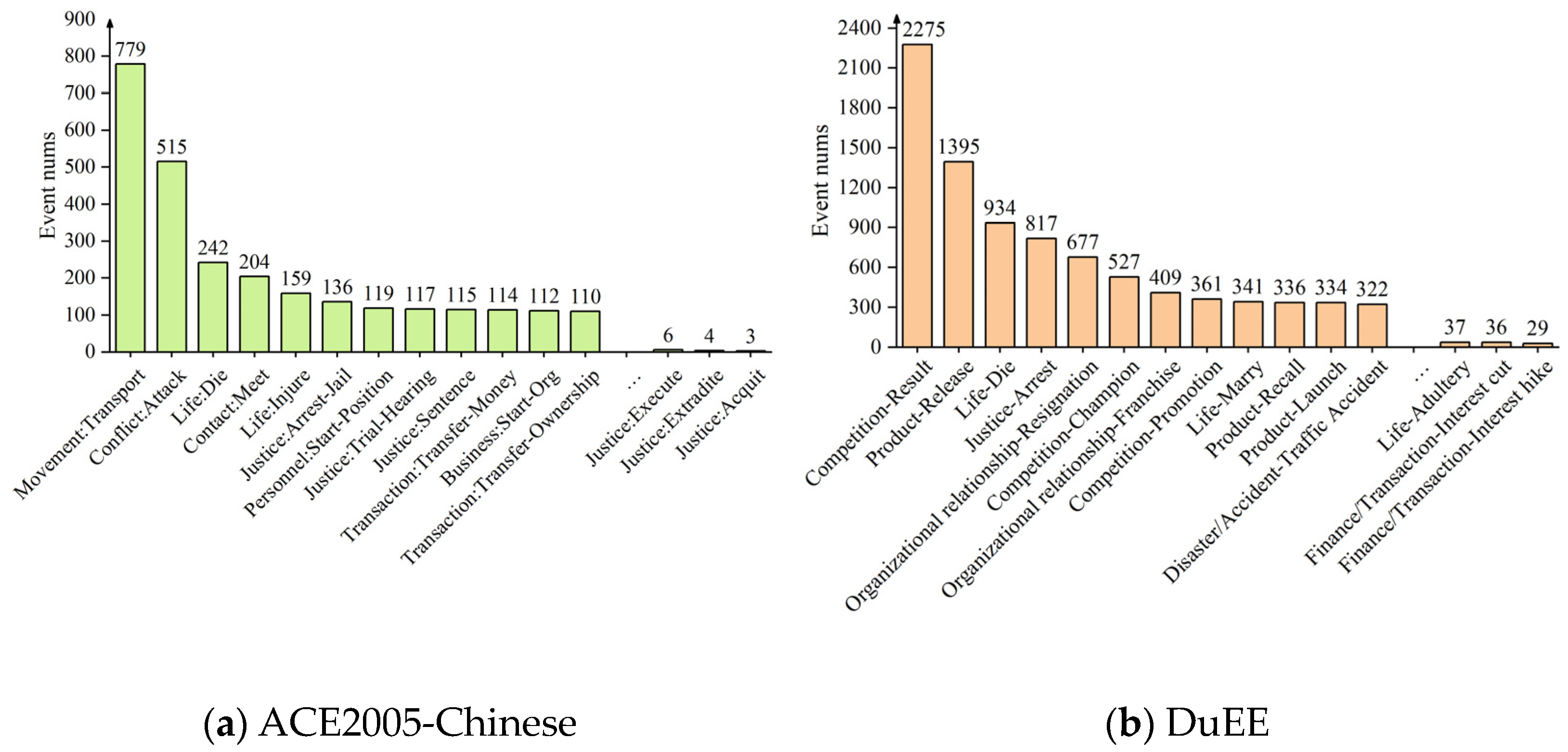

5.1. Benchmarks and Evaluation Metrics

5.2. Implementation Details

5.3. Baseline Methods

5.4. Main Results

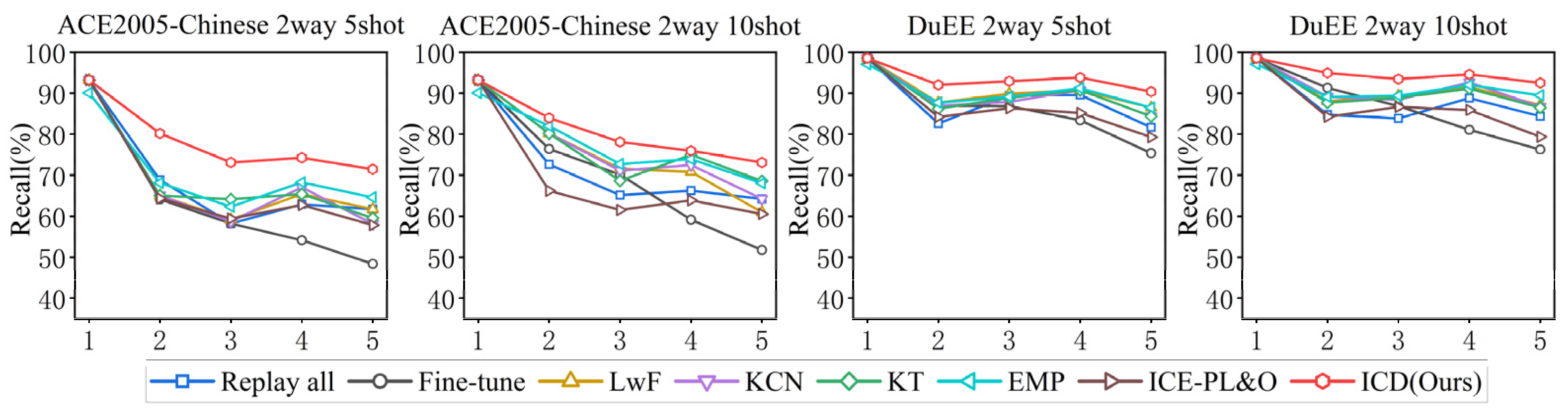

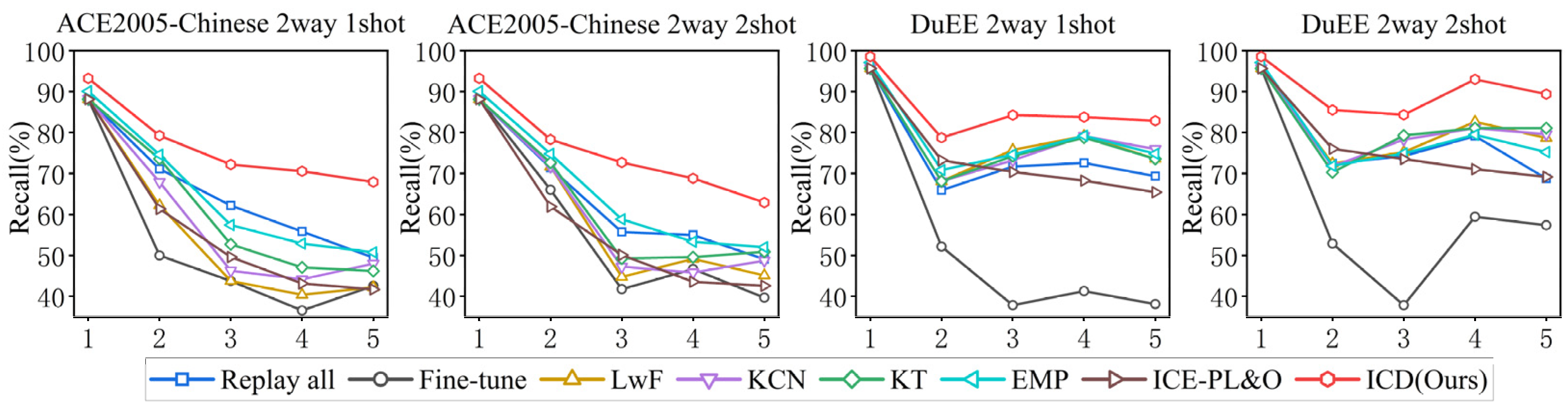

- Compared with previous incremental-learning baselines, our approach achieves higher recall on every incremental sub-task (sub-tasks 2 to 5). Specifically, in the ACE 5-shot, ACE 10-shot, DuEE 5-shot, and DuEE 10-shot settings, our method improves the final recall by 6.86, 4.53, 3.90, and 3.00 percentage points, respectively, over the best baseline. This demonstrates the effectiveness of our method in handling continuously arriving data.

- Although the Joint method retrains on all available data, it is not always the strongest baseline. In our setup, the initial task has a sufficient number of samples, while the training samples for subsequent new tasks are limited, and this imbalance hurts the Joint method. In contrast, our approach improves upon Joint by 6.86, 5.69, 6.31, and 4.50 percentage points in the ACE 5-shot, ACE 10-shot, DuEE 5-shot, and DuEE 10-shot settings, respectively. This indicates that our method mitigates the negative impact of sample imbalance under few-shot incremental learning.

- The final recall in the 10-shot setting is generally higher than in the 5-shot setting, which suggests that increasing the number of samples is an effective way to improve performance and that generating more samples may help address the challenges of few-shot learning. Additionally, our performance on DuEE is noticeably better than on ACE. We believe this is because ACE involves greater variability in how the same event type is expressed, which makes it more challenging, whereas DuEE's triggers follow a more consistent style and are relatively simpler.
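
The margins discussed above can be recomputed from the final sub-task recall values reported in the results tables. The short Python check below is only a worked-arithmetic sketch; the dictionary layout and the helper names `margin` and `best_baseline` are illustrative, and margins are expressed in percentage points.

```python
# Final sub-task (task 5) recall, copied from the ACE2005 and DuEE results tables.
final_recall = {
    "ACE 5-shot": {"Joint": 64.62, "Replay all": 61.73, "Fine-tune": 48.38,
                   "LwF": 61.73, "KCN": 58.12, "KT": 59.57, "EMP": 64.62,
                   "ICE-PL&O": 57.83, "ICD": 71.48},
    "ACE 10-shot": {"Joint": 67.43, "Replay all": 64.26, "Fine-tune": 51.79,
                    "LwF": 61.01, "KCN": 64.26, "KT": 68.59, "EMP": 68.08,
                    "ICE-PL&O": 60.56, "ICD": 73.12},
    "DuEE 5-shot": {"Joint": 84.08, "Replay all": 81.68, "Fine-tune": 75.38,
                    "LwF": 86.49, "KCN": 84.38, "KT": 84.38, "EMP": 86.49,
                    "ICE-PL&O": 79.28, "ICD": 90.39},
    "DuEE 10-shot": {"Joint": 87.99, "Replay all": 84.38, "Fine-tune": 76.28,
                     "LwF": 87.09, "KCN": 86.67, "KT": 86.49, "EMP": 89.49,
                     "ICE-PL&O": 79.37, "ICD": 92.49},
}


def best_baseline(setting: str) -> str:
    """Name of the highest-recall method other than ICD (first one on ties)."""
    scores = {m: r for m, r in final_recall[setting].items() if m != "ICD"}
    return max(scores, key=lambda m: scores[m])


def margin(setting: str, reference: str) -> float:
    """ICD recall minus the reference method's recall, in percentage points."""
    scores = final_recall[setting]
    return round(scores["ICD"] - scores[reference], 2)


for setting in final_recall:
    print(setting,
          "| vs best baseline:", margin(setting, best_baseline(setting)),
          "| vs Joint:", margin(setting, "Joint"))
```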

5.5. Ablation Study

- Effectiveness of Replay: On DuEE, replaying old-class data increases the final recall by 8.41 and 5.70 percentage points in the 5-shot and 10-shot settings, respectively, which shows that storing old data matters for incremental learning. Compared with random replay, selecting representative and confusable samples improves the recall of the final sub-task by 4.18 and 2.55 percentage points, respectively. Ablating either component lowers the final recall, and ablating both lowers it further. Each component therefore contributes, and constructing the replay set from both perspectives yields additional benefit.

- Effectiveness of Contrastive Learning: Compared with removing contrastive learning, our method improves the final recall by 5.68 and 4.10 percentage points in the 5-shot and 10-shot settings, respectively. This suggests that, in few-shot incremental learning, contrastive learning over spans around gold triggers gives the model additional information and thereby improves performance.

- Effectiveness of Hierarchical Distillation: Compared with removing hierarchical distillation, our method increases the final recall by 1.71 and 2.06 percentage points in the 5-shot and 10-shot settings, respectively. This relatively modest improvement indicates that the distillation loss helps retain original knowledge, but its impact is limited.
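
To compare the components side by side, the recall drop caused by each ablation can be computed from the final sub-task column of the DuEE ablation table. The sketch below is illustrative arithmetic only; the helper name `recall_drop` and the dictionary layout are assumptions.

```python
# Final sub-task recall on DuEE, copied from the ablation table.
ablation = {
    "5-shot": {"ICD": 90.39, "-replay": 81.98, "-replay (representative)": 87.24,
               "-replay (confusable)": 88.26, "-replay (repr&conf)": 86.21,
               "-contrast": 84.71, "-distill": 88.68},
    "10-shot": {"ICD": 92.49, "-replay": 86.79, "-replay (representative)": 91.13,
                "-replay (confusable)": 91.59, "-replay (repr&conf)": 89.94,
                "-contrast": 88.39, "-distill": 90.43},
}


def recall_drop(setting: str) -> dict:
    """Drop in final recall (percentage points) per ablation, largest first."""
    full = ablation[setting]["ICD"]
    drops = {name: round(full - recall, 2)
             for name, recall in ablation[setting].items() if name != "ICD"}
    return dict(sorted(drops.items(), key=lambda item: item[1], reverse=True))


for setting in ablation:
    print(setting, recall_drop(setting))
```

In both settings, removing replay entirely costs the most recall and removing contrastive learning the second most, which matches the ordering discussed in the bullets above.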

5.6. In-Depth Analysis

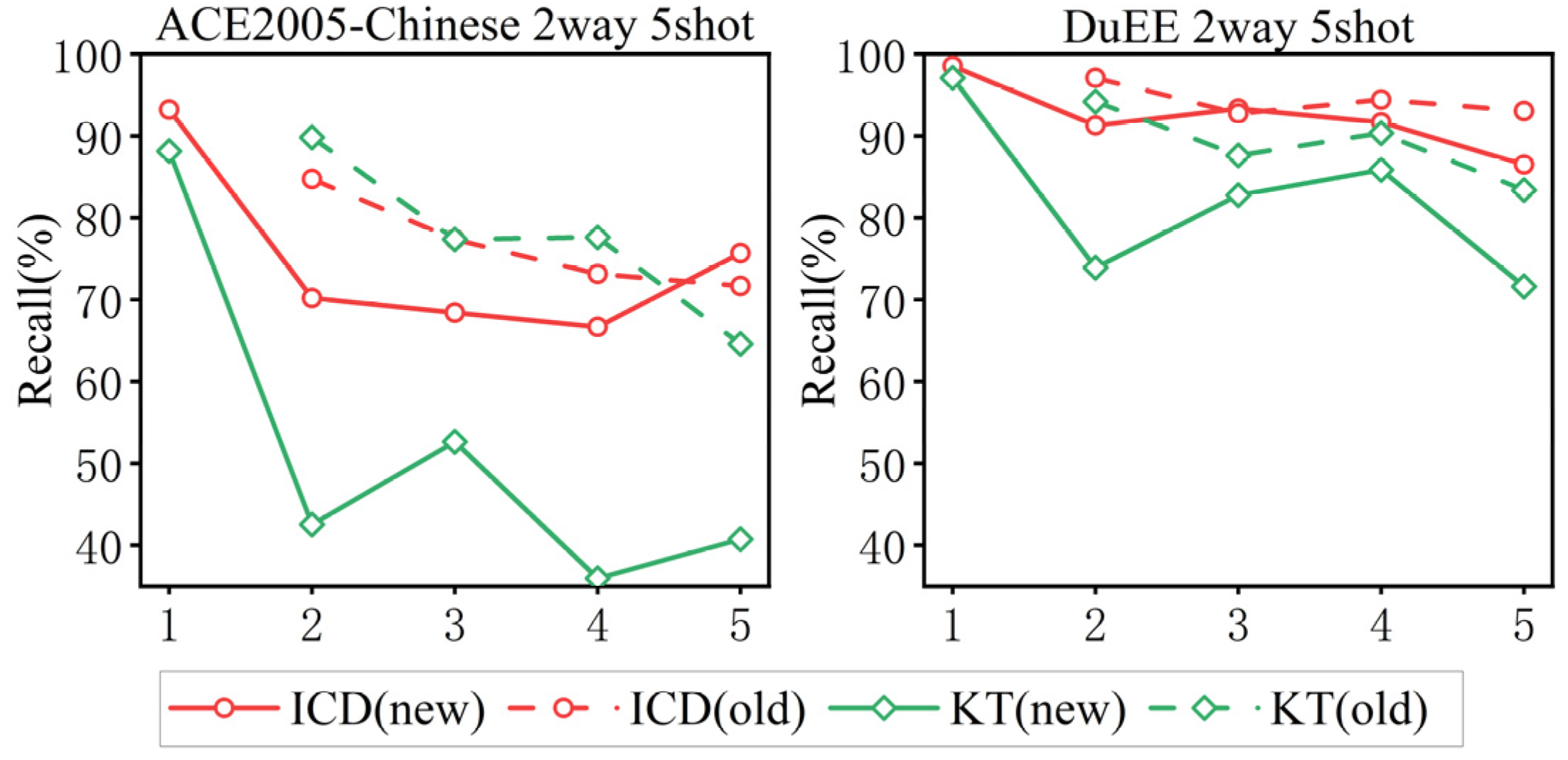

5.6.1. Analysis of Old- and New-Class Performance

5.6.2. Analysis of Positive/Negative Threshold for Contrast

5.6.3. Evaluation in Extreme Scenarios

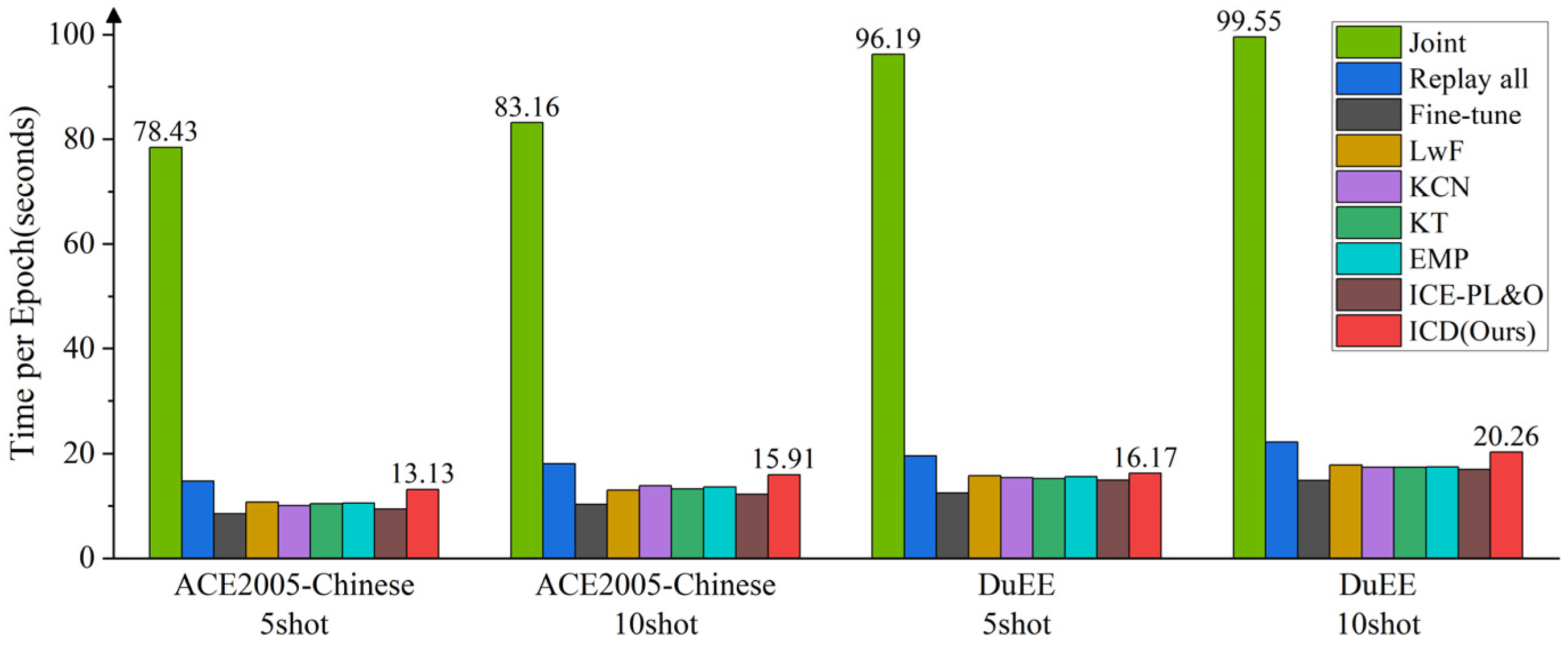

5.6.4. Analysis of Computational Complexity

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Datasets | Task | Training Set | Validation Set | Test Set |
|---|---|---|---|---|
| ACE2005 | Initial task | 100 | 30 | 30 |
| ACE2005 | Subsequent tasks | 5/10 | 30 | 30 |
| DuEE | Initial task | 70 | 20 | 20 |
| DuEE | Subsequent tasks | 5/10 | 20 | 20 |

| Item | Configuration |
|---|---|
| Operating system | Ubuntu 20.04.3 LTS x86_64 |
| CPU | 40 vCPU Intel(R) Xeon(R) Platinum 8457C |
| GPU | L20 (48 GB) × 2 |
| Memory | 200 GB |
| Python | 3.8.10 |
| Framework | PyTorch 1.10.0 + Transformers 4.38.2 + CUDA 12.4 |

| Parameter | Description | Value (ACE2005) | Value (DuEE) |
|---|---|---|---|
| Main parameters | | | |
| | trigger-identification threshold | 0.2 | 0.2 |
| | positive/negative threshold for contrast | 0.2 | 0.2 |
| | focusing parameter of modified focal loss | 2 | 2 |
| | limitation of span length | 5 | 5 |
| Optimizer parameters | | | |
| Learning_rate | initial learning rate | $1 \times 10^{-5}$ | $1 \times 10^{-5}$ |
| warm_up_steps | warm-up steps | 500 | 500 |
| lr_decay_steps | decay steps | 3000 | 3000 |
| min_lr_rate | minimum learning rate | $1 \times 10^{-6}$ | $1 \times 10^{-6}$ |

| Methods (ACE2005-Chinese) | 5-shot, task 1 | 5-shot, task 2 | 5-shot, task 3 | 5-shot, task 4 | 5-shot, task 5 | 10-shot, task 1 | 10-shot, task 2 | 10-shot, task 3 | 10-shot, task 4 | 10-shot, task 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| Joint | / | / | / | / | 64.62 | / | / | / | / | 67.43 |
| Replay all | 93.22 | 68.87 | 58.21 | 62.92 | 61.73 | 93.22 | 72.64 | 65.17 | 66.25 | 64.26 |
| Fine-tune | 93.22 | 64.15 | 58.21 | 54.17 | 48.38 | 93.22 | 76.42 | 70.15 | 59.17 | 51.79 |
| LwF | 93.22 | 65.09 | 59.20 | 65.42 | 61.73 | 93.22 | 80.19 | 71.64 | 70.83 | 61.01 |
| KCN | 93.22 | 65.09 | 58.71 | 67.08 | 58.12 | 93.22 | 80.19 | 71.14 | 72.50 | 64.26 |
| KT | 93.22 | 65.09 | 64.18 | 65.42 | 59.57 | 93.22 | 80.19 | 68.66 | 75.00 | 68.59 |
| EMP | 90.08 | 68.12 | 62.34 | 68.27 | 64.62 | 90.08 | 82.01 | 72.75 | 73.94 | 68.08 |
| ICE-PL&O | 93.22 | 64.38 | 59.41 | 62.76 | 57.83 | 93.22 | 66.23 | 61.54 | 63.90 | 60.56 |
| ICD (Ours) | 93.22 | 80.19 | 73.13 | 74.28 | 71.48 | 93.22 | 83.96 | 78.12 | 75.97 | 73.12 |

| Methods (DuEE) | 5-shot, task 1 | 5-shot, task 2 | 5-shot, task 3 | 5-shot, task 4 | 5-shot, task 5 | 10-shot, task 1 | 10-shot, task 2 | 10-shot, task 3 | 10-shot, task 4 | 10-shot, task 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| Joint | / | / | / | / | 84.08 | / | / | / | / | 87.99 |
| Replay all | 98.55 | 82.61 | 89.39 | 89.58 | 81.68 | 98.55 | 84.78 | 83.84 | 88.80 | 84.38 |
| Fine-tune | 98.55 | 86.96 | 86.87 | 83.40 | 75.38 | 98.55 | 91.30 | 86.87 | 81.08 | 76.28 |
| LwF | 98.55 | 87.68 | 89.90 | 90.73 | 86.49 | 98.55 | 88.04 | 89.39 | 91.51 | 87.09 |
| KCN | 98.55 | 86.96 | 87.88 | 90.73 | 84.38 | 98.55 | 89.13 | 88.38 | 92.66 | 86.67 |
| KT | 98.55 | 86.23 | 88.89 | 90.73 | 84.38 | 98.55 | 87.68 | 88.89 | 91.12 | 86.49 |
| EMP | 97.10 | 87.68 | 89.13 | 91.22 | 86.49 | 97.10 | 89.13 | 89.39 | 92.00 | 89.49 |
| ICE-PL&O | 98.55 | 84.22 | 86.34 | 85.19 | 79.28 | 98.55 | 84.26 | 86.66 | 85.90 | 79.37 |
| ICD (Ours) | 98.55 | 92.03 | 92.93 | 93.82 | 90.39 | 98.55 | 94.93 | 93.43 | 94.60 | 92.49 |

| Ablation (DuEE) | 5-shot, task 1 | 5-shot, task 2 | 5-shot, task 3 | 5-shot, task 4 | 5-shot, task 5 | 10-shot, task 1 | 10-shot, task 2 | 10-shot, task 3 | 10-shot, task 4 | 10-shot, task 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| ICD (Ours) | 98.55 | 92.03 | 92.93 | 93.82 | 90.39 | 98.55 | 94.93 | 93.43 | 94.60 | 92.49 |
| -replay | 98.55 | 90.66 | 88.89 | 84.17 | 81.98 | 98.55 | 89.20 | 89.93 | 91.66 | 86.79 |
| -replay (representative) | 98.55 | 92.03 | 92.07 | 92.81 | 87.24 | 98.55 | 94.39 | 91.16 | 92.99 | 91.13 |
| -replay (confusable) | 98.55 | 91.78 | 90.53 | 93.54 | 88.26 | 98.55 | 94.93 | 93.94 | 93.82 | 91.59 |
| -replay (repr&conf) | 98.55 | 92.16 | 89.53 | 92.54 | 86.21 | 98.55 | 94.93 | 90.47 | 91.74 | 89.94 |
| -contrast | 95.65 | 90.66 | 91.79 | 92.17 | 84.71 | 95.65 | 92.95 | 92.33 | 92.08 | 88.39 |
| -distill | 98.55 | 91.20 | 92.76 | 93.12 | 88.68 | 98.55 | 94.93 | 92.42 | 93.05 | 90.43 |

| Threshold | DuEE 5-Shot | DuEE 10-Shot | ACE 5-Shot | ACE 10-Shot |
|---|---|---|---|---|
| 0.1 | 89.69 | 91.89 | 68.59 | 71.11 |
| 0.2 | 90.39 | 92.49 | 71.48 | 73.12 |
| 0.3 | 89.69 | 91.29 | 66.07 | 71.11 |
| 0.4 | 87.69 | 86.49 | 63.90 | 71.11 |
| 0.5 | 85.59 | 87.69 | 62.46 | 67.37 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Duan, J.; Zhang, X. A Class-Incremental Learning Method for Interactive Event Detection via Interaction, Contrast and Distillation. Appl. Sci. 2024, 14, 8788. https://doi.org/10.3390/app14198788
