A Reliable Aggregation Method Based on Threshold Additive Secret Sharing in Federated Learning with Quality of Service (QoS) Support

Abstract

1. Introduction

- We construct a threshold additive secret sharing scheme and propose a reliable distributed federating learning system. The model can be aggregated even when some servers are offline or not functioning.

- We apply the QoS concept to federated learning to provide models of differing accuracy levels to users based on their QoS level.

- We implement the proposed federated learning system and evaluate it using two real datasets. We also analyze the probability of system failure and the successful attack rate in our t-out-of-n secret sharing scheme.

2. Related Work

2.1. Federated Learning

2.2. Privacy-Preserving Federated Learning

3. Preliminaries

3.1. 2-Out-of-2 Additive Secret Sharing

- On input secret S,

- select uniformly at random.

- set .

- output .

- On input ,

- Output .

3.2. Build 2-Out-of-n Additive Secret Sharing from 2-Out-of-2 Additive Secret Sharing

- On input secret S,

- For to

- -

- Execute .

- For to

- -

- Set , where binary representation of j is . Here we use q to represent , so , and j is

- output .

- On input ,

- Convert i and j to their binary representations, and .

- Find a digit position k where , the corresponding or the other way around, .

- Execute and output S, where .

4. Federated Learning with Threshold Secure Aggregation

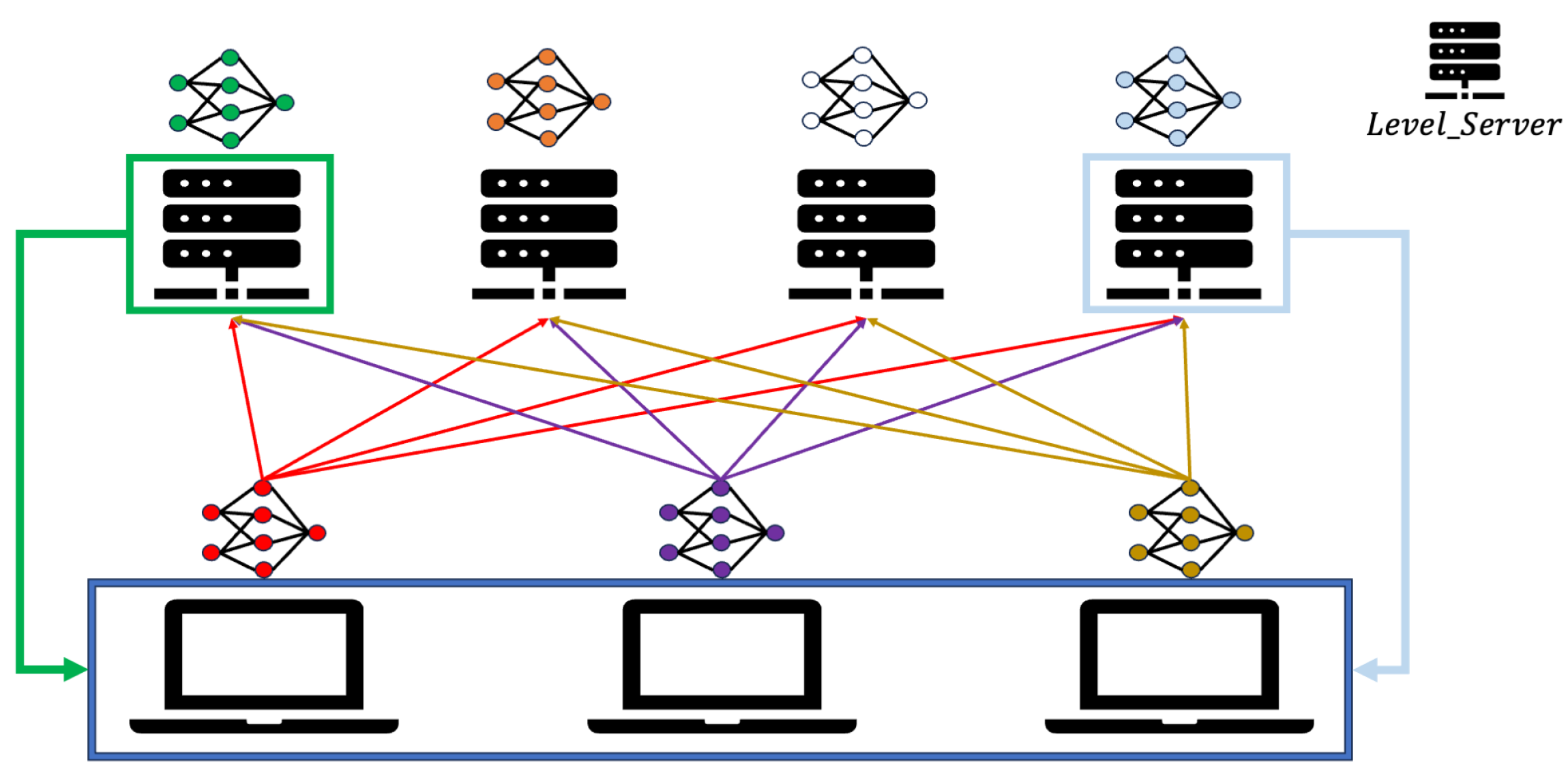

4.1. Overview

4.2. Threshold Additive Secret Sharing

4.2.1. n-Out-of-n Additive Secret Sharing

- On input secret S,

- For to

- -

- select uniformly at random.

- Set .

- Output .

- :

- On input ,

- Output .

4.2.2. Build t-out-of-n Additive Secret Sharing from t-out-of-t Additive Secret Sharing

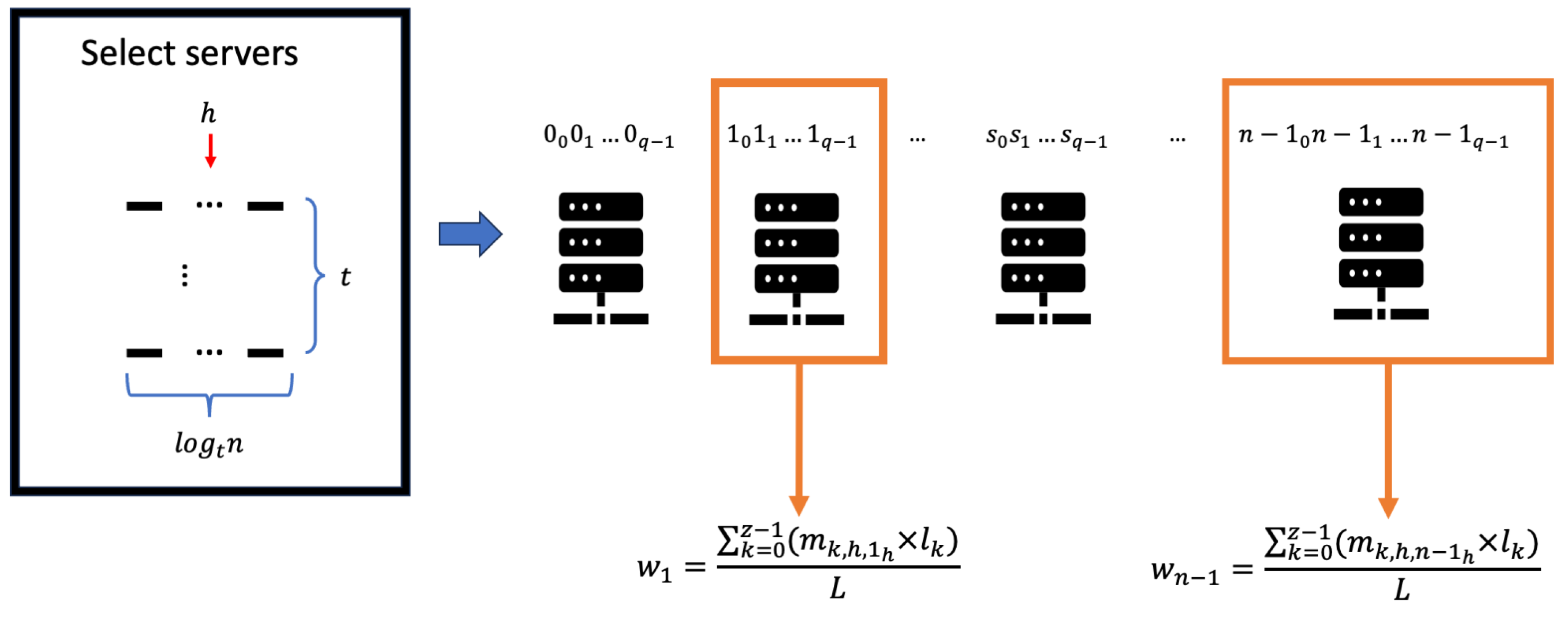

- On input secret S,

- For to q

- -

- Execute .

- For to

- -

- Set , where t-base representation of j is .

- Output .

- On input t Gs selected from ,

- Convert the index i of the selected t Gs into t-base representation. For example, if is selected, then and .

- Find a digit position k where the k-th digit of the t-base representation of the indices of the t selected Gs is different for each index, and use k to find corresponding . For example, if is selected, then , is the share we need. Finally, we obtain t shares from t selected Gs, and they can conveniently form .

- Execute and output S, where .

4.3. Proposed Approach

| Algorithm 1 Splitting gradients after local training |

| Input: all servers’ number length q, is the gradients obtained after training for , t is the number of servers that the clients choose to eventually aggregate. for to do for to do a random integer in the range F end for end for |

| Algorithm 2 Sending shares and data volume to servers |

| Input: is the q group of gradient shares of , is the identifier of , is the data volume of . for to do sends to end for sends to |

| Algorithm 3 Select servers |

| Input: number of servers n, all servers’ number length q, is the set of the number of each server, t is the number of servers that the clients choose to eventually aggregate. Output: , h while len(num) do random for to t do Generate a random q-digit number composed of the digits 0 to Replace the h-th digit with fixnum if e not in nume in S then num end if end for end while return num, h |

| Algorithm 4 Server aggregation |

| Input: num is the identifier of the selected t server, number of clients a, is the k-th share of the j-th secret shared by , is each client’s data volume, h is the different bit of the selected server. for r in num do for to z do Server r calculates weighted average end for return end for |

| Algorithm 5 Model update |

| Input: number of clients z, t is the number of servers that the clients choose to eventually aggregate, w is the weighted average of the gradient shares computed by the servers. for r in do Broadcast to each client end for Clients sum up all the weighted averages w they have received and use new gradients to update the model. |

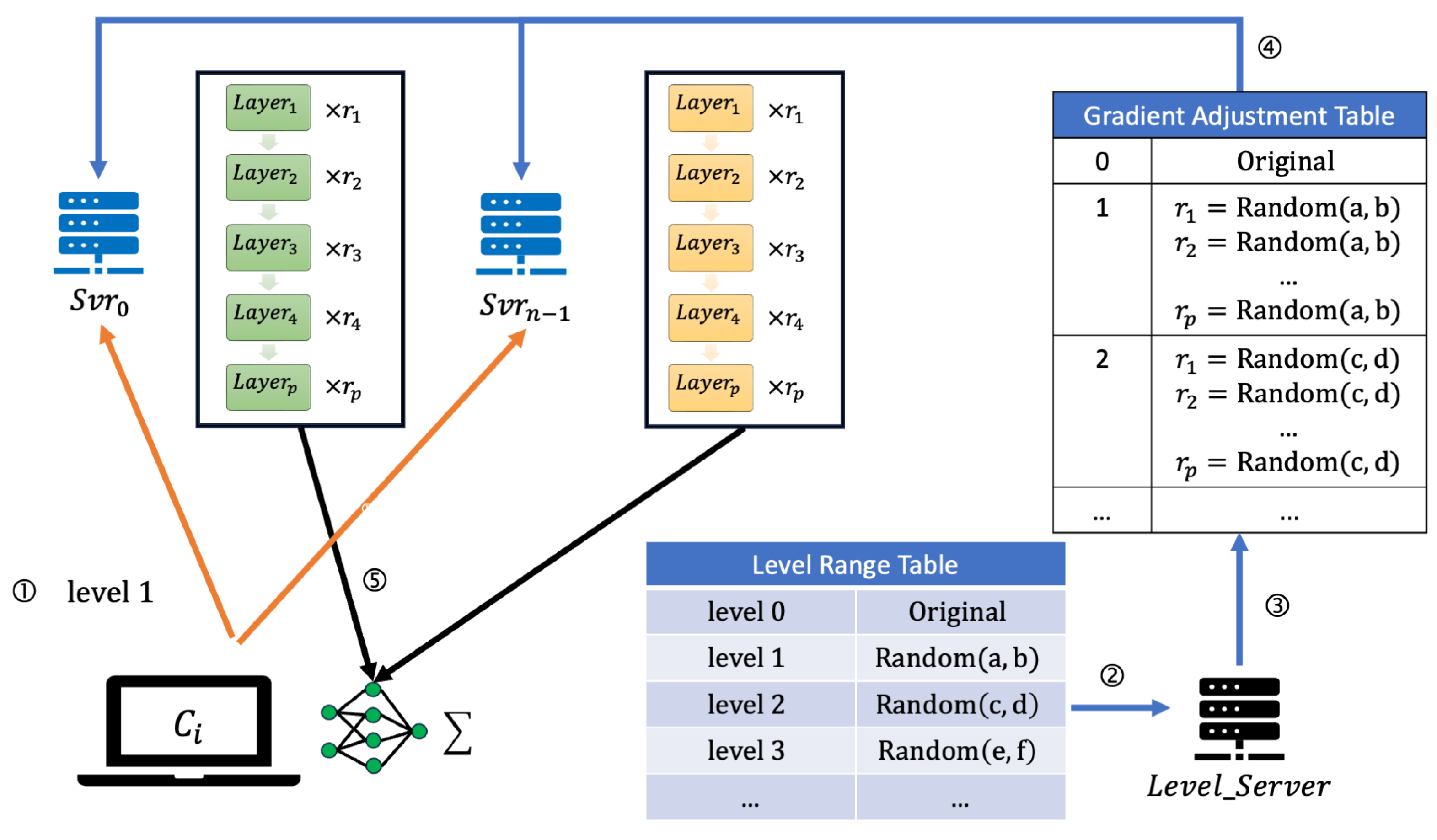

5. Quality of Service in Federated Learning

- Step 1:

- Before federated learning begins, clients choose their level according to their needs and pay the corresponding amount. All client choices are sent to each server in the first round of federated learning. The transmission process, shown in Algorithm 6, is very similar to the previous one, Algorithm 2, except that the clients send their selected level to the servers. Level information is only transmitted after the first round of local training in federated learning, and subsequent rounds are carried out according to Algorithm 2.

- Step 2:

- To allow clients of different levels to receive with different degrees of adjustment, we formulate the Level Range Table for the level server. The table lists the random number ranges for each level. In Step 4, the generated random numbers are used to adjust the gradients.

- Step 3:

- The level server generates a Gradient Adjustment Table. This table lists p random numbers () in the corresponding range for each level according to the Level Range Table (Algorithm 7).

- Step 4:

- The level server sends the Gradient Adjustment Table to t selected servers. These servers adjust the model gradients based on the level indicated by the client in Step 1. They multiply the i-th layer of the model by the corresponding level in the Gradient Adjustment Table (Algorithm 8).

- Step 5:

- Like the model update in Section 4.3 (Algorithm 5), t servers send the adjusted w to each client. After receiving t gradient shares from t servers, each client sums the shares to obtain new gradients corresponding to their level and then uses these new gradients to update the model.

| Algorithm 6 Sending information to servers |

| Input: is the q group of gradient shares of , is the identifier of , is the data volume of , is the level chosen by . for to do sends to end for sends and to |

| Algorithm 7 Generate Gradient Adjustment Table |

| Input: Level Range Table, model layer number p. Output: Gradient Adjustment Table . for each level do Generate p random number based on the Level Range Table. end for |

| Algorithm 8 Gradient adjustment |

| Input: Gradient Adjustment Table , the is the weighted average gradient shares by the , model layer number p, is the level selected by . for to p do The gradient of the j-th layer in is adjusted by multiplying with of the in . end for |

6. Evaluation

6.1. Learning Performance and Computational Cost

6.1.1. Experimental Environment

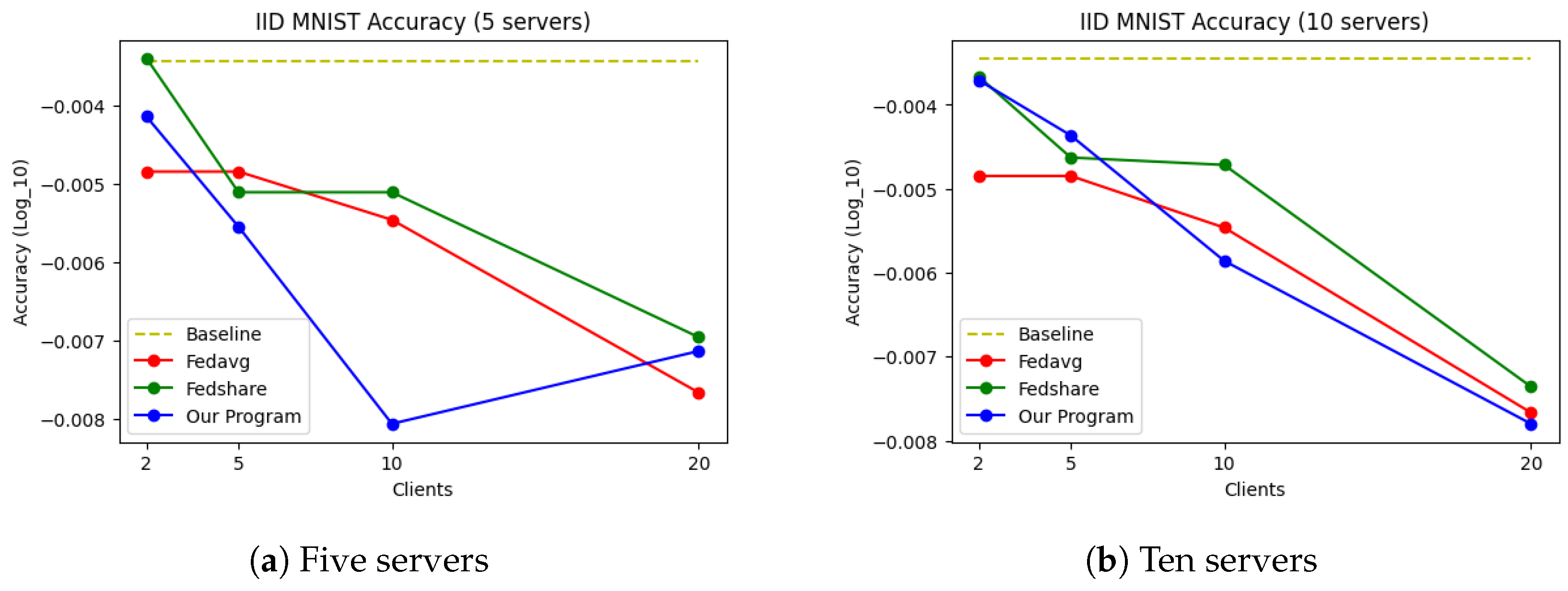

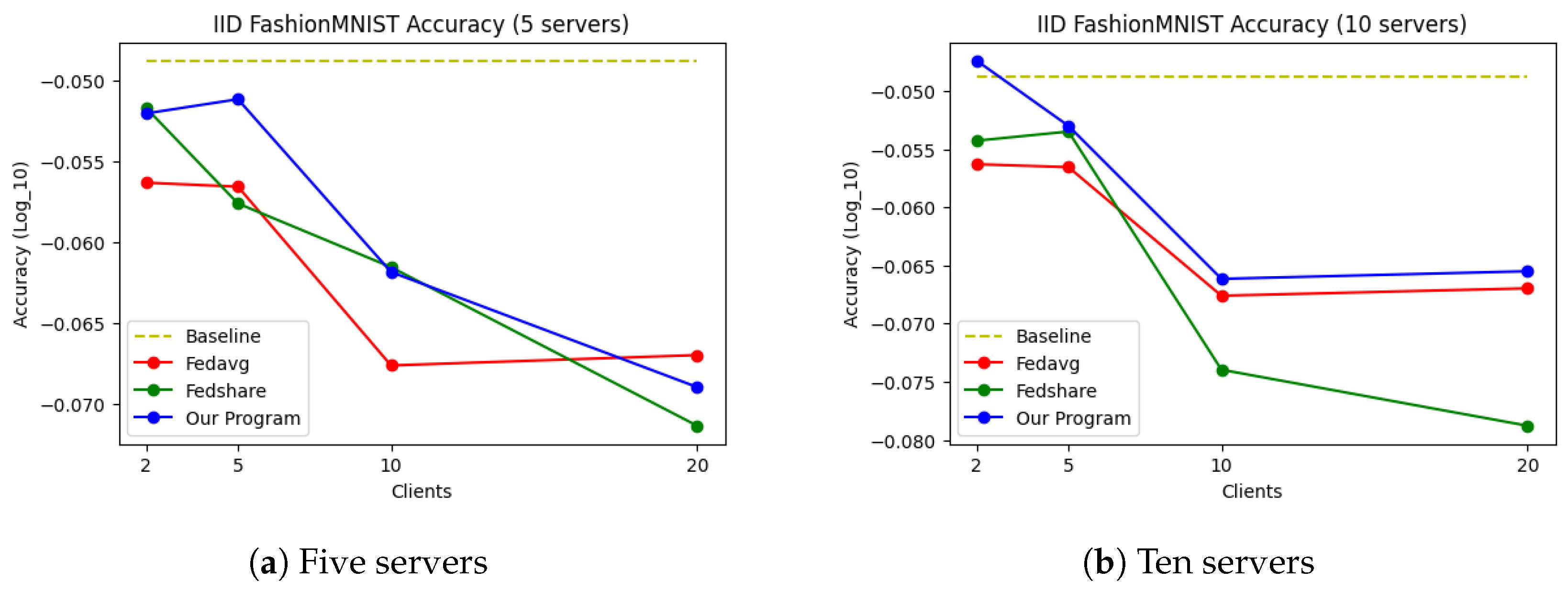

6.1.2. Accuracy Comparison for Independent and Identically Distributed Data (IID)

6.1.3. Accuracy Comparison for Non-Independent and Non-Identically Distributed Data (Non-IID)

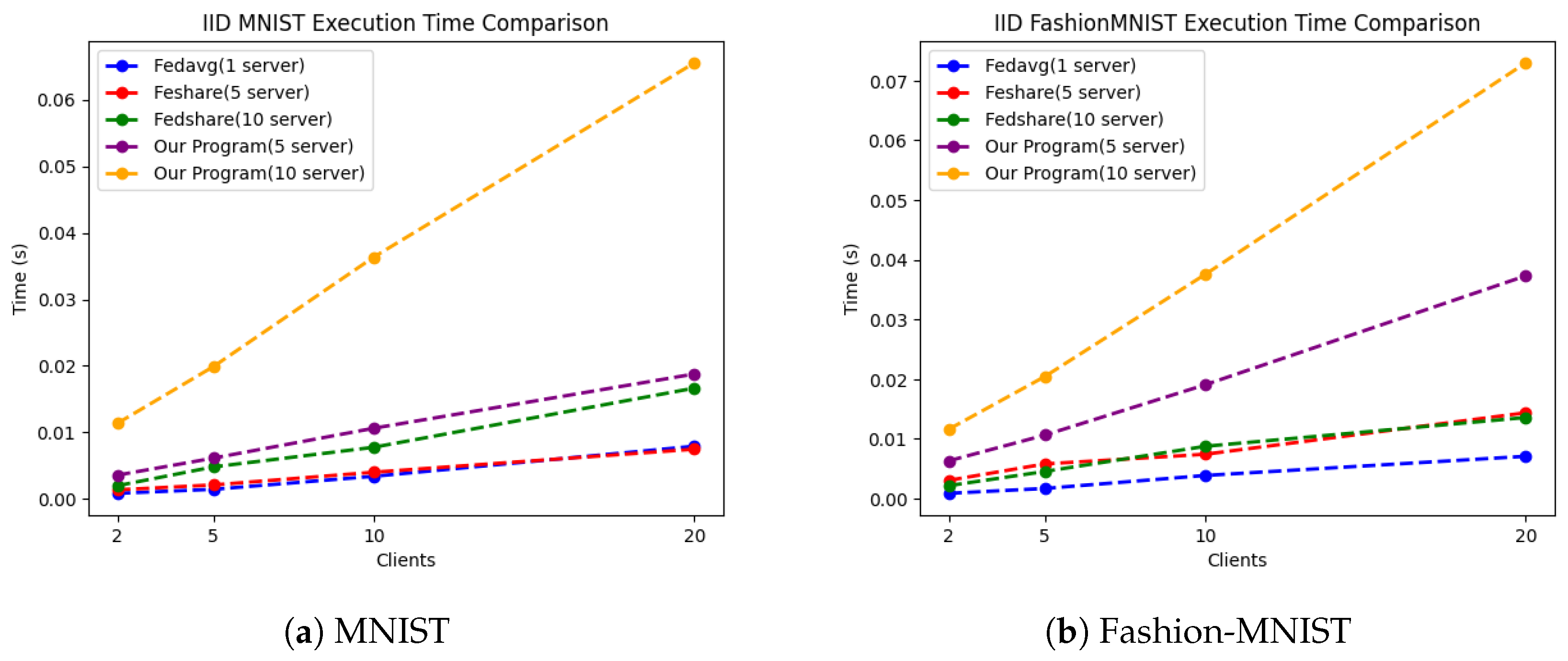

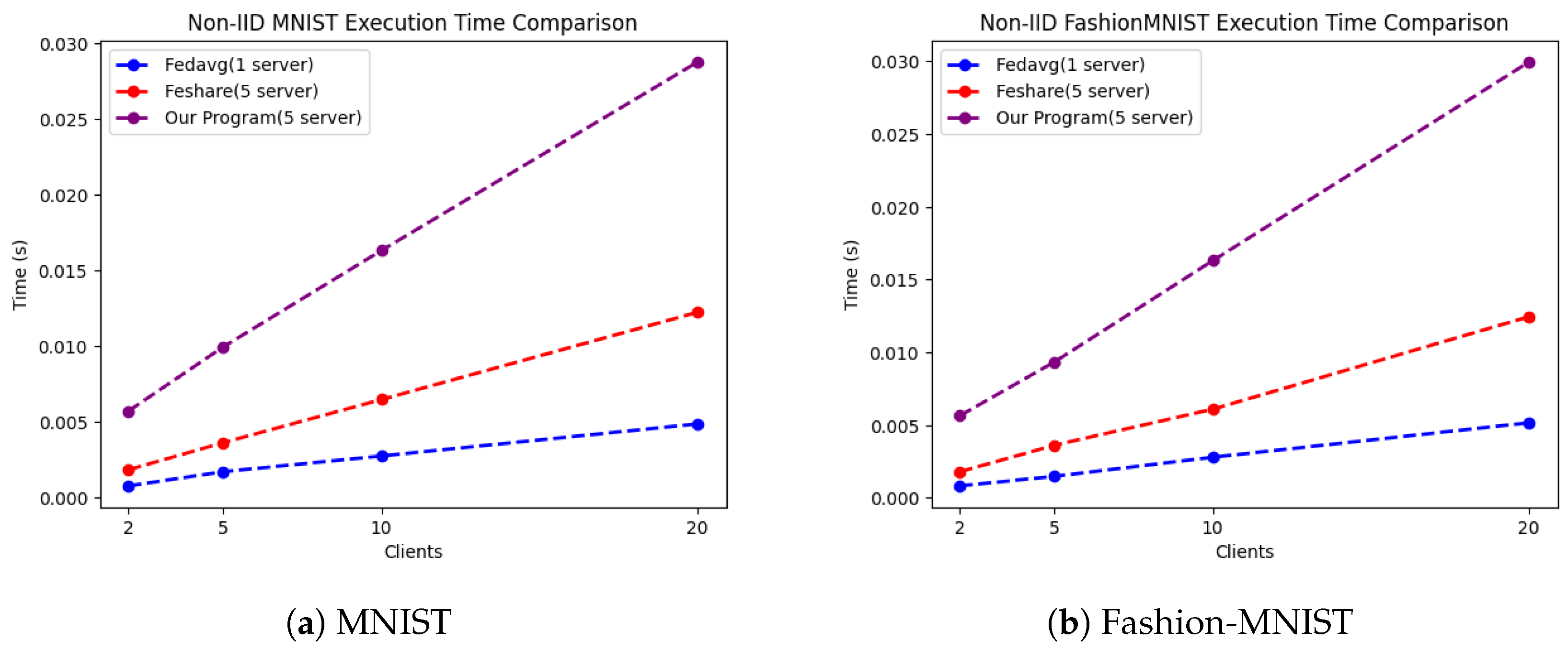

6.1.4. Computational Cost for IID and Non-IID Data

6.2. The Recovery Probability for Server Errors

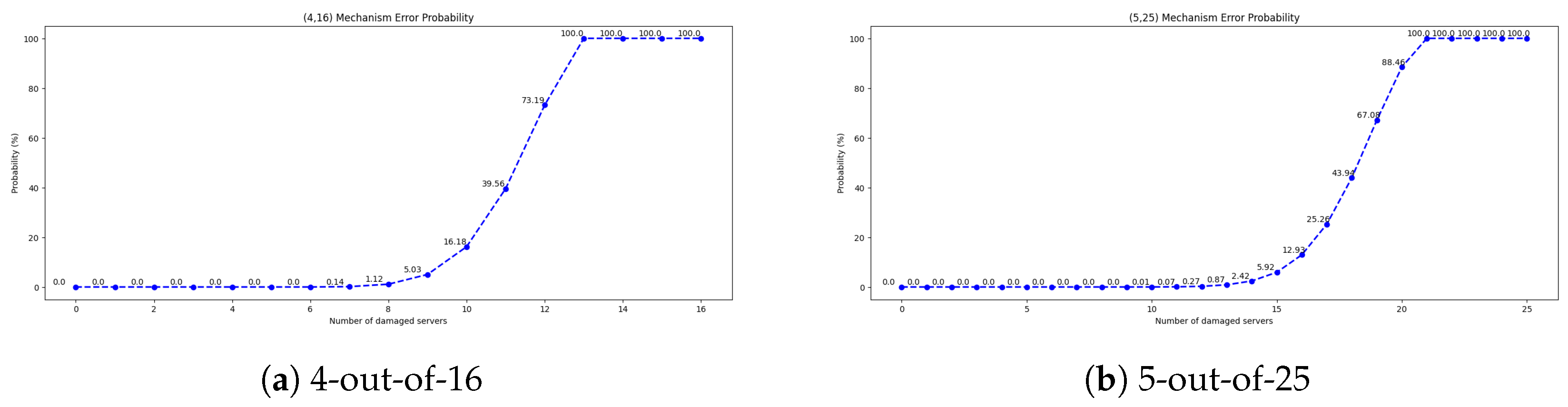

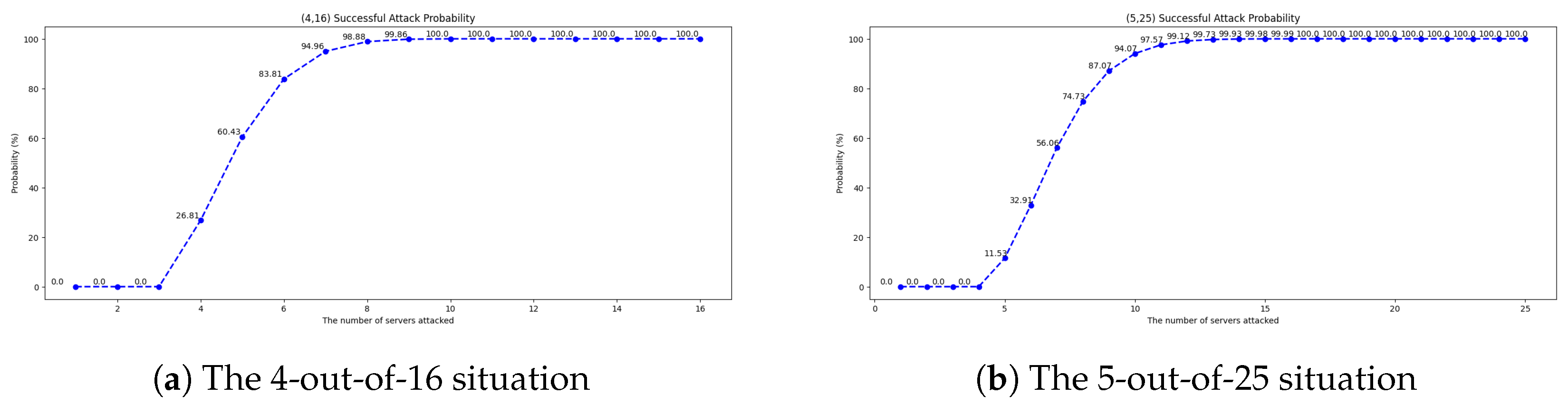

6.3. The Successful Attack Probability for Compromised Servers

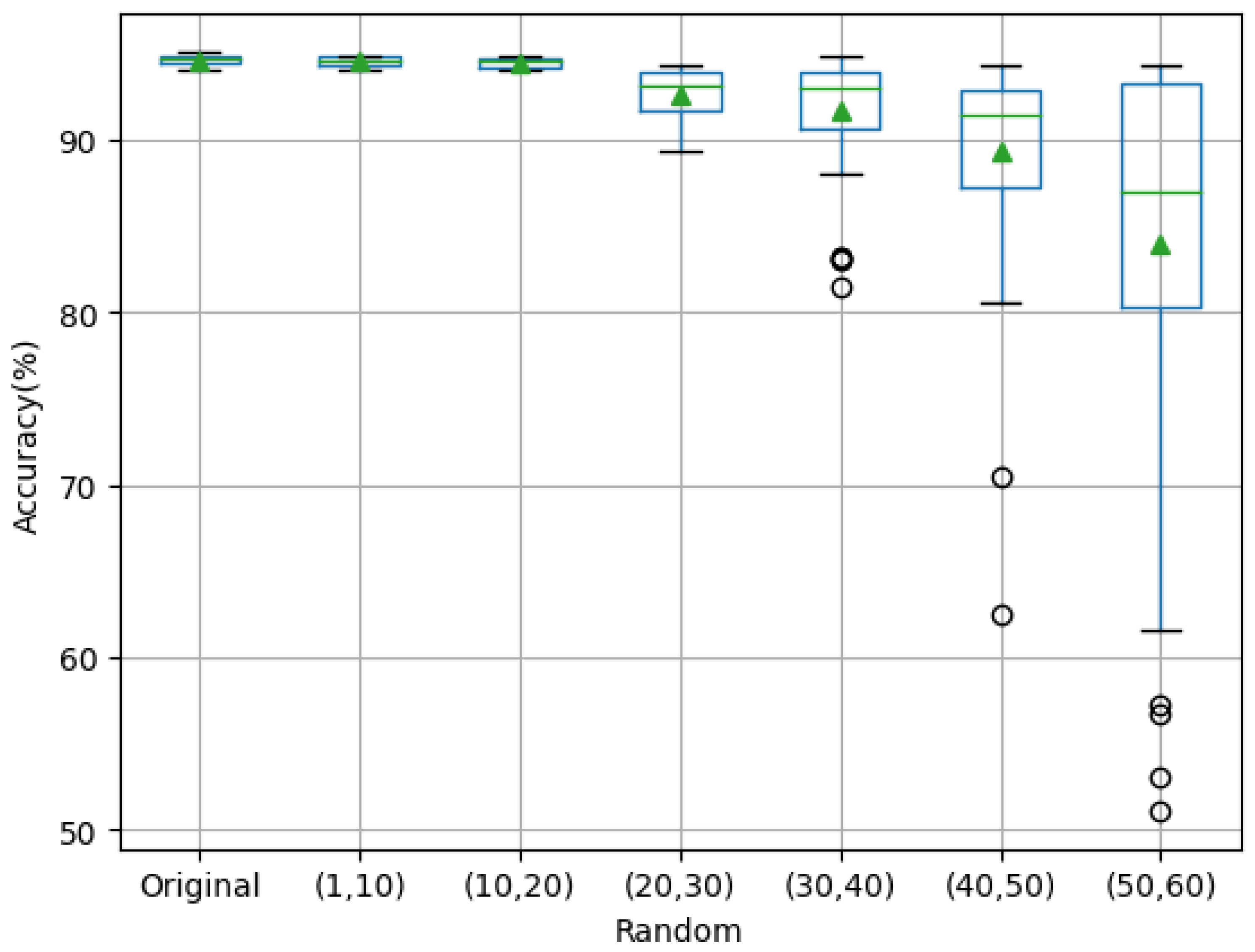

6.4. Quality of Federated Learning Experiment

7. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| SA | Selective Availability |

| DLG | Deep Leakage from Gradients |

| SMC | Secure Multiparty Computation |

| GPS | Global Positioning System |

References

- McMahan, H.B.; Moore, E.; Ramage, D.; y Arcas, B.A. Federated Learning of Deep Networks using Model Averaging. arXiv 2016, arXiv:1602.05629. [Google Scholar]

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-Efficient Learning of Deep Networks from Decentralized Data. arXiv 2023, arXiv:1602.05629. [Google Scholar]

- Zhu, L.; Liu, Z.; Han, S. Deep leakage from gradients. In Proceedings of the Annual Conference on Neural Information Processing Systems 2019, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Zhao, B.; Mopuri, K.R.; Bilen, H. idlg: Improved deep leakage from gradients. arXiv 2020, arXiv:2001.02610. [Google Scholar]

- Wei, W.; Liu, L.; Loper, M.; Chow, K.H.; Gursoy, M.E.; Truex, S.; Wu, Y. A framework for evaluating gradient leakage attacks in federated learning. arXiv 2020, arXiv:2004.10397. [Google Scholar]

- Geiping, J.; Bauermeister, H.; Dröge, H.; Moeller, M. Inverting gradients-how easy is it to break privacy in federated learning? Adv. Neural Inf. Process. Syst. 2020, 33, 16937–16947. [Google Scholar]

- More, Y.; Ramachandran, P.; Panda, P.; Mondal, A.; Virk, H.; Gupta, D. SCOTCH: An efficient secure computation framework for secure aggregation. arXiv 2022, arXiv:2201.07730. [Google Scholar]

- Fazli Khojir, H.; Alhadidi, D.; Rouhani, S.; Mohammed, N. FedShare: Secure aggregation based on additive secret sharing in federated learning. In Proceedings of the 27th International Database Engineered Applications Symposium, Heraklion, Crete, Greece, 5–7 May 2023; pp. 25–33. [Google Scholar]

- Han, D.J.; Choi, M.; Park, J.; Moon, J. FedMes: Speeding up federated learning with multiple edge servers. IEEE J. Sel. Areas Commun. 2021, 39, 3870–3885. [Google Scholar] [CrossRef]

- Qu, Z.; Li, X.; Xu, J.; Tang, B.; Lu, Z.; Liu, Y. On the convergence of multi-server federated learning with overlapping area. IEEE Trans. Mob. Comput. 2022, 22, 6647–6662. [Google Scholar] [CrossRef]

- Dwork, C. Differential privacy. In International Colloquium on Automata, Languages, and Programming; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–12. [Google Scholar]

- Yao, A.C. Protocols for secure computations. In Proceedings of the 23rd Annual Symposium on Foundations of Computer Science (SFCS 1982), Chicago, IL, USA, 3–5 November 1982; pp. 160–164. [Google Scholar]

- Park, J.; Lim, H. Privacy-preserving federated learning using homomorphic encryption. Appl. Sci. 2022, 12, 734. [Google Scholar] [CrossRef]

- Fang, H.; Qian, Q. Privacy preserving machine learning with homomorphic encryption and federated learning. Future Internet 2021, 13, 94. [Google Scholar] [CrossRef]

- Zhang, C.; Li, S.; Xia, J.; Wang, W.; Yan, F.; Liu, Y. {BatchCrypt}: Efficient homomorphic encryption for {Cross-Silo} federated learning. In Proceedings of the 2020 USENIX Annual Technical Conference (USENIX ATC 20), Online, 15–17 July 2020; pp. 493–506. [Google Scholar]

- Ma, J.; Naas, S.A.; Sigg, S.; Lyu, X. Privacy-preserving federated learning based on multi-key homomorphic encryption. Int. J. Intell. Syst. 2022, 37, 5880–5901. [Google Scholar] [CrossRef]

- Ku, H.; Susilo, W.; Zhang, Y.; Liu, W.; Zhang, M. Privacy-preserving federated learning in medical diagnosis with homomorphic re-encryption. Comput. Stand. Interfaces 2022, 80, 103583. [Google Scholar] [CrossRef]

- Chuanxin, Z.; Yi, S.; Degang, W. Federated learning with Gaussian differential privacy. In Proceedings of the 2020 2nd International Conference on Robotics, Intelligent Control and Artificial Intelligence, Shanghai, China, 17–19 October 2020; pp. 296–301. [Google Scholar]

- Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469. [Google Scholar] [CrossRef]

- Triastcyn, A.; Faltings, B. Federated learning with bayesian differential privacy. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 2587–2596. [Google Scholar]

- Sun, L.; Qian, J.; Chen, X. LDP-FL: Practical private aggregation in federated learning with local differential privacy. arXiv 2020, arXiv:2007.15789. [Google Scholar]

- Duan, J.; Zhou, J.; Li, Y. Privacy-preserving distributed deep learning based on secret sharing. Inf. Sci. 2020, 527, 108–127. [Google Scholar] [CrossRef]

- Li, Y.; Zhou, Y.; Jolfaei, A.; Yu, D.; Xu, G.; Zheng, X. Privacy-preserving federated learning framework based on chained secure multiparty computing. IEEE Internet Things J. 2020, 8, 6178–6186. [Google Scholar] [CrossRef]

- Xu, R.; Baracaldo, N.; Zhou, Y.; Anwar, A.; Ludwig, H. Hybridalpha: An efficient approach for privacy-preserving federated learning. In Proceedings of the P12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 13–23. [Google Scholar]

- Columbia University, Department of Computer Science. COMS W4261: Introduction to Cryptography. 2019. Available online: https://www.cs.columbia.edu/~tal/4261/F19/secretsharingf19.pdf (accessed on 11 August 2023).

- Shamir, A. How to share a secret. Commun. ACM 1979, 22, 612–613. [Google Scholar] [CrossRef]

- Bisong, E.; Bisong, E. Google colaboratory. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Berkeley, CA, USA, 2019; pp. 59–64. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of the Annual Conference on Neural Information Processing Systems 2019, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Deng, L. The mnist database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Process. Mag. 2012, 29, 141–142. [Google Scholar] [CrossRef]

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-mnist: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747. [Google Scholar]

| Notation | Description |

|---|---|

| server | |

| client | |

| n | number of servers |

| t | number of selected threshold |

| q | number of bits converted from server number, |

| z | number of clients |

| the gradient of the i-th client’s model | |

| the k-th share of the j-th secret shared by the i-th client | |

| the amount of data from the ith client | |

| L | the total data amount, |

| h | the different bit numbers of the selected server |

| w | the weighted average obtained by servers |

| the set of numbers of the selected t servers | |

| S | the set of the number of all servers |

| the random number for adjusting gradient of model i-th layer | |

| p | the number of layers in the model |

| the membership level | |

| the Gradient Adjustment Table generated by the level server |

| Accuracy (%) | Maximum | Minimum | Average | Percentage Drop | |

|---|---|---|---|---|---|

| Random | |||||

| Original | 95.09 | 94.09 | 94.62 | − | |

| (1, 10) | 94.85 | 94.04 | 94.54 | 0.08 | |

| (10, 20) | 94.77 | 94.07 | 94.44 | 0.18 | |

| (20, 30) | 94.26 | 89.32 | 92.62 | 2.11 | |

| (30, 40) | 94.77 | 81.45 | 91.64 | 3.15 | |

| (40, 50) | 94.35 | 62.52 | 89.29 | 5.63 | |

| (50, 60) | 94.34 | 51.11 | 83.97 | 11.25 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ting, Y.-T.; Chi, P.-W.; Kuo, C.-T. A Reliable Aggregation Method Based on Threshold Additive Secret Sharing in Federated Learning with Quality of Service (QoS) Support. Appl. Sci. 2024, 14, 8959. https://doi.org/10.3390/app14198959

Ting Y-T, Chi P-W, Kuo C-T. A Reliable Aggregation Method Based on Threshold Additive Secret Sharing in Federated Learning with Quality of Service (QoS) Support. Applied Sciences. 2024; 14(19):8959. https://doi.org/10.3390/app14198959

Chicago/Turabian StyleTing, Yu-Ting, Po-Wen Chi, and Chien-Ting Kuo. 2024. "A Reliable Aggregation Method Based on Threshold Additive Secret Sharing in Federated Learning with Quality of Service (QoS) Support" Applied Sciences 14, no. 19: 8959. https://doi.org/10.3390/app14198959

APA StyleTing, Y.-T., Chi, P.-W., & Kuo, C.-T. (2024). A Reliable Aggregation Method Based on Threshold Additive Secret Sharing in Federated Learning with Quality of Service (QoS) Support. Applied Sciences, 14(19), 8959. https://doi.org/10.3390/app14198959