Abstract

Visual servoing technology is well developed and applied in many automated manufacturing tasks, especially in tools’ pose alignment. To access a full global view of tools, most applications adopt an eye-to-hand configuration or an eye-to-hand/eye-in-hand cooperation configuration in an automated manufacturing environment. Most research papers mainly put efforts into developing control and observation architectures in various scenarios, but few have discussed the importance of the camera’s location in the eye-to-hand configuration. In a manufacturing environment, the quality of camera estimations may vary significantly from one observation location to another, as the combined effects of environmental conditions result in different noise levels of a single image shot in different locations. In this paper, we propose an algorithm for the camera’s moving policy so that it explores the camera workspace and searches for the optimal location where the image’s noise level is minimized. Also, this algorithm ensures the camera ends up at a suboptimal (if the optimal one is unreachable) location among the locations already searched with the limited energy available for moving the camera. Unlike a simple brute-force approach, the algorithm enables the camera to explore space more efficiently by adapting the search policy by learning the environment. With the aid of an image-averaging technique, this algorithm, in the use of a solo camera, achieves observation accuracy in eye-to-hand configurations to a desirable extent without filtering out high-frequency information in the original image. An automated manufacturing application was simulated, and the results show the success of this algorithm’s improvement in observation precision with limited energy.

1. Introduction

In the automated industry, automatic alignment plays a critical role in many different applications, such as micromanipulation, autonomous welding, and industrial assembly [1,2,3]. Visual servoing is the technique commonly used in tasks of alignment as it can guide robotic manipulators to their desired poses (positions and orientations) [4,5,6]. Visual servoing is the method of controlling a robot’s motion using real-time feedback from visual sensors that continuously extract image features [7].

According to the relative positions between cameras and the tool manipulators, visual servoing can be generally divided into two categories: eye-in-hand (EIH) configuration and eye-to-hand (ETH) configuration [8,9]. In EIH, a camera is mounted directly on a robot manipulator, in which case the motion of the robot induces the motion of the camera, while in ETH, the camera is fixed in the workspace and observes the motion of the robot from a stationary configuration. Both configurations have their own merits and drawbacks regarding the field-of-view limit and precision that oppose each other. EIH has a partial but precise view of the scene, whereas ETH has a global but less precise view of it [10]. For complex tasks in automated manufacturing, one configuration of visual servoing is inadequate as these tasks require the camera to provide global views and be maneuverable enough to explore the scene [10]. To take advantage of both stationary and robot-mounted sensors, two configurations can be employed in a cooperative scheme, which can be seen in some studies [11,12,13].

In recent years, many works have been published to show visual servoing paradigms being applied in industrial environments. For example, Lippiello et al. [14] presented a position-based visual servoing approach for a multi-arm robotic cell equipped with a hybrid eye-in-hand/eye-to-hand multicamera system. Zhu et al. [15] discussed Abbe errors in a 2D vision system for robotic drilling. Four laser displacement sensors were used to improve the accuracy of the vision-based measurement system. Liu et al. [16,17] proposed a visual servoing method for the automative assembly of aircraft components. With the measurements from two CCD cameras and four distance sensors, the proposed method can accurately align the ball-head, which is fixed on aircraft structures in a finite time. Sensor accuracy is a key element in many studies as it is not only a requirement for some delicate applications but also affects the robustness of visual servoing techniques [18].

However, gaps exist between real-world applications and the open-space experiments proposed in the above studies. Real-world products are usually manufactured in a confined and complicated environment where complex factors may significantly degrade the quality of observed signals given from even the most precise sensors. For example, illumination changes in space [18] can be a serious issue for camera estimation in real manufacturing processes, which is not normally addressed in research experiments where illumination is usually sufficient and equally distributed.

In this paper, we would like to address the importance of camera location in ETH or ETH/EIH cooperative configurations and discuss the benefits of developing algorithms that place the camera in an optimal location. To observe a single point in the workspace, there are an infinite number of poses that a fixed camera can be placed in the space. Among all possible poses of the camera that keep the same target within the field-of-view limit, the image noise at different locations may play a great role in the precision of observations. Many environmental conditions (such as illumination, temperature, etc.) affect the noise level of a single image [19]. In a manufacturing environment, the quality of estimations from a camera may vary significantly from one observation location to another. For instance, the precision of an estimation improves greatly if the camera moves from a location where it is placed within the shade of machines to a location that has better illumination. Also, places near machines may be surrounded by strong electrical signals that introduce extra noise into the camera sensors.

Many papers in visual servoing consider applying filters to reduce image noise and, therefore, increase the precision of observations [20,21,22,23]. Gaussian white noise can be dealt with using spatial filters, e.g., the Gaussian filter, the Mean filter, and the Wiener filter [24]. In addition, wavelet methods [25,26] have the benefit of keeping more useful details but at the expense of computational complexity. However, filters that operate in the wavelet domain still inevitably filter out (or blur) some important high-frequency useful information of the original image, even though they succeed in preserving more edges compared with spatial filters. Especially at locations where the signal-to-noise ratio (SNR) in an image is low, no matter what filters are applied, it is difficult to safeguard the edges of the noise-free image as well as reduce the noise to a desirable level. Thus, developing an algorithm that searches for locations of the camera where the SNR is high is beneficial.

We can approach the denoising problem with multiple noisy images taken from the same perspective. This method is called signal averaging (or image averaging in the application of image processing) [27]. Assume images taken from the same perspective but at different times have the same noise levels. Then, averaging multiple images at the same perspective will reduce the unwanted noise as well as retain all the original image details. We can also assume the robot’s end-effector holds the camera rigidly so that any shaking and drift are negligible when shooting pictures. Moreover, the number of images required for averaging has a quadratic dependence on the ratio of an image’s original noise level to the reduced level that is desirable for observations. Therefore, the number of images required is a measurement of the noise level in a single image. Furthermore, in the denoising process, precise estimations require that the original image details are retained as much as possible. Based on previous statements, we decided to choose image averaging over all other denoising techniques in this work.

Frame-based cameras, which detect, track, and match visual features by processing images at consecutive frames, cause a fundamental problem of delays in image processing and the consequent robot action [28]. By averaging more images for an observation, fewer noises are maintained in the averaged image, but more processing time is required for acquiring visual features; it is a typical tradeoff between high-rate and high-resolution in cameras [18]. The control loop frequency is limited in many cases due to the low rate of imaging, which undermines the capability/usage of visual servoing techniques in high-speed operations. Therefore, it is rewarding for an eye-in-hand configuration to find the location of the camera where the original image noise is lowest; thus, the least number of images is needed in order to facilitate the manufacturing speed.

Brute-force search [29] is a simple and straightforward approach to looking for the optimal location of the camera. It systematically searches all possible locations in space and gives the best solution. This method is only feasible when the size of the search space is small enough. Otherwise, brute force, which checks every possibility, is usually inefficient, especially for large datasets or complex problems, where the number of possibilities can grow exponentially. An intelligent algorithm is necessarily developed for the sake of energy efficiency and time consumption.

In this paper, we propose an algorithm that efficiently searches the camera’s workspace to find an optimal location (if its orientation is fixed) of the camera so that a single image taken at this location has the smallest noise level among images taken at all locations in the space. With limited energy for moving the camera, this algorithm also ensures the camera ends up at a suboptimal (if the optimal pose is unreachable) location among the locations already searched.

2. Denoising Technique Comparison

In this section, we further discuss various existing image-denoising techniques and conduct a comparison study of some techniques based on their noise-reduction rate and high-frequency data loss.

Various denoising techniques have been proposed so far. A good noise removal algorithm ought to remove as much noise as possible while safeguarding edges. Gaussian white noise has been dealt with using spatial filters, e.g., the Gaussian filter, the Mean filter, and the Wiener filter 25]. Images can be viewed as a matrix with variant intensity values at each pixel. The spatial filter is a square matrix that performs convolutional production with the image matrix to produce filtered (noise-reduced) images, i.e.,

where is the original (noisy) image matrix entry at the coordinate . is the spatial filter (or kernel), and * accounts for the convolution. is the filtered (noisy-reduced) image matrix entry at the coordinate . All the spatial filters have the benefit of being computationally efficient but the shortcoming of blurring edges [25].

Also, image Gaussian noise reduction can be approached in the wavelet domain. In the conventional Fourier Transform, only frequency information is provided, while temporal information is lost in the transformation process. However, wavelet transformation will keep both the frequency and temporal information of the image [25]. The most widely used wavelet noise-decreasing method is the wavelet threshold method, as it can not only obtain an approximate optimal estimation of the original image but also calculate speedily and adapt widely [25]. The method can be divided into three steps. The first step is to decompose the noise-polluted image by wavelet transformation. Then, wavelets of useful signals will be retained, while most wavelets of noise will be set to zero according to the set threshold. The last step is to synthesize the new noise-reduced image by inverse wavelet transformation of the cleaned wavelets in the previous step [25,26]. The wavelet transformation method has the benefit of keeping more useful details but is more computationally complex than spatial filters. The threshold affects the performance of the filter. Soft thresholding provides smoother results, while a hard threshold provides better edge preservation [25]. However, whichever threshold is selected, filters that operate in the wavelet domain still filter out (or blur) some important high-frequency useful details in the original image, even though more edges are preserved than with spatial filters.

All the aforementioned methods present ways to reduce noise in image processing starting from a noisy image. We can approach this problem with multiple noisy images taken from the same perspective. Assume the same perspective ensures the same environmental conditions (illumination, temperature, etc.), which affect the image noise level. Given the same conditions, an image noise level taken at a particular time should be very similar to another image taken at a different time. This redundancy can be used for the purpose of improving image precision estimation in the presence of noise. The method that uses this redundancy to reduce noise is called signal averaging (or image averaging in the application of image processing) [27]. Image averaging has the natural advantage of retaining all the image details as well as reducing unwanted noises, given that all the images for the averaging technique are taken from the same perspective.

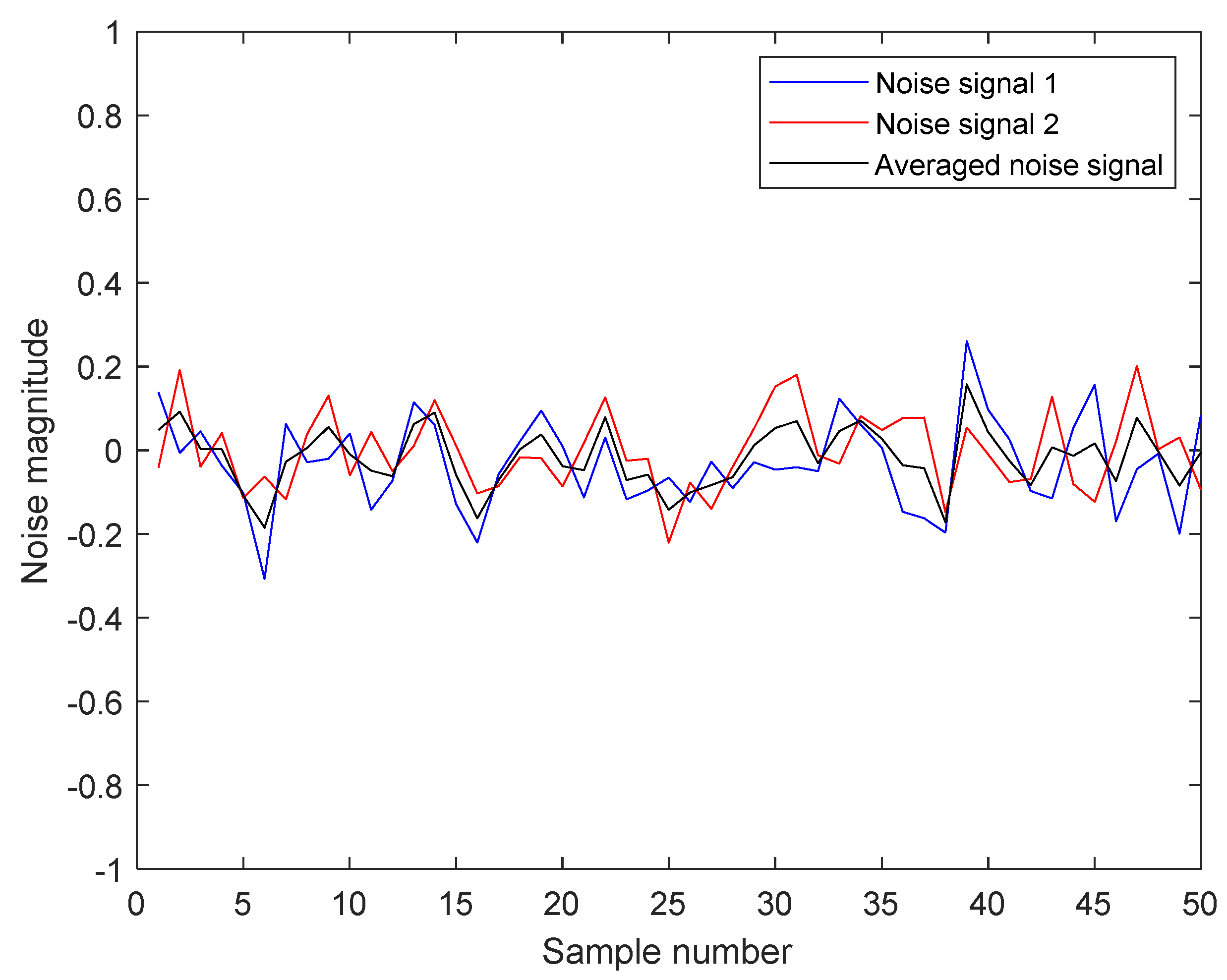

The image-averaging technique is illustrated in Figure 1. Assume a random, unbiased noise signal and that this noise signal is completely uncorrelated with the image signal itself. As noisy images are averaged, the original true image is kept the same, and the magnitude of the noise signal is compressed, thus improving the signal-to-noise ratio. In Figure 1, we generated two random signals with the same standard deviation, and they are, respectively, represented by blue and red lines. The black line is the average of the two signals, whose magnitude is significantly decreased compared to each of the original signals. In general, we can come up with a mathematical relationship between the noise level reduction and the sample size for averaging. Assume we have N numbers of Gaussian white noise samples with the standard deviation . Each sample is denoted as , where i represents the sample signal. Therefore, we can acquire:

where is the variance, is the expectation of the signal, and is the standard deviation.

Figure 1.

An example of noise level reduction by image averaging.

By averaging N Gaussian white noise signals, we can write:

where is the average of N noise signals. Equation (3) demonstrates that a total number of N samples is required to reduce the signal noise level by . Since our goal is to reduce the image noise within a fixed threshold (represented by a constant standard deviation), a smaller deviation in the original image requires much fewer samples to make an equivalent noise-reduction estimation. Thus, it is worthwhile for the camera to move around, rather than being stationary, to find the best locations where the image noise level estimation is small. In general, we can reduce the noise level as much as needed by taking more samples.

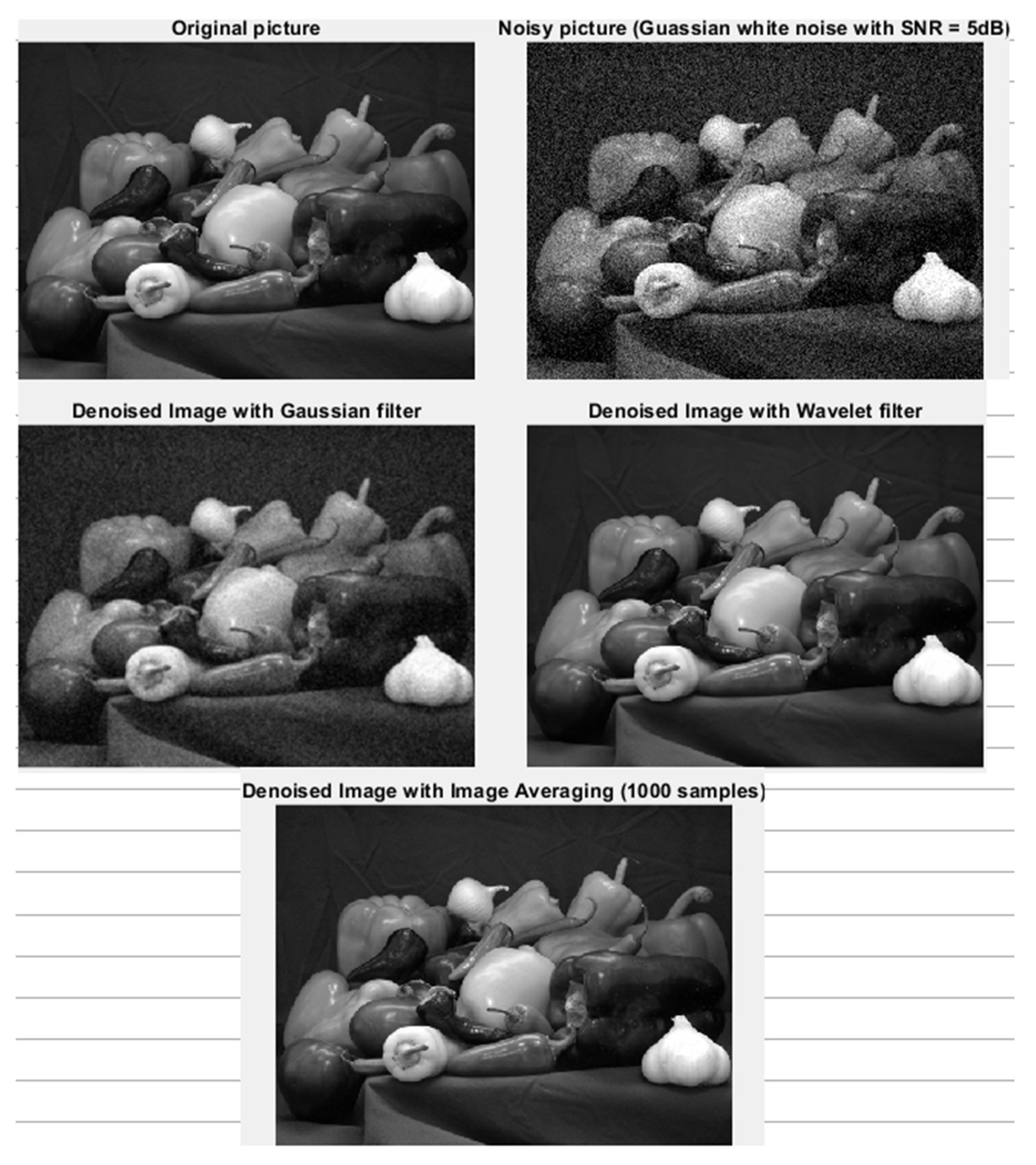

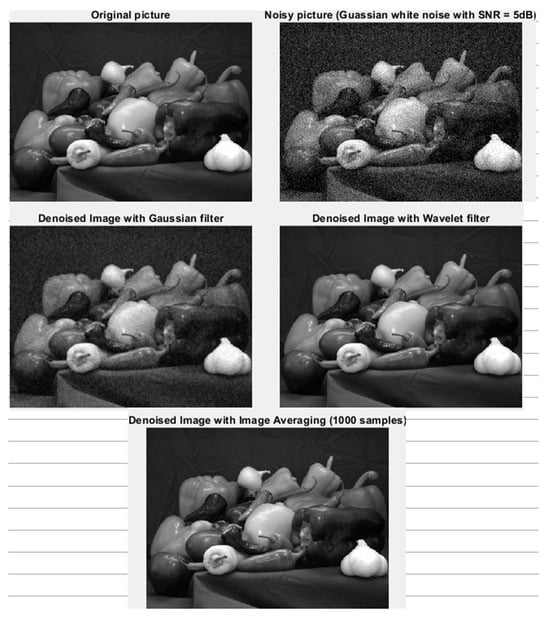

A comparison of the above denoising methods is presented in the following: In Figure 2, a default picture from MATLAB R2023b is polluted with Gaussian-distributed white noise with an SNR equating to 5 dB. A spatial filter (Gaussian filter), a wavelet filter (the wavelet threshold method), and the image-averaging technique (1000 average sample) were applied to denoise the noisy image. Figure 1 shows all techniques succeed in reducing the noise to some extent, while the wavelet filter and the image-averaging technique perform better than the spatial filter regarding denoising. The difference between the wavelet filter and the image-averaging technique is not obvious in Figure 2 but becomes more significant in the frequency domain.

Figure 2.

Original image, noisy image, and denoised images.

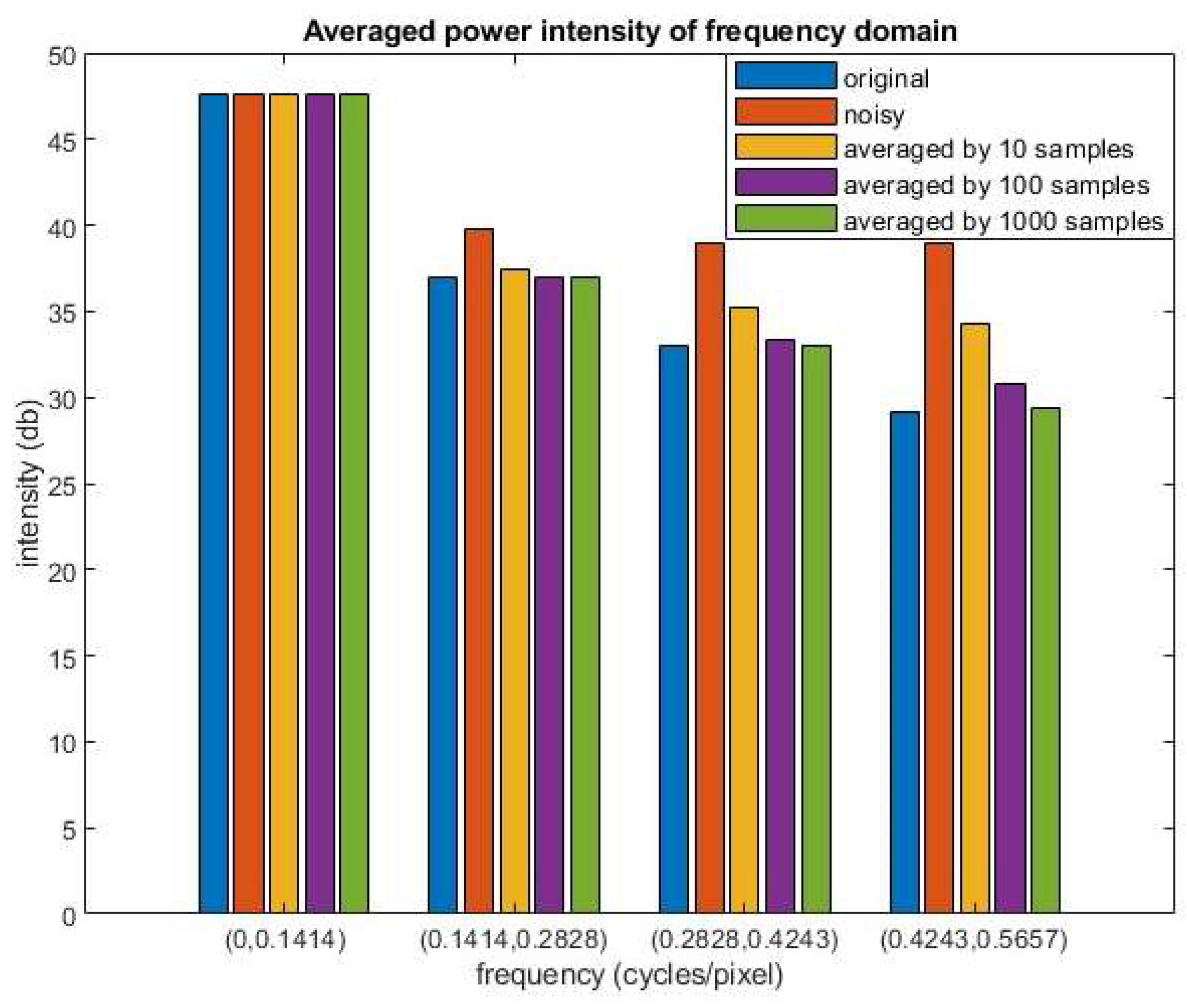

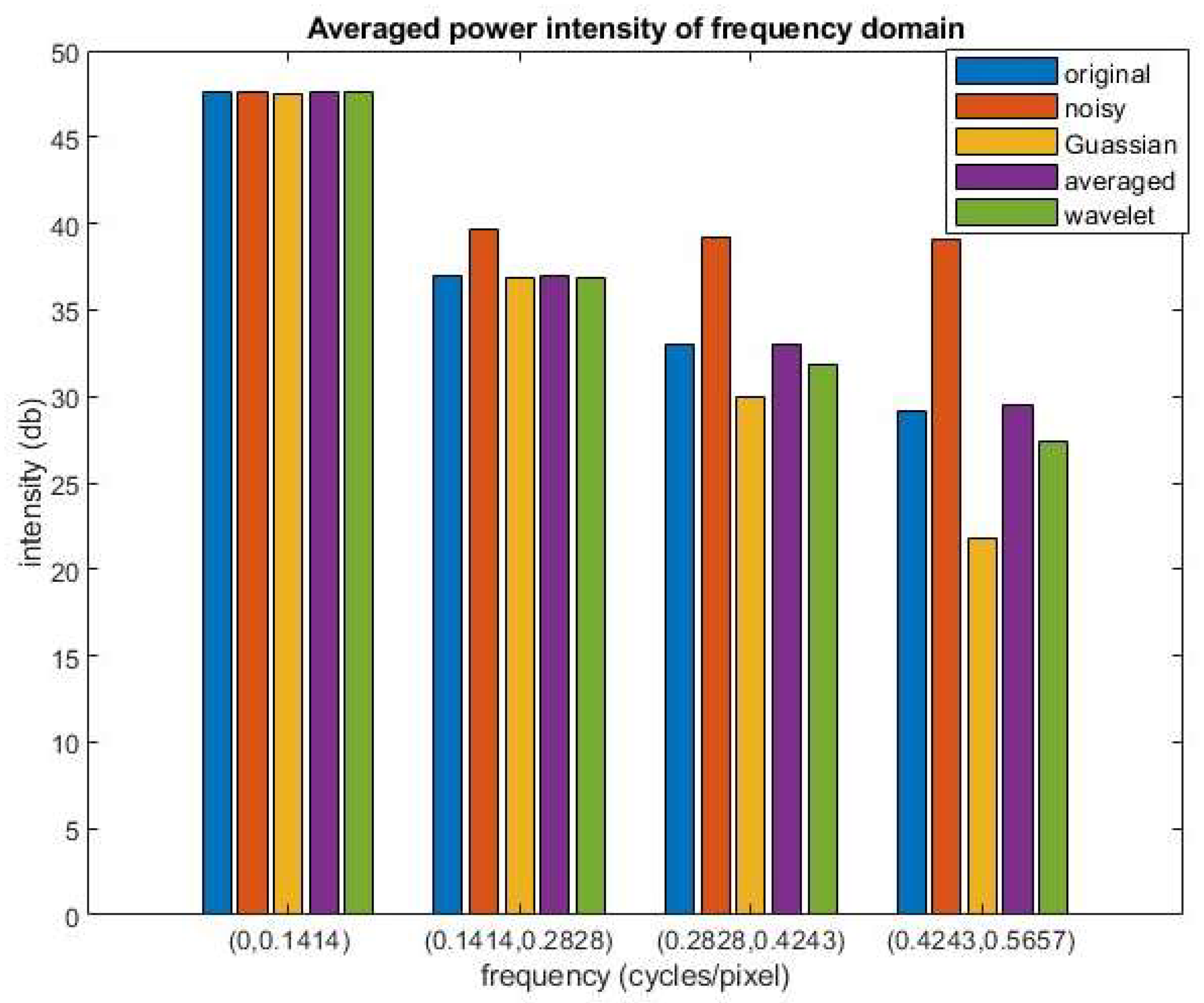

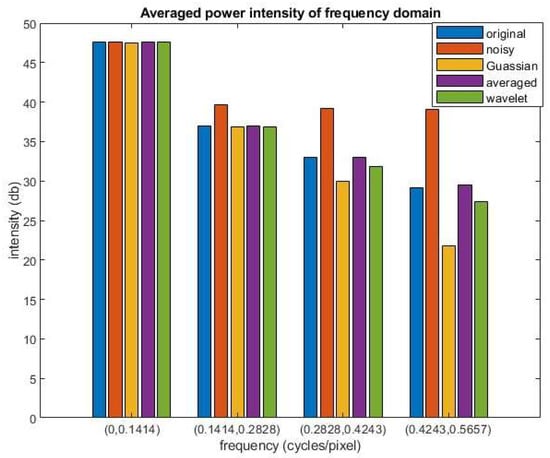

Figure 3 compares the performance in the frequency domain of image-averaging methods with different samples, and Figure 4 shows the difference in the frequency domain after applying the above three denoising methods. The power intensity is separated from low to high, as shown in those two figures. By comparing the original image and the noisy image, it can be observed that noise intensity is larger in the higher frequency domain. Figure 3 shows that by averaging more samples, the noise level decreases in all frequencies. In particular, the averaged image is almost the same as the original one when 1000 samples are averaged.

Figure 3.

Frequency analysis of image-averaging technique with varying samples.

Figure 4.

Frequency analysis of different denoising techniques.

Figure 4 shows the image generated from the Gaussian filter has a lower power intensity than that of the original image with high frequency, which indicates that a lot of high-frequency data in the original image has been filtered out as well. Although it performs better than a Gaussian filter, the wavelet filter still loses some details of the original image. Only the averaging method can reduce the noise level and retain all the information.

3. Algorithm Flowchart

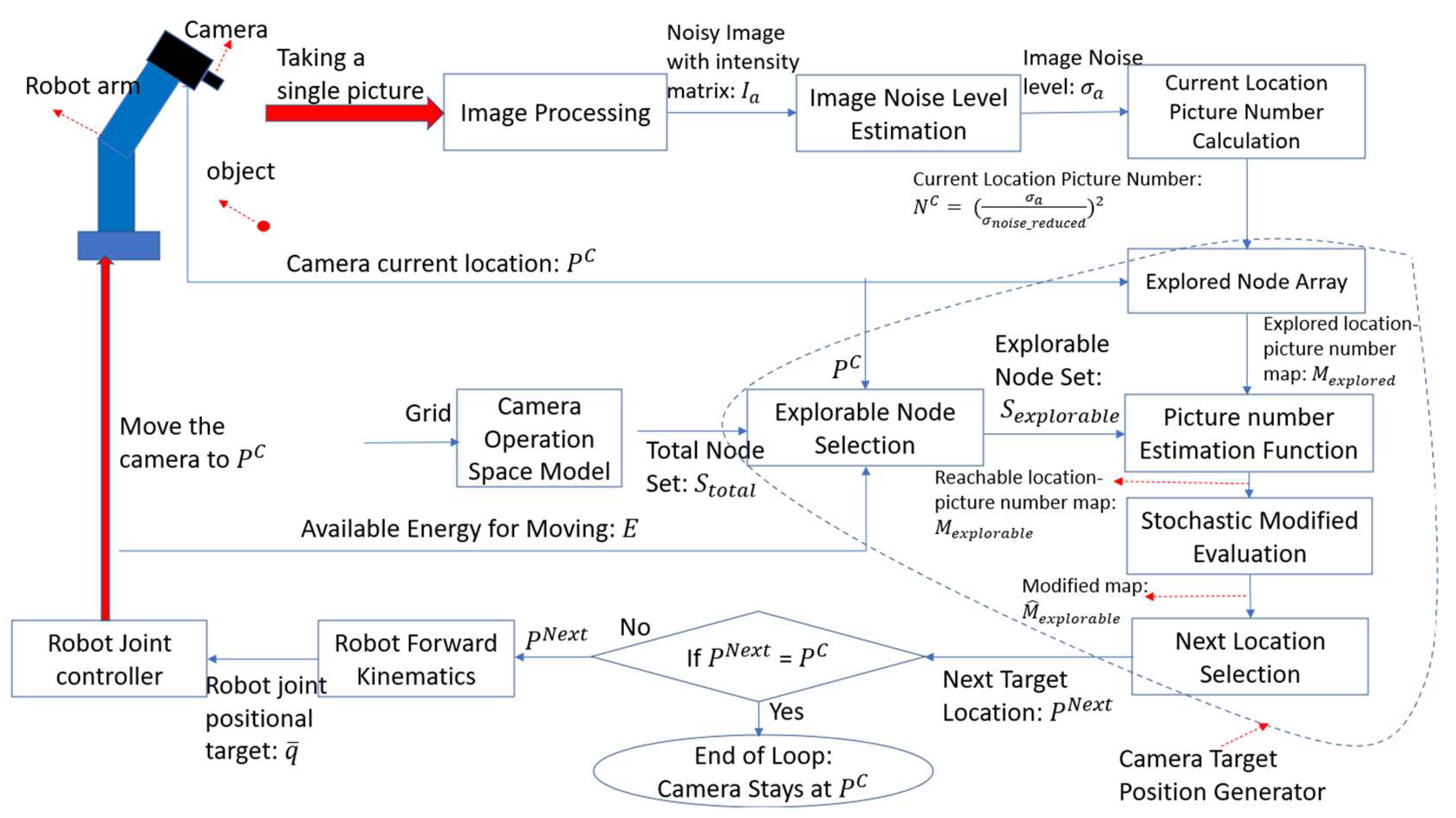

The proposed algorithm aims to search and move the camera to the location where the least number of pictures are needed to reduce the noise level of the averaged image to a degree . A flowchart describing the algorithm is shown in Figure 5.

Figure 5.

Algorithm flowchart. (The red arrows refer to processes involving hardware of the system, and the blue arrows refer to processes executed only with software).

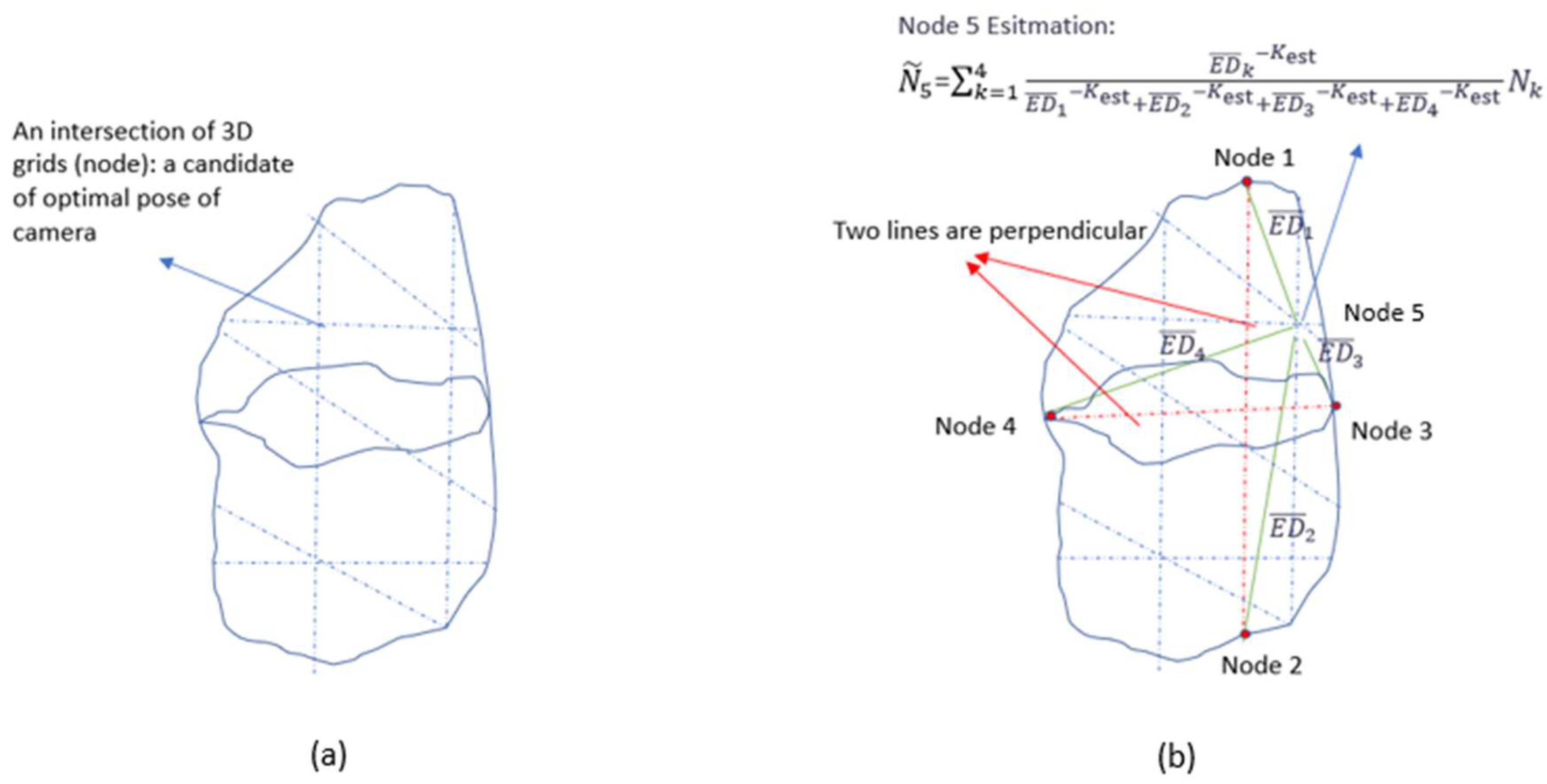

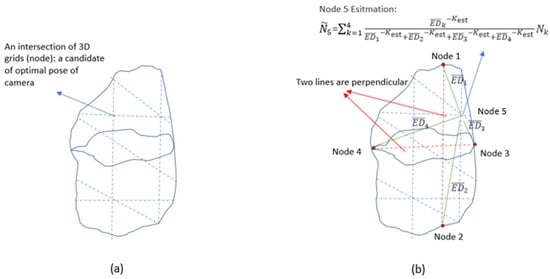

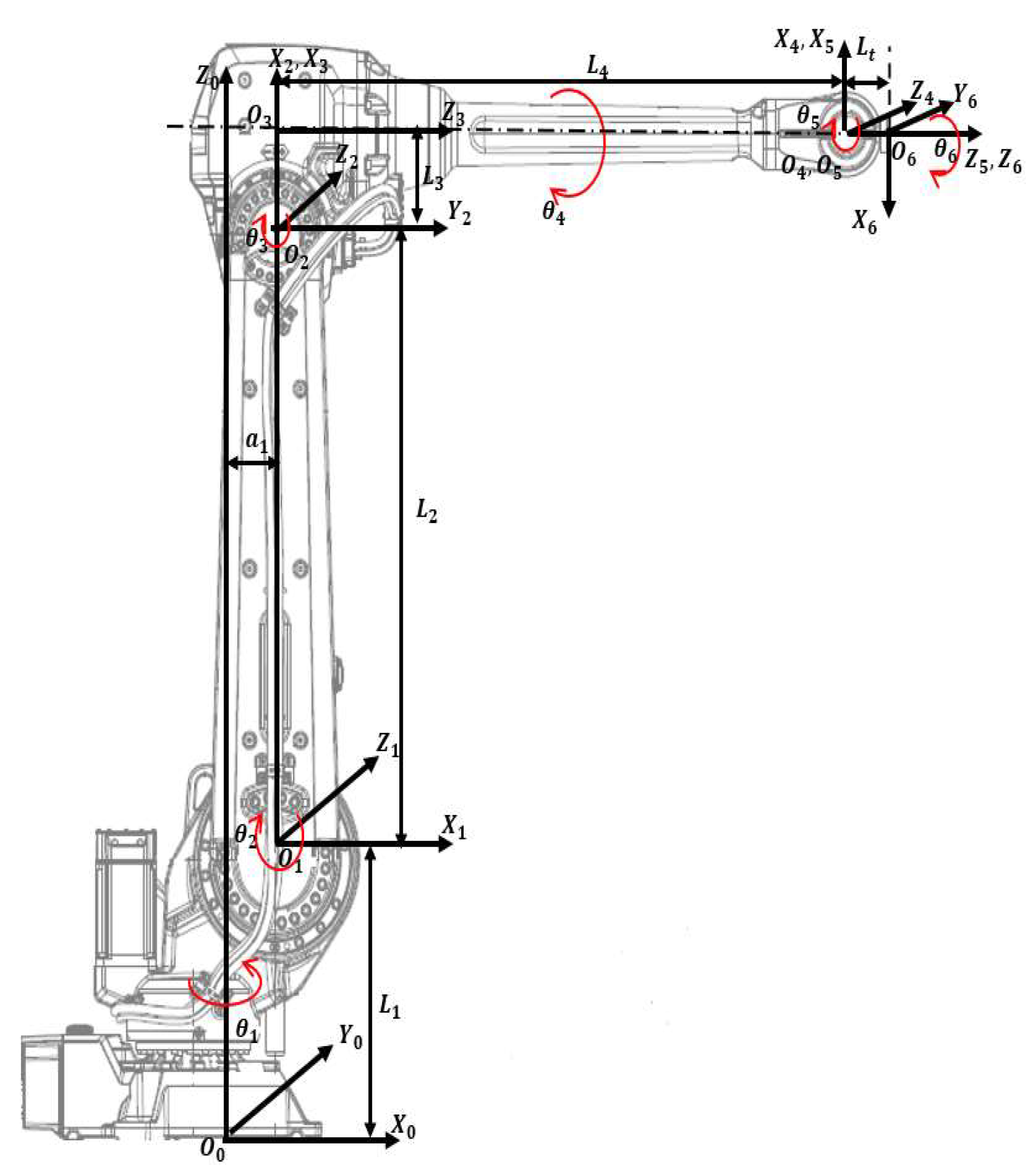

A camera, mounted on a 6-joint robot manipulator, moves freely in 6 degrees of freedom (6 DoFs) in space. Assuming the camera is fixed in orientation towards the face of the object of interest, only the position (3 DoFs) of the camera can vary from the movement of the robot arm. The camera can only move to the locations within the maximum reach of the robot manipulator, and the object can only be detectable within the view angle of the camera. These constraints create a camera operational space (Figure 6a), which is then gridded with a certain resolution of distance. All the intersections of grids come up with a set of nodes (), which are all possible candidates of locations that the camera may move and search utilizing the algorithm. Each node is indexed and denoted as .

Figure 6.

(a) The operational space of the camera is an arbitrary closed 3D geometry in the space shown in the plot. The gridded area is in 3D (blue dashed lines). (b) Four initial nodes are chosen so that their connection lines (red dashed line) are perpendicular. The picture number of Node 5 can be estimated by Equation (4) from four initial explored nodes. represents Euclidean distance (green line).

The algorithm iteratively commands the camera to take a picture at one location, generate the next target location, and move to that location until it finds the optimal location that requires the least number of pictures. In one iteration, the camera takes a photo at the current location and after image processing, which transforms a picture into a pixel’s intensity matrix, it produces a noisy image with an intensity matrix A previously developed algorithm [30] estimates the noise level across the image. Then, at the current location of the camera, the number of pictures needed to reduce the noise level to is calculated by the equation: . Based on this information ( at ), the algorithm then generates the next target location of the camera, . The decision-making process of generating is illustrated in the dashed circle of the below figure. In general, this algorithm first determines, from the current location , the set of grid nodes that can be explorable () in the next iteration based on the available moving energy E. Then, a hash map regarding the estimated number of pictures required for each explorable node () is evaluated from an estimation function that utilizes data from the hash map of all explored nodes (). A stochastic process further modifies the hash map to add fidelity to the estimation model. In the end, the next node’s location is selected so that it has the smallest number of images required among all nodes in If the next generated target position is the same as the current position , is the optimal location, and the camera stays and observes the object at this location. Otherwise, the robot joint controller moves the robot joint angles to the targets (calculated from forward kinematics [31] (Appendix B, Equations (A1)–(A5))) so that the camera will move to the next target position, .

4. Core Algorithm

The core of this algorithm is to generate the target locations to be explored in the camera’s operational space. The details of this algorithm are shown in the area circled with dashed lines in Figure 5. In this section, we put effort into the development of the picture number estimation function, the explorable nodes set, the stochastic modified evaluation function, the next location selection process, and the estimated energy cost function.

4.1. Picture Number Estimation Function

In each iteration of the algorithm, the camera moves to a new node with location , and the algorithm evaluates the number of pictures needed at this location. The node is then set to be explored and saved in a set Each node that has been explored in iterations is one-on-one paired with the values of the location and picture numbers of that node. All pairs that are expressed as : () are saved in a hash map . Then, is used to develop an estimation function that can estimate the number of pictures needed for the rest of the unexplored nodes. The estimated picture number calculated for all nodes in space is denoted as . Expressions of any element in are:

For any node index in the Total Node Set: , where is the total number of nodes.

where is a positive constant parameter.

Equation (4) is a weighted function concerning each explored node. If one explored node is closer to the node , its picture number has a larger weight (effect) on the estimation of . Thus, a minus sign of is utilized to indicate the negative correlation between the Euclidean distance and weight.

Equation (4) shows that at least some initial nodes are required to be explored before their values can be used to estimate the picture numbers in other nodes. For the estimation function to work properly by covering all nodes in the camera operational space, four initial nodes are selected in the following steps:

- Find the first two nodes on the boundary of operational space so that the Euclidean distance between these two nodes is the largest;

- Select the other two nodes whose connection line is perpendicular to the previous connection line, where the Euclidean distance between these two nodes is also the largest among all possible node pairs;

- Move the camera to these four nodes in space with proper orientation. Take a single image at each location and estimate the number of pictures required at those locations. Save all four nodes in Sexplored and their values in Mexplored.

Figure 6b shows the selection of four initial nodes in a camera operational space and an example of the use of the estimation function to estimate the picture number in one node.

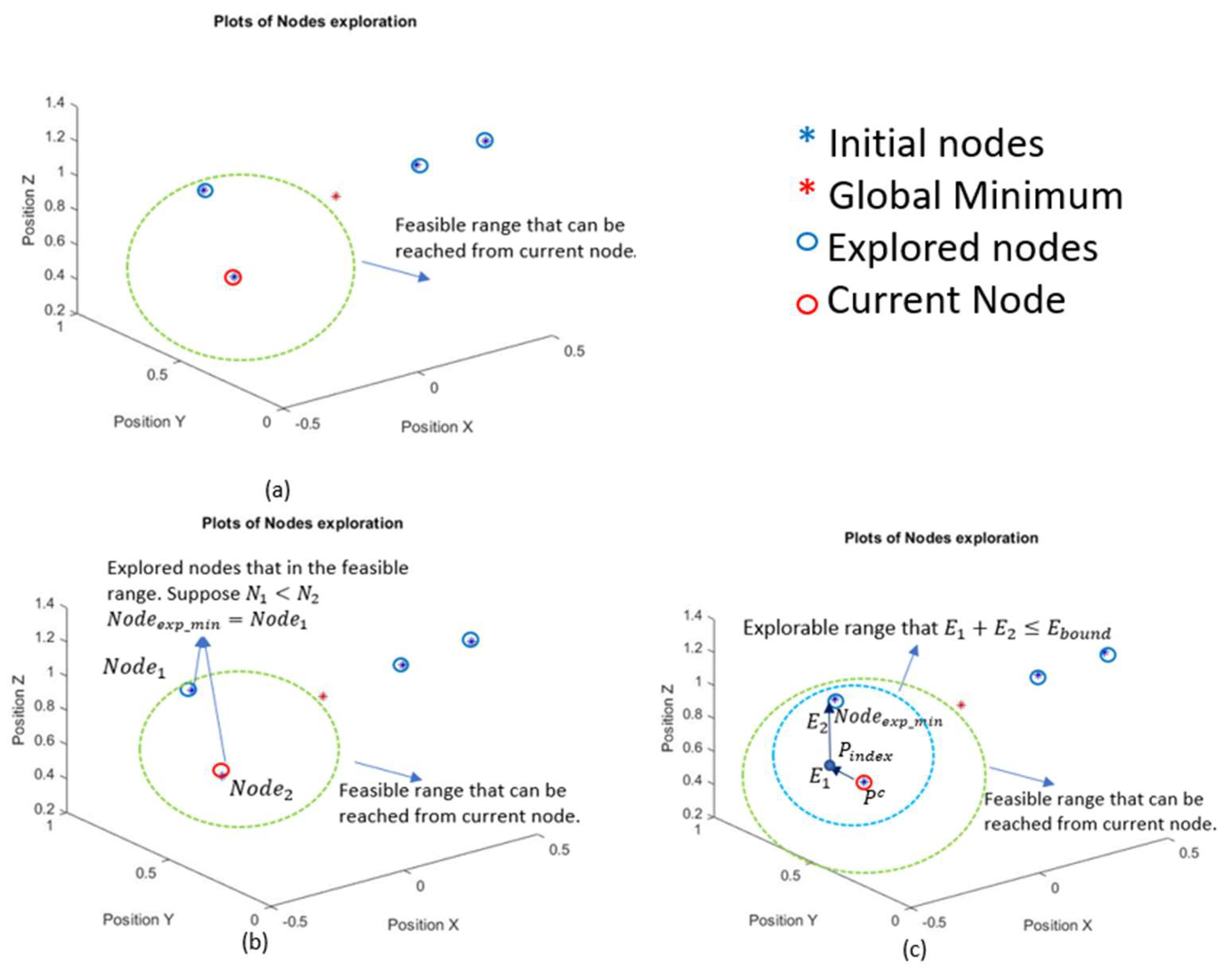

4.2. Explorable Node Selection

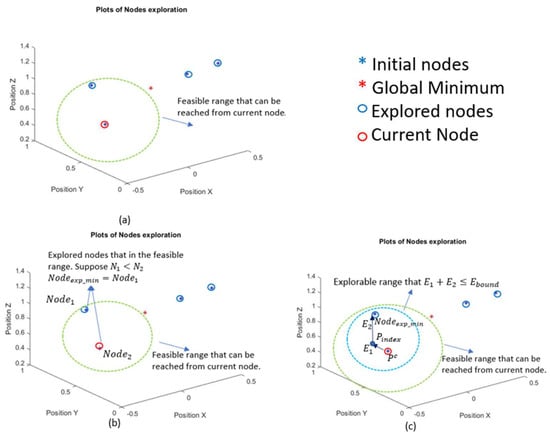

A stage in the algorithm, Explorable Node Selection, generates a set of nodes which contains all the camera’s next explorable locations from the current location with current energy for moving The explorable set is found using the following steps (Figure 7):

Figure 7.

Find the explorable set when the global minimum (red star) is inaccessible. (a) Step 1: Obtain the feasible range (green dashed line). (b) Step 2: Find the explored node with a minimum picture number in the feasible range. Initial nodes (blue stars) are explored (blue circles). is . (c) Step 3: Obtain explorable range when . The explorable range (blue dashed line) is a subset of .

- With current energy bound Ebound, find all nodes in the space that can be reached from the current node Pc.

In other words, all feasible nodes are in the set:

where is the position of a node, and is the estimated energy cost from .

When the current energy bound is greater than a predefined energy threshold (), then the algorithm is safe to explore all nodes in the feasible range in the next iteration loop. In other words:

Note: is generated from a dynamic model of a robot-arm control system. The details of this function are developed in the following section of this paper;

- 2.

- When Ebound ≤ ET, step 2 and step 3 are applied to find the explorable set. In the feasible set, look at all explored nodes and find the one that has the smallest number of pictures. In other words:

is the intersection set between and ;

- 3.

- The estimation function may not give accurate results for some unexplored nodes. Therefore, it is possible that the algorithm may make the camera end up in a node that has a large number of pictures in some iteration loops. Therefore, our algorithm needs to make sure, at worst, it has enough energy to go back to the best (minimum number of pictures) node that was explored when the available energy is low (Ebound ≤ ET). This further reduces the feasible set Sfeasible. The explorable set Sexplorable can be written as:

Figure 7 illustrates the selection of explorable node sets.

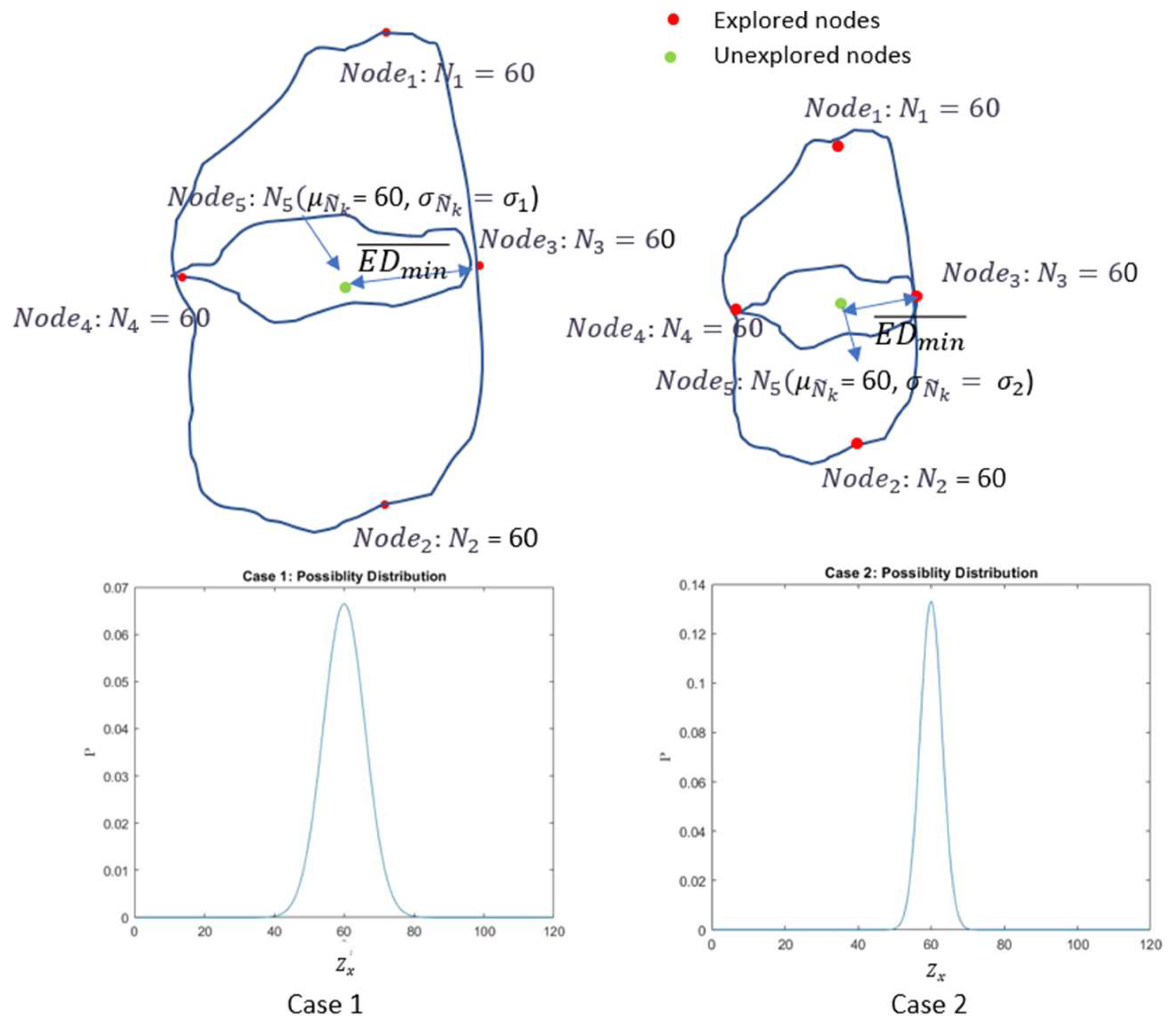

4.3. Stochastic Modified Evaluation

For all nodes in we set up a hash map . For each node, its location and number of required pictures for that node are given as: : (). The number of pictures required for nodes in are calculated from the estimation function in Equation (4).

The algorithm tends to select nodes located around the explored node that has the minimum number of pictures because, from Equation (4), these nodes tend to have the smallest estimated number of pictures. However, this process does not guarantee reaching the global minimum, therefore, minimizing the number of pictures. To sufficiently explore unknown nodes as well as exploit information from already explored nodes, a stochastic process is introduced to modify the picture number estimation function given in Equation (4).

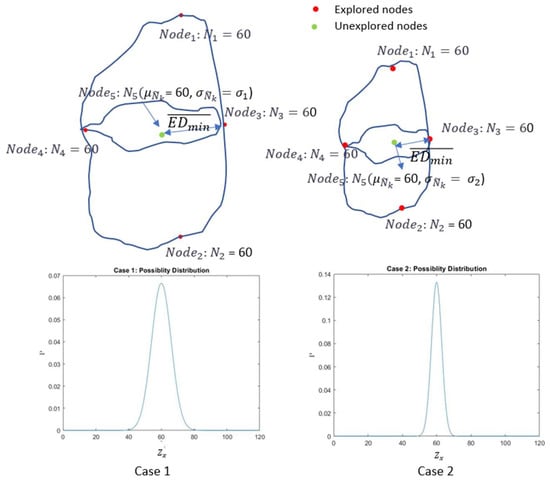

Assume when the locations of a camera in space deviate a smaller amount from each other, the difference of real picture number values at those locations is also smaller. Thus, among all unexplored nodes, the estimated picture number of an unexplored node, which is closer to the explored node, is more deterministic. To emphasize this feature, we make the estimated picture number follow a normal distribution with a mean being equal to the value from Equation (4) and standard deviation . As an unexplored node is further away from explored nodes, the larger value of should be assumed, which means more indeterministic estimation (illustrated in Figure 8). The following Equations (14)–(16) summarize the above discussion:

Figure 8.

Both case 1 and case 2 have the same estimated value of an unexplored node (green point) from Equation (4). However, the node in case 1 has a larger distance to explored nodes (red points) compared to that in case 2. Therefore, we set different standard deviations ( > ) to differentiate possibility distributions. This approach distinguishes nodes that have the same estimations from Equation (4) to have different likelihoods to be explored in the next iteration.

For any nodes in its picture number estimation should follow the following normal distributions:

where is the probability of a random variable at . is the estimated value from Equation (4) of is a constant parameter, and is the smallest Euclidean distance among distances between that node and explored nodes.

As discussed above, the algorithm ensures that the camera, at any iteration, always has enough energy to move back to the previous best node . Therefore, a new distribution can be generated, which sets values bigger than the minimum () in the original distribution to be the minimum value. Then, Equations (14) to (16) are modified with a new random variable and its expectations as follows:

where is the stochastic modified estimated value for the node .

Then, a new hash map is set up with pairs as : (). The next target node to be explored is the one that has the smallest modified estimated value in the map:

4.4. Estimated Energy Cost Function

As discussed above, an estimated energy cost function calculates the estimated energy cost from . This function is used to find the feasible node set and explorable node set . In this section, the equations for the cost function are derived from the robot positioning–controlled system’s response. Also, tradeoffs between the energy cost and the settling time of moving are discussed.

The energy of moving the camera between two locations from to , results from the energy cost of DC motors in 6-DoFs robot arm joints rotating from joint angles to . Therefore, can be described as the sum of the time integration of rotational power in each joint:

where is the voltage, and is the current in the DC motor’s circuit of the joint. and are the initial time and final time of the joint moving from its initial angle to its final angle .

Without detailed derivation, the dynamic model of 6-dof revolutionary robotic manipulators and DC motors can be expressed as [31]:

where Equations (23) and (24) express the joint dynamics equation. is the joint revolute variable. represent the moment of inertia of the motor and the gear of the model. is the gear ratio at the joint, and is the damping effect of the gear. represents the entry of the inertial matrix of the robot manipulator at the row and column. are Christoffel symbols and for a fixed , with . is the derivative of potential energy V with respect to joint variance. is the torque constant in is the back emf constant, and R is Armature Resistance.

Take as the actuator input to the dynamic system (23) measured at the joint from a designed controller. Therefore, equals the right side of Equation (23). That is:

In addition, without derivation, the expression of current in the Laplace domain [31] is:

where L is armature inductance, is the back emf constant, and is convolutional multiplication. Therefore, the instant current in the time domain is a function of the instant voltage and the instant angle .

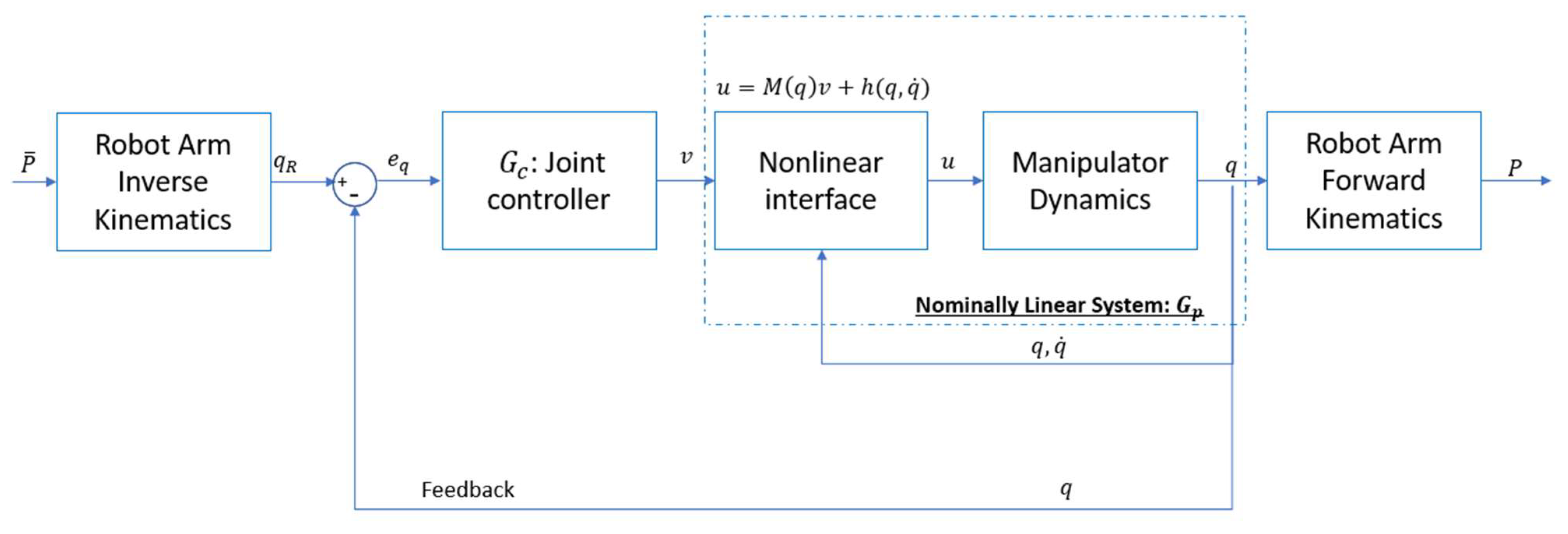

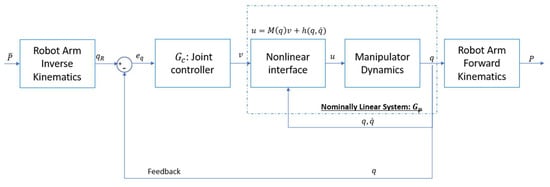

Various controller designs, such as the PID controller [32] and Youla controller [33], of 6-DoF revolute robotic manipulators have been well developed in many papers. In this paper, we use a previous Youla controller design [33] with feedback linearization (Figure 9) as the position controller of the robot manipulator that holds the camera.

Figure 9.

The block diagram of feedback linearization Youla control design used for the joint control loop.

From Figure 5, the actuator input can be derived from the virtual input so that:

where M and H are nonlinear functions of and , the first derivative of

Without showing the controller development in this paper, the 1-DoF controller transfer function and 1-DoF nominal plant with as the virtual actuator input in the feedback linearization are expressed as follows:

where is a constant parameter in the controller design.

Then, the following transfer functions can be calculated as:

By taking inverse Laplace transform and as step input, the following Equations (37)–(39) in the time domain are:

With expressions of and , Equations (27) and (31) show that:

and combine Equations (30) and (40):

where F and G are nonlinear functions of .

It has been shown so far that the estimated energy cost from location to is a function of and .

and are derived from the inverse kinematics process [31] (shown in Appendix B, Equations (A6)–(A13)):

The development of Equations (37) to (39) assumes that the target angle of each joint is a step input. A more realistic assumption is to set as a delayed input.

where is a time constant that measures the time delay of the target in the real positioning control. , and when , it indicates no delay exists in the input.

The Laplace form of (45) is:

With the new expression of , Equations (37) to (39) can be developed as:

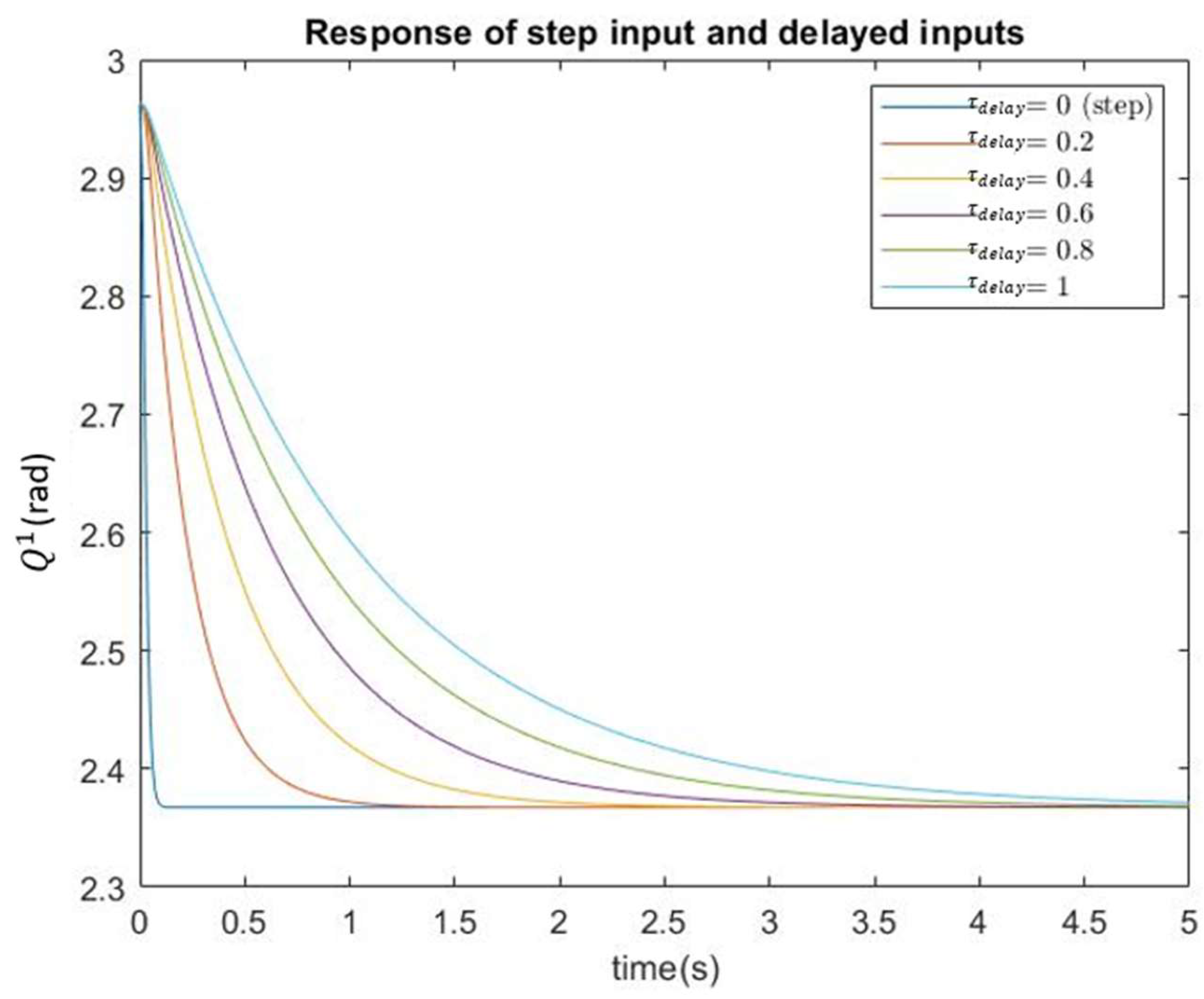

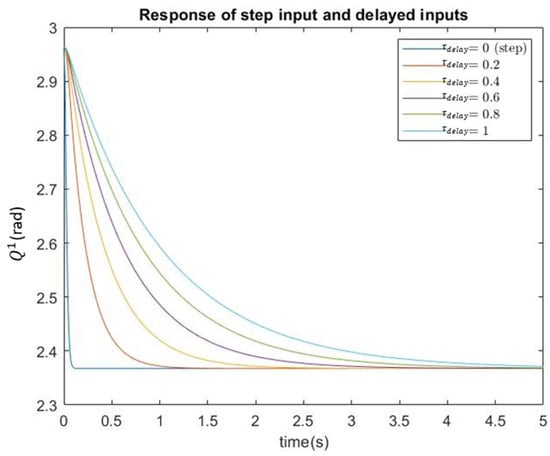

A simulation scenario is set up to calculate how estimated energy cost changes with varying Set , and and use Equations (42)–(44) and (47)–(49). Figure 10 shows the response of one angle with varying , and Table 1 presents the estimated energy cost and settling time with varying .

Figure 10.

Response of with varying .

Table 1.

Estimated energy cost and settling time with varying delay constant.

Table 1 shows a tradeoff between the estimated energy cost and settling time; reducing the energy cost of moving the camera inevitably increases the response time. This finding matches the results in Figure 6.

Systematic delays are inevitable in controlled system design. Delays are incurred in many sources, such as the time required for sensors to detect and process changes, for actuator responses to control signals, and for controllers to process and compute signals. In a real manufacturing environment, larger delays cause slower motion of the camera when searching the area, but a slower response results in less energy consumption from actuators in the manipulator.

If the delay of input is given or can be measured, the estimated energy cost can be calculated through the process in this section. However, if the value is unknown, the delay constant must be chosen and decided based on the following criteria:

- Select small τdelay for a conservative algorithm that searches a small area but ensures it ends up with the minimum that has been explored;

- Select large τdelay for an aggressive algorithm that searches a large area but risks not ending up with the minimum that has been explored.

In this section, the estimated energy cost function is well developed. However, the estimation function is developed from an ideal camera movement. The accuracy of the estimation can be negatively influenced by some unmodeled uncertainties, such as the backlash from gears in robot manipulators, unmodeled compliance components from joint vibration, etc. To tackle the problem, we can derive an online updated model of the estimation function by comparing estimated voltage and current and real-time measurements. The parameter values of motors and gears used in the simulations are summarized in Appendix A, Table A4.

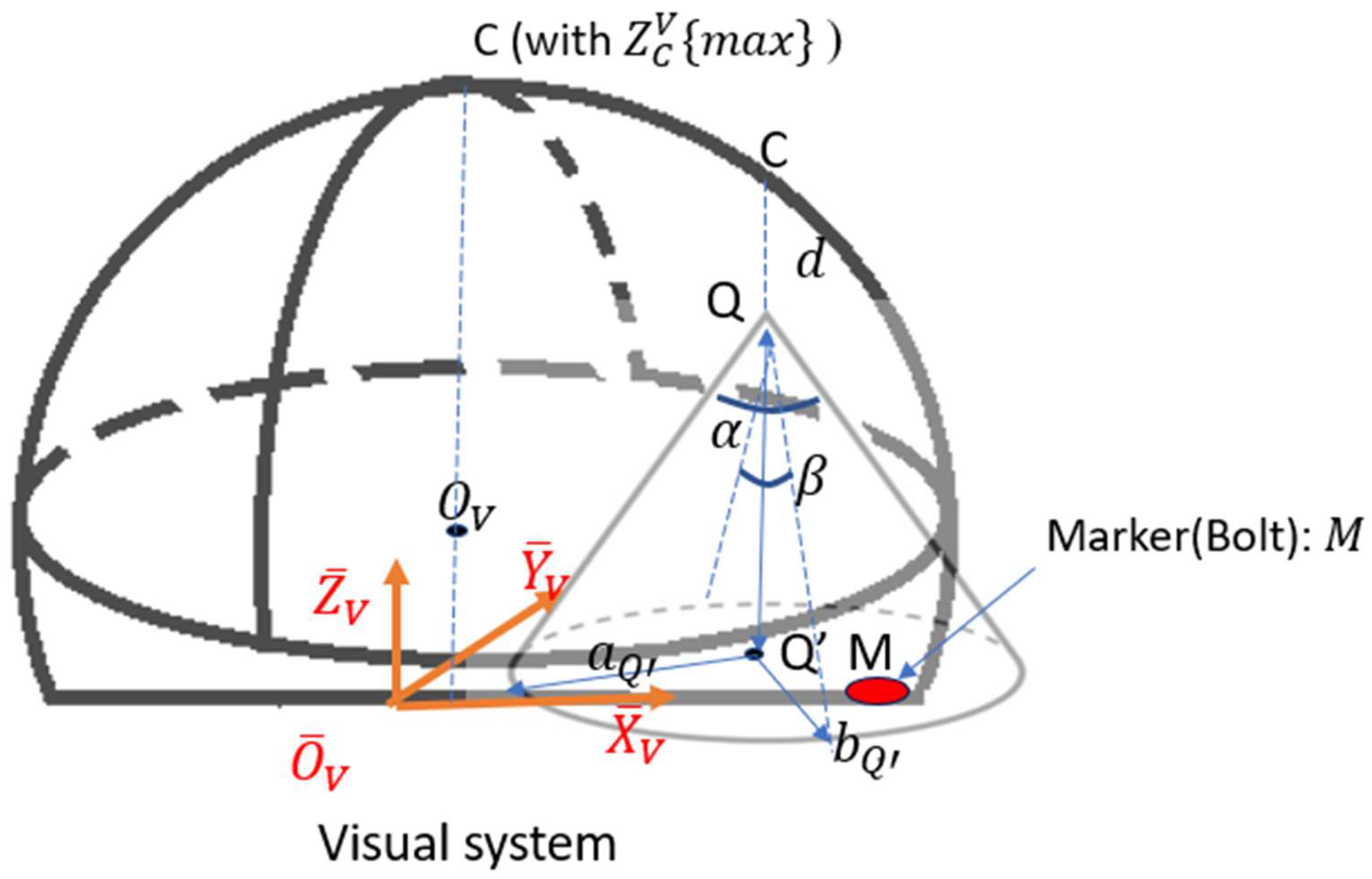

5. Simulation Setup

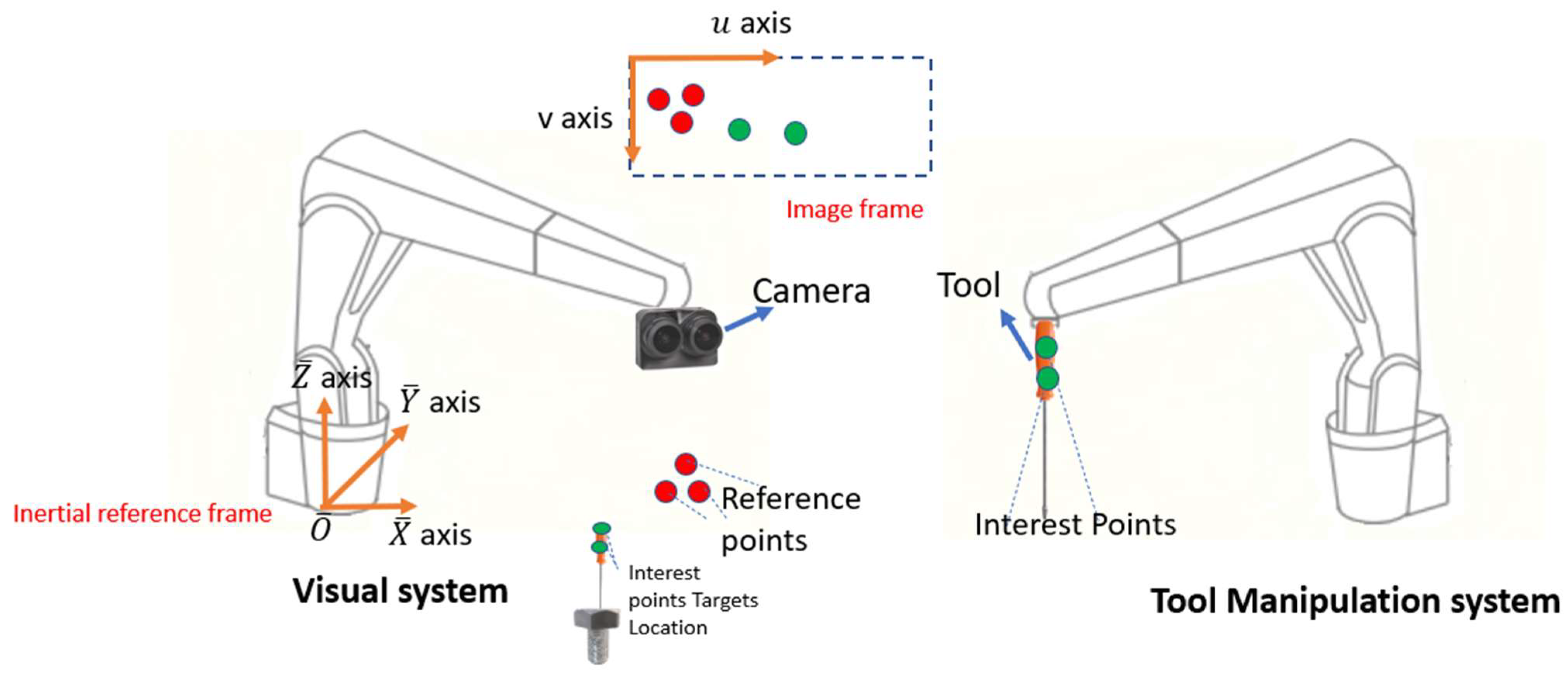

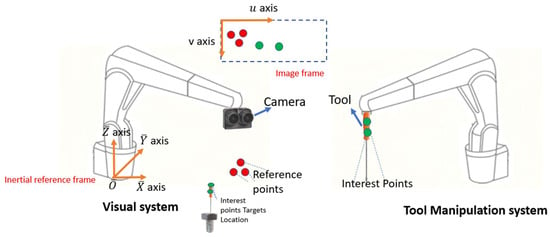

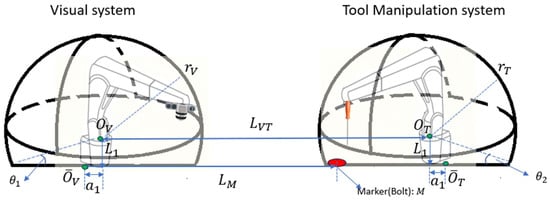

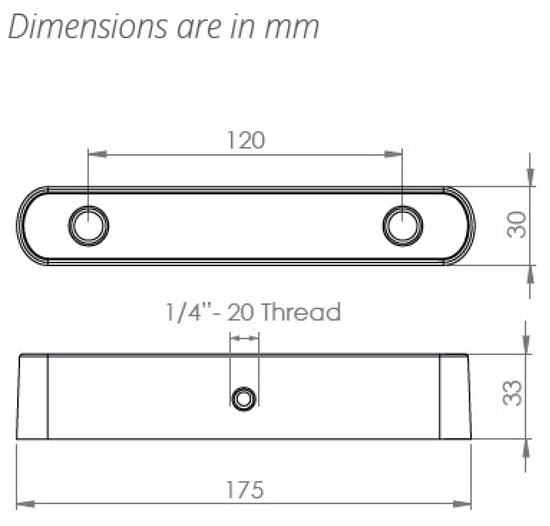

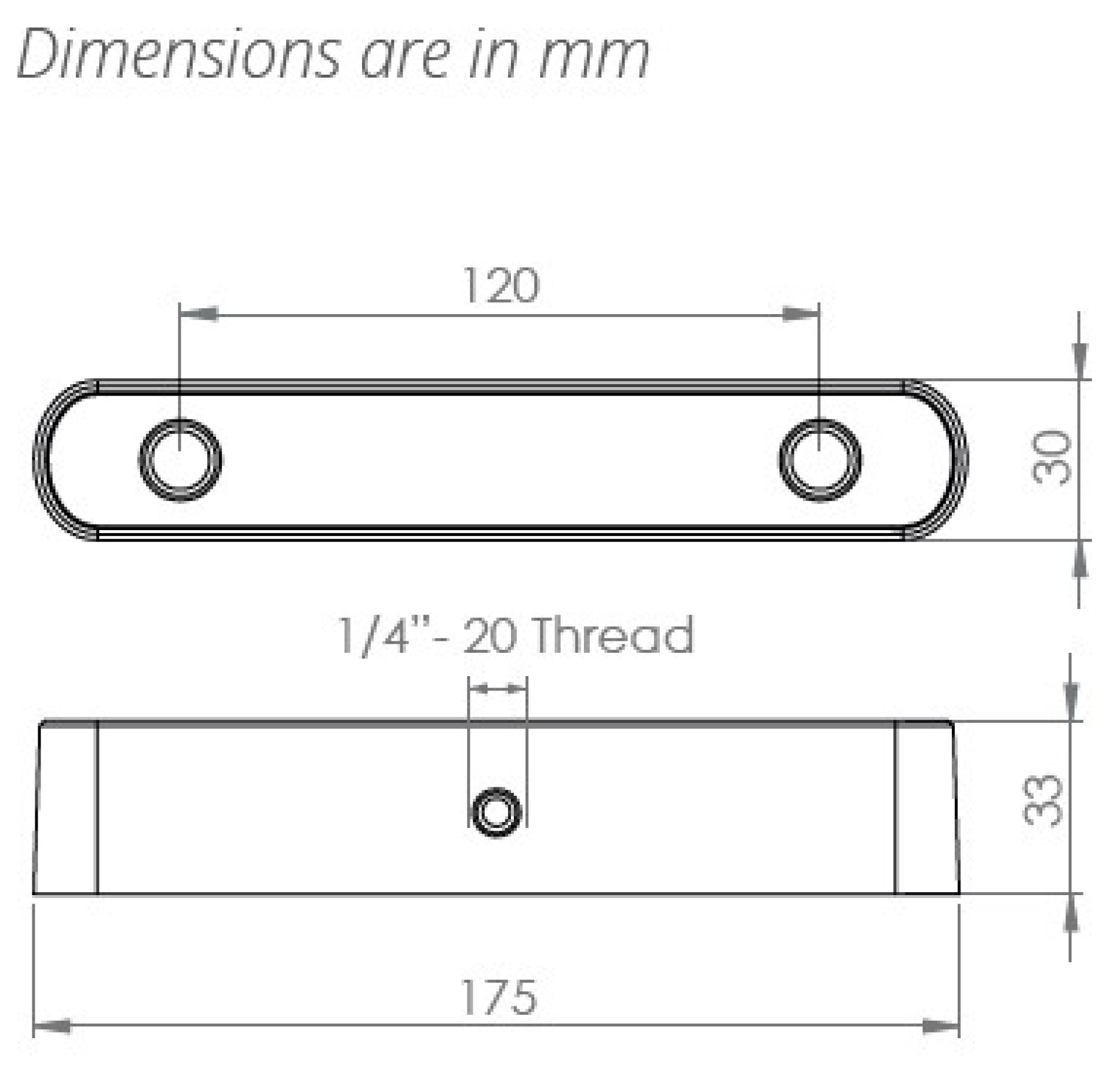

The developed algorithm was simulated on an application in automated manufacturing (Figure 11). This is a multi-robot system composed of a visual system and a tool manipulation system. In the visual system, a camera is mounted on an elbow robot arm, while a tool is held by the end-effector of the robot manipulator arm. The goal of the visual system is to provide a precise estimation of the tool pose so that the tool manipulator can control the pose with guidance from the visual system. The first step of the manufacturing process is to move the camera in the space and search for the best location so that the number of images required for averaging is minimized to reduce the image noises within an acceptable limit. Then, the camera is fixed and provides continuous vision data to the tool manipulator. Therefore, the control and operation of the two manipulators are asynchronous. The model of both robot manipulators is ABB IRB 4600 (ABB, Zurich, Switzerland) [34], and the model of the stereo camera we chose in this project is Zed 2 (Stereolabs, San Francisco, CA, USA) [35].

Figure 11.

The topology of the multi-robotic system for accurate positioning control.

We first define the camera’s operational space in this application and then provide the simulation results of a made-up scenario in the next.

In the above discussion, a camera operational space is gridded with nodes, and each node is a potential candidate for the camera’s optimal position. The camera operational space is defined by the three geometric constraints below:

- The camera can only be allowed to locations where fiducial markers that are attached to the tool are recognizable on the image frame. Therefore, the fiducial markers are in the angle of view of the camera, and the distance between the markers and the camera center is within a threshold;

- The camera can only be allowed to locations within the reach of the robot arm;

- The camera can only be allowed in locations where the visual system and the tool manipulation system do not physically interfere with each other.

From the specifications of the stereo camera and the robot arm (Appendix A, Table A1 and Table A3), the geometry and dimensions of the camera operational space are analyzed in the following part of the subsections.

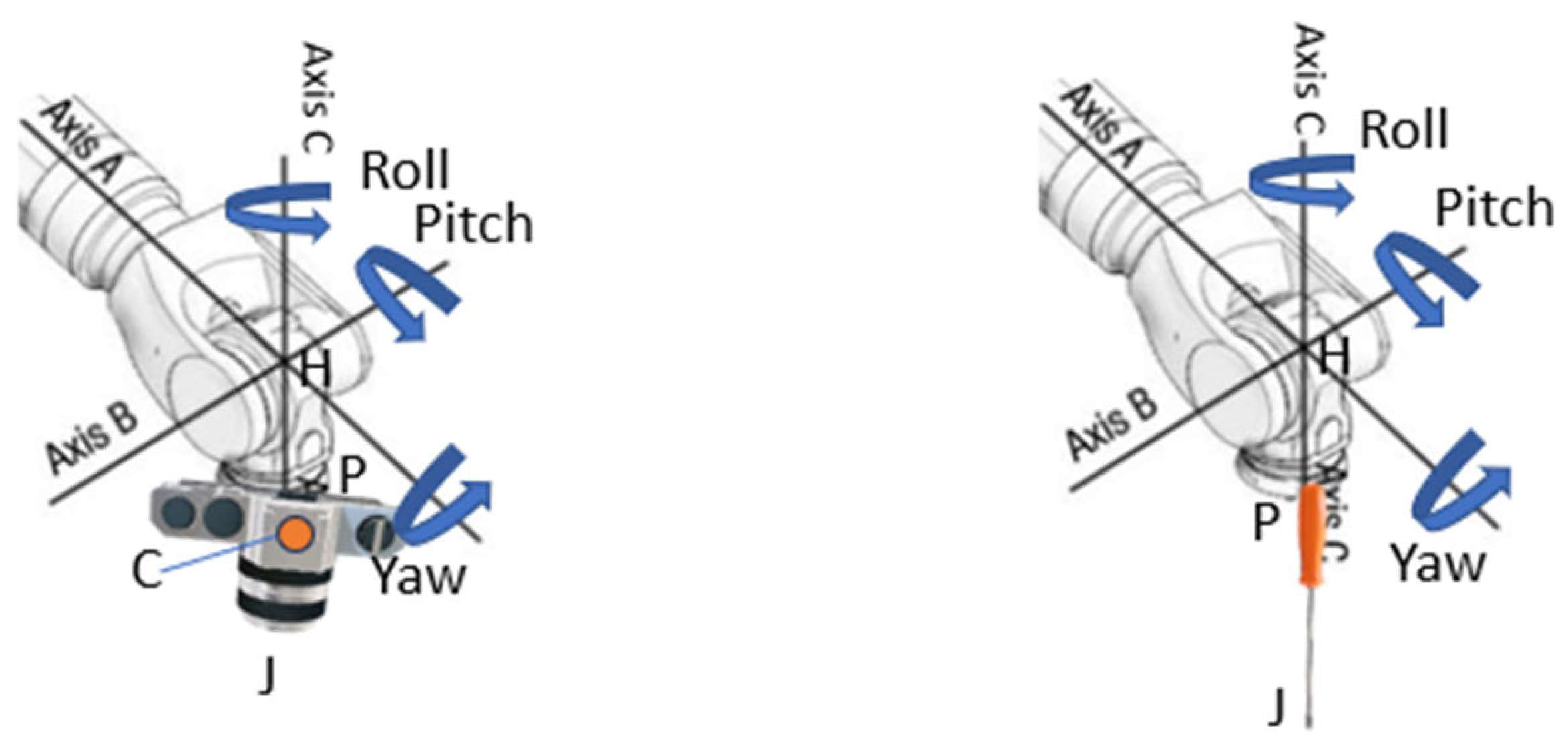

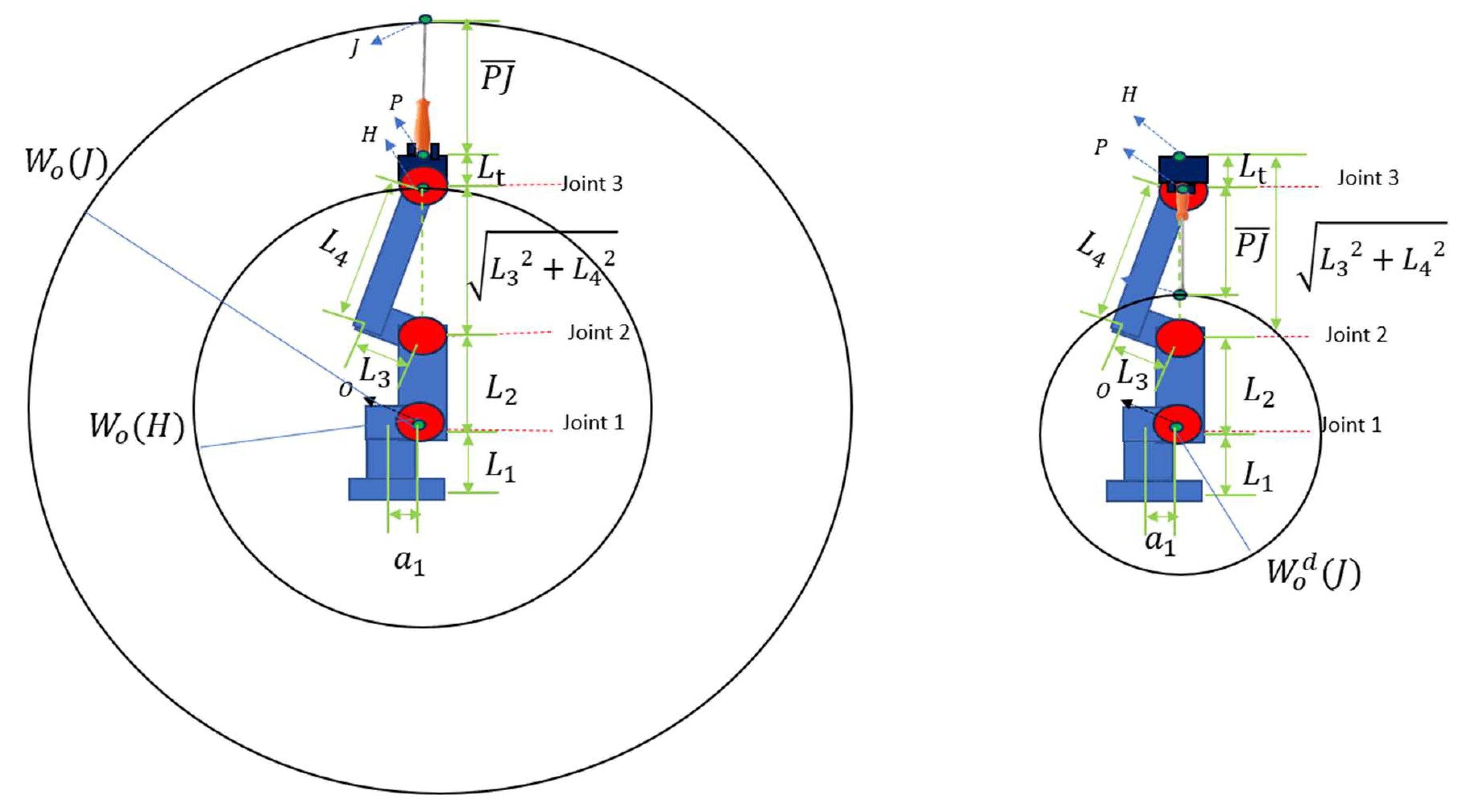

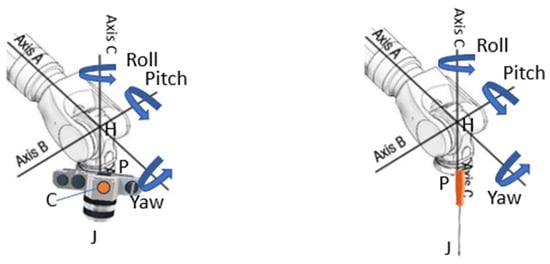

5.1. Reachable and Dexterous Workspace of Two-Hybrid Systems

In the spherical wrist model of the robot arm, three rotational axes represent the pitch, roll, and yaw of the end-effector independently. As shown in Figure 12, these three axes intersect at point . Point is the location of the end-effector, and it is the place where the camera or tool is mounted. For the elbow manipulator mounted with the stereo camera, the camera is placed so that its optical center line coincides with axis C. The location of camera optical center C is determined, and a good estimation of it can be found in this paper [36]. For now, assume the optical center is in the middle of (set ). For the elbow manipulator mounted with the tool, the screwdriver is attached at point . Point J indicates the far end of the object (tool or camera) attached to the end-effector. Point H is kept stationary no matter which axis rotates. Therefore, the task of positioning and rotation is decoupled in the spherical wrist robot model.

Figure 12.

Spherical wrist model.

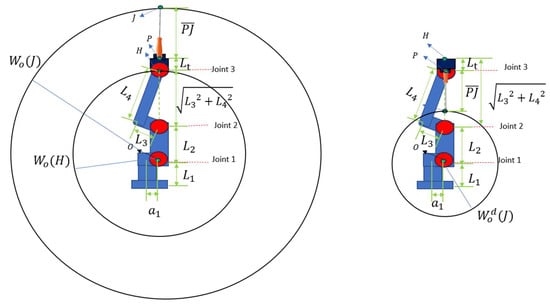

The idea of reachable and dexterous workspaces of an ideal elbow manipulator has been introduced in Ref. [37]. An ideal elbow manipulator is a manipulator whose angles of rotation are free to move in the whole operational range [0, 2]. However, a realistic elbow manipulator is limited to moving its joints within certain ranges of angle.

The workspace of an ideal elbow manipulator (Figure 13) is a sphere centered at joint 1 of the manipulator, denoted as point . The reachable workspace concerning the center o of a manipulator is the aggregate of all possible locations of point attached to the end-effector and denoted as . The dexterous workspace to the center o of a manipulator is the aggregate of all possible locations that point can reach all possible orientations of the end-effector and is denoted as . The reachable workspace of is denoted as .

Figure 13.

Workspace of an ideal elbow manipulator.

It can be shown in the figure:

For an ideal elbow manipulator, the radiuses of workspaces are expressed below:

where are link lengths of the manipulator. is the length of the end-effector, and is the length of the object that mounts on the end-effector.

As the camera and the tool must be able to rotate in all 3 DoFs when they are at the target position, the dexterous workspace is used as the working space of the visual system and the tool manipulation system. The dexterous workspace of the optical center is used for the visual system, and the dexterous workspace of the far endpoint of the tool is used for the tool manipulator system.

(From Specification, Appendix A, Table A1.)

(From Specification, Appendix A, Table A1.)

Figure 14 shows the workspace setup for the hybrid system. The dexterous space of the robot arm with the camera is a sphere with a radius of , and its sphere center is , and the dexterous space of the robot arm with tools is a sphere with a radius , and the center of this sphere is Two systems intersect with the ground at an angle of and .

Figure 14.

The workspace configuration of the multi-robotic system.

To avoid interference between the visual and the tool manipulation systems (as the third geometric constraint of the operational space), the reachable workspaces (the maximum reach) of the two systems should have no overlap. The reachable workspaces of the two systems are calculated from Equation (51):

(From Specification, Appendix A, Table A1.)

(From Specification, Appendix A, Table A1.)

Let be the distance between and . The following relationship must be satisfied:

The visual system is able to detect the tool when it gets close to the target pose (just above the bolt). Also, reference points are put near the tool’s target pose. Assume a marker is put where the bolt is, and this marker represents an approximated location of all interest points and reference points. Therefore, the marker should be within the dexterous space of the tool manipulation system. Set up a coordinate system with (the projection of on the ground with offset) as the origin. The projection of on the ground with offset is Assume the marker is placed on the line . The distance m from marker M to should be:

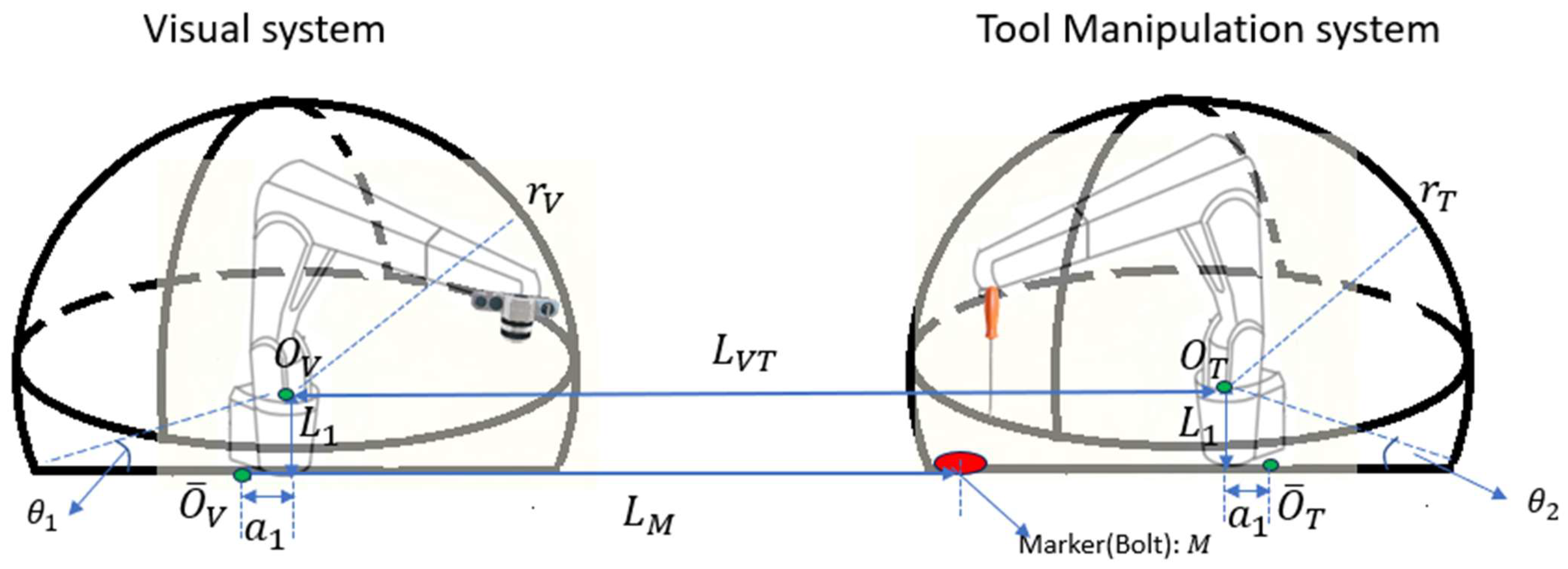

5.2. Detectable Space for the Stereo Camera

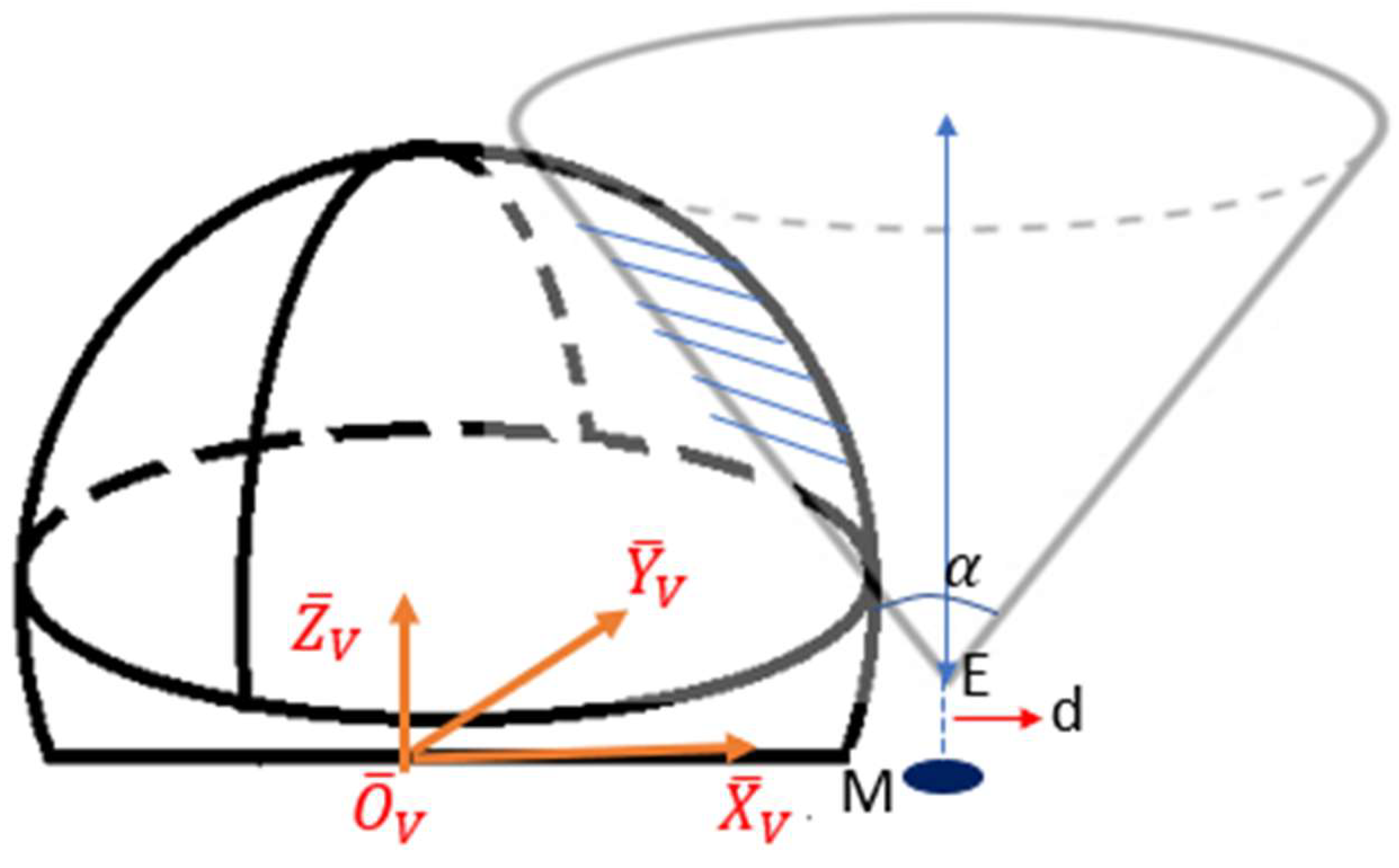

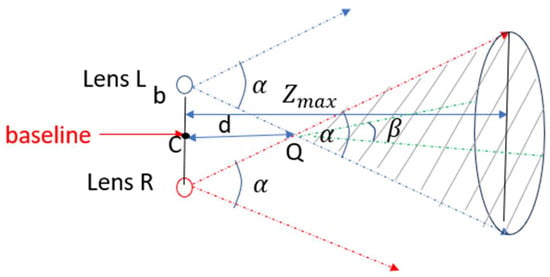

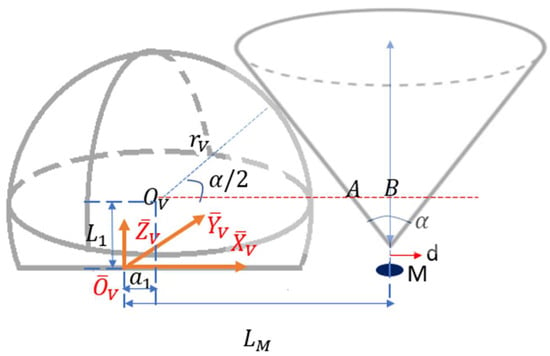

From Appendix A, Table A3, the angle of view in width and height for both lenses in the stereo camera is = , and . The detectable space for each lens can be modeled as the inner area of a cone with angles and And the overlapped space is the detectable space for the stereo camera. The model is shown below (Figure 15). Point C is the center of the baseline whose length is denoted as or the center of the camera system. The overlap area is also a cone with angles of vertex and , with offset from the baseline. Point Q is the vertex of the cone.

Figure 15.

The detectable space of the stereo camera.

There is an upper bound of the distance between the object to the camera center; if the object is too far away from the camera center, the dimensions of the projected images are too small to be measured. Suppose to have a clear image of the fiducial markers (circle shapes), it is required that the diameter of the projected image should take at least 5-pixel numbers in the image frame:

where is the pixel number in the image frame.

Select the Zed camera’s mode so that its resolution is 1920 × 1082, and the image sensor size is 5.23 mm × 2.94 mm. Then, the number resolution in unit length is:

Then, the range of the markers’ diameter on the image frame is:

Also, assume in the inertial frame, the diameter of the attached fiducial marks is:

Then, from the pinhole model of the camera, the range of distance between the markers and camera center Z is:

where is the maximum depth of the camera to detect the markers. This parameter defines the height of the cone in Figure 15. The detectable space (abiding by the second geometric constraint) forms an elliptic cone with different angles of the vertex.

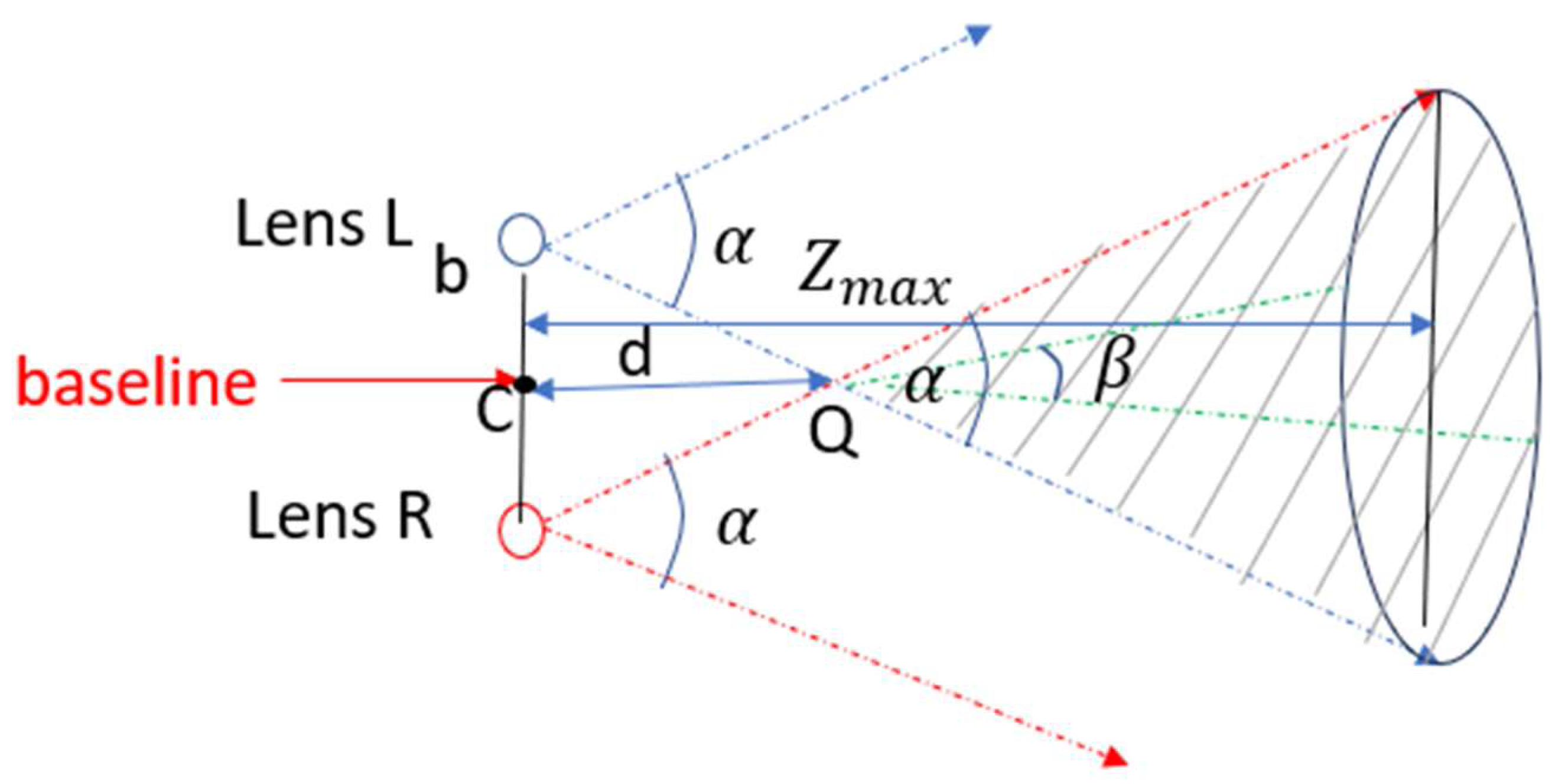

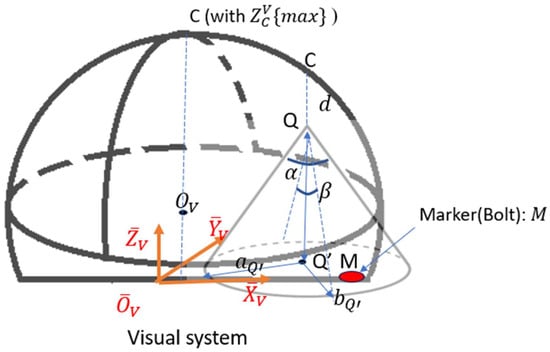

5.3. Camera Operational Space Development

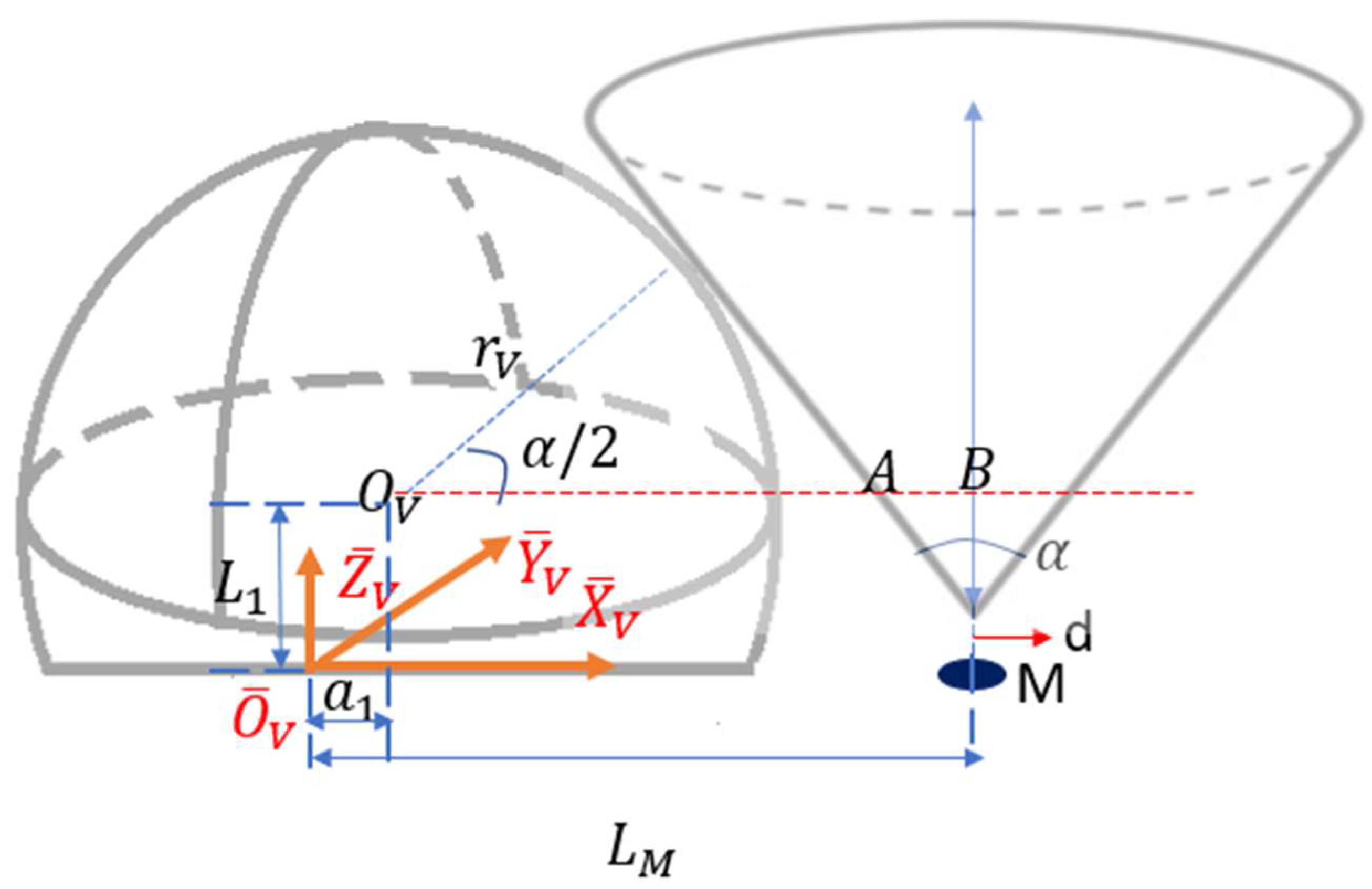

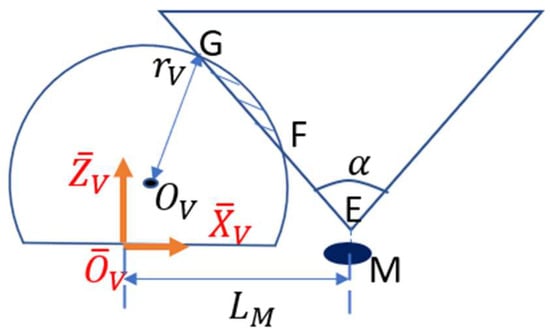

As in Figure 16, set the camera lens so that it is always parallel to the face of the marker and the baseline is parallel to the axis. In this case, the lens is kept facing downward when detecting the marker on the ground. As discussed in the above section, the detectable space of the camera is modeled as a cone. This cone intersects with the horizontal plane and forms an oval. To have the camera detect the marker, the marker must be contained inside the oval.

Figure 16.

The detectable elliptic cone in the inertial visual system frame.

An inertia coordinate system is set up with its origin seated at the bottom center of the visual system. Make point the location of the camera center, and its coordinates in the inertial frame are (, , ). is the vertex of the detectable elliptic cone, and is the projection of on the horizontal plane. is the marker.

The elliptic cone of the camera’s detectable space always intersects with the horizontal plane. Consider an extreme case when point is located at the apex of the visual system workspace, as shown in Figure 16. Then, the coordinate of at the inertial frame is:

where , defined above, is the maximum depth of the camera to detect the markers. Therefore, the cone intersects with the horizontal plane when the camera center is placed at any location of the workspace’s contour.

From the expression of Cartesian coordinates in the inertia frame:

where is the same offset as in Equation (62). and are the major radius and the minor radius of the oval. Also, the coordinates of points and are and . is the center of the oval. To ensure the marker is inside the projected oval, it is required that:

Plug Equation (69) into Equation (70):

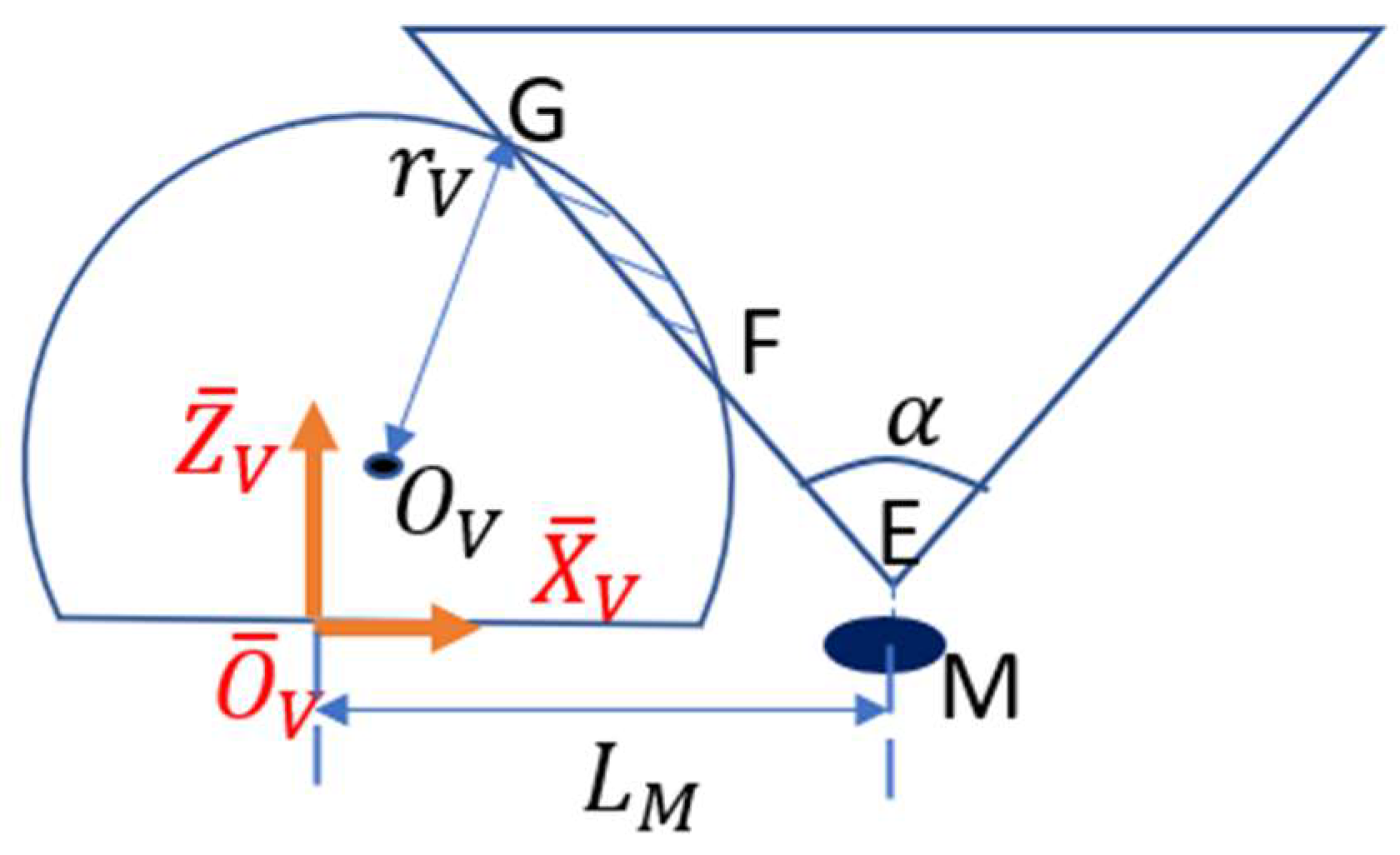

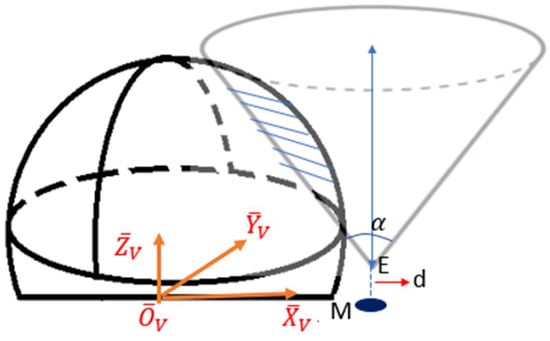

Inequality (71) is exactly a mathematic expression of all points (, , ) within a cone whose opening is in the positive Z direction with its vertex at (,) and the opening parameters as Therefore, this inequality defines the second geometric constraint of the camera center. It forms an elliptical cone with its vertex located in Figure 17 at point E, which is the offset point of the marker from the ground. As (, , ) should also be within the sphere of the workspace (abiding by the first geometric constraint), the camera operational space is presented as the overlap between the cone and the sphere (the shaded area in Figure 17).

Figure 17.

Illustration of camera operational space (the shaded area).

From Figure 17, the marker should not be too far from the visual system, otherwise there is no overlap region between the cone and the sphere. The largest value of , which is the distance from the marker to the coordinate center, occurs when the cone is tangent to the sphere, as shown in Figure 18. To find the limit of , draw a horizontal line across point so that it intersects the surface of the cone at point A and the center line of the cone at point B, as shown in Figure 18. Let and be the line segment lengths.

Figure 18.

An extreme case of marker position.

Therefore, from the geometry, the upper limit of is:

Combining with the lower bound of in Equation (61), the marker M should be placed in the system so that:

5.4. Mathematic Expression for Node Coordinates within the Camera Operational Space

From Equation (73), the closer the marker’s distance to its lower bound, the larger the operational space. In general, a larger operational space is preferred because the camera has a larger space for searching the optimal location. Set to equal its lower bound:

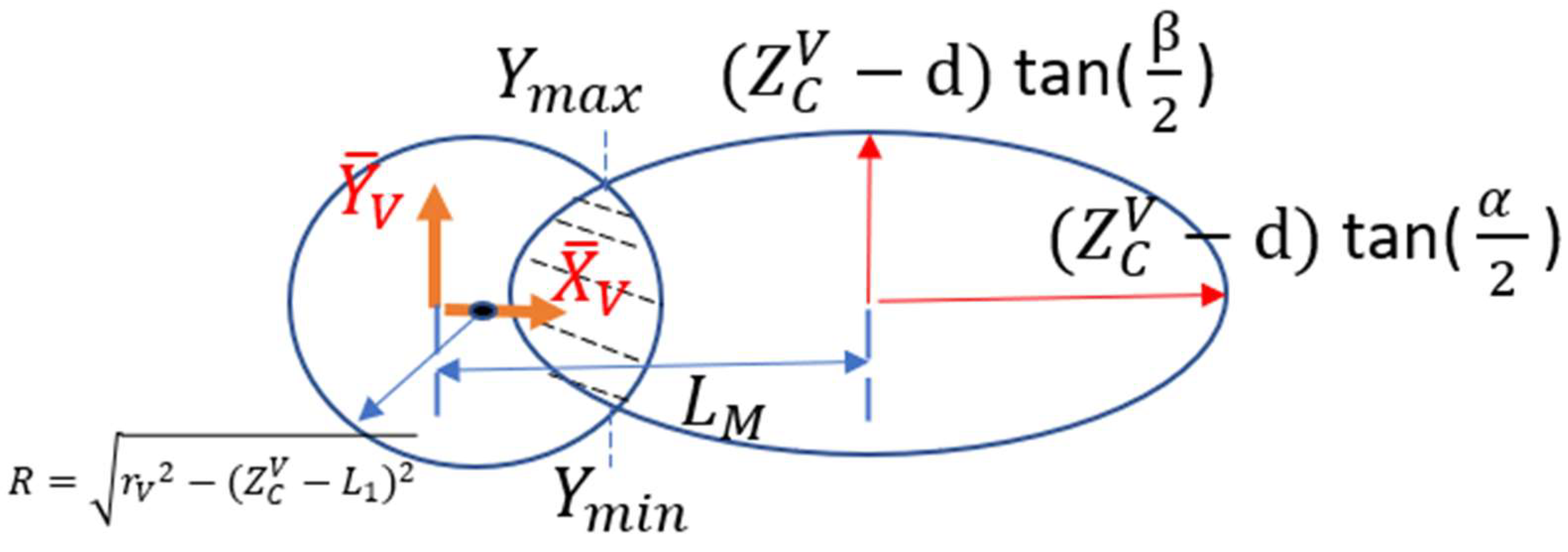

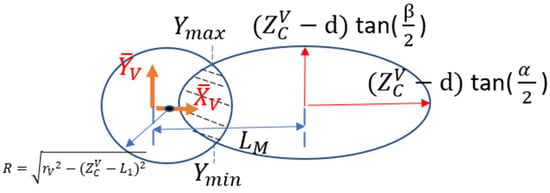

Get the cross-section area at the plane (Figure 19).

Figure 19.

The camera operational space cross-section at plane.

The function of the semi-circle and the line EFG is:

Combine Equations (75) and (76) and solve:

Solve Equation (77) by plugging numbers:

and are the largest and smallest coordinates of the camera center that is within the camera operation space.

Take a value of ( coordinates of the camera center ) in the range (,) = (,). Set a plane , and that plane intersects the camera operational space and forms a shade area, which is the overlap between the circle and the oval, as shown in Figure 14. The inequality governing points within the sphere at a specific is:

The inequality governing points within the cone at is:

By plotting those inequalities in Figure 20, the shaded area is where and ( and coordinates of the camera center ) should be located within. The intersection of the two curves occurs when equality holds for (80) and (81):

Figure 20.

The camera operational space cross-section at plane.

Solve by plugging numbers:

and are the largest and the smallest coordinates of the camera center in the camera operation space with specific .

Take in the range (, ), then for specific :

and are the largest and the smallest coordinates of the camera center in the camera operation space with specific and .

5.5. Numerical Solution of the Ideal Camera Operation Space

With known parameters from the camera and robot arm specifications, all possible locations of the camera center (, , ) inside the camera operational space can be derived from the following steps:

- Calculate and by Equation (77) and mesh grid in (,);

- Take the mesh value and calculate and by Equations (82) and (83) and mesh grid in (,);

- Take the mesh and calculate and by Equations (85) and (86) and mesh grid in (,).

Each above computed (, , ) is inside the camera operation space, and these three steps completely account for all the geometric constraints defined above. The number of mesh points (nodes available for searching) depends on the mesh grid size.

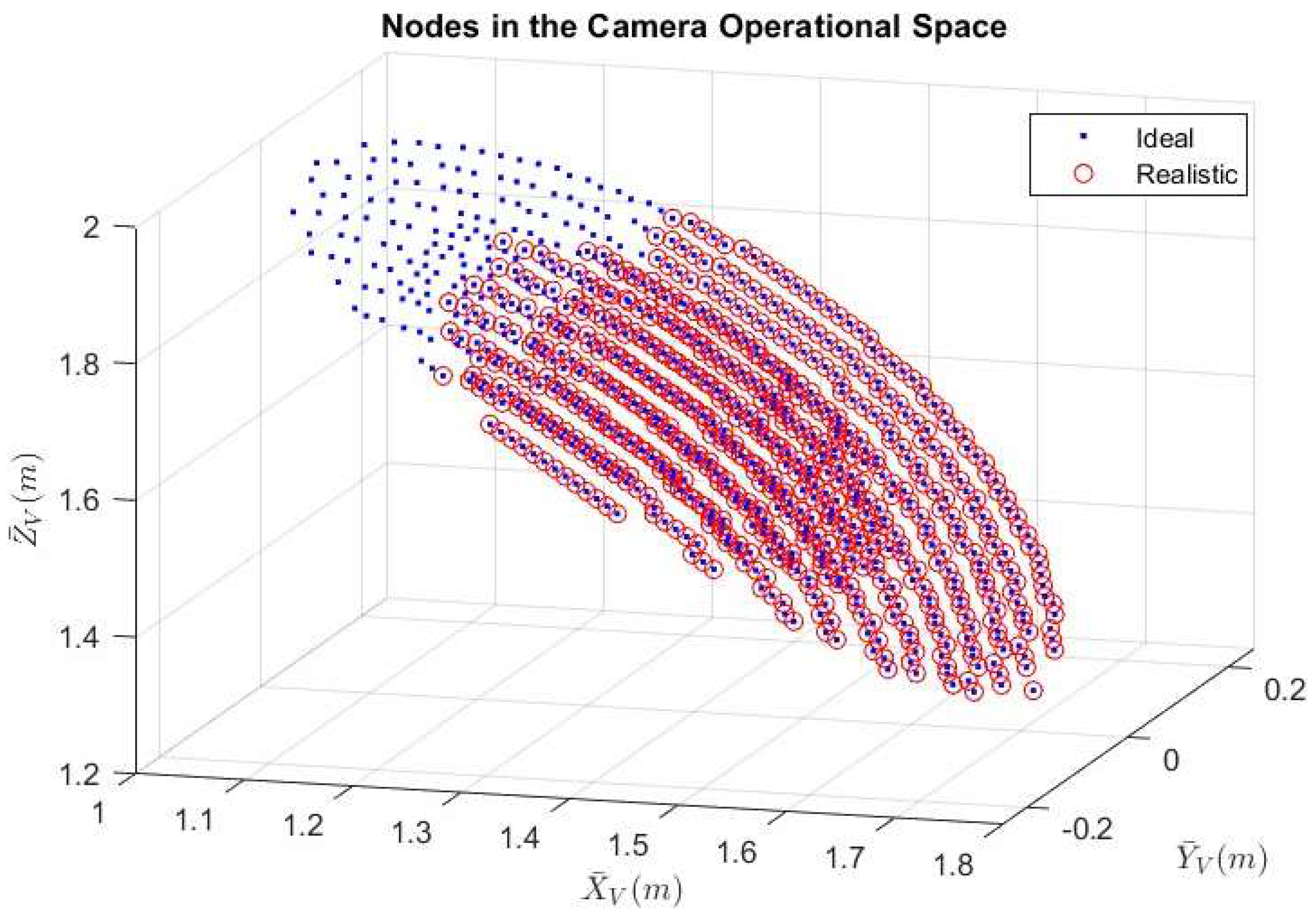

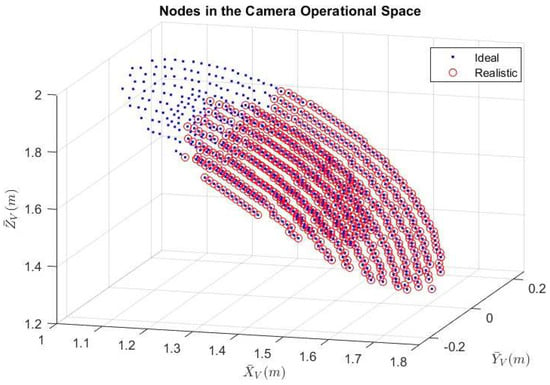

5.6. The Camera Operational Space from the Realistic Robot Manipulator

The above procedures of finding the camera operational space assume the use of ideal robotic manipulators, whose joints are free to move from . However, for realistic robotic manipulators, their revolutionary movement is limited within certain ranges of angles, and the ranges of motion in every joint of the specific robot manipulator ABB IRB 4600 are listed in Appendix A, Table A2.

The geometric shape of the operational space for a realistic robotic manipulator is usually irregular (unlike an ideal manipulator whose operational space is the combination of a sphere and a cone), and the mathematical methods of directly finding the nodes within the space are computationally expensive. A faster way of generating realistic camera operational space is to decrease the ideal operational space by taking out nodes that are out of the operational ranges. These outlier nodes can be found in the inverse kinematic model of the robotic manipulator. Figure 21 shows the decreased nodes from the ideal camera operational space to the realistic space.

Figure 21.

Reduction of nodes from the ideal to the realistic camera operational space.

6. Simulation Results

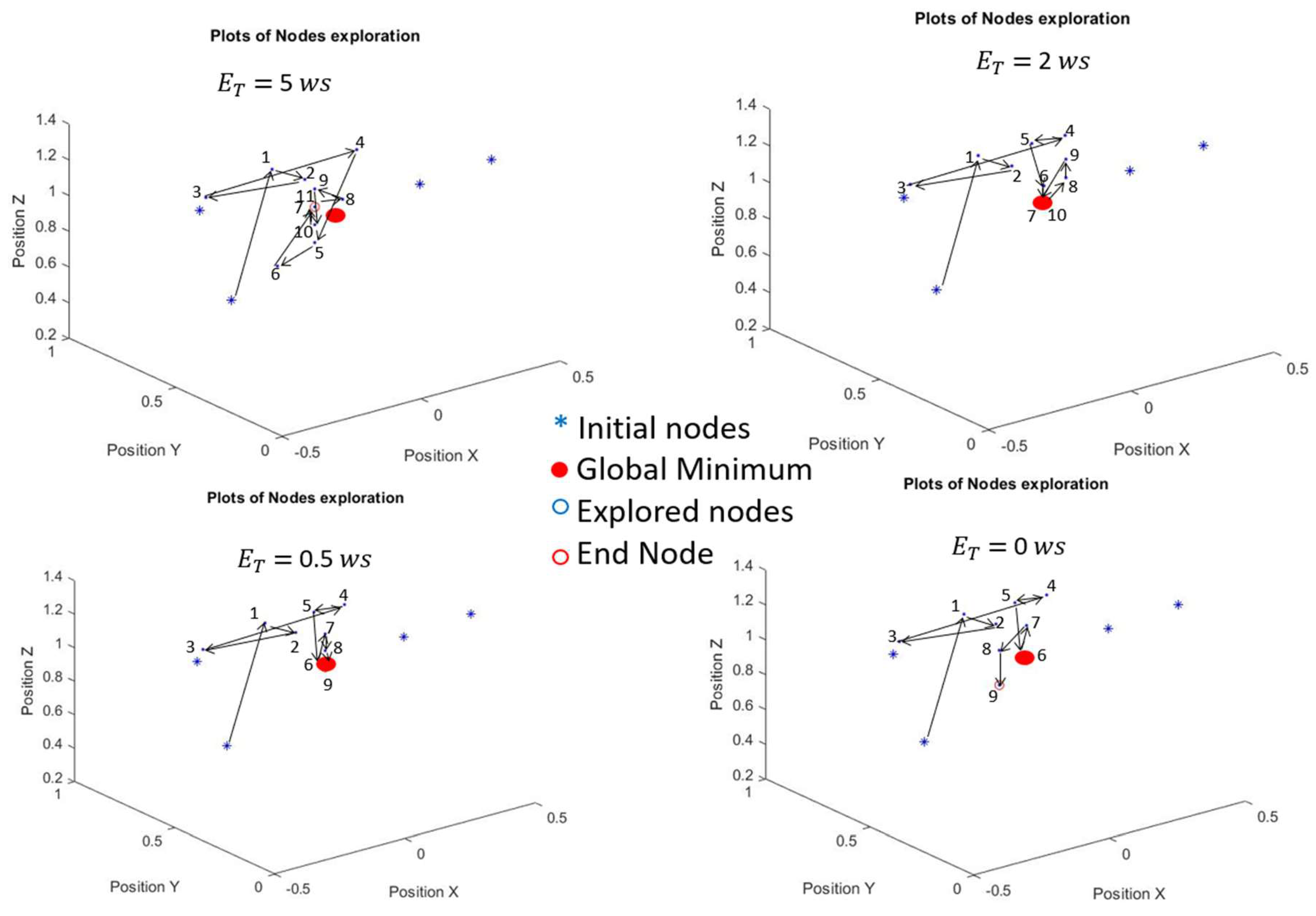

A simulation in MATLAB was used to validate the proposed algorithm. In the simulation, the environment was set up with two minimums in the space (only one is the global minimum). and are tunable parameters and set as . The initial available energy bound is set to be 12 (watt-second (ws) or Joule). Figure 22 shows the sequences of nodes being explored in iteration steps by setting different energy thresholds .

Figure 22.

Plots of sequential exploration with different ET.

By trials of different simulation runs, it can be concluded that:

- When the ET is large (in this scenario, 3 ws < ET ≤ 12 ws), the algorithm is conservative, so it has only searched a small area that excludes the global minimum;

- When the ET is in the mid-range (in this scenario, 0.5 ws < ET ≤ 3 ws), the algorithm drives the camera to the global minimum.

When the ET is small (in this scenario, the algorithm is aggressive. Although it has searched a large number of areas that include the global minimum, it does not have enough energy to move the camera back to the best location explored in the previous stages.

The two tunable parameters and are selected randomly in the simulation. These parameters are used in the models to estimate the noise spatial distribution in the environment. As stated in Section 4, the positive parameter indicates that the weight of an explored node in the estimation function is based on its Euclidean distance from the node to be estimated. The larger in Equation (4) implies that Euclidean distance has a smaller influence on the weight. In other words, with increasing , the estimation result of an unexplored node is more different than that of an explored node nearby. As a result, the algorithm is more likely to search nodes around the local minimum. Similarly, is proportional to the magnitude of the standard deviation in the stochastic modified process. A smaller causes a smaller standard deviation and thus a more deterministic evaluation of an unexplored node, which discourages the algorithm to explore areas that are away from the local minimum.

Therefore, by increasing or decreasing , we should expect that the algorithm has a higher tendency to exploit over explore. In this paper, we developed a parameter to present the degree of exploration in a simulation by measuring the average distance between the new explored node to the nearest previously explored node.

where is the total number of iterations in a simulation, and is the position of the new explored node at the iteration. is the index of explored node set as defined in Equation (6) and is the Euclidean distance between two nodes as defined in Equation (7).

In a simulation, a large value of the parameter means new explored nodes in each search iteration are generally far away from previously explored nodes, and the algorithm succeeds in exploring a large area. A sensitivity test was performed by varying and to show how these two parameters affect the searching process in the same simulation environment. Table 2 presents the sensitivity analysis of by keeping a constant = while Table 3 presents the sensitivity analysis of by keeping a constant = (No unit). All analysis tests are based on the same scenario in Figure 22 with 20 (ws) and = 2 (ws).

Table 2.

Sensitivity analysis with varying Kest. (Ksd = 50 (m−1)).

Table 3.

Sensitivity Analysis with Varying Ksd. (Kest = 50).

Table 2 and Table 3 show that by decreasing or increasing , the algorithm explores more areas, as indicated by the parameter . The iteration numbers reduce as more areas have been explored because fewer nodes can be reached when the total energy for moving is bounded. Those conclusions can be derived from the analysis. On one hand, the algorithm is too conservative and searches few areas when is too large or is too small. On the other hand, the algorithm is too aggressive and skips exploiting when is too small or is too large. Both cases will make the node not end up with the global minimum. Therefore, a moderate combination of and is preferred. Future research can focus on the development of adaptive algorithms that tune and over iterations based on errors between real measurements and estimations.

In addition, the numerical values of were also picked randomly for testing. In real applications, a fixed should be selected before the operation of this algorithm. The selection of is related to the specific application scenario, size of the camera’s operational space, and total energy available at the beginning. However, the general rule of thumb is the following: choose small values of when both and operational space is large to encourage exploring over exploiting; otherwise, choose a large .

7. Conclusions

In this article, we developed an algorithm so that a camera can explore the workspace in a manufacturing environment and search for a location so that the image noise is minimized within the space that it can reach. This article also provides a detailed development of the camera’s operational space for a specific application. The results in a virtual environment show the algorithm succeeds in bringing the camera to the optimal (or suboptimal if the optimal one is unreachable) position in this specific scenario. By reducing the processing time of images, this algorithm can be used for various visual servoing applications in high-speed manufacturing.

However, challenges arise in some possible scenarios of real applications. For instance, if environmental factors vary too rapidly across the space, a fine gridding of the space is required to manifest those variations in the estimation function, which increases computational complexity, resulting in poor real-time performances. The same issues exist in cases where the operational space of the camera is too large.

Other limitations include that this algorithm is incapable of differentiating contrast in images at different locations. Low contrast other than image noises occurs as another issue in the visual servoing environment; for instance, low contrast in soft tissues has been shown to have negative effects on the performance of visual servoing controllers in medical applications [38]. In addition, this algorithm does not account for cases when the object is partially obstructed, which is very common in manufacturing environment. Limitations in the camera hardware that cannot account for additive noise are also not addressed in this paper. Those effects cannot be eliminated by any image denoising processes. For example, sensors with a lower dynamic range may produce images where the details are lost in extreme lighting conditions (bright sunlight or deep shadows). Another example is that some lenses may cause vignetting (darkening around the edges) or distortion, which can degrade the perceived image quality.

The simulation results show how the energy threshold affects the behavior of the algorithm. A moderate threshold is suggested so that the algorithm can search for enough amount of area but enables the camera to be driven back to the minimum with the limited energy available. The analysis of ’s value in different applications is a focus of our future work. This may also include adaptive algorithms that update and online and an adaptive function for energy estimation. Also, orientations of the camera are fixed in this paper. In the future, we would like to develop advanced algorithms that provide not only the optimal of positions but also the orientations inside the space.

In summary, this paper initially explores an adaptive algorithm to search for the optimal location in space with respective to image noises for observation in ETH or ETH/EIH cooperative configurations. Although several improvements of this algorithm can be addressed in the future, it has already shown a great potential to be applied as an upgraded feature in some real manufacturing tasks.

Author Contributions

Methodology, R.L.; Investigation, R.L.; Writing—original draft, R.L.; Writing—review & editing, F.F.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

In this section, we show the geometric model of a specific robot manipulator ABB IRB 4600 45/2.05 [23] and a figure of the camera model Zed 2 with dimensions [24]. This section also contains specification tables of the robots’ dimensions, camera, and motor installed inside the joints of the manipulators.

Figure A1.

IRB ABB 4600 model with attached frames.

Figure A1.

IRB ABB 4600 model with attached frames.

Figure A2.

Zed 2 stereo camera model with dimensions.

Figure A2.

Zed 2 stereo camera model with dimensions.

Table A1.

Specification table of ABB IRB 4600 45/2.05 model (dimensions).

Table A1.

Specification table of ABB IRB 4600 45/2.05 model (dimensions).

| Parameters | Values |

|---|---|

| Length of Link 1: | 495 mm |

| Length of Link 2: | 900 mm |

| Length of Link 3: | 175 mm |

| Length of Link 3: | 960 mm |

| Length of Link 1 Offset: | 175 mm |

| Length of Spherical Wrist: | 135 mm |

| Tool Length (Screwdriver): | 127 mm |

Table A2.

Specification table of ABB IRB 4600 45/2.05 model (axis working range).

Table A2.

Specification table of ABB IRB 4600 45/2.05 model (axis working range).

| Axis Movement | Working Range |

|---|---|

| Axis 1 Rotation | +180 to −180 |

| Axis 2 Arm | +150 to −90 |

| Axis 3 Arm | +75 to −180 |

| Axis 4 Wrist | +400 to −400 |

| Axis 5 Bend | +120 to −125 |

| Axis 6 Turn | +400 to −400 |

Table A3.

Specification table of stereo camera Zed 2.

Table A3.

Specification table of stereo camera Zed 2.

| Parameters | Values |

|---|---|

| Focus Length: f | 2.8 mm |

| Baseline: B | 120 mm |

| Weight: W | 170 g |

| Depth Range: | 0.5–25 m |

| Diagonal Sensor Size: | 6 mm |

| Sensor Format: | 16:9 |

| Sensor Size: W × H | 5.23 mm × 2.94 mm |

| Angle of View in Width: | |

| Angle of View in Height: | 55.35 |

Table A4.

Specification table of motors and gears.

Table A4.

Specification table of motors and gears.

| Parameters | Values |

|---|---|

| DC Motor | |

| Armature Resistance: | 0.03 |

| Armature Inductance: | 0.1 mH |

| Back emf Constant: | 7 mv/rpm |

| Torque Constant: | 0.0674 N/A |

| Armature Moment of Inertia: | 0.09847 kg |

| Gear | |

| Gear Ratio: | 200:1 |

| Moment of Inertia: | 0.05 kg |

| Damping Ratio: | 0.06 |

Appendix B

In this section, we show forward kinematics and inverse kinematics of the six DoF revolute robot manipulators. The results are consistent with the model ABB IRB 4600.

Forward kinematics refers to the use of kinematic equations of a robot to compute the position of the end-effector from specified values for the joint angles and parameters. Equations (A1)–(A5) are summarized below:

where , , and are the end-effector’s directional vectors of yaw, pitch, and rin the base frame (Figure A1). And is the vector of absolute position of the center of the end-effector in base frame . For the specific model ABB IRB 4600-45/2.05 (ABB, Zurich, Switzerland) (handling capacity: 45 kg/reach 2.05 m), the dimensions and mass are summarized in Table A1.

Inverse kinematics refers to the mathematical process of calculating the variable joint angles needed to place the end-effector in a given position and orientation relative to the inertial base frame. Equations (A6)–(A13) are summarized below:

where , , , and have been defined above in the forward kinematic discussion.

References

- Chang, W.-C.; Wu, C.-H. Automated USB peg-in-hole assembly employing visual servoing. In Proceedings of the 3rd International Conference on Control, Automation and Robotics (ICCAR), Nagoya, Japan, 22–24 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 352–355. [Google Scholar]

- Xu, J.; Liu, K.; Pei, Y.; Yang, C.; Cheng, Y.; Liu, Z. A noncontact control strategy for circular peg-in-hole assembly guided by the 6-DOF robot based on hybrid vision. IEEE Trans. Instrum. Meas. 2022, 71, 3509815. [Google Scholar] [CrossRef]

- Zhu, W.; Liu, H.; Ke, Y. Sensor-based control using an image point and distance features for rivet-in-hole insertion. IEEE Trans. Ind. Electron. 2020, 67, 4692–4699. [Google Scholar] [CrossRef]

- Qin, F.; Xu, D.; Zhang, D.; Pei, W.; Han, X.; Yu, S. Automated Hooking of Biomedical Microelectrode Guided by Intelligent Microscopic Vision. IEEE/ASME Trans. Mechatron. 2023, 28, 2786–2798. [Google Scholar] [CrossRef]

- Huang, Z.; Wang, J. Automatic Alignment Welding Device and Alignment Method Thereof. Patent No. CN103785950A, 11 December 2013. [Google Scholar]

- Guo, P.; Zhang, Z.; Shi, L.; Liu, Y. A contour-guided pose alignment method based on Gaussian mixture model for precision assembly. Assem. Autom. 2021, 41, 401–411. [Google Scholar] [CrossRef]

- Chaumette, F.; Hutchinson, S. Visual servo control Part I: Basic approaches. IEEE Robot. Autom. Mag. 2006, 13, 82–90. [Google Scholar] [CrossRef]

- Ma, Y.; Liu, X.; Zhang, J.; Xu, D.; Zhang, D.; Wu, W. Robotic grasping and alignment for small size components assembly based on visual servoing. Int. J. Adv. Manuf. Technol. 2020, 106, 4827–4843. [Google Scholar] [CrossRef]

- Hao, T.; Xu, D.; Qin, F. Image-Based Visual Servoing for Position Alignment with Orthogonal Binocular Vision. IEEE Trans. Instrum. Meas. 2023, 72, 5019010. [Google Scholar] [CrossRef]

- Flandin, G.; Chaumette, F.; Marchand, E. Eye-in-hand/eye-to-hand cooperation for visual servoing. In Proceedings of the 2000 ICRA Millennium Conference, IEEE International Conference on Robotics and Automation, Symposia Proceedings (Cat. No.00CH37065), San Francisco, CA, USA, 24–28 April 2000; Volume 3, pp. 2741–2746. [Google Scholar] [CrossRef]

- Muis, A.; Ohnishi, K. Eye-to-hand approach on eye-in-hand configuration within real-time visual servoing. In Proceedings of the 8th IEEE International Workshop on Advanced Motion Control, 2004, AMC ’04, Kawasaki, Japan, 25–28 March 2004. [Google Scholar] [CrossRef]

- Ruf, A.; Tonko, M.; Horaud, R.; Nagel, H.H. Visual Tracking of An End-Effector by Adaptive Kinematic Prediction. In Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robot and Systems, Innovative Robotics for Real-World Applications, IROS’97, Grenoble, France, 11 September 1997; Volume 2, pp. 893–898. [Google Scholar]

- de Mena, J.L. Virtual Environment for Development of Visual Servoing Control Algorithms. Master’s Thesis, Lund Institute Technology, Lund, Sweden, 2002. [Google Scholar]

- Lippiello, V.; Siciliano, B.; Villani, L. Position-based visual servoing in industrial multirobot cells using a hybrid camera configuration. IEEE Trans. Robot. 2007, 23, 73–86. [Google Scholar] [CrossRef]

- Zhu, W.; Mei, B.; Yan, G.; Ke, Y. Measurement error analysis and accuracy enhancement of 2D vision system for robotic drilling. Robot. Comput. Integr. Manuf. 2014, 30, 160–171. [Google Scholar] [CrossRef]

- Liu, H.; Zhu, W.; Ke, Y. Pose alignment of aircraft structures with distance sensors and CCD cameras. Robot. Comput. Integr. Manuf. 2017, 48, 30–38. [Google Scholar] [CrossRef]

- Liu, H.; Zhu, W.; Dong, H.; Ke, Y. An adaptive ball-head positioning visual servoing method for aircraft digital assembly. Assem. Autom. 2019, 39, 287–296. [Google Scholar] [CrossRef]

- Runge, G.; McConnell, E.; Rucker, D.C.; O’Malley, M.K. Visual servoing of continuum robots: Methods, challenges, and prospects. Sci. Robot. 2022, 7, eabm9419. [Google Scholar]

- Irie, K.; Woodhead, I.M.; McKinnon, A.E.; Unsworth, K. Measured effects of temperature on illumination-independent camera noise. In Proceedings of the 2009 24th International Conference Image and Vision Computing New Zealand, Wellington, New Zealand, 23–25 November 2009; pp. 249–253. [Google Scholar] [CrossRef]

- Wu, K.; Wu, L.; Ren, H. An image based targeting method to guide a tentacle-like curvilinear concentric tube robot. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Bali, Indonesia, 5–10 December 2014; pp. 386–391. [Google Scholar]

- Ma, X.; Song, C.; Chiu, P.W.; Li, Z. Autonomous flexible endoscope for minimally invasive surgery with enhanced safety. IEEE Robot. Autom. Lett. 2019, 4, 2607–2613. [Google Scholar] [CrossRef]

- Alambeigi, F.; Wang, Z.; Hegeman, R.; Liu, Y.-H.; Armand, M. Autonomous data-driven manipulation of unknown anisotropic deformable tissues using unmodelled continuum manipulators. IEEE Robot. Autom. Lett. 2018, 4, 254–261. [Google Scholar] [CrossRef]

- Vrooijink, G.J.; Denasi, A.; Grandjean, J.G.; Misra, S. Model predictive control of a robotically actuated delivery sheath for beating heart compensation. Int. J. Robot. Res. 2017, 36, 193–209. [Google Scholar] [CrossRef] [PubMed]

- Patidar, P.; Gupta, M.; Srivastava, S.; Nagawat, A.K. Image De-noising by Various Filters for Different Noise. Int. J. Comput. Appl. 2010, 9, 45–50. [Google Scholar] [CrossRef]

- Das, S.; Saikia, J.; Das, S.; Goñi, N. A comparative study of different noise filtering techniques in digital images. Int. J. Eng. Res. Gen. Sci. 2015, 3, 180–191. [Google Scholar]

- Zhao, R.M.; Cui, H.M. Improved threshold denoising method based on wavelet transform. In Proceedings of the 2015 7th International Conference on Modelling, Identification and Control (ICMIC), Sousse, Tunisia, 8–20 December 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Ng, J.; Goldberger, J.J. Signal Averaging for Noise Reduction. In Practical Signal and Image Processing in Clinical Cardiology; Goldberger, J., Ng, J., Eds.; Springer: London, UK, 2010; pp. 69–77. [Google Scholar]

- Muthusamy, R.; Ayyad, A.; Halwani, M.; Swart, D.; Gan, D.; Seneviratne, L.; Zweiri, Y. Neuromorphic eye-in-hand visual servoing. IEEE Access 2021, 9, 55853–55870. [Google Scholar] [CrossRef]

- Cormen, T.H.; Leiserson, C.E.; Ronald, L. Rivest, and Clifford Stein. Introduction to Algorithms, 3rd ed.; MIT Press: Cambridge, MA, USA, 2009. [Google Scholar]

- Chen, G.; Zhu, F.; Heng, P.A. An Efficient Statistical Method for Image Noise Level Estimation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 477–485. [Google Scholar] [CrossRef]

- Spong, M.W.; Vidyasagar, M. Robot Dynamics and Control; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1989. [Google Scholar]

- Marzouk, W.W.; Elbaset, A.A.; Galal, A.I. Modelling, Analysis and Simulation for A 6 Axis Arm Robot by PID Controller. Int. J. Mech. Prod. Eng. Res. Dev. 2019, 9, 363–376. [Google Scholar] [CrossRef]

- Li, R.; Assadian, F. Role of Uncertainty in Model Development and Control Design for a Manufacturing Process. In Production Engineering and Robust Control; Majid, T., Pengzhong, L., Liang, L., Eds.; IntechOpen: London, UK, 2022; pp. 137–167. [Google Scholar] [CrossRef]

- Anonymous. ABB IRB 4600-40/2.55 Product Manual [Internet]. 2013. Available online: https://www.manualslib.com/manual/1449302/Abb-Irb-4600-40-2-55.html#manual (accessed on 17 July 2024).

- Anonymous. Stereolabs Docs: API Reference, Tutorials, and Integration. Available online: https://www.stereolabs.com/docs (accessed on 18 July 2024).

- Peer, P.; Solina, F. Where physically is the optical center? Pattern Recognit. Lett. 2006, 27, 1117–1121. [Google Scholar] [CrossRef]

- Tourassis, V.D.; Emiris, D.M. A comparative study of ideal elbow and dual-elbow robot manipulators. Mech. Mach. Theory 1993, 28, 357–373. [Google Scholar] [CrossRef]

- Huang, S.; Lou, C.; Zhou, Y.; He, Z.; Jin, X.; Feng, Y.; Gao, A.; Yang, G.-Z. MRI-guided robot intervention—Current state-of-the-art and new challenges. Med-X 2023, 1, 4. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).