Fully Automatic Grayscale Image Segmentation: Dynamic Thresholding for Background Adaptation, Improved Image Center Point Selection, and Noise-Resilient Start/End Point Determination

Abstract

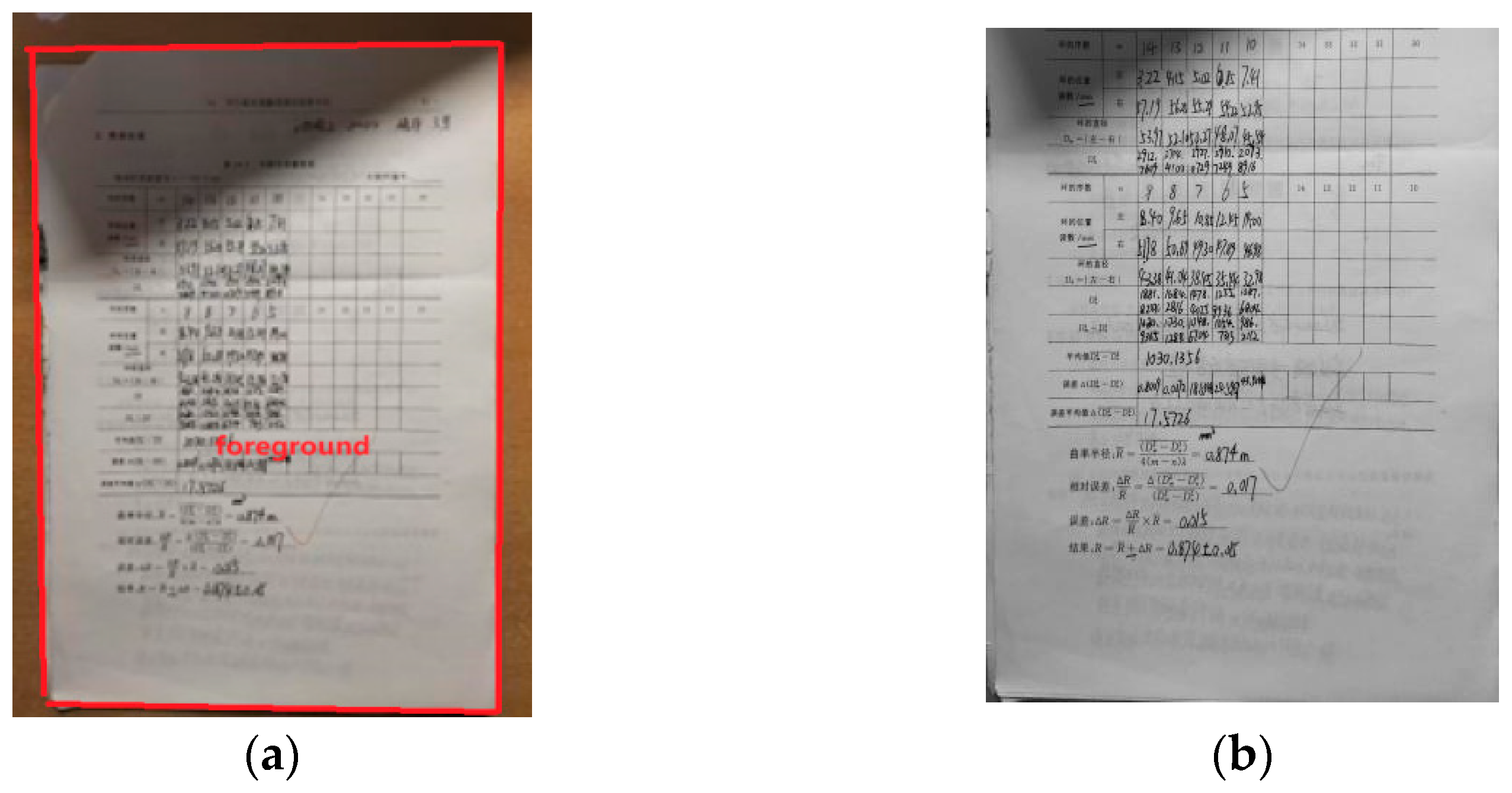

1. Introduction

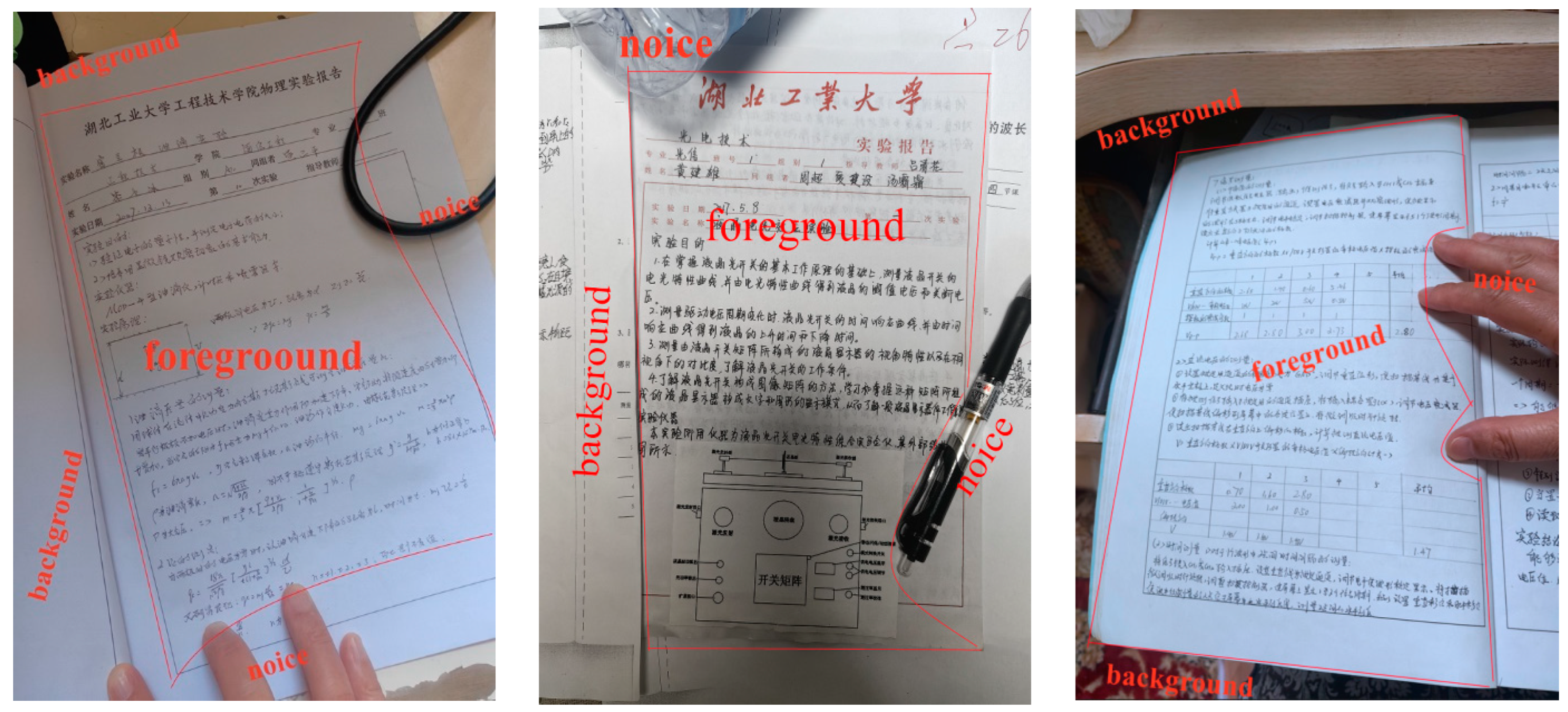

- (1)

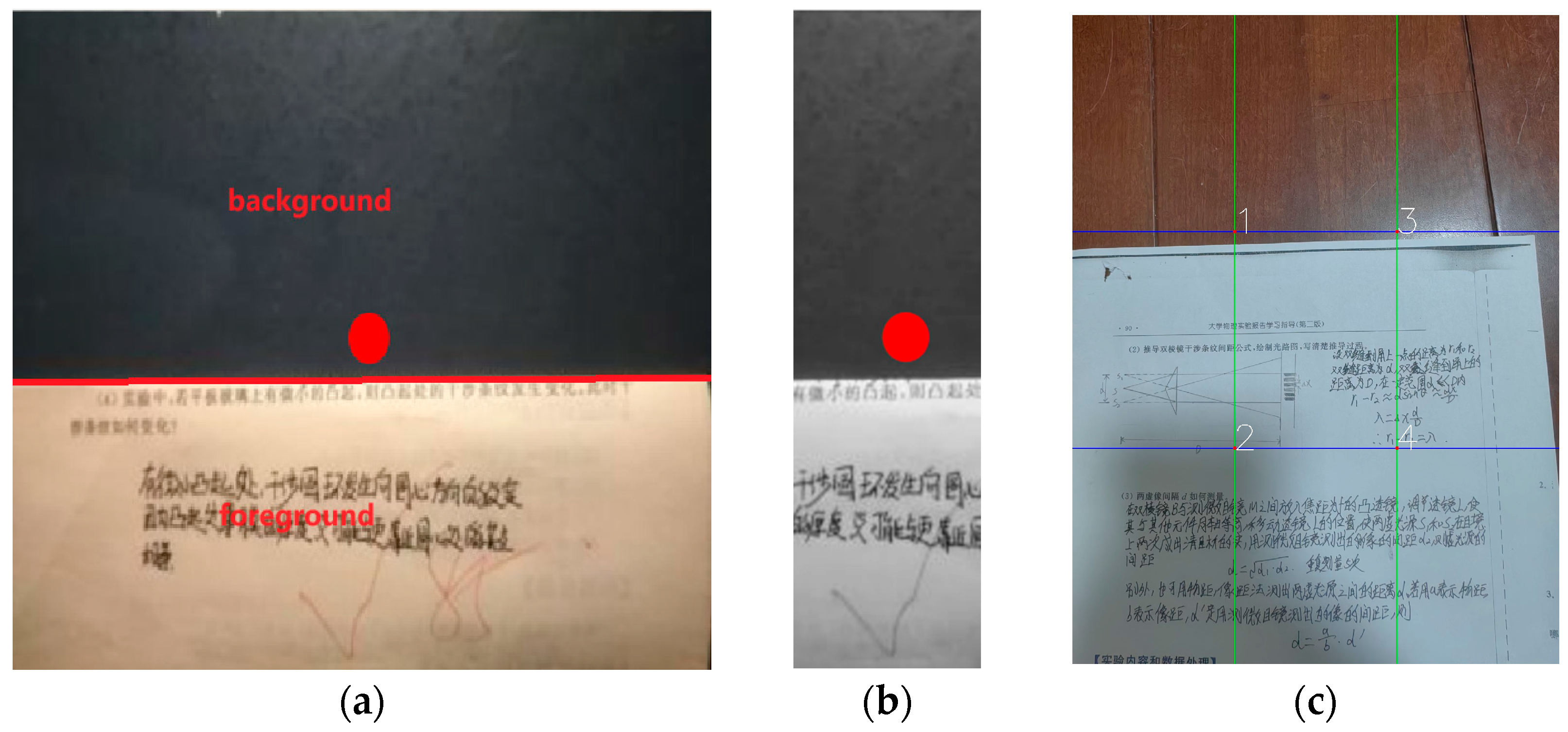

- Ineffective segmentation of the foreground across diverse image backgrounds.

- (2)

- Suboptimal performance when the image center point lies outside the target area.

- (3)

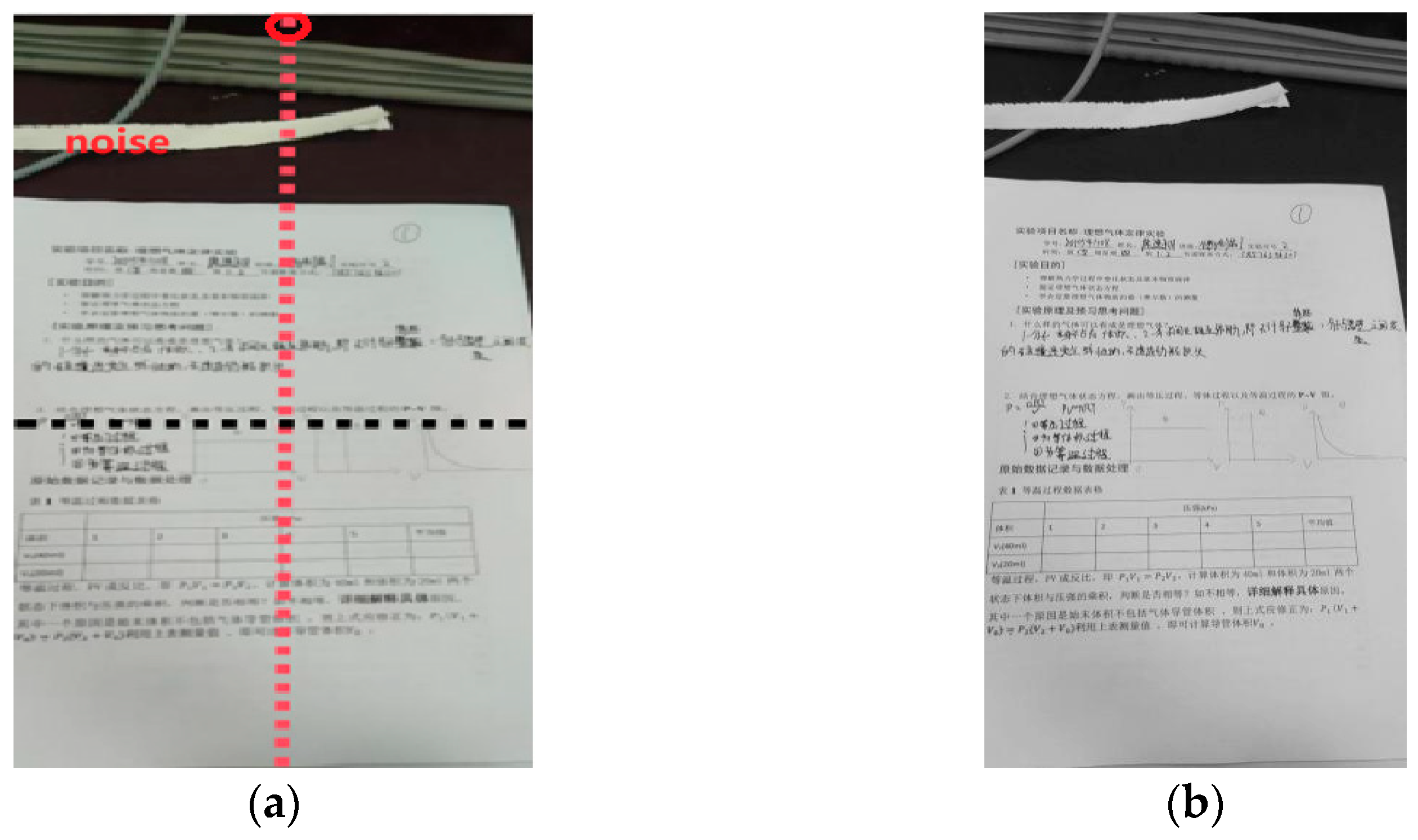

- High sensitivity to noise and reduced robustness in determining the start and end points of the segmentation area.

- (1) Dynamic Thresholding for Background Adaptation

- (2) Improved Image Center Point Selection

- (3) Noise-Resilient Start/End Point Determination

2. Preliminary

Magefreehome

3. Methodology

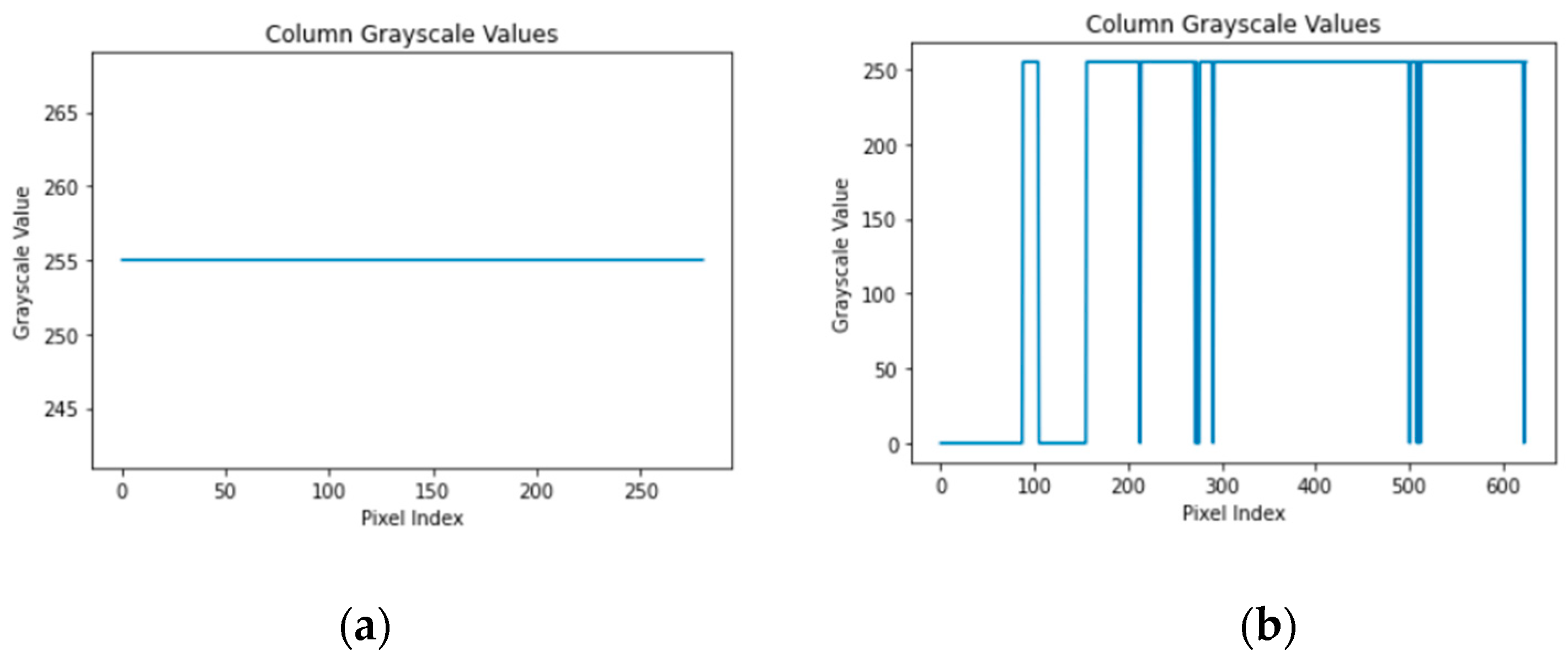

3.1. Improved Scheme Based on OTSU Dynamic Threshold Calculation: Strategy 1

| Algorithm 1. Shadow Detection and Segmentation | |
|---|---|
| Input: , , | |
| Output: Shadow and segmentation reset | |
| 1: | If < 5, |
| 2: | no shadow |
| 3: | Else If |
| 4: | no shadow |
| 5: | Else |
| 6: | shadow |
| 7: | Reset the segmentation start or end point to the image edge |
| 8: | End |
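Strategy 1 builds on OTSU thresholding. As background only, the sketch below shows the classic global Otsu threshold in NumPy: it picks the gray level that maximizes the between-class variance of the histogram. It is not the improved dynamic variant proposed in this section, and the function name `otsu_threshold` and the 8-bit input assumption are illustrative choices rather than anything taken from the paper.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Classic Otsu threshold for an 8-bit grayscale image (assumed dtype uint8).

    Returns the gray level t that maximizes the between-class variance;
    pixels with value > t are treated as one class, the rest as the other.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()                 # normalized histogram
    levels = np.arange(256, dtype=np.float64)

    omega0 = np.cumsum(prob)                 # probability of class 0 (values <= t)
    mu = np.cumsum(prob * levels)            # cumulative first moment up to t
    mu_total = mu[-1]                        # global mean gray level

    denom = omega0 * (1.0 - omega0)          # zero where one class is empty
    valid = denom > 1e-12
    sigma_b2 = np.zeros(256)
    sigma_b2[valid] = (mu_total * omega0[valid] - mu[valid]) ** 2 / denom[valid]

    return int(np.argmax(sigma_b2))


# Illustrative use on a synthetic two-level image (dark background, bright target).
demo = np.full((10, 10), 50, dtype=np.uint8)
demo[:, 5:] = 200
t = otsu_threshold(demo)
foreground = demo > t
```

Because the threshold is recomputed from each image's own histogram, it shifts with the background brightness, which is the property a fixed threshold lacks.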

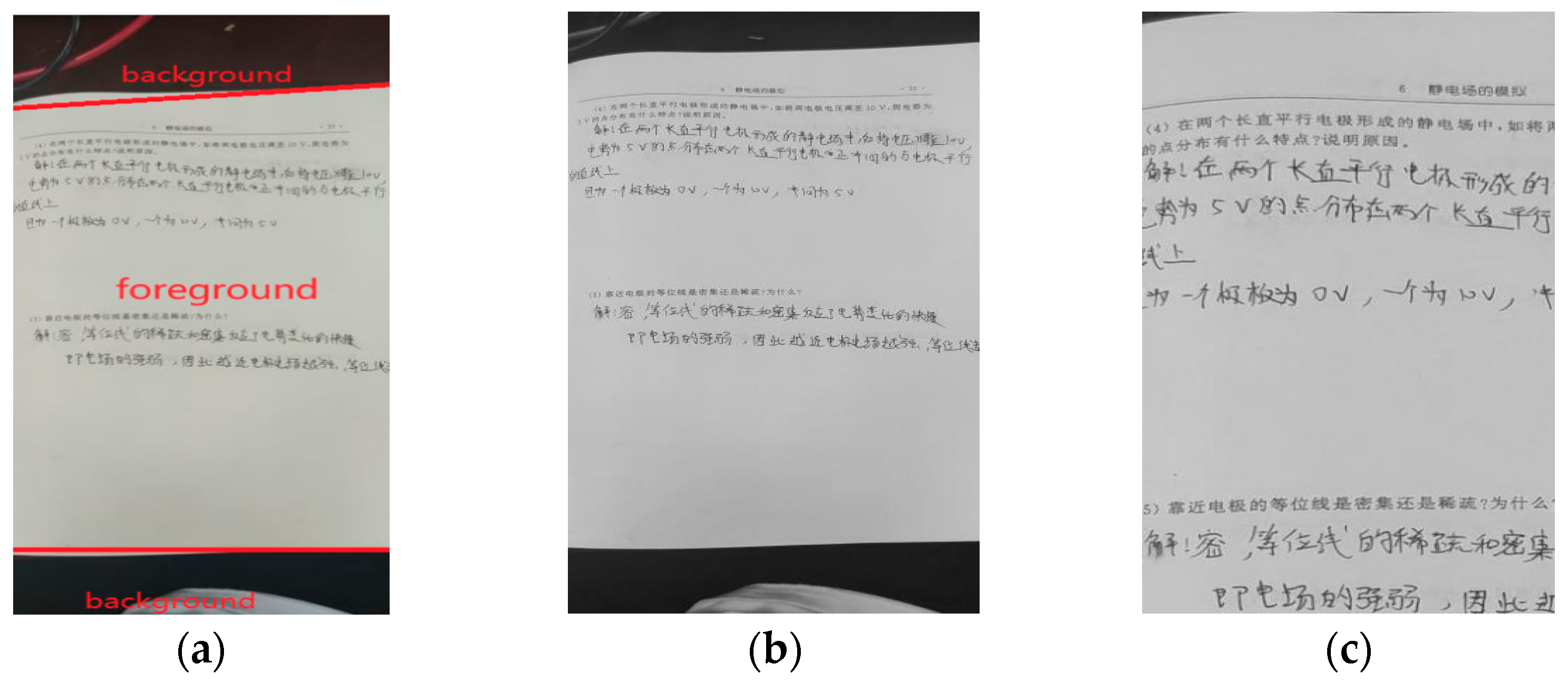

3.2. An Improved Method for Selecting Image Center Points: Strategy 2

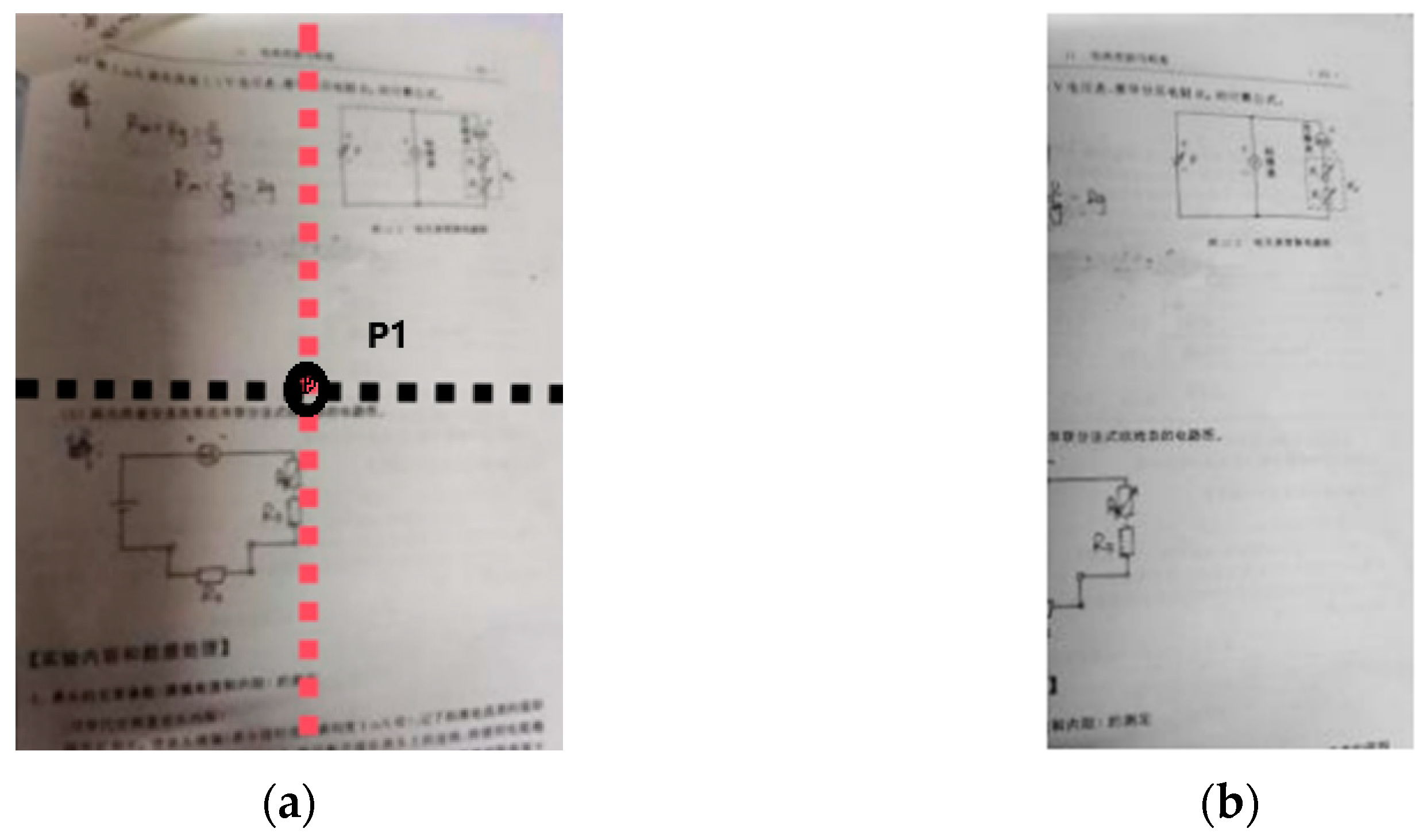

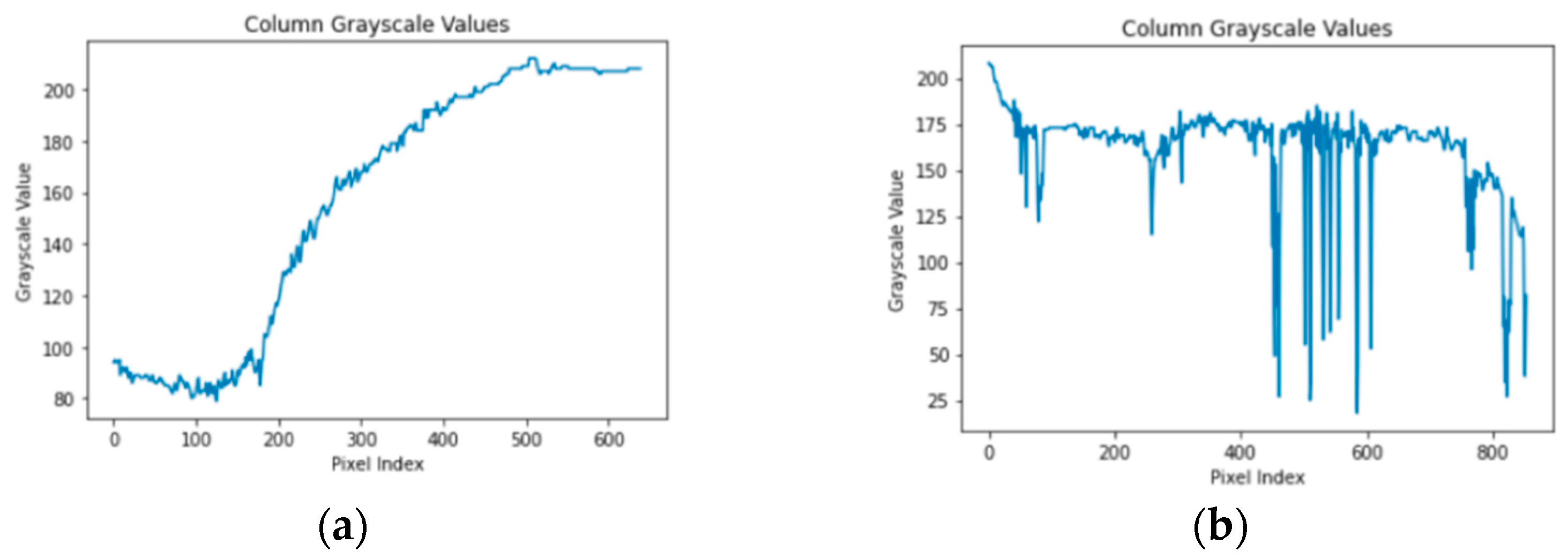

3.3. A Method for Determining the Start/End Points of the Segmentation Region Based on Continuity Detection: Strategy 3

3.4. Fully Automatic Grayscale Image Segmentation Algorithm

4. Experiments

4.1. Dataset, Testing Environment and Testing Plan

- (1) Incomplete or excessive segmentation due to fixed thresholds (10,436 images, 69.28%);

- (2) Segmentation errors caused by improper selection of the image center point (3905 images, 25.92%);

- (3) Incomplete segmentation due to weak anti-interference capabilities (723 images, 4.80%).

4.2. Comparison of Image Segmentation Effectiveness

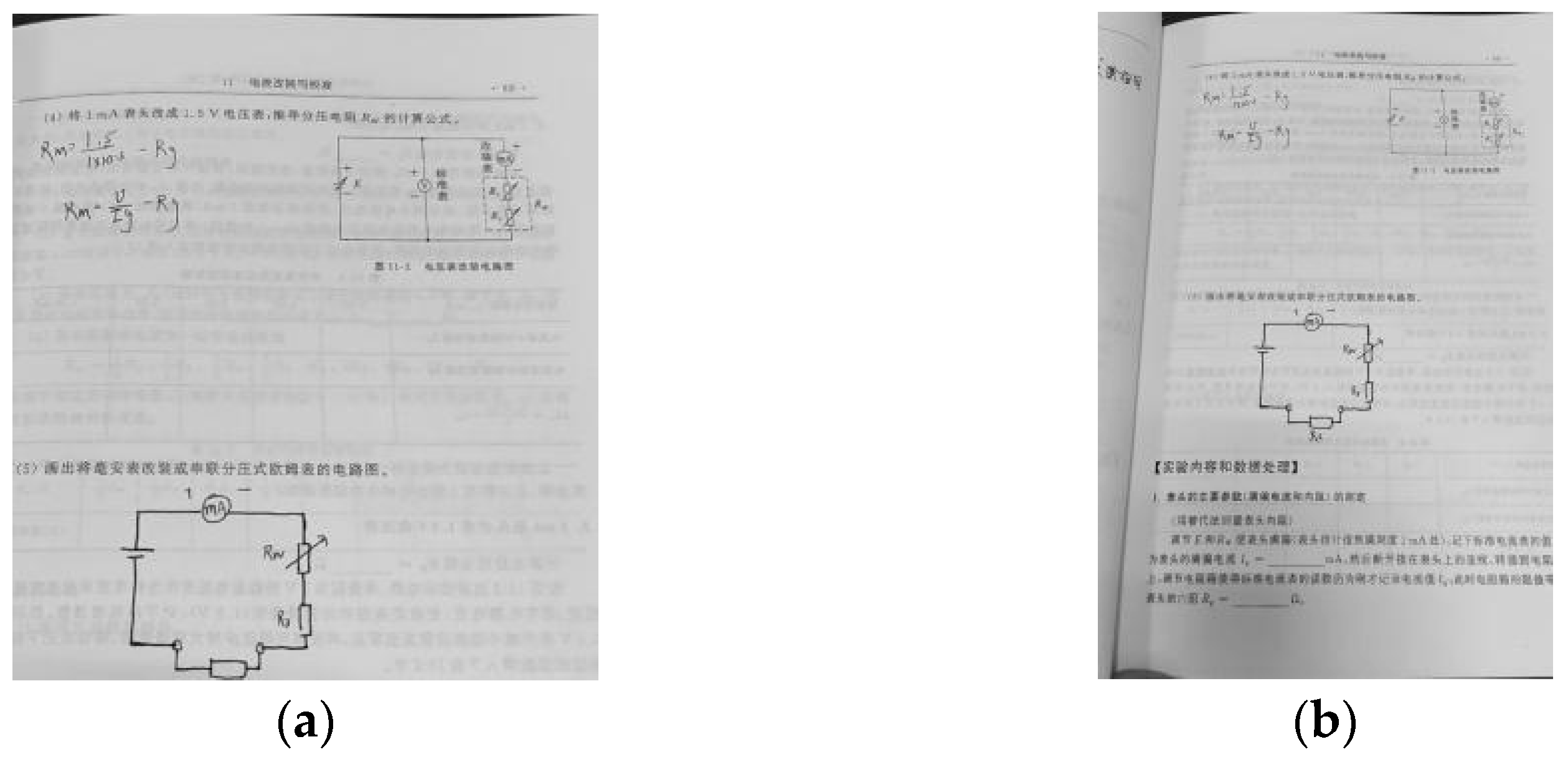

4.2.1. Effectiveness of the Improved OTSU Dynamic Threshold Calculation Strategy

4.2.2. Effectiveness of the Improved Image Center Point Selection Method

4.2.3. Effectiveness of the Continuity Detection-Based Segmentation Start/End Point Determination Method

4.3. Comparison of Algorithm Performance

4.3.1. Performance Metrics

- False Negative (FN): The number of images incorrectly identified by the algorithm as not requiring segmentation, despite actually needing it;

- True Negative (TN): The number of images correctly identified by the algorithm as not requiring segmentation;

- True Positive (TP): The number of images correctly identified by the algorithm as requiring segmentation;

- False Positive (FP): The number of images incorrectly identified by the algorithm as requiring segmentation, despite not needing it.
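The four counts above feed the accuracy, precision and recall figures reported later. The sketch below assumes the standard definitions of these metrics; the class name `ConfusionCounts` and the example counts are hypothetical and are not taken from the experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # correctly identified as requiring segmentation
    fp: int  # incorrectly identified as requiring segmentation
    tn: int  # correctly identified as not requiring segmentation
    fn: int  # incorrectly identified as not requiring segmentation


def accuracy(c: ConfusionCounts) -> float:
    """Share of all images that were classified correctly."""
    total = c.tp + c.fp + c.tn + c.fn
    return (c.tp + c.tn) / total if total else 0.0


def precision(c: ConfusionCounts) -> float:
    """Share of images flagged for segmentation that really needed it."""
    flagged = c.tp + c.fp
    return c.tp / flagged if flagged else 0.0


def recall(c: ConfusionCounts) -> float:
    """Share of images needing segmentation that were actually flagged."""
    needed = c.tp + c.fn
    return c.tp / needed if needed else 0.0


# Hypothetical counts, for illustration only.
example = ConfusionCounts(tp=80, fp=5, tn=10, fn=5)
print(f"accuracy={accuracy(example):.4f}, "
      f"precision={precision(example):.4f}, recall={recall(example):.4f}")
```

Under these definitions a method can raise recall sharply (fewer missed images) while precision barely moves, since the two metrics share only the TP term.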

4.3.2. Experimental Results and Analysis

- (1) Accuracy: The proposed algorithm achieved a maximum accuracy of 93.83%, an improvement of 15.51 percentage points over the original algorithm, with an average increase of 14.97 percentage points. This gain can be attributed to three factors: the dynamic threshold calculation method, based on the improved OTSU algorithm, adapts the threshold to varying image backgrounds and so improves segmentation; the continuity detection method precisely identifies the start and end points of the foreground, excluding impurities and other interference; and the improved image center point selection method, which uses a multi-center point strategy, makes the algorithm more resilient to noise variations in images with complex backgrounds.

- (2) Precision: The proposed algorithm achieved a maximum precision of 98.71%, an increase of only 1.02 percentage points over the original algorithm. This modest improvement is attributed to the algorithm’s heightened sensitivity to noise and impurities, despite maintaining high accuracy. The lowest precision, 96.40%, is 2.70 percentage points below that of the original algorithm on the same image set and was caused by occasional misjudgments when processing specific types of images.

- (3) Recall: The proposed algorithm achieved a maximum recall of 91.48%, an improvement of 16.72 percentage points over the original algorithm, with an average increase of 17.33 percentage points. This significant improvement is primarily attributed to the continuity detection-based method for determining the segmentation start and end points, which effectively excludes impurities and interference.

- (4) Processing Speed: The proposed algorithm’s processing speed fell slightly, by an average of 0.585 images per second (approximately 1.34%). Given the significant improvements in accuracy and recall, this minor decrease in processing speed is a worthwhile trade-off.
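The gains quoted above can be re-derived from the per-dataset comparison table of Magefreehome and the proposed algorithm. The sketch below copies those table values and computes the differences in percentage points; the names `RESULTS`, `mean_gain` and `gain_on_largest_set` are illustrative helpers, not part of the published code.

```python
# Values copied from the comparison table: (speed in images/s, accuracy %, precision %, recall %).
RESULTS = {
    423:   {"baseline": (48.60, 77.17, 99.10, 69.18), "proposed": (48.34, 88.58, 96.40, 83.59)},
    2098:  {"baseline": (48.43, 74.74, 98.76, 68.30), "proposed": (47.56, 90.94, 98.05, 86.79)},
    6370:  {"baseline": (47.54, 74.61, 96.66, 66.75), "proposed": (47.09, 91.35, 97.77, 86.46)},
    20014: {"baseline": (32.14, 78.32, 97.69, 74.76), "proposed": (31.38, 93.83, 98.71, 91.48)},
}
METRICS = ("speed", "accuracy", "precision", "recall")


def mean_gain(metric: str) -> float:
    """Average of (proposed - baseline) over the four image sets."""
    i = METRICS.index(metric)
    gains = [row["proposed"][i] - row["baseline"][i] for row in RESULTS.values()]
    return sum(gains) / len(gains)


def gain_on_largest_set(metric: str) -> float:
    """(proposed - baseline) on the largest image set (20,014 images)."""
    i = METRICS.index(metric)
    row = RESULTS[max(RESULTS)]
    return row["proposed"][i] - row["baseline"][i]


for name in METRICS:
    print(f"{name}: largest-set gain {gain_on_largest_set(name):+.3f}, "
          f"mean gain {mean_gain(name):+.3f}")
```

The accuracy and recall gains computed this way are differences between two percentages, which is why they are best read as percentage points rather than relative changes.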

4.4. Ablation Experiment

- (1) Single Strategy Usage

- (2) Combination of Two Strategies

- (3) Combination of All Three Strategies
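The three groups above cover every non-empty subset of the three strategies, plus the unmodified baseline. A minimal sketch of how such an ablation grid could be enumerated is given below; the helper name `ablation_configs` is hypothetical, and the labels simply mirror those used in the ablation table.

```python
from itertools import combinations

STRATEGIES = ("Strategy1", "Strategy2", "Strategy3")


def ablation_configs(base: str = "Magefreehome") -> list[str]:
    """List the baseline followed by every single, pairwise and full strategy combination."""
    configs = [base]
    for size in range(1, len(STRATEGIES) + 1):
        for combo in combinations(STRATEGIES, size):
            configs.append(" + ".join((base, *combo)))
    return configs


for label in ablation_configs():
    print(label)
```

With three strategies this yields 1 + 3 + 3 + 1 = 8 configurations, matching the eight rows of the ablation table.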

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

1. Start the Run: Click the “Reproducible Run” button, which will trigger the main script (run file) in the project.

2. Execute the Main Script: The run file will automatically call the ImageSlicer.py file located in the /code/ directory.

3. After the image processing is complete, the results will be saved in the result/result data folder.


| Number of Images | Magefreehome: Speed (images/s) | Magefreehome: Accuracy | Magefreehome: Precision | Magefreehome: Recall | Our Algorithm: Speed (images/s) | Our Algorithm: Accuracy | Our Algorithm: Precision | Our Algorithm: Recall |
|---|---|---|---|---|---|---|---|---|
| 423 | 48.6 | 77.17% | 99.10% | 69.18% | 48.34 | 88.58% | 96.40% | 83.59% |
| 2098 | 48.43 | 74.74% | 98.76% | 68.30% | 47.56 | 90.94% | 98.05% | 86.79% |
| 6370 | 47.54 | 74.61% | 96.66% | 66.75% | 47.09 | 91.35% | 97.77% | 86.46% |
| 20014 | 32.14 | 78.32% | 97.69% | 74.76% | 31.38 | 93.83% | 98.71% | 91.48% |

| Algorithm Combination | Speed (images/s) | Accuracy | Precision | Recall |
|---|---|---|---|---|
| Magefreehome | 32.14 | 78.32% | 97.69% | 74.76% |
| Magefreehome + Strategy1 | 32.09 | 86.32% | 92.31% | 82.05% |
| Magefreehome + Strategy2 | 33.44 | 83.49% | 97.12% | 75.94% |
| Magefreehome + Strategy3 | 31.73 | 84.93% | 99.04% | 80.47% |
| Magefreehome + Strategy1 + Strategy2 | 32.41 | 86.76% | 94.23% | 85.96% |
| Magefreehome + Strategy1 + Strategy3 | 32.75 | 89.04% | 96.15% | 88.50% |
| Magefreehome + Strategy2 + Strategy3 | 32.55 | 84.02% | 98.05% | 79.69% |
| Magefreehome + Strategy1 + Strategy2 + Strategy3 | 31.38 | 93.83% | 98.71% | 91.48% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Gui, X. Fully Automatic Grayscale Image Segmentation: Dynamic Thresholding for Background Adaptation, Improved Image Center Point Selection, and Noise-Resilient Start/End Point Determination. Appl. Sci. 2024, 14, 9303. https://doi.org/10.3390/app14209303
