Automated Evaluation of C-ITS Message Content for Enhanced Compliance and Reliability

Abstract

1. Introduction

2. Materials and Methods

2.1. Types of C-ITS Messages

- Cooperative Awareness Messages (CAMs): provide real-time data about the position, speed, and status of vehicles.

- Decentralized Environmental Notification Messages (DENMs): alert vehicles and infrastructure about hazardous conditions or events.

- In-Vehicle Information Messages (IVIs): convey road sign information and other relevant data directly to drivers.

- Signal Phase and Timing Messages (SPaTs): communicate traffic signal status and timing to vehicles.

- MAP Messages: provide detailed descriptions of intersections, including lane configurations and connections.

- Signal Request Messages (SRMs) and Signal Status Messages (SSMs): used for communication between vehicles and traffic signal controllers, often to prioritize emergency vehicles or public transport.

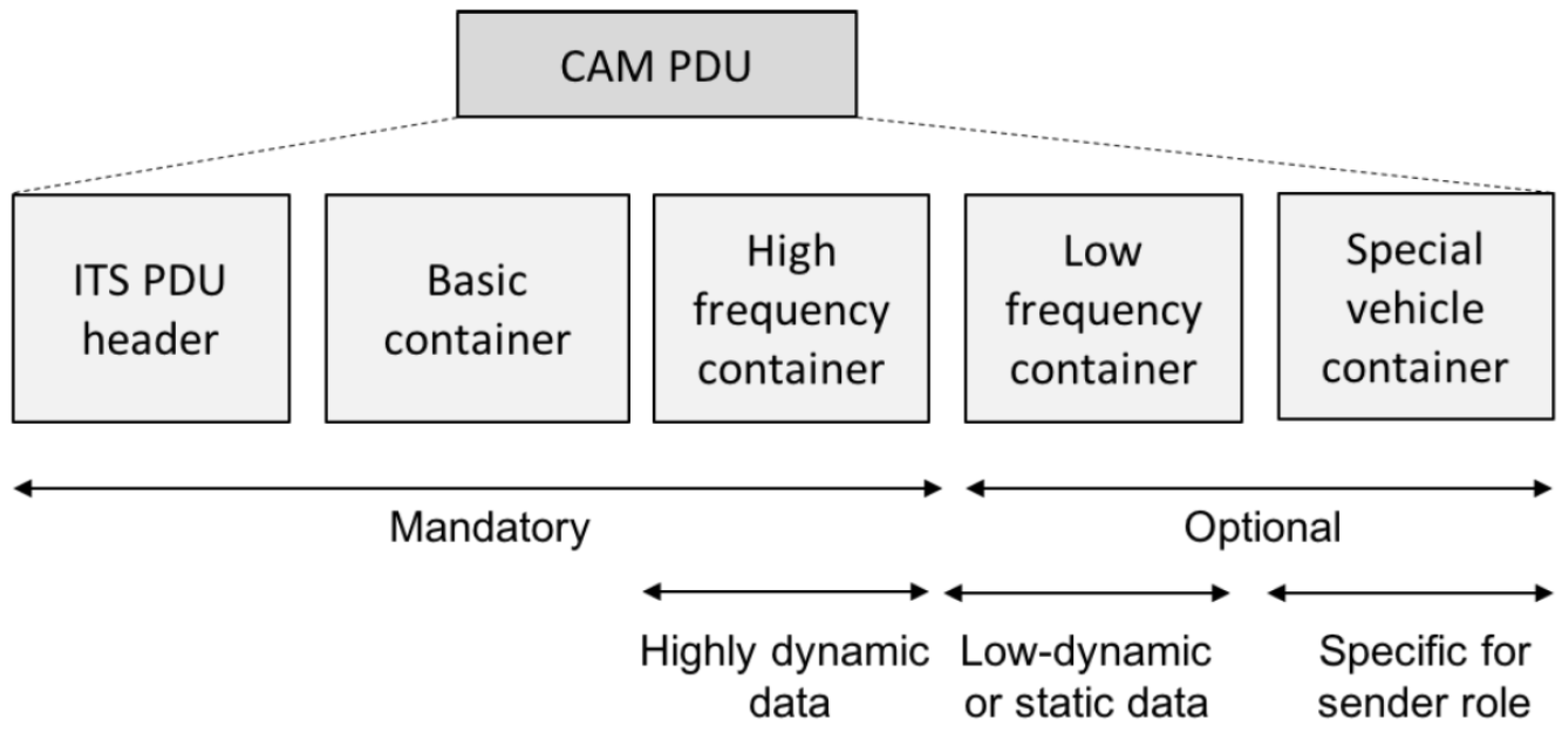

2.1.1. Cooperative Awareness Messages (CAMs)

- Basic Container:

  - stationType: Specifies the type of station sending the message (e.g., passenger car or truck). It helps recipients understand the context in which the message was sent.

  - referencePosition: Contains the geographical location of the station at the time the message was sent, including latitude, longitude, altitude, and measurement accuracy. This parameter is crucial for determining the vehicle’s position and for applications such as navigation and proximity detection.

- High-Frequency Container:

  - This container holds data that changes frequently and is therefore transmitted at a higher frequency, typically the speed, direction, and current state of the vehicle. The high-frequency container is mandatory, and its content is important for applications that require current, dynamic information about the vehicle.

- Low-Frequency Container:

  - This container holds information that changes less frequently and is transmitted at a lower frequency. It may include static data such as vehicle properties (type, size). According to the standard, the low-frequency container must be included in the first CAM generated after the activation of the cooperative awareness (CA) basic service. It must also be included if the time elapsed since the last CAM containing the low-frequency container is equal to or greater than 500 ms.

- Special Vehicle Container:

  - This container holds specific information about vehicles that may have special requirements or characteristics. As with the low-frequency container, the special vehicle container must be included in the first CAM after the activation of the CA basic service. It must also be included if the time elapsed since the last CAM containing the special vehicle container is equal to or greater than 500 ms.
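The inclusion rule described for the low-frequency and special vehicle containers can be sketched as a small timer check. This is a minimal illustration, not the standard's reference algorithm: the class `OptionalContainerScheduler` and its method names are hypothetical, and only the 500 ms threshold and the "first CAM after activation" condition come from the text.

```python
from dataclasses import dataclass
from typing import Optional

INCLUSION_INTERVAL_MS = 500  # threshold quoted in the text


@dataclass
class OptionalContainerScheduler:
    """Hypothetical helper: decides whether a CAM should carry an optional container."""

    interval_ms: int = INCLUSION_INTERVAL_MS
    last_sent_ms: Optional[int] = None  # None until the first CAM after activation

    def should_include(self, now_ms: int) -> bool:
        # The first CAM after activation of the CA basic service carries the container.
        if self.last_sent_ms is None:
            return True
        # Afterwards, include it once at least `interval_ms` have elapsed.
        return now_ms - self.last_sent_ms >= self.interval_ms

    def on_cam_generated(self, now_ms: int) -> bool:
        """Call once per generated CAM; returns True if the container is included."""
        include = self.should_include(now_ms)
        if include:
            self.last_sent_ms = now_ms
        return include


if __name__ == "__main__":
    scheduler = OptionalContainerScheduler()
    # Assumed example: one CAM every 100 ms for one second.
    for t in range(0, 1100, 100):
        print(t, scheduler.on_cam_generated(t))
```

With CAMs generated every 100 ms, the container would be attached at 0 ms, 500 ms, and 1000 ms. A separate scheduler instance would be kept for each of the two containers.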

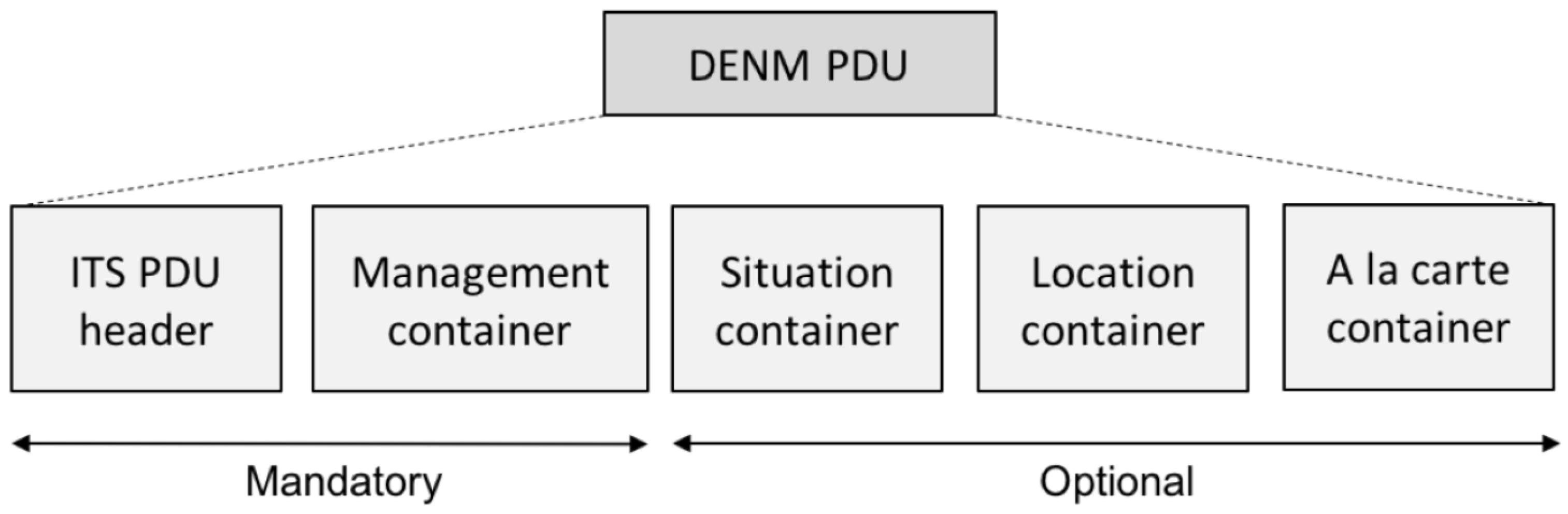

2.1.2. Decentralized Environmental Notification Messages (DENMs)

- ITS PDU Header: This header includes information about the protocol version, message type, and Intelligent Transport System Station (ITS-S) ID of the originating ITS-S.

- DENM Payload: The payload comprises four primary containers, which are transmitted in a fixed sequence:

  - Management Container: Contains information pertinent to the management of the DENM, including action IDs, detection times, reference times, and other elements such as termination, event position, relevance distance, relevance traffic direction, validity duration, and transmission interval.

  - Situation Container: Provides details about the event type and its context. It must include the informationQuality and eventType elements and may include linkedCause and eventHistory. The informationQuality value ranges from 1 (lowest) to 7 (highest), while eventType includes causeCode and subCauseCode to describe the specific event.

  - Location Container: Describes the event’s location. It must include traces and may also include optional elements such as eventSpeed, eventPositionHeading, and roadType.

  - À la Carte Container: Carries additional application-specific data not covered by the other containers. It can include elements such as lanePosition, impactReduction, externalTemperature, roadWorks, positioningSolution, and stationaryVehicle, depending on the use case.
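The rules stated for the situation container lend themselves to a simple structural check. The sketch below assumes a decoded DENM is available as nested Python dictionaries, which is an illustrative representation only; `check_situation_container` is a hypothetical name. The 1 to 7 range for informationQuality is taken from the text, and how a tool should treat other values (for instance a sentinel meaning "unavailable") is not covered here.

```python
from typing import Any, Dict, List

MANDATORY_ELEMENTS = ("informationQuality", "eventType")
EVENT_TYPE_FIELDS = ("causeCode", "subCauseCode")


def check_situation_container(container: Dict[str, Any]) -> List[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems: List[str] = []

    # Mandatory elements must be present.
    for name in MANDATORY_ELEMENTS:
        if name not in container:
            problems.append(f"missing mandatory element: {name}")

    # informationQuality must lie in the range quoted in the text.
    quality = container.get("informationQuality")
    if quality is not None and not 1 <= quality <= 7:
        problems.append(f"informationQuality out of range 1..7: {quality}")

    # eventType must describe the event with both codes.
    event_type = container.get("eventType")
    if event_type is not None:
        for field in EVENT_TYPE_FIELDS:
            if field not in event_type:
                problems.append(f"eventType lacks {field}")

    return problems


if __name__ == "__main__":
    denm_2 = {
        "informationQuality": 6,
        "eventType": {"causeCode": "slowVehicle", "subCauseCode": "maintenanceVehicle"},
    }
    print(check_situation_container(denm_2))
```

The optional elements linkedCause and eventHistory are deliberately not checked, since their absence is allowed.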

2.2. Description of Testing Tools

2.2.1. Karlos

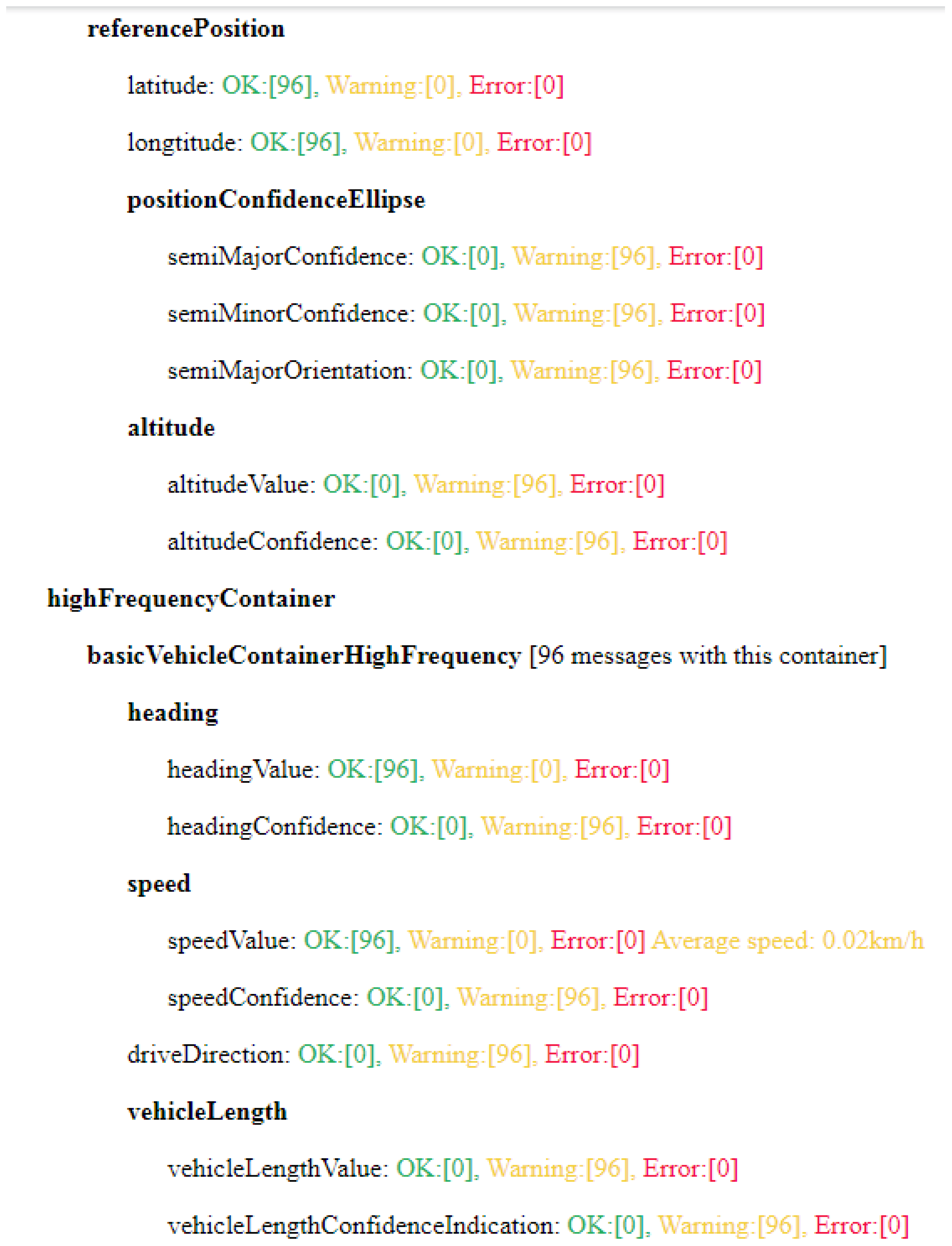

- Summary—This file contains selected parameters from CAMs/DENMs and compares their values with the relevant ranges defined in the respective standards. Users have the option to specify in advance which parameters will be included in this summary. This approach allows for targeted analysis, such as focusing only on speed values (speedValue) and their deviations from expected values. An example of such a summary is shown in Figure 3. Here, you can see an example of a list of parameters and their evaluation based on the number of messages. The number in brackets indicates the number of messages corresponding to each state (OK, error, warning).

- Complete List—This file provides detailed information about each individual CAM/ DENM, enabling users to trace specific messages and conduct in-depth analysis. This list is crucial for potential retrospective tracking of anomalies or erroneous messages.

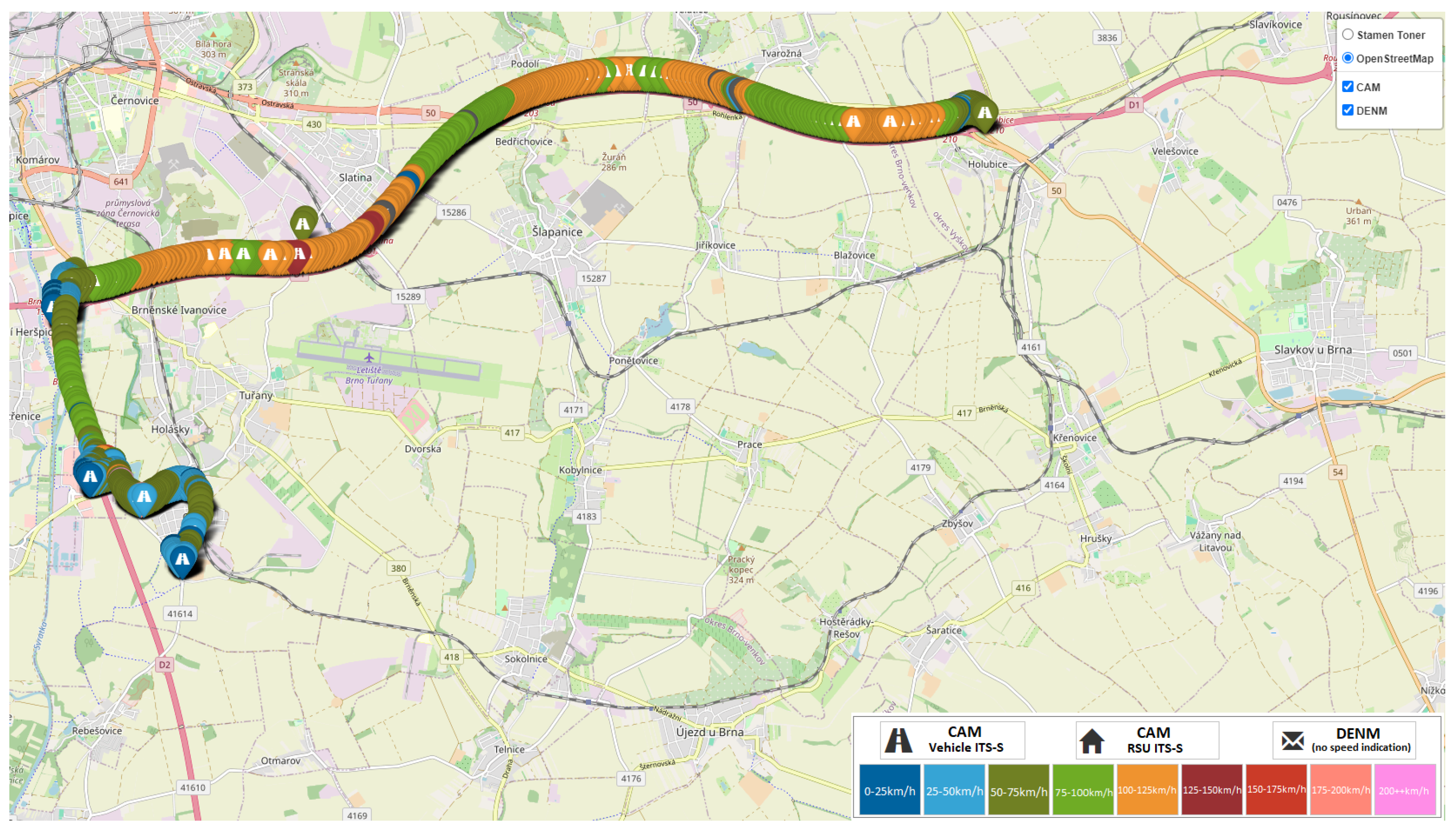

- Map Visualization—This feature allows CAMs/DENMs to be displayed on a map. Each CAM is shown based on the speed indicated in the message, with different speeds represented by different colors. Each message can be clicked on the map to view detailed information about it. An example of this map visualization is shown in Figure 4.

- Correct Result—The analyzed parameter value lies within the permissible range according to the specification. If possible, an interpretation of the value is also provided.

- Result with Warning—This type of result indicates that the parameter value is permissible according to the specification, but its actual significance is limited. This typically occurs in situations where the parameter suggests that the actual value is unavailable or where an optional parameter is missing in the message.

- Incorrect Result—This result indicates situations where the parameter value lies outside the defined range, or where the parameter indicates too high a measurement uncertainty, rendering the measurement invalid. An incorrect result also occurs when a mandatory parameter is missing in the message.
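The three result types above can be illustrated with a small classification function. This is a sketch under stated assumptions rather than the Karlos implementation: `ParamSpec`, `Result`, and `classify` are hypothetical names, and the speedValue bounds used in the example (0 to 16382, with 16383 meaning "unavailable") are assumed here and should be verified against the ETSI data dictionary. The "measurement uncertainty too high" case is not modeled.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Result(Enum):
    OK = "OK"
    WARNING = "Warning"
    ERROR = "Error"


@dataclass(frozen=True)
class ParamSpec:
    """Illustrative description of one parameter (not Karlos's data model)."""

    minimum: int
    maximum: int
    unavailable: Optional[int] = None  # sentinel meaning "value not available"
    mandatory: bool = True


def classify(value: Optional[int], spec: ParamSpec) -> Result:
    if value is None:
        # Missing parameter: error if mandatory, warning if optional.
        return Result.ERROR if spec.mandatory else Result.WARNING
    if spec.unavailable is not None and value == spec.unavailable:
        # Permitted by the specification, but carries no usable information.
        return Result.WARNING
    if spec.minimum <= value <= spec.maximum:
        return Result.OK
    # Anything else lies outside the defined range.
    return Result.ERROR


if __name__ == "__main__":
    # Assumed bounds for speedValue; check the standard before relying on them.
    speed_spec = ParamSpec(minimum=0, maximum=16382, unavailable=16383)
    for raw in (1200, 16383, 20000, None):
        print(raw, classify(raw, speed_spec).value)
```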

2.2.2. C-ITS SIM

- Broadcasting Verification: One OBU transmits the messages while the second OBU receives and verifies them. This setup allows for accurate identification of any issues with message transmission, confirming whether the problem lies with the third-party unit or not.

- Reception Verification: For reception tests, the dual-OBU setup enables double-checking to ensure that any issues with receiving messages are not due to the C-ITS SIM itself. The software allows users to configure expected parameter values for messages and verifies if these parameters are met. An example of receiving messages is shown in Figure 5, which displays the basic interface. On the left side, you can see all the captured C-ITS messages, including those transmitted by the C-ITS SIM. In the center, there is a regularly updated map displaying C-ITS messages according to their geographical location. On the right side, there is a list of OBUs connected to the software, along with the option to connect additional devices.
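The idea of configuring expected parameter values and checking received messages against them can be sketched as a dictionary comparison. The function `verify_expected` and the flat dictionary representation of a decoded message are assumptions made for illustration; they do not describe the C-ITS SIM software's actual interface.

```python
from typing import Any, Dict


def verify_expected(received: Dict[str, Any], expected: Dict[str, Any]) -> Dict[str, str]:
    """Compare a decoded message with the configured expectations.

    Returns a mapping of parameter name to a description of the mismatch.
    An empty mapping means every expected parameter was met.
    """
    mismatches: Dict[str, str] = {}
    for name, wanted in expected.items():
        if name not in received:
            mismatches[name] = f"expected {wanted!r}, parameter missing"
        elif received[name] != wanted:
            mismatches[name] = f"expected {wanted!r}, got {received[name]!r}"
    return mismatches


if __name__ == "__main__":
    # Expected values borrowed from the CAM broadcaster scenario in this paper.
    expected = {"stationType": "cyclist", "vehicleRole": "commercial"}
    received = {"stationType": "cyclist", "vehicleRole": "commercial", "speedValue": 0}
    print(verify_expected(received, expected))
```

Parameters present in the received message but absent from the expectations are ignored, so only the values the tester explicitly configured are checked.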

2.3. Testing Environment

2.4. Testing Methodology

2.4.1. CAM Scenarios

- Scenario 1: Third-party OBU as a receiver

- stationType set to “trailer”, vehicleRole set to “dangerousGoods”

- Scenario 2: Third-party OBU as a broadcaster

- stationType set to “cyclist”, vehicleRole set to “commercial”

2.4.2. DENM Scenarios

- Scenario 3: Third-party OBU as a receiver

- DENM 1: causeCode set to “Roadworks”, subCauseCode set to “streetCleaning”, relevanceTrafficDirection set to “allTrafficDirection”, TrafficFlowRule set to “NoPassingForTrucks”

- DENM 2: causeCode set to “slowVehicle”, subCauseCode set to “maintenanceVehicle”, informationQuality set to 6, speedLimit set to 30

- Scenario 4: Third-party OBU as a broadcaster

- causeCode set to “stationaryVehicle”, relevanceTrafficDirection set to “upstreamTraffic”, informationQuality set to 4

3. Results

3.1. Scenario 1

- Station Type: “trailer”

- Vehicle Role: “dangerous goods”.

3.2. Scenario 2

- Station Type: “cyclist”,

- Vehicle Role: “commercial”.

- Confidence parameters missing: The confidence values for semiMajor, semiMinor, altitude, heading, speed, longitudinalAcceleration, curvature, and yawRate were not set. These confidence parameters are essential for interpreting the data precisely and should ideally be included to ensure accurate vehicle information.

- Parameters affected by stationary status: The parameters driveDirection, longitudinalAccelerationValue, and yawRate were absent or marked as unavailable. In this scenario, this is acceptable because the OBU was stationary during testing. For stationary OBUs, unavailable values for dynamic parameters are generally acceptable.

- Default values for vehicle-specific parameters: Parameters like vehicleLengthValue, vehicleLengthConfidenceIndication, and vehicleWidth were marked as unavailable. This is expected because the OBU was not configured for a specific vehicle. These parameters will be filled once the OBU is installed in an actual vehicle, with unavailable being the default value before proper installation.

3.3. Scenario 3

- DENM 1: causeCode set to “Roadworks”, subCauseCode set to “streetCleaning”, relevanceTrafficDirection set to “allTrafficDirection”, TrafficFlowRule set to “NoPassingForTrucks”

- DENM 2: causeCode set to “slowVehicle”, subCauseCode set to “maintenanceVehicle”, informationQuality set to 6, speedLimit set to 30

- If the parameter was OK in every message, it is added to the OK column (+1).

- If the parameter produced a warning in at least one message and no errors, it is added to the Warning column (+1), and nothing is added to the OK column.

- If the parameter produced an error in at least one message, it is added to the Error column (+1), and nothing is added to the other columns.

- informationQuality: see further;

- speedValue, speedConfidence: optional parameters, not included in the broadcast DENM;

- headingConfidence: as with the CAMs, set to unavailable under laboratory conditions.
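The column-counting rules listed above (OK, Warning, Error) amount to taking the worst status observed for each parameter across all messages and counting each parameter once. A minimal sketch follows, assuming the per-message results are available as lists of status strings keyed by parameter name; the function names and this data layout are illustrative, not Karlos's internals.

```python
from collections import Counter
from typing import Dict, Iterable, List

# Higher number means more severe.
SEVERITY = {"OK": 0, "Warning": 1, "Error": 2}


def worst_status(statuses: Iterable[str]) -> str:
    """Collapse the per-message results of one parameter into a single status."""
    return max(statuses, key=SEVERITY.__getitem__)


def summarize(per_message: Dict[str, List[str]]) -> Counter:
    """Count parameters per column; each parameter contributes exactly +1."""
    counts = Counter({"OK": 0, "Warning": 0, "Error": 0})
    for statuses in per_message.values():
        counts[worst_status(statuses)] += 1
    return counts


if __name__ == "__main__":
    # Made-up per-message results for three parameters over three messages.
    results = {
        "causeCode": ["OK", "OK", "OK"],
        "headingConfidence": ["OK", "Warning", "OK"],
        "headingValue": ["Warning", "Error", "OK"],
    }
    print(dict(summarize(results)))
```

Because every parameter lands in exactly one column, the three columns of a container row always sum to its Total. The sketch assumes each parameter has at least one per-message result.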

3.4. Scenario 4

- causeCode set to “stationaryVehicle”

- relevanceTrafficDirection set to “upstreamTraffic”

- informationQuality set to 4

- altitudeValue, altitudeConfidence: The third-party OBU cannot adequately set the z-axis (altitude) of the event. For example, if an event occurs under a bridge, the missing altitude value could affect drivers on the bridge. The OBU sets the value to “unavailable”, which is permitted and therefore yields only a warning, but it indicates a limitation in the OBU’s ability to provide complete information.

- speedValue, speedConfidence: In a DENM, the event speed element of the location container is relevant for moving use cases, such as a slow-moving vehicle. The third-party OBU omitted this information entirely. For a stationary vehicle, it could either include the element with the speed set to 0 or omit it. Karlos reports the omission as a warning.

- headingConfidence: The OBU set this parameter to “unavailable”, which Karlos flagged as a warning. While this is not a critical issue, it suggests that the OBU was unable to provide a confidence level for the heading.

- headingValue: The third-party OBU transmitted a headingValue of 4800, which is outside the acceptable range. According to ETSI standards, headingValue should be between 0 and 3600. This discrepancy is considered a critical error as it can lead to interoperability issues. The manufacturer should be contacted to correct this issue to ensure that the OBU meets the validation criteria and functions correctly within the system.
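The range check that flags the value 4800 can be sketched as follows. The bounds 0 and 3600 come from the text; the tenth-of-a-degree unit and the sentinel 3601 for "unavailable" are assumptions here and should be checked against the ETSI data dictionary. `heading_degrees` is a hypothetical helper, not part of Karlos.

```python
from typing import Optional

HEADING_MIN = 0
HEADING_MAX = 3600          # upper bound quoted in the text
HEADING_UNAVAILABLE = 3601  # assumption: sentinel for "unavailable"
UNITS_PER_DEGREE = 10       # assumption: raw values are tenths of a degree


def heading_degrees(raw: int) -> Optional[float]:
    """Return the heading in degrees, or None if it is marked unavailable.

    Raises ValueError when the raw value lies outside the permitted range.
    """
    if raw == HEADING_UNAVAILABLE:
        return None
    if not HEADING_MIN <= raw <= HEADING_MAX:
        raise ValueError(f"headingValue {raw} outside {HEADING_MIN}..{HEADING_MAX}")
    return raw / UNITS_PER_DEGREE


if __name__ == "__main__":
    for raw in (900, 3601, 4800):
        try:
            print(raw, heading_degrees(raw))
        except ValueError as exc:
            print(raw, "error:", exc)
```

Under these assumptions, a raw value of 4800 would correspond to 480 degrees, which has no meaning as a compass heading, so rejecting it is the only sensible outcome.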

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Container | Total | OK | Warning | Error |
|---|---|---|---|---|
| Header | 3 | 3 | 0 | 0 |
| CAM | 1 | 1 | 0 | 0 |
| basic | 8 | 2 | 6 | 0 |
| highFrequency | 15 | 3 | 12 | 0 |
| lowFrequency | 3 | 3 | 0 | 0 |
| dangerousGoods | 1 | 1 | 0 | 0 |
| Total | 31 | 13 | 18 | 0 |

| Container | Total | OK | Warning | Error |
|---|---|---|---|---|
| Header | 3 | 3 | 0 | 0 |
| CAM | 1 | 1 | 0 | 0 |
| basic | 8 | 2 | 6 | 0 |
| highFrequency | 15 | 3 | 12 | 0 |
| lowFrequency | 3 | 3 | 0 | 0 |
| Total | 30 | 12 | 18 | 0 |

| Container | Total | OK | Warning | Error |
|---|---|---|---|---|
| Header | 3 | 3 | 0 | 0 |
| Management | 10 | 10 | 0 | 0 |
| Situation | 3 | 2 | 1 | 0 |
| Location | 5 | 2 | 3 | 0 |
| Alacarte | 1 | 1 | 0 | 0 |
| Total | 22 | 18 | 4 | 0 |

| Container | Total | OK | Warning | Error |
|---|---|---|---|---|
| Header | 3 | 3 | 0 | 0 |
| Management | 10 | 8 | 2 | 0 |
| Situation | 3 | 3 | 0 | 0 |
| Location | 5 | 1 | 3 | 1 |
| Alacarte | 1 | 1 | 0 | 0 |
| Total | 22 | 16 | 5 | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lokaj, Z.; Vaniš, M.; Holý, R.; Šrotýř, M.; Zajíček, M.; Huang, S.-C. Automated Evaluation of C-ITS Message Content for Enhanced Compliance and Reliability. Appl. Sci. 2024, 14, 9526. https://doi.org/10.3390/app14209526
